Submitted: 22 October 2024

Posted: 24 October 2024


Abstract

Keywords:

1. Introduction

- A new adversarial-training approach that applies a penalty to the perceptual-quality labels of adversarial images. It outperforms standard adversarial training in terms of correlation with subjective quality.

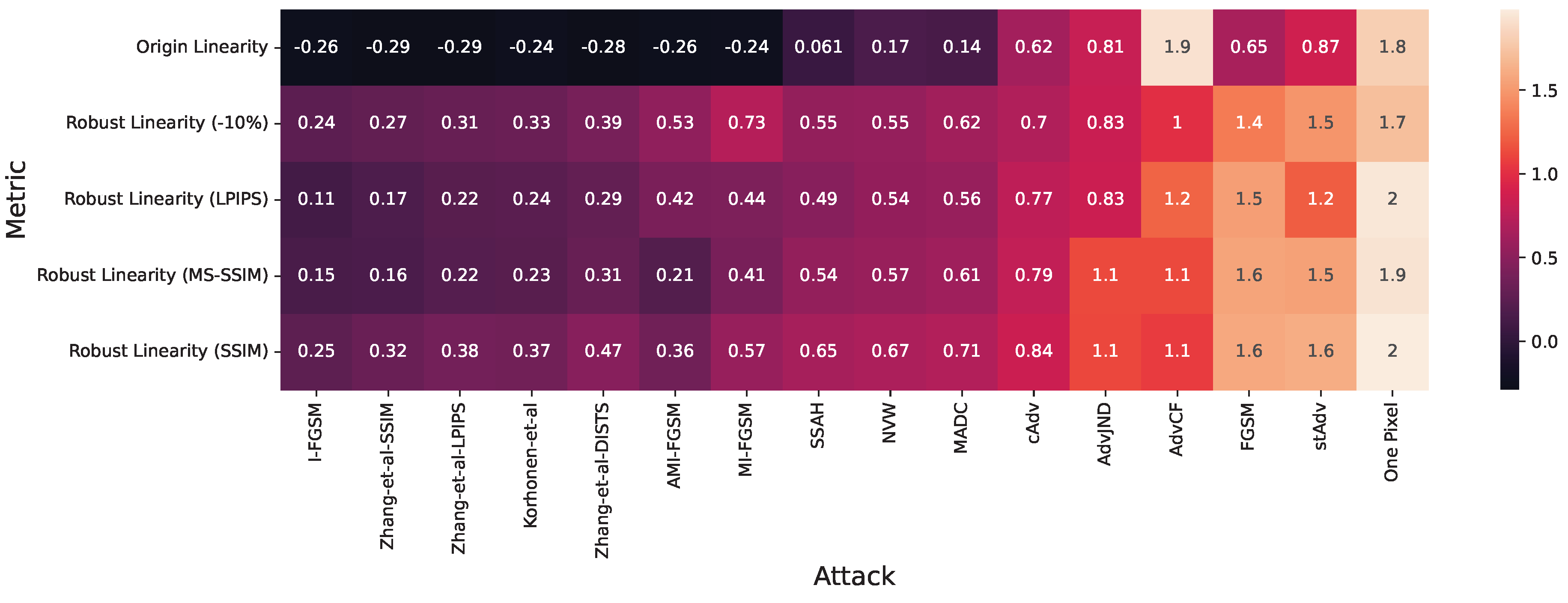

- A new method for comparing the robustness of trained IQA models, as well as a new robustness-evaluation metric.

- Comprehensive experiments that test the effectiveness of our method on four NR-IQA models: LinearityIQA, KonCept512, HyperIQA, and WSP-IQA.

2. Related Work

2.1. Image Quality Assessment

2.2. Adversarial Attacks on IQA Models

2.3. Adversarial Training

2.4. Robust IQA Models

3. Proposed Method

3.1. Problem Setting

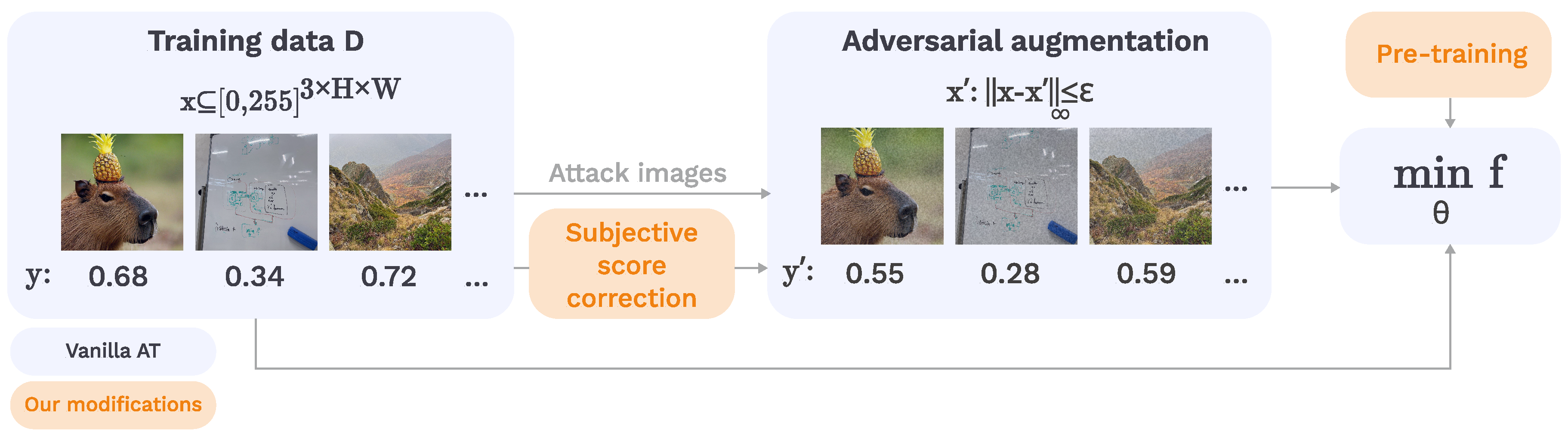

3.2. Method

Algorithm 1. Proposed adversarial training for T epochs, given an attack magnitude, a step size, and a dataset D, for a pre-trained NR-IQA model, a loss function, and an FR metric M.

for each epoch 1, …, T do

for each training sample in D do

// PGD-1 adversarial attack

// Subjective score correction

// The norm is calculated according to Equation (7)

// Update the model with some optimizer and step size β

end for

end for
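The loop in Algorithm 1 can be illustrated with a minimal, dependency-free sketch. It is not the authors' implementation: the linear `predict` model, the squared-error loss, the multiplicative "-5%" label correction in `corrected_label`, and the parameter names `eps`, `alpha`, and `beta` are all assumptions chosen to keep the example small, and the norm from Equation (7) is not reproduced.

```python
import random


def predict(w, x):
    """Toy linear quality model (hypothetical stand-in for an NR-IQA network)."""
    return sum(wi * xi for wi, xi in zip(w, x))


def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def pgd1_attack(w, x, y, eps, alpha, rng):
    """PGD-1: random start in the eps-ball, one signed-gradient step, projection."""
    delta = [rng.uniform(-eps, eps) for _ in x]
    x_start = [xi + di for xi, di in zip(x, delta)]
    residual = predict(w, x_start) - y
    # Gradient of the squared error (w.x - y)^2 with respect to x is 2 * residual * w.
    grad_x = [2.0 * residual * wi for wi in w]
    delta = [di + alpha * sign(g) for di, g in zip(delta, grad_x)]
    # Project the perturbation back onto the l_inf ball of radius eps.
    delta = [max(-eps, min(eps, di)) for di in delta]
    return [xi + di for xi, di in zip(x, delta)]


def corrected_label(y, penalty=0.05):
    """Assumed '-5%' strategy: lower the subjective score of the attacked image."""
    return y * (1.0 - penalty)


def train(data, w, epochs, eps, alpha, beta, seed=0):
    """Adversarial training with label correction on the toy model."""
    rng = random.Random(seed)
    w = list(w)
    for _ in range(epochs):
        for x, y in data:
            x_adv = pgd1_attack(w, x, y, eps, alpha, rng)
            y_adv = corrected_label(y)
            residual = predict(w, x_adv) - y_adv
            # Plain gradient step with step size beta (stand-in for "some optimizer").
            w = [wi - beta * 2.0 * residual * xa for wi, xa in zip(w, x_adv)]
    return w
```

In this sketch the model is trained on the attacked input paired with the lowered score rather than the original one, which is the role the "Subjective score correction" step plays in the algorithm. An FR-metric-based penalty (PSNR, SSIM, MS-SSIM, LPIPS) would replace the constant `penalty` with a value computed from the clean and attacked images.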

4. Materials and Methods

4.1. Experimental Setup

4.2. Evaluation Metrics

4.3. Implementation Details

5. Results

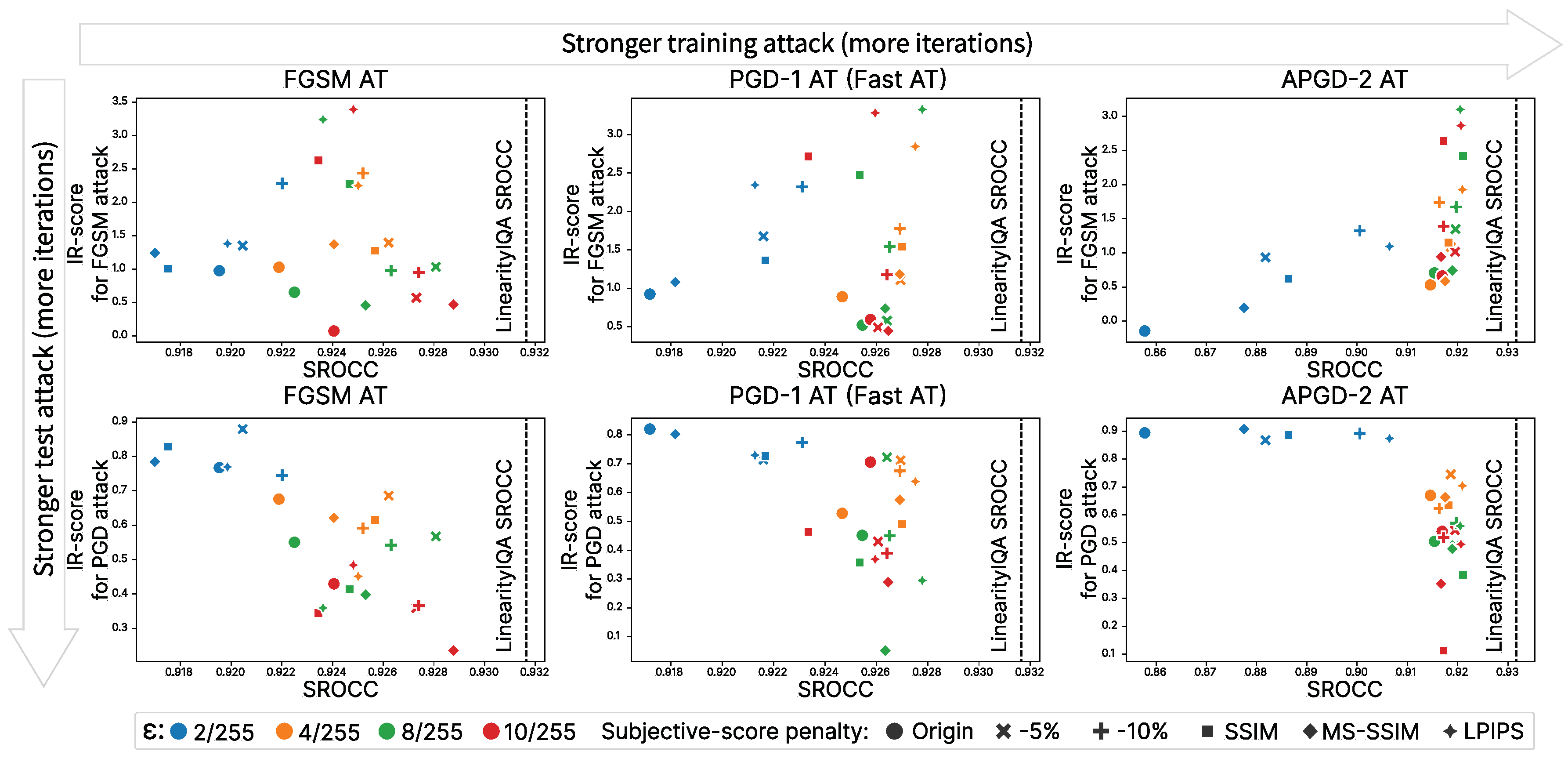

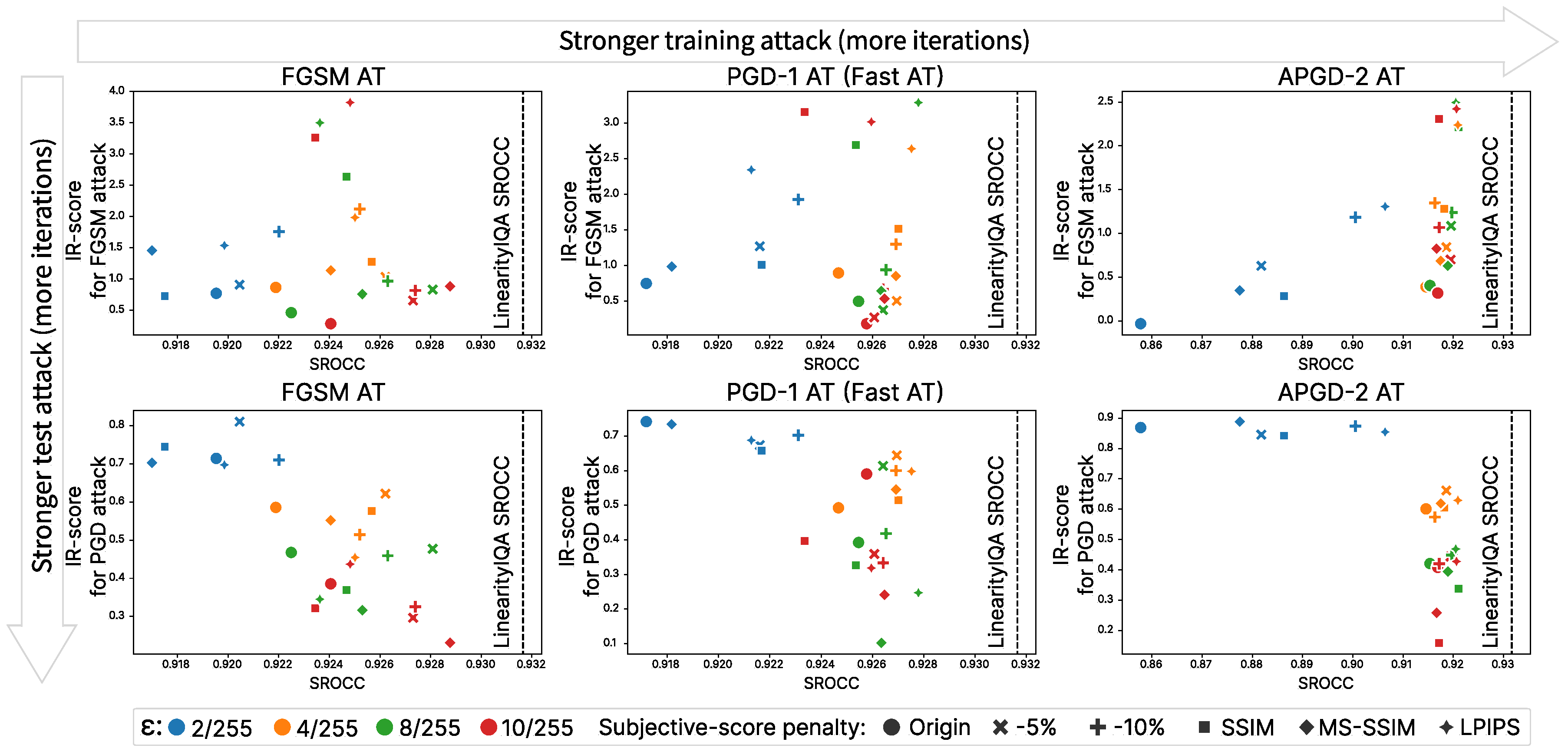

5.1. Ablation Study

5.2. Overall Results

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| IQA | Image quality assessment |

| FR | Full reference |

| NR | No reference |

| CNN | Convolutional neural network |

| ViT | Vision transformer |

| SROCC | Spearman rank order correlation coefficient |

| NOT | Neural optimal transport |

| AT | Adversarial Training |

| NT | Norm regularization Training |
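SROCC, listed above, is the correlation measure reported throughout the results tables. As a minimal sketch of how it can be computed (the function names `average_ranks`, `pearson`, and `srocc` are illustrative and not taken from the paper), one can rank both score lists, giving tied values their average rank, and take the Pearson correlation of the ranks:

```python
def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(a, b):
    """Pearson correlation of two equal-length sequences with non-zero variance."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5


def srocc(predicted, subjective):
    """Spearman rank order correlation between model scores and subjective scores."""
    return pearson(average_ranks(predicted), average_ranks(subjective))
```

Because only ranks enter the computation, any monotonically increasing rescaling of the model's scores leaves the SROCC unchanged.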

Appendix A. Applicability of Proposed Method to other Adversarial Attacks

Appendix B. Empirical Probabilistic Estimation

Appendix C. Comparison with Adversarial Purification Methods

Appendix D. Additional Attack Details

Appendix E. Ablation Study Results

| Attack | Description | Invisibility metric | Optimizer | Optimization parameters |
|---|---|---|---|---|
| FGSM [24] | One-step gradient attack. | distance | Gradient descent | Number of iterations |
| I-FGSM [43] | Iterative version of FGSM. | distance | Gradient descent | Step size at each iteration; number of iterations |
| MI-FGSM [44] | I-FGSM with momentum. | distance | Gradient descent | Step size at each iteration; number of iterations; decay factor |
| AMI-FGSM [45] | MI-FGSM with . | distance | Gradient descent | Step size at each iteration; number of iterations; decay factor |
| Korhonen et al. [6] | Uses a spatial activity map to concentrate perturbations in textured regions. The spatial activity map is computed using the Sobel filter. | distance | Adam | Learning rate; number of iterations |
| NVW [48] | Uses a variance map to concentrate perturbations in high-variance areas. | distance | Adam | Learning rate; number of iterations |
| Zhang-SSIM, Zhang-LPIPS, Zhang-DISTS [7] | Adds a full-reference metric as an additional term of the objective function. | distance | Adam | Learning rate; number of iterations |
| SSAH [49] | Attacks the semantic similarity of the image. | Low-frequency component distortion | Adam | Learning rate; number of iterations; hyperparameter; wavelet type (haar) |
| AdvJND [46] | Adds the just noticeable difference (JND) coefficients in the constraint to improve the quality of images. | distance | Gradient descent | Number of iterations |
| MADC [47] | Updates the image in the direction of increasing the metric score while keeping MSE fixed. | distance | Gradient descent | Learning rate; number of iterations |
| AdvCF [42] | Uses gradient information in the parameter space of a simple color filter. | Unrestricted color perturbation | Adam | Learning rate; number of iterations |
| cAdv [50] | Adaptive selection of locations in the image to change their colors. | Unrestricted color perturbation | Adam | Learning rate; number of iterations |
| StAdv [51] | Adversarial examples based on spatial transformation. | The sum of spatial movement distance for any two adjacent pixels | L-BFGS-B | Hyperparameter to balance two losses; number of iterations |
| One Pixel [52] | Uses differential evolution to perturb several pixels without gradient-based methods. | distance; number of perturbed pixels | Differential evolution | Number of iterations; a multiplier for setting the total population size |
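The first three gradient attacks in the table differ only in iteration count and momentum, which a short sketch makes concrete. This is an illustration under stated assumptions, not the configuration used in the paper: the attack is taken to increase a quality score, the perturbation bound is taken to be an l_inf ball of radius `eps`, pixel values are assumed to lie in [0, 1], and `grad_fn`, `step`, and `decay` are hypothetical names.

```python
def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def mi_fgsm(grad_fn, x, eps, step, n_iter, decay=1.0):
    """Momentum iterative FGSM that tries to increase a score.

    grad_fn(x) must return the gradient of the score with respect to x.
    decay=0.0 reduces the update to I-FGSM; n_iter=1 with step=eps gives FGSM.
    """
    x_adv = list(x)
    momentum = [0.0] * len(x)
    for _ in range(n_iter):
        grad = grad_fn(x_adv)
        # Normalize the gradient by its l1 norm before accumulating momentum.
        l1 = sum(abs(g) for g in grad) or 1.0
        momentum = [decay * m + g / l1 for m, g in zip(momentum, grad)]
        x_adv = [xa + step * sign(m) for xa, m in zip(x_adv, momentum)]
        # Project onto the l_inf ball around x, then onto the valid pixel range.
        x_adv = [min(max(xa, xi - eps), xi + eps) for xa, xi in zip(x_adv, x)]
        x_adv = [min(max(xa, 0.0), 1.0) for xa in x_adv]
    return x_adv


# Toy usage: a linear "quality score" whose gradient is constant.
weights = [0.5, -0.2, 0.0]
image = [0.5, 0.5, 0.5]
attacked = mi_fgsm(lambda v: list(weights), image, eps=0.1, step=0.05, n_iter=4)
```

In the toy usage each pixel moves in the direction that raises the score until it reaches the `eps` bound, while the pixel with zero gradient is left unchanged.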

| Attack | Description | Perturbation budget | Number of iterations n | Step size |
|---|---|---|---|---|
| FGSM [24] | One-step gradient attack. | | 1 | |
| PGD-1 [26] | FGSM with initial random perturbation. | | 1 | |
| APGD-2 [28] | Step size-free variant of PGD. | | 2 | Adaptive |

| Attack | Description | Perturbation budget | Number of iterations n | Step size |
|---|---|---|---|---|
| FGSM [24] | One-step gradient attack. | | 1 | |
| PGD-1 [26] | FGSM with initial random perturbation. | | 1 | |
| PGD-10 [25] | Iterative FGSM with initial random perturbation. | | 10 | |

| Defense method | SROCC | R ↑ (FGSM) | R ↑ (Adaptive FGSM) | R ↑ (PGD-10) | R ↑ (Adaptive PGD-10) | IR-score ↑ (FGSM) | IR-score ↑ (Adaptive FGSM) | IR-score ↑ (PGD-10) | IR-score ↑ (Adaptive PGD-10) |
|---|---|---|---|---|---|---|---|---|---|
| w/o | 0.925 | 0.706 | - | 0.353 | - | - | - | - | - |
| Crop (0.79) | 0.909 | 0.893 | 0.832 | 0.552 | 0.465 | 0.540 | 0.443 | 0.452 | 0.428 |
| Resize (0.8) | 0.910 | 0.992 | 0.651 | 0.833 | 0.250 | 0.612 | 0.019 | 0.668 | 0.067 |
| AT | 0.913 | 1.221 | 1.221 | 0.587 | 0.587 | 1.030 | 1.030 | 0.604 | 0.604 |
| AT+Resize (0.9) | 0.911 | 1.347 | 1.270 | 1.002 | 0.581 | 1.360 | 0.967 | 0.792 | 0.596 |
| AT+Crop (0.9) | 0.913 | 1.309 | 1.213 | 0.901 | 0.770 | 1.306 | 1.203 | 0.743 | 0.718 |

| Training strategy | Penalty | SROCC | IR-score ↑ (FGSM, KonIQ-10k) | IR-score ↑ (FGSM, NIPS2017) | IR-score ↑ (PGD-10, KonIQ-10k) | IR-score ↑ (PGD-10, NIPS2017) |
|---|---|---|---|---|---|---|
| Base model | – | 0.931 | – | – | – | – |
| adv | – | 0.837 | 1.106 | 1.366 | 0.542 | 0.555 |
| + pretr. | – | 0.848 | 1.337 | 1.407 | 0.445 | 0.510 |
| + clean | – | 0.920 | 1.257 | 1.489 | 0.569 | 0.645 |
| + clean + pretr. | – | 0.921 | 0.865 | 1.026 | 0.585 | 0.675 |
| adv | -5% | 0.844 | 0.993 | 0.982 | 0.635 | 0.686 |
| + pretr. | -5% | 0.841 | 1.500 | 1.549 | 0.648 | 0.718 |
| + clean | -5% | 0.922 | 1.482 | 1.910 | 0.596 | 0.602 |
| + clean + pretr. | -5% | 0.926 | 1.036 | 1.393 | 0.621 | 0.685 |
| adv | LPIPS | 0.784 | 1.001 | 1.011 | 0.424 | 0.323 |
| + pretr. | LPIPS | 0.717 | 1.554 | 1.685 | 0.565 | 0.603 |
| + clean | LPIPS | 0.924 | 2.092 | 2.218 | 0.516 | 0.527 |
| + clean + pretr. | LPIPS | 0.925 | 1.984 | 2.248 | 0.454 | 0.451 |

| Label strategy | Trained with FGSM AT | | | | Trained with PGD-1 AT | | | | Trained with APGD-2 AT | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Origin | 0.920 | 0.922 | 0.922 | 0.924 | 0.917 | 0.925 | 0.925 | 0.926 | 0.858 | 0.915 | 0.915 | 0.917 |
| Min | 0.908 | 0.917 | 0.921 | 0.920 | 0.911 | 0.925 | 0.926 | 0.926 | 0.870 | 0.876 | 0.847 | 0.843 |
| -5% | 0.920 | 0.926 | 0.928 | 0.927 | 0.922 | 0.927 | 0.926 | 0.923 | 0.882 | 0.919 | 0.920 | 0.919 |
| -10% | 0.922 | 0.925 | 0.926 | 0.927 | 0.923 | 0.927 | 0.927 | 0.926 | 0.901 | 0.916 | 0.920 | 0.917 |
| PSNR | 0.906 | 0.913 | 0.916 | 0.917 | 0.907 | 0.914 | 0.923 | 0.924 | 0.922 | 0.912 | 0.911 | 0.912 |
| SSIM | 0.917 | 0.926 | 0.925 | 0.923 | 0.922 | 0.927 | 0.925 | 0.923 | 0.886 | 0.918 | 0.921 | 0.917 |
| MS-SSIM | 0.917 | 0.924 | 0.925 | 0.928 | 0.918 | 0.927 | 0.926 | 0.926 | 0.878 | 0.918 | 0.919 | 0.917 |
| LPIPS | 0.920 | 0.925 | 0.924 | 0.925 | 0.921 | 0.928 | 0.928 | 0.926 | 0.906 | 0.921 | 0.921 | 0.921 |

| Label strategy | Trained with FGSM AT | | | | Trained with PGD-1 AT | | | | Trained with APGD-2 AT | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Origin | 0.772 | 0.866 | 0.462 | 0.285 | 0.749 | 0.896 | 0.498 | 0.184 | -0.032 | 0.390 | 0.405 | 0.318 |
| Min | 8.134 | 7.269 | 6.539 | 5.644 | 8.173 | 7.051 | 5.184 | 4.635 | 6.716 | 5.747 | 4.415 | 3.416 |
| -5% | 0.908 | 1.036 | 0.831 | 0.656 | 1.270 | 0.504 | 0.375 | 0.271 | 0.629 | 0.842 | 1.085 | 0.700 |
| -10% | 1.761 | 2.119 | 0.969 | 0.817 | 1.927 | 1.300 | 0.938 | 0.664 | 1.184 | 1.348 | 1.240 | 1.067 |
| PSNR | 7.422 | 7.712 | 6.381 | 5.997 | 7.114 | 7.663 | 5.518 | 4.827 | 5.609 | 7.468 | 4.196 | 3.102 |
| SSIM | 0.724 | 1.278 | 2.638 | 3.262 | 1.010 | 1.518 | 2.691 | 3.153 | 0.282 | 1.281 | 2.210 | 2.305 |
| MS-SSIM | 1.455 | 1.138 | 0.761 | 0.883 | 0.986 | 0.853 | 0.642 | 0.534 | 0.348 | 0.687 | 0.629 | 0.825 |
| LPIPS | 1.537 | 1.984 | 3.500 | 3.825 | 2.343 | 2.640 | 3.288 | 3.016 | 1.306 | 2.238 | 2.495 | 2.422 |

| Label strategy | FGSM AT | | | | PGD-1 AT | | | | APGD-2 AT | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Origin | 0.714 | 0.586 | 0.468 | 0.386 | 0.742 | 0.493 | 0.392 | 0.590 | 0.869 | 0.601 | 0.421 | 0.408 |
| Min | 1.777 | 0.316 | 0.156 | 0.174 | 1.795 | 0.359 | 0.260 | -0.048 | 1.528 | 0.406 | 0.403 | 0.110 |
| -5% | 0.810 | 0.621 | 0.477 | 0.297 | 0.674 | 0.644 | 0.614 | 0.359 | 0.845 | 0.662 | 0.448 | 0.444 |
| -10% | 0.710 | 0.514 | 0.459 | 0.325 | 0.702 | 0.600 | 0.418 | 0.334 | 0.874 | 0.574 | 0.449 | 0.420 |
| PSNR | 1.404 | 0.637 | 0.392 | 0.617 | 1.333 | 0.389 | 0.012 | 0.089 | 1.261 | 1.001 | 0.113 | -0.045 |
| SSIM | 0.744 | 0.576 | 0.370 | 0.322 | 0.658 | 0.515 | 0.326 | 0.397 | 0.842 | 0.606 | 0.339 | 0.160 |
| MS-SSIM | 0.702 | 0.552 | 0.317 | 0.231 | 0.734 | 0.546 | 0.103 | 0.241 | 0.889 | 0.619 | 0.395 | 0.259 |
| LPIPS | 0.697 | 0.455 | 0.345 | 0.437 | 0.688 | 0.598 | 0.247 | 0.319 | 0.854 | 0.629 | 0.469 | 0.428 |

| Label strategy | FGSM AT | | | | PGD-1 AT | | | | APGD-2 AT | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Origin | 0.974 | 1.027 | 0.651 | 0.072 | 0.925 | 0.891 | 0.522 | 0.596 | -0.144 | 0.531 | 0.708 | 0.662 |
| Min | 12.589 | 11.962 | 10.624 | 9.024 | 13.022 | 11.728 | 8.580 | 7.558 | 10.455 | 10.206 | 7.685 | 6.859 |
| -5% | 1.350 | 1.394 | 1.032 | 0.570 | 1.678 | 1.109 | 0.579 | 0.493 | 0.934 | 1.085 | 1.348 | 1.016 |
| -10% | 2.280 | 2.435 | 0.976 | 0.948 | 2.323 | 1.775 | 1.541 | 1.179 | 1.324 | 1.739 | 1.673 | 1.388 |
| PSNR | 11.401 | 12.235 | 10.438 | 9.254 | 10.852 | 12.372 | 9.356 | 8.329 | 7.008 | 11.493 | 6.807 | 5.043 |
| SSIM | 1.004 | 1.274 | 2.272 | 2.627 | 1.364 | 1.542 | 2.476 | 2.717 | 0.619 | 1.152 | 2.420 | 2.636 |
| MS-SSIM | 1.239 | 1.368 | 0.457 | 0.467 | 1.081 | 1.184 | 0.739 | 0.446 | 0.194 | 0.585 | 0.741 | 0.941 |
| LPIPS | 1.378 | 2.249 | 3.237 | 3.388 | 2.346 | 2.846 | 3.328 | 3.283 | 1.095 | 1.927 | 3.101 | 2.864 |

| Label strategy | FGSM AT | | | | PGD-1 AT | | | | APGD-2 AT | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Origin | 0.767 | 0.676 | 0.550 | 0.430 | 0.820 | 0.528 | 0.451 | 0.706 | 0.894 | 0.670 | 0.505 | 0.541 |
| Min | 1.826 | 0.447 | 0.250 | 0.224 | 1.846 | 0.461 | 0.333 | 0.000 | 1.389 | 0.411 | 0.521 | 0.235 |
| -5% | 0.879 | 0.686 | 0.567 | 0.360 | 0.714 | 0.712 | 0.723 | 0.430 | 0.867 | 0.745 | 0.490 | 0.545 |
| -10% | 0.745 | 0.591 | 0.542 | 0.366 | 0.774 | 0.676 | 0.450 | 0.389 | 0.892 | 0.623 | 0.570 | 0.519 |
| PSNR | 1.445 | 0.650 | 0.490 | 0.735 | 1.300 | 0.512 | 0.037 | 0.101 | 1.126 | 0.775 | 0.000 | -0.118 |
| SSIM | 0.828 | 0.615 | 0.414 | 0.345 | 0.727 | 0.491 | 0.358 | 0.464 | 0.886 | 0.635 | 0.385 | 0.113 |
| MS-SSIM | 0.784 | 0.622 | 0.398 | 0.236 | 0.803 | 0.575 | 0.052 | 0.289 | 0.908 | 0.663 | 0.478 | 0.352 |
| LPIPS | 0.769 | 0.452 | 0.360 | 0.484 | 0.730 | 0.639 | 0.295 | 0.369 | 0.874 | 0.704 | 0.560 | 0.494 |

| Label strategy | FGSM AT | | | | PGD-1 AT | | | | APGD-2 AT | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Origin | 1.431 | 1.202 | 1.309 | 1.032 | 1.474 | 1.359 | 1.278 | 1.251 | 1.361 | 1.472 | 1.429 | 1.304 |
| Min | 0.157 | 0.222 | 0.175 | 0.189 | 0.159 | 0.199 | 0.199 | 0.165 | 0.345 | 0.334 | 0.269 | 0.261 |
| -5% | 1.418 | 1.371 | 1.336 | 1.346 | 1.413 | 1.348 | 1.253 | 1.229 | 1.508 | 1.534 | 1.259 | 1.261 |
| -10% | 1.424 | 1.420 | 1.345 | 1.328 | 1.359 | 1.258 | 1.194 | 1.160 | 1.222 | 1.260 | 1.084 | 1.076 |
| PSNR | 0.218 | 0.176 | 0.208 | 0.184 | 0.240 | 0.167 | 0.211 | 0.142 | 0.265 | 0.173 | 0.192 | 0.154 |
| SSIM | 1.426 | 1.312 | 0.850 | 0.723 | 1.332 | 1.190 | 0.591 | 0.431 | 1.607 | 1.302 | 0.623 | 0.464 |
| MS-SSIM | 1.407 | 1.179 | 1.116 | 1.153 | 1.435 | 1.355 | 1.225 | 1.107 | 1.554 | 1.458 | 1.235 | 1.163 |
| LPIPS | 1.218 | 1.129 | 0.647 | 0.497 | 1.192 | 0.751 | 0.508 | 0.449 | 1.288 | 0.899 | 0.546 | 0.452 |

| Label strategy | FGSM AT | | | | PGD-1 AT | | | | APGD-2 AT | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Origin | 0.826 | 0.446 | 0.277 | 0.200 | 0.840 | 0.337 | 0.210 | 0.422 | 1.313 | 0.439 | 0.240 | 0.228 |
| Min | 0.178 | 0.212 | 0.096 | 0.102 | 0.164 | 0.252 | 0.149 | -0.010 | 0.426 | 0.242 | 0.248 | 0.039 |
| -5% | 1.107 | 0.520 | 0.300 | 0.129 | 0.759 | 0.535 | 0.465 | 0.205 | 1.074 | 0.495 | 0.273 | 0.250 |
| -10% | 0.991 | 0.396 | 0.290 | 0.179 | 0.881 | 0.462 | 0.250 | 0.196 | 1.336 | 0.412 | 0.276 | 0.245 |
| PSNR | 0.323 | 0.308 | 0.252 | 0.517 | 0.381 | 0.247 | 0.016 | 0.043 | 0.447 | 0.518 | 0.047 | -0.055 |
| SSIM | 0.928 | 0.445 | 0.214 | 0.187 | 0.731 | 0.366 | 0.182 | 0.231 | 1.084 | 0.434 | 0.177 | 0.053 |
| MS-SSIM | 0.779 | 0.441 | 0.178 | 0.119 | 0.842 | 0.395 | 0.053 | 0.080 | 1.495 | 0.457 | 0.208 | 0.129 |
| LPIPS | 0.854 | 0.319 | 0.204 | 0.279 | 0.831 | 0.459 | 0.133 | 0.180 | 1.186 | 0.485 | 0.277 | 0.240 |

| Label strategy | FGSM AT | | | | PGD-1 AT | | | | APGD-2 AT | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Origin | 1.467 | 1.338 | 1.459 | 1.267 | 1.473 | 1.391 | 1.327 | 1.340 | 1.488 | 1.583 | 1.497 | 1.461 |
| Min | 0.146 | 0.151 | 0.123 | 0.135 | 0.147 | 0.130 | 0.135 | 0.114 | 0.471 | 0.247 | 0.262 | 0.263 |
| -5% | 1.678 | 1.629 | 1.441 | 1.455 | 1.556 | 1.613 | 1.400 | 1.193 | 1.495 | 1.540 | 1.291 | 1.282 |
| -10% | 1.382 | 1.401 | 1.422 | 1.284 | 1.333 | 1.404 | 0.979 | 0.933 | 1.257 | 1.243 | 1.148 | 1.094 |
| PSNR | 0.183 | 0.133 | 0.147 | 0.136 | 0.244 | 0.114 | 0.138 | 0.093 | 0.341 | 0.143 | 0.152 | 0.076 |
| SSIM | 1.639 | 1.472 | 1.037 | 0.964 | 1.562 | 1.387 | 0.795 | 0.632 | 1.695 | 1.440 | 0.803 | 0.573 |
| MS-SSIM | 1.567 | 1.482 | 1.295 | 1.308 | 1.590 | 1.595 | 1.193 | 1.117 | 1.666 | 1.538 | 1.389 | 1.193 |
| LPIPS | 1.489 | 1.329 | 0.837 | 0.660 | 1.410 | 1.018 | 0.631 | 0.540 | 1.514 | 1.148 | 0.612 | 0.517 |

| Label strategy | FGSM AT | | | | PGD-1 AT | | | | APGD-2 AT | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Origin | 0.997 | 0.585 | 0.380 | 0.269 | 1.042 | 0.428 | 0.286 | 0.580 | 1.440 | 0.585 | 0.354 | 0.382 |
| Min | 0.158 | 0.322 | 0.187 | 0.162 | 0.156 | 0.349 | 0.228 | 0.055 | 0.463 | 0.332 | 0.400 | 0.216 |
| -5% | 1.334 | 0.635 | 0.415 | 0.212 | 0.905 | 0.681 | 0.618 | 0.279 | 1.188 | 0.687 | 0.346 | 0.371 |
| -10% | 1.129 | 0.507 | 0.389 | 0.248 | 1.115 | 0.597 | 0.312 | 0.265 | 1.401 | 0.535 | 0.417 | 0.356 |
| PSNR | 0.307 | 0.413 | 0.357 | 0.686 | 0.434 | 0.349 | 0.071 | 0.096 | 0.566 | 0.270 | 0.034 | -0.033 |
| SSIM | 1.170 | 0.547 | 0.279 | 0.227 | 0.923 | 0.408 | 0.238 | 0.306 | 1.279 | 0.540 | 0.252 | 0.070 |
| MS-SSIM | 0.992 | 0.574 | 0.276 | 0.163 | 1.032 | 0.490 | 0.078 | 0.168 | 1.607 | 0.571 | 0.312 | 0.227 |
| LPIPS | 0.982 | 0.370 | 0.246 | 0.338 | 0.915 | 0.552 | 0.200 | 0.251 | 1.265 | 0.632 | 0.390 | 0.325 |

| Defense type / Attack type | | No attack | FGSM, 2 | FGSM, 4 | FGSM, 6 | FGSM, 8 | FGSM, 10 | PGD, 2 | PGD, 4 | PGD, 6 | PGD, 8 | PGD, 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| No defense | | 0.738 | 0.327 | 0.281 | 0.252 | 0.227 | 0.205 | 0.171 | 0.053 | 0.021 | 0.009 | 0.005 |
| R-LPIPS [33] | | 0.728 | 0.471 | 0.445 | 0.427 | 0.414 | 0.405 | 0.279 | 0.163 | 0.114 | 0.091 | 0.077 |
| FGSM | 2 | 0.729 | 0.501 | 0.477 | 0.459 | 0.444 | 0.430 | 0.311 | 0.193 | 0.139 | 0.111 | 0.094 |
| FGSM | 4 | 0.725 | 0.500 | 0.476 | 0.458 | 0.444 | 0.430 | 0.318 | 0.200 | 0.147 | 0.117 | 0.099 |
| FGSM | 8 | 0.726 | 0.504 | 0.481 | 0.464 | 0.449 | 0.435 | 0.318 | 0.202 | 0.150 | 0.120 | 0.102 |
| FGSM | 10 | 0.727 | 0.505 | 0.484 | 0.467 | 0.451 | 0.439 | 0.317 | 0.203 | 0.150 | 0.120 | 0.103 |
| PGD-1 | 2 | 0.729 | 0.491 | 0.466 | 0.447 | 0.430 | 0.415 | 0.307 | 0.184 | 0.129 | 0.100 | 0.083 |
| PGD-1 | 4 | 0.727 | 0.500 | 0.476 | 0.459 | 0.445 | 0.431 | 0.317 | 0.197 | 0.144 | 0.114 | 0.096 |
| PGD-1 | 8 | 0.724 | 0.516 | 0.497 | 0.483 | 0.472 | 0.461 | 0.323 | 0.210 | 0.161 | 0.132 | 0.115 |
| PGD-1 | 10 | 0.721 | 0.518 | 0.499 | 0.485 | 0.472 | 0.463 | 0.326 | 0.214 | 0.164 | 0.135 | 0.118 |
| APGD-2 | 2 | 0.733 | 0.483 | 0.454 | 0.435 | 0.418 | 0.403 | 0.297 | 0.175 | 0.121 | 0.094 | 0.077 |
| APGD-2 | 4 | 0.726 | 0.498 | 0.473 | 0.455 | 0.442 | 0.428 | 0.318 | 0.202 | 0.150 | 0.120 | 0.103 |
| APGD-2 | 8 | 0.722 | 0.512 | 0.490 | 0.475 | 0.462 | 0.449 | 0.332 | 0.224 | 0.175 | 0.149 | 0.131 |
| APGD-2 | 10 | 0.722 | 0.514 | 0.494 | 0.479 | 0.467 | 0.457 | 0.332 | 0.228 | 0.180 | 0.154 | 0.139 |

| Defense type / Attack type | | No attack | FGSM, 2 | FGSM, 4 | FGSM, 6 | FGSM, 8 | FGSM, 10 | PGD, 2 | PGD, 4 | PGD, 6 | PGD, 8 | PGD, 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| No defense | | 0.887 | 0.873 | 0.866 | 0.849 | 0.819 | 0.778 | 0.625 | 0.459 | 0.338 | 0.245 | 0.169 |
| R-LPIPS [33] | | 0.869 | 0.865 | 0.861 | 0.856 | 0.828 | 0.801 | 0.821 | 0.771 | 0.717 | 0.666 | 0.608 |
| FGSM | 2 | 0.802 | 0.795 | 0.792 | 0.782 | 0.767 | 0.745 | 0.679 | 0.631 | 0.592 | 0.548 | 0.499 |
| FGSM | 4 | 0.806 | 0.799 | 0.796 | 0.786 | 0.771 | 0.748 | 0.685 | 0.636 | 0.596 | 0.553 | 0.503 |
| FGSM | 8 | 0.804 | 0.796 | 0.793 | 0.783 | 0.766 | 0.743 | 0.668 | 0.616 | 0.575 | 0.528 | 0.476 |
| FGSM | 10 | 0.805 | 0.797 | 0.793 | 0.783 | 0.766 | 0.743 | 0.659 | 0.605 | 0.557 | 0.507 | 0.453 |
| PGD-1 | 2 | 0.865 | 0.862 | 0.86 | 0.854 | 0.84 | 0.82 | 0.828 | 0.8 | 0.765 | 0.725 | 0.685 |
| PGD-1 | 4 | 0.859 | 0.856 | 0.854 | 0.849 | 0.838 | 0.822 | 0.829 | 0.811 | 0.787 | 0.758 | 0.727 |
| PGD-1 | 8 | 0.852 | 0.849 | 0.847 | 0.842 | 0.834 | 0.822 | 0.828 | 0.819 | 0.806 | 0.788 | 0.767 |
| PGD-1 | 10 | 0.847 | 0.844 | 0.842 | 0.838 | 0.83 | 0.819 | 0.823 | 0.815 | 0.803 | 0.787 | 0.769 |
| APGD-2 | 2 | 0.837 | 0.832 | 0.829 | 0.820 | 0.803 | 0.780 | 0.749 | 0.703 | 0.658 | 0.611 | 0.562 |
| APGD-2 | 4 | 0.830 | 0.825 | 0.822 | 0.814 | 0.800 | 0.779 | 0.752 | 0.716 | 0.682 | 0.645 | 0.605 |
| APGD-2 | 8 | 0.818 | 0.813 | 0.810 | 0.802 | 0.787 | 0.766 | 0.736 | 0.701 | 0.668 | 0.633 | 0.595 |
| APGD-2 | 10 | 0.817 | 0.812 | 0.808 | 0.800 | 0.785 | 0.764 | 0.730 | 0.695 | 0.660 | 0.623 | 0.585 |

References

- Antsiferova, A.; Abud, K.; Gushchin, A.; Shumitskaya, E.; Lavrushkin, S.; Vatolin, D. Comparing the robustness of modern no-reference image- and video-quality metrics to adversarial attacks. In Proceedings of the AAAI Conference on Artificial Intelligence, 2024, Vol. 38, pp. 700–708. [CrossRef]

- Meftah, H.F.B.; Fezza, S.A.; Hamidouche, W.; Déforges, O. Evaluating the Vulnerability of Deep Learning-based Image Quality Assessment Methods to Adversarial Attacks. In Proceedings of the 2023 11th European Workshop on Visual Information Processing (EUVIP). IEEE, 2023, pp. 1–6. [CrossRef]

- Wang, H.; Zhang, W.; Ren, P. Self-organized underwater image enhancement. ISPRS Journal of Photogrammetry and Remote Sensing 2024, 215, 1–14. [CrossRef]

- Zhou, J.; Pang, L.; Zhang, D.; Zhang, W. Underwater image enhancement method via multi-interval subhistogram perspective equalization. IEEE Journal of Oceanic Engineering 2023, 48, 474–488. [CrossRef]

- Wu, Y.; Pan, C.; Wang, G.; Yang, Y.; Wei, J.; Li, C.; Shen, H.T. Learning semantic-aware knowledge guidance for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 1662–1671.

- Korhonen, J.; You, J. Adversarial attacks against blind image quality assessment models. In Proceedings of the 2nd Workshop on Quality of Experience in Visual Multimedia Applications, 2022, pp. 3–11. [CrossRef]

- Zhang, W.; Li, D.; Min, X.; Zhai, G.; Guo, G.; Yang, X.; Ma, K. Perceptual Attacks of No-Reference Image Quality Models with Human-in-the-Loop. Advances in Neural Information Processing Systems 2022, 35, 2916–2929.

- Shumitskaya, E.; Antsiferova, A.; Vatolin, D.S. Universal Perturbation Attack on Differentiable No-Reference Image- and Video-Quality Metrics. In Proceedings of the 33rd British Machine Vision Conference 2022, BMVC 2022, London, UK, November 21-24, 2022. BMVA Press, 2022.

- Shumitskaya, E.; Antsiferova, A.; Vatolin, D.S. Fast Adversarial CNN-based Perturbation Attack on No-Reference Image- and Video-Quality Metrics. In Proceedings of The First Tiny Papers Track at ICLR 2023, Tiny Papers @ ICLR 2023, Kigali, Rwanda, May 5, 2023; Maughan, K.; Liu, R.; Burns, T.F., Eds. OpenReview.net, 2023.

- Shumitskaya, E.; Antsiferova, A.; Vatolin, D. IOI: Invisible One-Iteration Adversarial Attack on No-Reference Image- and Video-Quality Metrics. arXiv preprint arXiv:2403.05955 2024.

- Duanmu, Z.; Liu, W.; Wang, Z.; Wang, Z. Quantifying visual image quality: A bayesian view. Annual Review of Vision Science 2021, 7, 437–464. [CrossRef]

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: from error visibility to structural similarity. IEEE transactions on image processing 2004, 13, 600–612. [CrossRef]

- Sheikh, H.R.; Bovik, A.C.; De Veciana, G. An information fidelity criterion for image quality assessment using natural scene statistics. IEEE Transactions on image processing 2005, 14, 2117–2128. [CrossRef]

- Antsiferova, A.; Lavrushkin, S.; Smirnov, M.; Gushchin, A.; Vatolin, D.; Kulikov, D. Video compression dataset and benchmark of learning-based video-quality metrics. Advances in Neural Information Processing Systems 2022, 35, 13814–13825.

- Tu, Z.; Wang, Y.; Birkbeck, N.; Adsumilli, B.; Bovik, A.C. UGC-VQA: Benchmarking blind video quality assessment for user generated content. IEEE Transactions on Image Processing 2021, 30, 4449–4464. [CrossRef]

- Zhu, H.; Li, L.; Wu, J.; Dong, W.; Shi, G. MetaIQA: Deep Meta-Learning for No-Reference Image Quality Assessment. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Jun. 2020, pp. 14143–14152.

- Hosu, V.; Lin, H.; Sziranyi, T.; Saupe, D. KonIQ-10k: An Ecologically Valid Database for Deep Learning of Blind Image Quality Assessment. IEEE Transactions on Image Processing 2020, 29, 4041–4056. https://doi.org/10.1109/tip.2020.2967829. [CrossRef]

- Su, S.; Yan, Q.; Zhu, Y.; Zhang, C.; Ge, X.; Sun, J.; Zhang, Y. Blindly Assess Image Quality in the Wild Guided by a Self-Adaptive Hyper Network. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2020, pp. 3664–3673.

- Su, Y.; Korhonen, J. Blind Natural Image Quality Prediction Using Convolutional Neural Networks And Weighted Spatial Pooling. In Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP), 2020, pp. 191–195. https://doi.org/10.1109/ICIP40778.2020.9190789. [CrossRef]

- Li, D.; Jiang, T.; Jiang, M. Norm-in-Norm Loss with Faster Convergence and Better Performance for Image Quality Assessment. In Proceedings of the 28th ACM International Conference on Multimedia. ACM, 2020, MM ’20. https://doi.org/10.1145/3394171.3413804. [CrossRef]

- Ke, J.; Wang, Q.; Wang, Y.; Milanfar, P.; Yang, F. MUSIQ: Multi-Scale Image Quality Transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), October 2021, pp. 5148–5157.

- Yang, S.; Wu, T.; Shi, S.; Lao, S.; Gong, Y.; Cao, M.; Wang, J.; Yang, Y. MANIQA: Multi-dimension Attention Network for No-Reference Image Quality Assessment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 1191–1200.

- Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.; Fergus, R. Intriguing properties of neural networks, 2014, [arXiv:cs.CV/1312.6199].

- Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and Harnessing Adversarial Examples. CoRR 2014, abs/1412.6572.

- Madry, A.; Makelov, A.; Schmidt, L.; Tsipras, D.; Vladu, A. Towards Deep Learning Models Resistant to Adversarial Attacks. ArXiv 2017, abs/1706.06083.

- Wong, E.; Rice, L.; Kolter, J.Z. Fast is better than free: Revisiting adversarial training. ArXiv 2020, abs/2001.03994.

- Singh, N.D.; Croce, F.; Hein, M. Revisiting Adversarial Training for ImageNet: Architectures, Training and Generalization across Threat Models. ArXiv 2023, abs/2303.01870.

- Croce, F.; Hein, M. Reliable evaluation of adversarial robustness with an ensemble of diverse parameter-free attacks. In Proceedings of the International Conference on Machine Learning, 2020.

- Li, Z. On VMAF’s property in the presence of image enhancement operations, 2021.

- Siniukov, M.; Antsiferova, A.; Kulikov, D.; Vatolin, D. Hacking VMAF and VMAF NEG: vulnerability to different preprocessing methods. In Proceedings of the 2021 4th Artificial Intelligence and Cloud Computing Conference, 2021, pp. 89–96. [CrossRef]

- Kettunen, M.; Härkönen, E.; Lehtinen, J. E-LPIPS: Robust Perceptual Image Similarity via Random Transformation Ensembles, 2019, [arXiv:cs.CV/1906.03973].

- Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The Unreasonable Effectiveness of Deep Features as a Perceptual Metric, 2018, [arXiv:cs.CV/1801.03924].

- Ghazanfari, S.; Garg, S.; Krishnamurthy, P.; Khorrami, F.; Araujo, A. R-LPIPS: An Adversarially Robust Perceptual Similarity Metric, 2023, [arXiv:cs.CV/2307.15157].

- Liu, Y.; Yang, C.; Li, D.; Ding, J.; Jiang, T. Defense Against Adversarial Attacks on No-Reference Image Quality Models with Gradient Norm Regularization, 2024, [arXiv:cs.CV/2403.11397].

- Wang, Z.; Simoncelli, E.P.; Bovik, A.C. Multiscale structural similarity for image quality assessment. In Proceedings of the Thirty-Seventh Asilomar Conference on Signals, Systems & Computers, 2003. IEEE, 2003, Vol. 2, pp. 1398–1402. [CrossRef]

- K, A.; Hamner, B.; Goodfellow, I. NIPS 2017: Adversarial Learning Development Set. https://www.kaggle.com/datasets/google-brain/nips2017-adversarial-learning-development-set, 2017.

- Siniukov, M.; Kulikov, D.; Vatolin, D. Applicability limitations of differentiable full-reference image-quality metrics. In Proceedings of the 2023 Data Compression Conference (DCC). IEEE, 2023, pp. 1–1. [CrossRef]

- Lin, H.; Hosu, V.; Saupe, D. KADID-10k: A Large-scale Artificially Distorted IQA Database. In Proceedings of the 2019 Eleventh International Conference on Quality of Multimedia Experience (QoMEX), 2019, pp. 1–3. https://doi.org/10.1109/QoMEX.2019.8743252. [CrossRef]

- Carlini, N.; Athalye, A.; Papernot, N.; Brendel, W.; Rauber, J.; Tsipras, D.; Goodfellow, I.; Madry, A.; Kurakin, A. On evaluating adversarial robustness. arXiv preprint arXiv:1902.06705 2019. [CrossRef]

- Korotin, A.; Selikhanovych, D.; Burnaev, E. Neural Optimal Transport. CoRR 2022, abs/2201.12220, [2201.12220]. [CrossRef]

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE conference on computer vision and pattern recognition. IEEE, 2009, pp. 248–255. [CrossRef]

- Zhao, Z.; Liu, Z.; Larson, M. Adversarial Color Enhancement: Generating Unrestricted Adversarial Images by Optimizing a Color Filter, 2020, [arXiv:cs.CV/2002.01008]. [CrossRef]

- Kurakin, A.; Goodfellow, I.J.; Bengio, S. Adversarial examples in the physical world. CoRR 2016, abs/1607.02533, [1607.02533].

- Dong, Y.; Liao, F.; Pang, T.; Hu, X.; Zhu, J. Discovering Adversarial Examples with Momentum. CoRR 2017, abs/1710.06081, [1710.06081].

- Sang, Q.; Zhang, H.; Liu, L.; Wu, X.; Bovik, A.C. On the generation of adversarial examples for image quality assessment. The Visual Computer 2023, pp. 1–16. [CrossRef]

- Zhang, Z.; Qiao, K.; Jiang, L.; Wang, L.; Yan, B. AdvJND: Generating Adversarial Examples with Just Noticeable Difference, 2020, [arXiv:cs.CV/2002.00179]. [CrossRef]

- Wang, Z.; Simoncelli, E.P. Maximum differentiation (MAD) competition: a methodology for comparing computational models of perceptual quantities. Journal of vision 2008, 8 12, 8.1–13. [CrossRef]

- Karli, B.T.; Sen, D.; Temizel, A. Improving Perceptual Quality of Adversarial Images Using Perceptual Distance Minimization and Normalized Variance Weighting. 2021.

- Luo, C.; Lin, Q.; Xie, W.; Wu, B.; Xie, J.; Shen, L. Frequency-Driven Imperceptible Adversarial Attack on Semantic Similarity. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2022, pp. 15315–15324.

- Bhattad, A.; Chong, M.J.; Liang, K.; Li, B.; Forsyth, D.A. Unrestricted Adversarial Examples via Semantic Manipulation, 2020, [arXiv:cs.CV/1904.06347].

- Xiao, C.; Zhu, J.Y.; Li, B.; He, W.; Liu, M.; Song, D. Spatially Transformed Adversarial Examples, 2018, [arXiv:cs.CR/1801.02612]. [CrossRef]

- Su, J.; Vargas, D.V.; Sakurai, K. One Pixel Attack for Fooling Deep Neural Networks. IEEE Transactions on Evolutionary Computation 2019, 23, 828–841. https://doi.org/10.1109/tevc.2019.2890858. [CrossRef]

| Training strategy | SROCC | IR-score ↑ (FGSM, KonIQ-10K) | IR-score ↑ (FGSM, NIPS2017) | IR-score ↑ (PGD-10, KonIQ-10K) | IR-score ↑ (PGD-10, NIPS2017) |
|---|---|---|---|---|---|
| AT | 0.784 | 1.001 | 1.011 | 0.424 | 0.323 |
| + pretr. | 0.717 | 1.554 | 1.685 | 0.565 | 0.603 |
| + clean | 0.924 | 2.092 | 2.218 | 0.516 | 0.527 |
| + clean, pretr. | 0.925 | 1.984 | 2.248 | 0.454 | 0.451 |

| Training strategy | SROCC | IR-score ↑ (FGSM) | IR-score ↑ (PGD-10) |
|---|---|---|---|
| AT | 0.668 | 0.665 | 0.597 |
| + pretr. | 0.817 | 0.801 | 0.732 |
| + clean | 0.701 | 0.696 | 0.621 |
| + clean, pretr. | 0.832 | 0.872 | 0.765 |

| Label strategy | SROCC (trained with FGSM) | SROCC (trained with PGD-1) | SROCC (trained with APGD-2) | IR-score ↑ (trained with PGD-1) | | |
|---|---|---|---|---|---|---|
| – | 0.920 | 0.917 | 0.858 | 0.848 | 1.231 | 1.013 |
| Min | 0.908 | 0.911 | 0.870 | 6.252 | 5.730 | 4.253 |
| -5% | 0.920 | 0.922 | 0.882 | 1.331 | 1.301 | 0.773 |
| -10% | 0.922 | 0.923 | 0.901 | 1.819 | 1.637 | 1.264 |
| PSNR | 0.906 | 0.907 | 0.922 | 5.938 | 5.872 | 4.504 |
| SSIM | 0.917 | 0.922 | 0.886 | 1.350 | 1.905 | 2.458 |
| MS-SSIM | 0.917 | 0.918 | 0.878 | 1.002 | 1.384 | 1.165 |
| LPIPS | 0.920 | 0.921 | 0.906 | 1.857 | 2.608 | 2.679 |
| Avg | 0.916 | 0.918 | 0.888 | | | |

| FR-IQA model | Train | SROCC | IR-score ↑ (FGSM) | IR-score ↑ (PGD-10) |
|---|---|---|---|---|
| R-LPIPS [33] | | 0.858 | 0.791 | 0.777 |
| AT-LPIPS (FGSM) | 2 / 255 | 0.855 | 0.804 | 0.782 |
| AT-LPIPS (FGSM) | 4 / 255 | 0.843 | 0.811 | 0.791 |
| AT-LPIPS (FGSM) | 8 / 255 | 0.845 | 0.807 | 0.786 |
| AT-LPIPS (FGSM) | 10 / 255 | 0.832 | 0.792 | 0.775 |
| AT-LPIPS (PGD-1) | 2 / 255 | 0.848 | 0.817 | 0.801 |
| AT-LPIPS (PGD-1) | 4 / 255 | 0.837 | 0.821 | 0.808 |
| AT-LPIPS (PGD-1) | 8 / 255 | 0.856 | 0.814 | 0.799 |
| AT-LPIPS (PGD-1) | 10 / 255 | 0.849 | 0.802 | 0.783 |
| AT-LPIPS (APGD-2) | 2 / 255 | 0.841 | 0.802 | 0.788 |
| AT-LPIPS (APGD-2) | 4 / 255 | 0.834 | 0.805 | 0.791 |
| AT-LPIPS (APGD-2) | 8 / 255 | 0.830 | 0.799 | 0.783 |
| AT-LPIPS (APGD-2) | 10 / 255 | 0.835 | 0.788 | 0.775 |

| NR-IQA model | | SROCC ↑ | R [7] (FGSM, KonIQ) | R [7] (FGSM, NIPS) | R [7] (PGD-10, KonIQ) | R [7] (PGD-10, NIPS) | IR-score ↑ (FGSM, KonIQ) | IR-score ↑ (FGSM, NIPS) | IR-score ↑ (PGD-10, KonIQ) | IR-score ↑ (PGD-10, NIPS) | Train. time (min) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| LinearityIQA [20] | base | 0.931 | 0.699 | 0.780 | 0.182 | 0.266 | - | - | - | - | 73 |
| LinearityIQA [20] | AT | 0.824 | 1.507 | 1.628 | 1.485 | 1.573 | 0.162 | 0.069 | 0.901 | 0.918 | 237 |
| LinearityIQA [20] | NT [34] | 0.930 | 0.797 | 0.844 | 0.304 | 0.382 | 0.029 | -0.356 | 0.239 | 0.227 | 79 |
| LinearityIQA [20] | AT (ours) | 0.922 | 1.555 | 1.567 | 0.731 | 0.921 | 1.020 | 1.366 | 0.657 | 0.726 | 275 |
| KonCept512 [17] | base | 0.925 | 0.706 | 0.620 | 0.353 | 0.033 | - | - | - | - | 93 |
| KonCept512 [17] | AT | 0.868 | 1.246 | 1.383 | 0.873 | 1.135 | 0.547 | 0.850 | 0.657 | 0.957 | 222 |
| KonCept512 [17] | NT [34] | 0.822 | 1.459 | 1.246 | 1.096 | 1.008 | 0.687 | 0.784 | 0.774 | 0.946 | 101 |
| KonCept512 [17] | AT (ours) | 0.913 | 1.265 | 1.416 | 0.632 | 0.726 | 1.044 | 0.900 | 0.538 | 0.831 | 255 |
| HyperIQA [18] | base | 0.894 | 0.531 | 0.505 | -0.168 | -0.090 | - | - | - | - | 133 |
| HyperIQA [18] | AT | 0.778 | 1.624 | 1.779 | 1.376 | 1.634 | 0.547 | 0.682 | 0.945 | 0.961 | 141 |
| HyperIQA [18] | NT [34] | 0.846 | 1.093 | 1.013 | 0.742 | 0.733 | 0.243 | 0.043 | 0.826 | 0.775 | 219 |
| HyperIQA [18] | AT (ours) | 0.891 | 0.848 | 1.625 | 0.622 | 1.010 | 1.385 | 1.413 | 0.762 | 0.911 | 163 |
| WSP-IQA [19] | base | 0.916 | 0.618 | 0.711 | 0.124 | 0.285 | - | - | - | - | 68 |
| WSP-IQA [19] | AT | 0.882 | 0.948 | 1.140 | 0.405 | 0.609 | 0.534 | 0.662 | 0.459 | 0.506 | 76 |
| WSP-IQA [19] | NT [34] | 0.915 | 0.644 | 0.742 | 0.109 | 0.280 | 0.279 | 0.243 | -0.094 | -0.109 | 92 |
| WSP-IQA [19] | AT (ours) | 0.899 | 1.135 | 1.224 | 0.319 | 0.461 | 1.352 | 1.428 | 0.355 | 0.317 | 117 |

| LPIPS version | SROCC | 2AFC ↑ (No attack) | 2AFC ↑ (FGSM) | 2AFC ↑ (PGD-10) | R [7] (FGSM) | R [7] (PGD-10) | IR-score ↑ (FGSM) | IR-score ↑ (PGD-10) | Train. time (min) |
|---|---|---|---|---|---|---|---|---|---|
| base LPIPS | 0.893 | 0.742 | 0.260 | 0.102 | 0.525 | 0.311 | - | - | 46 |
| R-LPIPS [33] | 0.858 | 0.741 | 0.487 | 0.306 | 0.701 | 0.658 | 0.791 | 0.777 | 663 |
| AT-LPIPS (ours) | 0.852 | 0.742 | 0.495 | 0.440 | 0.753 | 0.737 | 0.817 | 0.801 | 101 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).