Submitted: 24 October 2024

Posted: 25 October 2024

Abstract

Keywords:

1. Introduction

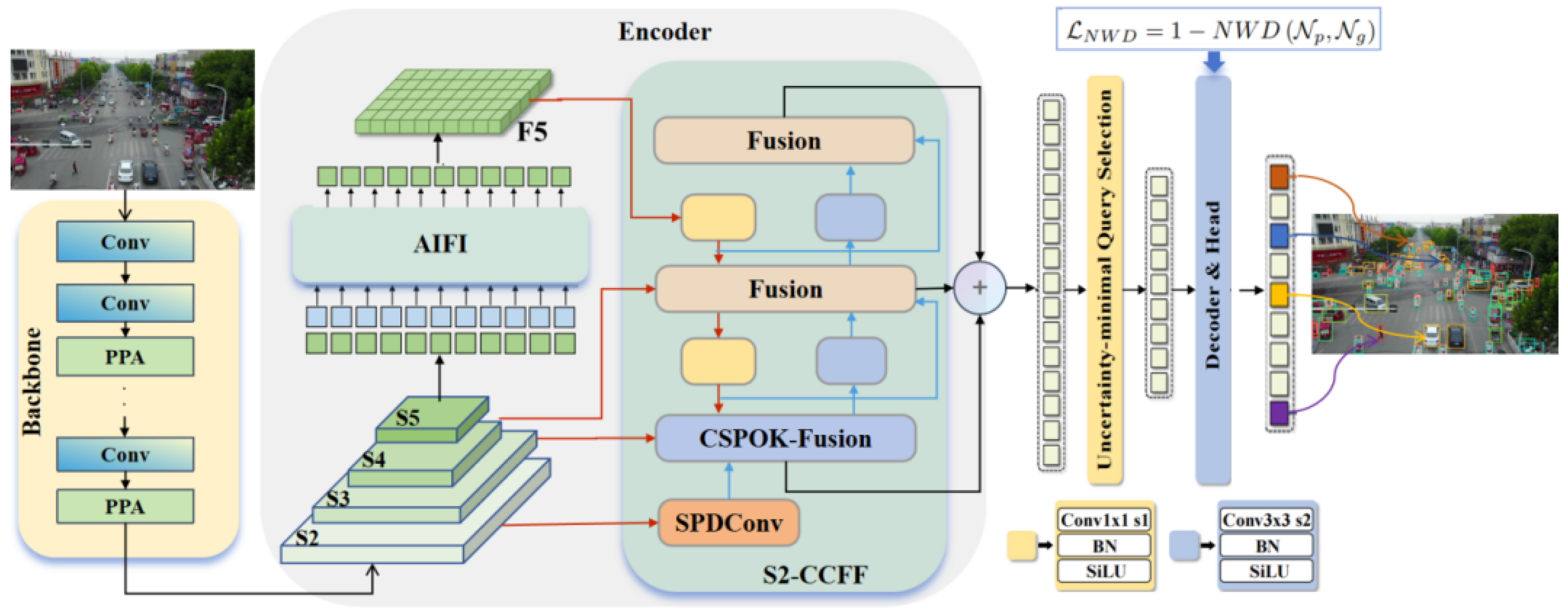

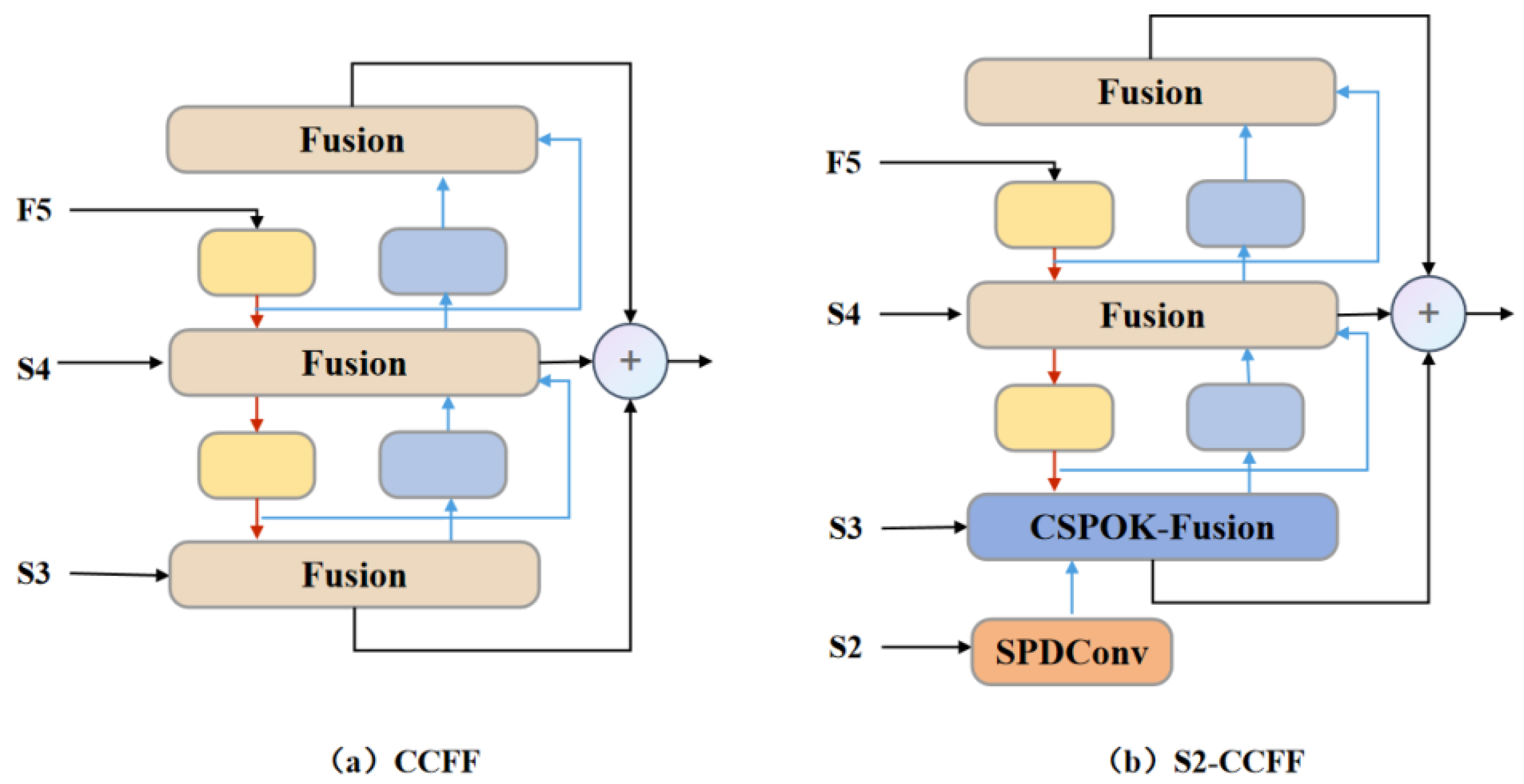

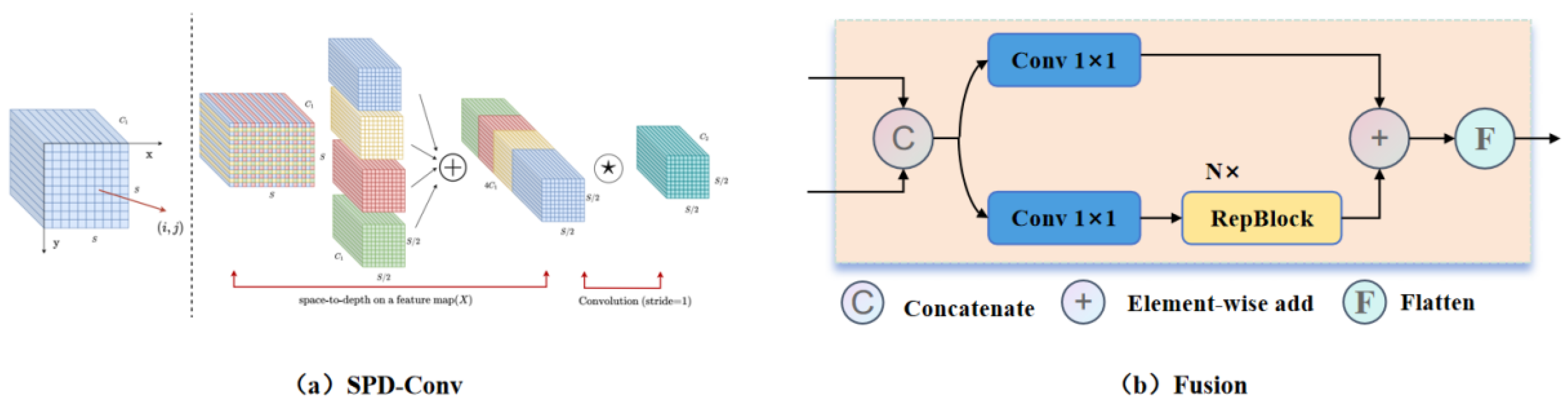

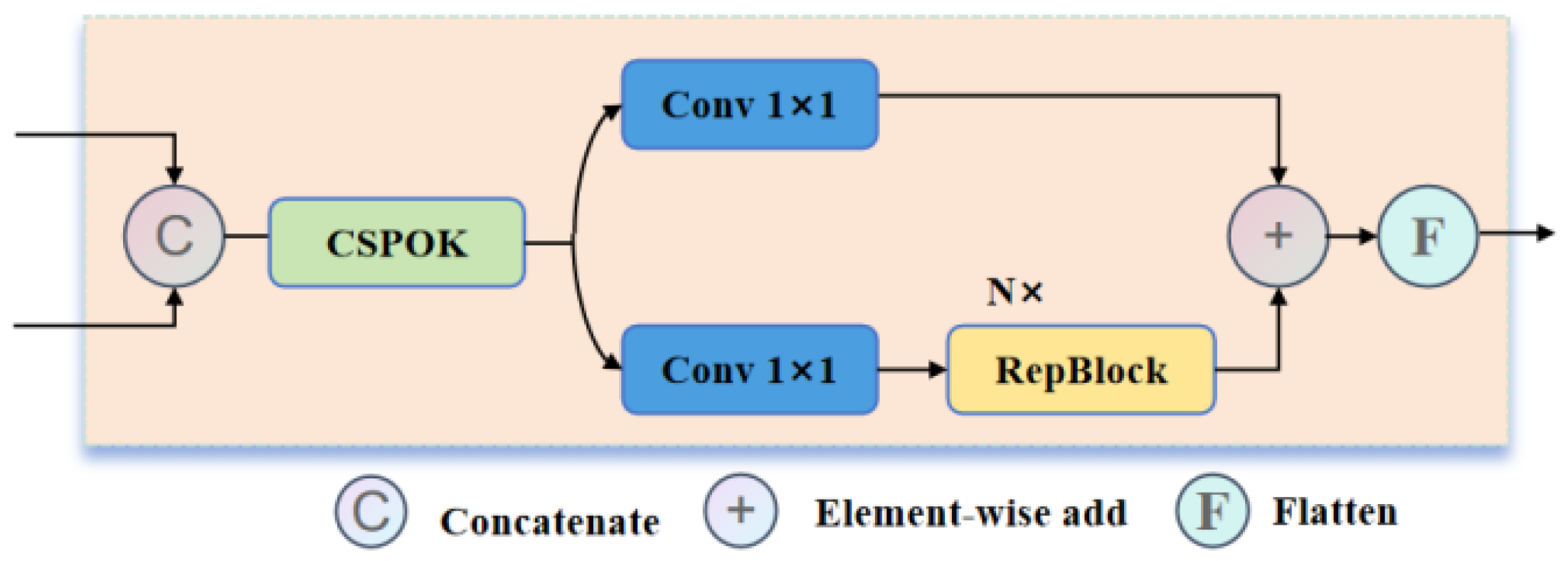

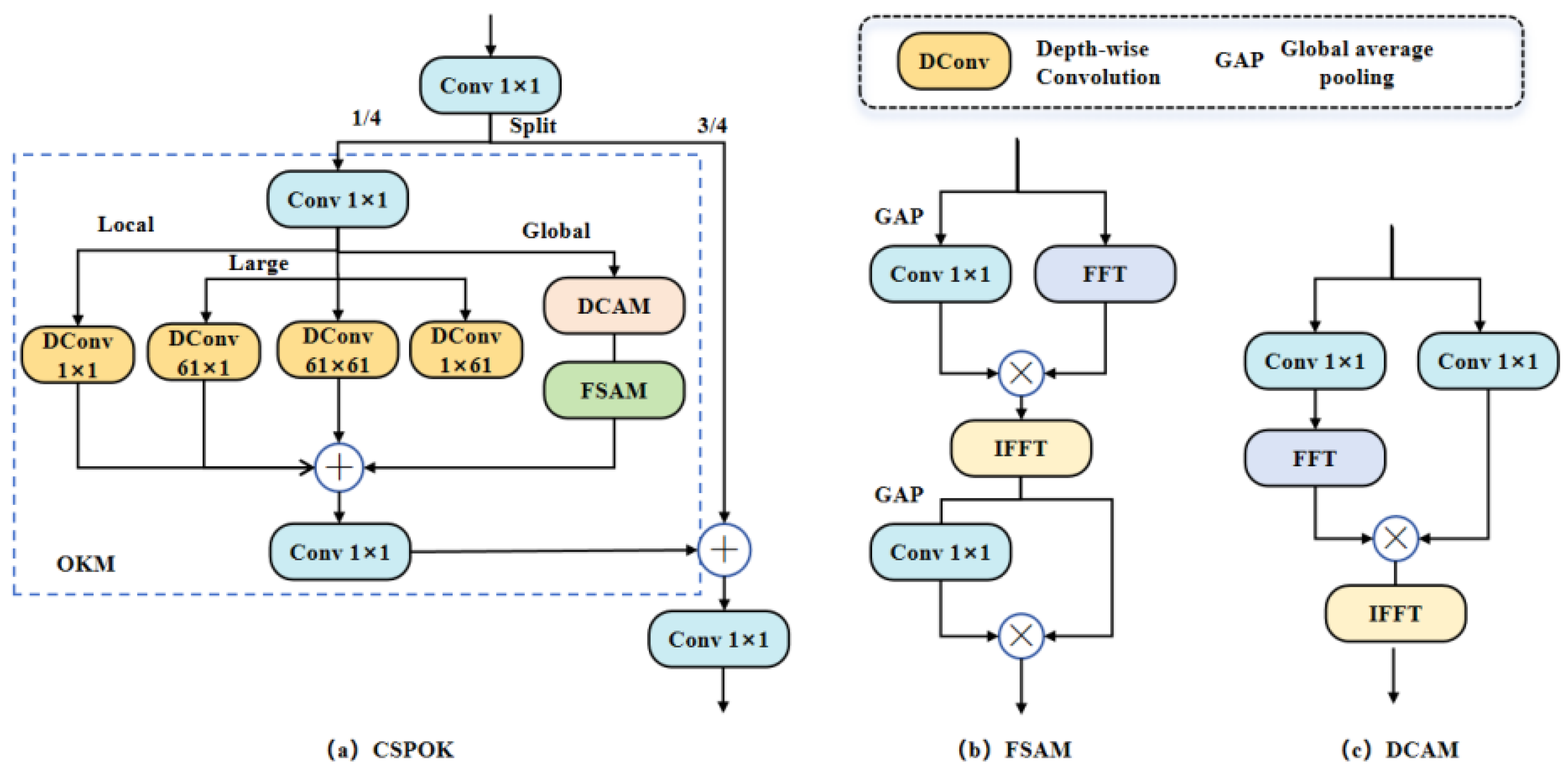

- We propose the S2-CCFF module, which adds an S2 layer to cross-scale feature fusion to enrich small-object information. To limit the information loss caused by conventional downsampling, the S2 layer is spatially downsampled with SPDConv, which preserves key details while reducing computational complexity. We also design the CSPOK-Fusion module, which integrates multi-scale features across global, local, and large branches. It suppresses noise, captures feature representations from global to local scales, and mitigates complex background interference and occlusion, thereby improving detection accuracy.

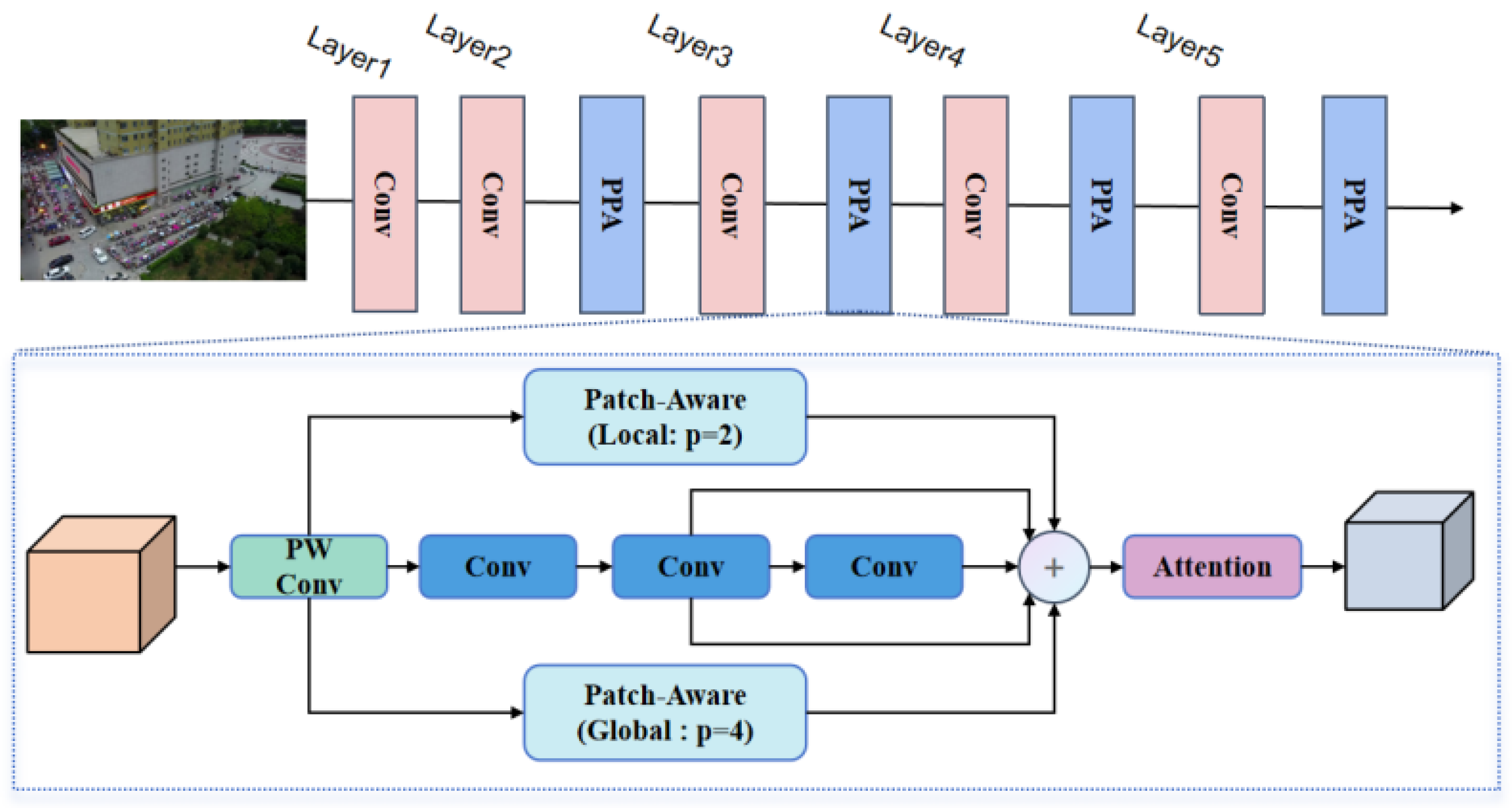

- We incorporate the PPA module into the backbone network. It uses multi-level feature fusion and attention mechanisms to preserve and enhance small-object representations, so that key information is retained across multiple downsampling stages. This mitigates small-object information loss and improves subsequent detection accuracy.

- To address the low tolerance to bounding box perturbations, we introduce the NWD [14] loss function, which better captures differences in the relative position, shape, and size of bounding boxes. Rather than relying solely on overlap, it focuses on the relative positional relationship between boxes and is therefore more tolerant of minor bounding box perturbations.
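The tolerance argument in the last contribution can be illustrated with a small sketch. It follows the normalized Wasserstein distance as commonly defined in the tiny-object literature [14]: each box `(cx, cy, w, h)` is modelled as a 2D Gaussian, and the distance between two Gaussians is mapped to `(0, 1]` with an exponential. The constant `c` and the `1 - NWD` loss form are assumptions made for illustration; the values and exact loss used in this paper are not stated in the surviving text.

```python
import math


def nwd(box_a, box_b, c=12.8):
    """Normalized Wasserstein distance between two (cx, cy, w, h) boxes.

    Each box is treated as a Gaussian with mean (cx, cy) and covariance
    diag(w^2 / 4, h^2 / 4). `c` is a dataset-dependent normalizer; the
    default here is a placeholder assumption, not a value from the paper.
    """
    cxa, cya, wa, ha = box_a
    cxb, cyb, wb, hb = box_b
    # Squared 2-Wasserstein distance between the two Gaussians.
    w2_sq = ((cxa - cxb) ** 2 + (cya - cyb) ** 2
             + ((wa - wb) / 2) ** 2 + ((ha - hb) / 2) ** 2)
    return math.exp(-math.sqrt(w2_sq) / c)


def nwd_loss(box_a, box_b, c=12.8):
    """A common loss form (assumed here): 1 - NWD."""
    return 1.0 - nwd(box_a, box_b, c)


def iou(box_a, box_b):
    """Plain IoU for (cx, cy, w, h) boxes, used only for comparison."""
    ax1, ax2 = box_a[0] - box_a[2] / 2, box_a[0] + box_a[2] / 2
    ay1, ay2 = box_a[1] - box_a[3] / 2, box_a[1] + box_a[3] / 2
    bx1, bx2 = box_b[0] - box_b[2] / 2, box_b[0] + box_b[2] / 2
    by1, by2 = box_b[1] - box_b[3] / 2, box_b[1] + box_b[3] / 2
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = box_a[2] * box_a[3] + box_b[2] * box_b[3] - inter
    return inter / union


# A 4x4 box shifted by 4 pixels no longer overlaps its original position.
tiny = (10.0, 10.0, 4.0, 4.0)
shifted = (14.0, 10.0, 4.0, 4.0)
print(iou(tiny, shifted), nwd(tiny, shifted))
```

For the shifted tiny box, IoU drops to zero and gives no gradient signal about how far apart the boxes are, whereas NWD still decreases smoothly with the offset.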

2. Related Work

2.1. Convolutional Neural Network-Based Detection Methods

2.2. Transformer-Based Detection Methods

2.3. Detection Methods for Small Objects in Aerial Images

3. Methodologies

3.1. Overall Architecture

3.2. S2-CCFF Module

3.2.1. Module Structure
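The contribution list states that the S2 layer is downsampled with SPDConv to avoid the information loss of conventional downsampling. As a rough illustration, SPDConv as described in [49] pairs a space-to-depth rearrangement with a non-strided convolution. The sketch below shows only the rearrangement step on plain nested lists; the function name, the channel ordering, and the list-based layout are choices made for this example and are not taken from the paper's implementation.

```python
def space_to_depth(x, scale=2):
    """Rearrange a [C][H][W] nested list into [C*scale^2][H/scale][W/scale].

    Every input value is kept: spatial resolution is traded for channels
    instead of being discarded by a stride or a pooling window.
    """
    channels, height, width = len(x), len(x[0]), len(x[0][0])
    if height % scale or width % scale:
        raise ValueError("height and width must be divisible by scale")
    out = []
    for dy in range(scale):          # row offset inside each scale x scale cell
        for dx in range(scale):      # column offset inside each cell
            for c in range(channels):
                out.append([[x[c][i][j] for j in range(dx, width, scale)]
                            for i in range(dy, height, scale)])
    return out


# One 4x4 channel becomes four 2x2 channels holding the same 16 values.
feature = [[[0, 1, 2, 3],
            [4, 5, 6, 7],
            [8, 9, 10, 11],
            [12, 13, 14, 15]]]
rearranged = space_to_depth(feature)
print(len(rearranged), rearranged[0])
```

In a full SPDConv block, a stride-1 convolution would then mix the enlarged channel dimension back down to the desired width; that step is omitted here.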

3.2.2. CSPOK-Fusion Module

3.3. Backbone Network Structure

3.4. NWD Loss Function

4. Experiment

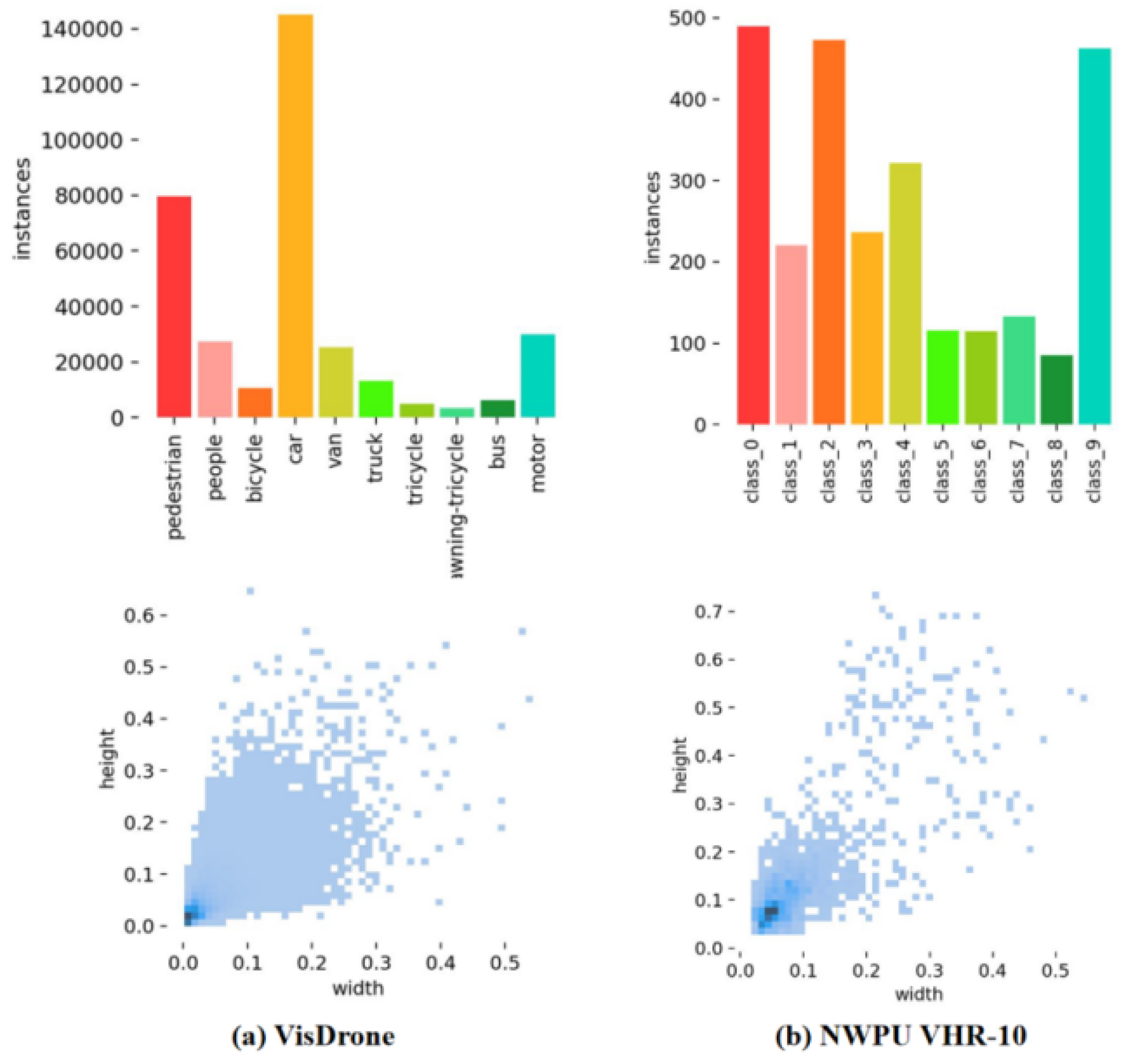

4.1. Dataset

4.2. Evaluation Metrics and Environment

4.2.1. Evaluation Metrics

4.2.2. Experimental Environment

| Parameter | Value |
|---|---|
| optimizer | AdamW |
| base_learning_rate | 0.0001 |
| weight_decay | 0.0001 |
| global_gradient_clip_norm | 0.1 |
| linear_warmup_steps | 2000 |
| minimum learning rate | 0.00001 |
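To make the interaction of these settings concrete, here is a minimal, framework-free sketch of a linear warmup and of global-norm gradient clipping using the values from the table. The table does not say how the learning rate decays after warmup or how the minimum learning rate is applied, so the sketch assumes the rate is simply held at the base value after warmup and floored at the minimum; both function names are hypothetical.

```python
import math

# Values copied from the table above.
CONFIG = {
    "optimizer": "AdamW",
    "base_learning_rate": 1e-4,
    "weight_decay": 1e-4,
    "global_gradient_clip_norm": 0.1,
    "linear_warmup_steps": 2000,
    "minimum_learning_rate": 1e-5,
}


def warmup_lr(step, cfg=CONFIG):
    """Linear warmup to the base LR, never below the minimum LR.

    Assumption: no decay after warmup, since the table does not name one.
    """
    frac = min(1.0, step / cfg["linear_warmup_steps"])
    return max(cfg["minimum_learning_rate"], cfg["base_learning_rate"] * frac)


def clip_by_global_norm(grads, max_norm, eps=1e-6):
    """Scale all gradients by one factor so their joint L2 norm <= max_norm."""
    total = math.sqrt(sum(g * g for tensor in grads for g in tensor))
    scale = min(1.0, max_norm / (total + eps))
    return [[g * scale for g in tensor] for tensor in grads]


# Two toy "parameter tensors" whose joint gradient norm is 5.0.
clipped = clip_by_global_norm([[3.0], [4.0]],
                              CONFIG["global_gradient_clip_norm"])
print(warmup_lr(0), warmup_lr(1000), warmup_lr(5000), clipped)
```

A clip norm of 0.1 is small, so under this reading most early updates would be rescaled rather than applied at their raw magnitude.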

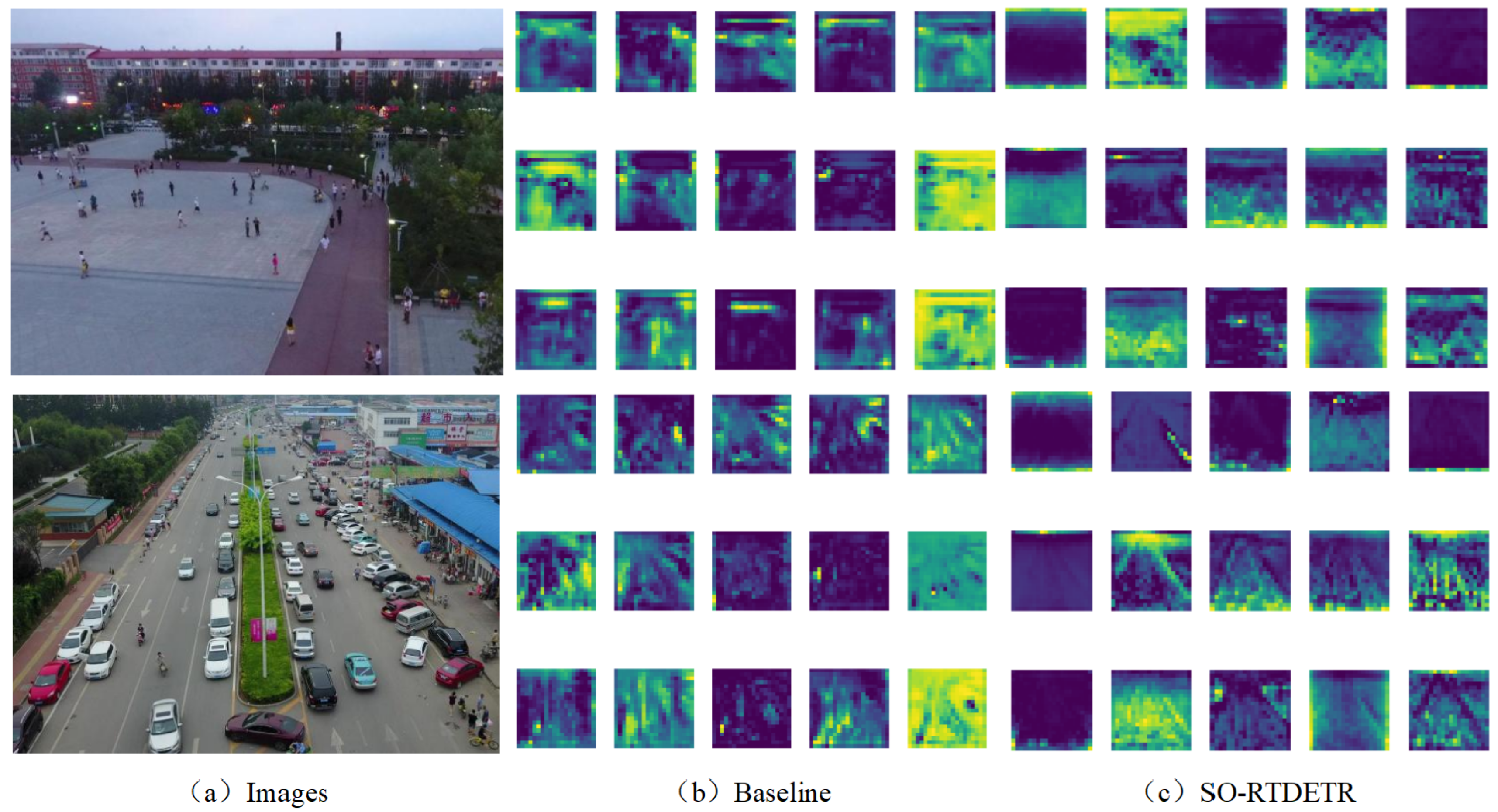

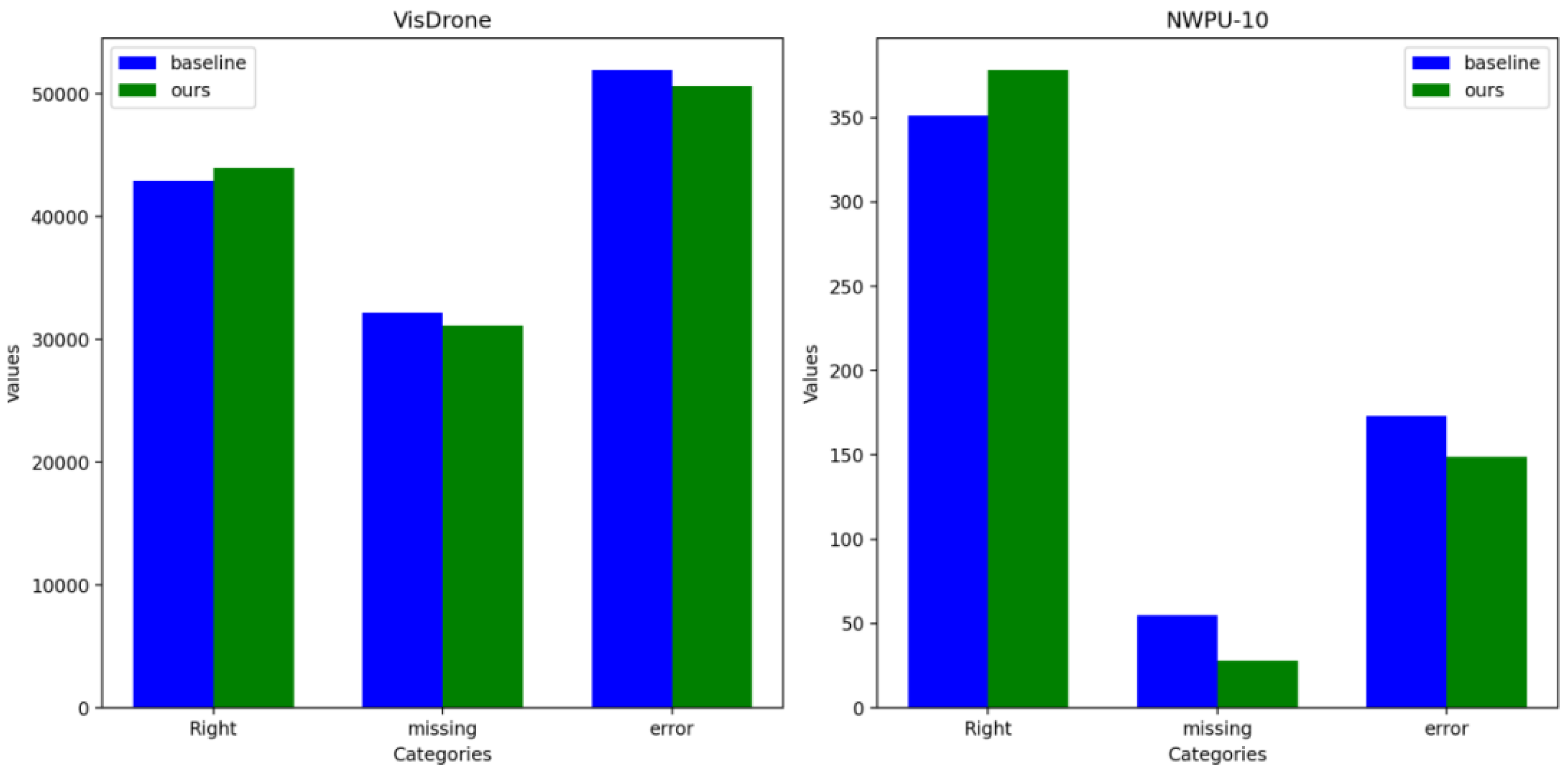

4.3. Ablation Study

4.4. Comparative Experiment

4.4.1. VisDrone

4.4.2. NWPU VHR-10

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

References

1. Vaswani, A. Attention is all you need. Advances in Neural Information Processing Systems 2017.

2. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. European conference on computer vision. Springer, 2020, pp. 213–229.

3. Girshick, R. Fast r-cnn. arXiv preprint arXiv:1504.08083, 2015.

4. Redmon, J. You only look once: Unified, real-time object detection. Proceedings of the IEEE conference on computer vision and pattern recognition, 2016.

5. Redmon, J. Yolov3: An incremental improvement. arXiv preprint arXiv:1804.02767, 2018.

6. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv preprint arXiv:2004.10934, 2020.

7. Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2023, pp. 7464–7475.

8. Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. Yolov9: Learning what you want to learn using programmable gradient information. arXiv preprint arXiv:2402.13616, 2024.

9. Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. Yolov10: Real-time end-to-end object detection. arXiv preprint arXiv:2405.14458, 2024.

10. Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable detr: Deformable transformers for end-to-end object detection. arXiv preprint arXiv:2010.04159, 2020.

11. Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. Detrs beat yolos on real-time object detection. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2024, pp. 16965–16974.

12. Miri Rekavandi, A.; Rashidi, S.; Boussaid, F.; Hoefs, S.; Akbas, E.; others. Transformers in Small Object Detection: A Benchmark and Survey of State-of-the-Art. arXiv e-prints 2023, pp. arXiv–2309.

13. Xiao, J.; Wu, Y.; Chen, Y.; Wang, S.; Wang, Z.; Ma, J. LSTFE-Net: Long Short-Term Feature Enhancement Network for Video Small Object Detection. 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023, pp. 14613–14622.

14. Xu, C.; Wang, J.; Yang, W.; Yu, H.; Yu, L.; Xia, G.S. Detecting tiny objects in aerial images: A normalized Wasserstein distance and a new benchmark. ISPRS Journal of Photogrammetry and Remote Sensing 2022, 190, 79–93.

15. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE transactions on pattern analysis and machine intelligence 2016, 39, 1137–1149.

16. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask r-cnn. Proceedings of the IEEE international conference on computer vision, 2017, pp. 2961–2969.

17. Cai, Z.; Vasconcelos, N. Cascade R-CNN: High quality object detection and instance segmentation. IEEE transactions on pattern analysis and machine intelligence 2019, 43, 1483–1498.

18. Pang, J.; Chen, K.; Shi, J.; Feng, H.; Ouyang, W.; Lin, D. Libra r-cnn: Towards balanced learning for object detection. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 821–830.

19. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. Ssd: Single shot multibox detector. Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part I 14. Springer, 2016, pp. 21–37.

20. Lin, T. Focal Loss for Dense Object Detection. arXiv preprint arXiv:1708.02002, 2017.

21. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 2117–2125.

22. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 8759–8768.

23. Ghiasi, G.; Lin, T.Y.; Le, Q.V. Nas-fpn: Learning scalable feature pyramid architecture for object detection. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 7036–7045.

24. Tan, M.; Pang, R.; Le, Q.V. Efficientdet: Scalable and efficient object detection. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 10781–10790.

25. Huang, W.; Li, G.; Chen, Q.; Ju, M.; Qu, J. CF2PN: A cross-scale feature fusion pyramid network based remote sensing target detection. Remote Sensing 2021, 13, 847.

26. Chen, K.; Cao, Y.; Loy, C.C.; Lin, D.; Feichtenhofer, C. Feature pyramid grids. arXiv preprint arXiv:2004.03580, 2020.

27. Yang, G.; Lei, J.; Zhu, Z.; Cheng, S.; Feng, Z.; Liang, R. AFPN: Asymptotic feature pyramid network for object detection. 2023 IEEE International Conference on Systems, Man, and Cybernetics (SMC). IEEE, 2023, pp. 2184–2189.

28. Gong, Y.; Yu, X.; Ding, Y.; Peng, X.; Zhao, J.; Han, Z. Effective fusion factor in FPN for tiny object detection. Proceedings of the IEEE/CVF winter conference on applications of computer vision, 2021, pp. 1160–1168.

29. Gao, T.; Niu, Q.; Zhang, J.; Chen, T.; Mei, S.; Jubair, A. Global to local: A scale-aware network for remote sensing object detection. IEEE Transactions on Geoscience and Remote Sensing 2023.

30. Hu, X.; Xu, W.; Gan, Y.; Su, J.; Zhang, J. Towards disturbance rejection in feature pyramid network. IEEE Transactions on Artificial Intelligence 2022, 4, 946–958.

31. Liu, H.I.; Tseng, Y.W.; Chang, K.C.; Wang, P.J.; Shuai, H.H.; Cheng, W.H. A DeNoising FPN with Transformer R-CNN for Tiny Object Detection. IEEE Transactions on Geoscience and Remote Sensing 2024.

32. Du, Z.; Hu, Z.; Zhao, G.; Jin, Y.; Ma, H. Cross-Layer Feature Pyramid Transformer for Small Object Detection in Aerial Images. arXiv preprint arXiv:2407.19696, 2024.

33. Li, H.; Zhang, R.; Pan, Y.; Ren, J.; Shen, F. Lr-fpn: Enhancing remote sensing object detection with location refined feature pyramid network. arXiv preprint arXiv:2404.01614, 2024.

34. Dosovitskiy, A. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929, 2020.

35. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. Proceedings of the IEEE/CVF international conference on computer vision, 2021, pp. 10012–10022.

36. Ma, T.; Mao, M.; Zheng, H.; Gao, P.; Wang, X.; Han, S.; Ding, E.; Zhang, B.; Doermann, D. Oriented object detection with transformer. arXiv preprint arXiv:2106.03146, 2021.

37. Huang, Z.; Zhang, C.; Jin, M.; Wu, F.; Liu, C.; Jin, X. Better Sampling, towards Better End-to-end Small Object Detection. arXiv preprint arXiv:2407.06127, 2024.

38. Yang, F.; Fan, H.; Chu, P.; Blasch, E.; Ling, H. Clustered object detection in aerial images. Proceedings of the IEEE/CVF international conference on computer vision, 2019, pp. 8311–8320.

39. Li, C.; Yang, T.; Zhu, S.; Chen, C.; Guan, S. Density map guided object detection in aerial images. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition workshops, 2020, pp. 190–191.

40. Huang, Y.; Chen, J.; Huang, D. UFPMP-Det: Toward accurate and efficient object detection on drone imagery. Proceedings of the AAAI conference on artificial intelligence, 2022, Vol. 36, pp. 1026–1033.

41. Du, B.; Huang, Y.; Chen, J.; Huang, D. Adaptive sparse convolutional networks with global context enhancement for faster object detection on drone images. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2023, pp. 13435–13444.

42. Chen, L.; Liu, C.; Li, W.; Xu, Q.; Deng, H. DTSSNet: Dynamic Training Sample Selection Network for UAV Object Detection. IEEE Transactions on Geoscience and Remote Sensing 2024.

43. Zhang, Z. Drone-YOLO: an efficient neural network method for target detection in drone images. Drones 2023, 7, 526.

44. Liu, K.; Fu, Z.; Jin, S.; Chen, Z.; Zhou, F.; Jiang, R.; Chen, Y.; Ye, J. ESOD: Efficient Small Object Detection on High-Resolution Images. arXiv preprint arXiv:2407.16424, 2024.

45. Zhang, Y.; Ye, M.; Zhu, G.; Liu, Y.; Guo, P.; Yan, J. FFCA-YOLO for small object detection in remote sensing images. IEEE Transactions on Geoscience and Remote Sensing 2024.

46. Khalili, B.; Smyth, A.W. SOD-YOLOv8—Enhancing YOLOv8 for Small Object Detection in Aerial Imagery and Traffic Scenes. Sensors 2024, 24, 6209.

47. Liu, C.; Gao, G.; Huang, Z.; Hu, Z.; Liu, Q.; Wang, Y. YOLC: You Only Look Clusters for Tiny Object Detection in Aerial Images. IEEE Transactions on Intelligent Transportation Systems 2024.

48. Xiao, J.; Guo, H.; Zhou, J.; Zhao, T.; Yu, Q.; Chen, Y. Tiny object detection with context enhancement and feature purification. Expert Systems with Applications 2023, 211, 118665.

49. Sunkara, R.; Luo, T. No more strided convolutions or pooling: A new CNN building block for low-resolution images and small objects. Joint European conference on machine learning and knowledge discovery in databases. Springer, 2022, pp. 443–459.

50. Cui, Y.; Ren, W.; Knoll, A. Omni-Kernel Network for Image Restoration. Proceedings of the AAAI Conference on Artificial Intelligence, 2024, Vol. 38, pp. 1426–1434.

51. Xu, S.; Zheng, S.; Xu, W.; Xu, R.; Wang, C.; Zhang, J.; Teng, X.; Li, A.; Guo, L. HCF-Net: Hierarchical Context Fusion Network for Infrared Small Object Detection. arXiv preprint arXiv:2403.10778, 2024.

52. Feng, C.; Zhong, Y.; Gao, Y.; Scott, M.R.; Huang, W. Tood: Task-aligned one-stage object detection. 2021 IEEE/CVF International Conference on Computer Vision (ICCV). IEEE Computer Society, 2021, pp. 3490–3499.

53. Biffi, L.J.; Mitishita, E.; Liesenberg, V.; Santos, A.A.d.; Gonçalves, D.N.; Estrabis, N.V.; Silva, J.d.A.; Osco, L.P.; Ramos, A.P.M.; Centeno, J.A.S.; others. ATSS deep learning-based approach to detect apple fruits. Remote Sensing 2020, 13, 54.

54. Lyu, C.; Zhang, W.; Huang, H.; Zhou, Y.; Wang, Y.; Liu, Y.; Zhang, S.; Chen, K. Rtmdet: An empirical study of designing real-time object detectors. arXiv preprint arXiv:2212.07784, 2022.

55. Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. Yolox: Exceeding yolo series in 2021. arXiv preprint arXiv:2107.08430, 2021.

56. Liu, J.; Jing, D.; Zhang, H.; Dong, C. SRFAD-Net: Scale-Robust Feature Aggregation and Diffusion Network for Object Detection in Remote Sensing Images. Electronics 2024, 13, 2358.

| PPA | S2-CCFF | NWD | Params (M) | GFLOPs | mAP |
|---|---|---|---|---|---|
| × | × | × | 38.61 | 56.8 | 44.8 |
| ✓ | × | × | 27.46 | 62.1 | 45.6 |
| × | ✓ | × | 39.8 | 66.9 | 46.8 |
| × | × | ✓ | 38.61 | 57.0 | 45.1 |
| ✓ | ✓ | × | 37.39 | 77.7 | 47.7 |
| ✓ | ✓ | ✓ | 37.39 | 76.0 | 47.9 |

| PPA | S2-CCFF | NWD | Params (M) | GFLOPs | mAP |
|---|---|---|---|---|---|
| × | × | × | 38.61 | 57.0 | 86.4 |
| ✓ | × | × | 35.05 | 60.2 | 87.2 |
| × | ✓ | × | 39.80 | 65.2 | 88.2 |
| × | × | ✓ | 38.61 | 57.0 | 87.3 |
| ✓ | ✓ | × | 39.2 | 76.0 | 89.3 |
| ✓ | ✓ | ✓ | 39.2 | 76.0 | 89.5 |

| Method | mAP | mAP_0.5 | mAP_0.75 | mAP_s | mAP_m | mAP_l |
|---|---|---|---|---|---|---|
| Faster-RCNN [15] | 0.205 | 0.342 | 0.219 | 0.100 | 0.295 | 0.433 |
| Cascade-RCNN [17] | 0.208 | 0.337 | 0.224 | 0.101 | 0.299 | 0.452 |
| TOOD [52] | 0.214 | 0.346 | 0.230 | 0.104 | 0.303 | 0.416 |
| ATSS [53] | 0.216 | 0.349 | 0.231 | 0.102 | 0.308 | 0.458 |
| RetinaNet [20] | 0.178 | 0.294 | 0.189 | 0.067 | 0.265 | 0.430 |
| RTMDet [54] | 0.184 | 0.312 | 0.213 | 0.077 | 0.288 | 0.445 |
| YOLOX [55] | 0.156 | 0.283 | 0.155 | 0.078 | 0.213 | 0.288 |
| RT-DETR [11] | 0.159 | 0.365 | 0.107 | 0.138 | 0.284 | 0.231 |
| SO-RTDETR | 0.176 | 0.393 | 0.123 | 0.157 | 0.330 | 0.227 |

| Method | mAP | mAP_0.5 | mAP_0.75 | mAP_s | mAP_m | mAP_l |
|---|---|---|---|---|---|---|
| Faster-RCNN [15] | 0.512 | 0.878 | 0.55 | 0.45 | 0.48 | 0.544 |
| Cascade-RCNN [17] | 0.543 | 0.881 | 0.583 | 0.35 | 0.486 | 0.576 |
| TOOD [52] | 0.482 | 0.876 | 0.498 | 0.45 | 0.446 | 0.549 |
| ATSS [53] | 0.459 | 0.813 | 0.481 | 0.114 | 0.463 | 0.487 |
| RetinaNet [20] | 0.512 | 0.815 | 0.611 | 0.412 | 0.621 | 0.562 |
| RTMDet [54] | 0.562 | 0.878 | 0.641 | 0.419 | 0.636 | 0.571 |
| YOLOX [55] | 0.522 | 0.841 | 0.615 | 0.345 | 0.505 | 0.588 |
| RT-DETR [11] | 0.499 | 0.853 | 0.570 | 0.274 | 0.507 | 0.679 |
| SO-RTDETR | 0.570 | 0.882 | 0.629 | 0.403 | 0.546 | 0.678 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).