1. Introduction

1.1. Background and Motivation

Self-organization is key to understanding the existence of, and the changes in, all systems that lead to higher levels of complexity and perfection in development and evolution. It is both a scientific and a philosophical question, and its importance becomes clearer as our understanding deepens. Self-organization often leads to more efficient use of resources and optimized performance, which is one measure of the degree of perfection. By degree of perfection we mean a more organized, robust, resilient, competitive, and alive system. Because competition for resources is always the selective evolutionary pressure in systems of different natures, the more efficient systems survive at all levels of Cosmic Evolution.

Our goal is to contribute to explaining the mechanisms of self-organization that drive Cosmic Evolution from the Big Bang to the present and into the future, and to measuring it [1,2,3]. Self-organization has a universality independent of the substrate of the system, whether physical, chemical, biological, or social, and it explains all of the system's structures [4,5,6,7]. Establishing a universal, quantitative, absolute method to measure the organization of any system will help us understand the mechanisms of functioning and organization in general, and it will enable us to design specific systems with the highest level of perfection [8,9,10,11,12].

Previous attempts to quantify organization have used static measures, such as information [13,14,15,16,17,18,19] and entropy [20,21,22,23,24,25], but our approach offers a new, dynamic perspective. We use an extension of Hamilton’s action principle and derive from it a dynamic action principle for the system studied, in which the average unit action for one trajectory decreases continuously and the total action of the whole system increases continuously with self-organization.

Despite its significance, self-organization remains only partially understood because of the non-linear dynamics of complex systems. Existing approaches often rely on static metrics such as entropy or information. These metrics are valuable, but they are limited in their universality and in their ability to predict the most organized state of a system. In many cases, they do not describe the dynamics of the processes that lead to increasing order and complexity. For example, a less organized system may require more bits of information to describe than the same system in a more organized, i.e., more efficient, state. These traditional measures often fall short of providing a comprehensive, quantitative framework that applies across different types of complex systems and describes the mechanisms that lead to higher levels of organization. This gap highlights the need for a new measure that can universally and quantitatively assess the level of organization in complex systems and the transitions between levels.

The motivation for this study is to bridge this gap by introducing a novel measure of organization based on dynamical variational principles. Specifically, we use Hamilton’s principle of stationary action, which is the basis of all laws of physics. When the second variation of the action is positive, the stationary point is a minimum, and the principle becomes a true principle of least action. The principle of least action posits that the path taken by a physical system between two states is the one for which the action is minimized. Extending this principle to complex systems, we propose Average Action Efficiency (AAE) as a new, dynamic measure of organization. It quantifies the level of organization and serves as a predictive tool for determining the most organized state of a system. It also correlates with all other measures of complex systems, which justifies and validates its use.
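As a concrete illustration of "least action", here is a minimal sketch that discretizes the action of a free particle (no potential) with fixed endpoints. It compares the straight path with paths bent by a sine-shaped deviation. The names `discrete_action`, `path`, and `bump` are hypothetical. The unit mass and unit time interval are arbitrary choices, and the example is not part of the paper's model.

```python
import math

def discrete_action(xs, dt, mass=1.0):
    """Discretized action S = sum of (1/2) m v^2 dt for a free particle (V = 0)."""
    s = 0.0
    for a, b in zip(xs, xs[1:]):
        v = (b - a) / dt              # velocity on this time step
        s += 0.5 * mass * v * v * dt  # kinetic term only, since V = 0
    return s

def path(n_steps, x0, x1, bump=0.0):
    """Path from x0 to x1 with fixed endpoints; `bump` adds a sine-shaped deviation."""
    return [x0 + (x1 - x0) * k / n_steps + bump * math.sin(math.pi * k / n_steps)
            for k in range(n_steps + 1)]

T, N = 1.0, 100
dt = T / N
straight = discrete_action(path(N, 0.0, 1.0), dt)
for eps in (0.05, 0.1, 0.2):
    bent = discrete_action(path(N, 0.0, 1.0, bump=eps), dt)
    print(f"bump={eps}: S_straight={straight:.4f}, S_bent={bent:.4f}")
```

Every deviation keeps the endpoints fixed and still raises the action above that of the straight, uniform-velocity path. This is the sense in which the free-particle trajectory is a true minimum.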

Understanding the mechanisms of self-organization has profound implications across scientific disciplines. Insight into these natural optimization processes can inspire more efficient algorithms and strategies in engineering and technology. It can enhance our understanding of biological and ecological processes, and it can help us design more efficient economic and social systems. Studying self-organization also has deep philosophical implications. It challenges traditional notions of causality and control by emphasizing the role of local interactions and feedback loops in shaping global patterns. In our model, each characteristic of a complex system is simultaneously a cause and an effect of all the others. By developing a universal, quantitative measure of organization, we aim to advance our understanding of self-organization and to provide practical tools for optimizing complex systems across different fields.

1.2. Novelty and Scientific Contributions

In this paper we explore the following topics; their highlights are summarized below.

1.2.1. Dynamical Variational Principles

Extension of Hamilton’s Least Action Principle to Non-Equilibrium Systems. This work extends Hamilton’s principle by applying it to stochastic and non-equilibrium systems. It offers a framework that connects classical mechanics to entropy- and information-based variational principles for analyzing self-organization in complex systems. We define novel dual dynamical principles, such as increasing total action, information, and entropy while decreasing unit action, information, and entropy, which reflect the system’s evolution and self-organization. These principles manifest in power-law relationships and reveal a unit-total (or local-global) duality across scales. Agent-based simulations confirm these dual variational principles. The principles support a multiscale approach to modeling hierarchical and networked systems by linking micro-level interactions with macro-level organizational structures.

1.2.2. Positive Feedback Model of Self-Organization

Positive Feedback Model with Power-Law and Exponential Growth Predictions. We introduce and test a model of positive feedback loops within self-organizing systems, predicting power-law relationships and exponential growth. This model goes beyond empirical observations by mathematically deriving the power-law relations, providing a predictive framework for system dynamics in complex systems.

Prediction of Power-Law and Exponential Growth Patterns in Complex System Characteristics. By showing that the feedback mechanisms between the characteristics can predict power-law relationships among system variables, the paper goes beyond qualitative descriptions. Traditional models often observe power-law scaling relationships without predicting them. Here, the work mathematically derives these relationships, offering a framework that could extend to empirical verification across disciplines.

1.2.3. Average Action Efficiency (AAE)

Introduction of Average Action Efficiency (AAE) as a Dynamic Measure. AAE provides a real-time, universal metric for quantifying organization, based on the motion of the agents, surpassing traditional static measures. Application of this measure can be explored across disciplines, including biology and engineering. It is validated through simulations. AAE enables real-time system diagnosis and control, with applications in robotics, environmental management, and adaptive systems.

1.2.4. Agent-Based Modeling (ABM)

Dynamic ABM. Our ABM includes dynamic effects such as pheromone feedback, allowing for realistic simulations of complex behaviors that validate the theoretical framework. This model demonstrates the applicability of the variational principles and dualism in stochastic and dissipative settings, enhancing the framework’s utility for future research and experimental studies.

1.2.5. Intervention and Control in Complex Systems

Real-Time Metric for Adaptive Control. With AAE as a real-time measurable metric, we establish a basis for guiding systems toward optimized states. This diagnostic potential is relevant in fields like engineering and sustainability.

1.2.6. Average Action-Efficiency as a Predictor of System Robustness

Average Action Efficiency (AAE) is proposed not only as a measure of organization but also as a predictor of system robustness. We suggest that systems with higher AAE are more robust and resilient to perturbations, which provides a measurable link between action efficiency and system stability. This is relevant to fields such as ecology, network theory, and engineering, where robustness is key to system survival and functionality in changing environments.

Theoretical Framework Linking AAE to System Efficiency and Stability. The paper’s theory posits that AAE reflects the level of organization within a system, where higher AAE corresponds to more efficient, streamlined configurations that minimize wasted energy or time. This theoretical underpinning aligns well with the concept of robustness, as more organized and efficient systems are generally better equipped to withstand disturbances due to their optimized internal structure.

Positive Feedback Loops Reinforcing Stability. The positive feedback model presented in the paper suggests that as AAE increases, there is an exponential reinforcement of organized structures within the system. This self-reinforcing organization implies that systems with high AAE are not only efficient but also maintain structural coherence, which can enhance their ability to absorb and recover from perturbations. This resilience is a characteristic of robust systems in fields like ecology and network theory.

Simulation Results Demonstrating Stability at High AAE. The agent-based simulations provide empirical support by showing that systems reach stable, organized states with increased AAE, despite initial stochastic movements and random perturbations. For example, in the ant simulation, paths converge to efficient routes over time, demonstrating the system’s ability to stabilize around high-efficiency configurations. This illustrates that systems with higher AAE can naturally resist or recover from randomness, showing robustness.

1.2.7. Philosophical Contribution

Fundamental Understanding of Self-Organization and Causality. This work deepens the theoretical understanding of self-organization, suggesting that each characteristic within a system functions as both cause and effect, providing a foundation for new research on causality in complex systems.

Contribution to the Philosophy of Self-Organization and Evolution. Beyond technical applications, this work deepens the philosophical understanding of self-organization by framing it as a universal process governed by variational principles that transcend specific system boundaries. The dynamic minimization of unit action combined with total action growth introduces a novel concept of evolution that is proposed to apply to all open, complex systems. This conceptualization could inspire further philosophical inquiry into the nature of causality, emergence, and evolution in complex systems.

1.2.8. Novel Conceptualization of Evolution as a Path to Increased Action Efficiency

This paper introduces a unique evolutionary perspective in which self-organization drives systems toward states of increased action efficiency. This approach departs from more static views of evolution in complex systems. It frames evolution not merely as survival optimization but as an open-ended journey toward dynamically minimized unit actions within the context of system growth. We propose that evolution in complex systems is driven at least in part by increasing action efficiency, which offers a quantitative basis for directional evolution as systems optimize their organization over time. This evolution of internal structure is, in general, coupled with the environment, a question that we will explore in future research.

1.3. Overview of the Theoretical Framework

We use the extension of Hamilton’s Principle of Stationary Action to a Principle of Dynamic Action, according to which action in self-organizing systems is changing in two ways: decreasing the average action for one event and increasing the total amount of action in the system during the process of self-organization, growth, evolution, and development. This view can lead to a deeper understanding of the fundamental principles of nature’s self-organization, evolution, and development in the universe, ourselves, and our society.

1.4. Hamilton’s Principle and Action Efficiency

Hamilton’s principle of stationary action is the most fundamental principle in nature, from which all other laws of physics are derived [26,27]. Everything derived from it is guaranteed to be self-consistent [28]. Beyond classical and quantum mechanics, relativity, and electrodynamics, it has applications in statistical mechanics, thermodynamics, biology, economics, optimization, control theory, engineering, and information theory [29,30,31]. We propose its application, extension, and connection to other characteristics of complex systems as part of complex systems theory.

Enders notes: "One extremal principle is undisputed: the least-action principle (for conservative systems), which can be used to derive most physical theories, ... Recently, the stochastic least-action principle was also established for dissipative systems. Information theory and the stochastic least-action principle are important corner stones of modern stochastic thermodynamics" [32]. He also writes: "Our analytical derivations show that MaxEPP is a consequence of the least-action principle applied to dissipative systems (stochastic least-action principle)" [32].

Similar dynamic variational principles have also been proposed for the dynamics of systems away from thermodynamic equilibrium. Martyushev has published reviews of the Maximum Entropy Production Principle (MEPP), stating: "A nonequilibrium system develops so as to maximize its entropy production under present constraints" [33]. Martyushev further notes: "Sawada emphasized that the maximal entropy production state is most stable to perturbations among all possible (metastable) states" [34]. We will connect this observation with dynamical action principles in the second part of this work.

The derivation of the MEPP from the LAP was first done by Dewar in 2003 [35,36]. He built on Jaynes’s theory from 1957 [37,38] and extended it to non-equilibrium systems.

The papers by Umberto Lucia, "Entropy Generation: Minimum Inside and Maximum Outside" (2014) [39] and "The Second Law Today: Using Maximum-Minimum Entropy Generation" [40], examine the thermodynamic behavior of open systems in terms of entropy generation and the principle of least action. Lucia explores the concept that within open systems, entropy generation tends to a minimum inside the system and reaches a maximum outside it, which relates to our observations of dualities of the same characteristic.

François Gay-Balmaz and Hiroshi Yoshimura derive a form of the dissipative Least Action Principle (LAP) for systems out of equilibrium. Specifically, they extend the classical variational approaches used in reversible mechanics to dissipative systems. Their work combines Lagrangian and Hamiltonian mechanics with thermodynamic forces and fluxes, and it introduces modifications to the standard variational calculus to account for irreversible processes [41,42,43].

Arto Annila derives the Maximum Entropy Production Principle (MEPP) from the Least Action Principle (LAP). He demonstrates how the principle of least action underlies natural selection, showing that systems evolve to consume free energy in the least amount of time and thereby maximize entropy production. He links LAP to the second law of thermodynamics and, consequently, to MEPP [44]. He further argues that evolutionary processes in both living and non-living systems can be explained by the principle of least action, which inherently leads to maximum entropy production [45]. Both papers provide a detailed account of how MEPP can be understood as an outcome of the Least Action Principle, grounding it in thermodynamic and physical principles.

The potential of the stochastic least action principle has been shown in [46], where a connection to entropy has also been made.

The concept of least action has been generalized by applying it to both heat absorption and heat release processes [47]. This minimization of action corresponds to the maximum efficiency of the system, reinforcing the connection between the least action principle and thermodynamic efficiency. By applying the principle of least action to thermodynamic processes, the authors link this principle to the optimization of efficiency.

The increase in entropy production has been related to the system’s drive towards a more ordered, synchronized state. This process is consistent with MEPP, which holds that systems far from equilibrium evolve in ways that maximize entropy production. This work thus provides a basis for the increase in entropy using LAP [48].

The least action principle has been used to derive maximum entropy change for nonequilibrium systems [49].

Variational methods have been emphasized in the non-equilibrium thermodynamics of fluid systems, especially in relation to MEPP and to thermodynamic variational principles in nonlinear systems [50].

MEPP and the Least Action Principle (LAP) are connected through the Riemannian geometric framework, which provides a generalized least action bound applicable to probabilistic systems, including both equilibrium and non-equilibrium systems [51].

Herglotz’s principle introduces dissipation directly into the variational framework by modifying the classical action functional with a dissipation term. This is significant because it accounts for energy loss and for the irreversible nature of processes in non-equilibrium systems. Because it incorporates dissipative processes into a variational framework, Herglotz’s principle is a powerful tool for non-equilibrium thermodynamics. It enables the modeling of systems far from equilibrium, where energy dissipation and entropy production play key roles. By extending classical mechanics to include irreversibility, it offers a way to describe the evolution of systems in non-equilibrium thermodynamics. It may also link this evolution to other key concepts, such as the Onsager relations and the MEPP [52–54].
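The following worked example makes the idea concrete. It is the standard textbook form of Herglotz’s principle, not a derivation taken from [52–54]. The symbols q, z, γ, and V are our own notation. The action z is defined through the differential equation below, and requiring z at the final time to be stationary yields a generalized Euler–Lagrange equation. A Lagrangian that depends linearly on z then reproduces linearly damped motion, a dissipative dynamics that the ordinary Hamilton action does not produce for this system.

```latex
\dot z = L(q, \dot q, z), \qquad
\frac{\partial L}{\partial q} - \frac{d}{dt}\frac{\partial L}{\partial \dot q}
  + \frac{\partial L}{\partial z}\,\frac{\partial L}{\partial \dot q} = 0

L = \tfrac{1}{2} m \dot q^{2} - V(q) - \gamma z
\;\Longrightarrow\;
-V'(q) - m \ddot q - \gamma m \dot q = 0
\;\Longrightarrow\;
m \ddot q = -V'(q) - \gamma m \dot q
```

For γ = 0, the extra term vanishes and the ordinary Euler–Lagrange equation of Hamilton’s principle is recovered.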

In Beretta’s fourth law of thermodynamics, the steepest entropy ascent could be seen as analogous to a least action path in the context of non-equilibrium thermodynamics, where the system follows the most "efficient" path toward equilibrium by maximizing entropy production. Both principles are forms of optimization, where one minimizes physical action and the other maximizes entropy, providing deep structural insights into the behavior of systems across physics [55].

In most cases in classical mechanics, Hamilton’s stationary action is a minimum. In some cases it is a saddle point, and it is never a maximum. We propose the minimization of average unit action as a driving principle and as the arrow of evolutionary time. Saddle points are temporary minima that transition to lower-action states as the system evolves. Thus, globally and on long time scales, average action is minimized and continuously decreasing when there are no external limitations. This turns it into a dynamic action principle for open-ended processes of self-organization, evolution, and development.

Our thesis is that we can complement other measures of organization and self-organization by applying a new, absolute, and universal measure based on Hamilton’s principle and its extension to dissipative and stochastic systems. This measure can be related to previously used measures, such as entropy and information, as in our model for the mechanism of self-organization, progressive development, and evolution. We demonstrate this with power-law relationships in the results.

This paper presents a derivation of a quantitative measure of action efficiency and a model in which all characteristics of a complex system reinforce each other, leading to exponential growth and power law relations between each pair of characteristics. The principle of least action is proposed as the driver of self-organization, as agents of the system follow natural laws in their motion, resulting in the most action-efficient paths. This explains why complex systems form structures and order, and continue self-organizing in their evolution and development.
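The step from positive feedback to power laws can be sketched numerically. This is a minimal sketch under a simplifying assumption: each characteristic is taken to end up growing exponentially at its own constant rate, as the solution of dx/dt = a·x. The coupled feedback equations of the full model are not reproduced here. The rates `a` and `b` and the initial values are hypothetical. If x = x0·e^(at) and y = y0·e^(bt), eliminating t gives y = y0·(x/x0)^(b/a), a power law with exponent b/a.

```python
import math

def grow(x0, rate, t):
    """Exponential solution of dx/dt = rate * x (a single positive feedback loop)."""
    return x0 * math.exp(rate * t)

a, b = 0.3, 0.75                      # hypothetical growth rates of two characteristics
ts = [0.5 * k for k in range(1, 21)]  # sample times
xs = [grow(2.0, a, t) for t in ts]
ys = [grow(5.0, b, t) for t in ts]

# Least-squares slope of log y against log x, i.e. the fitted power-law exponent
lx = [math.log(x) for x in xs]
ly = [math.log(y) for y in ys]
mx, my = sum(lx) / len(lx), sum(ly) / len(ly)
slope = (sum((u - mx) * (v - my) for u, v in zip(lx, ly))
         / sum((u - mx) ** 2 for u in lx))
print(f"fitted exponent {slope:.4f}, predicted b/a = {b / a:.4f}")
```

Fitting a line in log-log coordinates is the usual way to read such an exponent off simulation or empirical data.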

Our measure of action efficiency applies to dynamical flow networks away from thermodynamic equilibrium that transport matter and energy along their flow channels. The significance of our results is that they enable the natural and social sciences to quantify organization and structure in an absolute, numerical, and unambiguous way. The model provides a mechanism through which the least action principle, and the average action efficiency derived from it as a measure of the level of organization, interact in a positive feedback loop with other characteristics of complex systems. This mechanism explains the existence of the events observed in Cosmic Evolution. The tendency to minimize the average unit action for one crossing between nodes in a complex flow network follows from the principle of least action. We propose it as the arrow of time and as the main driving principle behind, and explanation of, the progressive development and evolution that lead to the enormous variety of systems and structures we observe in nature and society.

1.5. Mechanism of Self-Organization

The research in this study demonstrates the driving principle and mechanism of self-organization and evolution in general open, complex, non-equilibrium thermodynamic systems, employing agent-based modeling. We propose that the state with the least average unit action is the attractor for all processes of self-organization and development in the universe across all systems. We measure this state through Average Action Efficiency (AAE).

We present a model for quantitatively calculating the amount of organization in a general complex system and its correlation with all other characteristics through power-law relationships. We also show that the cause of progressive development and evolution is the positive feedback loop between all characteristics of the system. This loop leads to exponential growth of all of them until an external limit is reached. The internal organization of complex systems in nature always reflects their external environment, from which the flows of energy and matter come. This model also predicts power-law relationships between all characteristics. Numerous measured complexity-size scaling relationships confirm the predictions of this model [32,33,34].

Our work addresses a gap in complex systems science by providing an absolute and quantitative measure of organization, namely AAE, based on the movement of agents and their dynamics. This measure is functional and dynamic, not relative and static as many other metrics are. We show that the amount of organization is inversely proportional to the average physical amount of action in a system. We derive the expression for organization, apply it to a simple example, and validate it with results from agent-based modeling (ABM) simulations. These simulations allow us to verify experimental data and to vary conditions to address specific questions [35,36]. We discuss extensions of the model to a large number of agents and state its limitations and applicability in our list of assumptions.
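The inverse relation between organization and average action can be sketched in a few lines. This is a minimal sketch, not the paper's derived expression, and it makes two assumptions. First, each edge crossing is made at constant speed with kinetic energy only, so its action is E·t = ½·m·(l/t)²·t = m·l²/(2t). Second, AAE is taken as the reciprocal of the mean unit action, up to a normalization constant that is not specified here. The function names and the (length, time) data are hypothetical.

```python
from statistics import mean

def unit_action(mass, length, time):
    """Action of one edge crossing at constant speed, kinetic energy only:
    (1/2) m (l/t)^2 * t = m l^2 / (2 t)."""
    return mass * length ** 2 / (2.0 * time)

def average_action_efficiency(crossings, mass=1.0):
    """Hypothetical AAE: reciprocal of the mean unit action over all crossings."""
    return 1.0 / mean(unit_action(mass, l, t) for l, t in crossings)

# Hypothetical (path length, path time) pairs; agents move at unit speed, so t == l
early = [(3.0, 3.0), (2.6, 2.6), (3.4, 3.4)]  # long, meandering paths
late = [(1.2, 1.2), (1.1, 1.1), (1.3, 1.3)]   # shorter paths after self-organization
print(average_action_efficiency(early), average_action_efficiency(late))
```

At constant speed, the unit action reduces to m·l/2, so shorter paths give a lower average action and a higher AAE. The example only illustrates this direction; it does not reproduce the paper's numbers.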

Measuring the level of organization in a system is crucial because it provides a long-sought criterion for evaluating and studying the mechanisms of self-organization in natural and technological systems. These are all dynamic processes, which calls for a new, dynamic measure. By measuring the amount of organization, we can analyze and design complex systems to improve our lives in ecology, engineering, economics, and other disciplines. The level of organization corresponds to the system’s robustness, which is vital for surviving accidents or other events that endanger a system’s existence [37]. Philosophically and mathematically, each characteristic of the system is a cause and an effect of all the others, similar to autocatalytic cycles, which are well studied in cybernetics [38].

1.6. Negative Feedback

Negative feedback is evident in the fact that large deviations from the power-law proportionality between the characteristics are neither observed nor predicted. This proportionality between all characteristics at any stage of self-organization is the balanced state of functioning, usually known as the homeostatic, or dynamical equilibrium, state of the system. Complex systems function as wholes only when all characteristics are close to this homeostatic state. If an external influence causes a large deviation in even one characteristic from its homeostatic value, the functioning of the system is compromised [38].

1.7. Unit-Total Dualism

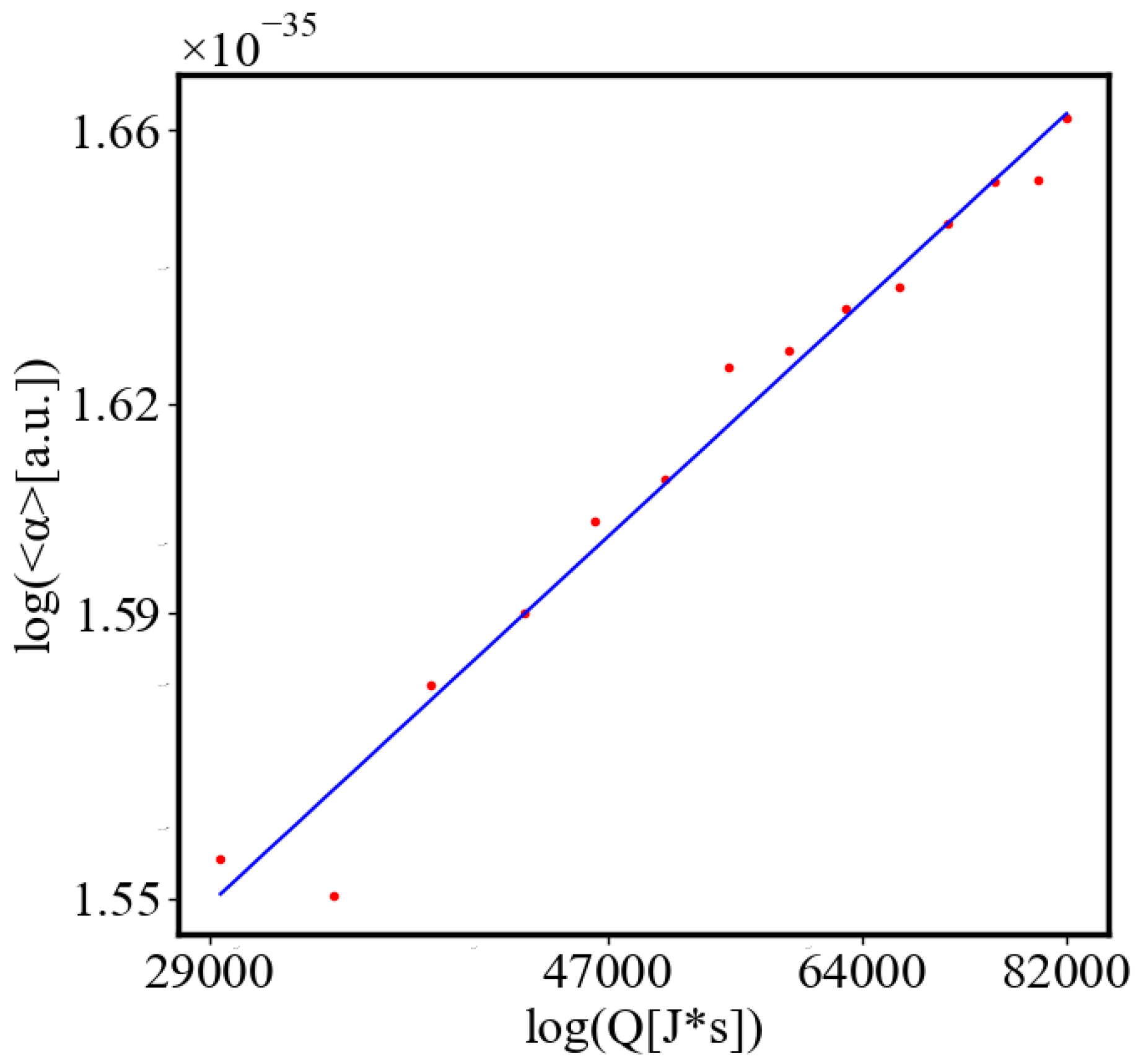

We find a unit-total dualism: unit quantities of the characteristics are minimized while total quantities are maximized as systems grow. For example, the average unit action for one event, which is one edge crossing in a network, is derived from the average path length and path time. It is minimized, as quantified by the average action efficiency. At the same time, the total amount of action Q in the whole system increases as the system grows, as our simulation results show. This expresses the principles of decreasing average unit action and increasing total action. Similarly, the unit entropy per trajectory decreases during self-organization, while the total entropy of the system increases with its growth, expansion, and increasing number of agents. These can be termed the principles of decreasing unit entropy and increasing total entropy. Likewise, the information needed to describe one event decreases as efficiency increases and paths shorten, while the total information in the system increases as it grows. Unit and total quantities are also related by a power law. This means that one can be correlated with the other, and for one of them to change, the other must also change proportionally.
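The unit-total dualism for action can be illustrated with a toy bookkeeping model. This sketch is not the paper's simulation, and it rests on three assumptions. Agents shuttle at constant speed along a single edge for a fixed observation window. Each crossing has action m·l²/(2t). The growth stages, in which agent counts rise and paths shorten, are hypothetical.

```python
def unit_and_total_action(n_agents, path_length, window=100.0, mass=1.0, speed=1.0):
    """Toy model: agents shuttle at constant speed over one edge for a fixed time window.
    Returns (action per crossing, total action of all crossings in the window)."""
    t = path_length / speed                         # time for one crossing
    unit = mass * path_length ** 2 / (2.0 * t)      # action per crossing
    crossings = n_agents * window / t               # number of crossings in the window
    return unit, unit * crossings

# Hypothetical growth stages: more agents and shorter paths as the system organizes
stages = [(10, 3.0), (20, 2.0), (40, 1.5), (80, 1.2)]
for n, l in stages:
    u, q = unit_and_total_action(n, l)
    print(f"n={n:3d} l={l:.1f} unit action={u:.3f} total action={q:.1f}")
```

In this toy, the total action works out to n·window·m·v²/2. It therefore grows with the number of agents even as each crossing becomes cheaper. This captures, in the simplest possible setting, the direction of the dualism described above.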

1.8. Unit Total Dualism Examples

Analogous qualities are evident in data for real systems and in some cases appear so often that they have special names. For example, the Jevons paradox (Jevons effect) was published in 1866 by the English economist William S. Jevons [39]. In one example, as the fuel efficiency of cars increased, the total miles traveled increased so much that total fuel expenditure also rose. This is also called a "rebound effect" of increased energy efficiency [40]. Naming this effect a "paradox" shows that it is unexpected, not well studied, and sometimes considered undesirable. In our model, it is derived mathematically as a result of the positive feedback loops between the characteristics of complex systems, which are the mechanism of their self-organization, and it is supported by the simulation results. It is not only unavoidable but also necessary for the functioning, self-organization, evolution, and development of those systems.
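The arithmetic behind the rebound effect can be shown with a standard economics illustration, not the derivation in our model. The demand for distance is assumed to have a constant price elasticity ε with respect to the cost per mile, fuel_price/efficiency. Fuel use is then miles/efficiency ∝ efficiency^(ε−1). Higher efficiency therefore reduces fuel use when ε < 1, leaves it unchanged when ε = 1, and increases it ("backfire") when ε > 1. The function name and all parameter values are hypothetical.

```python
def fuel_use(efficiency, elasticity, fuel_price=1.0, scale=1000.0):
    """Fuel consumed under a hypothetical constant-elasticity demand for distance.
    efficiency: miles per unit fuel; elasticity: price elasticity of miles driven."""
    cost_per_mile = fuel_price / efficiency
    miles = scale * cost_per_mile ** (-elasticity)  # cheaper miles -> more miles driven
    return miles / efficiency

for eps in (0.5, 1.0, 1.5):
    before, after = fuel_use(20.0, eps), fuel_use(30.0, eps)
    print(f"elasticity={eps}: fuel {before:.1f} -> {after:.1f}")
```

Only the elastic case (ε > 1) reproduces the original Jevons observation of total consumption rising with efficiency. Smaller elasticities give a partial rebound.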

In economics, it is evident that increased efficiency lowers costs, which increases demand; this is known as the "law of demand" [41]. This is another example of a size-complexity rule. As efficiency increases (in our work, efficiency is a measure of complexity), demand increases, which means that the size of the system also increases. In the 1980s, the Jevons paradox was expanded into the Khazzoom–Brookes postulate, formulated by Harry Saunders in 1992 [42]. Saunders argued that the postulate is supported by "growth theory", the prevailing economic theory of long-run economic growth and technological progress. Similar relations have been observed in other areas, such as the Downs–Thomson paradox [43], in which increasing road efficiency increases the number of cars driving on the road. These are just a few examples showing that this unit-total dualism has long been observed in many complex systems and has often been regarded as paradoxical.

1.9. Action Principles in this Simulation, Potential Well

In each run of this specific simulation, the average unit action has the same stationary point, which is a true minimum: the shortest path between the fixed nodes is a straight line. This theoretical minimum is the same across simulations. The closest analogy is a particle in free fall, which minimizes action by falling in a straight line, a geodesic. Unlike the gravitational case, the ants in the simulation have a wiggle angle, and at each step they deposit pheromone that evaporates and diffuses. The effective attractive potential is therefore not uniform, and the potential landscape changes dynamically. The shape of the walls of the potential well fluctuates slightly around its average at each step. It also changes when the number of ants is varied between runs.
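This minimal sketch shows how deposition, evaporation, and diffusion change the pheromone landscape at every step. The simulation's actual grid, neighbourhood, and rates are not given in this section. The sketch therefore assumes a small periodic square grid, 4-neighbour diffusion that conserves pheromone, and multiplicative evaporation. `step_pheromone` and its parameters are hypothetical names.

```python
def step_pheromone(field, deposits, diffusion=0.5, evaporation=0.1):
    """One update of a pheromone grid with periodic boundaries.

    field: list of lists of floats; deposits: iterable of (row, col, amount).
    A fraction `diffusion` of each cell is shared equally with its 4 neighbours,
    then every cell loses a fraction `evaporation` of its pheromone.
    """
    rows, cols = len(field), len(field[0])
    f = [row[:] for row in field]
    for r, c, amount in deposits:                 # agents deposit at their cells
        f[r][c] += amount
    new = [[(1.0 - diffusion) * f[r][c] for c in range(cols)] for r in range(rows)]
    for r in range(rows):
        for c in range(cols):
            share = diffusion * f[r][c] / 4.0     # amount passed to each neighbour
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                new[(r + dr) % rows][(c + dc) % cols] += share
    return [[(1.0 - evaporation) * v for v in row] for row in new]

grid = [[0.0] * 5 for _ in range(5)]
grid = step_pheromone(grid, [(2, 2, 1.0)])
print(sum(map(sum, grid)))  # total pheromone left after one step
print(grid[2][2], grid[1][2])
```

Diffusion spreads the deposit and evaporation removes a fixed fraction each step. As a result, the attractive "well" that guides the agents is continually reshaped by where they have recently walked.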

The potential well is steeper higher up its walls, where the system cannot be trapped in local minima of the fluctuations. This is seen in the simulation: initially, the agents form longer paths that disintegrate into shorter ones. In this region, away from the minimum, the action is always minimized, apart from stochastic fluctuations. Near the bottom of the well, the walls are less steep, and the agents cannot easily overcome local minima of the fluctuations. The system then gets temporarily trapped in one of those local minima, and the average unit action is at a dynamical saddle point.

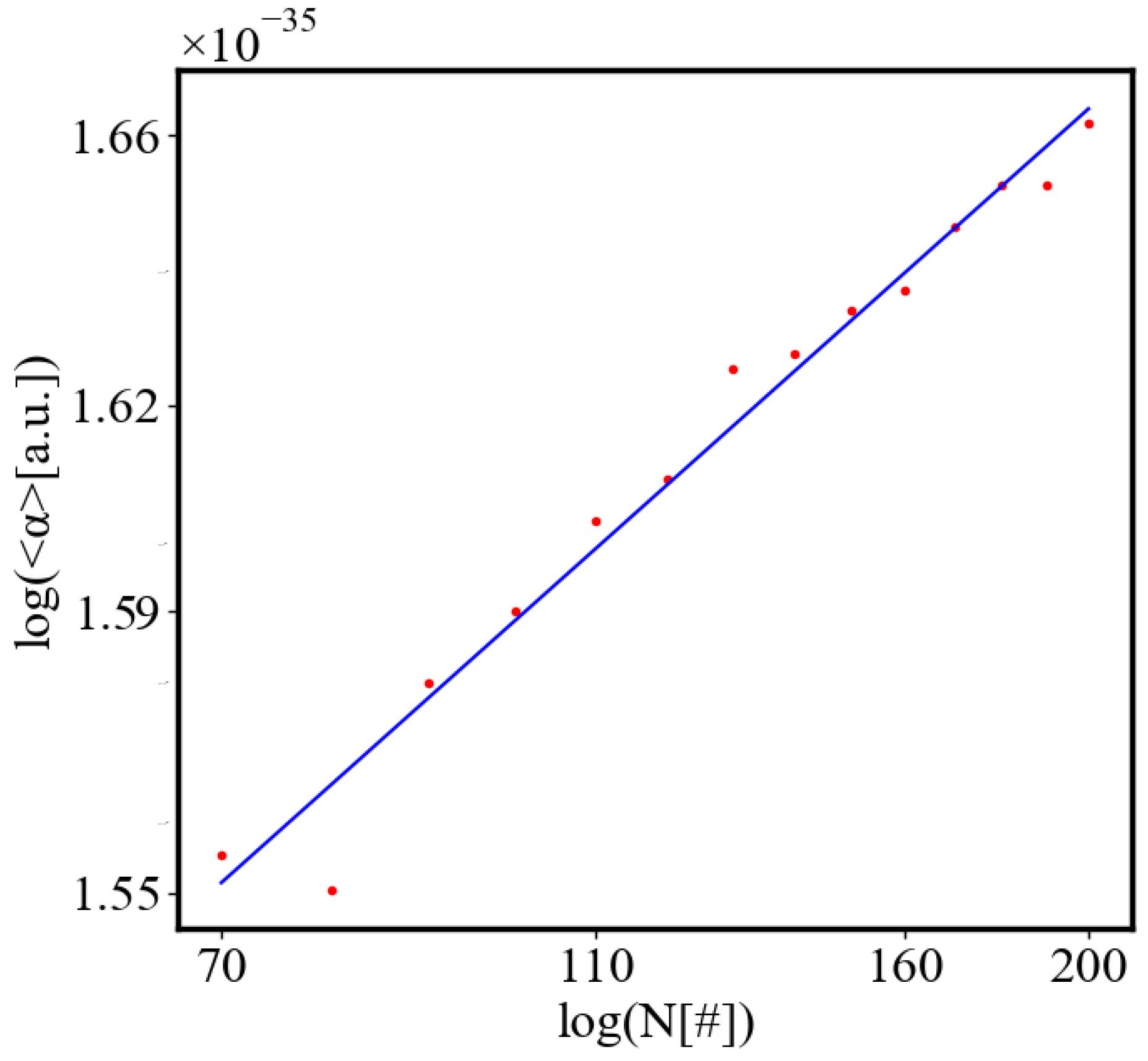

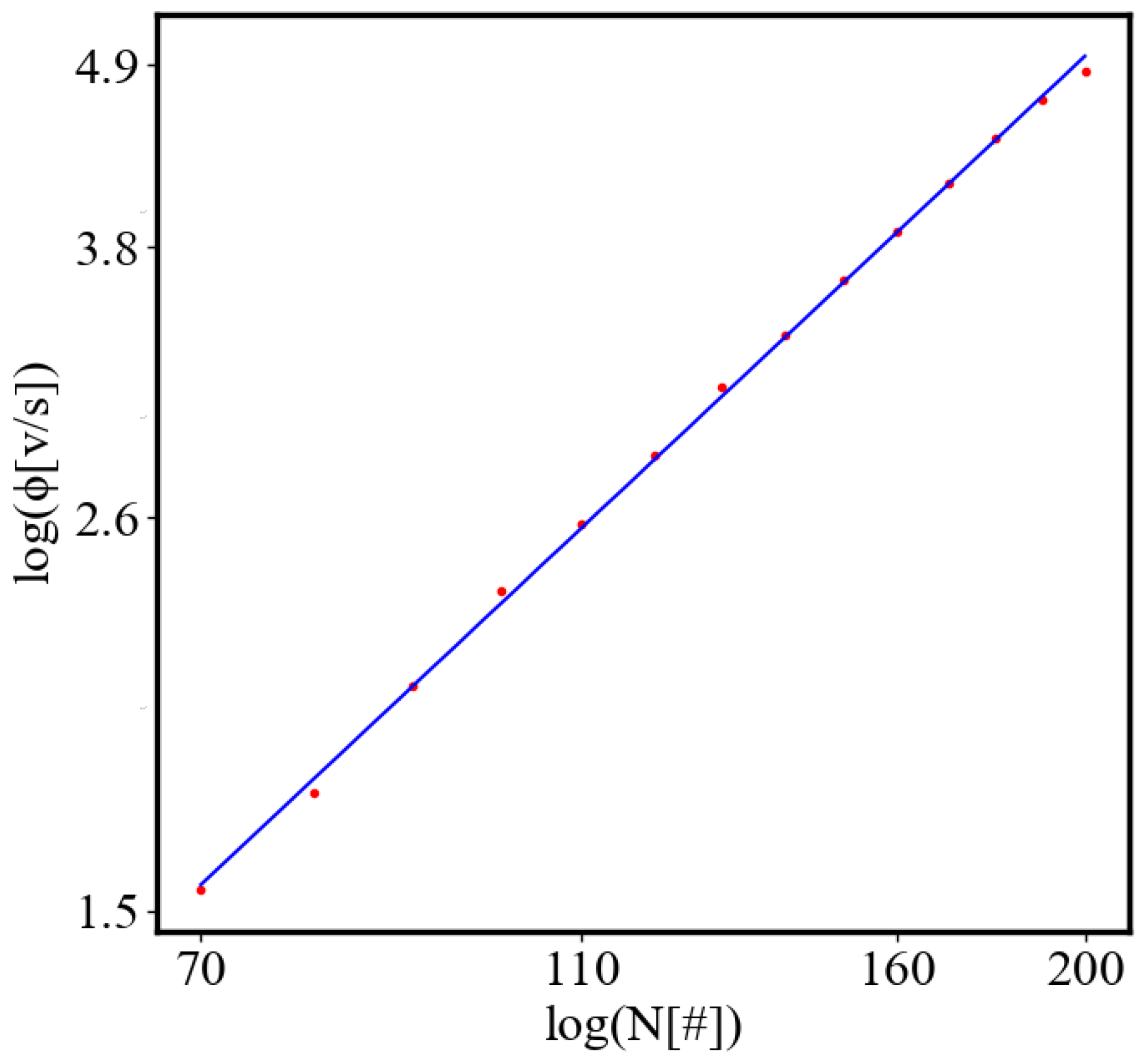

The simulation shows that with fewer ants, the system is more likely to get trapped in a local minimum. This results in a path with greater curvature and a higher final average action (lower average action efficiency) than the theoretical minimum. With more ants, the system can explore more neighboring paths, escape higher local minima, and find lower average action states. This is evident in the improved average action efficiency when there are more ants (see Fig. 8). As the number of ants (agents, system size) increases, they asymptotically find lower local minima, i.e., lower average action, improving average action efficiency, though never reaching the theoretical minimum.
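The statistical part of this argument can be illustrated with a toy model that is far simpler than the ant simulation. The model ignores pheromone and treats each ant as an independent random path between two fixed nodes. The interior points of each path are displaced sideways by uniform noise, and the endpoints stay fixed. The function name, distance, segment count, noise spread, and seed are all hypothetical choices. The sketch only shows that taking the best of more explorers gives shorter paths that approach, but never reach, the straight-line minimum.

```python
import math
import random

def random_path_length(distance, n_segments, spread, rng):
    """Length of a path between two fixed nodes whose interior points are displaced
    sideways by uniform noise in [-spread, spread]; endpoints stay fixed."""
    dx = distance / n_segments
    ys = [0.0] + [rng.uniform(-spread, spread) for _ in range(n_segments - 1)] + [0.0]
    return sum(math.hypot(dx, b - a) for a, b in zip(ys, ys[1:]))

rng = random.Random(42)  # fixed seed for reproducibility
lengths = [random_path_length(1.0, 20, 0.05, rng) for _ in range(1000)]
for n_ants in (1, 10, 100, 1000):
    best = min(lengths[:n_ants])  # shortest path found among the first n_ants tries
    print(f"{n_ants:5d} ants: shortest path {best:.4f} (straight line = 1.0)")
```

Because each larger group contains the smaller one, the best length can only decrease as ants are added. Any sideways wiggle keeps it strictly above the geodesic length of 1.0.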

In future simulations, if the distance between nodes is allowed to shrink and external obstacles are reduced, the shape of the entire potential well changes dynamically. Its minimum becomes lower, the steepness of its walls increases and the system more easily escapes local minima. However, it still does not reach the theoretical minimum, due to its fluctuations near the minimum of the well. The average action decreases, and average action efficiency increases with the lowering of this minimum, demonstrating continuous open-ended self-organization and development. This illustrates the dynamical action principle.

1.10. Research Questions and Hypotheses

This study aims to answer the following research questions:

How can a dynamical variational action principle explain the continuous self-organization, evolution, and development of complex systems?

Can Average Action Efficiency (AAE) be a measure for the level of organization of complex systems?

Can the proposed positive feedback model accurately predict the self-organization processes in systems?

What are the relationships between various system characteristics, such as AAE, total action, order parameter, entropy, flow rate, and others?

Our hypotheses are:

A dynamical variational action principle can explain the continuous self-organization, evolution and development of complex systems.

AAE is a valid and reliable measure of organization that can be applied to complex systems.

The model can accurately predict the most organized state based on AAE.

The model can predict the power-law relationships between system characteristics that can be quantified.

1.11. Summary of the Specific Objectives of the Paper

1. Define and Apply the Dynamical Action Principle: Define and apply the dynamical action principle, which extends the classical stationary action principle to dynamic, self-organizing systems in open-ended evolution, and show that unit action decreases while total action increases during self-organization.

2. Demonstrate the Predictive Power of the Model: Build and test a model that quantitatively and numerically measures the amount of organization in a system and predicts the most organized state as the one with the least average unit action and the highest average action efficiency. Define the cases in which action is minimized and, based on that, predict the most organized state of the system. The theoretical most organized state is the one in which the edges of the network are geodesics. Due to the stochastic nature of complex systems, these states are approached only asymptotically, and in their vicinity the action is stationary due to local minima.

3. Validate a New Measure of Organization: Based on 1 and 2, develop and apply the concept of average action efficiency, rooted in the principle of least action, as a quantitative measure of organization in complex systems.

4. Explain Mechanisms of Progressive Development and Evolution: Apply a model of positive feedback between system characteristics to predict exponential growth and power-law relationships, providing a mechanism for continuous self-organization. Test it by fitting its solutions to the simulation data, and compare them to real-world data from the literature.

5. Simulate Self-Organization Using Agent-Based Modeling: Use agent-based modeling (ABM) to simulate the behavior of an ant colony navigating between a food source and its nest to explore how self-organization emerges in a complex system.

6. Define unit-total (local-global) dualism: Investigate and define the concept of unit-total dualism, where unit quantities are minimized while total quantities are maximized as the system grows, and explain its implications as variational principles for complex systems.

7. Contribute to the Fundamental and Philosophical Understanding of Self-Organization and Causality: Enhance the theoretical understanding of self-organization in complex systems, offering a robust framework for future research and practical applications.

This research aims to provide a robust framework for understanding and quantifying self-organization in complex systems based on a dynamical principle of decreasing unit action for one edge in a complex system represented as a network. By introducing Average Action Efficiency (AAE) and developing a predictive model based on the principle of least action, we address critical gaps in existing theories and offer new insights into the dynamics of complex systems. The following sections will delve deeper into the theoretical foundations, model development, methodologies, results, and implications of our study.

2. Building the Model

2.1. Hamilton’s Principle of Stationary Action for a System

In this work, we use Hamilton’s Principle of Stationary Action, a variational method, to study self-organization in complex systems. The action is stationary when its first variation is zero. When the second variation is also positive, the action is a minimum; only in this case do we have a true least action principle. We will discuss the situations in which this is the case. Hamilton’s Principle of Stationary Action asserts that a system evolves between two states along the path that makes the action functional stationary. By identifying and extremizing this functional, we can gain a deeper understanding of the dynamics and driving forces behind self-organization and describe it from first principles.

The simplified model presented here is a first-order approximation, given as an example; the Lagrangian for the agent-based simulation is described in Section 3.

The classical Hamilton’s principle is:

$$\delta I = \delta \int_{t_1}^{t_2} L\big(q(t), \dot{q}(t), t\big)\,dt = 0$$

where $\delta$ denotes an infinitesimally small variation in the action integral I, L is the Lagrangian, $q(t)$ are the generalized coordinates, $\dot{q}(t)$ are the time derivatives of the generalized coordinates, and t is the time; $t_1$ and $t_2$ are the initial and final times of the motion.

For brevity, further in the text we will write $q$ and $\dot{q}$ instead of $q(t)$ and $\dot{q}(t)$ when appropriate.

This is the principle from which all of physics and all observed equations of motion can be derived. The above equation is for one object. A complex system, however, has many interacting agents, so we propose that the sum of the actions of all agents be taken into account. This sum is minimized in the system’s most action-efficient state, which we define as its most organized state. In previous papers [8, 10, 11, 12], we have stated that for an organized system we can find the natural state of that system as the one in which the variation of the sum of actions of all of the agents is zero:

$$\delta \sum_{i=1}^{n} I_i = \delta \sum_{i=1}^{n} \int_{t_1}^{t_2} L_i\,dt = 0$$

where $I_i$ is the action of the i-th agent, $L_i$ is the Lagrangian of the i-th agent, n is the number of agents in the system, and $t_1$ and $t_2$ are the initial and final times of the motions.

A network representation of a complex system.

When we represent the system as a network, we can define one edge crossing as a unit of motion, or one event in the system, for which the unit average action efficiency is defined. In this case, the sum of the actions of all agents over all of their edge crossings per unit time is the total amount of action in the network, Q; the total number of these crossings per unit time is the flow of events, $\phi$. In the most organized state of the system, the variation of the total action, Q, is zero, which means that it is extremized as well, and for the complex system in our example this extremum is a maximum.

2.2. An Example of True Action Minimization: Conditions

This is a conceptual example, intended to convey the idea of the model. Later, we specify it for our simulation, with the actual interactions between the agents.

The agents are free particles, not subject to any forces, so the potential energy is a constant and can be set to be zero because the origin for the potential energy can be chosen arbitrarily, therefore

. Then, the Lagrangian

L of the element is equal only to the kinetic energy

of that element:

where

m is the mass of the element, and

v is its speed.

We are assuming that there is no energy dissipation in this system, so the Lagrangian of the element is a constant:

The mass m and the speed v of the element are assumed to be constants.

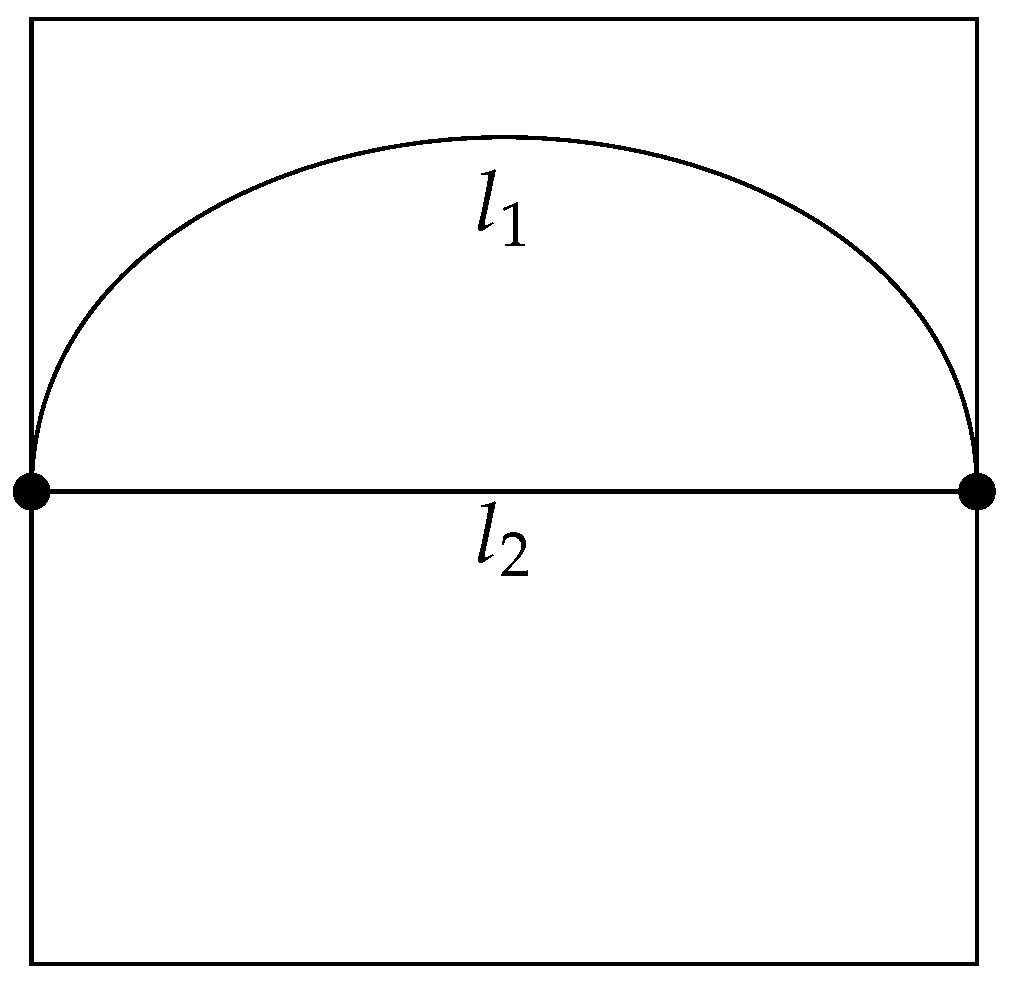

The start point and the end point of the trajectory of the element are fixed at opposite sides of a square (see Fig. A1). This produces the consequence that the action integral cannot become zero, because the endpoints cannot get infinitely close together:

The action integral cannot become infinity, i.e., the trajectory cannot become infinitely long:

In each configuration of the system, the actual trajectory of the element is determined as the one with the Least Action from Hamilton’s Principle:

The medium inside the system is isotropic (it has all its properties identical in all directions). The consequence of this assumption is that the constant velocity of the element allows us to substitute the interval of time with the length of the trajectory of the element.

The second variation of the action is positive, because , and , therefore the action is a true minimum.
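The positivity of the second variation for a free particle can be checked numerically. The sketch below is an illustration only, not the simulation used in this paper: it discretizes the action of a free particle ($V = 0$, unit mass) moving between fixed endpoints on opposite sides of a unit square, and compares the straight path with paths perturbed by a variation that vanishes at both endpoints. The discretization, the unit time interval, and the function name `discrete_action` are assumptions made for the example.

```python
import numpy as np

def discrete_action(path, m=1.0, dt=1.0):
    """Discretized free-particle action: I = sum over steps of (1/2) m |v|^2 dt.

    `path` has shape (N + 1, 2); its first and last rows are the fixed endpoints.
    """
    velocities = np.diff(path, axis=0) / dt
    kinetic = 0.5 * m * np.sum(velocities**2, axis=1)
    return float(np.sum(kinetic * dt))

N = 50                                  # number of time steps on the interval [0, 1]
dt = 1.0 / N
s = np.linspace(0.0, 1.0, N + 1)[:, None]
start, end = np.array([0.0, 0.5]), np.array([1.0, 0.5])
straight = start + s * (end - start)    # uniform motion along the straight line

# Variation perpendicular to the path, zero at both endpoints (fixed-endpoint condition).
bump = np.zeros_like(straight)
bump[:, 1] = np.sin(np.pi * s[:, 0])
bump[[0, -1], 1] = 0.0                  # enforce exact zeros at the endpoints

I0 = discrete_action(straight, dt=dt)
increments = {}
for eps in (0.05, 0.1, 0.2):
    increments[eps] = discrete_action(straight + eps * bump, dt=dt) - I0
    print(f"eps = {eps:4.2f}   I - I0 = {increments[eps]:.6f}")
```

Every perturbed path has a larger action, and the increment grows as $\varepsilon^2$ (doubling $\varepsilon$ multiplies it by four), which is the signature of a positive second variation about a true minimum.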

2.3. Building the Model

In our model, the organization is proportional to the inverse of the average of the sum of actions of all elements (8). This is the average action efficiency, which we label with the symbol $\alpha$. Here, average action efficiency measures the amount of organization of the system. In a complex network, many different arrangements can correspond to the same action efficiency and therefore have the same level of organization. Thus, the average action efficiency represents the macrostate of the system: many possible microstates, i.e., combinations of nodes, paths, and agents on the network, can correspond to the same macrostate as measured by $\alpha$. This is analogous to temperature in statistical mechanics, which represents a macrostate corresponding to many microstates of the molecular arrangements in a gas.

We multiply the numerator by Planck’s constant h. The average action efficiency then becomes inversely proportional to the average number of action quanta for one crossing between two nodes in the system, in a given interval of time. This also provides an absolute reference point, h, for the measure of organization. The units in this case are the total number of events in the system per unit time, divided by the number of quanta of action:

$$\alpha = \frac{h\,n\,m}{\sum_{i=1}^{n}\sum_{j=1}^{m} I_{ij}}$$

where n is the number of agents, m is the average number of nodes each agent crosses per unit time, and $I_{ij}$ is the action of the i-th agent for its j-th crossing. If we multiply the number of agents by the number of crossings for each agent, we obtain the flow of events in the system per unit of time:

$$\phi = n\,m$$

In the denominator, the sum of all actions of all agents and all crossings is the total action per unit time in the system. When it is divided by Planck’s constant, it takes the meaning of the number of quanta of action, Q:

$$Q = \frac{1}{h}\sum_{i=1}^{n}\sum_{j=1}^{m} I_{ij}$$

For simplicity and clarity of presentation, when appropriate, we will set $h = 1$.

The equation for average action efficiency can then be rewritten simply as the total number of events in the system per unit time, divided by the total number of quanta of action:

$$\alpha = \frac{\phi}{Q}$$
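As an illustration of $\alpha = \phi/Q$, the following minimal sketch, assuming the action of every edge crossing observed in a time window is already known (for example, logged by a simulation), computes the flow of events, the number of action quanta, and their ratio. The function name `average_action_efficiency` and the sample values are hypothetical.

```python
import numpy as np

def average_action_efficiency(crossing_actions, window, h=1.0):
    """Return (phi, Q, alpha) for all edge crossings observed during `window`.

    phi   : flow of events, number of crossings per unit time (n * m)
    Q     : total action per unit time, counted in quanta of h
    alpha : average action efficiency, phi / Q
    """
    actions = np.asarray(crossing_actions, dtype=float)
    phi = actions.size / window
    Q = actions.sum() / (window * h)
    return phi, Q, phi / Q

# Hypothetical log: 3 agents, 2 crossings each, during a window of 10 time units.
actions = [4.0, 6.0, 5.0, 5.0, 4.0, 6.0]
phi, Q, alpha = average_action_efficiency(actions, window=10.0)
print(phi, Q, alpha)
```

Because $\phi$ and Q are divided by the same window, $\alpha$ reduces to h divided by the mean action per crossing; the length of the observation window cancels.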

In our simulation, the average path length is equal to the average time because the speed of the agents in the simulation is set to one patch per second.

When the Lagrangian does not depend on time, because the speed is constant and there is no friction, as in this simulation, the kinetic energy is constant (Assumption #2), so the action integral takes the form:

$$I = \int_{t_1}^{t_2} L\,dt = T\,\Delta t$$

where $\Delta t = t_2 - t_1$ is the interval of time that the motion of the agent takes.

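This is for an individual trajectory. Summing over all trajectories, we get the total number of events (the flow) times the average time of one crossing: the sum of all times for all events equals the number of events times the average time, $\langle t\rangle$. Then, for identical agents, the denominator of the equation for average action efficiency (Eq. 9) becomes:

$$\sum_{i=1}^{n}\sum_{j=1}^{m} I_{ij} = n\,m\,T\,\langle t\rangle = \phi\,T\,\langle t\rangle$$

Therefore:

$$\alpha = \frac{h\,\phi}{\phi\,T\,\langle t\rangle} = \frac{h}{T\,\langle t\rangle}$$

and, with $h = 1$:

$$\alpha = \frac{1}{T\,\langle t\rangle}$$

We are free to set the mass to two, and the speed is one patch per second, so the kinetic energy is $T = \frac{1}{2} m v^2 = \frac{1}{2}\cdot 2 \cdot 1^2 = 1$.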
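For identical agents with constant kinetic energy, the general ratio $\phi/Q$ should reduce to $h/(T\langle t\rangle)$. The sketch below checks this numerically with the values used in the text (mass 2, speed one patch per second, so $T = 1$, and $h = 1$); the crossing times and the observation window are made-up illustrative numbers, not simulation output.

```python
import numpy as np

h = 1.0
mass, speed = 2.0, 1.0                 # values chosen in the text
T = 0.5 * mass * speed**2              # kinetic energy, equal to 1 here

crossing_times = np.array([12.0, 10.0, 11.0, 9.0, 8.0])  # hypothetical, in seconds
window = 100.0                         # hypothetical observation window

# General definition: alpha = phi / Q, with the action of each crossing I = T * t.
phi = crossing_times.size / window
Q = np.sum(T * crossing_times) / (window * h)
alpha_general = phi / Q

# Closed form for identical agents: alpha = h / (T <t>).
alpha_closed = h / (T * crossing_times.mean())

print(alpha_general, alpha_closed)
```

With a speed of one patch per second, the average crossing time $\langle t\rangle$ also equals the average path length in patches, so in these units $\alpha = 1/\langle l\rangle$.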

Since Planck’s constant is a fundamental unit of action, even though action can vary continuously, this equation represents how far the organization of the system is from a highly action-efficient state in which there is only one Planck unit of action per event. The action itself can be even smaller than h [44]. This provides a path to further continuous improvement in the level of organization of systems below one quantum of action.

An example for one agent:

To illustrate the simplest possible case, for clarity, we apply this model to the example of a closed system in two dimensions with only one agent. We define the boundaries of the fixed system to form a square.

The endpoints here represent two nodes in a complex network. Thus the model is limited only to the path between the two nodes. The expansion of this model will be to include many nodes in the network and to average over all of them. Another extension is to include many elements, different kinds of elements, obstacles, friction, etc.

Figure 1 shows the boundary conditions for the system used in this example. In this figure, we present the boundaries of the system and define the initial and final points of the motion of an agent as two of the nodes in a complex network. It compares two different states of organization of the system: it is a schematic representation of the two states and of the path of the agent in each case. Here $l_1$ and $l_2$ are the lengths of the trajectory of the agent in each case. (a) A trajectory of an agent in a certain state of the system, given by the configuration of the internal constraints, with length $l_1$. (b) A different configuration allowing the trajectory of the element to decrease by $\Delta l$, to $l_2$, the shortest possible path.

For this case, we set $n = 1$ and $m = 1$, which corresponds to one crossing of one agent between two nodes in the network. The approximation of an isotropic medium (Assumption #7) allows us to express the time through the speed of the element when it is constant (Assumption #3). We can then solve the definition of average velocity, $v = l/\Delta t$, for the interval of time as $\Delta t = l/v$, where l is the length of the trajectory of the element between the endpoints in each case.

The speed of the element, v, is fixed to be another constant, so the action integral takes the form:

$$I = T\,\Delta t = \frac{1}{2} m v^2\,\frac{l}{v} = \frac{1}{2} m v l$$

When we substitute this equation into the equation for action efficiency with $n = 1$ and $m = 1$ (in the resulting expression, m denotes the mass), we obtain:

$$\alpha = \frac{h}{I} = \frac{2h}{m v l}$$

For the simulation in this example, l is the distance that the ants travel between food and nest. Because h, m, and v are all constants, we can simplify this by setting $K = \frac{2h}{m v}$, so that:

$$\alpha = \frac{K}{l}$$

We can set this constant to $K = 1$ when necessary.

2.4. Analysis of System States

Now we turn to the two states of the system with different actions of the elements, as shown in Figure 1. The organization of those two states is, respectively:

$$\alpha_1 = \frac{K}{l_1}, \qquad \alpha_2 = \frac{K}{l_2}$$

In Figure 1, the length of the trajectory in the second case (b) is shorter, $l_2 < l_1$, which indicates that state 2 has better organization. The difference between the organizations in the two states of the same system is generally expressed as:

$$\frac{\alpha_2 - \alpha_1}{\alpha_2} = \frac{K/l_2 - K/l_1}{K/l_2} = \frac{l_1 - l_2}{l_1}$$

This can be rewritten as:

$$\frac{\Delta\alpha}{\alpha_2} = \frac{\Delta l}{l_1}$$

where $\Delta\alpha = \alpha_2 - \alpha_1$ and $\Delta l = l_1 - l_2$.

This is for one agent in the system. For a multi-agent system, we use the average path length.

2.5. Average Action Efficiency (AAE)

In the previous example, the shorter trajectory represents a more action-efficient state, in terms of how much total action is necessary for an event in the system, which here is the agent crossing between the nodes. If we expand to many agents between the same two nodes, all with slightly different trajectories, we use the average action necessary for each agent to cross between the nodes to calculate the average action efficiency. Average action efficiency measures how efficiently a system uses energy and time to perform its events. More organized systems are more action-efficient because they can perform the events in the system with fewer resources, in this example, energy and time.

We can start from the presumption that the average action efficiency in the most organized state is always greater than or equal to its value in any other configuration, arrangement, or structure of the system. By varying the configurations of the structure until the average action efficiency is maximized, we can identify the most organized state of the system. This state corresponds to the minimum average action per event in the system, adhering to the principle of least action. We refer to this as the ground or most stable state of the system, as it requires the least amount of action per event. All other states are less stable because they require more energy and time to perform the same functions.

If we define average action efficiency as the ratio of useful output (here, the crossing between the nodes; in other systems, any other measure of output) to the total input (the energy and time expended), then a system that achieves higher action efficiency is more organized. This is because higher efficiency indicates a more coordinated, effective interaction among the system’s components, minimizing wasted energy or resources for its functions.

During self-organization, a system transitions from a less organized to a more organized state. If we monitor the action efficiency over time, an increase in efficiency could indicate that the system is becoming more organized, as its components interact in a more coordinated way and with fewer wasted resources. In this way, we can measure both the level of organization and the rate of increase of action efficiency, which correspond to the level and the rate of self-organization, evolution, and development in a complex system.
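Monitoring the action efficiency over time amounts to computing $\alpha$ at each time step and differencing the resulting series. A minimal sketch, assuming per-tick counts of crossings and per-tick totals of action are available; the arrays below are hypothetical and only illustrate the bookkeeping. The rate of self-organization is approximated here with `numpy.gradient`, which is one possible finite-difference choice among many.

```python
import numpy as np

def aae_series(crossings_per_tick, action_per_tick, h=1.0):
    """alpha(t) = phi(t) / Q(t) for each tick (ticks with zero total action are not handled)."""
    phi = np.asarray(crossings_per_tick, dtype=float)
    Q = np.asarray(action_per_tick, dtype=float) / h
    return phi / Q

# Hypothetical data for five consecutive ticks.
crossings = [2, 4, 8, 12, 14]
total_action = [40.0, 60.0, 96.0, 120.0, 126.0]

alpha = aae_series(crossings, total_action)
rate = np.gradient(alpha, 1.0)   # d(alpha)/dt, with a tick length of 1
print(np.round(alpha, 4))
print(np.round(rate, 4))
```

A positive rate throughout indicates that, in this made-up series, the system becomes steadily more action-efficient, i.e., more organized.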

To use action efficiency as a quantitative measure, we need to define and calculate it precisely for the system in question. For example, in a biological system, efficiency might be measured in terms of energy conversion efficiency in cells. In an economic system, it can be the ratio of production of an item to the total time, energy, and other resources expended. In a social system, it could be the ratio of successful outcomes to the total efforts or resources expended.

The predictive power of the Principle of Least Action for Self-Organization

For the simplest example here, with only two nodes, theoretically calculating the least action state as the straight line between the nodes yields the same state as the final organized state in the simulation in this paper. Minimizing the action gives this geodesic of the natural motion of objects, which is consistent with experimental results. When there are obstacles to the motion of agents, the geodesic is a curve described by the metric tensor. To extend this prediction to multi-agent systems, we minimize the average action between the endpoints. The most organized state in the current simulation is thus predicted theoretically from the principle of least action. The Principle of Least Action therefore provides predictive power: we can calculate the most organized state of a system and verify it with simulations or experiments. In engineered or social systems, it can be used to predict the most organized state and then construct it.
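On a discrete grid, the least-action path between two nodes at constant speed is simply the shortest path, which can be found by breadth-first search. The sketch below is a hedged illustration, not the method used in the simulation: it uses a 4-connected grid (so distances are Manhattan distances rather than Euclidean geodesics), marks obstacle cells with `#`, and compares $\alpha = h/(T l)$ with $T = h = 1$ for an open grid and for one containing an obstacle. The grids and the function name are hypothetical.

```python
from collections import deque

def shortest_path_length(grid, start, goal):
    """Breadth-first search on a 4-connected grid; '#' cells are obstacles.

    Returns the number of unit steps on a shortest path, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    dist = {start: 0}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        if (r, c) == goal:
            return dist[(r, c)]
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] != "#" and (nr, nc) not in dist):
                dist[(nr, nc)] = dist[(r, c)] + 1
                queue.append((nr, nc))
    return None

open_grid = [".......",
             ".......",
             "......."]
blocked_grid = [".......",
                "...#...",
                "......."]
start, goal = (1, 0), (1, 6)   # nest and food at opposite ends of the middle row

for name, grid in (("open", open_grid), ("blocked", blocked_grid)):
    l = shortest_path_length(grid, start, goal)
    print(name, "path length:", l, "alpha = 1/l =", round(1.0 / l, 4))
```

The obstacle forces a detour of two extra steps, so the best achievable $\alpha$ drops from $1/6$ to $1/8$: the predicted most organized state depends on the constraints, as the text notes for geodesics around obstacles.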

2.6. Multi-Agent

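Now we turn to the two states of the system with different average actions of the elements in Figure 1. The organization of those two states is, respectively:

$$\alpha_1 = \frac{K}{\langle l_1\rangle}, \qquad \alpha_2 = \frac{K}{\langle l_2\rangle}$$

The average length of the trajectories in the second case is shorter, $\langle l_2\rangle < \langle l_1\rangle$, which indicates that state 2 has better organization. The difference between the organizations in the two states of the same system is generally expressed as:

$$\frac{\alpha_2 - \alpha_1}{\alpha_2} = \frac{\langle l_1\rangle - \langle l_2\rangle}{\langle l_1\rangle}$$

This can be rewritten as:

$$\frac{\Delta\alpha}{\alpha_2} = \frac{\Delta\langle l\rangle}{\langle l_1\rangle}$$

where $\Delta\alpha = \alpha_2 - \alpha_1$ and $\Delta\langle l\rangle = \langle l_1\rangle - \langle l_2\rangle$.

This holds when we use the average lengths of the trajectories and the velocity is constant, so that time is proportional to length. In general, when the velocity varies, we need to use time.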

2.7. Using Time

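Here, the two states of the system have different average actions of the elements. Using the average crossing times, the organization of those two states is, respectively:

$$\alpha_1 = \frac{h}{T\,\langle t_1\rangle}, \qquad \alpha_2 = \frac{h}{T\,\langle t_2\rangle}$$

In Figure 1, the length of the trajectory in the second case (b) is shorter, so the average time for the trajectories is also shorter, $\langle t_2\rangle < \langle t_1\rangle$, which indicates that state 2 has better organization. The difference between the organizations in the two states of the same system is generally expressed as:

$$\frac{\alpha_2 - \alpha_1}{\alpha_2} = \frac{\langle t_1\rangle - \langle t_2\rangle}{\langle t_1\rangle}$$

This can be rewritten as:

$$\frac{\Delta\alpha}{\alpha_2} = \frac{\Delta\langle t\rangle}{\langle t_1\rangle}$$

where $\Delta\alpha = \alpha_2 - \alpha_1$ and $\Delta\langle t\rangle = \langle t_1\rangle - \langle t_2\rangle$.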

2.8. An Example

For the simplest example of one agent and one crossing between two nodes, if $l_1 = 2 l_2$, i.e., the first trajectory is twice as long as the second, this expression produces the result:

$$\frac{\alpha_2}{\alpha_1} = \frac{l_1}{l_2} = 2$$

indicating that state 2 is twice as well organized as state 1. Alternatively, substituting into Eq. 29, we have:

$$\frac{\Delta\alpha}{\alpha_2} = \frac{\Delta l}{l_1} = \frac{2 l_2 - l_2}{2 l_2} = \frac{1}{2}$$

that is, there is a 50% difference between the two organizations, which is the same as saying that the second state is quantitatively twice as well organized as the first one. This example illustrates the purpose of the model: a direct comparison between the amounts of organization in two different states of a system. When the changes in the average action efficiency are followed in time, we can measure the rates of self-organization, which we will explore in future work.
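The arithmetic of this example can be reproduced in a few lines. The sketch assumes a single agent, one crossing, constant speed, and no potential energy, so that the action of a crossing of length $l$ is $I = \tfrac{1}{2}mv^2 \cdot l/v = \tfrac{1}{2}mvl$ and $\alpha = h/I = 2h/(mvl)$; the parameter values (mass 2, speed 1, $h = 1$, $l_2 = 10$) are illustrative.

```python
def organization(length, mass=2.0, speed=1.0, h=1.0):
    """alpha = h / I with I = (1/2) m v l for one agent and one crossing."""
    action = 0.5 * mass * speed * length
    return h / action

l2 = 10.0
l1 = 2.0 * l2                                      # the first trajectory is twice as long

alpha1, alpha2 = organization(l1), organization(l2)
ratio = alpha2 / alpha1                            # alpha_2 / alpha_1 = l_1 / l_2
relative_difference = (alpha2 - alpha1) / alpha2   # = (l_1 - l_2) / l_1

print(ratio, relative_difference)
```

Both forms of the comparison agree: the ratio of organizations is 2, and the relative difference, normalized by the better-organized state, is one half.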

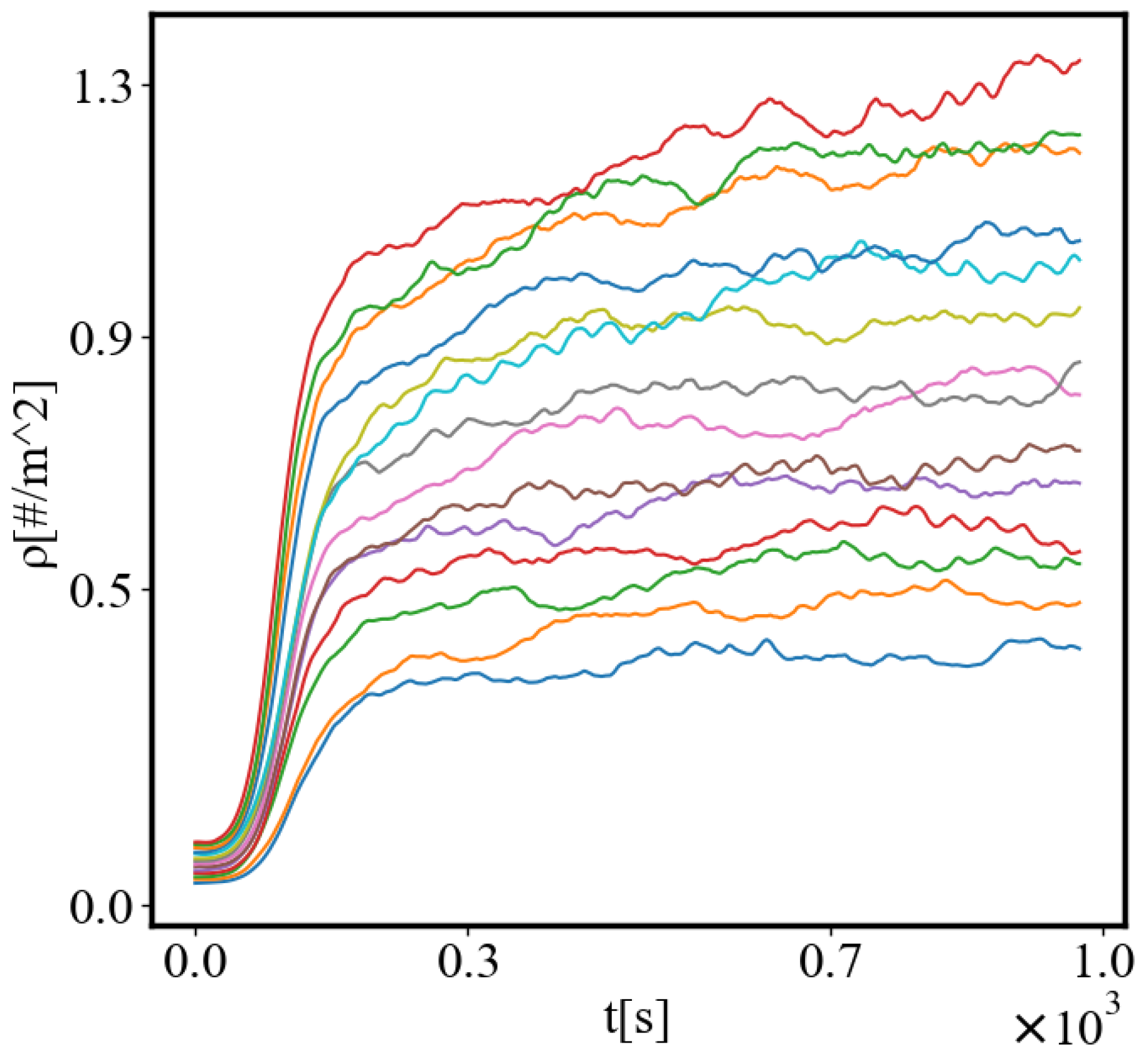

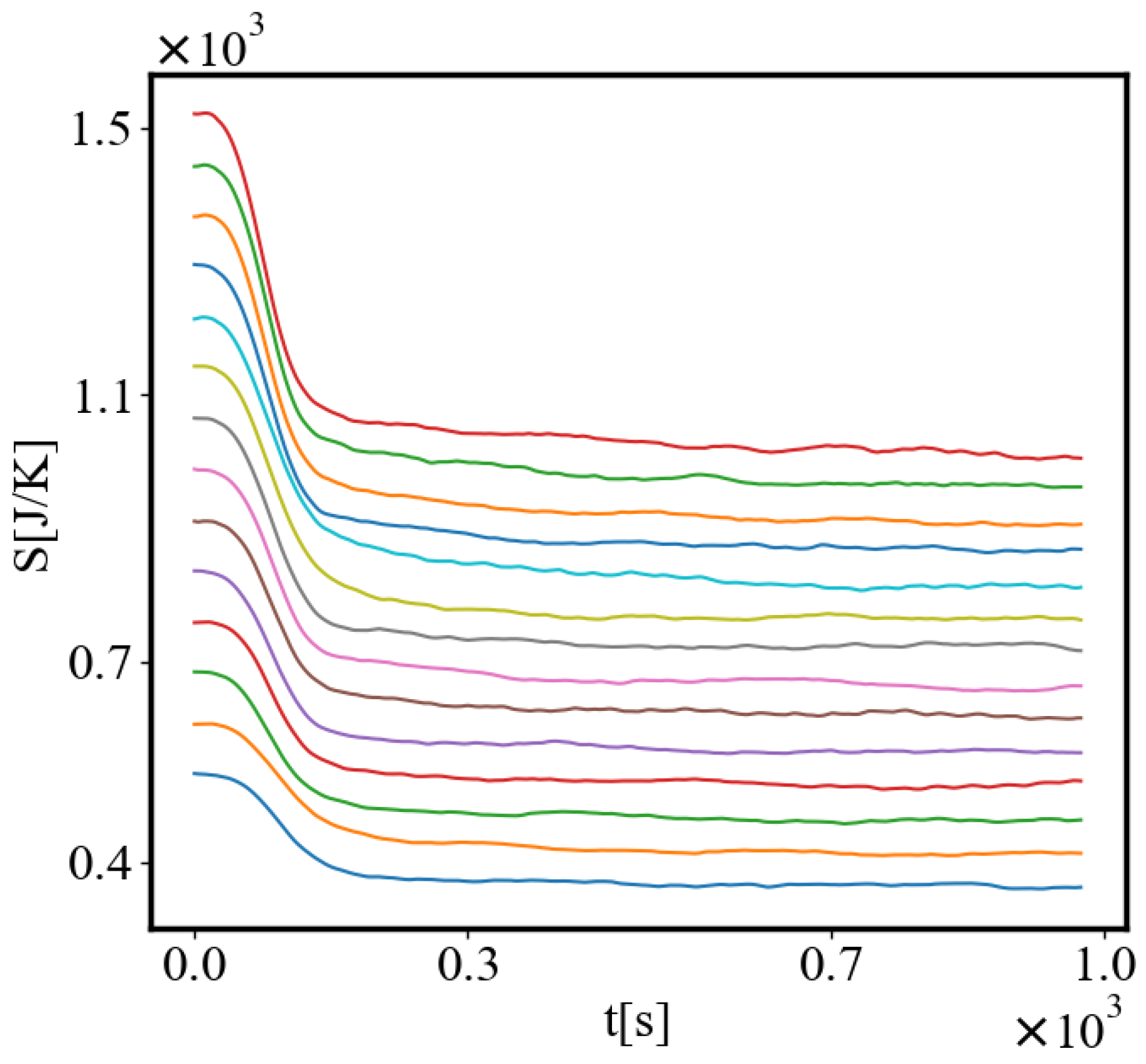

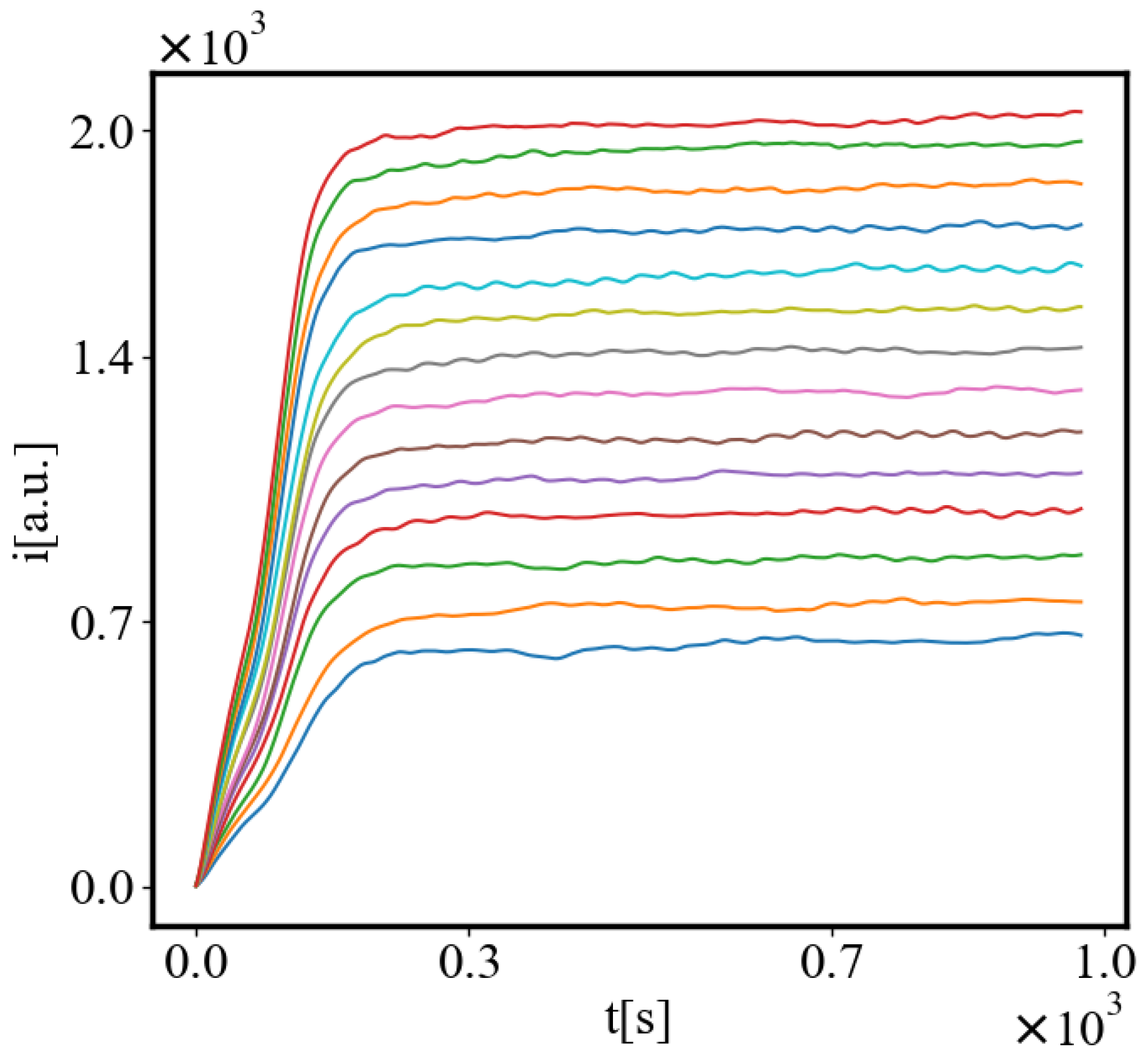

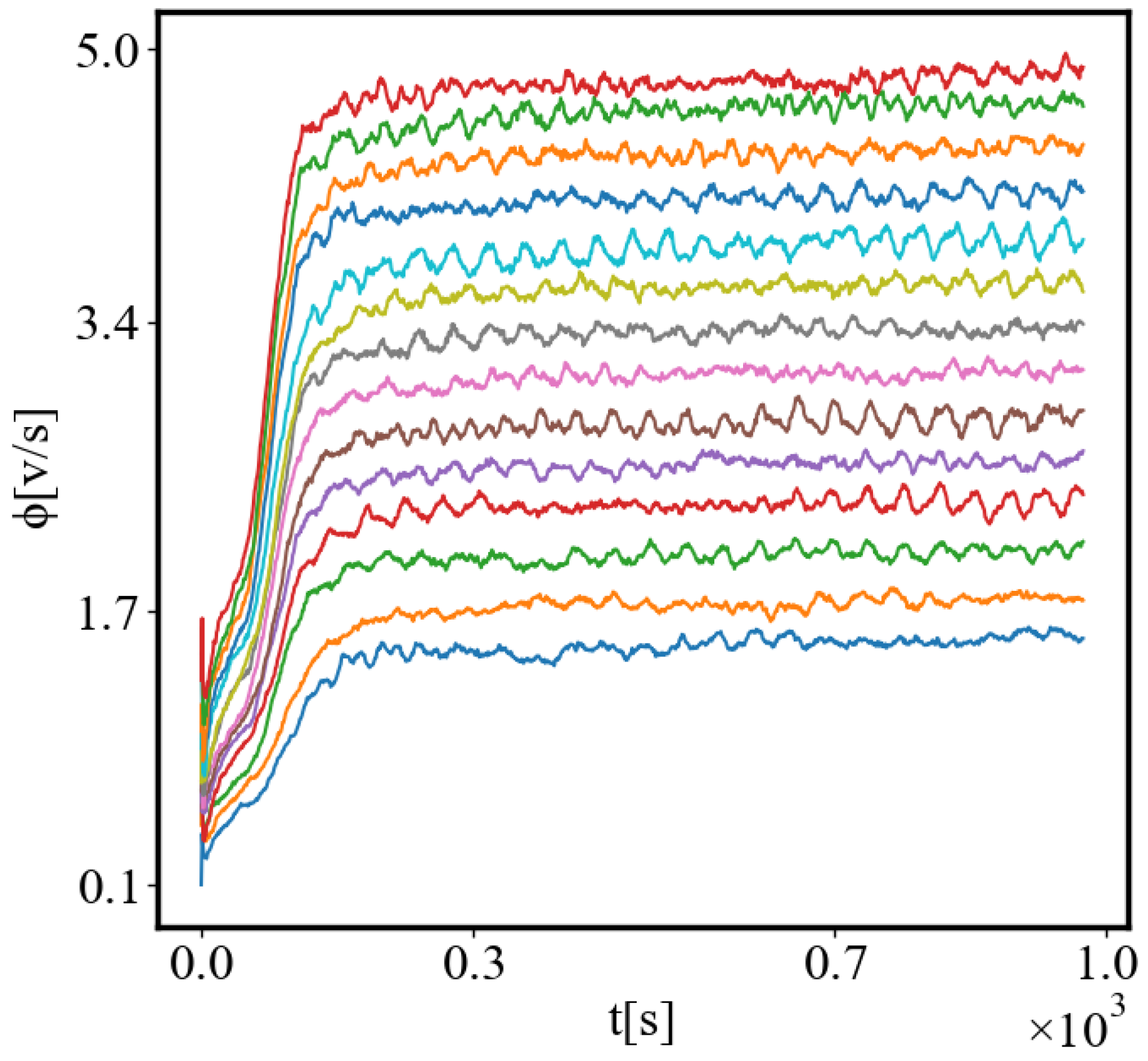

In our simulations, the higher the density and the lower the entropy of the agents, the shorter the paths and the crossing times, and the higher the action efficiency.

2.9. Unit-Total (Local-Global) Dualism

In addition to the classical stationary action principle for fixed, non-growing, non-self-organizing systems:

$$\delta I = 0$$

we find a dynamical action principle for growing, self-organizing systems. This principle exhibits a unit-total (local-global, min-max) dualism:

1. Average unit action for one edge decreases:

$$\frac{d\langle I\rangle}{dt} < 0$$

where $\langle I\rangle$ is the average action for one edge crossing. This is a principle of decreasing unit action for a complex system during self-organization, as the system becomes more action-efficient, until a limit is reached.

2. Total action of the system increases:

$$\frac{dQ}{dt} > 0$$

This is a principle of increasing total action for a complex system during self-organization, as the system grows, until a limit is reached.
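With the definitions used above ($h = 1$, $\alpha = \phi/Q$), the average unit action per event is $\langle I\rangle = Q/\phi = 1/\alpha$. Taking logarithmic derivatives makes explicit when the two halves of the dualism can hold at the same time; this is a direct consequence of those definitions, not an additional assumption of the model:

$$\langle I\rangle = \frac{Q}{\phi}
\quad\Longrightarrow\quad
\frac{1}{\langle I\rangle}\frac{d\langle I\rangle}{dt} = \frac{1}{Q}\frac{dQ}{dt} - \frac{1}{\phi}\frac{d\phi}{dt}$$

Hence $d\langle I\rangle/dt < 0$ and $dQ/dt > 0$ hold together exactly when $0 < \frac{1}{Q}\frac{dQ}{dt} < \frac{1}{\phi}\frac{d\phi}{dt}$: the flow of events must grow faster, in relative terms, than the total action. For instance, if $Q \propto \phi^{\beta}$, then $\langle I\rangle \propto \phi^{\beta - 1}$, and with a growing flow the dualism corresponds to $0 < \beta < 1$.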

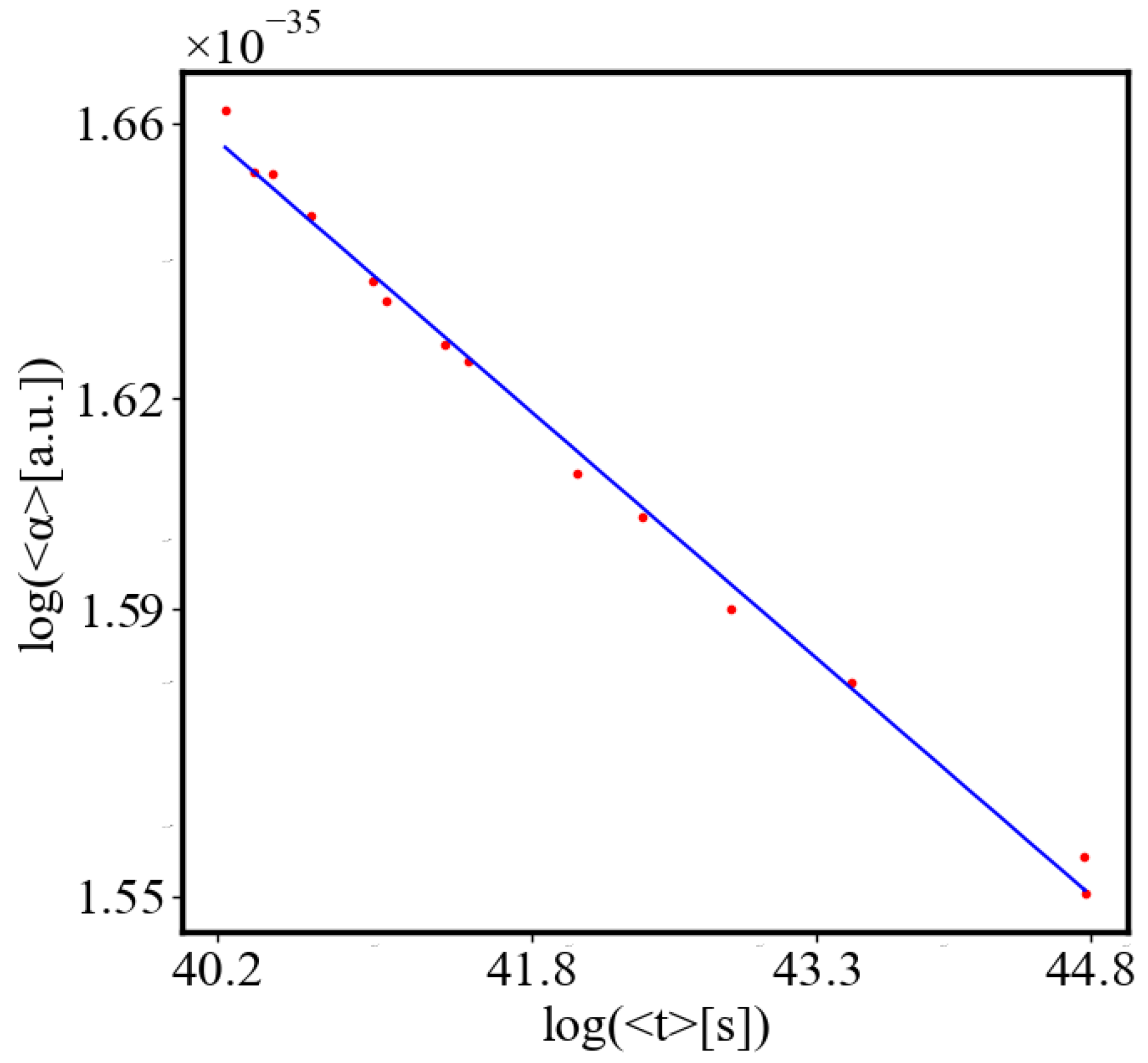

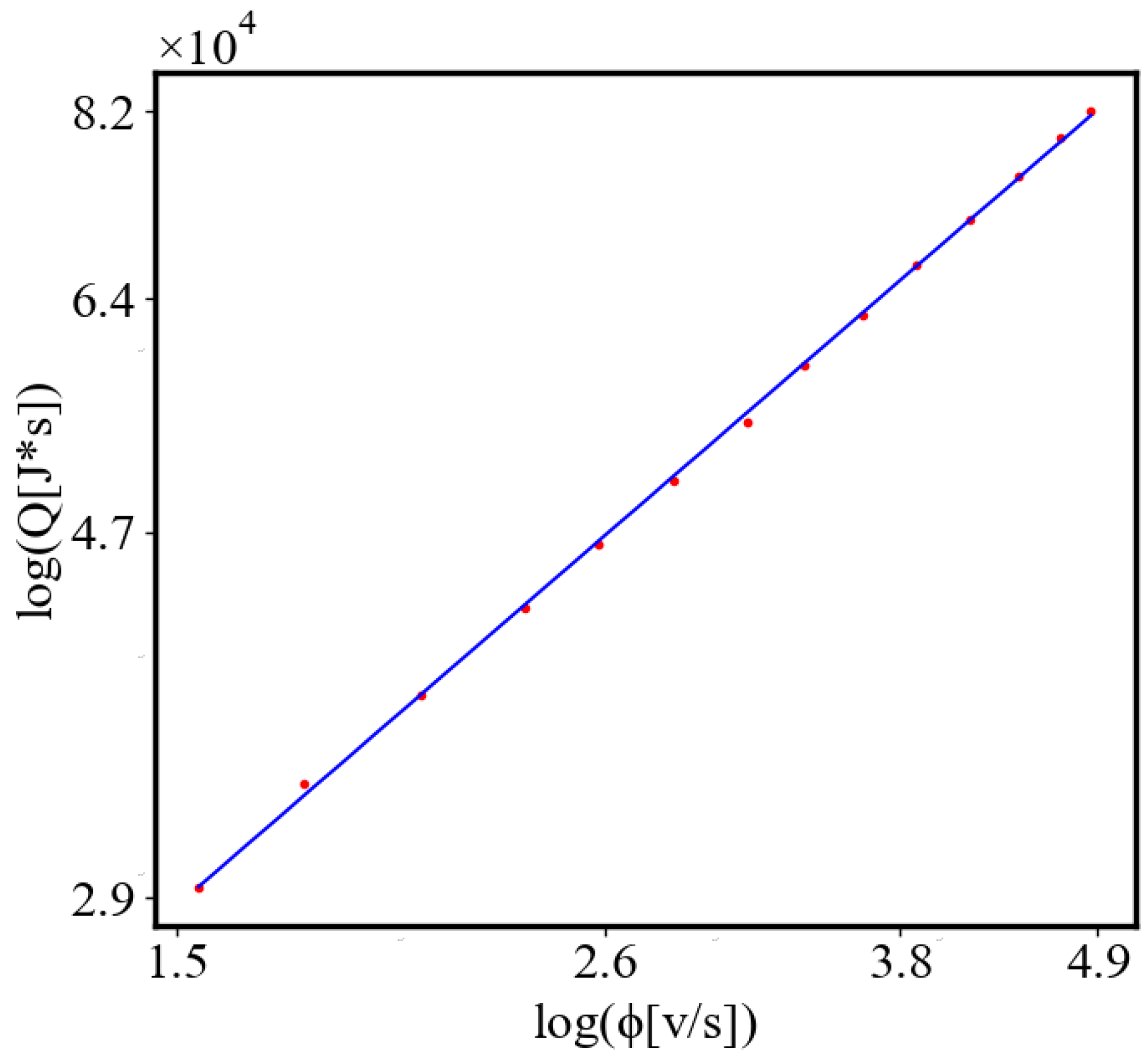

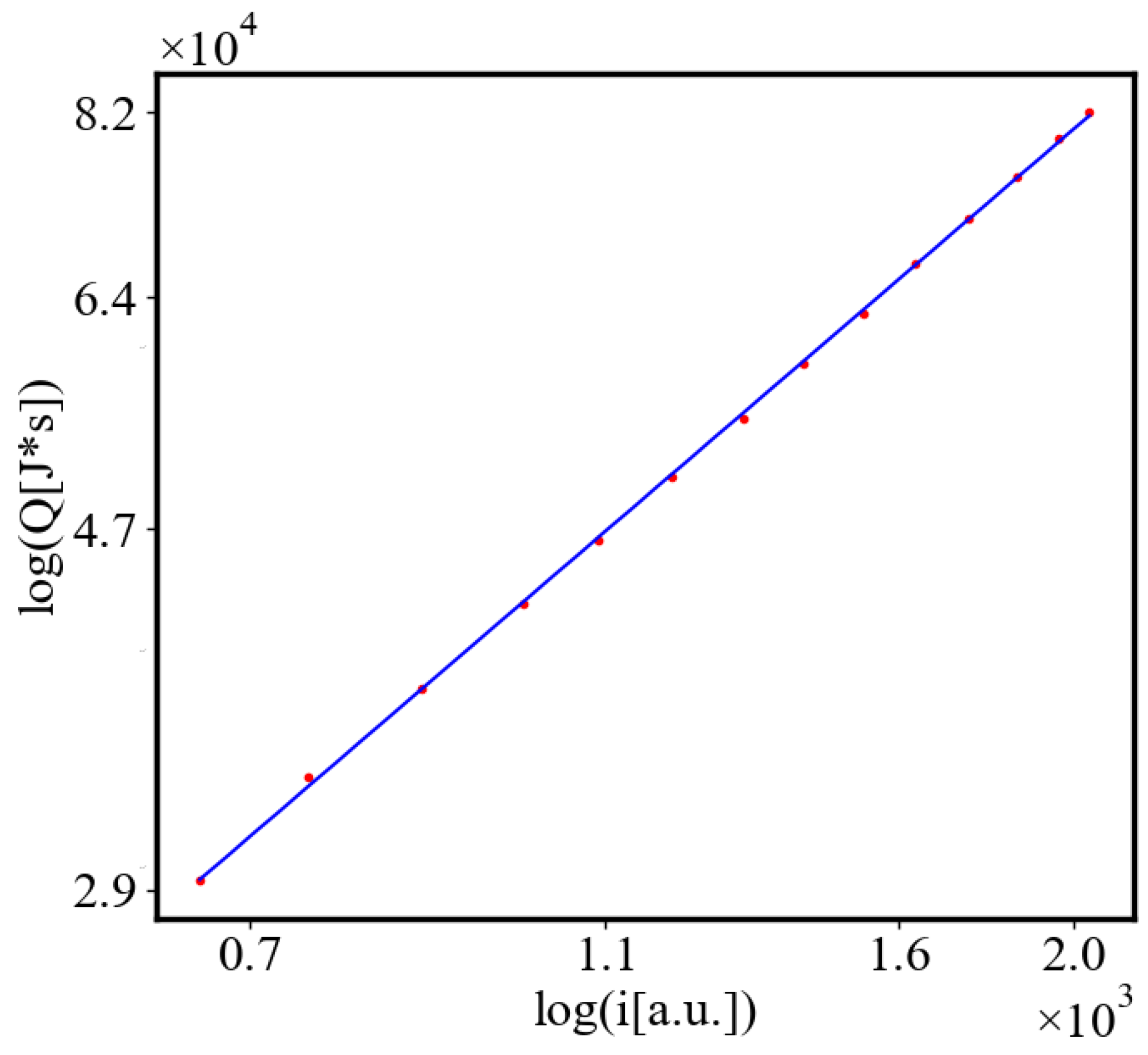

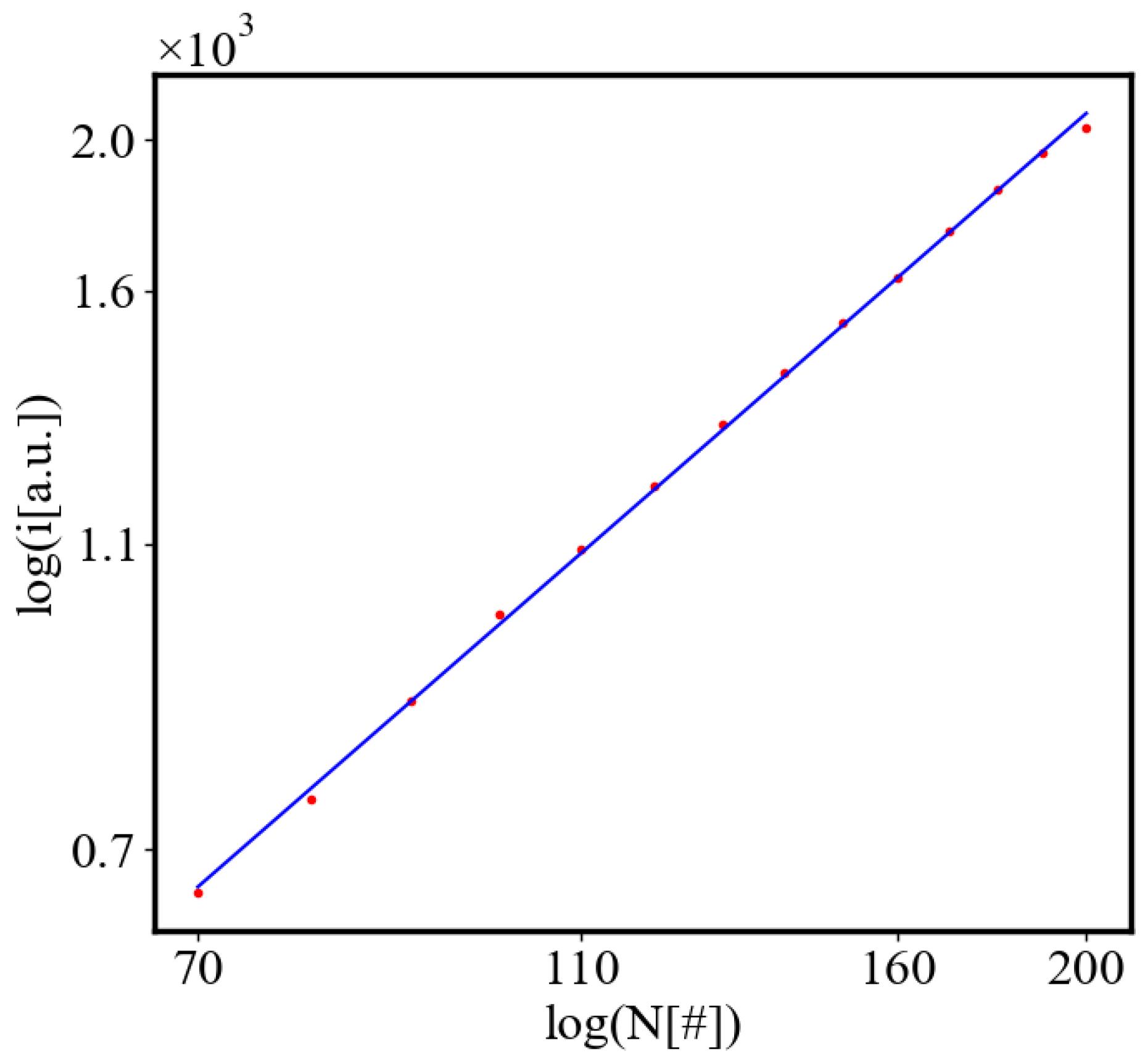

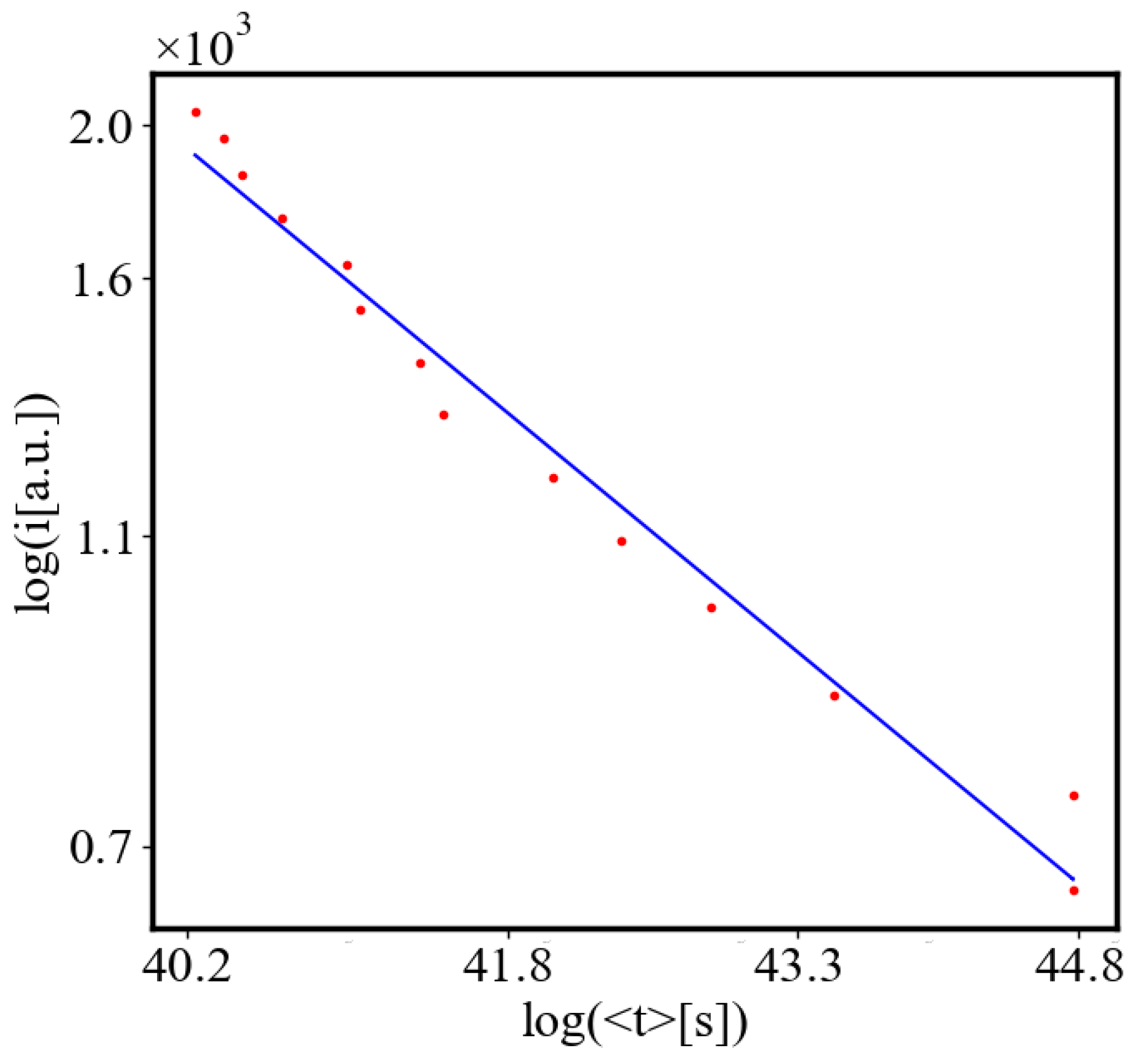

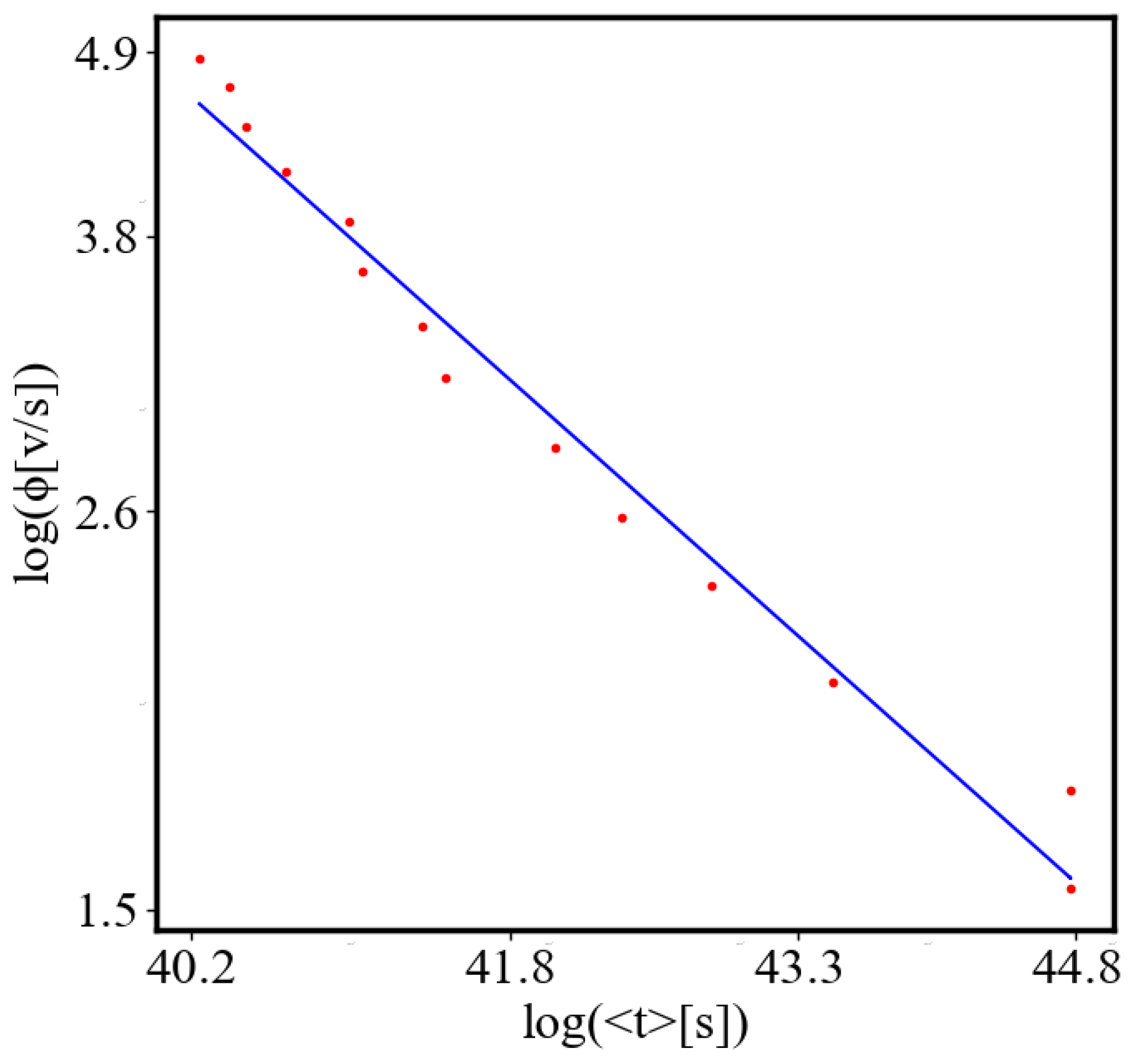

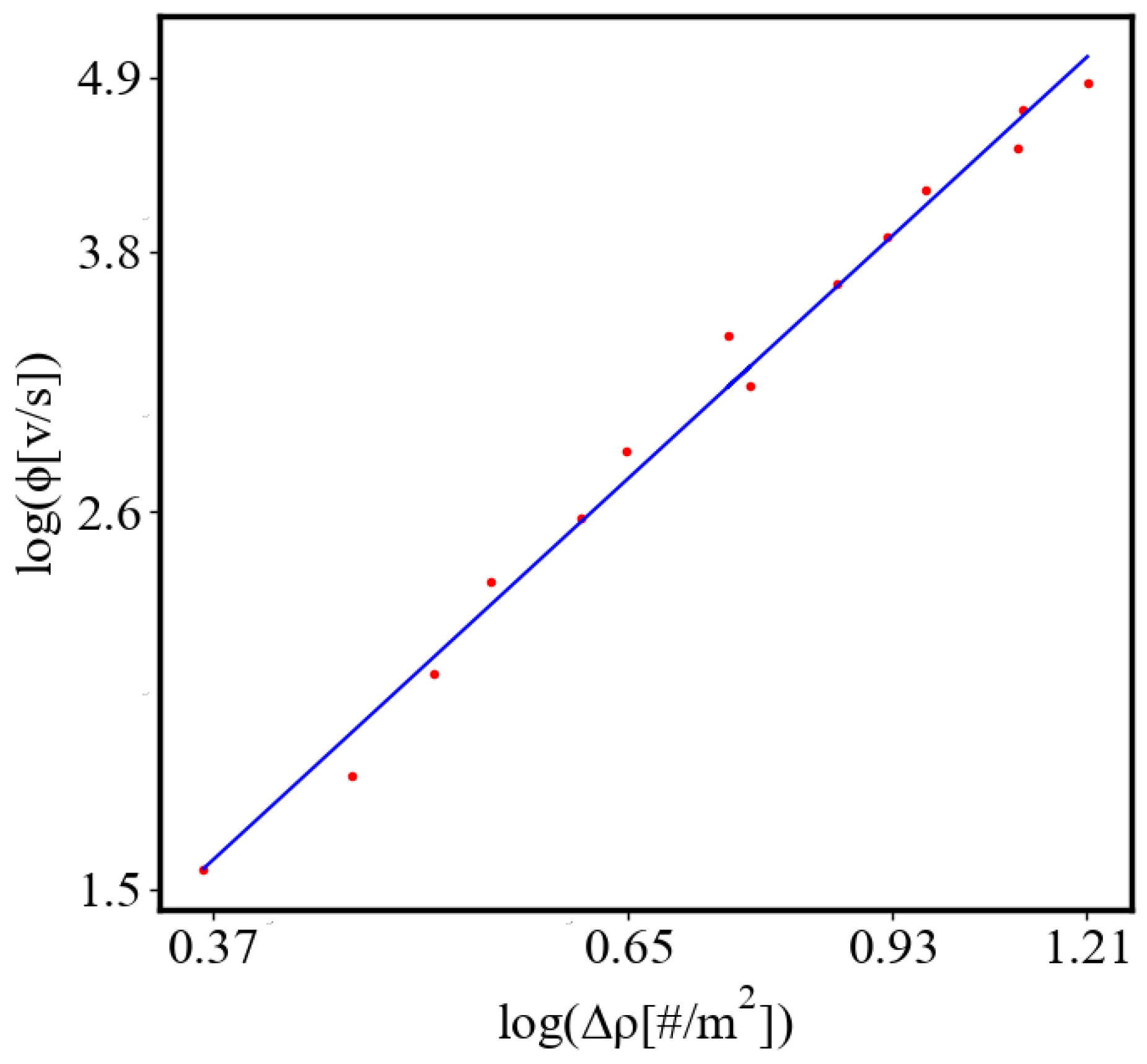

In our data, the average unit action decreases (i.e., the average action efficiency increases) while the total action increases.

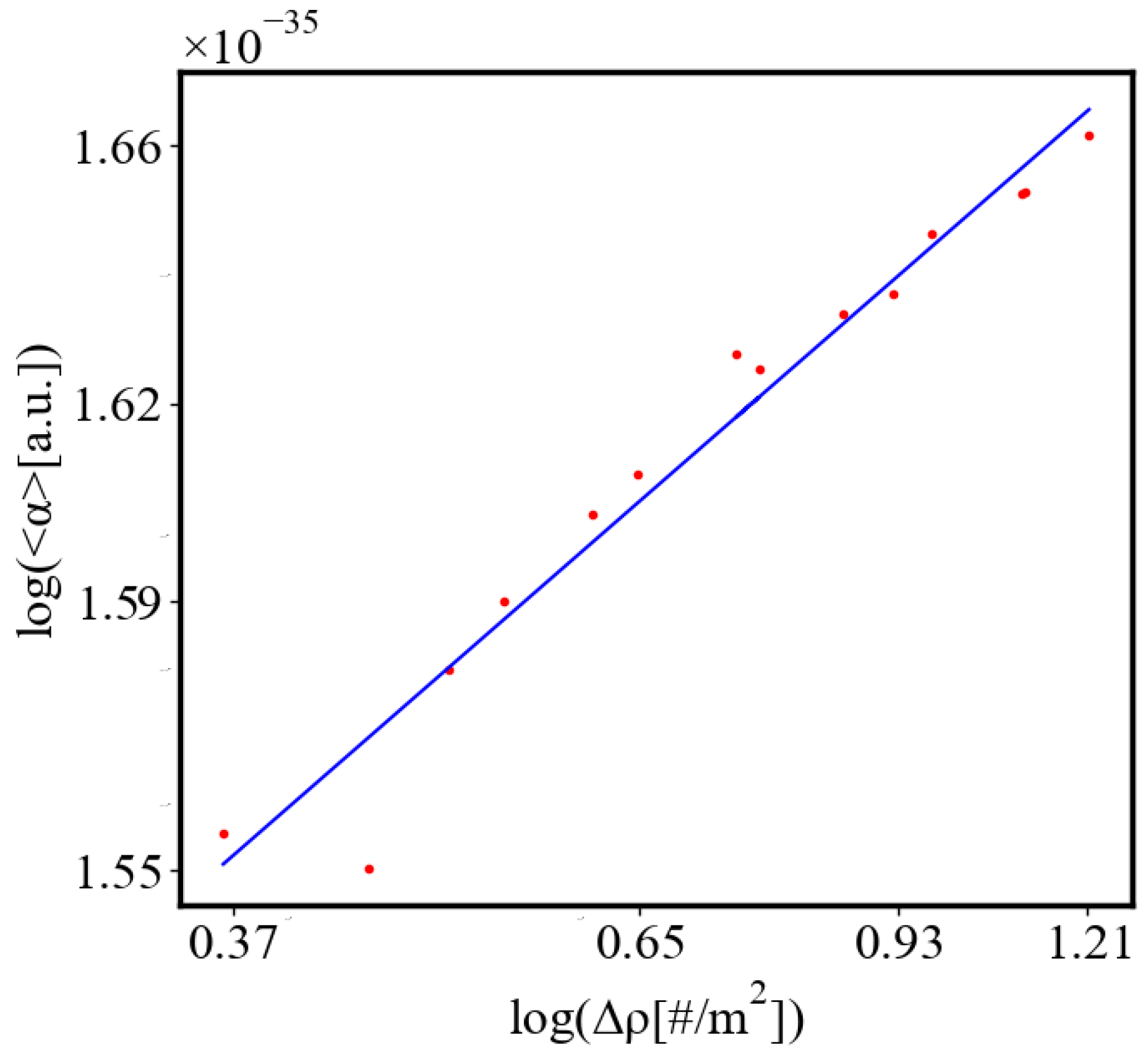

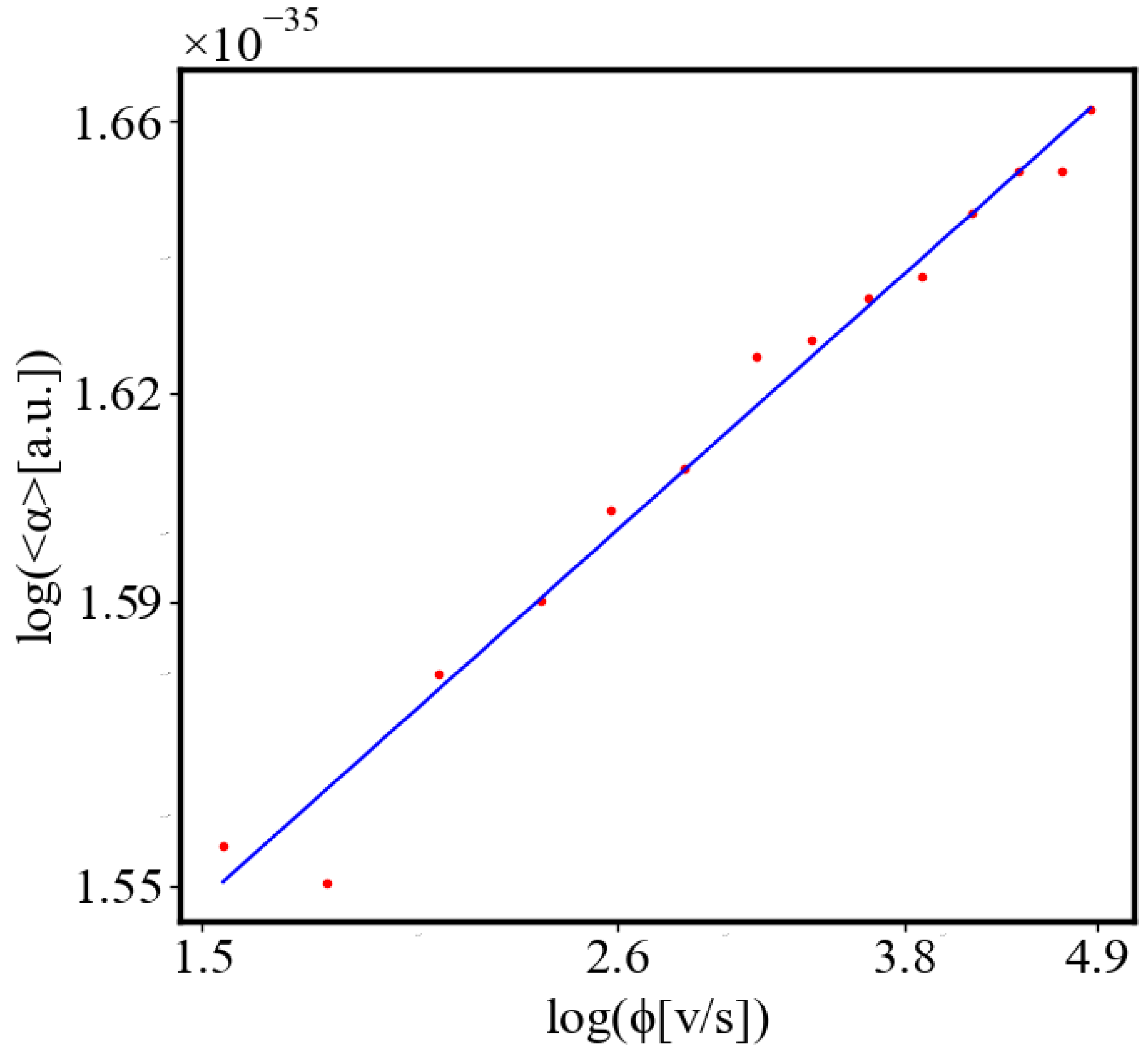

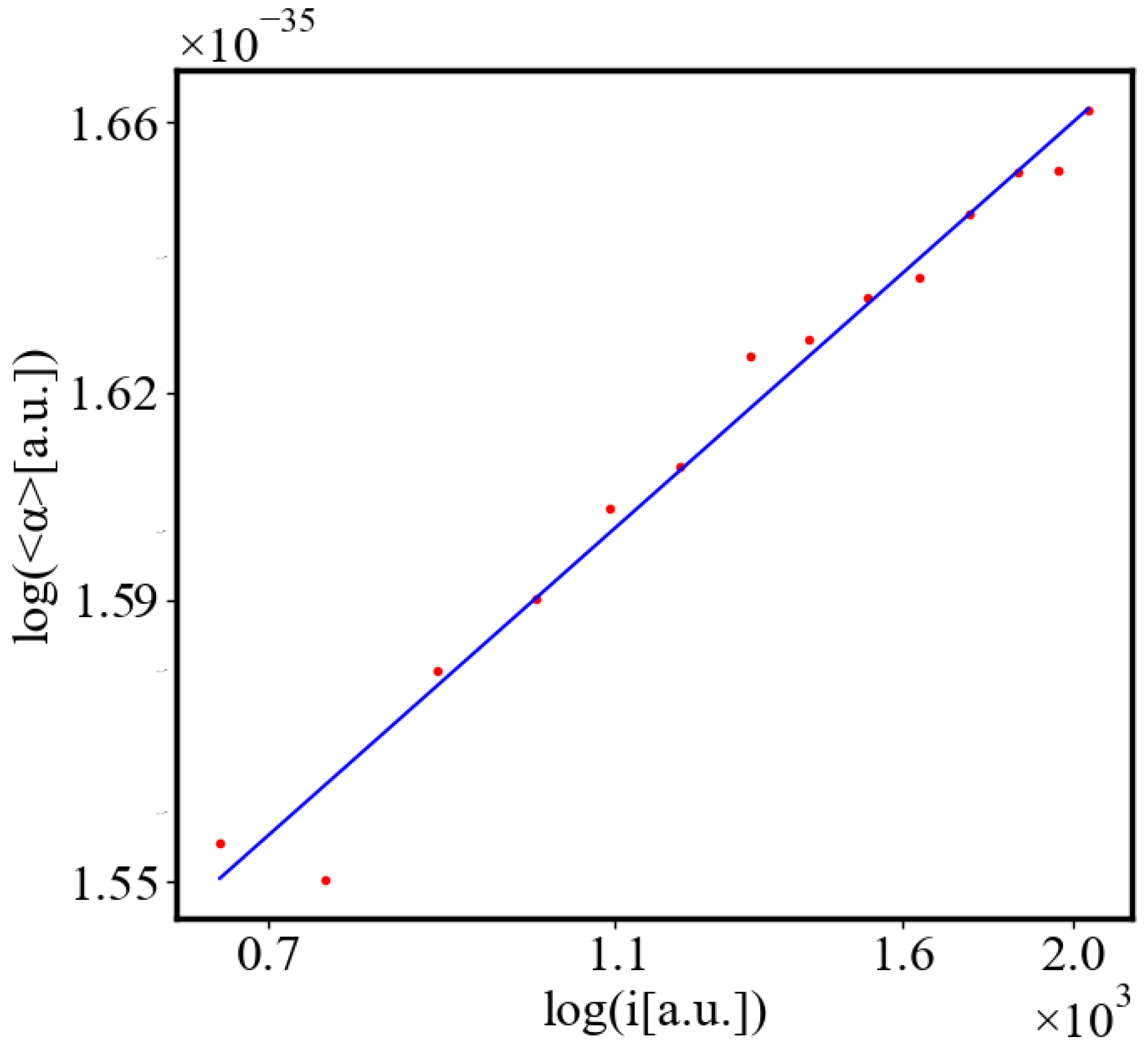

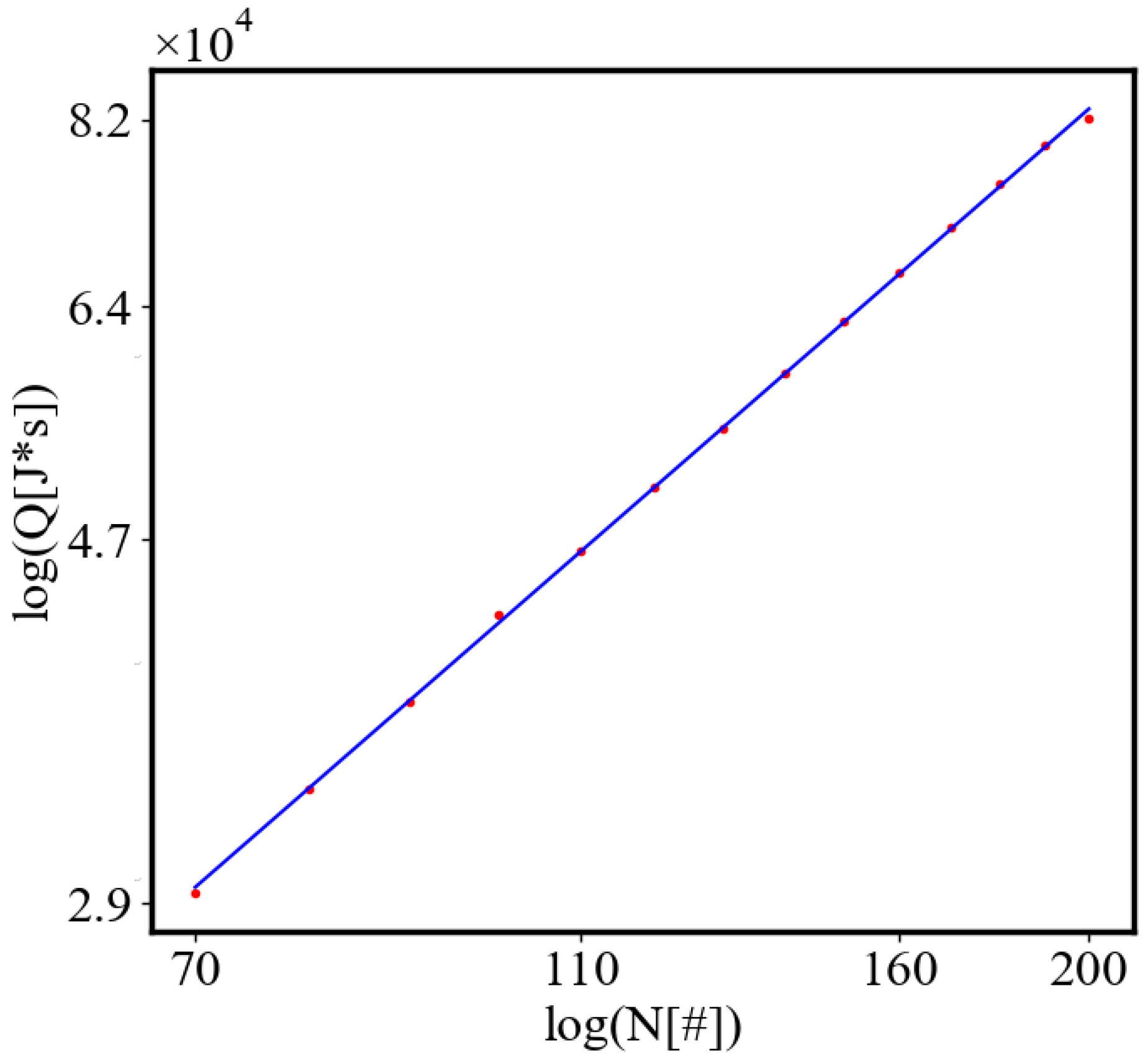

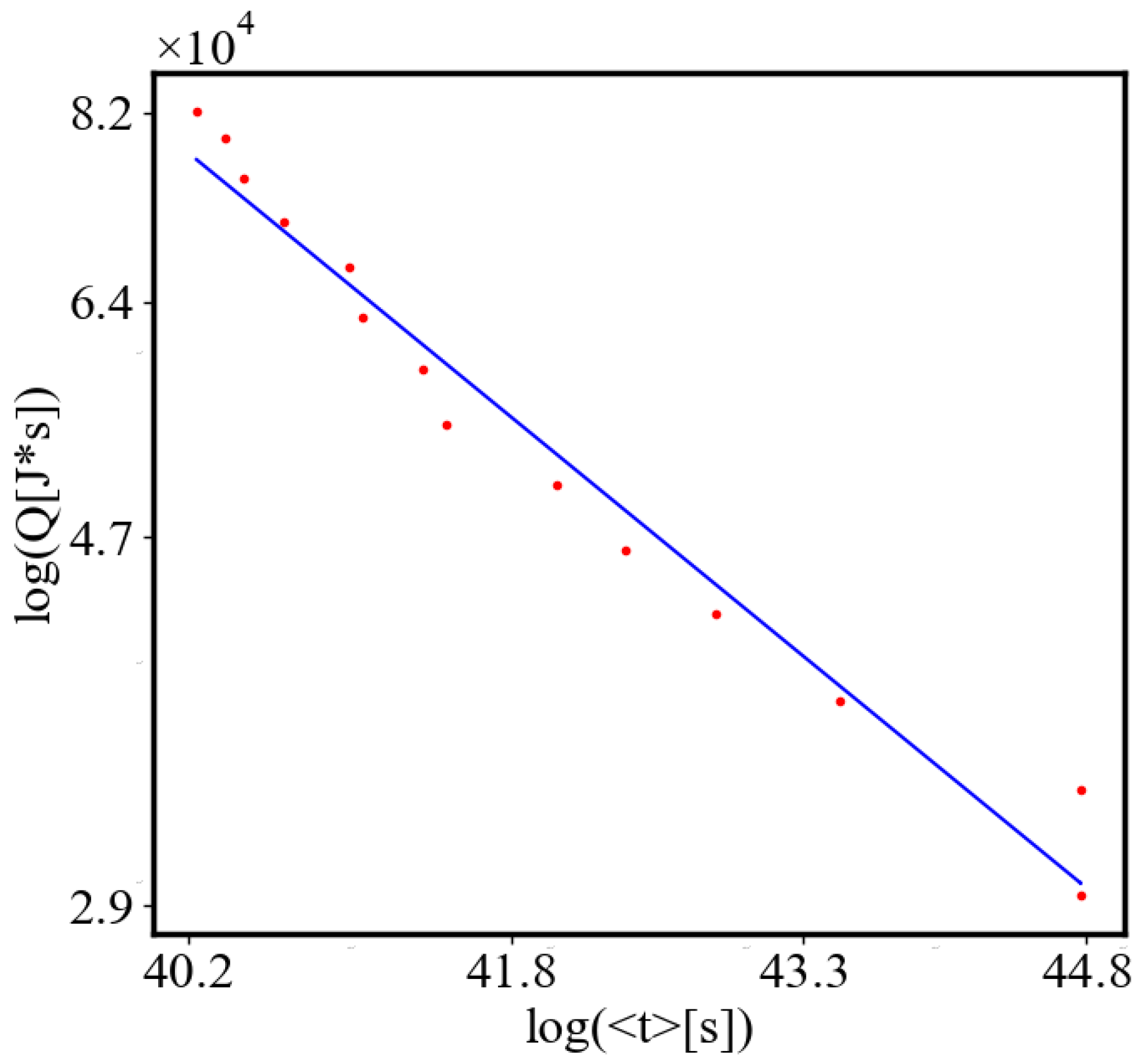

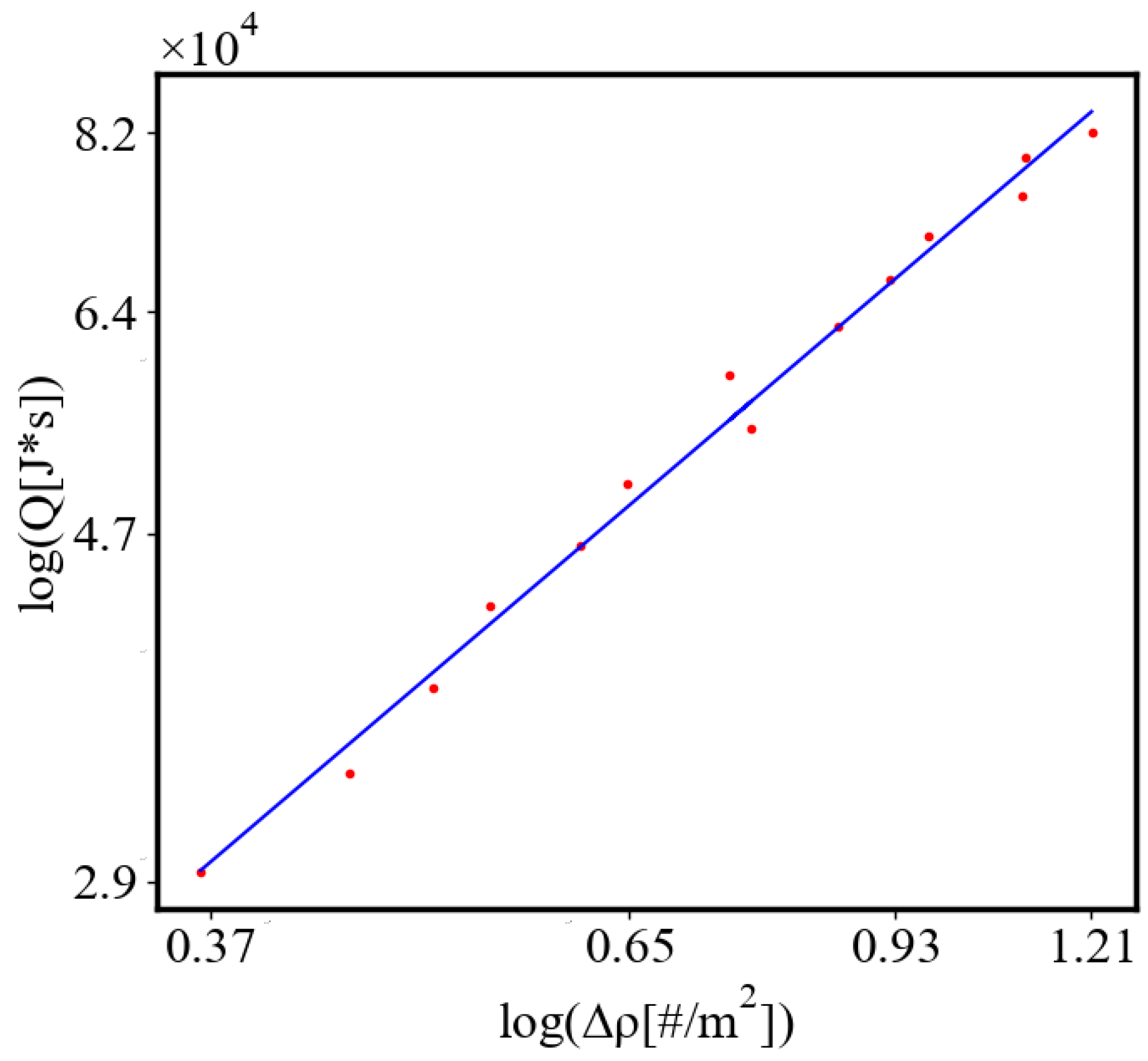

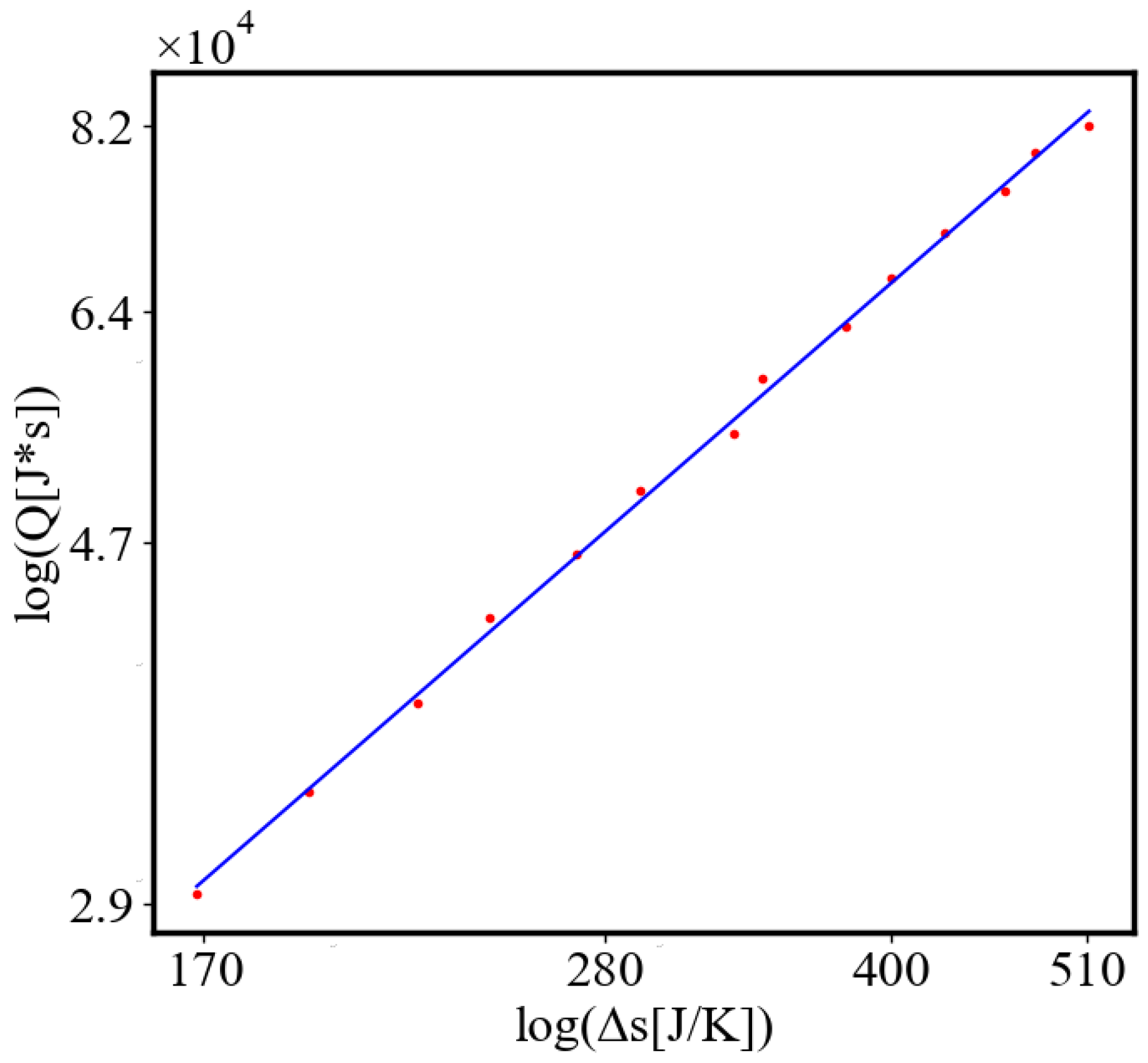

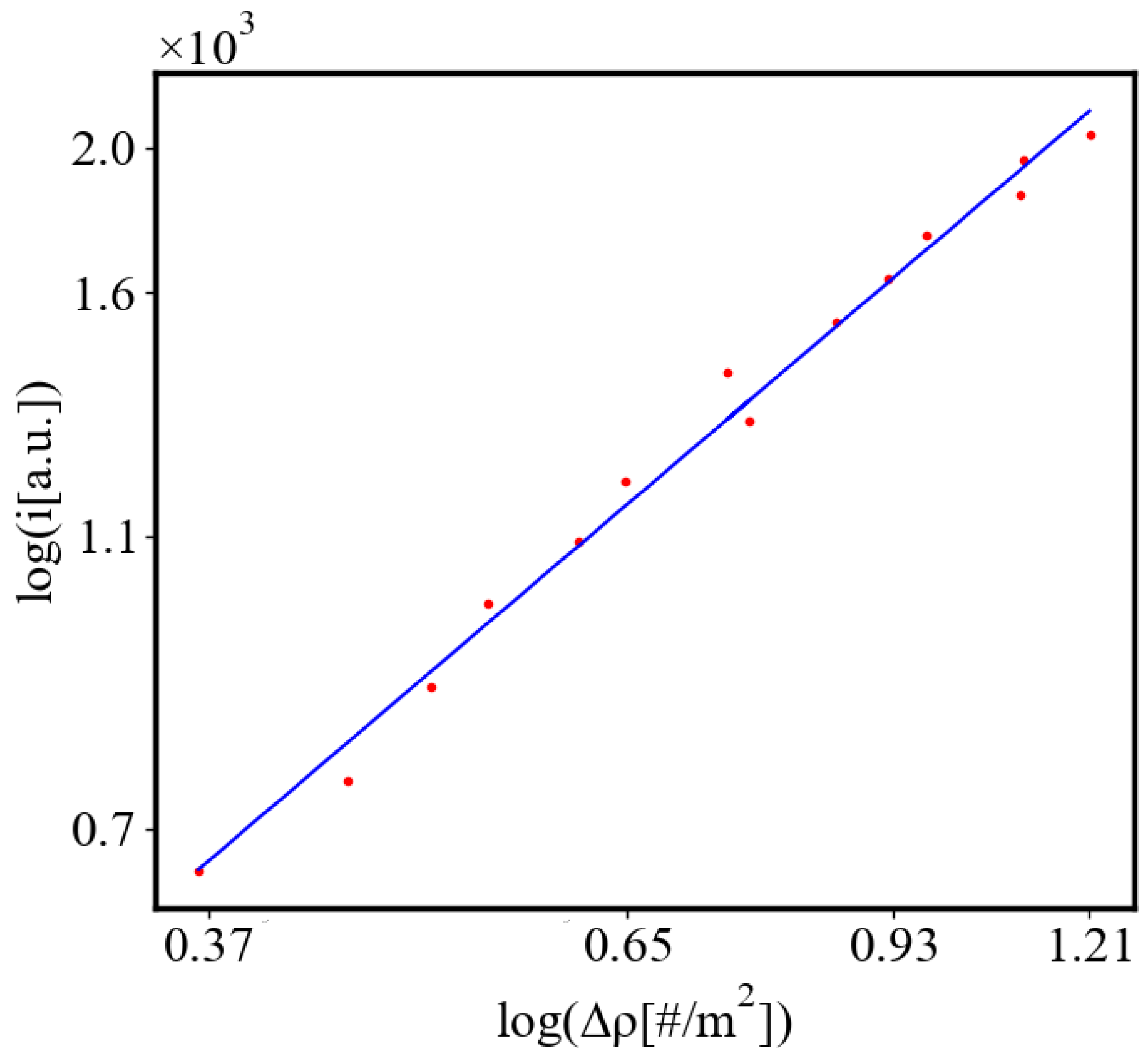

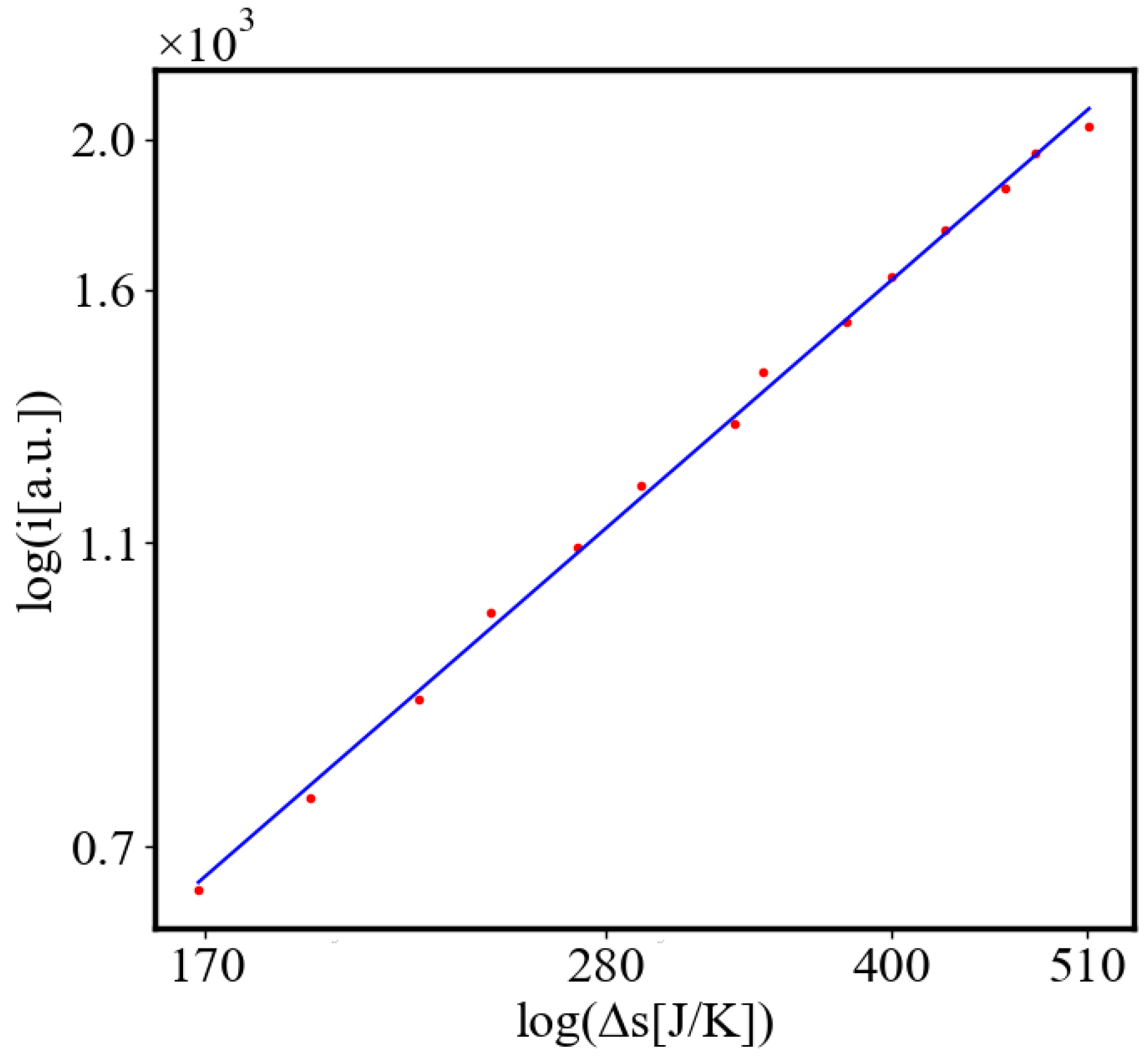

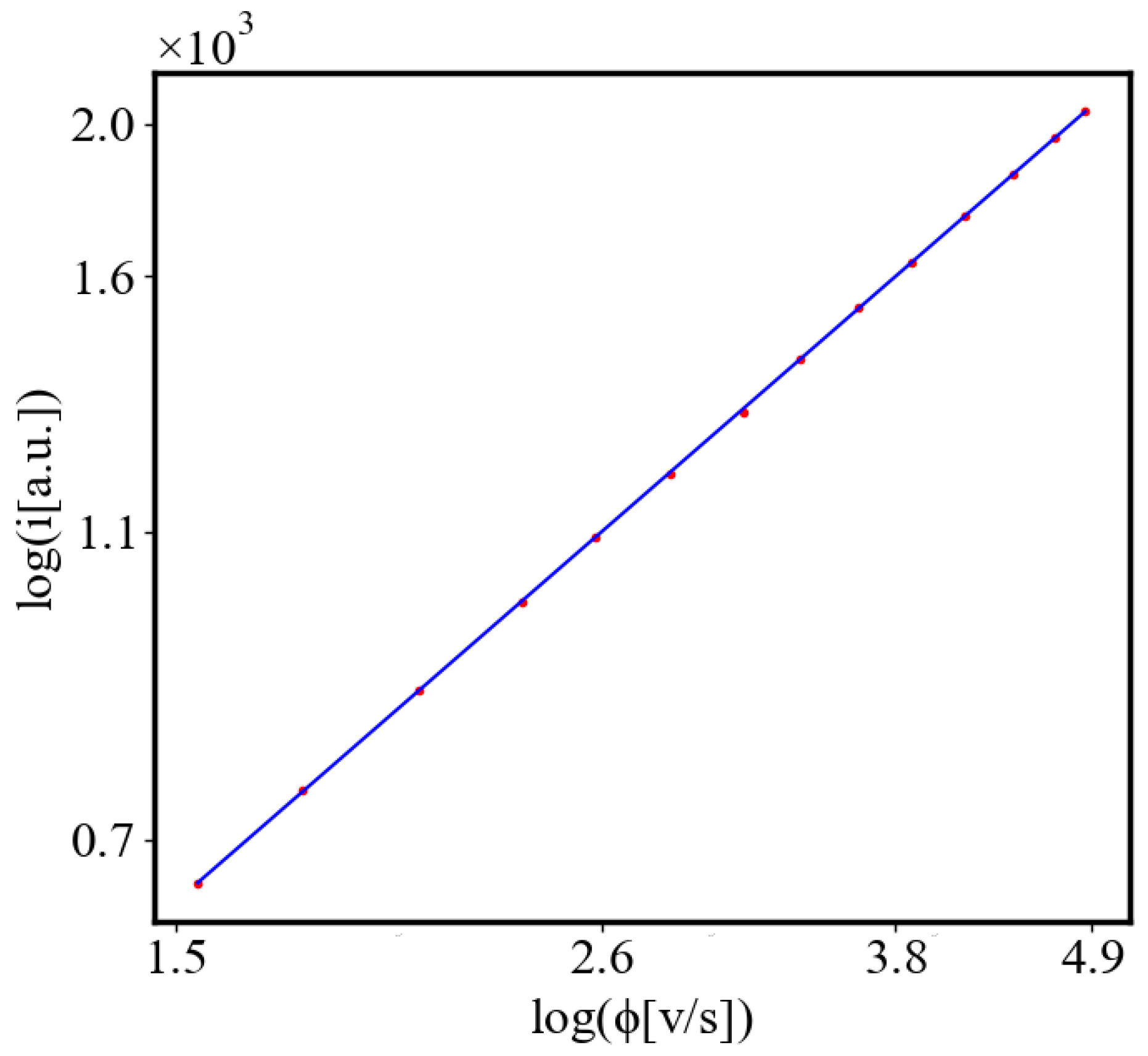

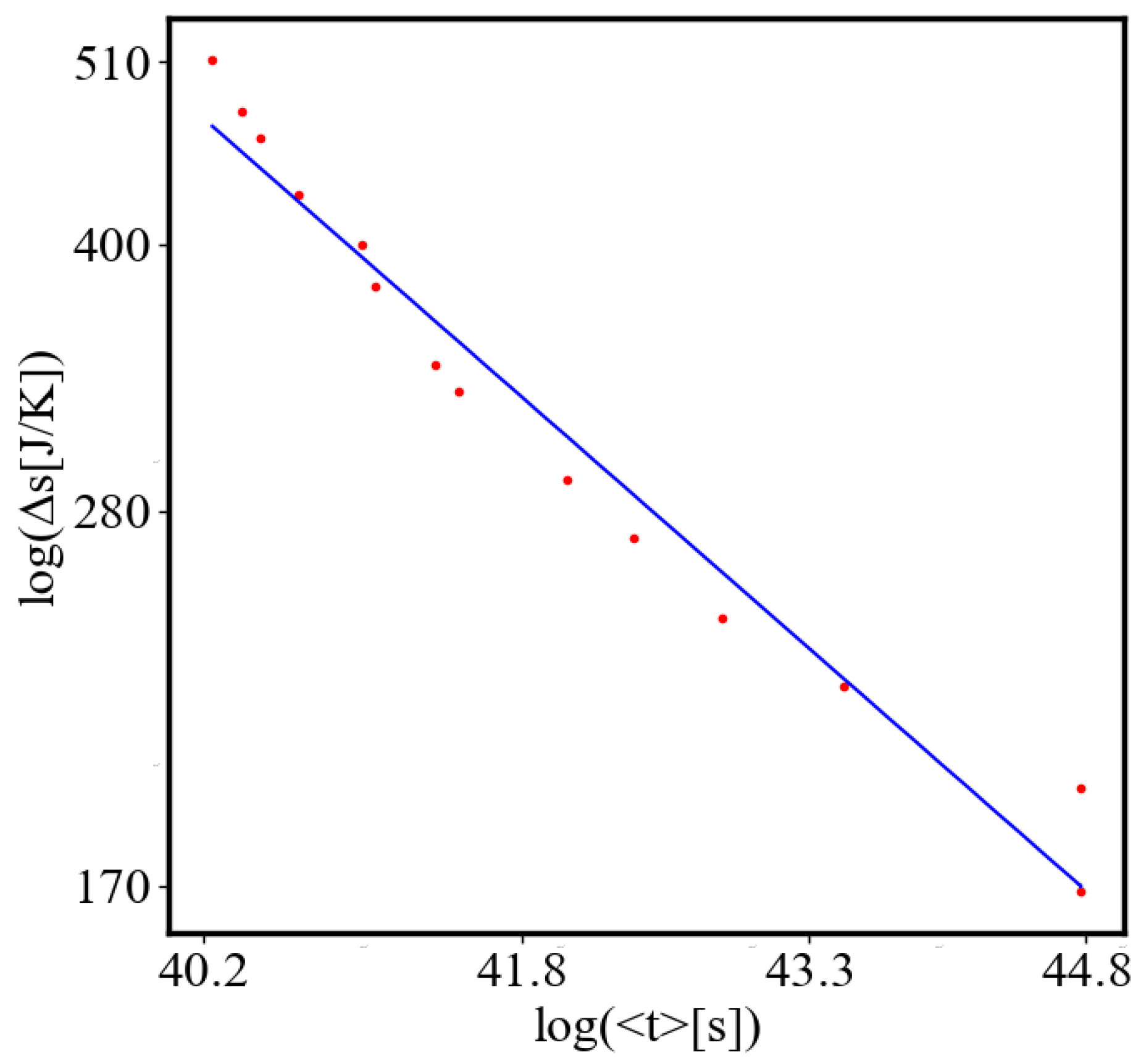

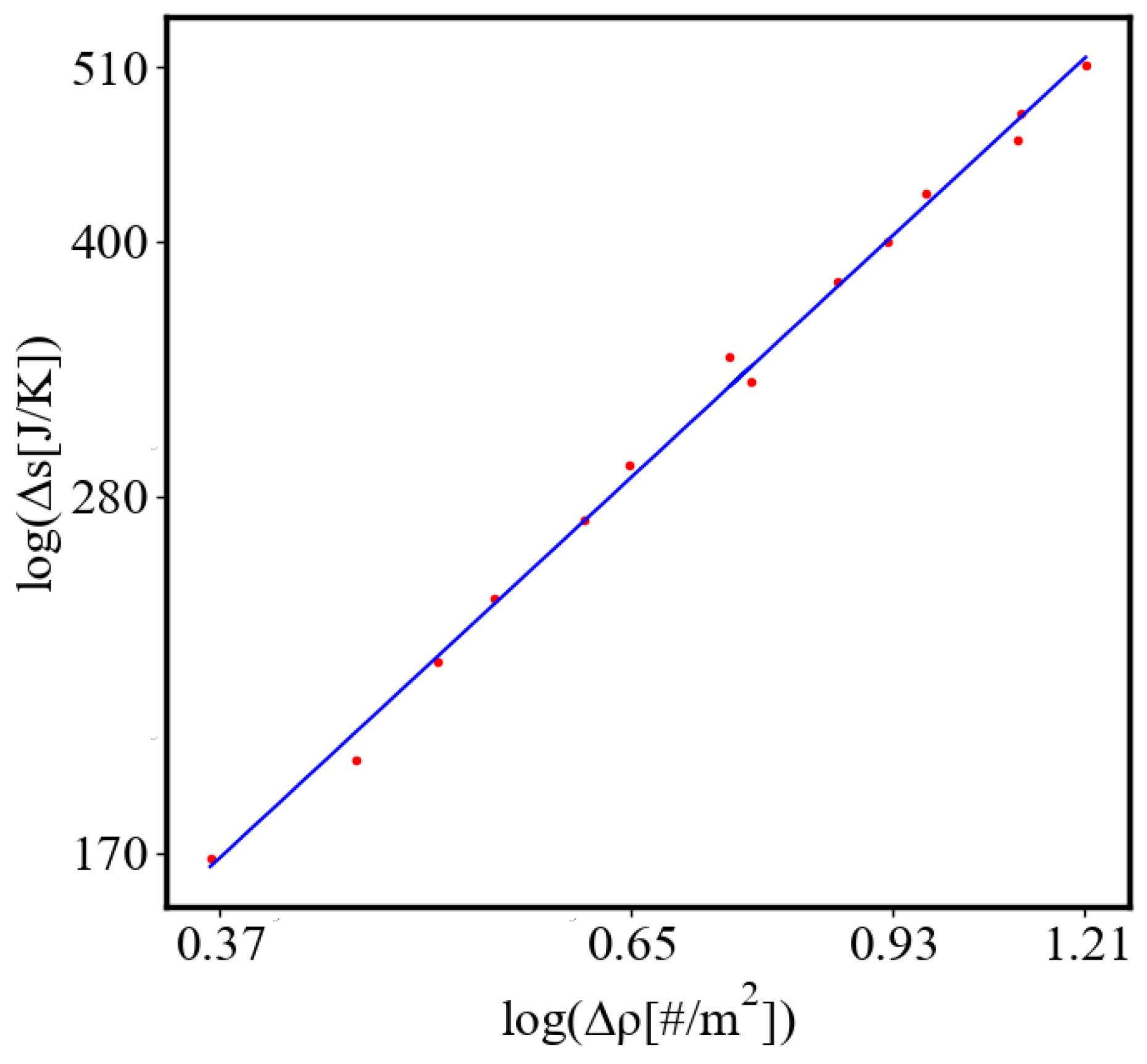

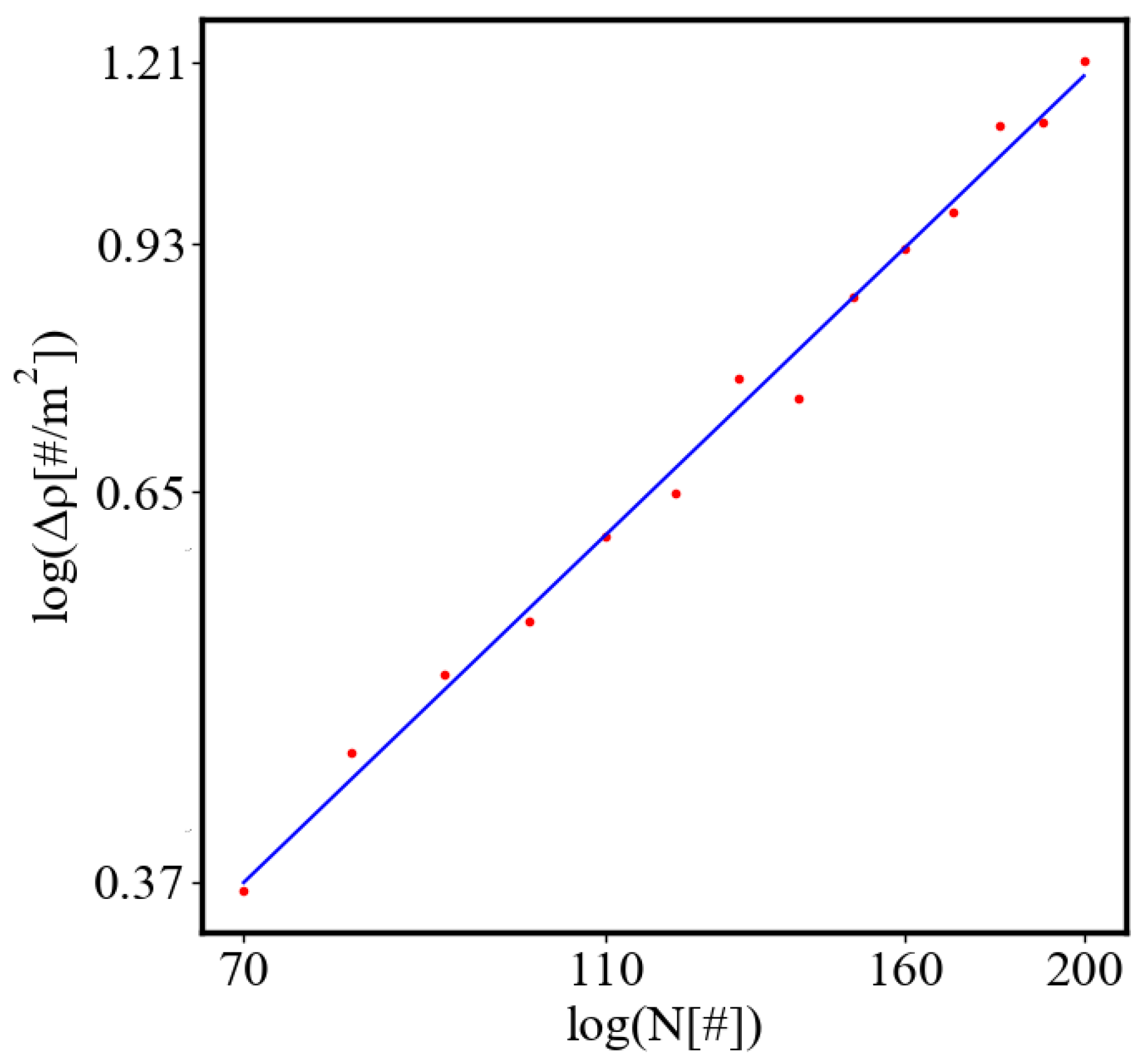

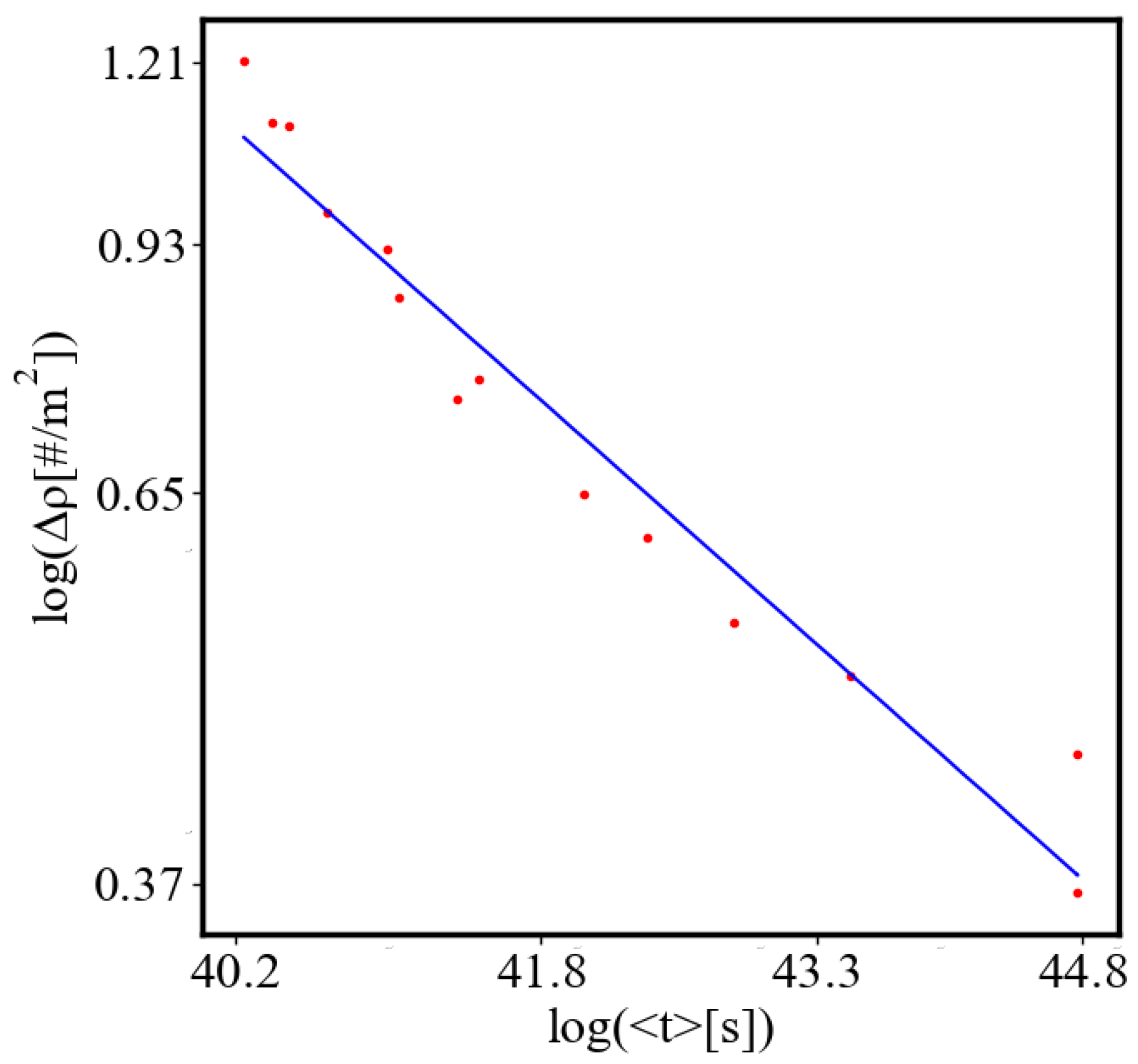

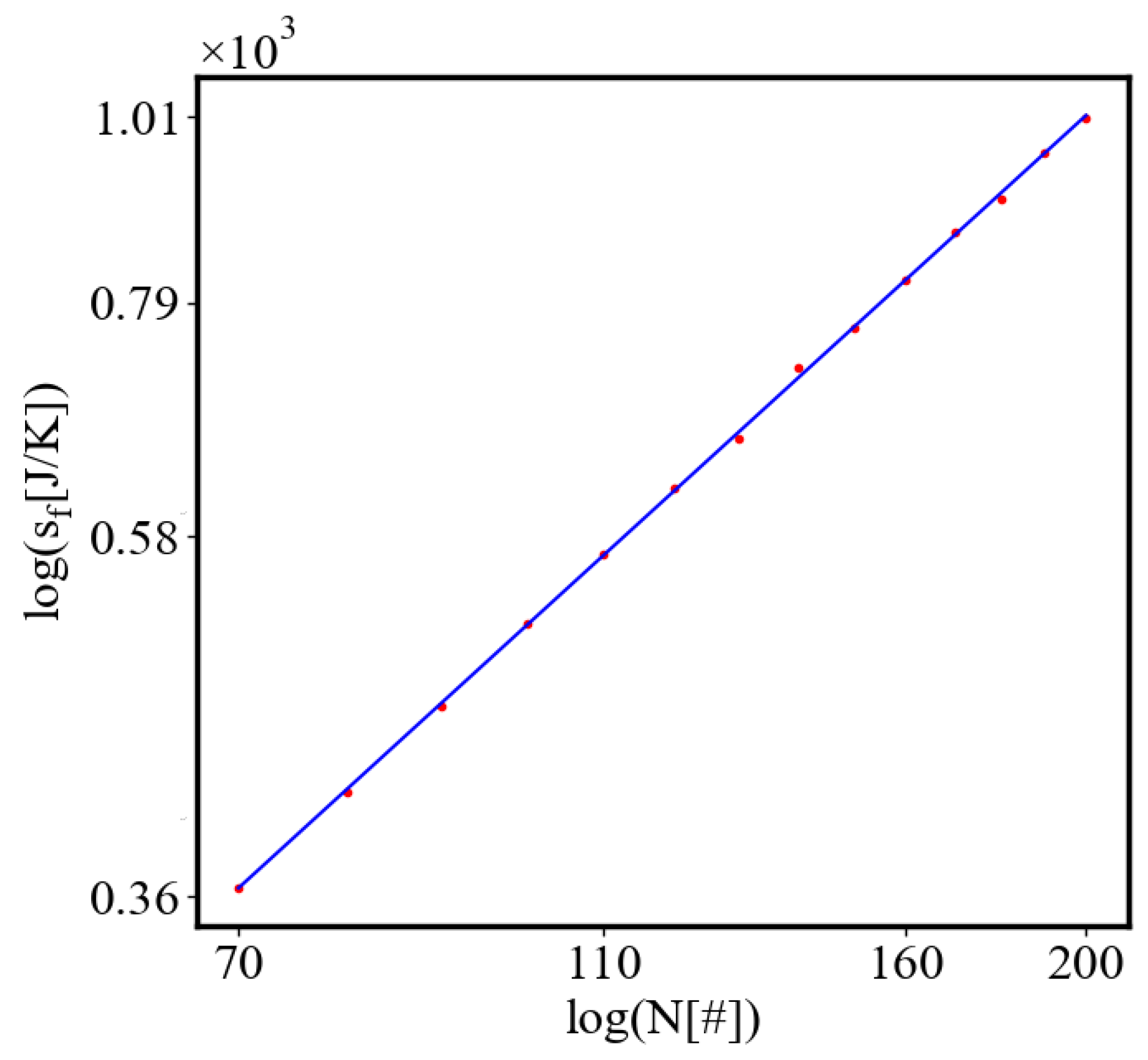

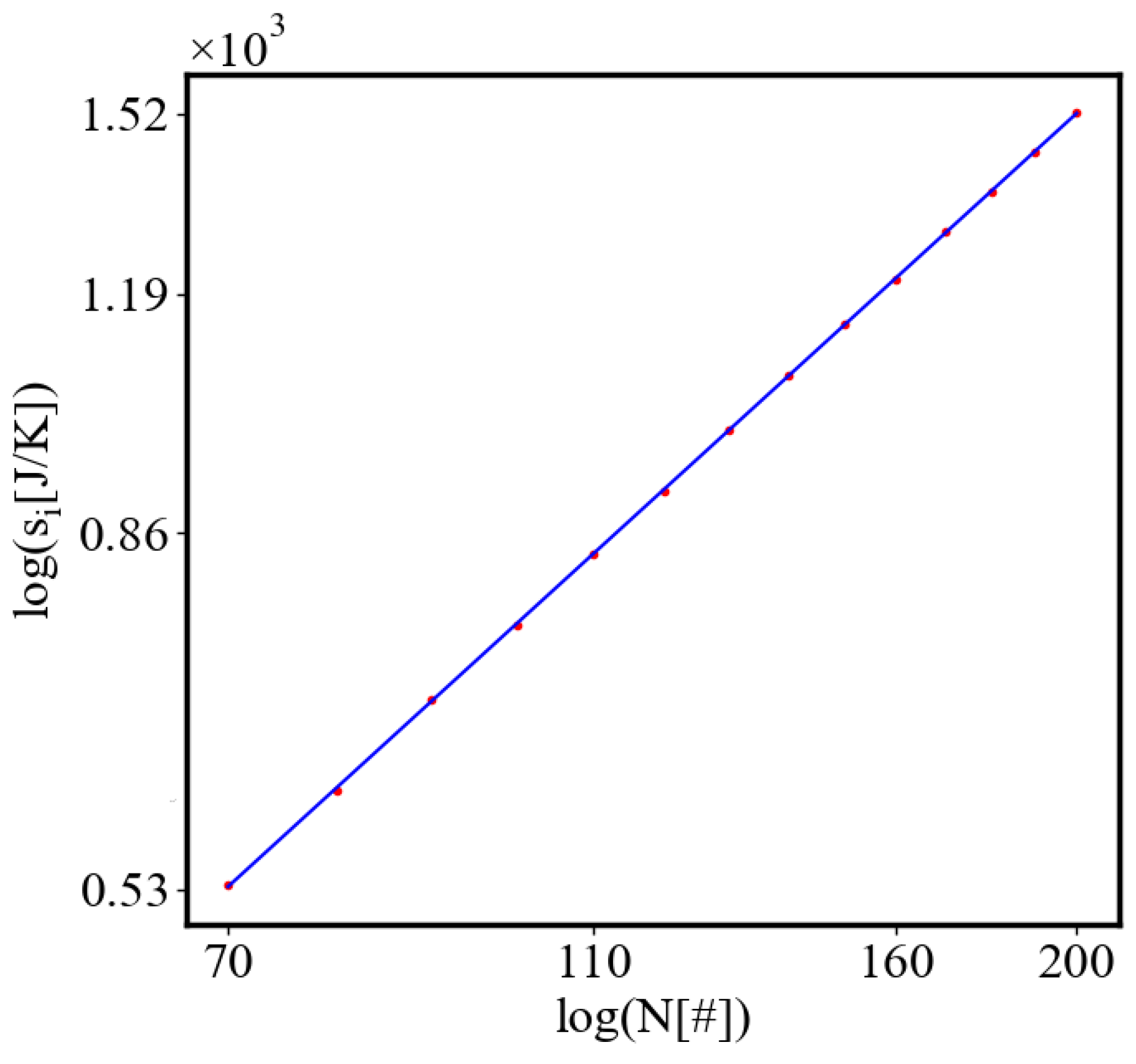

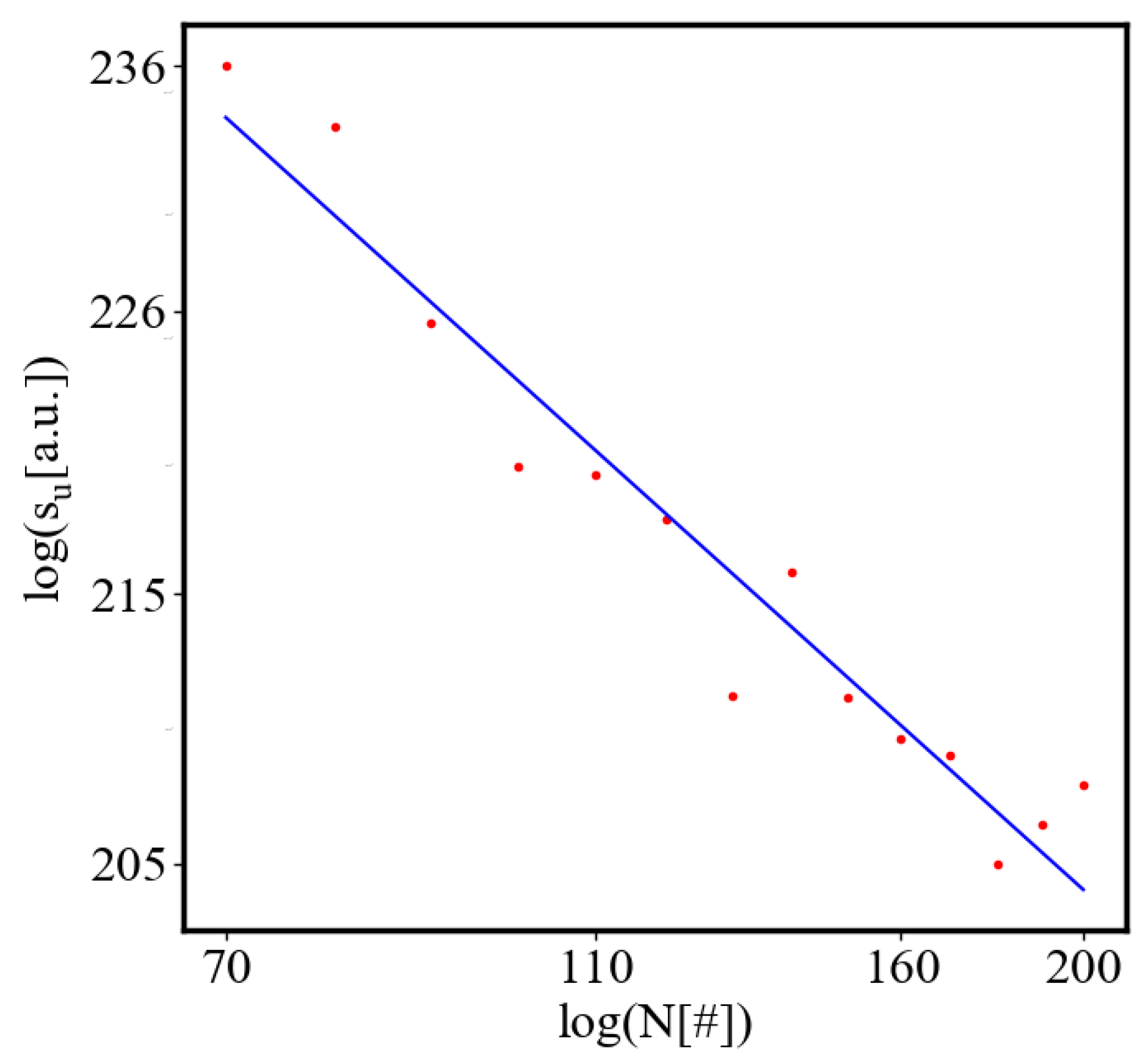

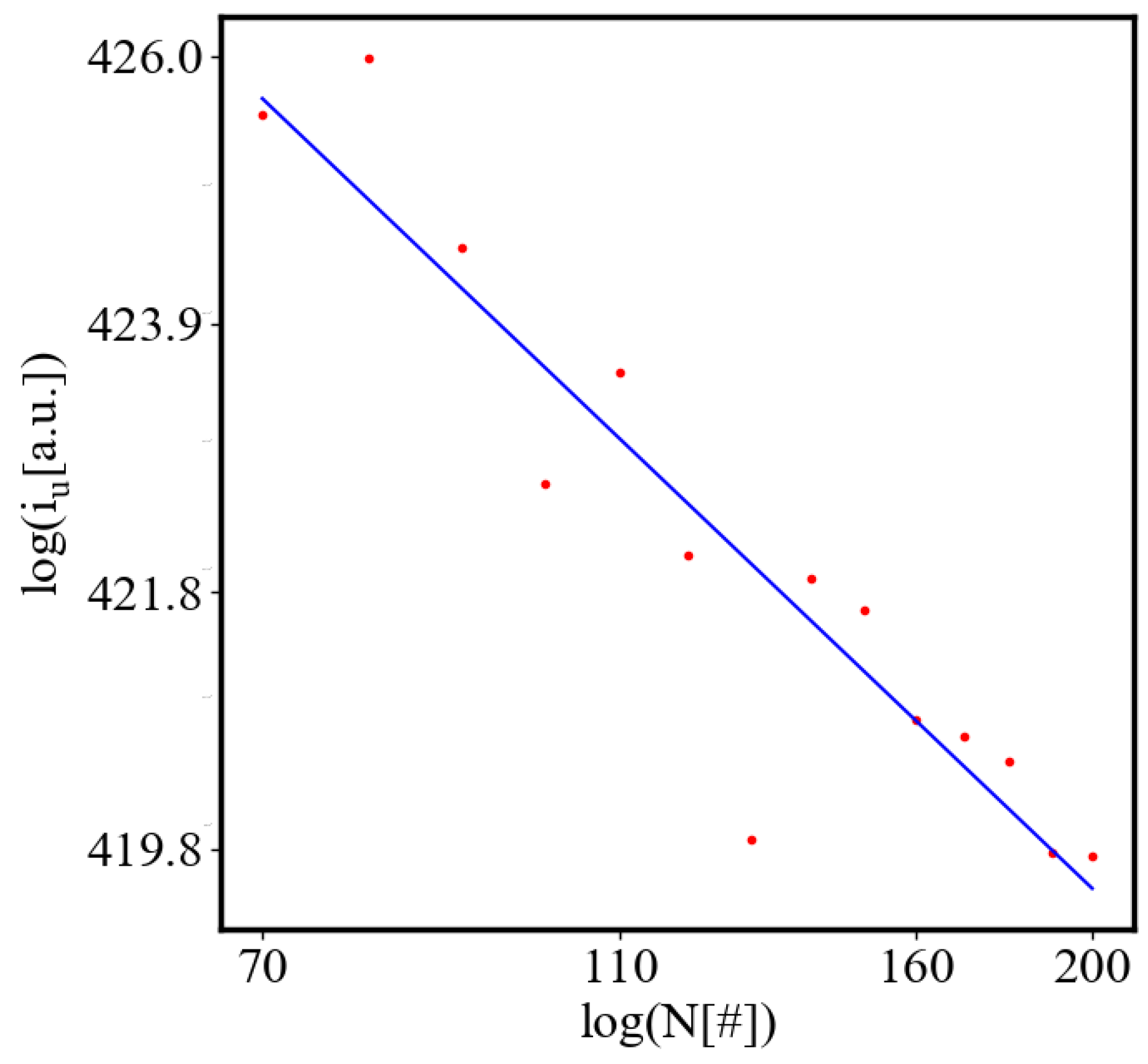

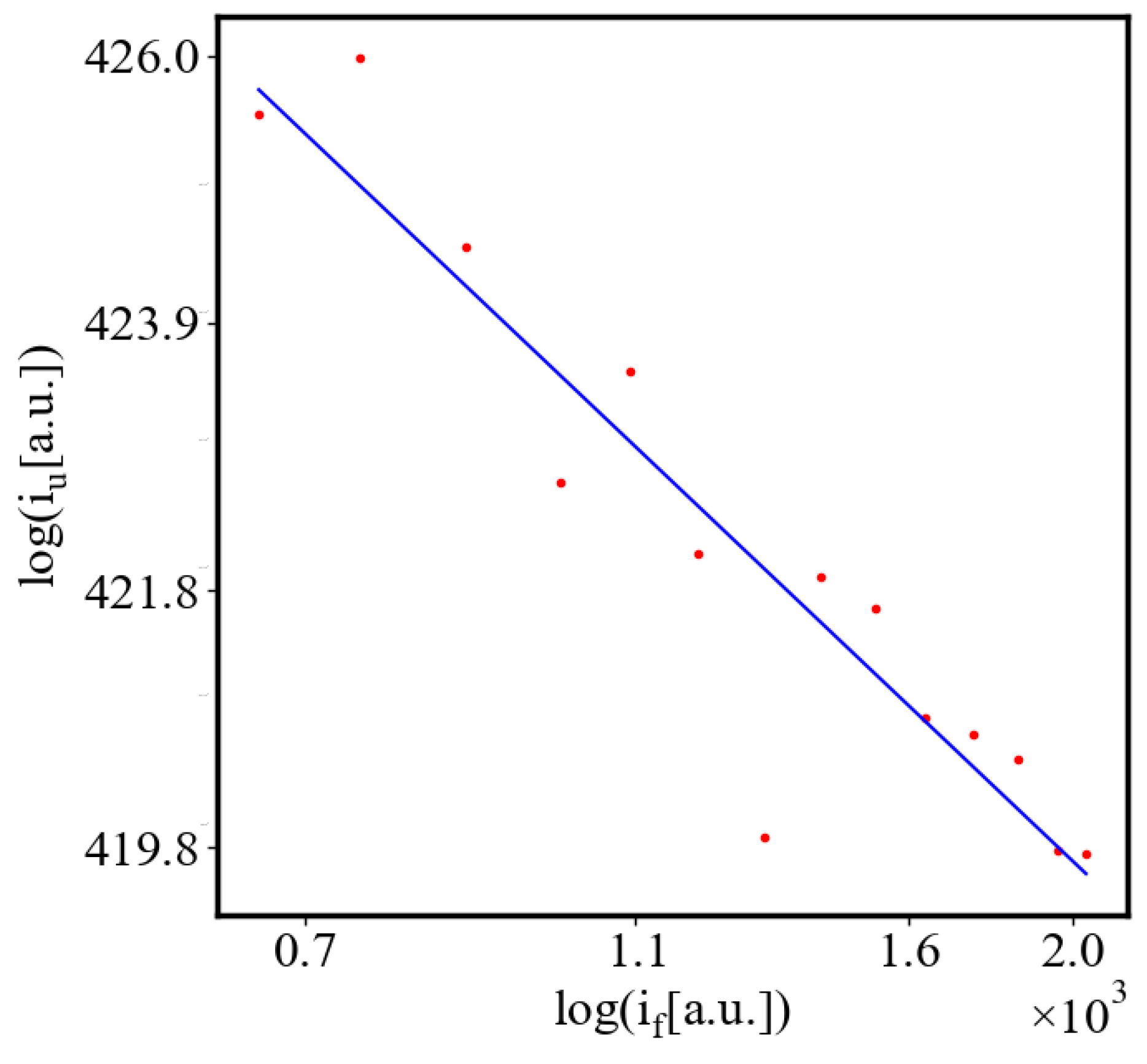

Figure 9. The two quantities are strictly related by a power-law relationship, as predicted by the model of positive feedback between the characteristics of the system.
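A power-law relationship $y = a\,x^{b}$ is linear in log-log coordinates, so its exponent can be estimated by least squares on $\log y$ versus $\log x$. The sketch below shows this procedure with `numpy.polyfit` on synthetic, noise-free data generated from an assumed power law; it does not reproduce the data or the exponents of Figure 9.

```python
import numpy as np

def fit_power_law(x, y):
    """Fit y = a * x**b by linear least squares on log(y) = log(a) + b * log(x)."""
    b, log_a = np.polyfit(np.log(x), np.log(y), 1)   # slope first, then intercept
    return np.exp(log_a), b

# Synthetic example: total action Q and average action efficiency alpha = 0.02 * Q**0.4.
Q = np.array([10.0, 20.0, 40.0, 80.0, 160.0])
alpha = 0.02 * Q**0.4

a, b = fit_power_law(Q, alpha)
print(f"a = {a:.4f}, b = {b:.4f}")
```

With real, noisy data, the residuals in log-log space indicate how closely the points follow a single power law.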

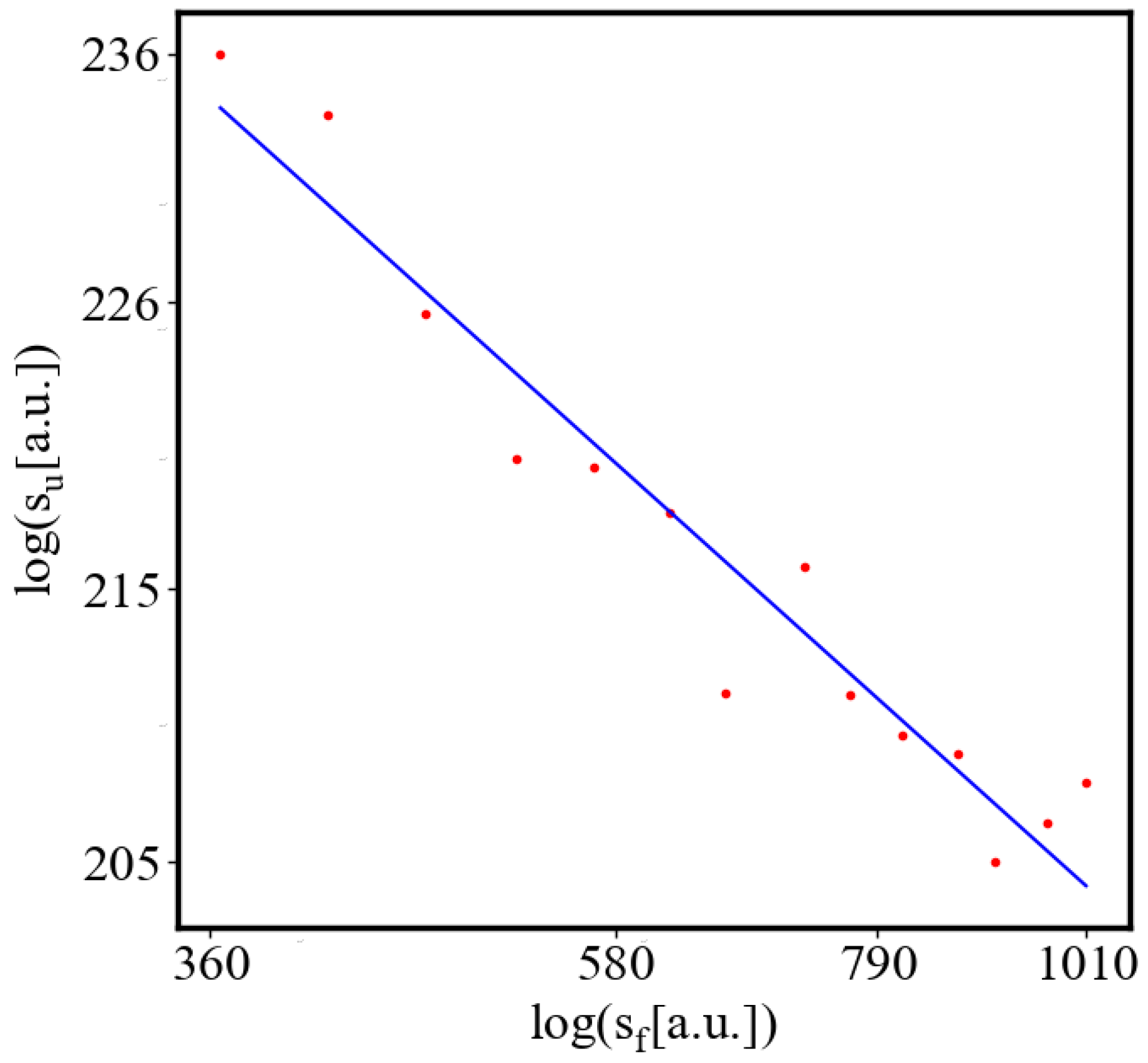

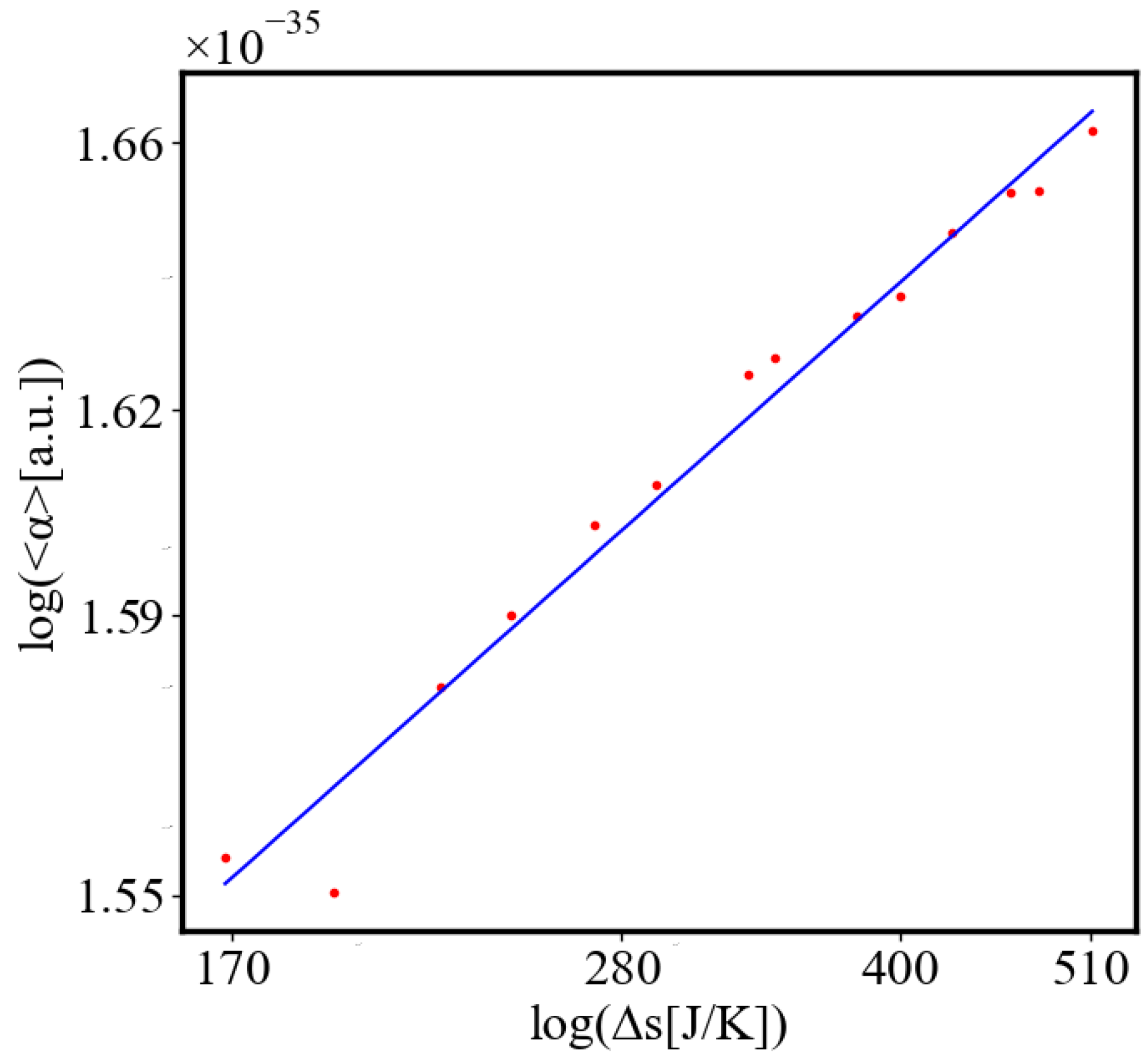

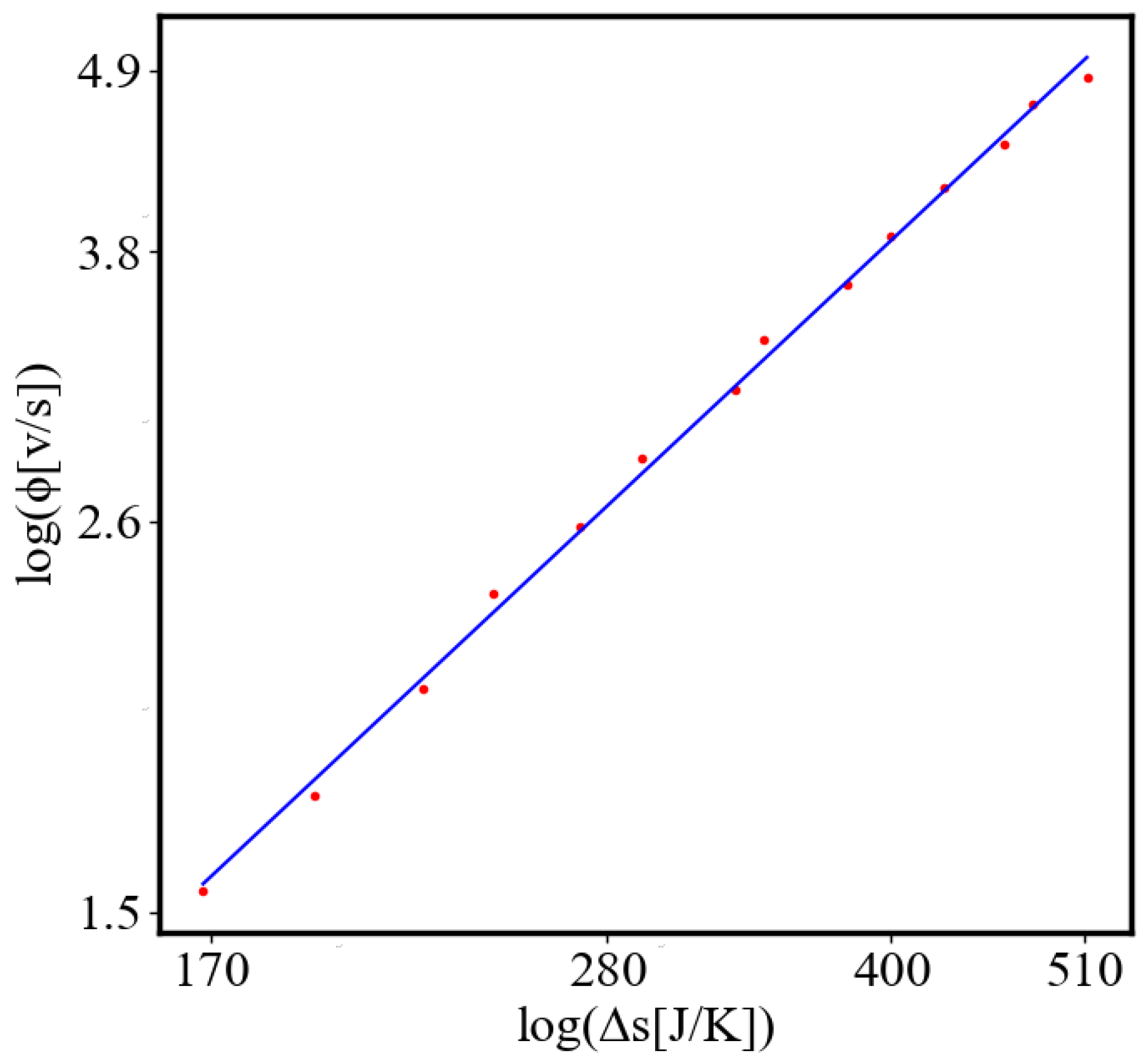

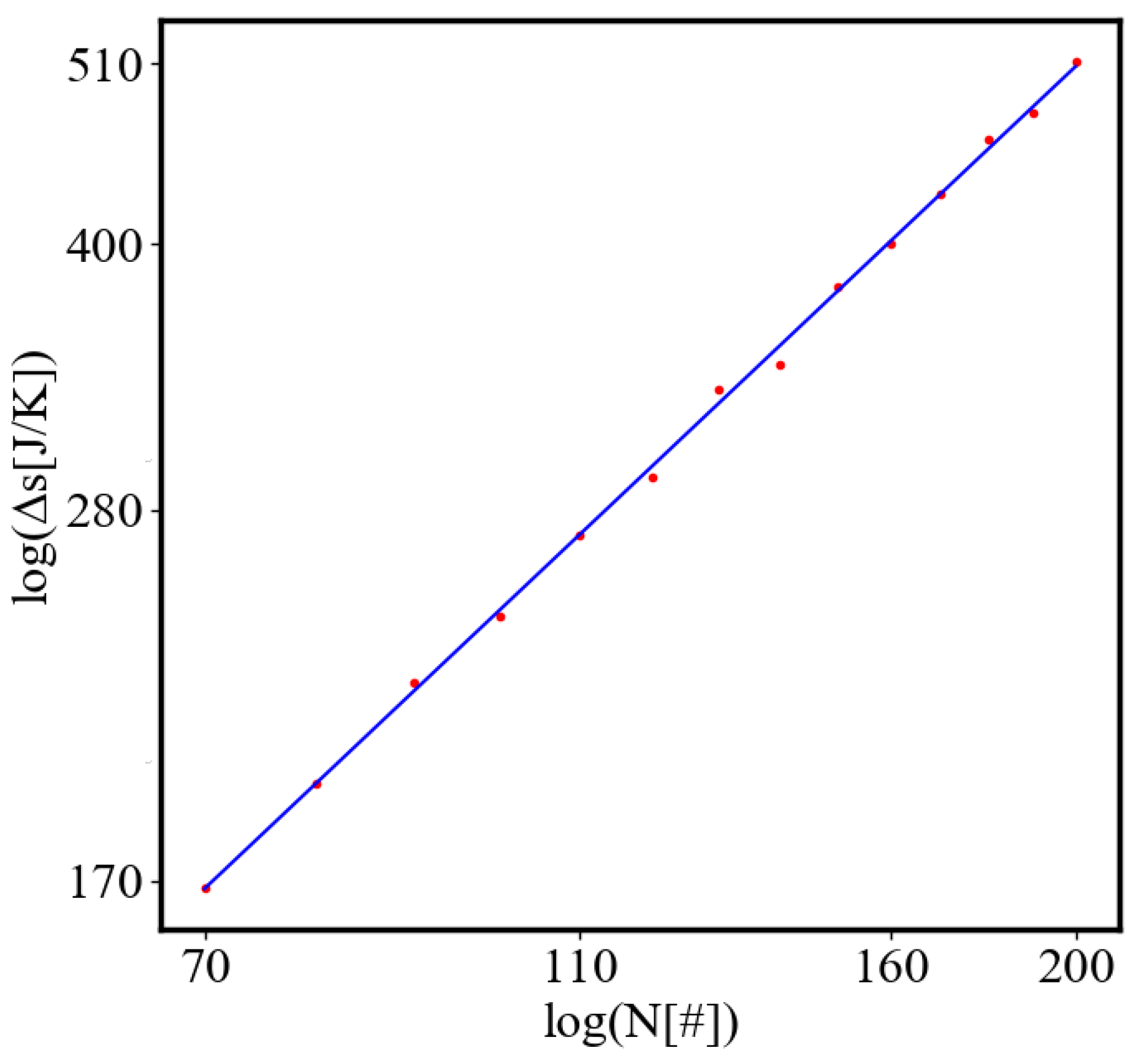

Analogously, the unit internal Boltzmann entropy for one path decreases while the total internal Boltzmann entropy increases for a complex system during self-organization and growth.

Figure 10. These two characteristics are also strictly related by a power-law relationship, as predicted by the model of positive feedback between the characteristics of the system.

For Gauss’s principle of least constraint [45], this translates as follows: as the unit constraint (obstacles) for one edge decreases, the total constraint in the network of the whole complex system increases during self-organization as the system grows and expands.

For Hertz’s principle of least curvature [27], this translates as follows: as the unit curvature for one edge decreases, the total curvature in the network of the whole complex system increases during self-organization as the system grows, expands, and adds more nodes.

Some examples of unit-total (local-global) dualism in other systems follow. In economies of scale, as the size of the system grows, the total production cost increases while the unit cost per item decreases. In the same example, the total profits increase, but the unit profit per item decreases. Similarly, as the cost per computation decreases, the cost of all computations grows, and as the cost per bit of data transmission decreases, the cost of all transmissions increases as the system grows. In biology, as the unit time for one reaction in a metabolic autocatalytic cycle decreases during evolution, due to increased enzymatic activity, the total number of reactions in the cycle increases. In ecology, as a species becomes more efficient at finding food, its time and energy expenditure for foraging a unit of food decreases, while the numbers of that species and the total amount of food they collect increase. Further unit-total (local-global) dualisms can be identified in systems of very different natures to test the universality of this principle.

3. Simulations Model

In our simulation, the ants interact through pheromones. We can formulate an effective Lagrangian to describe their dynamics. The Lagrangian L depends on the kinetic energy T and the potential energy V. We build it step by step by adding the necessary terms. Given that ants are influenced by pheromone concentrations, the potential energy component should reflect this interaction.

Components of the Lagrangian:

1. Kinetic Energy (T): In our simulation, the ants have a constant mass m, and their kinetic energy is given by:

$$T = \frac{1}{2} m v^2$$

where v is the velocity of the ant.

2. Effective Potential Energy (V): The potential energy due to the pheromone concentration $C(x, y, t)$ at position $(x, y)$ and time t can be modeled as:

$$V = -k\,C(x, y, t)$$

where k is a constant that scales the influence of the pheromone concentration.

Effective Lagrangian (L): The Lagrangian L is given by the difference between the kinetic and potential energies:

$$L = T - V$$

For an ant moving in a pheromone field, the effective Lagrangian becomes:

$$L = \frac{1}{2} m \left(\dot{x}^2 + \dot{y}^2\right) + k\,C(x, y, t)$$

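Formulating the Equations of Motion:

Using the Lagrangian, we can derive the equations of motion via the Euler-Lagrange equation:

$$\frac{d}{dt}\left(\frac{\partial L}{\partial \dot{q}_i}\right) - \frac{\partial L}{\partial q_i} = 0$$

where $q_i$ represents the spatial coordinates (e.g., $q_1 = x$, $q_2 = y$) and $\dot{q}_i$ represents the corresponding velocities.

Example Calculation for a Single Coordinate:

1. Kinetic Energy Term:

$$\frac{\partial L}{\partial \dot{x}} = m\dot{x}, \qquad \frac{d}{dt}\left(\frac{\partial L}{\partial \dot{x}}\right) = m\ddot{x}$$

2. Potential Energy Term:

$$\frac{\partial L}{\partial x} = k\,\frac{\partial C}{\partial x}$$

The equation of motion for the x-coordinate is then:

$$m\ddot{x} = k\,\frac{\partial C}{\partial x}$$

Full Equations of Motion:

For both the x and y coordinates, the equations of motion are:

$$m\ddot{x} = k\,\frac{\partial C}{\partial x}, \qquad m\ddot{y} = k\,\frac{\partial C}{\partial y}$$

The ants therefore move up the gradient of the pheromone concentration.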
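To see these equations of motion at work, the sketch below integrates $m\ddot{x} = k\,\partial C/\partial x$, $m\ddot{y} = k\,\partial C/\partial y$ for a single ant in a static, Gaussian pheromone field with the velocity Verlet scheme. The Gaussian field, the parameter values, and the function names are assumptions for illustration. This toy has no friction, wiggle, or evaporation, so the ant is pulled toward the pheromone peak and, having no dissipation, overshoots and oscillates about it instead of stopping. The conserved energy $E = T - kC$ serves as a check on the integration.

```python
import numpy as np

def pheromone_and_gradient(r, center, width):
    """Gaussian concentration C(r) = exp(-|r - center|^2 / (2 width^2)) and its gradient."""
    d = r - center
    C = np.exp(-np.dot(d, d) / (2.0 * width**2))
    return C, -C * d / width**2

def simulate_ant(r0, v0, m=1.0, k=1.0, center=(0.0, 0.0), width=1.0,
                 dt=0.01, steps=200):
    """Velocity Verlet integration of m r'' = k grad C(r); returns positions and energies."""
    center = np.asarray(center, dtype=float)
    r = np.asarray(r0, dtype=float)
    v = np.asarray(v0, dtype=float)
    C, grad = pheromone_and_gradient(r, center, width)
    a = k * grad / m
    positions = [r.copy()]
    energies = [0.5 * m * np.dot(v, v) - k * C]    # E = T + V with V = -k C
    for _ in range(steps):
        r = r + v * dt + 0.5 * a * dt**2
        C, grad = pheromone_and_gradient(r, center, width)
        a_new = k * grad / m
        v = v + 0.5 * (a + a_new) * dt
        a = a_new
        positions.append(r.copy())
        energies.append(0.5 * m * np.dot(v, v) - k * C)
    return np.array(positions), np.array(energies)

positions, energies = simulate_ant(r0=(2.0, 0.0), v0=(0.0, 0.0))
print("initial distance to peak:", np.linalg.norm(positions[0]))
print("final distance to peak:  ", np.linalg.norm(positions[-1]))
print("max energy drift:        ", np.max(np.abs(energies - energies[0])))
```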

Testing for Stationary Points of the Action:

Minimum: If the second variation of the action is positive, the path corresponds to a minimum of the action.

Saddle Point: If the second variation of the action can be both positive and negative depending on the direction of the variation, the path corresponds to a saddle point.

Maximum: If the second variation of the action is negative, the path corresponds to a maximum of the action.

Determining the Nature of the Stationary Point:

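To determine whether the action is a minimum, maximum, or saddle point, we examine the second variation of the action, $\delta^2 I$. This involves considering the second derivative (or functional derivative in the case of continuous systems) of the action with respect to variations in the path.

Given the Lagrangian for ants interacting through pheromones:

$$L = \frac{1}{2} m \left(\dot{x}^2 + \dot{y}^2\right) + k\,C(x, y, t)$$

First Variation:

The first variation, $\delta I = 0$, leads to the Euler-Lagrange equations, which give the equations of motion:

$$m\ddot{x} = k\,\frac{\partial C}{\partial x}, \qquad m\ddot{y} = k\,\frac{\partial C}{\partial y}$$

Second Variation:

The second variation, $\delta^2 I$, determines the nature of the stationary point. In general, for a Lagrangian $L(q, \dot{q}, t)$:

$$\delta^2 I = \int_{t_1}^{t_2} \left[\frac{\partial^2 L}{\partial \dot{q}^2}\,(\delta\dot{q})^2 + 2\,\frac{\partial^2 L}{\partial q\,\partial \dot{q}}\,\delta q\,\delta\dot{q} + \frac{\partial^2 L}{\partial q^2}\,(\delta q)^2\right] dt$$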

Analyzing the Effective Lagrangian:

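1. Kinetic Energy Term, $\frac{1}{2} m v^2$: The second variation of the kinetic energy is positive, since $\partial^2 L/\partial \dot{q}^2 = m > 0$ gives terms like $m\,(\delta\dot{q})^2$.

2. Potential Energy Term, $k\,C(x, y, t)$: The second variation of the effective potential energy depends on the nature of $C(x, y, t)$. If C is a smooth, well-behaved function, the second variation can be analyzed by examining its second spatial derivatives, $\partial^2 C/\partial x^2$, $\partial^2 C/\partial y^2$, and $\partial^2 C/\partial x\,\partial y$.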

Nature of the Stationary Point:

Kinetic Energy Contribution: Positive definite, contributing to a positive second variation.

Effective Potential Energy Contribution: Depends on the curvature of $C(x, y, t)$. In regions where the second derivatives of C are positive, the effective potential energy contributes positively, and vice versa.

Therefore, given the typical form of the Lagrangian and assuming $C(x, y, t)$ is well-behaved (smooth and not overly irregular), the action I is most likely a saddle point. This is because:

The kinetic energy term tends to make the action a minimum.

The potential energy term, depending on the pheromone concentration field, can contribute both positively and negatively.

Thus, variations in the path can lead to directions in which the action increases (where the positive kinetic energy contribution dominates) and directions in which it decreases (where the potential energy contribution is negative), which is characteristic of a saddle point.
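The saddle-point argument can be made concrete in a simplified setting. Assume a static trail along the y-axis with a transverse Gaussian profile, $C(x) = e^{-x^2/(2s^2)}$. The path that runs along the ridge, $x(t) = 0$, satisfies the x-equation of motion because $\partial C/\partial x = 0$ there. For transverse variations $\delta x = \xi(t)$ that vanish at $t = 0$ and $t = \tau$, the second variation of the action reduces to $\delta^2 I = \int_0^{\tau}\left[m\,\dot\xi^2 + k\,C''(0)\,\xi^2\right]dt$ with $C''(0) = -1/s^2 < 0$; for the modes $\xi_n = \sin(n\pi t/\tau)$ this equals $\frac{\tau}{2}\left[m\left(\frac{n\pi}{\tau}\right)^2 - \frac{k}{s^2}\right]$. The sketch evaluates the integral numerically with hypothetical values $m = k = s = 1$. In this toy model, the ridge path is a minimum over short time intervals but a saddle point over long ones, where slow transverse variations lower the action and fast ones raise it.

```python
import numpy as np

def second_variation(n, tau, m=1.0, k=1.0, s=1.0, num=20001):
    """delta^2 I for the transverse mode xi_n(t) = sin(n pi t / tau) about the ridge x = 0.

    Integrand: m * xi_dot**2 + k * C''(0) * xi**2, with C''(0) = -1 / s**2
    for the Gaussian trail profile C(x) = exp(-x**2 / (2 s**2)).
    """
    t = np.linspace(0.0, tau, num)
    omega = n * np.pi / tau
    xi = np.sin(omega * t)
    xi_dot = omega * np.cos(omega * t)
    c_xx = -1.0 / s**2
    integrand = m * xi_dot**2 + k * c_xx * xi**2
    # Trapezoidal rule, written out to avoid depending on a specific NumPy version.
    return float(np.sum(0.5 * (integrand[1:] + integrand[:-1]) * np.diff(t)))

def second_variation_exact(n, tau, m=1.0, k=1.0, s=1.0):
    """Closed form of the same integral."""
    return 0.5 * tau * (m * (n * np.pi / tau)**2 - k / s**2)

for tau in (2.0, 10.0):
    signs = ["+" if second_variation(n, tau) > 0 else "-" for n in range(1, 6)]
    print(f"tau = {tau:4.1f}: signs of delta^2 I for modes 1..5 -> {' '.join(signs)}")
```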

Incorporating factors such as the wiggle angle of ants and the evaporation of pheromones introduces additional dynamics into the system, which can affect whether the action remains stationary and whether it is a saddle point, a minimum, or a maximum. These changes influence the nature of the action as follows:

3.0.1. Effects of Wiggle Angle and Pheromone Evaporation on the Action

1. Wiggle Angle: Impact: The wiggle angle introduces stochastic variability into the ants’ paths. This randomness leads to fluctuations in the paths that ants take, affecting the stability and stationarity of the action. Mathematical Consideration: The wiggle angle’s variance adds a stochastic component, $L_{\text{wiggle}}$, to the Lagrangian:

$$L_{\text{wiggle}} = \sigma^2\,\eta(t)$$

where $\sigma^2$ is the variance of the wiggle angle and $\eta(t)$ is a random function of time that introduces variability into the system.

This term then influences the dynamics by adding random fluctuations at each time step, so that the effect of the noise varies over time rather than being a constant shift.

Consequence: The action is less likely to be strictly stationary due to the inherent variability introduced by the wiggle angle. This can lead to more dynamic behavior in the system.
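A rough way to see how a wiggle angle affects the action is to let an agent head toward a fixed target while adding uniform random noise in $[-w, w]$ to its heading at every unit step. This steering rule is a hypothetical stand-in, not the pheromone-following rule of the simulation, and `steps_to_target` is an illustrative name. With unit speed and $T = 1$, the action of a crossing equals its number of steps, so any wiggle lengthens the path and lowers $\alpha = h/(T\langle t\rangle)$.

```python
import numpy as np

def steps_to_target(wiggle, target=(10.0, 0.0), reach=1.0, rng=None, max_steps=10_000):
    """Count unit steps until the agent is within `reach` of `target`.

    At each step the heading points at the target plus uniform noise in
    [-wiggle, wiggle] radians. For wiggle < pi/3 and reach = 1, every step
    strictly reduces the distance while it exceeds 1, so the loop terminates.
    """
    rng = np.random.default_rng() if rng is None else rng
    goal = np.asarray(target, dtype=float)
    pos = np.zeros(2)
    steps = 0
    while np.linalg.norm(goal - pos) > reach and steps < max_steps:
        d = goal - pos
        noise = rng.uniform(-wiggle, wiggle) if wiggle > 0 else 0.0
        heading = np.arctan2(d[1], d[0]) + noise
        pos += np.array([np.cos(heading), np.sin(heading)])
        steps += 1
    return steps

rng = np.random.default_rng(0)
h, T = 1.0, 1.0
for wiggle in (0.0, np.pi / 8, np.pi / 4):
    mean_t = np.mean([steps_to_target(wiggle, rng=rng) for _ in range(200)])
    print(f"wiggle = {wiggle:.3f} rad   <t> = {mean_t:6.2f}   alpha = {h / (T * mean_t):.4f}")
```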

2. Pheromone Evaporation: Impact: Pheromone evaporation reduces the concentration of pheromones over time, making previously attractive paths less so as time progresses. Mathematical Consideration: Including the evaporation term, with evaporation rate $\lambda$, in the Lagrangian:

$$L = \frac{1}{2} m v^2 + k\,C(x, y, t)\,e^{-\lambda t}$$

Consequence: The time-dependent decay of pheromones means that the action integral changes dynamically. Paths that were optimal at one point may no longer be optimal later, leading to continuous adaptation.
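Evaporation is commonly implemented in discrete time by removing a fixed fraction $\rho$ of the pheromone at every tick. Such a rule is equivalent to exponential decay, $C(t) = C_0\,e^{-\lambda t}$ with $\lambda = -\ln(1 - \rho)$. The sketch below checks this equivalence; the rate $\rho = 0.1$ and the initial amount are arbitrary illustrative values, and whether the simulation uses exactly this update rule is an assumption.

```python
import math

def evaporate(c0, rho, ticks):
    """Discrete evaporation: each tick removes a fraction rho of the pheromone."""
    c = c0
    for _ in range(ticks):
        c *= 1.0 - rho
    return c

rho, c0, ticks = 0.1, 100.0, 20
lam = -math.log(1.0 - rho)                 # equivalent continuous decay rate
c_discrete = evaporate(c0, rho, ticks)
c_continuous = c0 * math.exp(-lam * ticks)
print(c_discrete, c_continuous)
```

With these values, a path marked 20 ticks earlier retains only about 12% of its pheromone, which is why trails must be continuously reinforced to stay attractive.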

3.1. Considering the Nature of the Action

Given these modifications, the nature of the action can be characterized as follows:

1. Stationary Action: - Before Changes: In a simpler model without wiggle angles and evaporation, the action might be stationary at certain paths. - After Changes: With wiggle angle variability and pheromone evaporation, the action is less likely to be stationary. Instead, the system continuously adapts, and the action varies over time.

2. Saddle Point, Minimum, or Maximum: - Saddle Point: The action is likely to be at a saddle point due to the dynamic balancing of factors. The system may have directions in which the action decreases (due to pheromone decay) and directions in which it increases (due to path variability). - Minimum: If the system stabilizes around a certain path that balances the stochastic wiggle and the decaying pheromones effectively, the action might approach a local minimum. However, this is less likely in a highly dynamic system. - Maximum: It is unusual for the action in such optimization problems to represent a maximum because that would imply an unstable and inefficient path being preferred, which is contrary to observed behavior.

Practical Implications

1. Continuous Adaptation: - The system will require continuous adaptation to maintain optimal paths. Ants need to frequently update their path choices based on the real-time state of the pheromone landscape.

2. Complex Optimization: - Optimization algorithms must account for the random variations in movement, the rules for deposition and diffusion and the temporal decay of pheromones. This means more sophisticated models and algorithms are necessary to predict and find optimal paths.

Therefore, incorporating the wiggle angle and pheromone evaporation into the model makes the action more dynamic and less likely to be strictly stationary. Instead, the action is more likely to exhibit behavior characteristic of a saddle point, with continuous adaptation required to navigate the dynamic environment. This complexity necessitates advanced modeling and optimization techniques to accurately capture and predict the behavior of the system.

3.2. Dynamic Action

For dynamical, non-stationary action principles, we can extend the classical action principle to include time-dependent elements. The Lagrangian changes during the motion of an agent between the nodes as its terms change.

1. Time-Dependent Lagrangian, which depends explicitly on time or on other dynamic variables:

$$L = L\big(q, \dot{q}, t, \lambda(t)\big)$$

where $q$ represents the generalized coordinates, $\dot{q}$ their time derivatives, $t$ time, and $\lambda(t)$ a set of dynamically evolving parameters.

2. Dynamic Optimization: the system continuously adapts its trajectory $q(t)$ to minimize, or more generally optimize, the action, which itself evolves over time:

$$S = \int_{t_1}^{t_2} L\big(q, \dot{q}, t, \lambda(t)\big)\, dt$$

The parameters $\lambda(t)$ are updated based on feedback from the system's performance. The goal is to find the path that makes the action stationary. However, since $\lambda(t)$ is time-dependent, and so is $L$, the optimization becomes dynamic.
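As an illustration of the action integral for a time-dependent Lagrangian, the sketch below evaluates it numerically on a sampled trajectory. It assumes a hypothetical Lagrangian of the form $\tfrac{1}{2} m \dot{q}^2 - \lambda(t) V(q)$ with a single scalar parameter $\lambda(t)$; this is a toy model, not the ant Lagrangian of the simulation, and the function name `action` is ours.

```python
import numpy as np

def action(t, q, m, lam, V):
    """Approximate S = ∫ L dt for a sampled trajectory q(t).

    Hypothetical Lagrangian (illustration only): L = ½ m q̇² − λ(t) V(q).
    t, q : 1-D arrays of sample times and positions
    lam  : callable returning the evolving parameter λ at the sample times
    V    : callable returning the potential V(q)
    """
    qdot = np.gradient(q, t)                # finite-difference velocity
    L = 0.5 * m * qdot**2 - lam(t) * V(q)   # Lagrangian at every sample
    # trapezoidal rule for the time integral
    return float(np.sum(0.5 * (L[1:] + L[:-1]) * np.diff(t)))

t = np.linspace(0.0, 3.0, 301)
q = 2.0 * t                                 # uniform motion at speed 2
V = lambda x: 0.5 * x**2                    # hypothetical potential

S_static = action(t, q, 1.0, lambda s: np.ones_like(s), V)    # constant λ
S_decay = action(t, q, 1.0, lambda s: np.exp(-0.5 * s), V)    # "evaporating" λ
print(S_static, S_decay)
```

The same trajectory is assigned a different action once $\lambda(t)$ is allowed to evolve, which is the sense in which the action becomes dynamic rather than fixed.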

Euler-Lagrange Equation

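To find the stationary path, we derive the Euler-Lagrange equation from the time-dependent Lagrangian. For a Lagrangian $L\big(q, \dot{q}, t, \lambda(t)\big)$, the Euler-Lagrange equation is:

$$\frac{d}{dt}\left(\frac{\partial L}{\partial \dot{q}}\right) - \frac{\partial L}{\partial q} = 0$$

However, due to the dynamic nature of $\lambda(t)$, additional terms may need to be considered.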
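As a worked example, assume a hypothetical Lagrangian $L = \tfrac{1}{2} m \dot{q}^2 - \lambda(t)\, V(q)$ with a single scalar parameter $\lambda(t)$ (a toy model, not the ant Lagrangian of the simulation). The Euler-Lagrange equation gives the equation of motion, and the explicit time dependence means that the energy function is no longer conserved:

$$\frac{\partial L}{\partial \dot{q}} = m\dot{q}, \qquad \frac{\partial L}{\partial q} = -\lambda(t)\, V'(q) \quad\Longrightarrow\quad m\ddot{q} = -\lambda(t)\, V'(q)$$

$$E = \dot{q}\,\frac{\partial L}{\partial \dot{q}} - L = \tfrac{1}{2} m\dot{q}^2 + \lambda(t)\, V(q), \qquad \frac{dE}{dt} = -\frac{\partial L}{\partial t} = \dot{\lambda}(t)\, V(q)$$

In this toy case, whenever $\lambda(t)$ changes, for example through evaporation, the equation of motion changes with it, so the stationary path itself shifts over time.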

Updating Parameters

The parameters $\lambda(t)$ evolve based on feedback from the system's performance. This feedback mechanism can be modeled by incorporating a differential equation for $\lambda(t)$:

$$\frac{d\lambda}{dt} = f(q, \dot{q}, t)$$

Here, $f$ represents a function that updates $\lambda$ based on the current state $q$, the velocity $\dot{q}$, and possibly the time $t$. The specific form of $f$ depends on the nature of the feedback and the system being modeled.
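A minimal sketch of integrating the trajectory together with the parameter update, using the classical fourth-order Runge-Kutta method (one of the numerical methods listed under Solving the Equations). It assumes a hypothetical toy Lagrangian $L = \tfrac{1}{2} m \dot{q}^2 - \tfrac{1}{2}\lambda(t)\, q^2$, whose Euler-Lagrange equation is $m\ddot{q} = -\lambda q$, and a hypothetical feedback rule `f`; neither is taken from the ant simulation.

```python
import numpy as np

def rhs(t, y, m, f):
    """Right-hand side of the coupled system, state y = (q, q̇, λ).

    Toy model: m q̈ = −λ q (from L = ½ m q̇² − ½ λ q²), dλ/dt = f(q, q̇, t).
    """
    q, qdot, lam = y
    return np.array([qdot, -lam * q / m, f(q, qdot, t)])

def rk4(y0, t0, t1, n, m, f):
    """Classical fourth-order Runge-Kutta with n fixed steps from t0 to t1."""
    h = (t1 - t0) / n
    t, y = t0, np.asarray(y0, dtype=float)
    for _ in range(n):
        k1 = rhs(t, y, m, f)
        k2 = rhs(t + h / 2, y + h / 2 * k1, m, f)
        k3 = rhs(t + h / 2, y + h / 2 * k2, m, f)
        k4 = rhs(t + h, y + h * k3, m, f)
        y = y + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
        t += h
    return y

# Check: with no feedback (f = 0) and λ = 4, the exact solution is q(t) = cos(2t)
y = rk4([1.0, 0.0, 4.0], 0.0, 2.0, 2000, m=1.0, f=lambda q, qd, t: 0.0)
print(y[0], np.cos(4.0))

# Hypothetical feedback: motion reinforces λ, so λ grows along the trajectory
y_fb = rk4([1.0, 0.0, 4.0], 0.0, 2.0, 2000, m=1.0, f=lambda q, qd, t: 0.1 * qd**2)
print(y_fb)
```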

Practical Implementation

For our example of ants with a wiggle angle and pheromone evaporation, the effective Lagrangian is:

with all of the terms defined earlier.

Dynamical System Adaptation

The system adapts by updating the parameters $\lambda(t)$ based on the current state of the pheromones and the ants' paths.

Solving the Equations

1. Numerical Methods: These systems are usually too complex for analytical solutions, so numerical methods (e.g., finite difference methods, Runge-Kutta methods) are used to solve the differential equations governing $q(t)$ and $\lambda(t)$.

2. Optimization Algorithms: Algorithms such as gradient descent, genetic algorithms, or simulated annealing can be used to find optimal paths and parameter updates.
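To illustrate the gradient-descent option, the sketch below minimizes a discretized action for a hypothetical free agent (kinetic term only) moving in one dimension between two fixed nodes. Starting from a randomly wiggled path, gradient descent relaxes it to the straight line, i.e., the shortest path. The discretization, step size, and function names are our assumptions, not the simulation's code.

```python
import numpy as np

def discrete_action_grad(q, m, dt):
    """Gradient of S = Σ_k ½ m ((q[k+1] − q[k]) / dt)² dt w.r.t. the interior q[k].
    Endpoint entries stay zero, so the two nodes remain fixed."""
    g = np.zeros_like(q)
    g[1:-1] = m * (2 * q[1:-1] - q[:-2] - q[2:]) / dt
    return g

def least_action_path(q_start, q_end, n_seg=20, T=1.0, m=1.0, iters=5000, seed=0):
    dt = T / n_seg
    rng = np.random.default_rng(seed)
    # wiggly initial guess around the straight line
    q = np.linspace(q_start, q_end, n_seg + 1) + rng.normal(0.0, 0.5, n_seg + 1)
    q[0], q[-1] = q_start, q_end     # the nodes are fixed
    lr = 0.4 * dt / m                # below the stability limit dt / (2 m)
    for _ in range(iters):
        q -= lr * discrete_action_grad(q, m, dt)
    return q

q = least_action_path(0.0, 1.0)
print(np.max(np.abs(q - np.linspace(0.0, 1.0, 21))))   # deviation from the straight line
```

For a purely kinetic action, the stationary path is also the minimum, so plain gradient descent suffices here; with a stochastic, time-varying potential term, methods such as simulated annealing become more attractive.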

By extending the classical action principle to include time-dependent and evolving elements, we can model and solve more complex, dynamic systems. This framework is particularly useful in real-world scenarios where conditions change over time, and systems must adapt continuously to maintain optimal performance. This approach is applicable in physical, chemical, and biological systems, and in fields such as robotics, economics, and ecological modeling, providing a powerful tool for understanding and optimizing dynamic, non-stationary systems.

Because the Lagrangian changes at each time step of the simulation, we cannot speak of a static action, only of a dynamic one. This is a form of dynamic optimization and reinforcement learning.

The average action is quasi-stationary, as it fluctuates around a fixed value; internally, however, each trajectory that composes it fluctuates stochastically, given the dynamic Lagrangian of each ant. These trajectories still fluctuate around the theoretically shortest path. When the system is far from this path, the average action is minimized; close to the minimum, it can temporarily become stuck in a neighboring stationary-action path. In all of these situations, as described above, the average action efficiency is our measure of organization.

3.3. Specific Details in our Simulation

In our simulation, the change in pheromone concentration at each patch at each update is the sum of three contributions:

1. Preexisting Pheromone: the amount of pheromone already present at each patch at time t.

2. Pheromone Diffusion: The change of pheromone at each patch at time t is described by the rules of the simulation: on each tick, 70% of the pheromone in a patch is split equally among its 8 neighboring patches, regardless of how much pheromone is in that patch, which leaves 30% of the original amount in the central patch. On the next tick, 70% of the remaining 30% diffuses again. At the same time, by the same rule, pheromone is distributed from all 8 neighboring patches to the central one. Note: unlike the diffusion equations in physics, which describe only the net flow from high to low concentration, this rule moves pheromone out of every patch, including into neighbors with a higher concentration.

$$C_{i,j}(t+1) = 0.3\, C_{i,j}(t) + \frac{0.7}{8} \sum_{(k,l) \in \mathcal{N}(i,j)} C_{k,l}(t)$$

where $C_{i,j}(t)$ is the pheromone concentration at patch $(i,j)$ and $\mathcal{N}(i,j)$ is the set of its 8 neighboring patches.

The first term in the equation shows how much of the pheromone concentration from the previous time step remains in the central patch, and the second term shows the incoming pheromone from all neighboring patches, since 70% × 1/8 of each neighbor's concentration is transferred to the central one.
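The diffusion rule can be sketched on a NumPy grid as follows. Assumptions: the world wraps around at its edges (so no pheromone leaves the grid), all patches are updated simultaneously, and the function name `diffuse` is ours; evaporation and deposition are left out. The rate 0.7 and the equal split among 8 neighbors follow the rule described in this section.

```python
import numpy as np

def diffuse(C, rate=0.7):
    """One tick of the diffusion rule on a wrapping grid.

    Each patch keeps (1 − rate) of its pheromone and receives rate/8 of the
    pheromone of each of its 8 neighbours (simultaneous update).
    """
    incoming = np.zeros_like(C)
    for di in (-1, 0, 1):
        for dj in (-1, 0, 1):
            if di == 0 and dj == 0:
                continue
            # shift the whole grid so each neighbour lines up with the centre
            incoming += np.roll(np.roll(C, di, axis=0), dj, axis=1)
    return (1 - rate) * C + rate / 8 * incoming

C = np.zeros((5, 5))
C[2, 2] = 1.0                            # one unit of pheromone in the centre
C1 = diffuse(C)
print(C1[2, 2], C1[1, 1], C1.sum())      # 0.3, 0.0875, 1.0 (up to rounding)
```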

3. The amount of pheromone an ant deposits after n steps can be expressed as:

where

The stochastic term depends on the variance of a uniform distribution, which, for the parameters in this simulation, is [46].
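For reference, the variance of a continuous uniform distribution on $[a, b]$ is $(b - a)^2/12$. The sketch below checks this formula against random samples for a hypothetical wiggle range of ±20 degrees; the range and the function name are illustrative assumptions, not the parameters of this simulation.

```python
import numpy as np

def uniform_variance(a, b):
    """Variance of a continuous uniform distribution on [a, b]."""
    return (b - a) ** 2 / 12

# Hypothetical wiggle: turning angle drawn uniformly from [-w/2, w/2] degrees
w = 40.0
rng = np.random.default_rng(1)
samples = rng.uniform(-w / 2, w / 2, 1_000_000)

print(uniform_variance(-w / 2, w / 2))   # ≈ 133.33 deg²
print(samples.var())                      # Monte Carlo estimate, close to the above
```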

3.4. Gradient Based Approach

We can use either the concentration's value or the concentration gradient in the potential energy term. Using the gradient is a more exact approach, but it is more computationally intensive.

In a further extension of the model, we can incorporate a gradient-based potential energy term. In this case, the concentration-dependent term is:

instead of

and the Lagrangian becomes:
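Computing the gradient term requires an extra finite-difference pass over the patch grid at every tick, which is part of why this variant is more computationally intensive. A minimal sketch using NumPy's `np.gradient` (central differences in the interior, one-sided at the borders), assuming unit patch spacing; how the resulting gradient enters the potential energy is as specified by the model and is not reproduced here. The function name is ours.

```python
import numpy as np

def concentration_gradient(C, spacing=1.0):
    """Finite-difference gradient of the patch concentration field C[i, j].

    Returns the two components and the gradient magnitude |∇C|.
    """
    dC_di, dC_dj = np.gradient(C, spacing)
    return dC_di, dC_dj, np.hypot(dC_di, dC_dj)

# Linear test field C = i + 2 j, whose gradient is (1, 2) everywhere
i, j = np.meshgrid(np.arange(6.0), np.arange(6.0), indexing="ij")
gi, gj, mag = concentration_gradient(i + 2.0 * j)
print(mag[0, 0], np.sqrt(5.0))
```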

3.5. Summary

1. We obtained the Lagrangian with the exact parameters of the specific simulation that produced the data. To our knowledge, no other studies have published a Lagrangian approach to agent-based simulations of ants.

2. To our knowledge, the Lagrangian cannot be solved analytically because of the stochastic term. In addition, the equation for the pheromone concentration is written for each patch (i, j), whereas the amount deposited by an ant depends on the number of steps, n, it has taken since it last visited the food or the nest. Each ant follows a different path, so n differs from ant to ant, and each ant deposits a different amount of pheromone. Because this depends on the stochastic path of every ant, it cannot in general be treated analytically, and we therefore solve the system numerically through the simulation.

3. The average path length obtained from the simulation serves as a numerical solution of the Lagrangian, because it results from a model that incorporates all of the dynamics described by the Lagrangian. This path length reflects the optimization and behaviors modeled by the Lagrangian terms, including kinetic energy, potential energy influenced by pheromone concentrations, and stochastic movement. The simulation uses the reciprocal of the average path length as the average action efficiency, which therefore takes into account all of the effects in the Lagrangian.
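Once path lengths are recorded, the efficiency measure is a one-line computation. A minimal sketch; the function name is ours and the input lists are made-up values for illustration, not data from the simulation.

```python
import numpy as np

def average_action_efficiency(path_lengths):
    """Reciprocal of the mean length of completed crossings between the two nodes."""
    return 1.0 / np.mean(np.asarray(path_lengths, dtype=float))

early = [48.0, 55.0, 61.0, 44.0]   # made-up lengths before a trail forms
late = [21.0, 20.0, 22.0, 21.0]    # made-up lengths after a trail forms
print(average_action_efficiency(early), average_action_efficiency(late))
```

Shorter average paths give a larger efficiency, in line with the statement that a growing average action efficiency corresponds to a decreasing average unit action.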

4. The average action could be stationary close to the theoretically shortest path, i.e., near the minimum of the average action, but further away from it, the average action is always minimized, both in the simulation and on theoretical grounds. In the simulation, longer paths are always observed to decay to shorter ones. Deviations can occur only very close to the shortest path, due to memory effects and stochasticity; these decay with longer annealing and with changes in parameters, such as increasing exploration through a larger wiggle angle, changing the pheromone deposition, diffusion, and evaporation rates, changing the speed and mass of the ants, and other factors. When the average action efficiency grows, the average unit action decreases. When the action is stationary, as it is at the end of the simulations seen in the time graphs, the average action efficiency is also stationary: for the parameters of the current simulation, it no longer grows in time. This is because the size of the world and the number of ants are fixed in each run of the simulation. In systems that can continue to grow, these limits lie much further away. A similar process occurs in real complex systems such as organisms, ecosystems, cities, and economies. Because of the stochastic variations, we can consider only average quantities.

4. Mechanism

4.1. Exponential Growth and Size-Complexity Rule

Average action efficiency is the proposed measure of the level of organization and complexity. To test it, we turn to observational data. The size-complexity rule states that complexity increases as a power law of the size of a complex system [32]. This rule has been observed in systems of very different natures, with some explanations proposed for its origin [34,47]. In the next section, on the model of the mechanism of self-organization, we derive these exponential and power-law dependencies. In this paper, we show how our data align with the size-complexity rule.

4.2. A Model for the Mechanism of Self-Organization

We apply the model first presented in our 2015 paper [10], and used in subsequent papers [11,12], to the ABM simulation here, and specify only some of the quantities in this model for brevity, clarity, and simplicity. We then show the exponential and power-law solutions for this specific system. The quantities shown in the results but not included in the model participate in the positive feedback loops in the same way and have the same power-law solutions, as seen in the data. This positive feedback loop model is universal for an arbitrary number of characteristics of self-organizing systems and can be modified to include any of them.

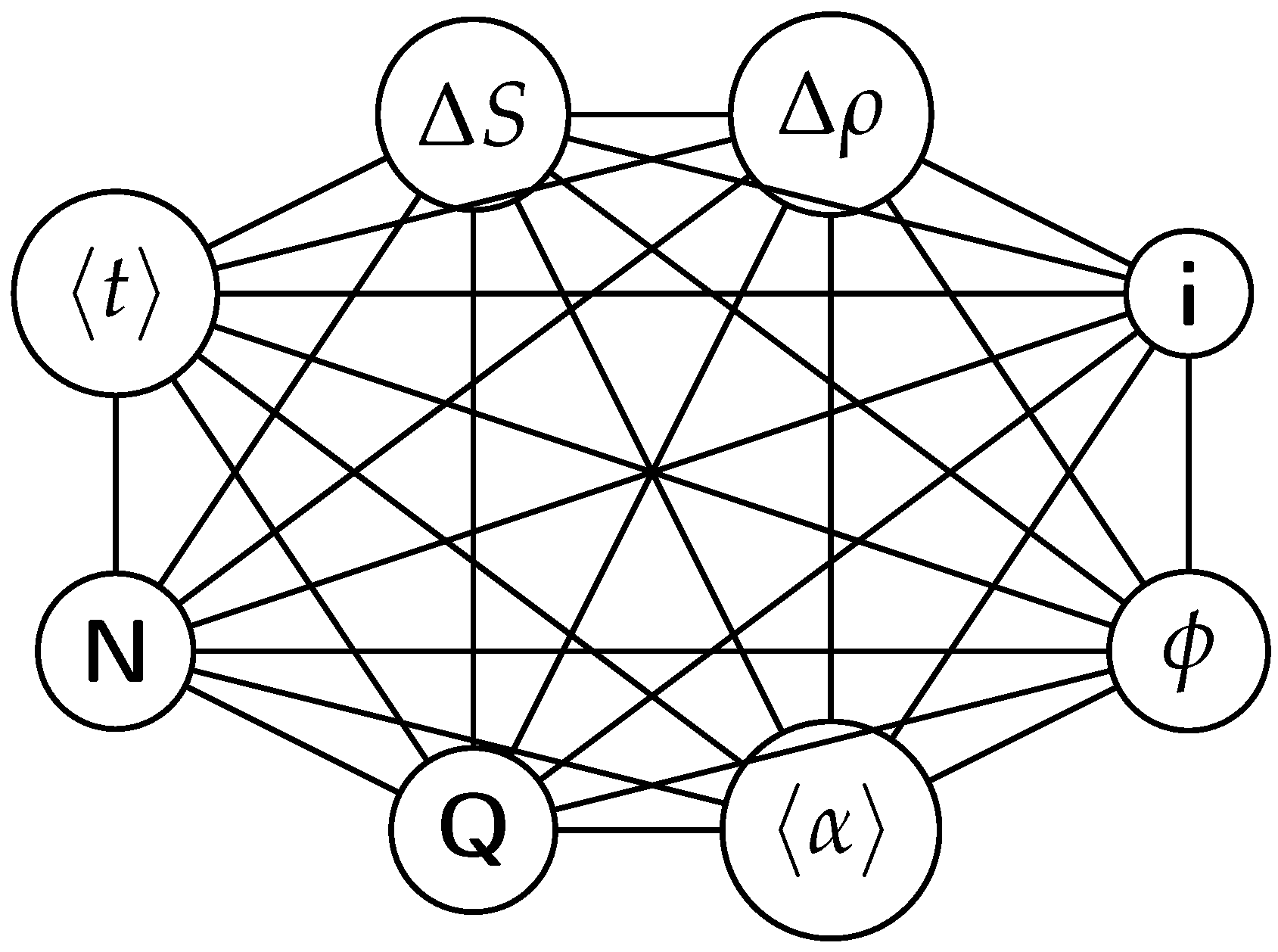

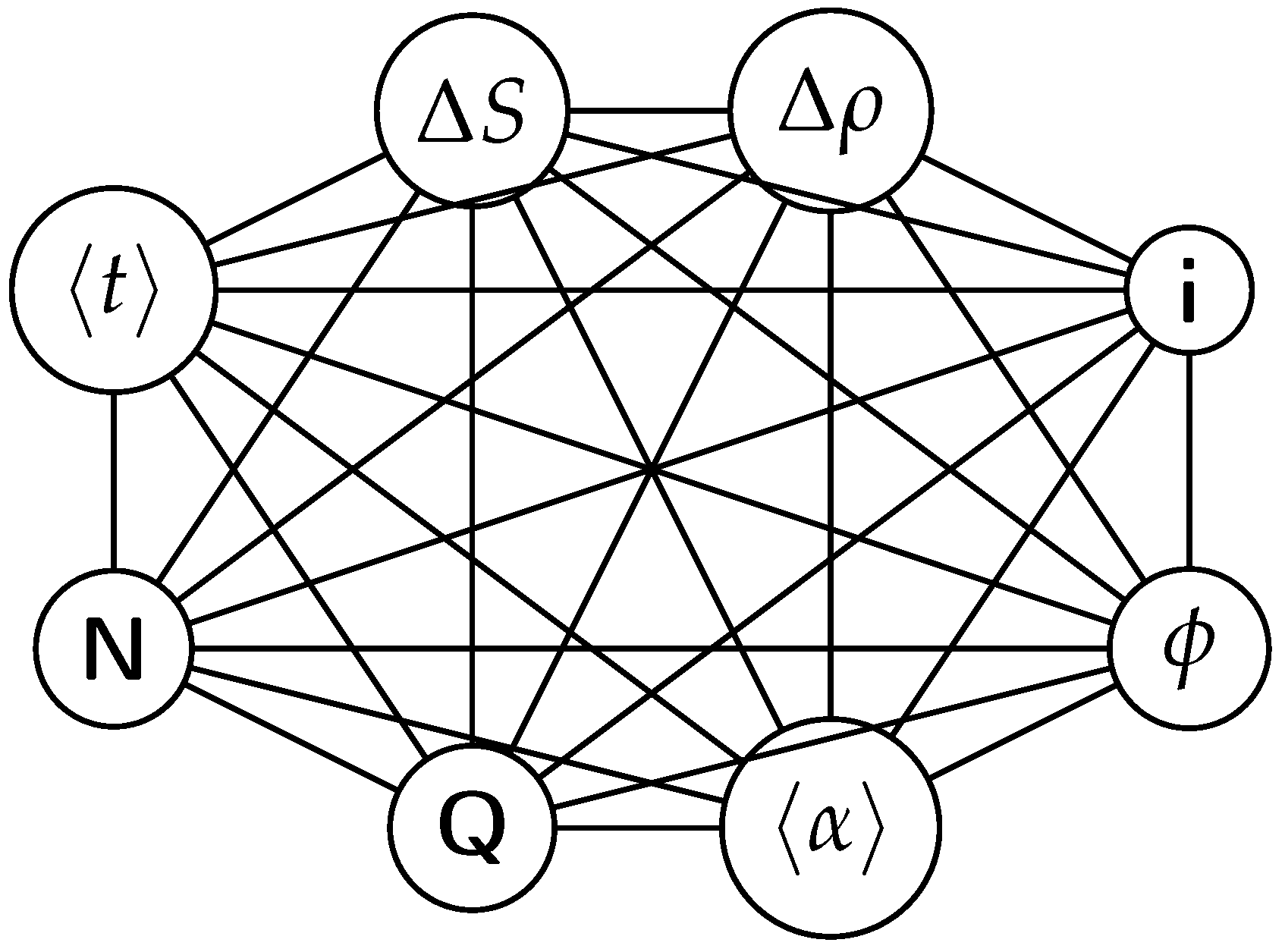

Figure 2 gives a visual representation of the positive feedback interactions between the characteristics of a complex system, which were proposed in our 2015 paper [10] as the mechanism of self-organization, progressive development, and evolution, applied here to the current simulation. The quantities are:

• the information in the system, i, calculated as the total amount of ant pheromone;

• the average time for all of the ants in the simulation to cross between the two nodes;

• the total number of ants, N;

• the total action of all ants in the system, Q;

• the internal entropy difference between the initial and final states of the system in the process of self-organization of finding the shortest path;

• the average action efficiency;

• the number of events in the system per unit time, which in the simulation is the number of paths, or crossings, between the two nodes;

• the density of the ants, which is the order parameter;

• the increase of the order parameter, which is the difference in the density of agents between the final and initial states of the simulation.

The links connecting all of these quantities represent positive feedback connections between them.

The positive feedback loops in Figure 2 are modeled with a set of ordinary differential equations. The solutions of this model are exponential in time for each characteristic, and every pair of characteristics is related by a power law. The detailed solutions of this model are shown.
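The step from exponential solutions to power laws can be made explicit. Take any two characteristics $x$ and $y$ (generic placeholders for quantities in the feedback loop) and assume each grows exponentially with its own rate, $a > 0$ and $b > 0$:

$$x(t) = x_0\, e^{a t}, \qquad y(t) = y_0\, e^{b t}$$

Eliminating time with $t = \frac{1}{a}\ln\frac{x}{x_0}$ gives

$$y = y_0 \left(\frac{x}{x_0}\right)^{b/a}, \qquad \ln y = \frac{b}{a}\,\ln x + \left(\ln y_0 - \frac{b}{a}\,\ln x_0\right)$$

so on a log-log plot the pair falls on a straight line with slope $b/a$.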

We acknowledge that, in general, solutions of systems of linear differential equations are not always pure exponentials. Their form depends on the eigenvalues of the governing matrix: for pure, non-oscillatory exponential growth, the relevant eigenvalues must be positive real numbers. Additionally, the matrix must be diagonalizable for the solutions to be sums of pure exponentials without polynomial factors.

4.2.1. Systems with Constant Coefficients

• For linear systems with constant coefficients, the solutions often involve exponential functions. This is because the system can be expressed in terms of matrix exponentials, leveraging the properties of constant coefficient matrices.

• Even in these cases, if the coefficient matrix is defective (non-diagonalizable), the solutions may include polynomial terms multiplied by exponentials.
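A minimal example of the defective case is a $2 \times 2$ Jordan block with a repeated eigenvalue $\mu$. Writing $A = \mu I + N$ with $N^2 = 0$, and using the fact that $\mu I$ commutes with $N$:

$$A = \begin{pmatrix} \mu & 1 \\ 0 & \mu \end{pmatrix}, \qquad e^{At} = e^{\mu t}\,(I + N t) = e^{\mu t}\begin{pmatrix} 1 & t \\ 0 & 1 \end{pmatrix}$$

so the solution of $\dot{\mathbf{x}} = A\mathbf{x}$ is $x_1(t) = e^{\mu t}\big(x_1(0) + t\, x_2(0)\big)$ and $x_2(t) = e^{\mu t}\, x_2(0)$: an exponential multiplied by a polynomial in $t$.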

4.2.2. Systems with Variable Coefficients

• When the coefficients are functions of the independent variable (e.g., time), the solutions may involve integrals, special functions (like Bessel or Airy functions), or other non-exponential forms.

• Superposition still holds for linear systems, but without constant coefficients the fundamental solutions are generally not exponentials, and the system may not have solutions expressible in closed form at all.

4.2.3. Higher-Order Systems and Resonance

• In some systems, especially those modeling physical phenomena like oscillations or circuits, the solutions might involve trigonometric functions, which are related to exponentials via Euler’s formula but are not themselves exponential functions in the real domain.

• Resonant systems can exhibit behavior where solutions grow without bound in a non-exponential manner.

While exponential functions are a key part of the toolkit for solving linear differential equations, especially with constant coefficients, they don’t encompass all possible solutions. The nature of the coefficients and the structure of the system play crucial roles in determining the form of the solution.

In our specific system, the dynamics predict exponential growth. We do not consider friction, negative feedback, or any dissipative processes, which could make the dominant eigenvalue negative or complex. Instead, the system is driven by positive feedback loops, so its coupling matrix has nonnegative entries. When every characteristic is connected to every other through these loops (an irreducible matrix), the Perron-Frobenius theorem guarantees a simple, real, positive dominant eigenvalue with a positive eigenvector, so at long times every characteristic grows exponentially at that rate, as expected under these assumptions.

Our model operates under the assumption of constant positive feedback, which justifies the exponential growth observed in our simulations. This is a valid simplification for our study, focusing on systems with reinforcing interactions rather than dissipative forces. In future work we will expand it to include dissipative forces.
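A minimal numerical check of the constant-positive-feedback case: three hypothetical characteristics coupled through a nonnegative, irreducible matrix (the coefficients are illustrative, not fitted to the simulation), integrated with classical RK4. The long-time growth rate is compared with the eigenvalue of largest real part, which the Perron-Frobenius theorem guarantees to be real and positive for such a matrix.

```python
import numpy as np

# Hypothetical nonnegative coupling matrix: each characteristic reinforces
# the others (illustrative values only, not fitted to data).
A = np.array([[0.0, 0.2, 0.1],
              [0.3, 0.0, 0.2],
              [0.1, 0.4, 0.0]])

def rk4_linear(A, y0, t1, h=0.01):
    """Integrate dy/dt = A y from t = 0 to t1 with classical RK4."""
    y = np.asarray(y0, dtype=float)
    for _ in range(int(round(t1 / h))):
        k1 = A @ y
        k2 = A @ (y + h / 2 * k1)
        k3 = A @ (y + h / 2 * k2)
        k4 = A @ (y + h * k3)
        y = y + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
    return y

eigs = np.linalg.eigvals(A)
dominant = eigs[np.argmax(eigs.real)]       # Perron root

# Growth rate of the solution norm between t = 40 and t = 50
y40 = rk4_linear(A, [1.0, 0.0, 0.0], 40.0)
y50 = rk4_linear(A, [1.0, 0.0, 0.0], 50.0)
rate = np.log(np.linalg.norm(y50) / np.linalg.norm(y40)) / 10.0

print(dominant.real, rate)                   # both ≈ 0.432
```

Only the dominant eigenvalue sets the long-time growth; the contributions of the other modes become negligible relative to it.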

5. Mechanism

5.1. Exponential Growth and Size-Complexity Rule

Average action efficiency is the proposed measure of the level of organization and complexity. To test it, we turn to observational data. The size-complexity rule states that complexity increases as a power law of the size of a complex system [32]. This rule has been observed in systems of very different natures, with some explanations proposed for its origin [34,47]. In the next section, on the model of the mechanism of self-organization, we derive these exponential and power-law dependencies. In this paper, we show how our data align with the size-complexity rule.

5.2. A Model for the Mechanism of Self-Organization

We apply the model first presented in our paper from 2015 [10] and used in the following papers [11,