1. Introduction

The evolution of telecommunications networks has significantly transformed how the world connects, providing unprecedented connectivity and altering how we communicate, work, and live. As networks grow in scale and complexity, innovative methods are required to improve on traditional management techniques and keep pace with these changes. AI and ML have revolutionized how we interact with data, and these technologies are also reshaping network management in the telecom industry.

AI and ML have multiple uses within the telecom industry, from analyzing data and predicting outages before they happen to optimizing networks for peak performance and adapting in real time to changing conditions. This paper examines the emerging trends and future opportunities that AI and ML present for enhancing network management in the telecommunications industry.

To understand how important AI is in managing telecom networks, it’s helpful to first look at the challenges modern networks face. Today’s telecom networks are massive, with systems spread across large areas and serving millions of users simultaneously. Managing these networks is a huge task that involves constant monitoring, fixing issues, and making improvements to ensure reliable and high-quality service. In the past, network management depended heavily on human expertise, with engineers manually analyzing data and performance metrics to make adjustments. While this worked for many years, more precise and advanced techniques are needed in our hyper-connected world. The size and complexity of modern networks, especially with technologies like 5G and the Internet of Things (IoT), produce an overwhelming amount of data every second. Humans can’t process all this information in real time. That’s where AI comes in, offering a new way to manage networks. AI, powered by ML and data analytics, can quickly spot patterns in data and make decisions based on them.

AI’s role in telecom started with basic expert systems and rule-based algorithms used for network planning and optimization. One of the early breakthroughs was a study by Klaine et al., who developed a framework for self-organizing cellular networks using reinforcement learning. Their research showed that AI could help networks adjust to changing conditions independently, improving coverage and capacity without human input [1]. As AI technology has evolved, its applications in telecom have expanded. Morocho-Cayamcela et al. discussed how AI is now central to 5G networks, showing the growing role of AI in future telecom systems [2].

One central area where AI has had a significant impact is the detection of network anomalies. Traditional methods based on set thresholds often struggle to catch complex or emerging threats. Through deep learning models, AI can identify subtle patterns that point to potential problems. Research by Boutaba et al. showed that AI models perform much better than traditional methods in finding network issues [3].

Despite these advancements, there are still challenges in integrating AI into network management, such as concerns about reliability, security, and transparency. Since telecom networks are critical infrastructure, it’s vital to ensure that AI-driven decisions are transparent, accountable, and protected from attacks. Tackling these challenges is essential to maintaining trust in AI-powered network management. Still, the benefits of AI are too significant to overlook. AI offers improved efficiency, less downtime, and a better user experience. With AI, 5G, and edge computing coming together, we’re entering a new era of network management, where networks will be faster, more reliable, and smarter than ever.

The rest of the manuscript is organized as follows; each section discusses the role of data science in one aspect of network management.

Section 2 introduces data science and the data science techniques used in traffic classification, edge computing, and network function virtualization; Section 3 covers predictive maintenance; Section 4 covers anomaly detection; Section 5 covers automated network configuration and self-healing mechanisms; and the final part presents the discussion, future directions, and conclusion.

2. Data Science and ML Techniques for Network Monitoring

As telecommunication networks have grown in complexity, traditional monitoring methods have struggled to keep pace with the increasing volume, variety, and velocity of data. Modern networks generate vast amounts of real-time information, requiring advanced tools to analyze this data and respond to potential issues autonomously. Data science and ML have emerged as robust solutions, allowing more efficient and dynamic monitoring. By leveraging these techniques, network operators can detect anomalies, predict failures, and optimize the system’s overall performance.

Data science is an interdisciplinary field that combines methods from statistics, computer science, and domain-specific knowledge to extract valuable insights from large datasets. The process typically begins with data collection, where raw data is gathered from multiple sources. In telecommunications, this can include network traffic, system logs, and performance metrics. Once collected, the data undergoes preprocessing, which involves cleaning, transforming, and normalizing it to make it suitable for analysis. After preprocessing, statistical techniques and algorithms are applied to uncover patterns, correlations, and trends. ML algorithms are essential at this stage, as they enable automated decision-making by identifying patterns that may not be evident to human analysts. In telecommunications, these models can be trained to classify traffic, predict future demand, or detect network anomalies in real time.

Visualization is another crucial aspect of data science. Representing data through graphs, charts, and dashboards allows network operators to quickly interpret results and make informed decisions. The ability to visualize large and complex datasets in a simple, actionable format can help identify key performance indicators (KPIs) and trends that improve network reliability.

Finally, a feedback loop is often established. New data is continuously fed into the models, enabling them to learn from past behavior and improve over time. This is particularly relevant in network monitoring, where conditions change rapidly and models must adapt to evolving network demands and potential threats. By incorporating data science techniques into network management, telecommunications providers can move from reactive problem-solving to proactive and predictive strategies, ensuring better service quality and operational efficiency.
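The collection, preprocessing, and analysis stages described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the latency readings are invented, missing samples are represented as `None`, and a plain z-score test stands in for whatever model an operator would actually deploy.

```python
from statistics import mean, stdev

def preprocess(samples):
    """Clean and normalize: drop missing readings, then min-max scale to [0, 1]."""
    clean = [s for s in samples if s is not None]
    lo, hi = min(clean), max(clean)
    span = (hi - lo) or 1.0  # avoid division by zero for constant data
    return [(s - lo) / span for s in clean]

def analyze(values, z_threshold=2.0):
    """Return indices (into the cleaned list) whose z-score exceeds the threshold."""
    mu, sigma = mean(values), stdev(values)
    if sigma == 0:
        return []
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > z_threshold]

# Hypothetical collected data: round-trip latency in ms, with two missing samples.
raw_latency_ms = [20, 21, None, 19, 22, 20, 95, 21, 20, None, 19, 21]
anomalies = analyze(preprocess(raw_latency_ms))
print(anomalies)  # index 5 of the cleaned series is the 95 ms spike
```

In a deployed system the feedback loop would correspond to recomputing the statistics (or retraining the model) as new samples arrive, rather than fitting once on a fixed list.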

2.1. Network Monitoring and Anomaly Detection

Anomaly detection is a crucial area in which data science has made significant strides. Traditional threshold-based methods often struggle with the complexities of modern network environments due to their rigidity and inability to adapt to evolving network conditions. Fernandes et al. proposed a novel approach using autoencoders for network traffic anomaly detection in software-defined networks (SDNs). Their method employs autoencoders to learn a compact representation of normal traffic; the model reconstructs its input, and anomalies are identified with high accuracy based on the reconstruction error [4]. Additionally, Lopez-Martin et al. introduced a conditional variational autoencoder (CVAE) for detecting and classifying anomalies in Internet of Things (IoT) environments. Their CVAE model identifies anomalies and provides probabilistic insights into their nature, facilitating more precise and targeted responses [5].
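The reconstruction-error idea can be shown with a deliberately simplified sketch. It is not the method of the cited works: a one-dimensional linear bottleneck (equivalent to projecting onto the first principal component, found here by power iteration) stands in for a trained neural autoencoder, the traffic features are invented, and the threshold rule (three times the largest training error) is an assumed heuristic.

```python
from statistics import mean

def fit_linear_autoencoder(rows, iters=100):
    """Fit a 1-D linear bottleneck; returns (feature means, unit direction)."""
    dims = len(rows[0])
    mu = [mean(r[d] for r in rows) for d in range(dims)]
    centered = [[r[d] - mu[d] for d in range(dims)] for r in rows]
    w = [1.0] * dims
    for _ in range(iters):  # power iteration on the (unnormalized) covariance
        proj = [sum(c[d] * w[d] for d in range(dims)) for c in centered]
        w = [sum(p * c[d] for p, c in zip(proj, centered)) for d in range(dims)]
        norm = sum(x * x for x in w) ** 0.5
        w = [x / norm for x in w]
    return mu, w

def reconstruction_error(row, mu, w):
    centered = [x - m for x, m in zip(row, mu)]
    code = sum(c * wi for c, wi in zip(centered, w))  # encode to one number
    recon = [code * wi for wi in w]                   # decode back to feature space
    return sum((c - r) ** 2 for c, r in zip(centered, recon))

# Hypothetical normal flows: (kilobytes, packets), roughly proportional.
normal_traffic = [(10, 10.5), (20, 19.5), (30, 30.2), (40, 39.8), (50, 50.1)]
mu, w = fit_linear_autoencoder(normal_traffic)
threshold = 3 * max(reconstruction_error(r, mu, w) for r in normal_traffic)

def is_anomalous(row):
    return reconstruction_error(row, mu, w) > threshold

print(is_anomalous((25, 25.3)), is_anomalous((30, 5)))
```

A flow that follows the learned byte-to-packet relationship reconstructs well, while a flow that breaks it (many bytes in very few packets) leaves a large residual and is flagged.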

2.2. Traffic Classification and Analysis

Another critical aspect of network monitoring where data science plays a crucial role is traffic classification and analysis. Network traffic is usually captured using tools such as tcpdump, NetIntercept, and Bro [6]. The data is generally captured in .pcap format and converted to the format required for predictive analytics. Network traffic classification analyzes packet data to categorize traffic into classes based on predefined criteria, such as application type, protocol, or security threat. Traditionally, rule-based approaches relied on port numbers or protocol signatures, but these methods are increasingly ineffective due to encrypted traffic and evolving network applications. Modern classification techniques use ML algorithms such as Convolutional Neural Networks (CNNs) or Support Vector Machines (SVMs), which can learn features automatically from raw traffic data. These models analyze flow characteristics, such as packet size, inter-arrival time, and metadata, to classify traffic in real time [7].

As network applications and services diversify, precise classification of network traffic becomes essential for optimal management and quality of service (QoS). Wang et al. (2018) developed an innovative deep-learning method for classifying encrypted traffic using CNNs. Their approach leverages CNNs to automatically learn hierarchical features from raw network traffic data, achieving high accuracy in classifying various types of encrypted traffic and outperforming traditional ML techniques that rely on handcrafted features [8].
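As an illustration of flow-level features such as packet size and inter-arrival time, the sketch below computes two features per flow and classifies with a nearest-centroid rule. The nearest-centroid classifier is a simple stand-in chosen for brevity, not the CNN or SVM models discussed above, and the packet traces and class labels are hypothetical.

```python
from statistics import mean

def flow_features(packets):
    """packets: list of (timestamp_seconds, size_bytes) sorted by time."""
    sizes = [size for _, size in packets]
    gaps = [b[0] - a[0] for a, b in zip(packets, packets[1:])]
    return (mean(sizes), mean(gaps) if gaps else 0.0)

def train_centroids(labeled_flows):
    """Return per-class centroids in a scaled feature space, plus the scale used."""
    feats = {lbl: [flow_features(f) for f in flows] for lbl, flows in labeled_flows.items()}
    scale = [max(v[d] for vs in feats.values() for v in vs) or 1.0 for d in range(2)]
    centroids = {
        lbl: tuple(mean(v[d] / scale[d] for v in vs) for d in range(2))
        for lbl, vs in feats.items()
    }
    return centroids, scale

def classify(packets, centroids, scale):
    x = [f / s for f, s in zip(flow_features(packets), scale)]
    return min(centroids, key=lambda lbl: sum((a - b) ** 2 for a, b in zip(x, centroids[lbl])))

# Hypothetical training flows: small, evenly spaced packets vs. large, bursty ones.
training = {
    "voip": [[(0.00, 160), (0.02, 160), (0.04, 160), (0.06, 160)],
             [(0.000, 172), (0.021, 172), (0.040, 172)]],
    "video": [[(0.000, 1400), (0.002, 1350), (0.004, 1400)],
              [(0.000, 1200), (0.003, 1400), (0.005, 1300)]],
}
centroids, scale = train_centroids(training)
unknown_flow = [(0.000, 180), (0.019, 180), (0.041, 176)]
print(classify(unknown_flow, centroids, scale))
```

Because these features ignore payload contents, the same approach applies to encrypted flows, which is one reason statistical flow features replaced port-based rules.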

2.3. Predictive Maintenance

The deployment of 5G networks, with their multi-layered architecture, has underscored the necessity for more advanced, predictive monitoring techniques to ensure network stability and reliability. Traditional reactive maintenance strategies, where problems are addressed after they occur, are no longer viable in these high-performance environments. Predictive maintenance, driven by data science and ML, has emerged as a proactive solution to mitigate failures before they disrupt network services. A study by Thantharate et al. emphasized the potential of ML algorithms such as reinforcement learning and federated learning in 5G network management. Their research demonstrated how these algorithms could enhance network security, optimize resource allocation, and improve performance by analyzing real-time datasets to detect anomalies and predict network behaviors [9]. Reinforcement learning models, for instance, learn to optimize decisions over time, adapting to the dynamic conditions of a 5G network by continuously interacting with its environment.

Predictive maintenance stands out as one of the most impactful applications of ML in 5G. By examining data patterns that precede equipment degradation or network failures, predictive models can anticipate issues well before they occur, enabling preemptive action. These models use historical performance data to identify signs of wear or anomalies, allowing operators to schedule maintenance activities without interrupting network services. Sharma and Kumar’s deep learning-based framework exemplifies this approach, combining deep learning with reinforcement learning to monitor optical network traffic patterns and equipment performance. Their system not only forecasts failures but also recommends optimal maintenance schedules, thus maximizing uptime and resource efficiency [10].

One of the primary challenges in implementing predictive maintenance is the high variability in network traffic and environmental conditions, particularly in 5G networks that support diverse services like IoT, ultra-reliable low-latency communications (URLLC), and enhanced mobile broadband (eMBB). To address this, models must be highly adaptable and capable of generalizing across different data types. Federated learning, which enables decentralized training of ML models across distributed edge nodes without exposing raw data, presents a promising solution to this problem. By training on local data at edge devices while preserving privacy, federated learning improves scalability and enhances the accuracy of predictive maintenance models by accounting for localized variations in network conditions.
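The federated training loop described above can be sketched with federated averaging on a toy linear model. This is an illustrative assumption rather than the setup of any cited study: the node datasets are invented (all following y = 2x + 1), the model has two weights, and the server simply averages node weights in proportion to their sample counts.

```python
def local_update(weights, data, lr=0.05, epochs=20):
    """Train y ~ w0 + w1*x on one node's data; only weights leave the node."""
    w0, w1 = weights
    n = len(data)
    for _ in range(epochs):
        g0 = sum((w0 + w1 * x - y) for x, y in data) / n
        g1 = sum((w0 + w1 * x - y) * x for x, y in data) / n
        w0, w1 = w0 - lr * g0, w1 - lr * g1
    return (w0, w1), n

def federated_average(updates):
    """Server step: average node weights, weighted by local sample count."""
    total = sum(n for _, n in updates)
    return tuple(sum(w[i] * n for w, n in updates) / total for i in range(2))

def train(nodes, rounds=50):
    global_w = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(global_w, data) for data in nodes]
        global_w = federated_average(updates)
    return global_w

# Hypothetical edge nodes, each observing a different range of the same relationship.
nodes = [
    [(0, 1), (1, 3), (2, 5)],
    [(2, 5), (3, 7), (4, 9)],
    [(1, 3), (3, 7)],
]
global_weights = train(nodes)
print([round(w, 3) for w in global_weights])
```

Each node sees only part of the input range, yet the averaged model recovers the shared relationship without any raw sample being sent to the server.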

2.4. Edge Computing and Real-Time Analysis

The convergence of edge computing and data science has revolutionized network monitoring and real-time analysis in 5G networks. As 5G networks grow more complex, with ever-increasing data traffic from applications such as IoT, autonomous vehicles, and ultra-low latency services, traditional centralized data processing methods become inefficient. Edge computing addresses this issue by processing data closer to its origin, significantly reducing latency, conserving bandwidth, and enabling faster decision-making. By leveraging edge computing, network operators can perform real-time analytics at the network’s edge, where critical data is generated. This approach allows immediate responses to network anomalies, traffic surges, or security threats without relaying data to a central cloud for processing. The shift to edge computing is particularly crucial for 5G networks, where ultra-reliable low-latency communication (URLLC) services demand near-instantaneous decision-making. For instance, in applications like autonomous driving or remote surgery, even millisecond delays in data transmission can lead to catastrophic consequences.

A notable application of edge computing in 5G is network security. Zhang et al. investigated federated learning frameworks for edge-based network intrusion detection. Their system leverages distributed edge nodes to collaboratively train models without sharing raw data, ensuring privacy while reducing the computational burden on any single node. In this context, federated learning enhances both the scalability and robustness of intrusion detection systems by distributing data processing while preserving privacy through secure aggregation techniques [11]. This decentralized approach is vital for maintaining the confidentiality of sensitive data, particularly in industries like healthcare and finance, where data security is paramount.

While edge computing offers significant advantages, there are also challenges to its widespread adoption. The limited computational power at the edge, data synchronization across nodes, and real-time processing requirements present unique technical obstacles. Additionally, as edge nodes operate in diverse environments, the risk of heterogeneity in data quality and model consistency increases. Despite these hurdles, edge computing remains a powerful tool for optimizing network performance, reducing latency, and enabling real-time analysis, making it indispensable for the next generation of telecom networks.
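To make the idea of analyzing data where it is generated more concrete, the following sketch shows an edge node that keeps a running baseline and forwards only alerts upstream. The class name `EdgeMonitor`, the exponentially weighted moving average, and the 50% relative-deviation tolerance are all assumptions chosen for illustration.

```python
class EdgeMonitor:
    """Hypothetical edge-side monitor: raw readings stay local, only alerts leave."""

    def __init__(self, alpha=0.2, tolerance=0.5):
        self.alpha = alpha          # smoothing factor of the moving average
        self.tolerance = tolerance  # allowed relative deviation from the baseline
        self.baseline = None

    def observe(self, value):
        """Return an alert dict if the reading deviates from the baseline, else None."""
        if self.baseline is None:
            self.baseline = value
            return None
        deviation = abs(value - self.baseline) / max(abs(self.baseline), 1e-9)
        if deviation > self.tolerance:
            # Outliers are reported but not folded into the baseline.
            return {"value": value, "baseline": self.baseline}
        self.baseline = self.alpha * value + (1 - self.alpha) * self.baseline
        return None

monitor = EdgeMonitor()
readings = [100, 102, 98, 101, 250, 99, 100]  # e.g. throughput samples in Mbit/s
alerts = []
for reading in readings:
    alert = monitor.observe(reading)
    if alert is not None:
        alerts.append(alert)  # only this would be sent to the central system
print(len(alerts), alerts[0]["value"])
```

Seven readings are processed locally and a single alert is produced, which is the bandwidth and latency argument for edge analytics in miniature.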

2.5. Network Function Virtualization (NFV) and Predictive Analytics

As networks transition towards software-defined and virtualized infrastructures, Network Function Virtualization (NFV) has emerged as a critical technology for managing complex environments. Data science and ML techniques are instrumental in optimizing NFV, helping operators manage virtualized network functions (VNFs) more efficiently. Xie et al. introduced an ML-based framework for performance prediction within NFV environments, leveraging techniques such as random forests and support vector machines (SVMs). By analyzing historical performance data and workload characteristics, their approach enables accurate forecasting of VNF performance, facilitating better resource allocation and dynamic scaling decisions [12]. This predictive capability ensures network resources are used efficiently, reducing costs while maintaining high-quality service.

Despite these advancements, scaling such techniques across large telecom networks introduces significant challenges. Issues like inconsistent data quality, the difficulty of interpreting models in real time, and the need for low-latency processing remain barriers to broader adoption. Boutaba et al. conducted a comprehensive study identifying these challenges and proposed potential solutions, emphasizing the need for scalable, transparent, and reliable ML models to address the dynamic demands of network management [3].

As NFV continues to reshape telecommunications, its success will increasingly depend on integrating robust ML algorithms. By moving network monitoring from a reactive model to a proactive, predictive one, NFV enhances operational efficiency and reduces downtime. However, this shift requires continuous collaboration between data scientists, network engineers, and industry stakeholders to fully unlock the potential of these technologies in managing the rapidly evolving telecom landscape.
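How a performance forecast can feed a dynamic scaling decision is sketched below. A naive trend extrapolation stands in for the random forest and SVM predictors mentioned above, and the load figures, per-instance capacity, and 20% headroom are hypothetical values.

```python
import math

def forecast_next(load_history, window=3):
    """Naive forecast: last value plus the average of the most recent changes."""
    if len(load_history) < 2:
        return load_history[-1]
    recent = load_history[-(window + 1):]
    deltas = [b - a for a, b in zip(recent, recent[1:])]
    return recent[-1] + sum(deltas) / len(deltas)

def instances_needed(predicted_load, capacity_per_instance, headroom=0.2):
    """Number of VNF instances required to serve the predicted load with headroom."""
    return max(1, math.ceil(predicted_load * (1 + headroom) / capacity_per_instance))

# Hypothetical requests per second handled by a virtual firewall over four intervals.
history = [400, 450, 520, 600]
predicted = forecast_next(history)
instances = instances_needed(predicted, capacity_per_instance=250)
print(round(predicted, 1), instances)
```

Scaling on the predicted load rather than the current one lets the orchestrator start new instances before demand arrives, which is the practical benefit of prediction in this setting.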

3. Predictive maintenance using data science and ML

The telecommunications industry faces constant pressure to maintain optimal performance and minimize downtime. Predictive maintenance, driven by data science and ML, has become a crucial approach to addressing these challenges by forecasting and preventing equipment failures before they occur. This section, supported by recent research and case studies, explores the methodologies, benefits, and challenges of implementing predictive maintenance in telecom networks.

Predictive maintenance leverages historical and real-time data to forecast potential failures in network components. ML techniques, including regression models, decision trees, neural networks, and ensemble methods, are pivotal in analyzing data patterns and predicting equipment failures. Regression models, such as linear regression, are often employed for their simplicity and effectiveness in modeling linear relationships between variables. For instance, linear regression models can predict the remaining useful life (RUL) of network components based on historical performance data, providing a straightforward yet practical approach to failure prediction [13]. Decision trees and random forests are well-suited for handling non-linear relationships and interactions among various factors, making them ideal for complex network environments. Decision trees offer a clear visual representation of decision rules, while random forests, an ensemble of decision trees, improve prediction accuracy by aggregating results from multiple trees to mitigate overfitting and enhance robustness [14]. Deep learning techniques, especially recurrent neural networks (RNNs) and long short-term memory (LSTM) networks, have shown substantial promise in predictive maintenance due to their ability to capture sequential data and temporal dependencies. RNNs and LSTMs process time-series data from network sensors, learning patterns over time to predict future failures with high accuracy [15]. Ensemble methods, which combine multiple ML models, further enhance prediction accuracy and robustness by leveraging the strengths of various algorithms. For example, ensemble methods can integrate outputs from regression models and neural networks to produce more reliable predictions in dynamic telecom environments [16].

Implementing predictive maintenance in telecom networks offers numerous benefits. One significant advantage is reduced downtime, achieved by scheduling maintenance activities during low-traffic periods based on predictive insights. This proactive approach minimizes service disruptions and enhances customer satisfaction [17]. Predictive maintenance also leads to significant cost savings by optimizing maintenance schedules and reducing the frequency of emergency repairs. A study by Kumar et al. demonstrated that predictive maintenance reduced maintenance costs by 30% and increased equipment lifespan by 20% in a large-scale telecom network [18]. Furthermore, addressing issues before they cause critical failures enhances overall network reliability [17].

Despite its benefits, implementing predictive maintenance in telecom networks presents several challenges. Data quality and availability are crucial issues, as predictive models require large volumes of high-quality data for accurate predictions. Inconsistent or incomplete data can undermine the reliability of predictive maintenance, leading to suboptimal performance. Integrating predictive maintenance systems with existing network management frameworks also poses challenges. Telecom networks are highly complex, with numerous interconnected components and subsystems. Seamlessly incorporating predictive maintenance solutions into these systems requires meticulous planning and coordination to ensure uninterrupted data flow and system operation [19]. Another challenge is the interpretability of ML predictions. Understanding the reasoning behind predictive models is crucial for building trust among telecom operators. Complex models, such as deep learning algorithms, often function as “black boxes,” making their outputs difficult to interpret. Tools like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) can enhance model interpretability by identifying the most influential features in predictions, thereby providing clearer insights into the decision-making process [20].
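The use of linear regression to estimate remaining useful life can be sketched as follows. The health indicator, its measurements, and the failure threshold are hypothetical; the sketch assumes degradation is approximately linear in operating time, which real components may not satisfy.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return slope, my - slope * mx

def remaining_useful_life(hours, health, failure_threshold):
    """Extrapolate the fitted trend to the failure threshold; None if no decline."""
    slope, intercept = fit_line(hours, health)
    if slope >= 0:
        return None  # no degradation trend to extrapolate
    hours_at_failure = (failure_threshold - intercept) / slope
    return max(0.0, hours_at_failure - hours[-1])

# Hypothetical health indicator (1.0 = new) sampled every 100 operating hours.
hours = [0, 100, 200, 300, 400]
health = [1.00, 0.95, 0.90, 0.85, 0.80]
rul = remaining_useful_life(hours, health, failure_threshold=0.5)
print(round(rul))  # operating hours left before the threshold is crossed
```

With this data the indicator drops 0.05 per 100 hours, so the 0.5 threshold is reached at 1000 hours, leaving roughly 600 hours from the last measurement.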

4. Anomaly detection in telecom networks

Anomaly detection is crucial for managing telecom networks, as it helps uphold the reliability, security, and efficiency of communication systems. As telecom networks become increasingly intricate, integrating advanced data science and ML techniques is essential for effective anomaly detection. This section, supported by recent research, explores the methodologies, benefits, and challenges associated with anomaly detection in telecom networks.

Anomaly detection methodologies in telecom networks draw on a range of techniques, from classical methods to advanced deep learning algorithms. Classical methods such as k-means clustering and DBSCAN are frequently employed to detect outliers by grouping similar data points and identifying those that deviate significantly from typical patterns [21]. These techniques effectively detect spatial anomalies within network data, where outliers can indicate potential issues or intrusions. Given the temporal nature of telecom data, time-series analysis plays a critical role in anomaly detection. Techniques such as ARIMA (AutoRegressive Integrated Moving Average) and STL (Seasonal-Trend decomposition using Loess) are commonly used to forecast network behavior and identify deviations from expected patterns [22]. More sophisticated methods, including recurrent neural networks (RNNs) and long short-term memory (LSTM) networks, offer superior accuracy by capturing temporal dependencies and complex patterns in sequential data [23]. RNNs and LSTMs are particularly adept at learning from historical data sequences to predict future anomalies, enhancing the detection of subtle, evolving threats. Ensemble learning techniques represent another promising direction for anomaly detection. Ensemble methods enhance robustness and accuracy by combining multiple models, such as decision trees, random forests, and gradient-boosting machines. This approach leverages the strength of individual models while mitigating their weaknesses, resulting in more reliable anomaly detection in telecom networks [24]. Ensemble models can integrate outputs from various algorithms to provide a comprehensive view of network health, improving detection capabilities.

The benefits of effective anomaly detection in telecom networks are substantial. Foremost among these is the prevention of network failures and service disruptions. Early identification and mitigation of anomalies help avoid costly downtime, ensure continuous service availability, and enhance customer satisfaction [4]. Additionally, anomaly detection plays a critical role in network security by identifying unusual patterns that may signify cyber-attacks or unauthorized access. ML models can recognize diverse attack signatures, enabling timely intervention and mitigation [25]. This capability is increasingly essential as telecom networks become more complex and interconnected with other critical infrastructures. Anomaly detection also contributes to efficient network resource utilization. Operators can optimize resource allocation by monitoring performance and identifying inefficiencies, leading to significant cost reductions and improved network capacity management.

Despite these advancements, several challenges remain. The high dimensionality and heterogeneity of data pose significant hurdles, as networks generate diverse data types, including logs, traffic data, and performance metrics, which complicates integration and analysis. Data labeling presents another challenge: supervised ML models require labeled data for effective training. Labeling network data, particularly anomalies, is time-consuming and often requires expert knowledge. While semi-supervised and unsupervised learning approaches are being explored to address this issue, they still face limitations in accuracy and reliability [26]. Model interpretability is also a critical factor. Telecom operators must understand the reasoning behind model decisions to take appropriate actions. However, complex models, especially deep learning algorithms, often function as black boxes, making their decisions difficult to interpret. As deep learning technologies evolve, there is growing concern about the implications of general intelligence in anomaly detection, which adds to the complexity. Developing explainable AI techniques to provide insights into model decisions remains an active area of research [27]. The evolving nature of telecom networks presents a continuous challenge for anomaly detection. As networks expand and integrate new technologies, the baseline for normal behavior shifts, requiring ML models to adapt and update regularly. This dynamic environment necessitates a flexible and scalable approach to anomaly detection to keep pace with the changes and ensure effective monitoring.
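The time-series approach of comparing observations against an expected seasonal pattern can be illustrated with a much simpler stand-in for ARIMA or STL: a per-position median baseline with a robust residual test. The traffic values, the period of four samples per day, and the cutoff `k` are assumptions made for the example.

```python
from statistics import median

def seasonal_baseline(series, period):
    """Median value observed at each position within the seasonal cycle."""
    return [median(series[p::period]) for p in range(period)]

def seasonal_anomalies(series, period, k=5.0):
    """Flag indices whose residual exceeds k median absolute deviations."""
    baseline = seasonal_baseline(series, period)
    residuals = [v - baseline[i % period] for i, v in enumerate(series)]
    center = median(residuals)
    mad = median([abs(r - center) for r in residuals]) or 1e-9
    return [i for i, r in enumerate(residuals) if abs(r - center) / mad > k]

# Hypothetical traffic volume, four samples per day over four days.
traffic = [
    10, 50, 80, 30,
    12, 52, 79, 31,
    11, 49, 150, 29,   # the third sample of day 3 is a spike
    10, 51, 81, 30,
]
print(seasonal_anomalies(traffic, period=4))
```

The value 80 is normal at the daily peak but would be anomalous at night, which is why the residual against the seasonal baseline, rather than the raw value, is tested. Medians are used so that the spike itself does not distort the baseline.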

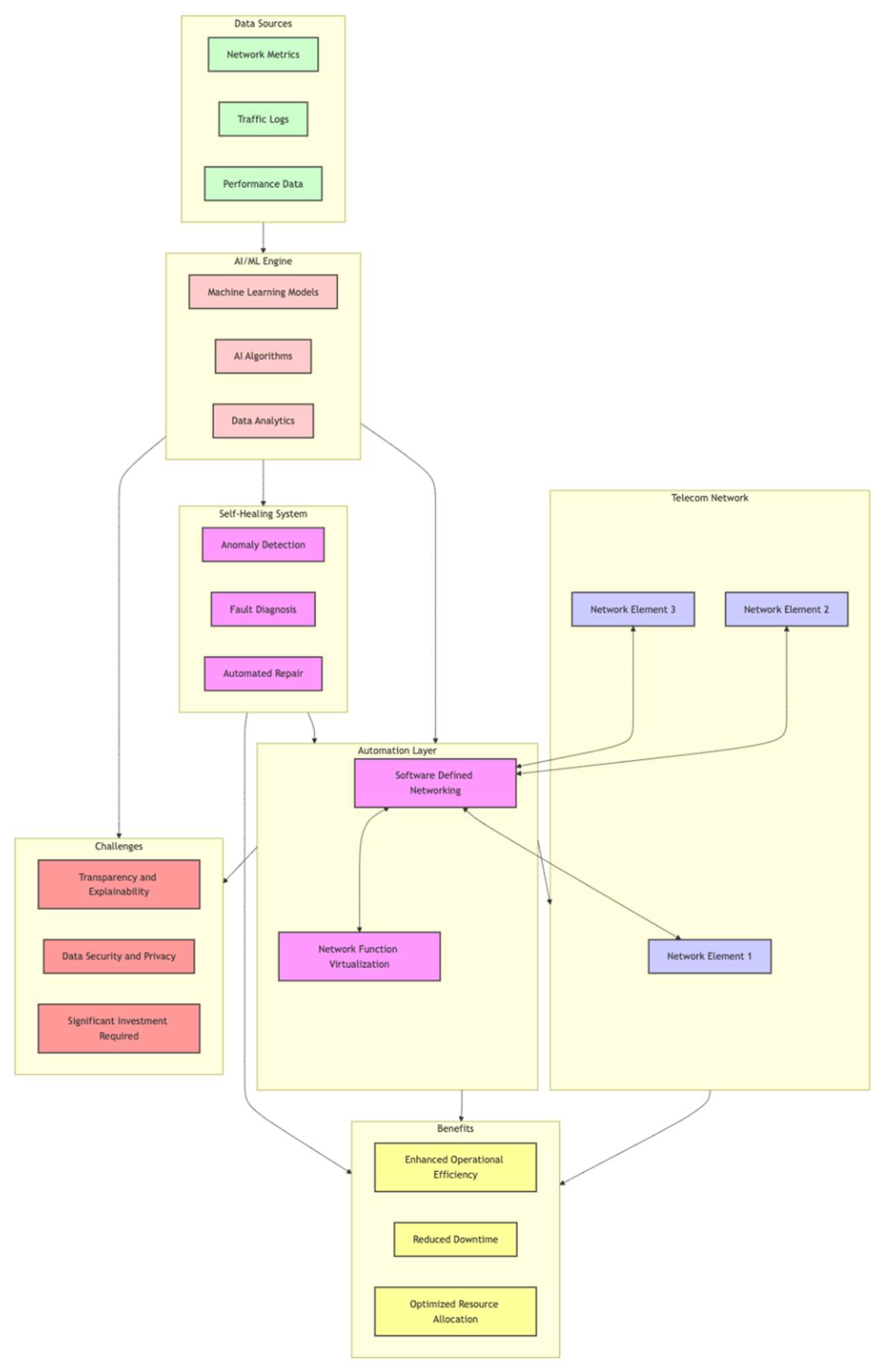

5. Automated Network Configuration and Self-Healing Mechanisms

In telecommunication networks, the shift towards automated network configuration and self-healing mechanisms has been pivotal in enhancing operational efficiency, reducing downtime, and optimizing resource allocation. These technologies leverage AI and ML to automate routine tasks and address network anomalies proactively, thereby minimizing human intervention and mitigating the risk of errors (see Figure 1). This section explores the critical aspects of automated network configuration and self-healing mechanisms, emphasizing their significance in modern telecom networks.

The surge in data traffic, driven by IoT devices and the advent of 5G, has further strained network resources, necessitating more efficient management strategies. Automated network configuration addresses these challenges by enabling networks to configure themselves dynamically in response to changing conditions. For example, automated configuration mechanisms can adjust bandwidth allocation, prioritize traffic flows, and reroute data paths based on real-time network conditions. This ability is essential for 5G networks, where services like enhanced mobile broadband, massive machine-type communications, and ultra-reliable low-latency communications require customized and flexible network configurations [28]. These mechanisms ensure optimal performance and resource utilization by employing algorithms that optimize network parameters on the fly.

Self-healing mechanisms take automation further by enabling networks to detect, diagnose, and repair faults autonomously. Advanced AI and ML algorithms analyze network data to identify anomalies and execute corrective actions without human intervention. For instance, self-healing systems can automatically reconfigure network elements, reroute traffic, or deploy additional resources to mitigate the impact of faults. This proactive strategy is crucial for ensuring the network’s reliability, especially in mission-critical applications where even brief outages can have significant consequences. Research has demonstrated that AI-driven self-healing solutions can substantially reduce network downtime and improve service quality [29]. By integrating predictive maintenance, these systems can identify patterns indicative of potential failures and address them before they result in outages, thereby enhancing network reliability and customer satisfaction.

Automated network configuration and self-healing mechanisms are being implemented in telecom networks globally. Technologies like SDN and NFV have streamlined the automation of network functions, allowing for more agile and adaptable network management [30]. SDN separates the network control plane from the data plane, allowing for centralized control and dynamic reconfiguration of network resources. NFV virtualizes network functions, which can then be managed and orchestrated through software, providing greater flexibility and efficiency. AI-driven self-healing systems have been successfully deployed in mobile networks to enhance automation and reduce operational costs. One study demonstrated that integrating AI for self-healing improved network performance and reduced manual intervention by over 50% [31]. This significant reduction in manual oversight is advantageous in managing extensive networks where manual monitoring and maintenance are impractical. Leading telecom operators have deployed self-healing networks that use AI to monitor network health and make real-time adjustments to maintain optimal performance. These systems have effectively reduced network downtime and improved customer experience by ensuring consistent service delivery [12].

Despite these benefits, several challenges must be addressed in implementing automated network configuration and self-healing mechanisms. One primary concern is the need for transparency and explainability in AI-driven systems. Operators must ensure that these systems operate reliably and that their decision-making processes are understandable to human operators. This is crucial in scenarios where automated decisions, such as rerouting critical traffic or initiating emergency responses, could have significant consequences. Preserving data privacy and security is also vital. Automated systems must handle sensitive data carefully, adhering to privacy regulations and preventing security breaches. Achieving a balance between automation and confidentiality requires careful design and implementation of AI-driven systems [13]. Additionally, integrating these advanced technologies necessitates significant investment in infrastructure and training. Operators must upgrade their networks to support automation and ensure their workforce possesses the skills to manage and oversee these systems effectively. As these technologies mature, the telecom industry will likely see increased adoption of fully autonomous networks, where AI-driven systems handle most, if not all, network management tasks. This shift towards greater automation promises to revolutionize how telecom networks are managed, delivering enhanced efficiency, reliability, and performance.
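The detect, diagnose, and repair cycle of a self-healing mechanism can be sketched on a toy topology. The node names, the link health scores, and the 0.5 health cutoff are hypothetical, and rerouting by breadth-first search is only one of the corrective actions mentioned above.

```python
from collections import deque

def shortest_path(links, src, dst):
    """Breadth-first search over the given links; returns a node list or None."""
    graph = {}
    for a, b in links:
        graph.setdefault(a, []).append(b)
        graph.setdefault(b, []).append(a)
    queue, parent = deque([src]), {src: None}
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nxt in graph.get(node, []):
            if nxt not in parent:
                parent[nxt] = node
                queue.append(nxt)
    return None

def self_heal(links, link_health, src, dst, min_health=0.5):
    """Detect degraded links, exclude them, and recompute the route."""
    failed = [l for l in links if link_health.get(l, 1.0) < min_health]  # detect and diagnose
    healthy = [l for l in links if l not in failed]
    return failed, shortest_path(healthy, src, dst)                      # repair by rerouting

# Hypothetical topology with a short path A-B-D and a longer backup A-C-E-D.
links = [("A", "B"), ("B", "D"), ("A", "C"), ("C", "E"), ("E", "D")]
original_route = shortest_path(links, "A", "D")
failed, new_route = self_heal(links, {("B", "D"): 0.1}, "A", "D")
print(original_route, failed, new_route)
```

In an SDN deployment the recomputed route would be pushed to the data plane by the controller; here the function only returns it, and the transparency concern raised above corresponds to also reporting which links were excluded and why.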

Figure 1. End-to-end flow of the self-healing mechanism and its benefits.


6. Challenges and future directions

The future of predictive maintenance in telecom networks will increasingly rely on integrating emerging technologies such as edge computing, federated learning, and quantum computing. These advancements can address existing challenges and significantly enhance predictive maintenance capabilities.

Edge Computing is a transformative technology that enables real-time data processing at the network’s edge, close to where data is generated. By minimizing the distance that data must travel, edge computing can lower latency and improve the responsiveness of predictive maintenance systems [32]. For instance, processing sensor data directly at the edge allows immediate anomaly detection and failure prediction, leading to faster and more efficient maintenance actions. This localized processing is particularly beneficial in large-scale telecom networks, where low latency is critical for maintaining service quality and network reliability.

Federated Learning offers a promising approach to overcoming the privacy concerns associated with predictive maintenance. This technique enables ML models to be trained on decentralized data sources without requiring entities to share raw data [33]. In telecom networks, federated learning allows multiple operators to collaboratively train predictive models on their own data while preserving the confidentiality of sensitive information. This collaborative method can yield more resilient and better-generalized models, improving the accuracy of failure predictions and maintenance recommendations while addressing data privacy concerns.

Quantum Computing has the potential to transform predictive maintenance by solving certain complex optimization problems more efficiently than classical computing methods [34]. Quantum algorithms may eventually enhance the performance of predictive models by analyzing large volumes of data and detecting patterns faster than is currently possible. For example, quantum-enhanced optimization algorithms could improve the accuracy and efficiency of predictive maintenance scheduling, allowing for more precise and proactive maintenance strategies. As quantum computing technology advances, it could provide substantial benefits in handling high-dimensional data and intricate patterns in telecom networks.

Despite these advancements, several challenges remain. Data Quality continues to be a significant concern, as predictive models require large volumes of high-quality data to make accurate predictions. Inconsistent or incomplete data can undermine the effectiveness of predictive maintenance systems.
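To make the federated learning idea above more concrete, the following is a minimal sketch of federated averaging, one common way to train a shared model without pooling raw data. It is an illustration under stated assumptions, not the method of any cited work: the three operators, their synthetic data, the linear model, and all function names (`local_update`, `federated_average`, `run_federated_training`) are hypothetical.

```python
"""Federated averaging sketch for a shared failure-prediction model.

All names and data are hypothetical and for illustration only.
"""
import random


def local_update(weights, samples, lr=0.1, epochs=20):
    """Run gradient descent on one operator's private data.

    The model is linear: y_hat = w0 + w1 * x. Only the updated weights
    are returned; the raw samples never leave this function.
    """
    w0, w1 = weights
    n = len(samples)
    for _ in range(epochs):
        g0 = g1 = 0.0
        for x, y in samples:
            err = (w0 + w1 * x) - y
            g0 += err
            g1 += err * x
        w0 -= lr * g0 / n
        w1 -= lr * g1 / n
    return (w0, w1)


def federated_average(updates):
    """Average client weights, weighting each client by its sample count."""
    total = sum(n for _, n in updates)
    w0 = sum(w[0] * n for w, n in updates) / total
    w1 = sum(w[1] * n for w, n in updates) / total
    return (w0, w1)


def make_operator_data(rng, n, lo, hi):
    """Synthetic (load, degradation) pairs following y = 1 + 2x plus noise."""
    xs = [rng.uniform(lo, hi) for _ in range(n)]
    return [(x, 1.0 + 2.0 * x + rng.gauss(0, 0.05)) for x in xs]


def run_federated_training(rounds=200, seed=0):
    """Simulate three operators that share weights but never raw data."""
    rng = random.Random(seed)
    operators = [
        make_operator_data(rng, 40, 0.0, 0.5),
        make_operator_data(rng, 60, 0.3, 1.0),
        make_operator_data(rng, 25, 0.0, 1.0),
    ]
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        # Each operator trains locally, starting from the current global model.
        updates = [(local_update(global_weights, data), len(data))
                   for data in operators]
        # The coordinator sees only weights and sample counts.
        global_weights = federated_average(updates)
    return global_weights


if __name__ == "__main__":
    print(run_federated_training())
```

In this sketch the coordinator receives only model weights and sample counts, which is the property that makes the approach attractive when operators cannot share raw network data. A production system would typically add secure aggregation and handle clients that drop out, both of which are omitted here.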

Additionally, System Integration poses challenges in seamlessly incorporating predictive maintenance solutions into existing network management frameworks. The complexity of telecom networks calls for careful planning and integration so that predictive maintenance systems function effectively alongside other network management tools. Model Interpretability is another significant challenge. As predictive maintenance models become more complex, particularly with deep learning and quantum computing, it becomes harder to understand how they arrive at their predictions. Making model decisions transparent and understandable to network operators is essential for gaining trust and for acting on maintenance recommendations. Tools and techniques for improving model interpretability, such as explainable AI methods, are therefore central to addressing this challenge.
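As one illustration of what an explainable AI method can look like in practice, the sketch below implements permutation feature importance, a simple model-agnostic technique: shuffle one input feature at a time and measure how much the model's accuracy drops. It is offered as an example only and is not the specific method used in the works cited here. The toy model, the feature names (temperature, packet loss, uptime), and the synthetic data are all assumptions made for the example.

```python
"""Permutation feature importance sketch (model-agnostic explanation).

The model, feature names, and data are hypothetical.
"""
import random


def accuracy(model, rows, labels):
    """Fraction of rows for which the model's prediction matches the label."""
    correct = sum(1 for r, y in zip(rows, labels) if model(r) == y)
    return correct / len(rows)


def permutation_importance(model, rows, labels, n_repeats=10, seed=0):
    """Return the mean accuracy drop when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for col in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            shuffled = [r[col] for r in rows]
            rng.shuffle(shuffled)
            # Rebuild each row with only this one column replaced.
            permuted = [r[:col] + (v,) + r[col + 1:]
                        for r, v in zip(rows, shuffled)]
            drops.append(baseline - accuracy(model, permuted, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


def toy_failure_model(row):
    """Hypothetical black box: predicts failure when temperature is high.

    Feature order: (temperature_c, packet_loss_pct, uptime_days).
    """
    return 1 if row[0] > 70.0 else 0


def make_rows(n=200, seed=1):
    """Synthetic equipment readings labelled by the toy model itself."""
    rng = random.Random(seed)
    rows = [(rng.uniform(40, 100), rng.uniform(0, 5), rng.uniform(0, 365))
            for _ in range(n)]
    labels = [toy_failure_model(r) for r in rows]
    return rows, labels


if __name__ == "__main__":
    rows, labels = make_rows()
    names = ["temperature_c", "packet_loss_pct", "uptime_days"]
    scores = permutation_importance(toy_failure_model, rows, labels)
    for name, score in zip(names, scores):
        print(f"{name}: {score:.3f}")
```

Here the only feature with a non-zero score is temperature, because it is the only input the toy model reads. For a real predictive maintenance model, a ranking of this kind gives operators a first indication of which measurements drive a failure prediction.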

7. Conclusion

Integrating data science and AI in telecommunications network management represents a paradigm shift in how networks are monitored, maintained, and optimized. This paper has explored the significant advancements made in network management through these technologies, focusing on critical areas such as network monitoring, predictive maintenance, anomaly detection, automated network configuration, and self-healing mechanisms.

The application of data science techniques in network monitoring has demonstrated remarkable improvements in handling the vast and complex datasets generated by modern telecom networks. Techniques such as ML-based anomaly detection and advanced traffic classification have proven crucial in maintaining network performance and reliability. Deep learning and ensemble methods have enhanced these capabilities, offering more robust and accurate solutions than traditional approaches. However, challenges such as data quality and model interpretability remain significant, necessitating continued research and development.

Predictive maintenance has emerged as a vital strategy for optimizing network performance and minimizing downtime. By leveraging historical and real-time data, ML models can predict potential failures, enabling proactive maintenance and reducing operational costs. Despite its benefits, predictive maintenance faces challenges such as integrating with existing systems and ensuring data quality. Emerging technologies, including edge computing, federated learning, and quantum computing, hold promise for addressing these challenges and further advancing predictive maintenance capabilities.

Automated network configuration and self-healing mechanisms represent a critical evolution in network management. These technologies reduce the need for manual intervention, enhance operational efficiency, and improve network reliability. Adopting AI-driven self-healing systems and automated configuration methods has significantly improved network performance and reduced costs. However, transparency, explainability, data privacy, and security issues must be addressed to ensure the effective and secure implementation of these technologies.

Looking ahead, the telecom industry must navigate several challenges to realize the potential of AI and data science in network management. Critical areas for future research include improving model interpretability, ensuring data privacy, and integrating advanced technologies such as edge computing and quantum computing. As these technologies continue to evolve, they will likely drive further innovations in network management, enabling more efficient, reliable, and intelligent telecom networks. The transformative impact of AI and data science on network management underscores the need for ongoing research and collaboration between industry and academia. By addressing current challenges and embracing emerging technologies, the telecommunications industry is poised to unlock unprecedented levels of efficiency, reliability, and intelligence in network management.

References

1. Klaine, P.V., et al., A survey of machine learning techniques applied to self-organizing cellular networks. IEEE Communications Surveys & Tutorials, 2017. 19(4): p. 2392-2431.

2. Morocho-Cayamcela, M.E., H. Lee, and W. Lim, Machine learning for 5G/B5G mobile and wireless communications: Potential, limitations, and future directions. IEEE Access, 2019. 7: p. 137184-137206.

3. Boutaba, R., et al., A comprehensive survey on machine learning for networking: evolution, applications and research opportunities. Journal of Internet Services and Applications, 2018. 9(1): p. 1-99.

4. Fernandes, G., et al., A comprehensive survey on network anomaly detection. Telecommunication Systems, 2019. 70: p. 447-489.

5. Lopez-Martin, M., et al., Conditional variational autoencoder for prediction and feature recovery applied to intrusion detection in IoT. Sensors, 2017. 17(9): p. 1967.

6. Pilli, E.S., R.C. Joshi, and R. Niyogi, Network forensic frameworks: Survey and research challenges. Digital Investigation, 2010. 7(1-2): p. 14-27.

7. Kaur, P., et al. Network traffic classification using multiclass classifier. in Advances in Computing and Data Sciences: Second International Conference, ICACDS 2018, Dehradun, India, April 20-21, 2018, Revised Selected Papers, Part I 2. 2018. Springer.

8. Wang, W., et al. End-to-end encrypted traffic classification with one-dimensional convolution neural networks. in 2017 IEEE International Conference on Intelligence and Security Informatics (ISI). 2017. IEEE.

9. Thantharate, A., et al. DeepSlice: A deep learning approach towards an efficient and reliable network slicing in 5G networks. in 2019 IEEE 10th Annual Ubiquitous Computing, Electronics & Mobile Communication Conference (UEMCON). 2019. IEEE.

10. Sharma, H. and N. Kumar, Deep learning based physical layer security for terrestrial communications in 5G and beyond networks: A survey. Physical Communication, 2023. 57: p. 102002.

11. Zhang, C., P. Patras, and H. Haddadi, Deep learning in mobile and wireless networking: A survey. IEEE Communications Surveys & Tutorials, 2019. 21(3): p. 2224-2287.

12. Xie, J., et al., A survey of machine learning techniques applied to software defined networking (SDN): Research issues and challenges. IEEE Communications Surveys & Tutorials, 2018. 21(1): p. 393-430.

13. Schwabacher, M., A survey of data-driven prognostics. Infotech@Aerospace, 2005: p. 7002.

14. Wuest, T., et al., Machine learning in manufacturing: advantages, challenges, and applications. Production & Manufacturing Research, 2016. 4(1): p. 23-45.

15. Zhao, R., et al., Deep learning and its applications to machine health monitoring. Mechanical Systems and Signal Processing, 2019. 115: p. 213-237.

16. Bischl, B., et al., Resampling methods for meta-model validation with recommendations for evolutionary computation. Evolutionary Computation, 2012. 20(2): p. 249-275.

17. Jardine, A.K., D. Lin, and D. Banjevic, A review on machinery diagnostics and prognostics implementing condition-based maintenance. Mechanical Systems and Signal Processing, 2006. 20(7): p. 1483-1510.

18. Carvalho, T.P., et al., A systematic literature review of machine learning methods applied to predictive maintenance. Computers & Industrial Engineering, 2019. 137: p. 106024.

19. Mahmood, T. and K. Munir. Enabling Predictive and Preventive Maintenance using IoT and Big Data in the Telecom Sector. in IoTBDS. 2020.

20. Ribeiro, M.T., S. Singh, and C. Guestrin. “Why should I trust you?” Explaining the predictions of any classifier. in Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. 2016.

21. Esling, P. and C. Agon, Time-series data mining. ACM Computing Surveys (CSUR), 2012. 45(1): p. 1-34.

22. Hyndman, R.J. and G. Athanasopoulos, Forecasting: principles and practice. 2018: OTexts.

23. Malhotra, P., et al. Long short term memory networks for anomaly detection in time series. in ESANN. 2015.

24. Hilas, C.S. and J.N. Sahalos. An application of decision trees for rule extraction towards telecommunications fraud detection. in Knowledge-Based Intelligent Information and Engineering Systems: 11th International Conference, KES 2007, XVII Italian Workshop on Neural Networks, Vietri sul Mare, Italy, September 12-14, 2007. Proceedings, Part II 11. 2007. Springer.

25. Vikram, A. Anomaly detection in network traffic using unsupervised machine learning approach. in 2020 5th International Conference on Communication and Electronics Systems (ICCES). 2020. IEEE.

26. Ruff, L., et al. Deep one-class classification. in International Conference on Machine Learning. 2018. PMLR.

27. Doshi-Velez, F. and B. Kim, Towards a rigorous science of interpretable machine learning. arXiv preprint arXiv:1702.08608, 2017.

28. Asghar, M.Z., F. Ahmed, and J. Hämäläinen. Artificial intelligence enabled self-healing for mobile network automation. in 2021 IEEE Globecom Workshops (GC Wkshps). 2021. IEEE.

29. Johnphill, O., et al., Self-Healing in Cyber–Physical systems using machine learning: A critical analysis of theories and tools. Future Internet, 2023. 15(7): p. 244.

30. Santos, J.P., et al., SELFNET Framework self-healing capabilities for 5G mobile networks. Transactions on Emerging Telecommunications Technologies, 2016. 27(9): p. 1225-1232.

31. Shahane, V., Towards Real-Time Automated Failure Detection and Self-Healing Mechanisms in Cloud Environments: A Comparative Analysis of Existing Systems. Journal of Artificial Intelligence Research and Applications, 2024. 4(1): p. 136-158.

32. Shi, W., et al., Edge computing: Vision and challenges. IEEE Internet of Things Journal, 2016. 3(5): p. 637-646.

33. Yang, Q., et al., Federated machine learning: Concept and applications. ACM Transactions on Intelligent Systems and Technology (TIST), 2019. 10(2): p. 1-19.

34. Preskill, J., Quantum computing in the NISQ era and beyond. Quantum, 2018. 2: p. 79.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).