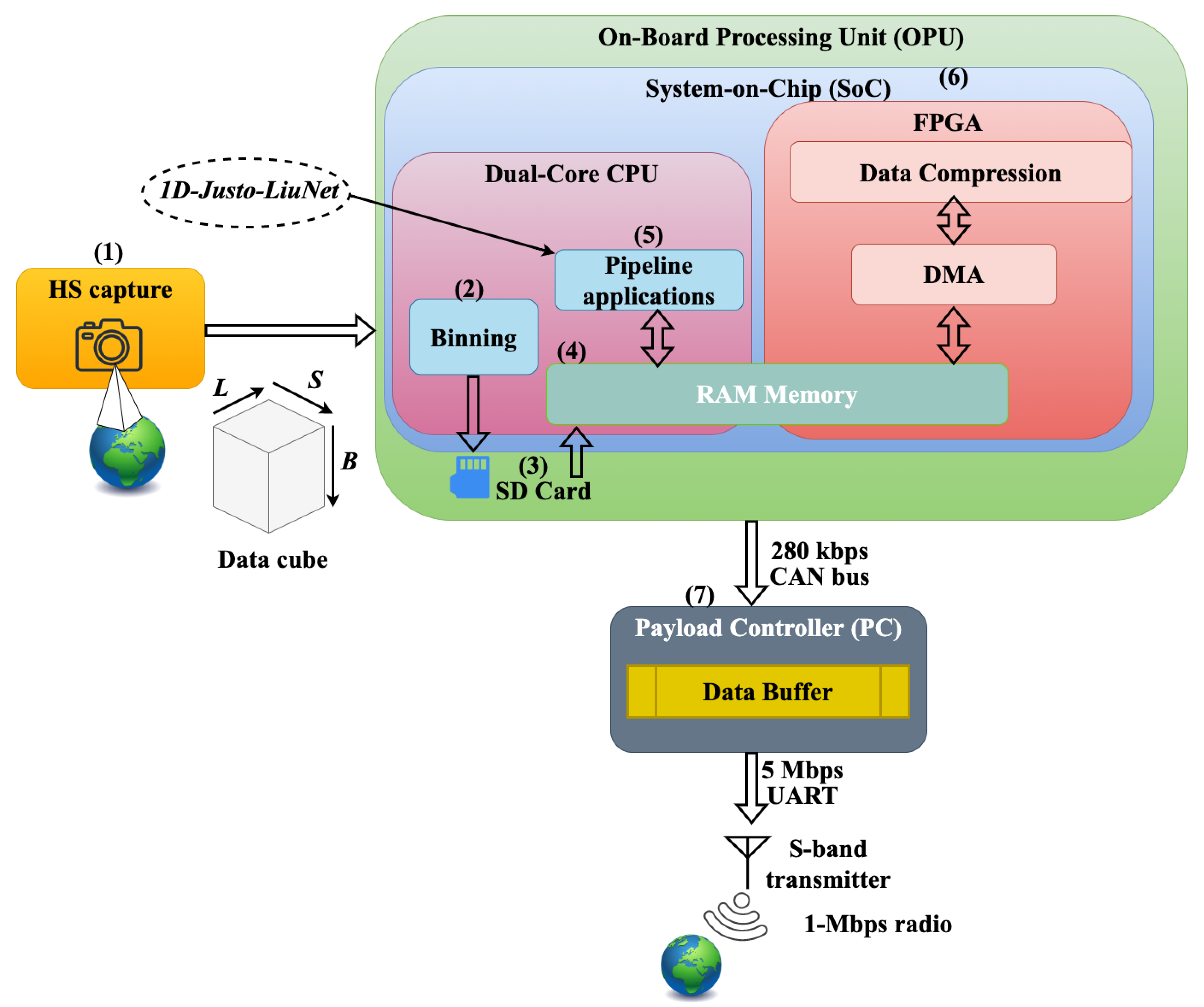

2. System Architecture

Before presenting the implementation of 1D-Justo-LiuNet, we provide an overview of HYPSO-1’s system architecture in flight, where our model is deployed. The satellite’s subsystems are extensively detailed in the current state of the art [7,8,52,53,54]. Thus, we next highlight only the relevant aspects from Langer et al. [55]. We consider that this architecture is representative of current in-orbit processing systems, making our results broadly applicable.

To begin, the processing pipeline includes a hardware stage for data capture, followed by a software stage for data processing. Langer et al. refer to a Minimal On-Board Image Processing (MOBIP) pipeline for the basic processing steps at launch. Post-launch, this pipeline is updated with additional processing during flight [55]. In Figure 1, we provide an overview of the satellite’s system architecture showing only what is most relevant to this work. Namely, in step (1), the HS camera captures an image using a push-broom technique, scanning line frames as the satellite orbits. Subsequent lines form an image with spatial dimensions

, each pixel containing

B bands, resulting in a 3D data cube with dimensions

. The data is serialized as a Band Interleaved by Pixel (BIP) stream and sent to the On-Board Processing Unit (OPU). The OPU, built with Commercial-off-the-Shelf (COTS) components, consists of a Zynq-7030 System-on-Chip (SoC) with a dual-core ARM Cortex-A9 CPU and a Kintex-7 FPGA [56]. As seen in Figure 1, after streaming the data, step (2) involves binning it on the CPU, where pixels are grouped into intervals (bins) and replaced by a representative value [55]. While this reduces resolution, Langer et al. note benefits such as compression and improved SNR. In step (3), the binned data cube is stored on a microSD card. In step (4), the data is loaded into RAM for CPU processing [53]. In step (5), data processing begins in a submodule named pipeline applications in [55]. This is where we integrate 1D-Justo-LiuNet in this work to demonstrate inference at the edge, as further described throughout this article. As opposed to the pipeline described by Langer et al., our pipeline implementation has been modified to increase maintainability and ease of use. Instead of a single C program, the pipeline has been split into individual programs, one for each module, so that they can be executed individually. This reduces time spent in testing and integration. The modules are then run in the pipeline sequence using a Linux shell script.
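To make the BIP ordering and the binning step more concrete, the following minimal sketch indexes a flat BIP stream and bins consecutive values by averaging. The function names, the use of the mean as the representative value, the bin factor, and the choice to bin along the values of one pixel are illustrative assumptions, not the flight implementation.

```python
def bip_offset(line, sample, band, n_samples, n_bands):
    """Index of one value in a flat BIP stream.

    In BIP order, all bands of a pixel are stored contiguously,
    then the next pixel of the same line, then the next line.
    """
    return (line * n_samples + sample) * n_bands + band


def pixel_spectrum(stream, line, sample, n_samples, n_bands):
    """Return the n_bands values of one pixel from a flat BIP stream."""
    start = bip_offset(line, sample, 0, n_samples, n_bands)
    return stream[start:start + n_bands]


def bin_mean(values, factor):
    """Replace each group of `factor` consecutive values by their mean.

    Assumption: trailing values that do not fill a complete bin are dropped.
    """
    n_bins = len(values) // factor
    return [sum(values[i * factor:(i + 1) * factor]) / factor
            for i in range(n_bins)]


# Toy cube (hypothetical sizes): 2 lines x 3 samples x 4 bands, flattened in BIP order.
n_lines, n_samples, n_bands = 2, 3, 4
stream = list(range(n_lines * n_samples * n_bands))

spectrum = pixel_spectrum(stream, line=1, sample=2,
                          n_samples=n_samples, n_bands=n_bands)
binned = bin_mean(spectrum, factor=2)
print(spectrum, binned)
```

Because each pixel's bands are contiguous in BIP order, a per-pixel spectral model can read its input as a single slice of the stream.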

In addition to 1D-Justo-LiuNet, other modules include smile and keystone HS data cube correction characterized in [57], a linear SVM [58], spatial pixel subsampling [55], and an image composition module from three bands of the data cube [53]. Furthermore, the FPGA in (6) supports additional processing, achieving lower latency and power consumption. Through a Direct Memory Access (DMA) module, the FPGA directly accesses RAM, allowing faster memory reads and writes that bypass the CPU. This approach enables the FPGA implementation to perform CCSDS-123v1 lossless compression [59]. The compression ratios vary depending on the content; for example, cloudy images allow for more compression [60]. This can reduce the cube size to approximately 40-80 MB. Additionally, CCSDS-123v2 near-lossless compression has recently been tested in [61] for future nominal operations. On the CPU, the images segmented by 1D-Justo-LiuNet are also compressed, packaged together with the data cube into a single file, and stored on the SD card. At this stage, the OPU enters an idle state to be powered off and save energy [55]. When the satellite has Line-of-Sight (LoS) with a ground station, the payload is powered on to stream the compressed segmented image first, followed by the HS raw data cube, to a Payload Controller’s (PC) buffer in (7), which has a limited capacity of up to two HS data cubes. Data throughput to the PC over a CAN bus is slow (about 280 kbps), but the PC sends the buffered data to the S-band radio transmitter over a UART interface at 5 Mbps, which is higher than the S-band throughput. This ensures that S-band utilization is maximized instead of being limited by the CAN bus. During LoS, the data temporarily stored in the PC’s buffer is transmitted to the ground station through the S-band radio at 1 Mbps throughput [9,62].
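As a rough illustration of why the CAN bus is the bottleneck, the sketch below computes ideal transfer times for the quoted cube sizes over each link. It assumes decimal units (1 MB = 10^6 bytes, 1 kbps = 10^3 bit/s) and ignores protocol overhead, retransmissions, and pass duration, so the figures are lower bounds, not measured values.

```python
def transfer_time_s(size_bytes, rate_bits_per_s):
    """Ideal transfer time in seconds (no overhead, no retransmissions)."""
    return size_bytes * 8 / rate_bits_per_s


MB = 1_000_000  # assumption: decimal megabytes

# Link rates quoted in the text, in bits per second.
LINKS = {
    "CAN bus (OPU to PC)": 280_000,
    "UART (PC to radio)": 5_000_000,
    "S-band (radio to ground)": 1_000_000,
}

for size_mb in (40, 80):
    print(f"Compressed cube of {size_mb} MB:")
    for name, rate in LINKS.items():
        minutes = transfer_time_s(size_mb * MB, rate) / 60
        print(f"  {name}: {minutes:.1f} min")
```

Under these assumptions, a 40 MB cube needs about 19 minutes over the CAN bus but only about 5 minutes over the S-band link, which is consistent with buffering data on the PC so that the radio is not starved by the slower bus.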

To integrate 1D-Justo-LiuNet on the OPU, we store the program along with files such as model parameters, all within a single directory. A bash script then triggers the program to process newly acquired images. In our setup, network execution can be toggled on or off from the ground via scheduling software. To prevent interference with other on-board processes, the scheduling software allocates sufficient time for segmentation and related data handling. Once the segmentation is complete, the output image is packaged into a compressed tarball to reduce its size.

5. Results and Discussion

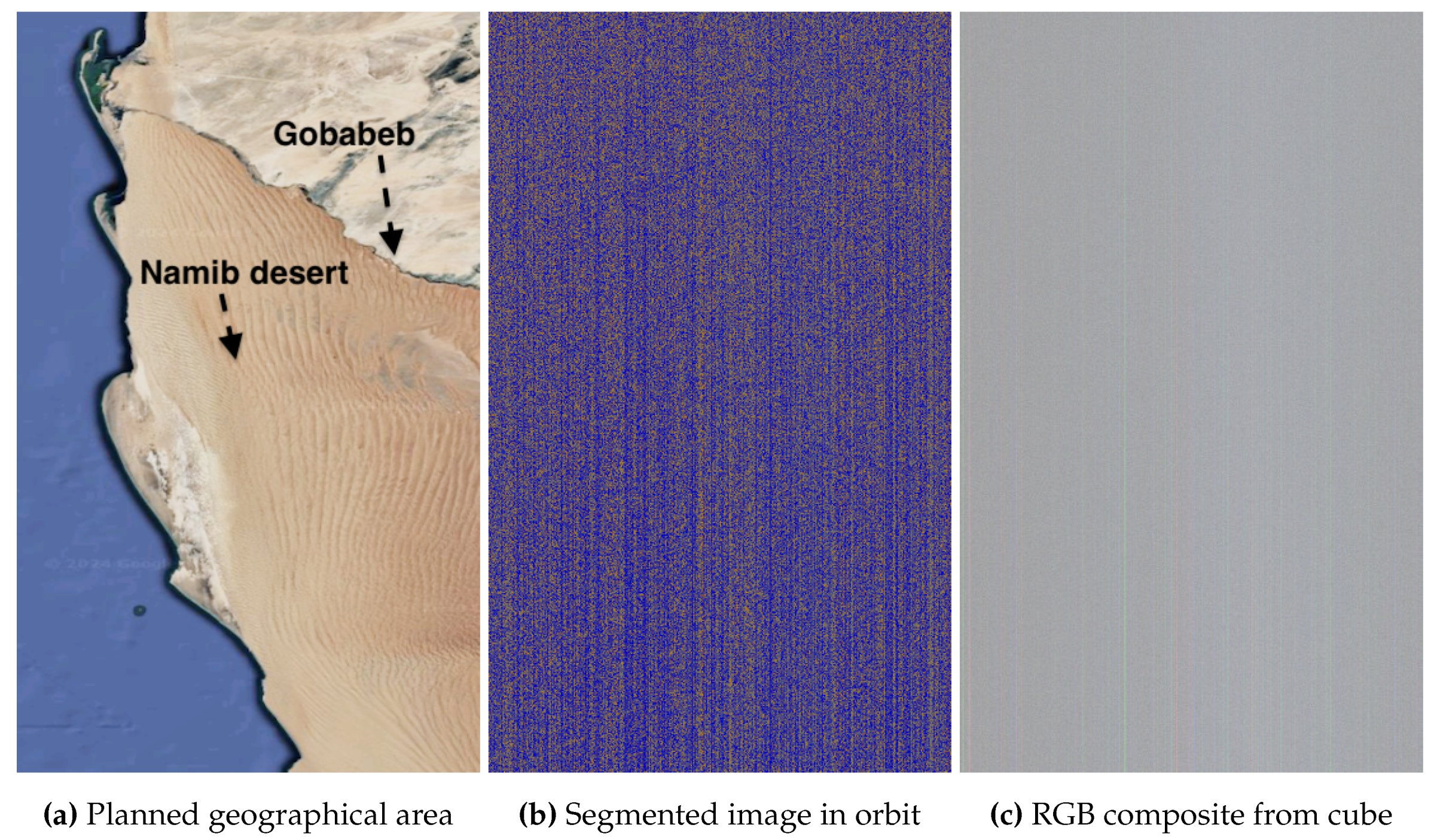

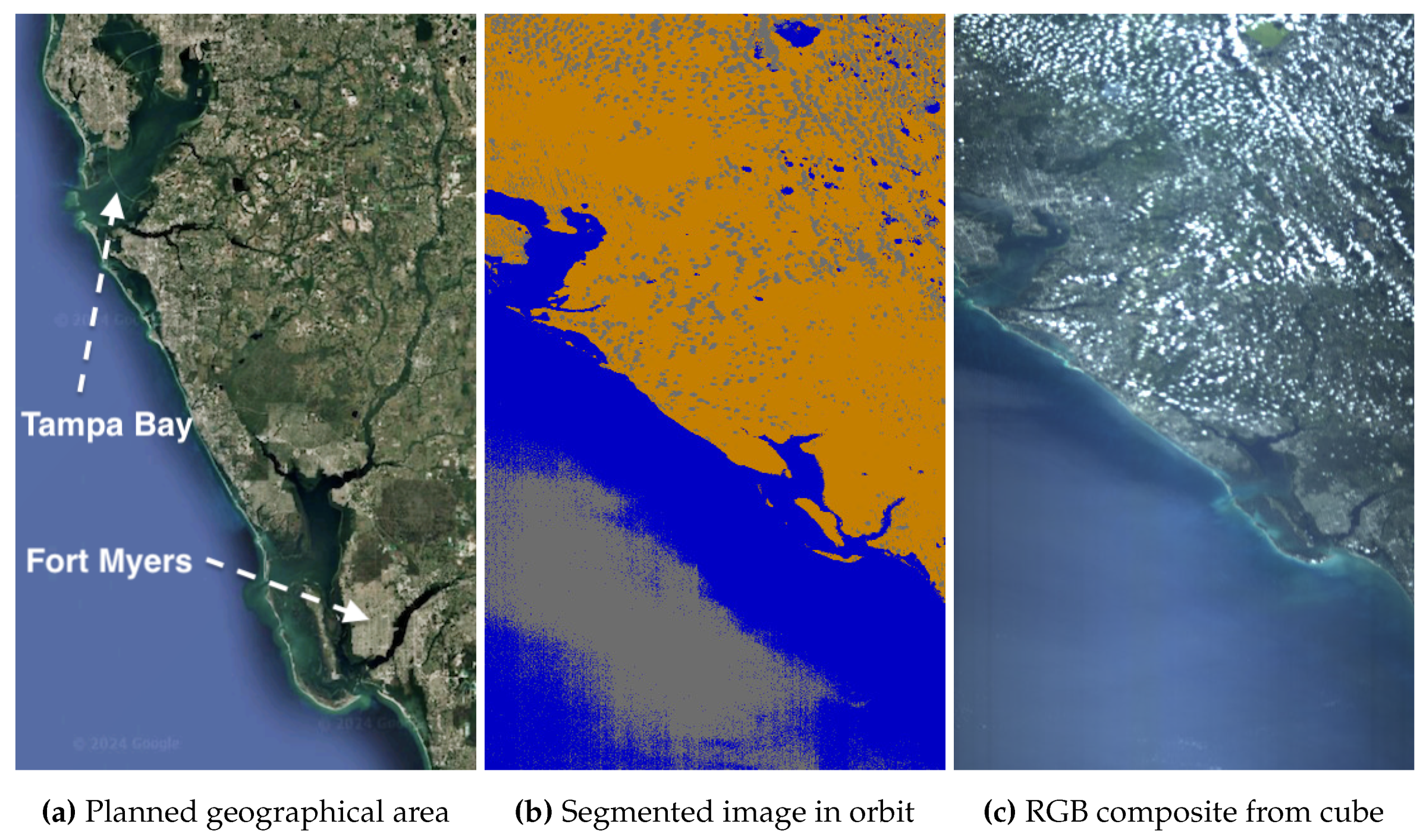

We illustrate segmented images inferred in orbit for various examples to demonstrate segmentation performance in the different scenarios previously described. Most figures primarily include:

A Google map image showing the approximate geographical area planned for imaging with HYPSO-1.

The segmented image, where blue denotes water, orange indicates land, and gray represents clouds or overexposed pixels.

An RGB composite created from the raw HS data cube, using three of the 120 available bands for the Red, Green, and Blue channels (at 603, 564, and 497 nm, respectively).
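The band selection behind the RGB composite can be sketched as a nearest-wavelength lookup. The band-center grid below is hypothetical (120 evenly spaced centers between 400 and 800 nm); the actual sensor calibration is not given here, so the resulting indices are only illustrative.

```python
def nearest_band(wavelengths_nm, target_nm):
    """Index of the band whose center wavelength is closest to target_nm."""
    return min(range(len(wavelengths_nm)),
               key=lambda i: abs(wavelengths_nm[i] - target_nm))


# Hypothetical band centers: 120 evenly spaced values from 400 to 800 nm.
N_BANDS = 120
wavelengths = [400.0 + i * (800.0 - 400.0) / (N_BANDS - 1)
               for i in range(N_BANDS)]

# Target wavelengths for the composite, as stated in the text.
rgb_targets_nm = {"red": 603.0, "green": 564.0, "blue": 497.0}
rgb_indices = {name: nearest_band(wavelengths, nm)
               for name, nm in rgb_targets_nm.items()}
print(rgb_indices)
```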

We include various captures, selected to address issues such as incomplete downlink, satellite mispointing, varying levels of cloud cover and thickness, and overexposure. Additionally, the captures cover a broad range of terrain types:

Arid landscapes and desert regions with different mineral compositions.

Forested areas and urban environments.

Lakes, rivers, lagoons and fjords of different sizes.

Coastlines, islands and oceans.

Waters with colors ranging from cyan to deep blue.

Arctic regions with snow and ice.

We will also present results from a 2D-CNN detecting patterns in the segmented images that indicate satellite mispointing at space instead of Earth, enabling autonomous decision-making to discard unusable data cubes on board. Brief results from AI explainers will be included to justify the model’s decision. Finally, we will provide timing results to identify which model layers may benefit the most from FPGA acceleration. In the supplementary material, segmentation results by 1D-Justo-LiuNet for over 300 images processed in orbit are additionally provided.

5.1. Accuracy: Incomplete Downlink of Data Cubes

We assess segmentation accuracy in orbit via visual inspection, as no ground-truth labels are available for comparison. This subjective approach is common in the literature when analyzing segmentation results in unlabeled satellite imagery [79]. However, metrics such as the Davies-Bouldin Index (DBI) and Silhouette Score [80] may be used to evaluate the quality of segmented images without ground truth. These metrics evaluate segment separation (ideally maximized) and distance between data points within clusters (ideally minimized). Nevertheless, objective quality assessments in digital applications are increasingly challenged, as seen in recent standards like ITU-T P.910 from the International Telecommunication Union [81]. These standards prioritize subjective quality, as objective metrics, like the ones mentioned, can fail to capture user-perceived quality. In this work, relying on objective metrics can be misleading, as they may not capture mission-critical anomalies, making expert inspection more suitable. Since proposing subjective scores is unnecessary and beyond this work’s scope, the evaluation is based on our own expertise with HYPSO-1. We simply compare the RGB composite from the raw data cube with the inferred segmented image.
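For reference, the Davies-Bouldin Index mentioned above can be computed without ground truth as sketched below. This is a plain-Python illustration of the standard definition, not part of our evaluation; the toy points stand in for pixel spectra and the labels for predicted classes.

```python
import math


def centroid(points):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(points)
    return [sum(p[d] for p in points) / n for d in range(len(points[0]))]


def davies_bouldin(points, labels):
    """Davies-Bouldin Index; lower values mean compact, well-separated clusters.

    Requires at least two clusters with distinct centroids.
    """
    clusters = {}
    for point, label in zip(points, labels):
        clusters.setdefault(label, []).append(point)
    keys = sorted(clusters)
    cents = {k: centroid(clusters[k]) for k in keys}
    # Scatter: mean distance of a cluster's points to its centroid.
    scatter = {k: sum(math.dist(p, cents[k]) for p in clusters[k]) / len(clusters[k])
               for k in keys}
    worst_ratios = [
        max((scatter[i] + scatter[j]) / math.dist(cents[i], cents[j])
            for j in keys if j != i)
        for i in keys
    ]
    return sum(worst_ratios) / len(keys)


# Toy 2D example (hypothetical data): two tight groups far apart.
points = [(0.0, 0.0), (0.0, 2.0), (10.0, 0.0), (10.0, 2.0)]
dbi_good = davies_bouldin(points, [0, 0, 1, 1])  # labels follow the groups
dbi_bad = davies_bouldin(points, [0, 1, 0, 1])   # labels cut across the groups
print(dbi_good, dbi_bad)
```

A labeling that follows the natural groups yields a much lower index than one that cuts across them, which is the behavior such metrics rely on.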

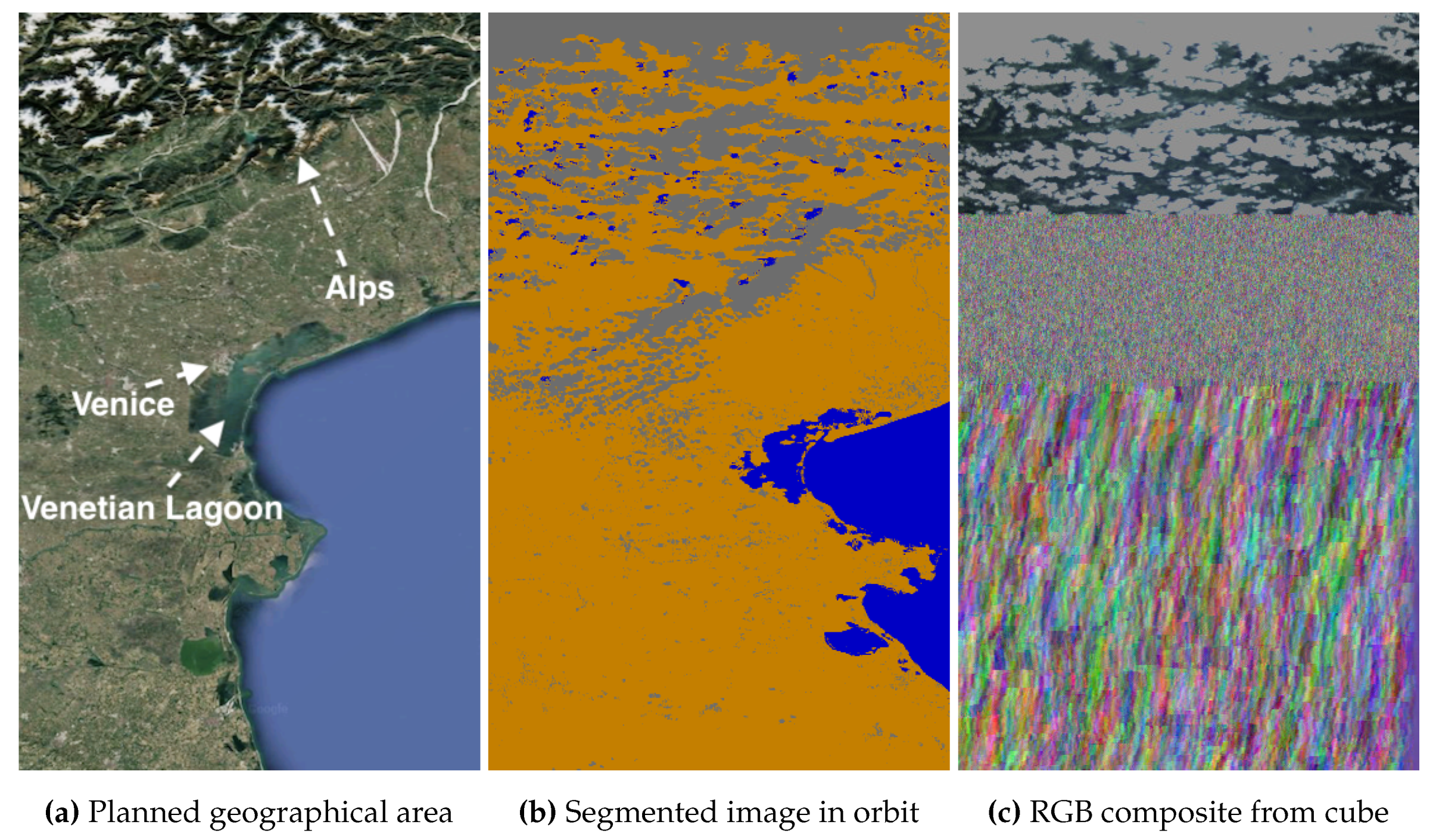

5.1.1. Imagery of Venice

We planned a capture of the Venetian Lagoon, shown in Figure 6 (a), where only the water is of interest. Other regions possibly captured, like the Northern Italian Alps, are incidental and irrelevant here. When comparing the segments in (b) with the map in (a), we confirm by approximate georeferencing that the satellite pointed correctly and captured the intended coordinates around the Venetian Lagoon. However, we do not receive the complete HS data cube, resulting in the decompression artifacts seen in (c). With the proposed algorithms, in-flight segmentation allows us to decide whether to reattempt downlink or to discard the on-board HS data cube and revisit Venice instead. Since the lagoon’s water is segmented as cloud-free, with clouds mainly covering the Alps, we decide to reattempt downlink, thereby reducing latency compared with revisiting the area. This informed decision is clearly better than discarding the cube based on the incorrect assumption that the cloudy pixels at the top of (c) indicate the lagoon is also obscured.

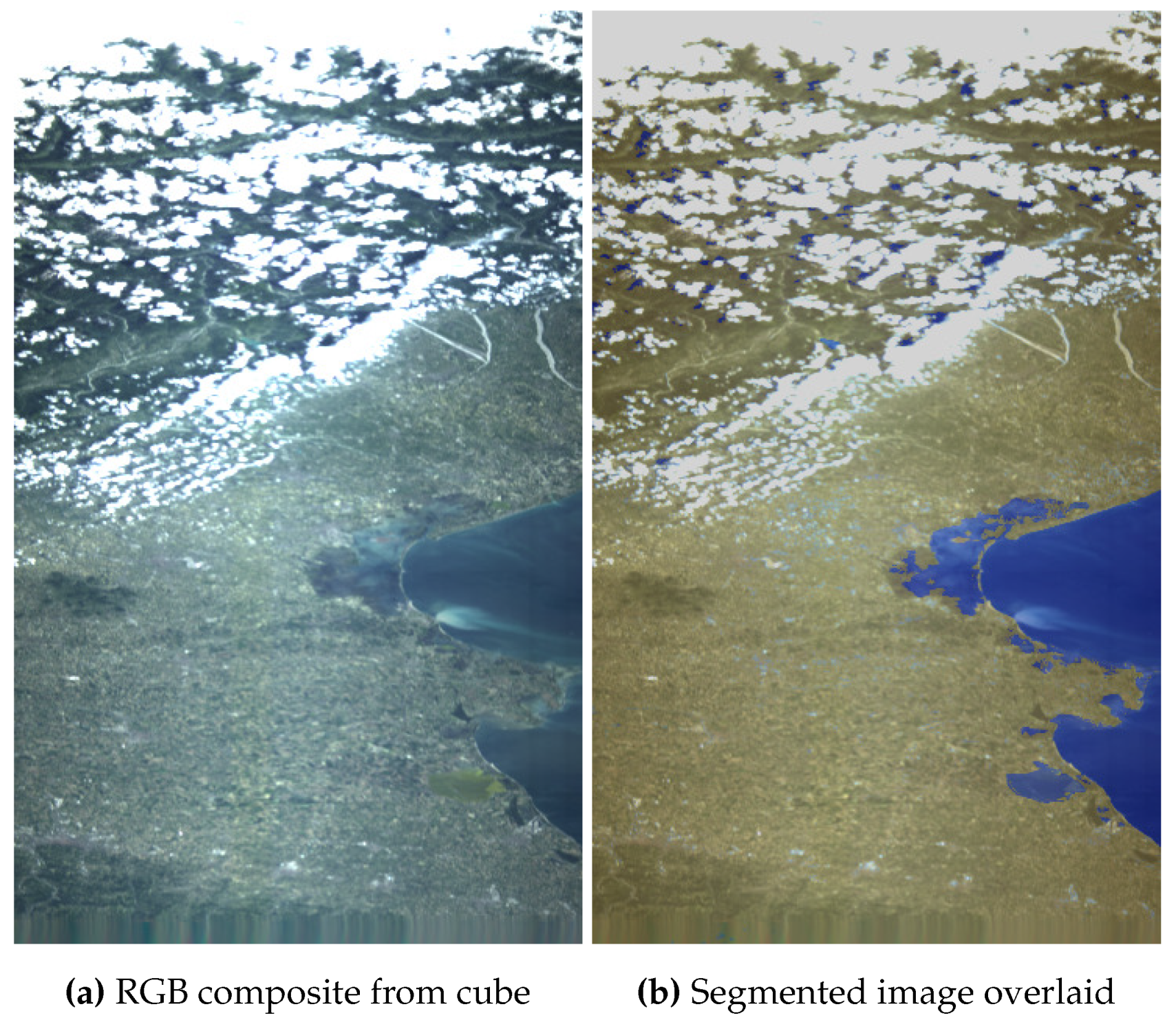

After reattempting downlink, we receive the capture in Figure 7 (a) mostly without downlink issues. For easier comparison, the segmented image is overlaid on the RGB composite in (b). We confirm the satellite correctly pointed to Venice’s area and captured the lagoon cloud-free, with only the Alps in the north affected, validating our decision to reattempt downlink. We consider the segmentation accuracy guiding this operation as acceptable for our application, even if some misclassifications occur. Although large water bodies and coastlines are well-detected, cloud shadows are sometimes mistaken for deep water due to their dark color, and lighter-toned river water is confused with clouds. This suggests that improved model training could reduce these errors. However, the focus of this article is on model inference, not on training.

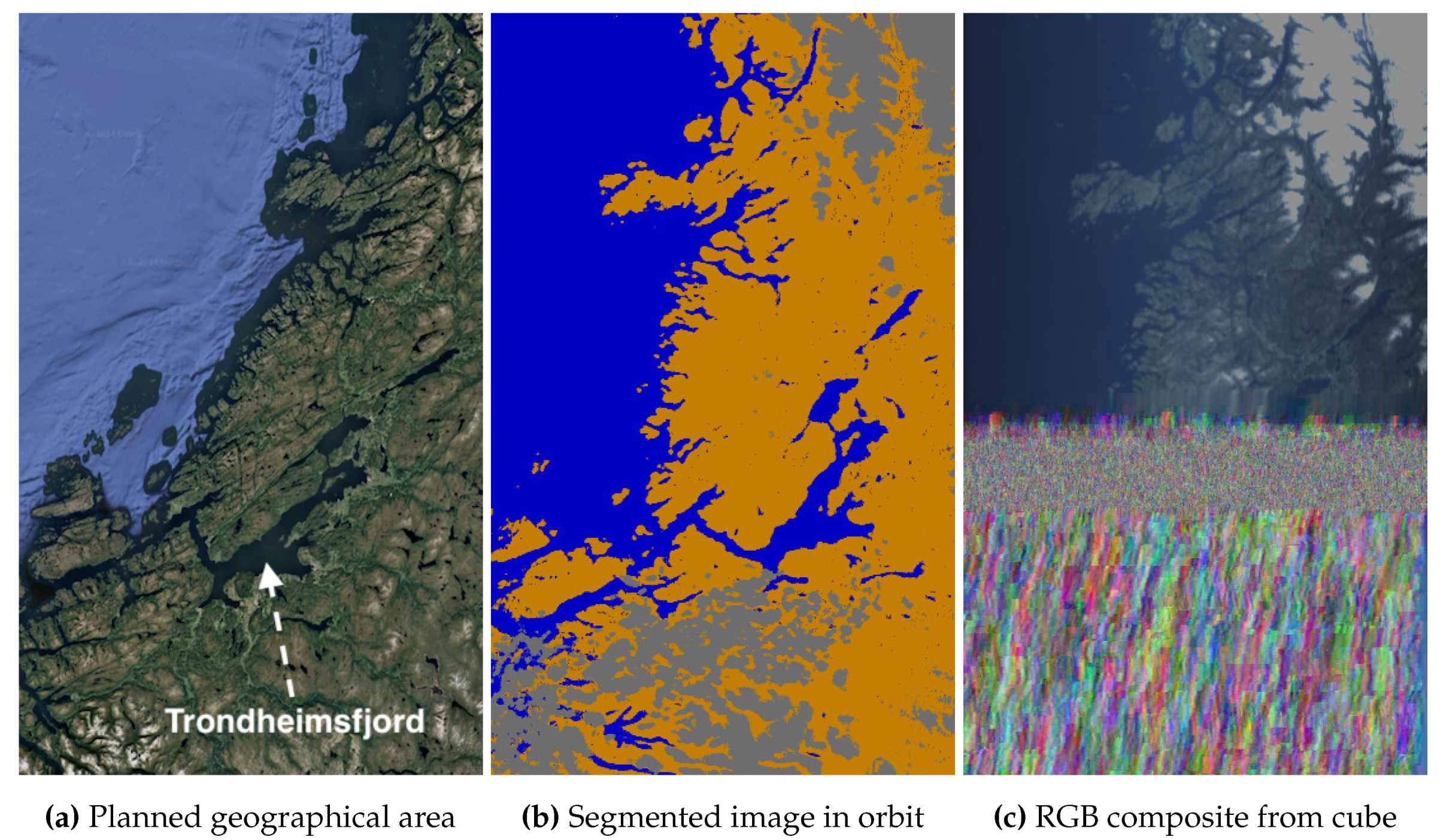

5.1.2. Imagery of Norway

Figure 8 (a) shows our target area around Trondheimsfjord, one of Norway’s longest fjords at over 100 km, surrounded by extensive forests. We aim to capture the fjord and the surrounding forests without significant cloud cover. The segments in (b) confirm that the satellite was correctly oriented toward Trondheim’s area. However, similar to the Venice capture, the data in (c) is affected by artifacts. Despite Norway’s complex and island-dense coastlines, where small islands are often misclassified as water due to stronger ocean reflections overshadowing land scattering, the model still identifies the ocean, land, and clouds at the top of the capture with a precision that meets our operational needs. Although Trondheimsfjord remains cloud-free, substantial cover affects the southern forests. As a result, rather than reattempting downlink, we decide to discard the data cube and plan a revisit for a clearer capture of the southern forests.

5.2. Accuracy: Satellite Mistakenly Pointing at Space

We plan a capture of the Namib desert in Namibia, Africa, shown in Figure 9 (a). The region hosts a station from ESA that is part of RedCalNet (Radiometric Calibration Network), a global network of sites providing publicly available measurements to support data product validation and radiometric calibration. The downlinked segments in (b) exhibit a characteristic striped pattern, typically inferred when the satellite mistakenly points at space instead of the Earth. The RGB composite in (c) confirms an unusable capture, showing stripes in a mostly gray image. If future operations adopt our Ground-Based Segment Inspection approach (see Section 4.2), operators can manually review the downlinked segments and easily spot the mispointing error solely from the segments in (b). While uplinking parameters for new captures, operators can additionally command the satellite to discard the on-board data cube and revisit the area instead, avoiding the high transmission cost of downlinking this unusable cube.

Alternatively, interpreting segments in orbit offers a more autonomous solution (see Section 4.3). For captures mispointing at space, relying on class proportions alone is insufficient, as it does not account for spatial context. Instead, we propose using a 2D-CNN in flight to detect the striped pattern in the segmented image. The CNN would autonomously decide to discard this unusable data cube without human intervention. This serves as an example of how in-orbit segment interpretation can enable autonomous decision-making.

In our previous work [43], we found that the 1D-CNN model 1D-Justo-LiuNet, which focuses solely on spectral context, outperformed 2D-CNNs and ViTs in the HS domain. However, the resulting segmented images have a single-channel value per pixel: 0 for sea, 1 for land, and 2 for clouds. Therefore, applying a 1D-CNN to each pixel is not viable, and while flattening the image for 1D processing is possible, it would fail to capture the strong spatial patterns in the stripes. Detecting such patterns, with significant global spatial features, is better suited to 2D-CNNs. In Figure 6 (b), we showed a segmented image over the Venice area, which is now part of the test set for validating the 2D-CNN. Manual inspection suggested no mispointing error, but we aim for the satellite to infer this autonomously. The 2D-CNN correctly predicts no mispointing (the Earth was not missed) with 100% confidence, as shown in Table 3. The table shows that the network outputs a probability vector, with the first position representing the probability that the segmented image belongs to the no mispointing class (1.00 in this case), and the second position indicating the probability of it belonging to the mispointing class (0.00 in this case). Moreover, Figure 9 (b) shows another segmented image, part of the test set, with a striped pattern indicating a pointing error towards space. Table 3 shows the 2D-CNN correctly classifies it as mispointing with 100% confidence. However, future work should focus on optimizing the 2D-CNN into a lightweight version for in-orbit deployment while expanding its capabilities to detect more patterns (e.g., islands, coastlines, etc.) to increase automation in flight. If the spatial patterns in the segmented images happen to be more complex than expected, we advise conducting training with more advanced techniques like knowledge distillation, transferring knowledge from a larger teacher model (e.g., ViTs [44,82] based on self-attention mechanisms [83]) into a lighter student model that mimics the teacher’s performance.
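The two-element probability vector described above can be turned into an on-board decision as sketched below. The sketch assumes the classifier's last layer produces two raw scores that are normalized with a softmax, and it uses a hypothetical discard threshold; only the ordering of the vector (no mispointing first, mispointing second) is taken from the text.

```python
import math

# Order follows the probability vector described in the text.
CLASSES = ("no mispointing", "mispointing")


def softmax(scores):
    """Numerically stable softmax over a list of raw scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def decide(scores, discard_threshold=0.5):
    """Return (probabilities, predicted label, discard flag).

    discard_threshold is a hypothetical operator-chosen value.
    """
    probs = softmax(scores)
    label = CLASSES[probs.index(max(probs))]
    discard = probs[1] >= discard_threshold
    return probs, label, discard


# Hypothetical raw scores for a capture that clearly shows the Earth.
probs, label, discard = decide([12.0, -12.0])
print([round(p, 2) for p in probs], label, discard)
```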

Figure 9. Namib desert close to Gobabeb, Namibia, Africa, on 25 June 2024 at 08:51 UTC. Coordinates: -23.6° latitude and 15.0° longitude. Exposure time: 20 ms.

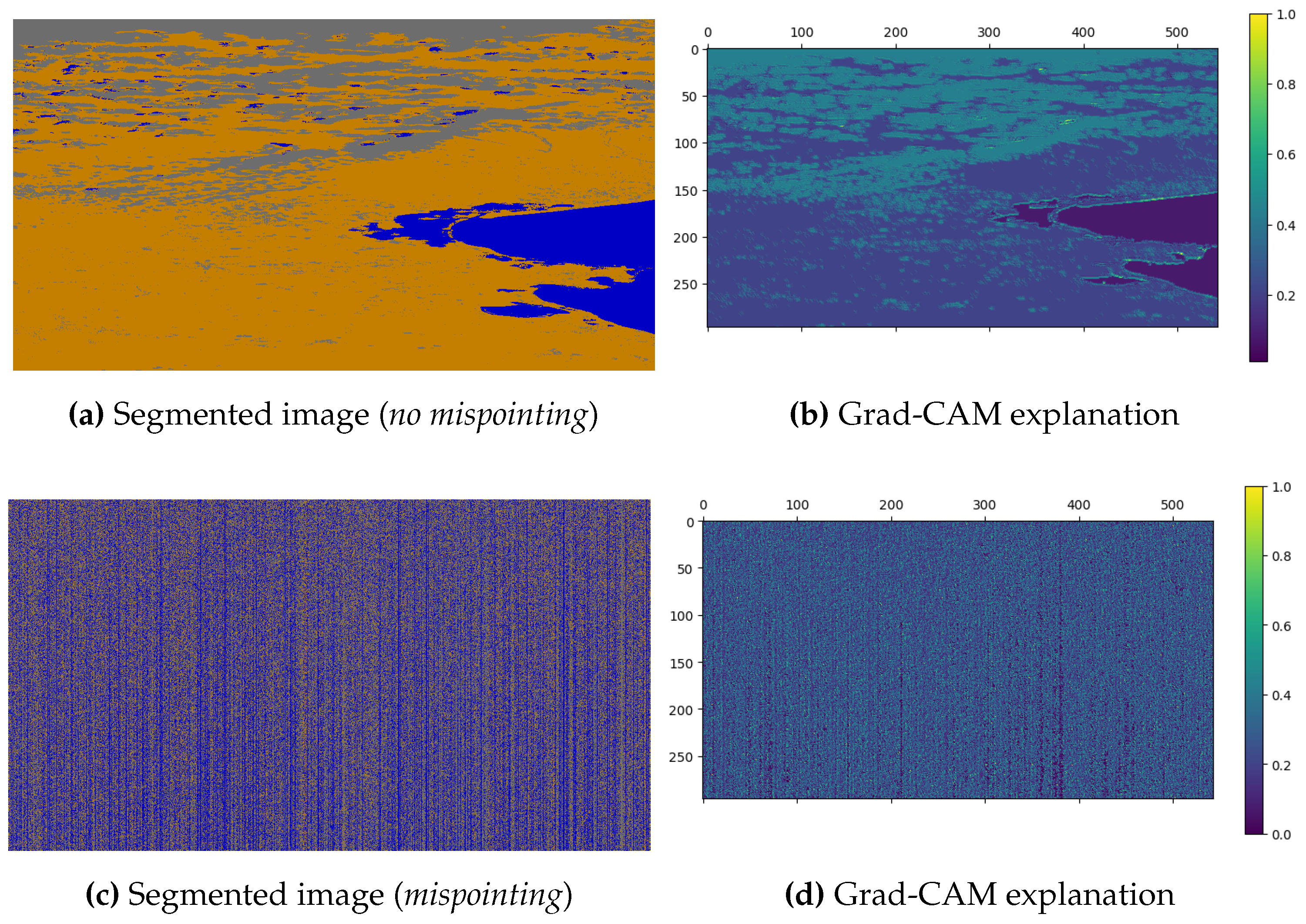

To explain the 2D-CNN model’s predictions, we also use XAI algorithms [68]. In XAI, choosing an adequate explainer is crucial, as some explanations can obscure rather than clarify the reasoning behind a model’s predicted outcome. For our data and 2D-CNN model, initial SHAP tests do not explain the CNN’s predictions intuitively enough, so we focus on Grad-CAM instead, which provides more interpretable explanations in our case and is commonly used for CNNs. Figure 10 (a) shows the segmented image over Venice (rotated in the figure), classified in Table 3 as no mispointing. Figure 10 (b) presents a Grad-CAM heatmap, where lighter colors indicate the pixels that influence the model’s decision the most. Grad-CAM shows that the overexposed cloud pixels, along with the coastline shapes, play the key role in the CNN’s classification as no mispointing, while vegetation and especially water have a minimal impact. Furthermore, Figure 10 (c) shows again the segmented image with a striped pattern, classified in Table 3 as mispointing. Figure 10 (d) presents the Grad-CAM heatmap. Unlike in (b), the heatmap now lacks clear contributing regions, suggesting the model likely relies on the global striped pattern rather than on local features. We further confirm this with additional SHAP tests, where most Shapley scores are around 0, indicating no significant impact from any local region, unlike in (b), where overexposed cloud pixels and coastlines drive the CNN’s decision the most. This example demonstrates how XAI can be applied to CNNs in HYPSO-1 to gain a better understanding of model predictions.
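For readers unfamiliar with Grad-CAM, its core computation is sketched below in plain Python. Each feature map of the last convolution layer is weighted by the spatial mean of the class-score gradient with respect to that map, and the weighted sum is passed through a ReLU. In practice the gradients come from an automatic differentiation framework; here both inputs are small hypothetical arrays supplied by hand.

```python
def grad_cam(feature_maps, gradients):
    """Grad-CAM heatmap from K feature maps and their gradients.

    feature_maps, gradients: lists of K maps, each an H x W nested list.
    Returns an H x W nested list of non-negative importance values.
    """
    height = len(feature_maps[0])
    width = len(feature_maps[0][0])
    # One weight per map: global average of the gradient over all positions.
    weights = [sum(sum(row) for row in grad) / (height * width)
               for grad in gradients]
    # Weighted sum of the maps, followed by ReLU.
    return [[max(0.0, sum(w * fmap[i][j]
                          for w, fmap in zip(weights, feature_maps)))
             for j in range(width)]
            for i in range(height)]


# Hypothetical 2 x 2 example with two feature maps.
maps = [[[1, 0], [0, 2]],
        [[0, 3], [1, 0]]]
grads = [[[1, 1], [1, 1]],       # map 0 supports the class
         [[-1, -1], [-1, -1]]]   # map 1 opposes the class
heatmap = grad_cam(maps, grads)
print(heatmap)
```

Positions where only class-opposing maps are active are clipped to zero, so the heatmap highlights regions that support the predicted class.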

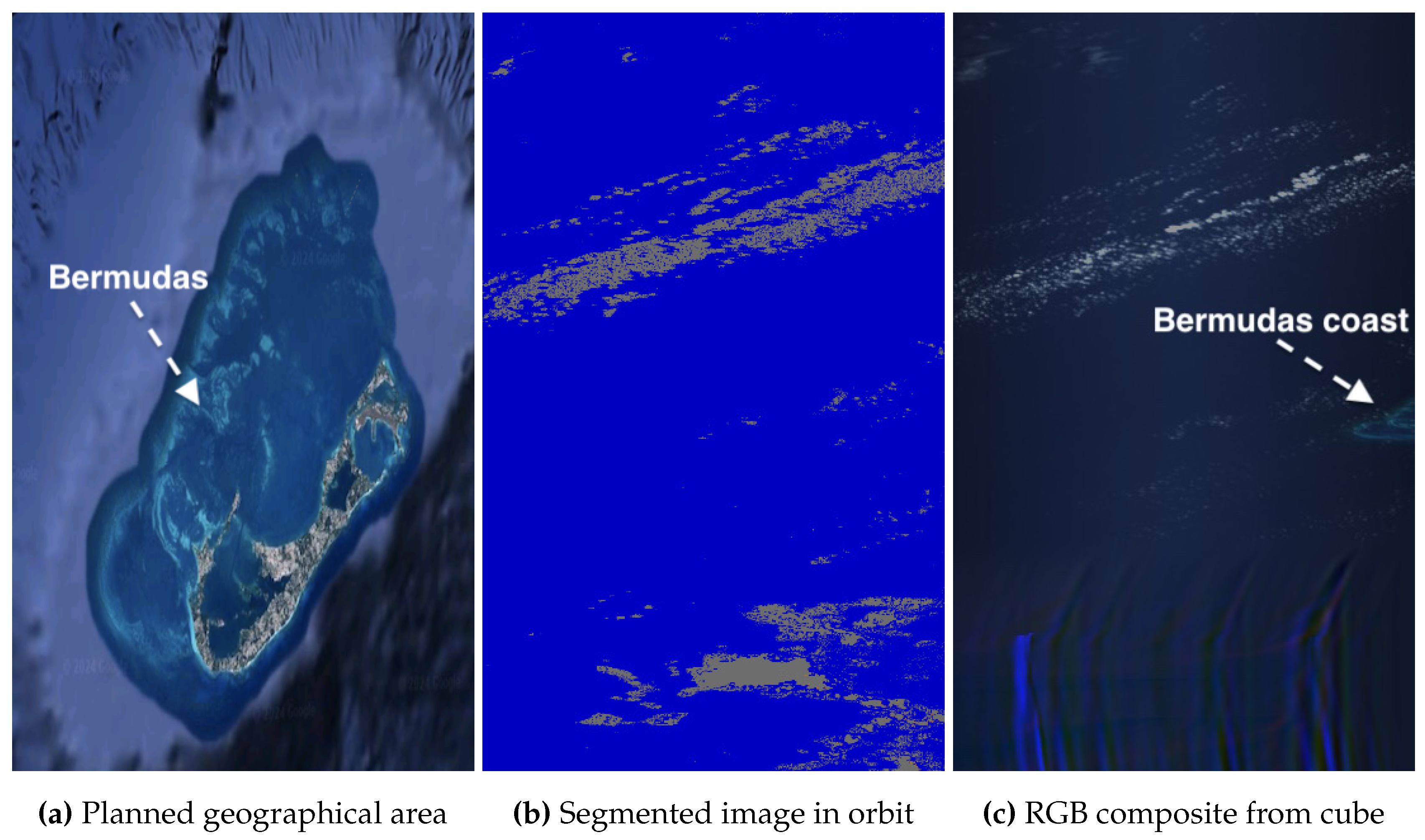

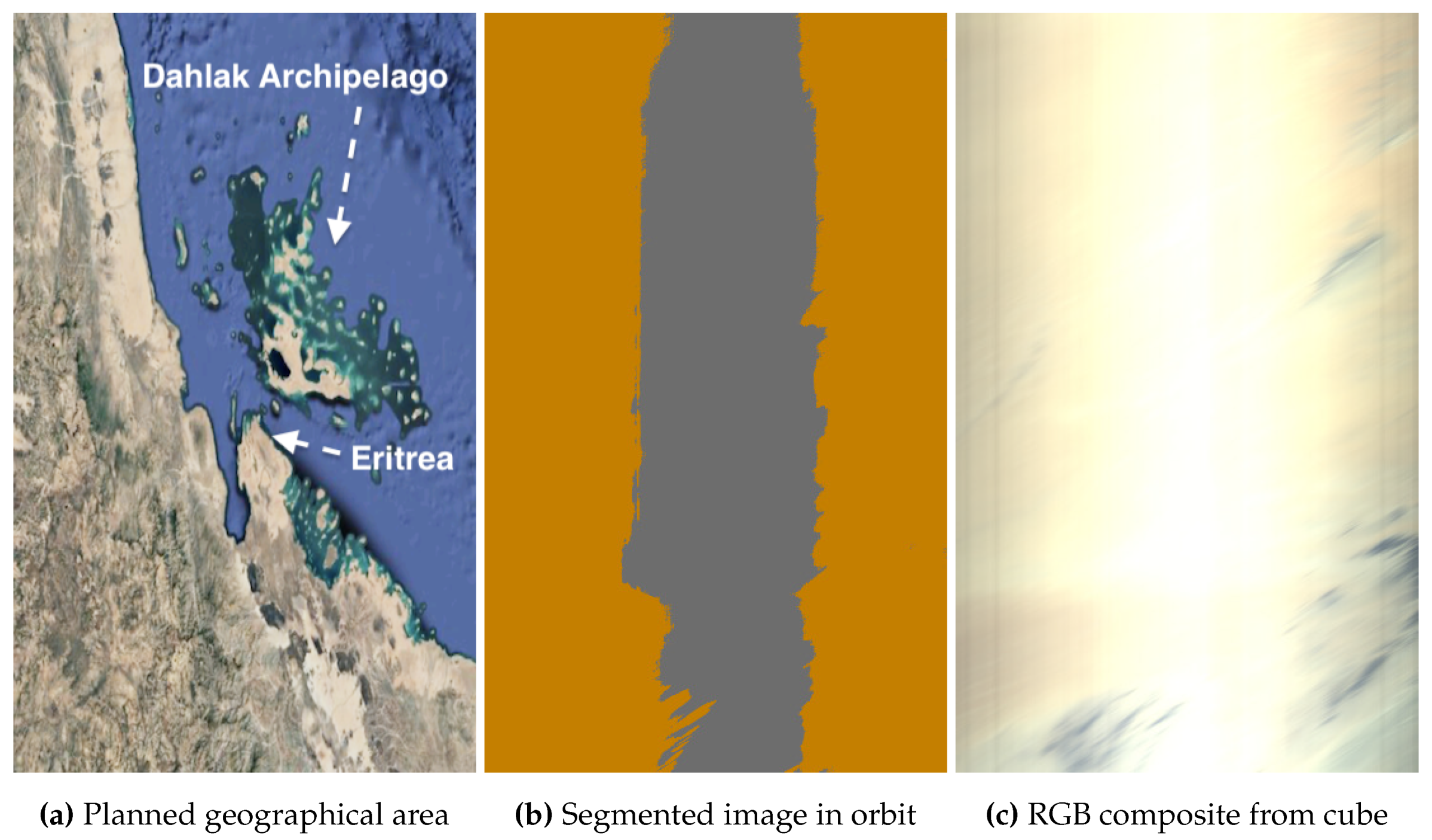

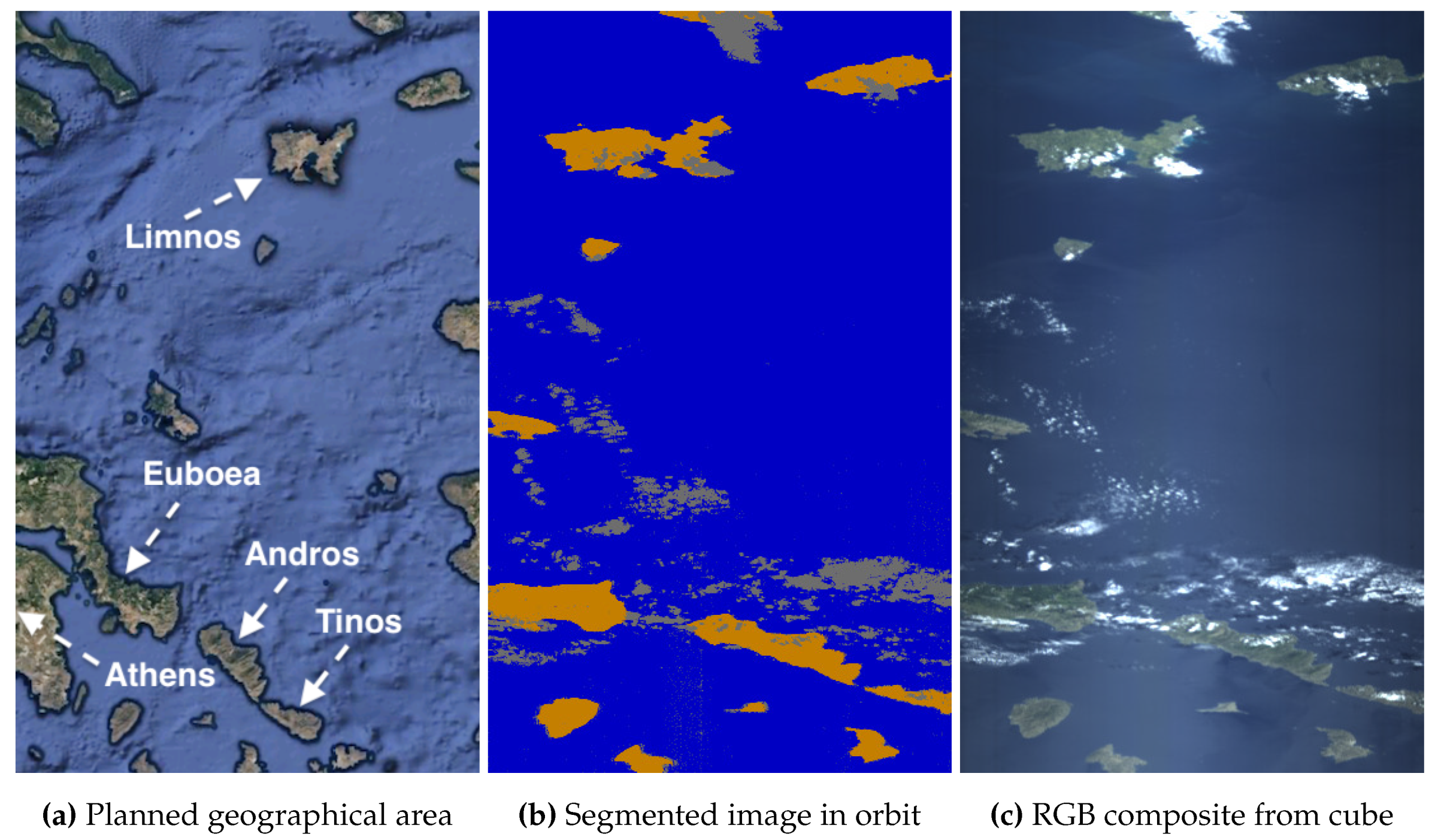

5.3. Accuracy: Satellite’s Inadequate Pointing at Earth’s Surface (Imagery of Bermuda, Greek and Eritrean Archipelagos)

HYPSO-1’s agile operations allow for flexible changes in the satellite’s orientation. However, this can result in the satellite mispointing at space, as previously mentioned, or, despite pointing at Earth, targeting the wrong geographical area and missing the intended targets. For example, in our planned capture of the Bermuda Archipelago in Figure 11 (a), known for its turquoise waters from shallow depths and coral reefs, the downlinked segments in (b) show mostly water with no land detected, indicating the satellite missed the archipelago. Class proportions reveal 92.03% of pixels are water, 7.93% clouds, and only 0.03% land.

Considering Ground-Based Segment Inspection (Section 4.2), operators would determine the satellite imaged deep Atlantic water and clouds, with no land detected, indicating the archipelago was missed. Consequently, the transmission cost of downlinking the data cube would be unnecessary, leading operators to command the satellite to discard the on-board cube and revisit the area. The composite in (c) confirms that, although some turquoise colors, likely from Bermuda’s coast, appear on the right of the capture, the archipelago was indeed missed. Decompression artifacts from incomplete downlink, visible at the bottom of the capture, have been previously discussed.

For in-flight segment interpretation, we can apply our two approaches for On-Board Automated Segment Interpretation (Section 4.3). In this case, where only 0.03% of pixels are segmented as land, interpretation based on the proportion of detected classes is appropriate. During capture planning, in addition to the setup parameters for new captures, the operator could have also uplinked a rough land threshold (e.g., 0.5%, at the operator’s choice) below which the OPU flags the capture as unusable due to minimal land detection. In orbit, the 0.03% land proportion would fall below this threshold, making the OPU discard the capture. However, beyond merely evaluating the proportion of segmented land, a deep 2D-CNN could interpret patterns in the segmented image, such as island shapes and their spatial arrangement surrounded by water with sharp land-water transitions. If an island-like scene is not detected, the OPU may discard the cube. While we previously demonstrated the concept with a 2D-CNN trained to detect stripes when the satellite mispoints at space, future training to identify more complex features in flight, like island shapes and other spatial distributions, could be relevant.
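The proportion-based check described above can be sketched as follows. The label encoding (0 for sea, 1 for land, 2 for clouds) follows Section 5.2; the function names and the dictionary of per-class minimum percentages are hypothetical, standing in for thresholds an operator might uplink.

```python
from collections import Counter

# Label encoding of the segmented image, as given in Section 5.2.
CLASS_NAMES = {0: "sea", 1: "land", 2: "clouds"}


def class_proportions(segmented):
    """Percentage of pixels per class in a 2D list of integer labels."""
    counts = Counter(label for row in segmented for label in row)
    total = sum(counts.values())
    return {name: 100.0 * counts.get(label, 0) / total
            for label, name in CLASS_NAMES.items()}


def is_usable(proportions, minimum_percent):
    """True if every class with a required minimum reaches it."""
    return all(proportions[name] >= minimum
               for name, minimum in minimum_percent.items())


# Toy segmented image (hypothetical): mostly sea, one cloud and one land pixel.
toy = [[0, 0, 0, 0],
       [0, 0, 2, 1]]
toy_proportions = class_proportions(toy)

# Bermuda capture, using the class proportions reported in the text.
bermuda = {"sea": 92.03, "land": 0.03, "clouds": 7.93}
bermuda_usable = is_usable(bermuda, {"land": 0.5})
print(toy_proportions, bermuda_usable)
```

With a 0.5% land minimum, the Bermuda proportions fail the check and the capture would be flagged as unusable, matching the reasoning in the text.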

An additional example of satellite mispointing is given in Figure 12 for the Dahlak Archipelago (Eritrea) in the Red Sea. The segmented image indicates no water is detected. The class proportions are 64.60% land, 35.40% overexposed, and 0.00% water, indicating the satellite did not capture the expected scene of an archipelago surrounded by water. Ground-Based Segment Inspection would reveal the satellite missed the Red Sea around the archipelago, detecting only the arid terrain with high overexposure and no water, leading to operators commanding the satellite to discard the HS data cube and scheduling a revisit. Alternatively, for in-flight segment interpretation, the OPU can easily assess the proportion of detected classes. A minimal water threshold can be set, and if not met, the cube is flagged as unusable. In this case, with 0.00% water pixels, the flagging would be straightforward.

Finally, Figure 13 shows an example of the satellite correctly pointing to the intended area, a Greek Aegean Archipelago near Athens, including islands such as Andros and Limnos. Ground-Based Segment Inspection would note a more reasonable pattern in (b) for an archipelago, with 87.33% water, 6.80% land, and 5.87% clouds. The class proportions, and especially the segmented image shown in (b), suggest the archipelago was captured correctly. As a result, the operators would command the satellite to downlink the cube, deeming the transmission cost worthwhile, as indeed confirmed by the capture received in (c). For in-flight segment interpretation, the OPU could autonomously check that the class proportions are within certain reasonable thresholds and, using a 2D-CNN, evaluate in orbit if the spatial patterns match an island-like scene.

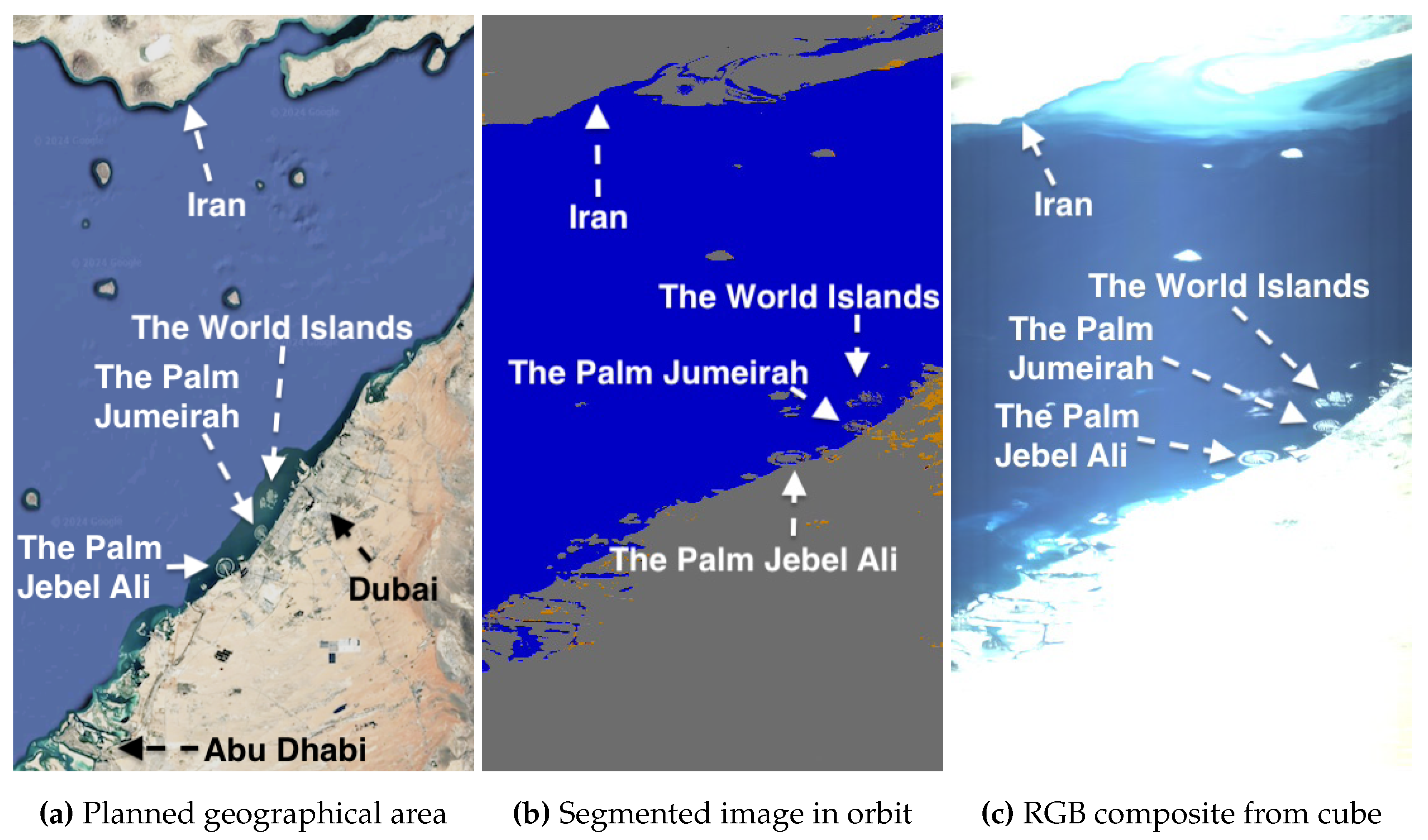

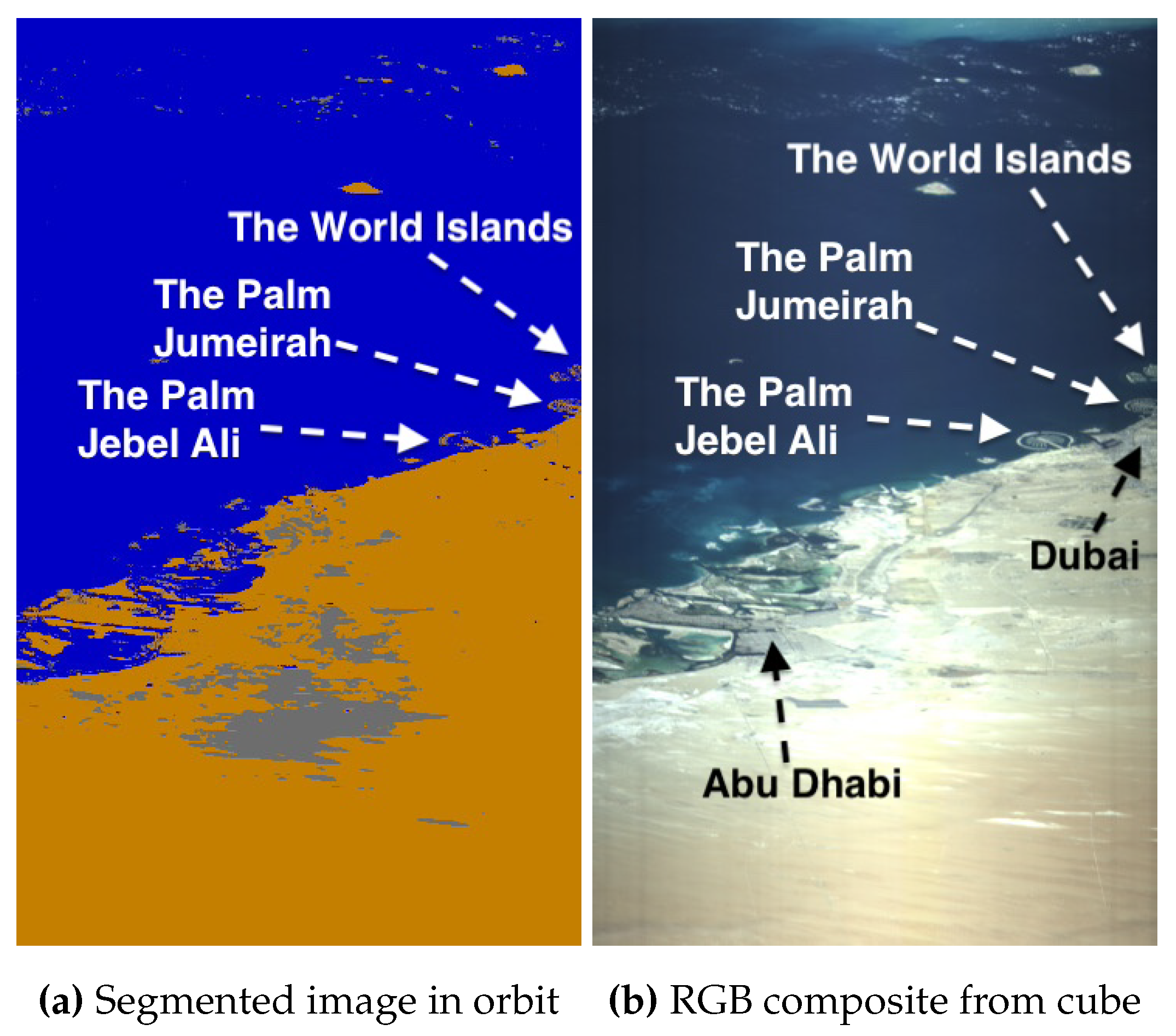

5.4. Accuracy: Captures of Arid and Desert Regions (Imagery of United Arab Emirates, Namibia and Nevada)

Figure 14 and Figure 15 show captures over the United Arab Emirates (UAE), a region of interest for studying desertification and monitoring aeolian processes resulting in sand movement that could bury roads and threaten urban infrastructure in Abu Dhabi and Dubai. Figure 14 (a) shows that sand near Abu Dhabi has a lighter color, while sand further into the desert takes on a reddish tone. This color variation is due to differences in mineral content: reddish sand has more iron oxide, whereas lighter sand contains more silica and less iron oxide. By monitoring the sand colors over time, the potential aeolian transport of red sand toward Abu Dhabi may indicate the movement of iron oxide-rich sands from the desert dunes into the cities.

Desert regions, especially those with higher silica, are generally more prone to overexposure. Indeed, segments in (b) show most land is detected as overexposed along the UAE’s coast (bottom segments) and extending north to Iran’s coast (top segments). Dubai’s Palm Islands (The Palm Jebel Ali and The Palm Jumeirah) and World Islands, artificial structures built into the sea, are visible on the right. They are highly saturated because the excessive light from the islands easily dominates the scattered light from the surrounding water, as confirmed in (c). However, not only the land is overexposed, but also some water. Namely, while the UAE’s coastal water is correctly segmented, the light cyan water along Iran’s coast is overexposed and segmented as such.

After acquiring a new capture with adjusted exposure settings, the segmented image in Figure 15 (a) shows a significant reduction in overexposure. Only the lighter silica-rich sand near Abu Dhabi is overexposed, while the reddish iron oxide-rich sand deeper in the desert is not. Larger islands are well identified, but the Palms and World Islands are partially misclassified as water due to the now stronger scattering from the surrounding larger water body compared to the thin islands. Despite this, water near Abu Dhabi’s coast, including shallow waters with potential confusion with land, is correctly segmented. The cyan water along Iran’s coast (at the top) is no longer overexposed and is correctly segmented as water.

Ground-Based Segment Inspection can confirm the satellite correctly pointed at the UAE region and assess whether the new cube is worth downlinking. However, the OPU would face challenges in autonomously deciding whether to downlink or discard the capture based solely on detected class proportions. In addition, while a 2D-CNN can accurately detect the coastlines, it cannot guarantee with full certainty that the area corresponds to the intended coastline. Therefore, we recommend that future work implement in-orbit georeferencing for better automation in flight. In the following discussion, we omit the analysis of Ground-Based Segment Inspection and On-Board Automated Segment Interpretation, as several examples have already been discussed.

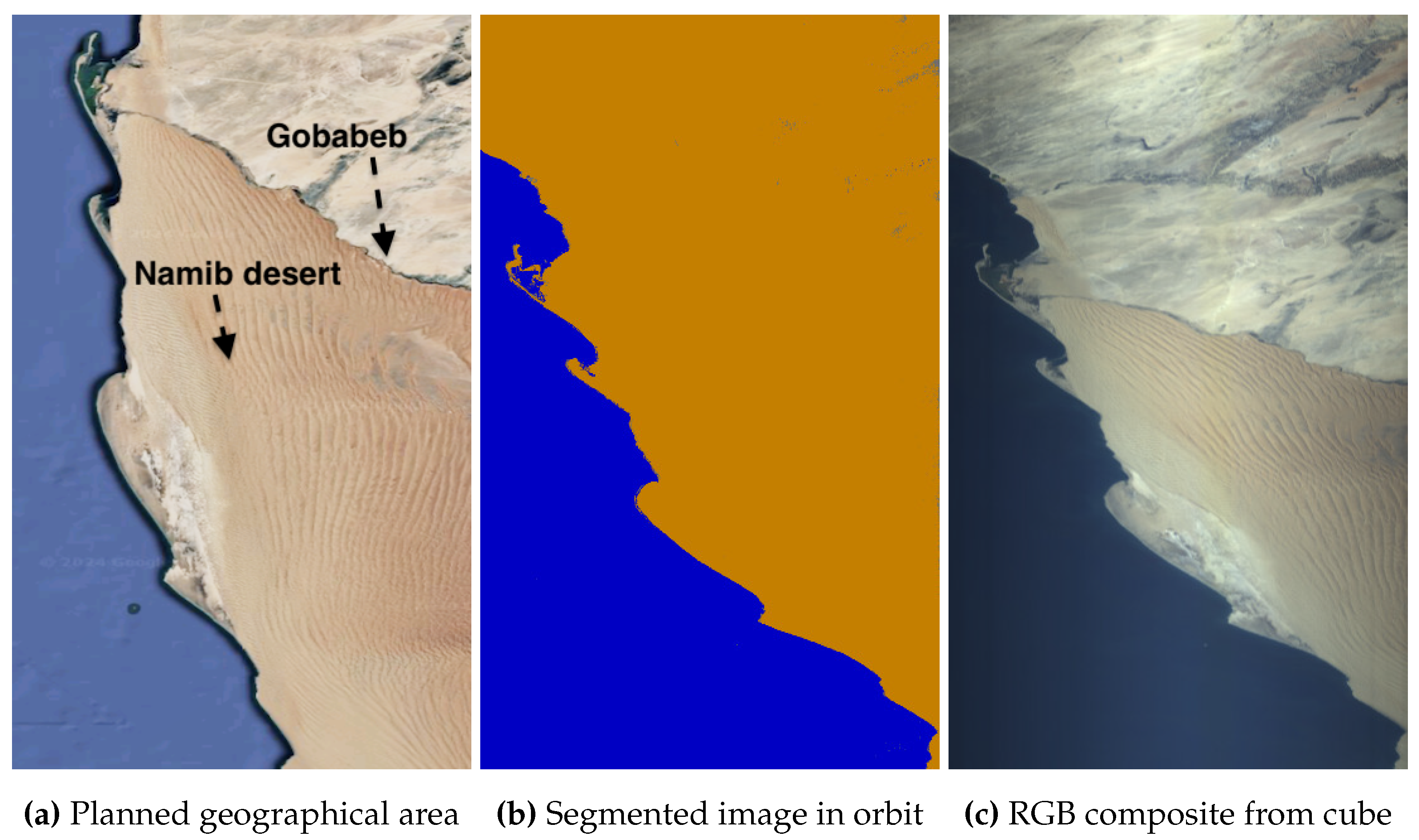

Finally, to demonstrate segmentation accuracy in other deserts, Figure 16 shows accurate results over the iron-rich Namib Desert, which hosts ESA’s station that is part of RedCalNet. Furthermore, Figure 17 captures the Mojave Desert in Nevada near Las Vegas, with minor water misclassifications from cloud shadows, which remain acceptable for our application.

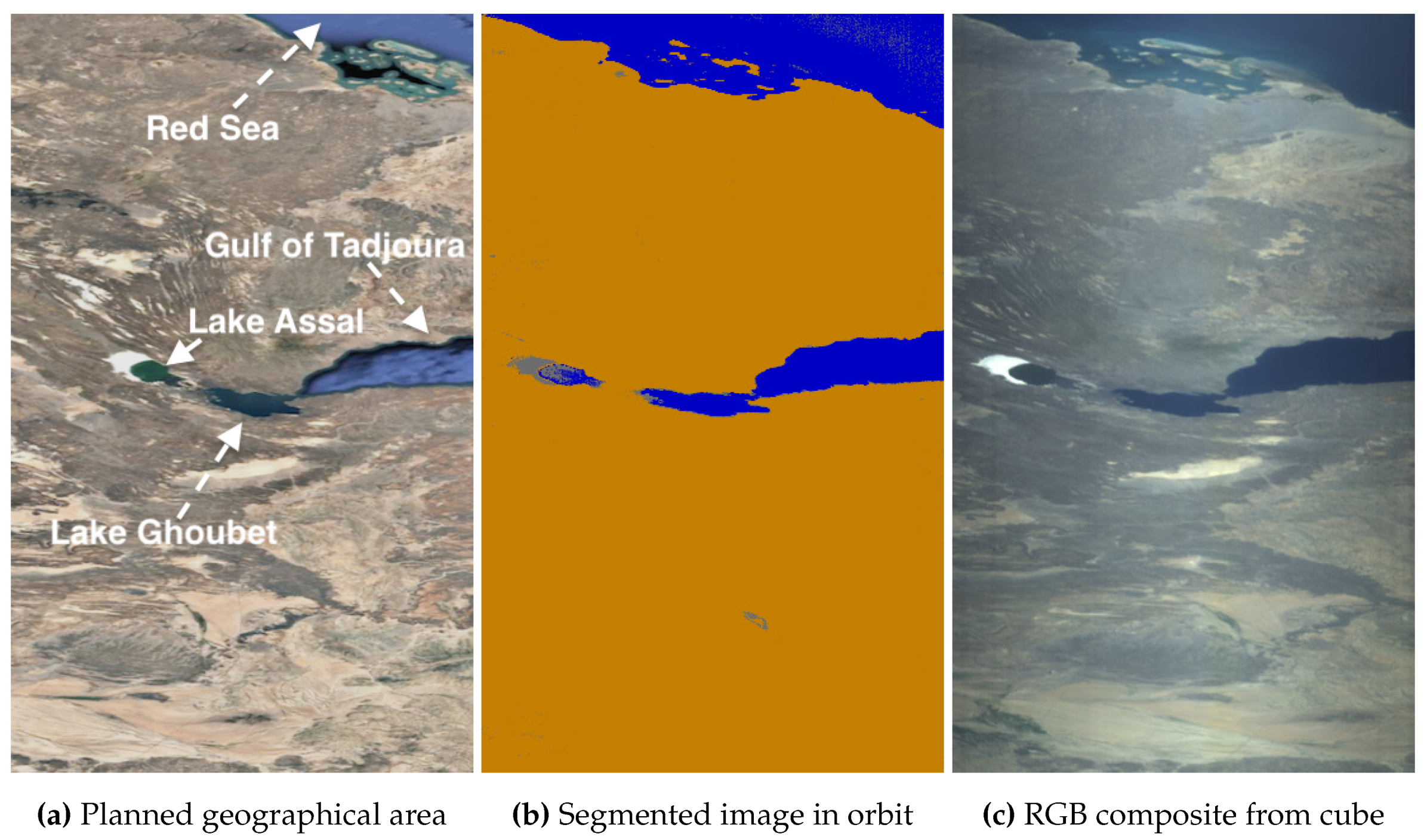

5.5. Accuracy: Captures of Water with Extreme Salinity (Imagery of Lake Assal near the Red Sea)

Given HYPSO’s focus on water observation, Figure 18 captures Lake Assal near the Red Sea, known for its extreme salinity, higher than that of the ocean and of the Dead Sea. This volcanic lake, the lowest point in Africa, is surrounded by salt flats. The segments in (b) accurately identify the Gulf of Tadjoura, Lake Ghoubet, and Lake Assal. In Lake Assal, significant overexposure is detected due to the highly reflective salt flats. The lake’s extremely saline water is also more reflective than typical water systems, resulting in some water pixels being classified as overexposed. The Red Sea, also saline, has similar overexposed pixels in the top segments. The RGB composite in (c) confirms the model accurately segments the salt flats as overexposed. Additionally, although Lake Assal’s water does not appear overexposed in the RGB, segmentation across all HS bands reveals higher reflectance likely due to its extreme salinity.

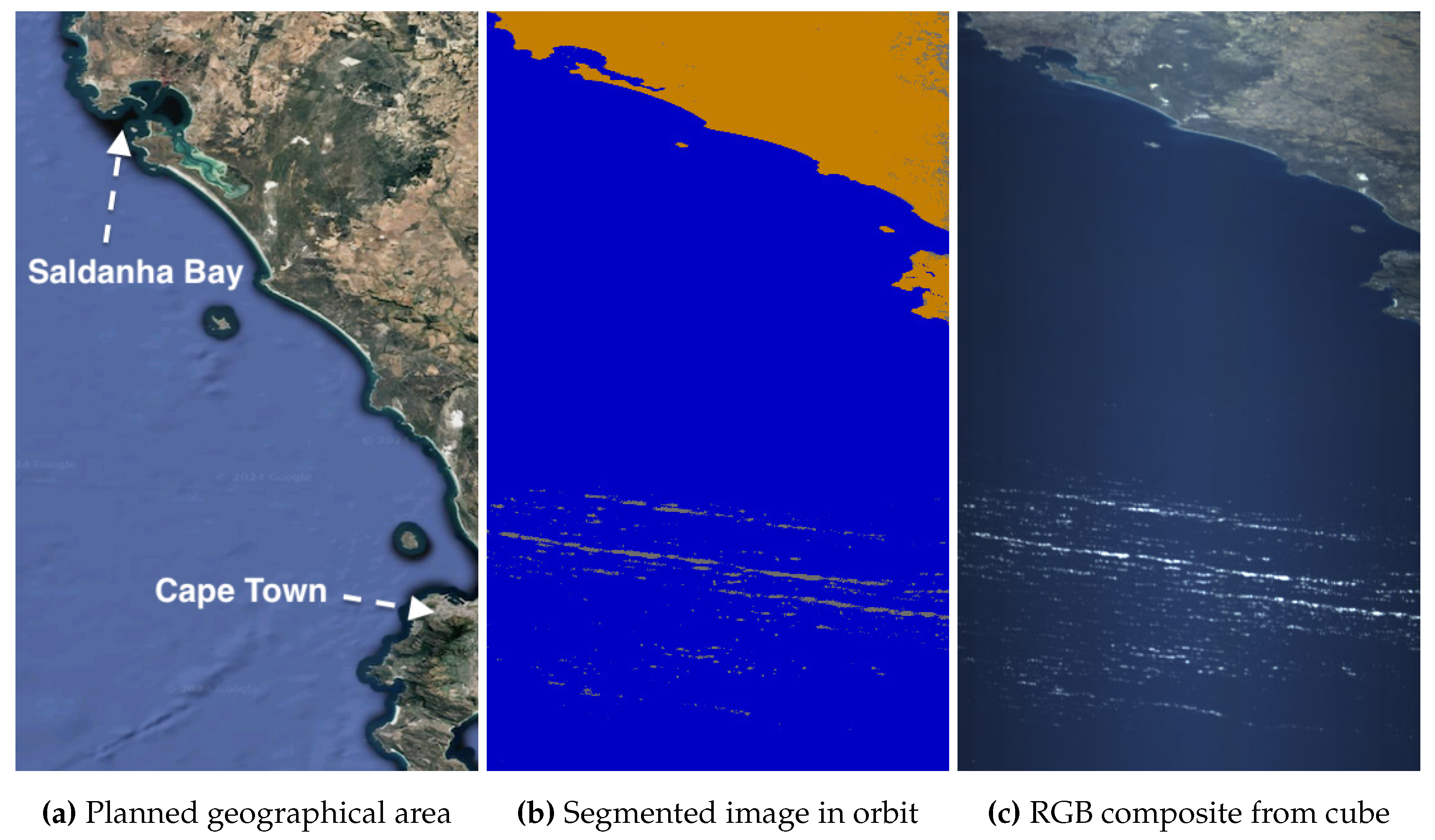

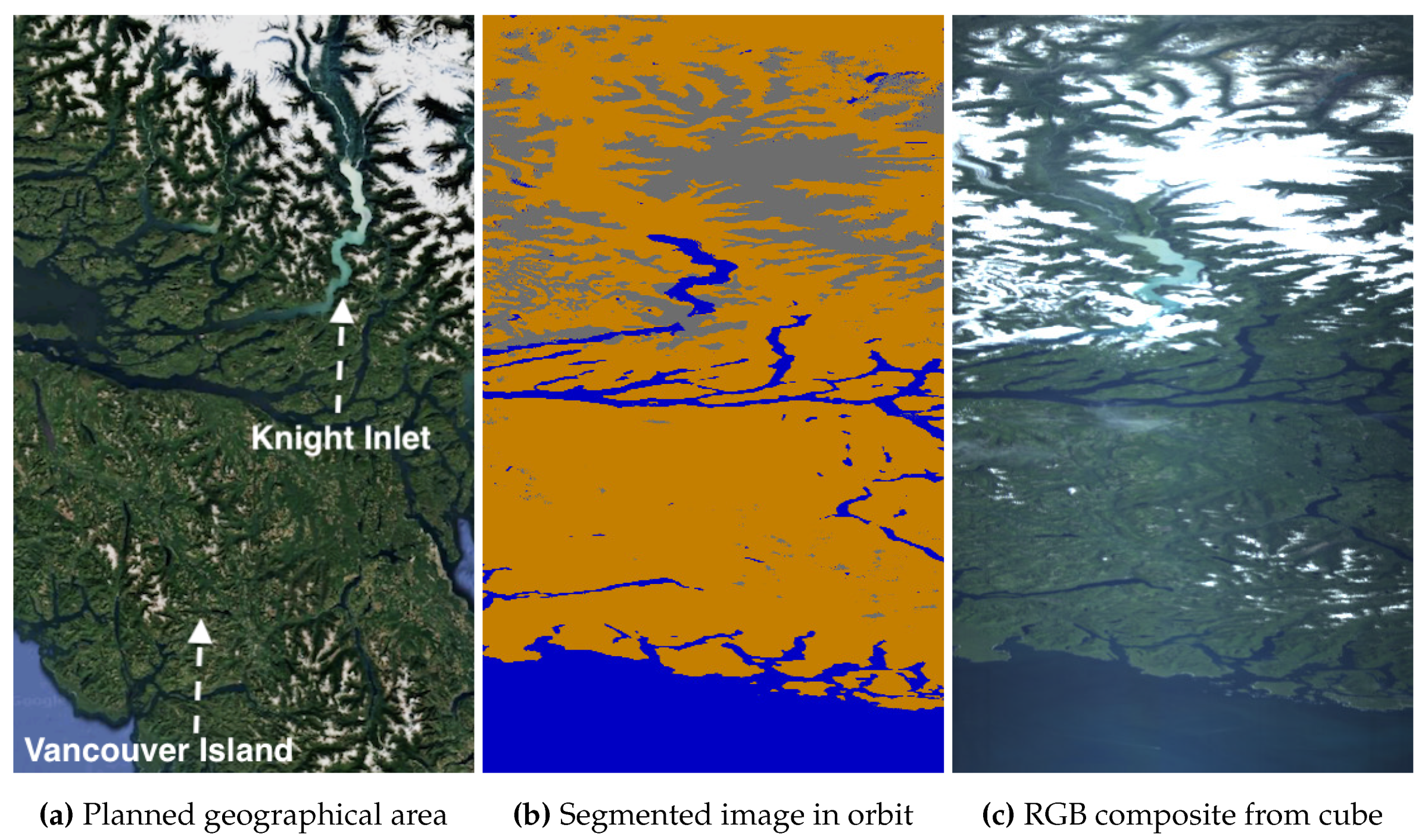

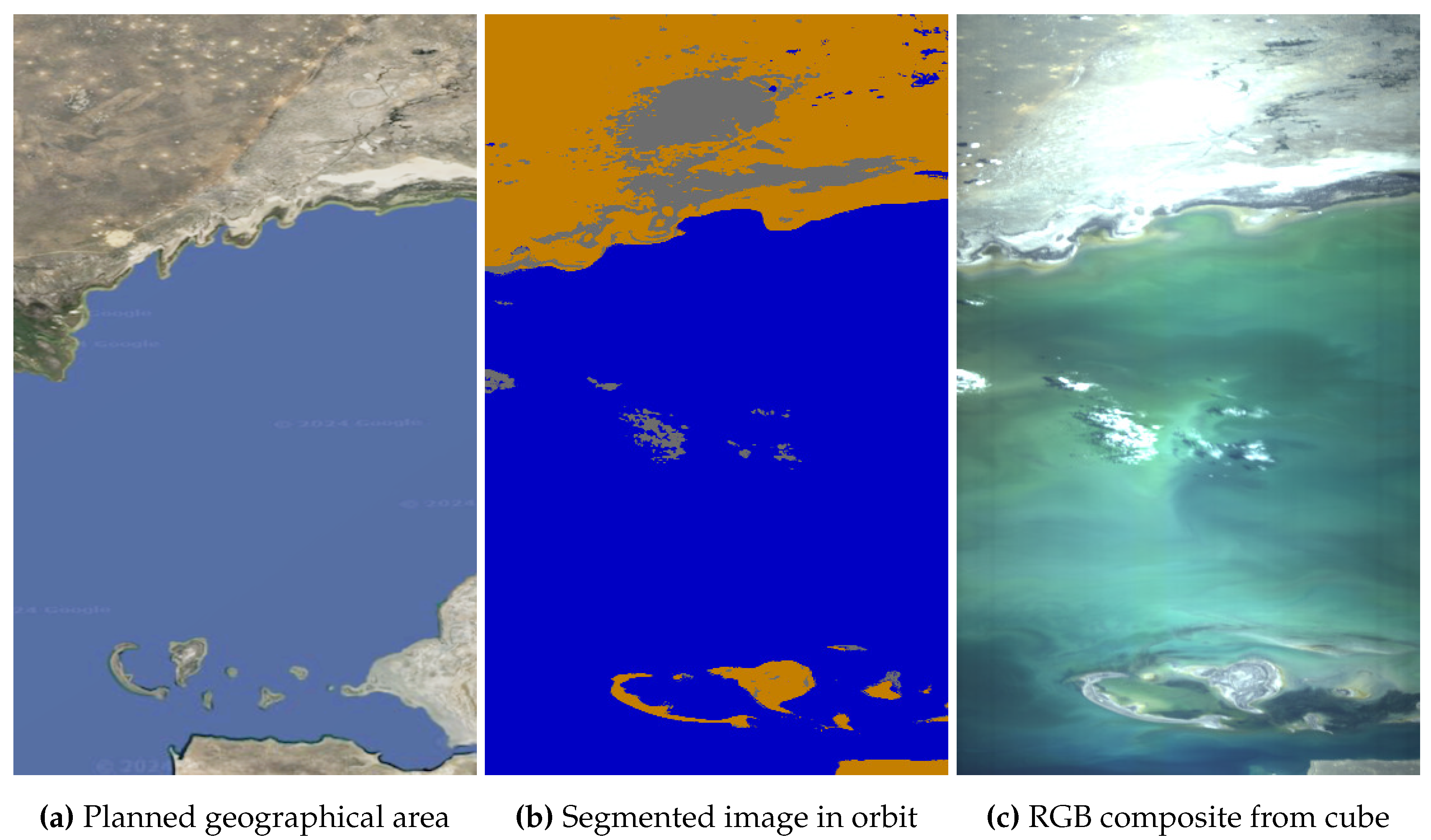

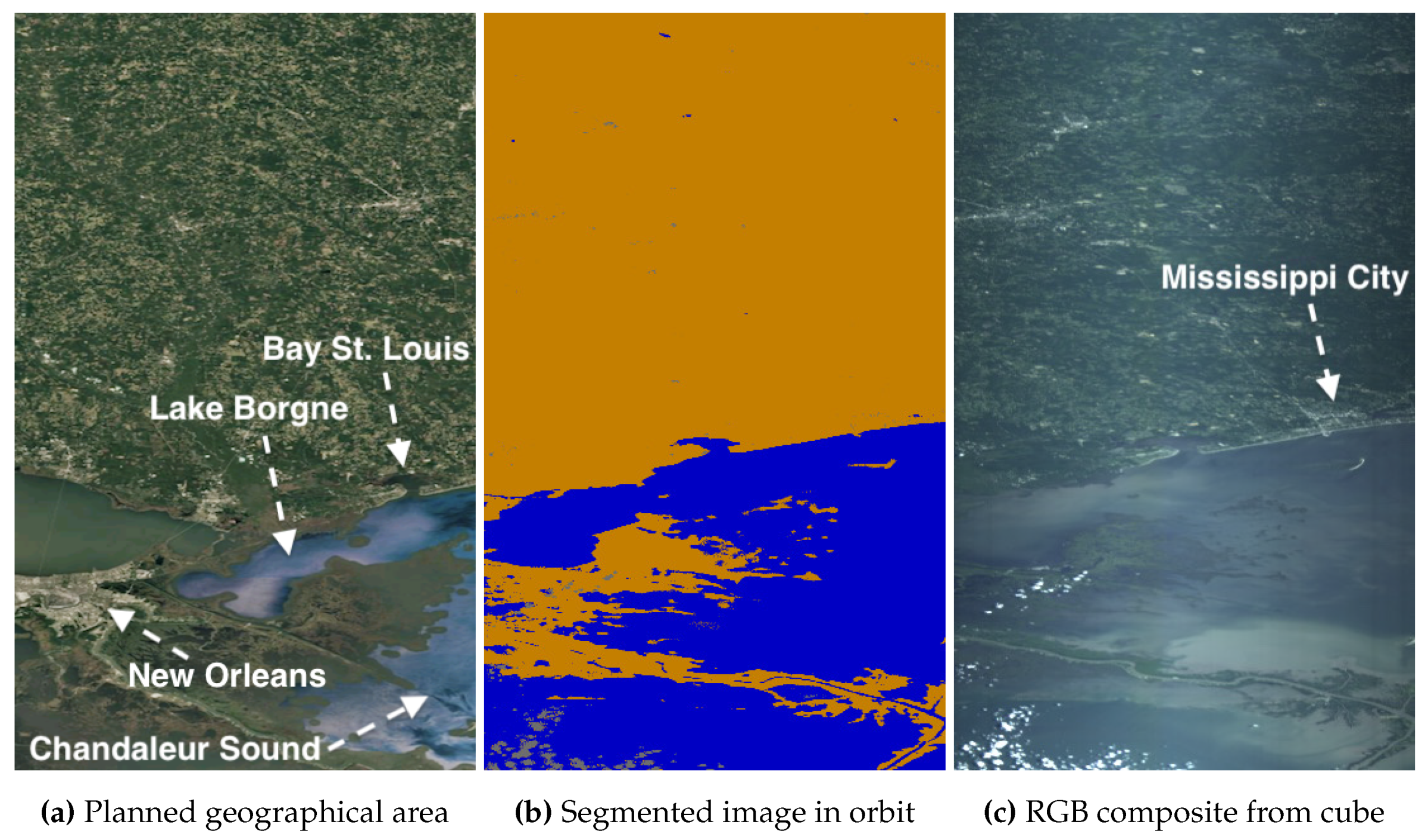

5.6. Accuracy: Captures of Water Colors (Imagery of South Africa, Vancouver, Caspian Sea and Gulf of Mexico)

Given the importance of water observation and ocean color studies for HYPSO, Figure 19, Figure 20, Figure 21 and Figure 22 present examples of captures with varying water colors, correctly segmented as water. We note that the training set from the HYPSO-1 Sea-Land-Cloud-Labeled Dataset from our previous work [65] does not include subcategories to distinguish water colors due to the lack of ground-truth data to conclude a meaningful and physical interpretation of each color. Figure 19 images the South African coastline, where darker water colors are correctly segmented due to the extensive dark water annotations in our training set. Furthermore, while thicker clouds are correctly segmented, thinner clouds are misclassified as water, which we will comment on later. Additionally, some non-cloud pixels are mistakenly identified as clouds in the top right, suggesting the need for further model training. However, the overall accuracy is still acceptable for our needs. Figure 20 captures channels near Vancouver Island with clean waters similar to other coastlines, exhibiting cyan colors. The cyan water is correctly segmented. Finally, Figure 21 and Figure 22 depict other coastal images from the Caspian Sea and the Gulf of Mexico in New Orleans with light and darker green-blue waters, also accurately segmented.

5.7. Accuracy: Captures with Different Cloud Thickness

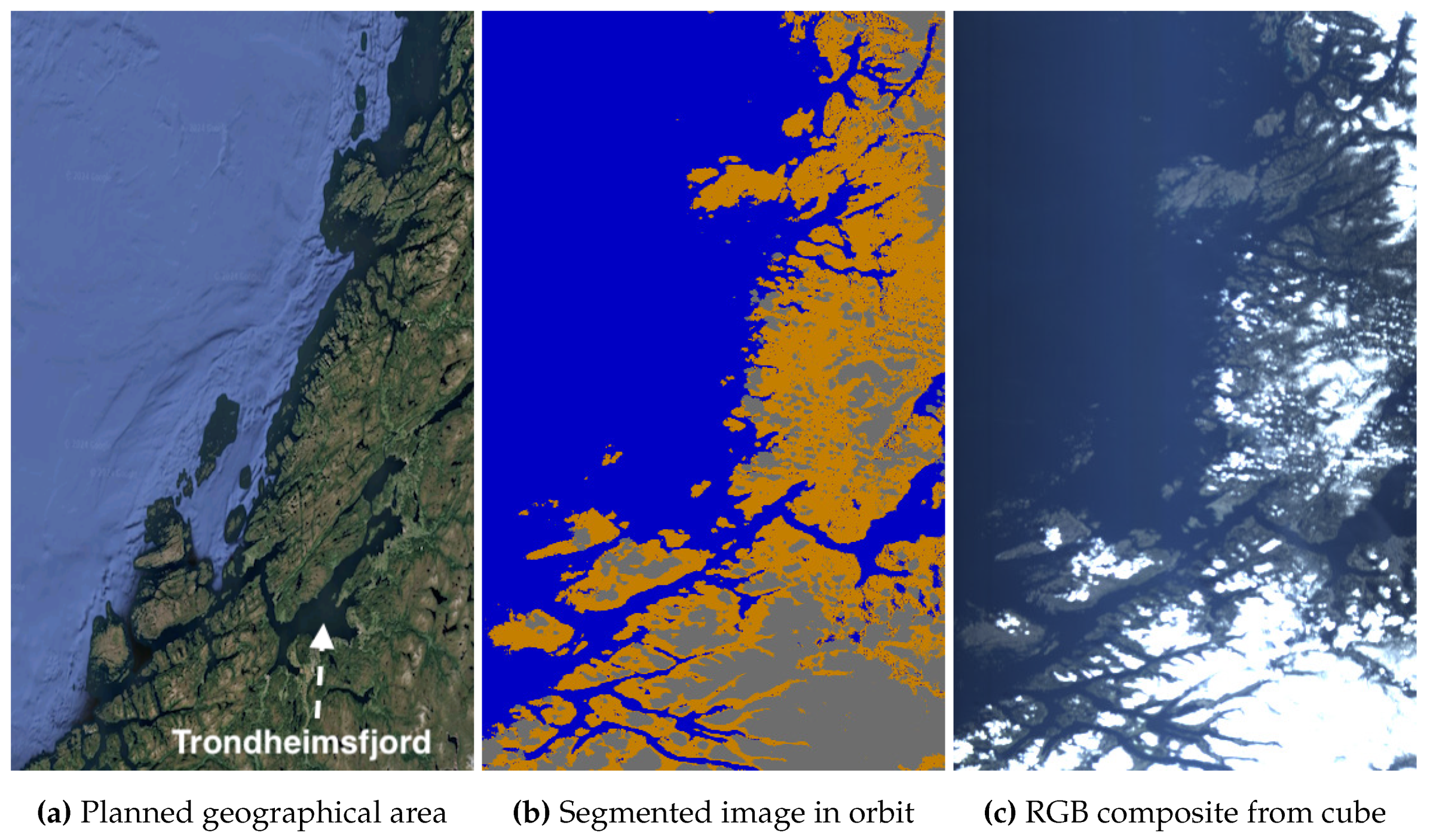

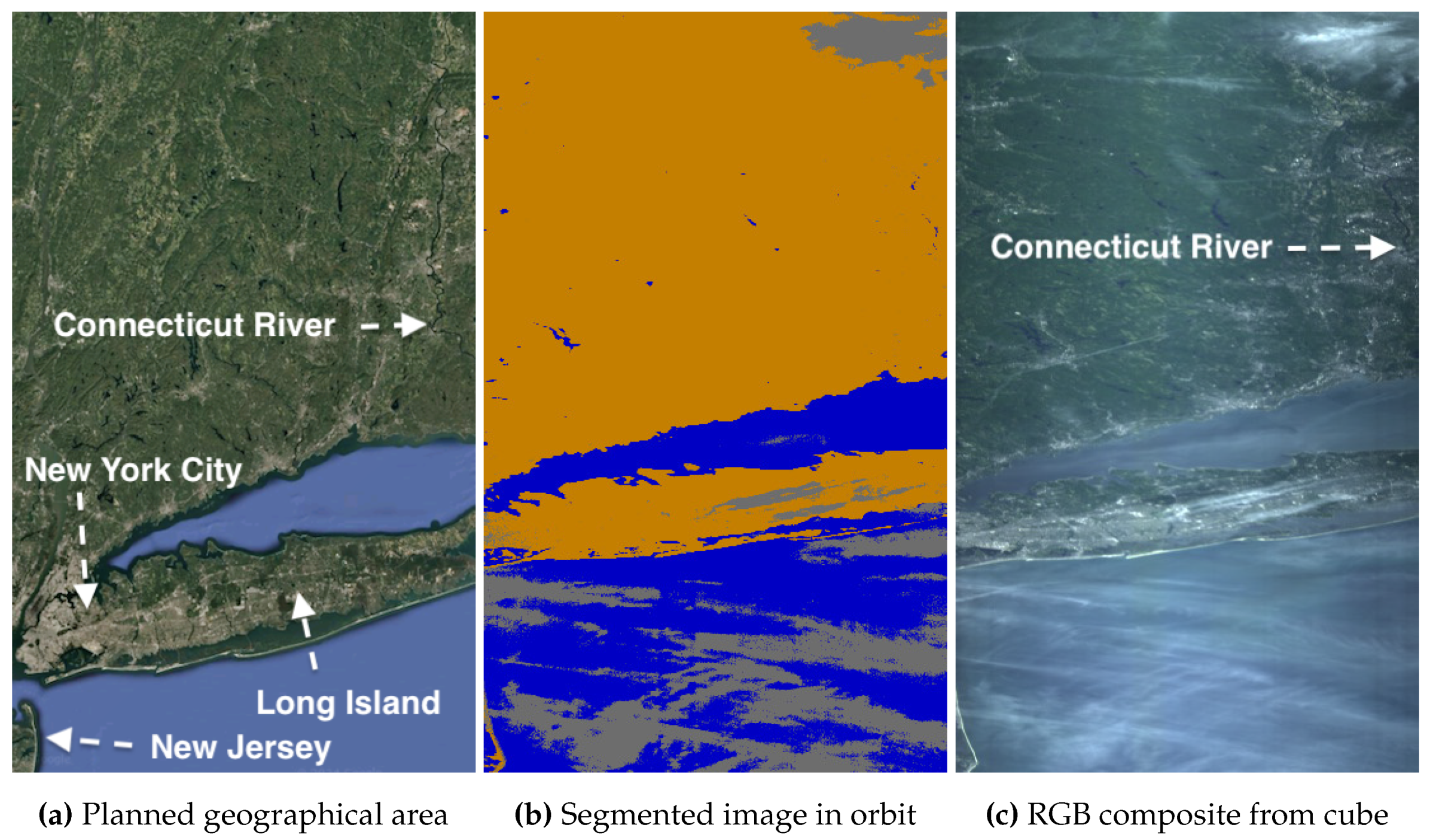

Figure 23 and Figure 24 show varying cloud thickness. Figure 23 captures Trondheimsfjord with thick clouds and acceptable segmentation. Figure 24, over Long Island, New York, shows thinner and more transparent clouds that are harder to segment as light scatters from both the clouds and the background. The segmented image shows cloud cover over the water, and the RGB confirms that, while the clouds are not thick, they obscure the water enough to justify the cloud predictions.

Beyond cloud cover, a few aspects in Figure 24 are worth discussing. Several pixels on the left side of Long Island are segmented as overexposed, likely due to bright urban surfaces in New York City. Light-colored, low iron oxide beaches in New Jersey are also correctly segmented as overexposed. Additionally, the Connecticut River, north of Long Island, is mostly misclassified as land because strong NIR light scattered from surrounding vegetation dominates the signal from the river’s thin water.

Regarding cloud cover, detection is heavily influenced by contrast levels. Returning to Figure 11 of the Bermuda Archipelago, small thin clouds are misclassified as water due to their lower contrast. Only the higher-contrast pixels in the top left are correctly detected as clouds, while the lower-contrast ones are misclassified as water. However, this is still permissible for our application.

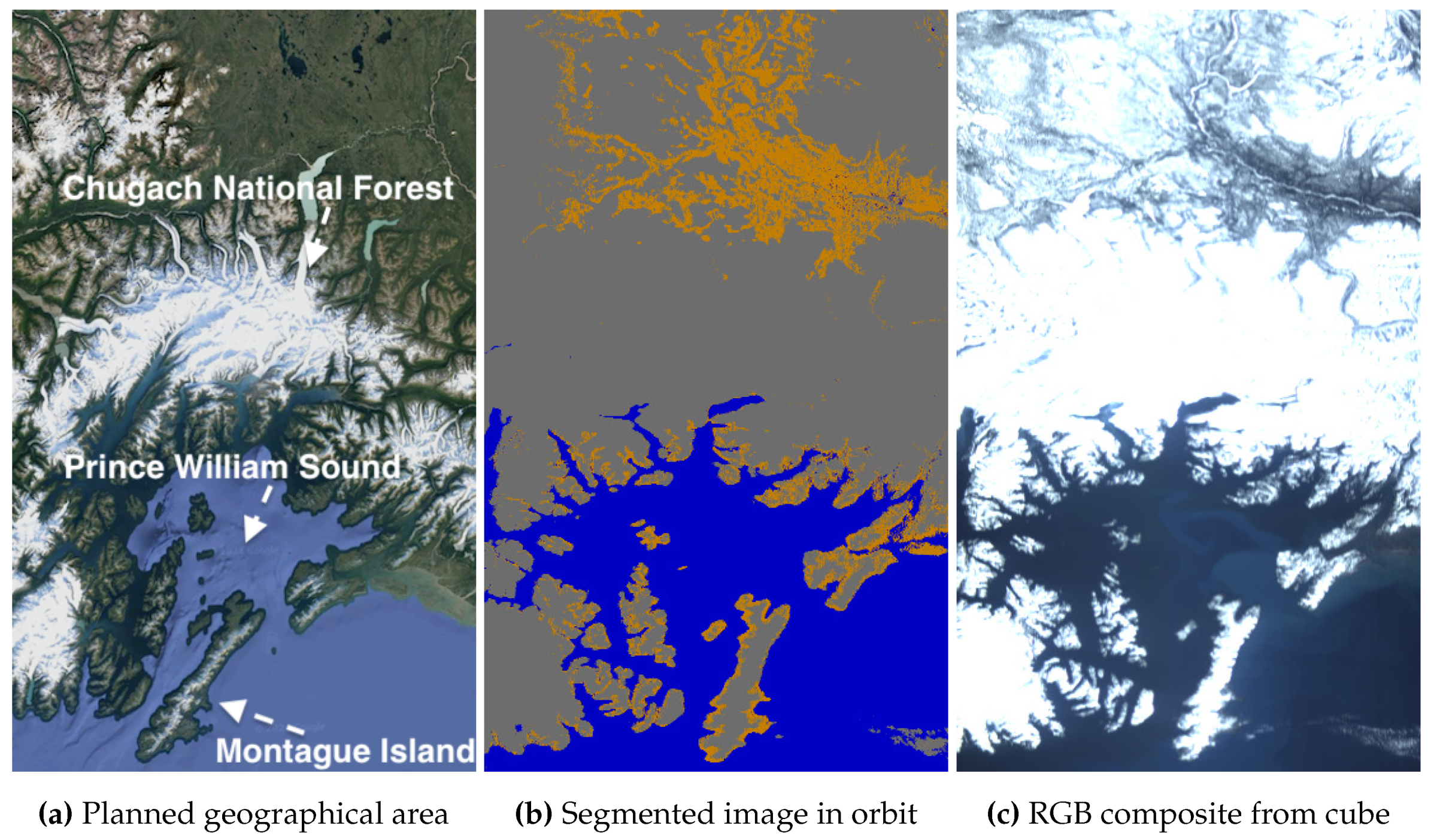

5.8. Segmentation in Snow and Ice Conditions

The 1D-Justo-LiuNet model currently operational in orbit was not trained in [43] to reliably segment snow and ice, since the HYPSO-1 Sea-Land-Cloud-Labeled Dataset in [65] excluded these conditions to simplify the time-consuming labeling process. Regardless, we attempt to gain insights from segmenting these scenes. Future training should improve the model’s ability to distinguish between clouds and snow/ice.

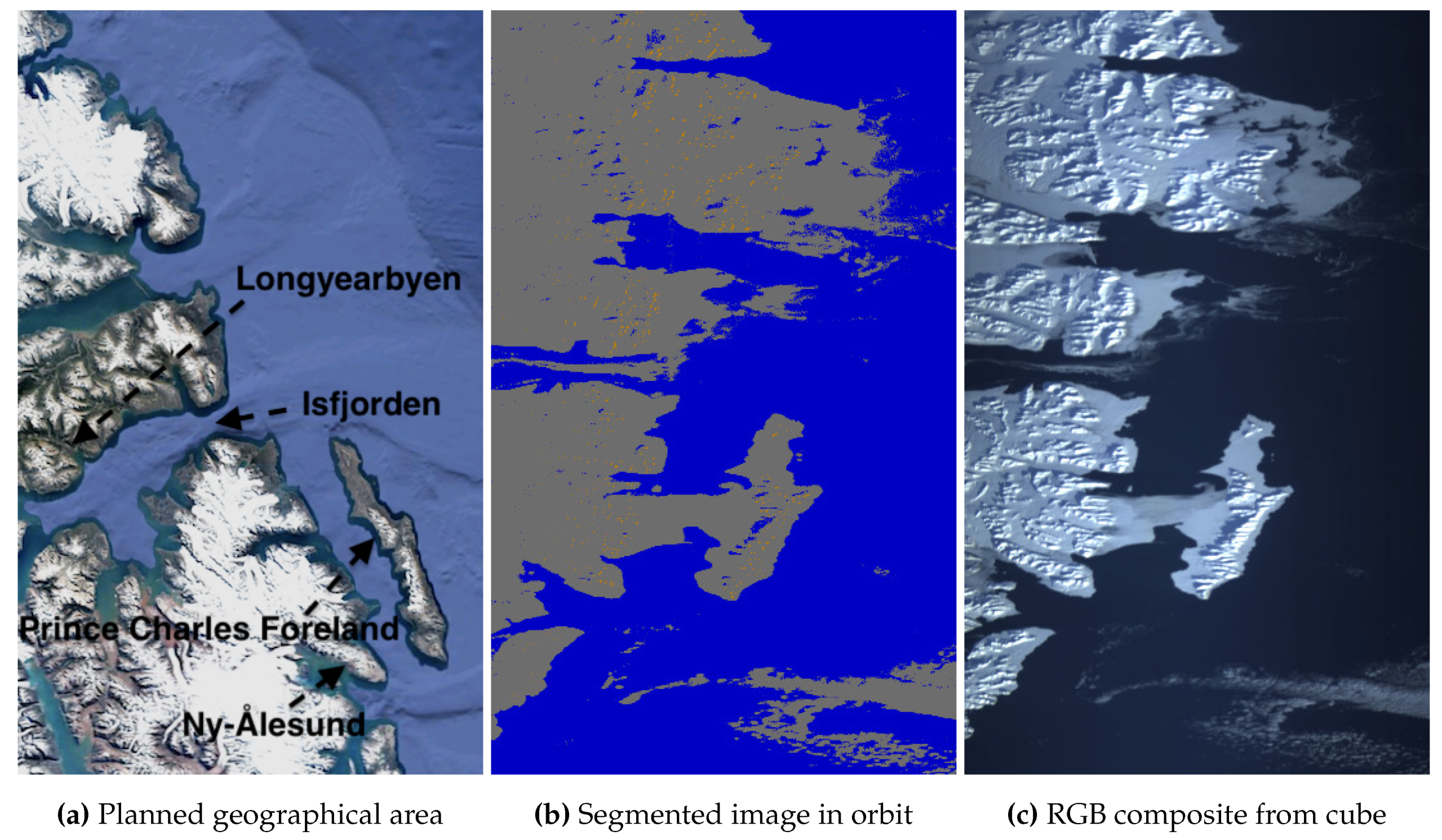

Figure 25 shows Alaska’s Prince William Sound, a water body between the Chugach National Forest and barrier islands, such as Montague Island. If only water were detected in the segments, it would indicate satellite mispointing, as no snow or land would be detected. However, gray segments suggest white pixels that may represent snow. Since the model is not trained to distinguish between clouds and snow, we compare the segmented images with the map for georeferencing. By matching the segment shapes with the map, it seems likely that many of the gray segments represent snow, as they tend to align with the land’s surfaces and contours. Additionally, some pixels also present lower reflectivity, indicating land. While not fully reliable, this method enhances the model’s potential to also segment scenes under snow and ice conditions, as occurs in the Arctic, where HYPSO-1 often takes captures. For example, Figure 26 captures the Norwegian Archipelago of Svalbard in the Arctic region, crucial for climate change monitoring. Despite the challenges of georeferencing due to ice movement not represented in the available map, we apply the same approach as with Alaska. The snow-covered land (orange dots within gray segments) suggests the satellite correctly pointed and captured the archipelago, despite uncertainties in cloud cover. The composite confirms that the conclusion is correct.

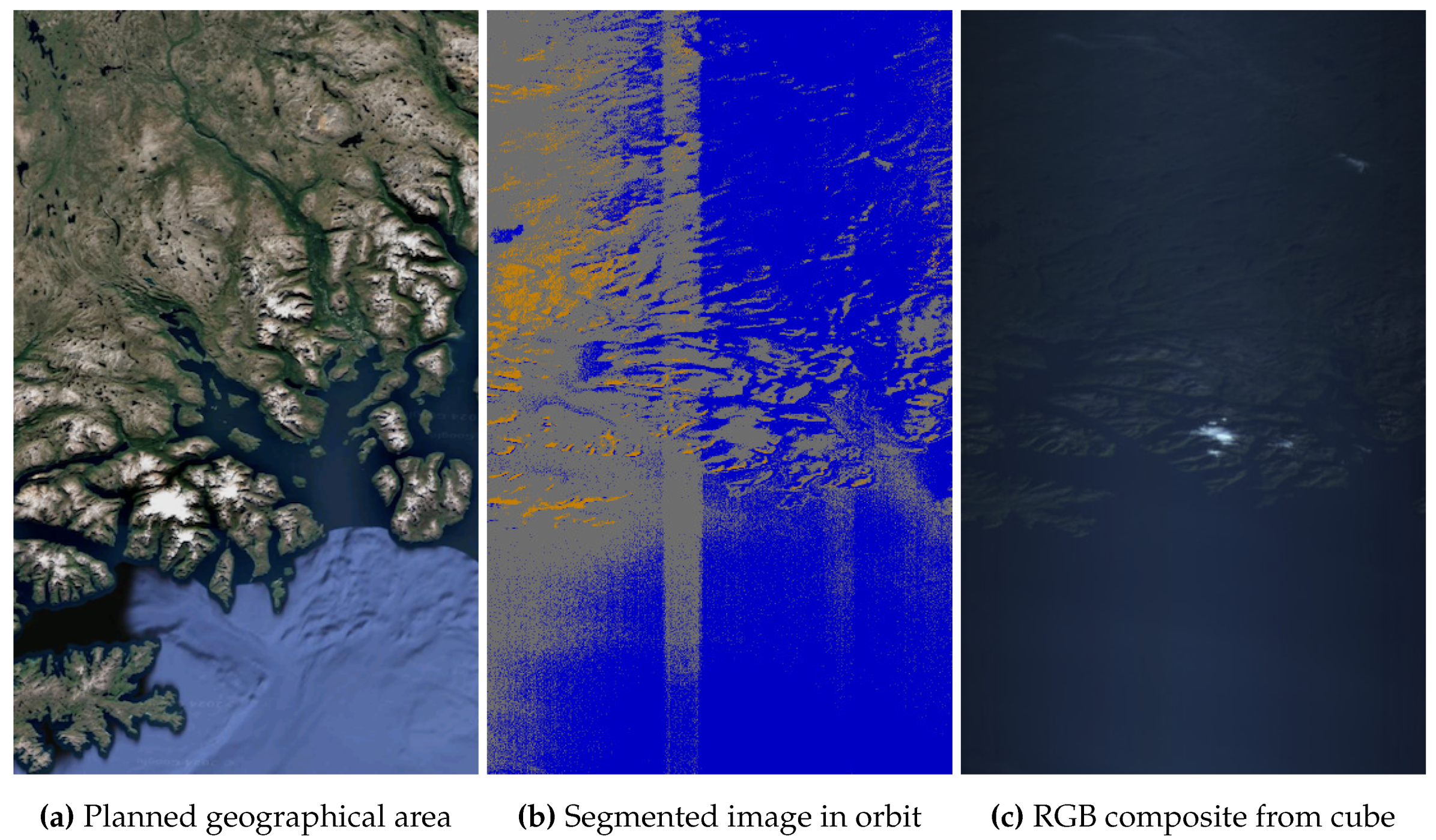

5.9. Accuracy: Relevant Misclassifications (Low-Light Imagery and Florida’s Coast)

Although the 1D-Justo-LiuNet model was not trained to detect snow-covered surfaces, it can still identify them due to extensive training where many white pixels were annotated as clouds or overexposed areas. However, the HYPSO-1 Sea-Land-Cloud-Labeled Dataset lacks nighttime annotations [65], as labeling in such conditions would have been more complex. Consequently, the model performs poorly in low-light conditions, as seen in Figure 27, where a low-light capture from Northern Norway shows significant misclassifications. The segmented image also exhibits a vertical stripe because the segmentation is applied to uncalibrated sensor data, where sensor stripes have not been corrected. Finally, Figure 28 shows misclassified coastal waters off Florida, where brighter water pixels are mistaken for clouds. This issue, seen across multiple captures in this specific area off Florida’s coast, suggests the need for further exploration or model training in this region. However, the segmentation of the coastline, land, and clouds remains otherwise acceptable.

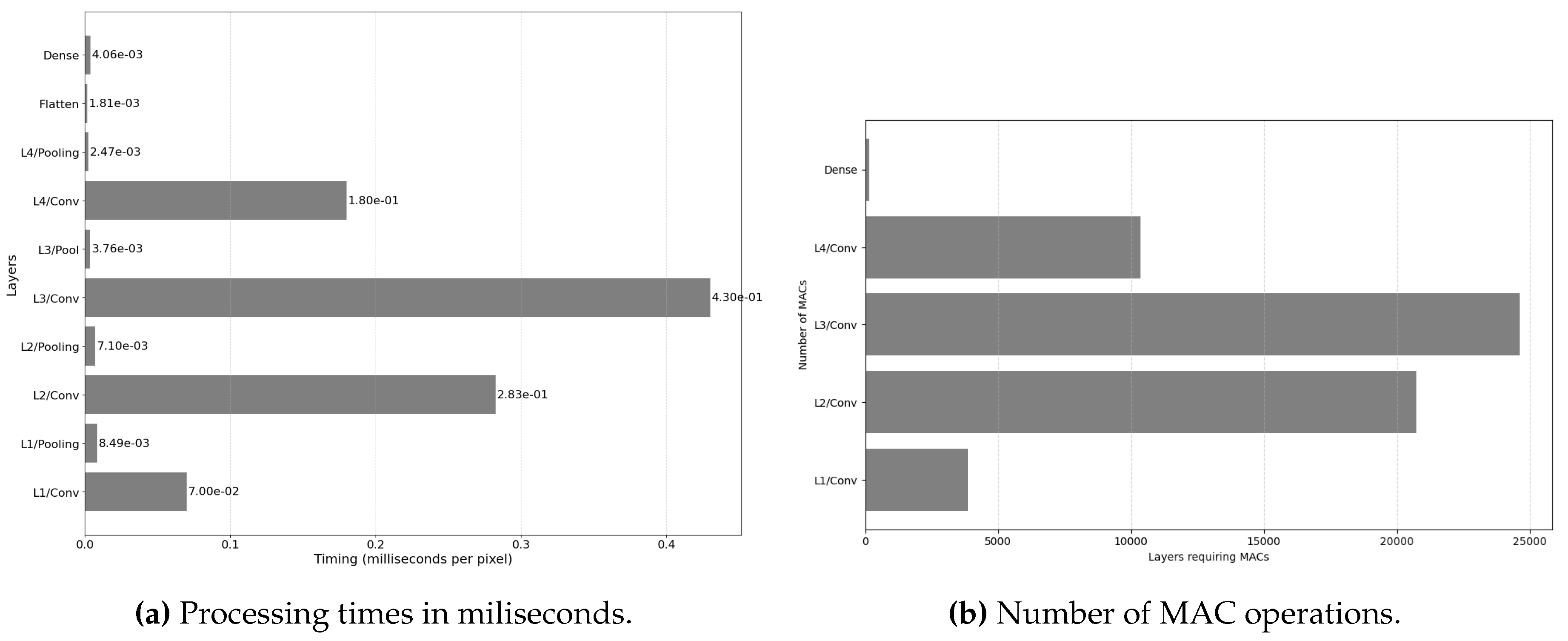

5.10. Inference Time

We continue our discussion by analyzing the processing time of 1D-Justo-LiuNet for segmenting a data cube. While our previous work [43] concluded that the network was one of the fastest lightweight models, inference on the dual-core CPU of the Zynq-7030 remains considerably slow. Although an FPGA implementation is beyond the scope of this work, Appendix A provides an analysis of potential future FPGA acceleration. We conclude that 1D-Justo-LiuNet can benefit significantly from accelerating the MAC operations in the third convolution layer on the FPGA.
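As a rough illustration of why the third convolution layer is a natural acceleration target, the following sketch counts the multiply-accumulate (MAC) operations per pixel for each convolution level, using the dimensions listed in Table 2. It assumes stride 1 and no padding, which is consistent with the reported output lengths; the names `CONV_LAYERS` and `conv_macs` are hypothetical and the count ignores activations, pooling, and memory traffic, so it is not a substitute for the analysis in Appendix A.

```python
# Layer dimensions copied from Table 2. Stride 1 and no padding are assumed,
# which matches the reported output lengths (e.g. 112 - 6 + 1 = 107).
CONV_LAYERS = [
    # (level, kernels, components per kernel, kernel size K, output length)
    (1, 6, 1, 6, 107),
    (2, 12, 6, 6, 48),
    (3, 18, 12, 6, 19),
    (4, 24, 18, 6, 4),
]


def conv_macs(kernels, components, kernel_size, out_length):
    """MACs of one 1D convolution: one MAC per weight per output position."""
    return kernels * components * kernel_size * out_length


macs_per_level = {
    level: conv_macs(kernels, components, kernel_size, out_length)
    for level, kernels, components, kernel_size, out_length in CONV_LAYERS
}
dense_macs = 3 * 48  # 3 class neurons, each connected to 48 flattened features

for level, macs in macs_per_level.items():
    print(f"convolution level {level}: {macs} MACs per pixel")
print(f"dense layer: {dense_macs} MACs per pixel")

busiest_level = max(macs_per_level, key=macs_per_level.get)
print(f"most MAC-intensive convolution: level {busiest_level}")
```

Under these assumptions, level 3 requires 24,624 MACs per pixel, slightly more than level 2 (20,736) and well above levels 1 and 4.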

Figure 1. HYPSO-1 system architecture general pipeline.

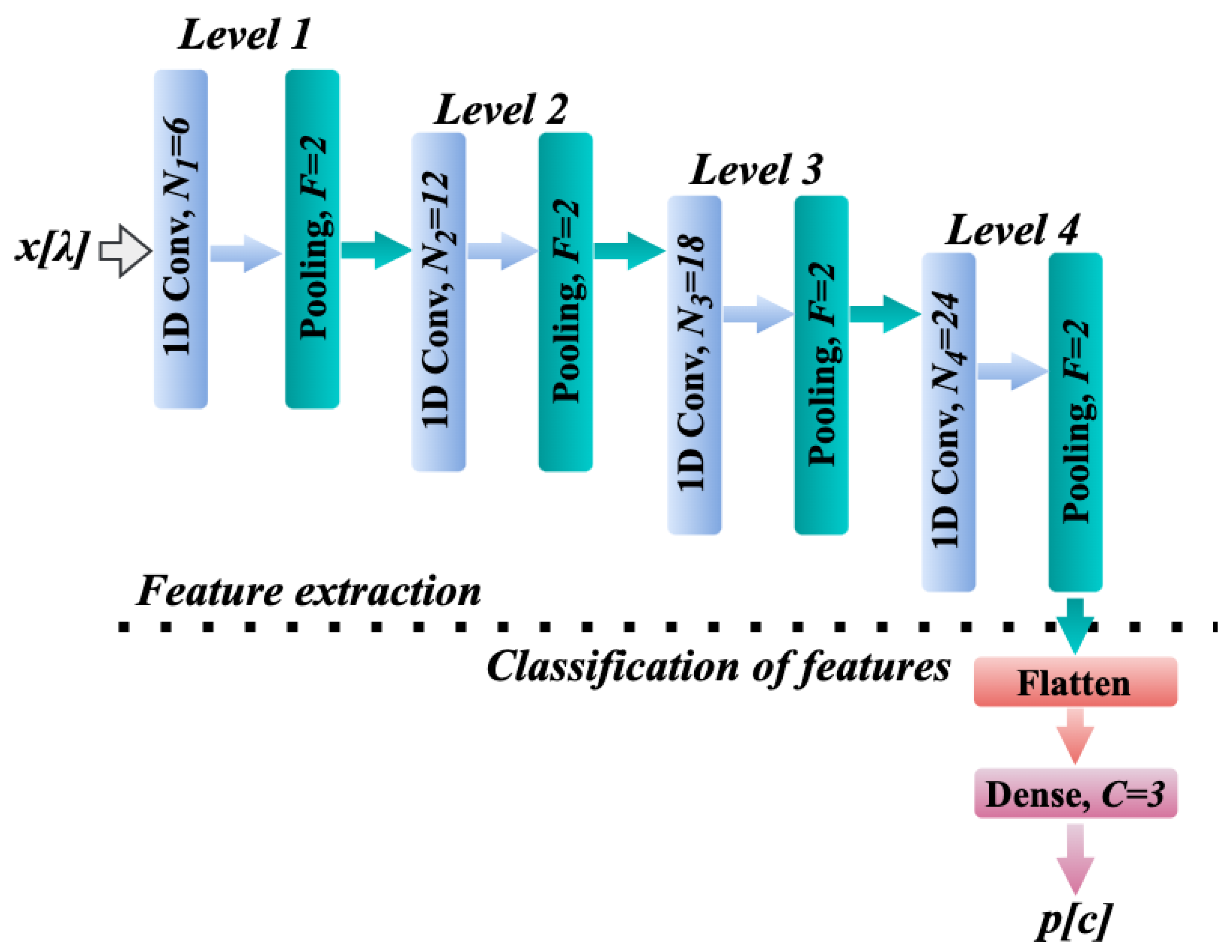

Figure 2. Architecture of 1D-Justo-LiuNet for feature extraction and classification proposed in our previous work [43].

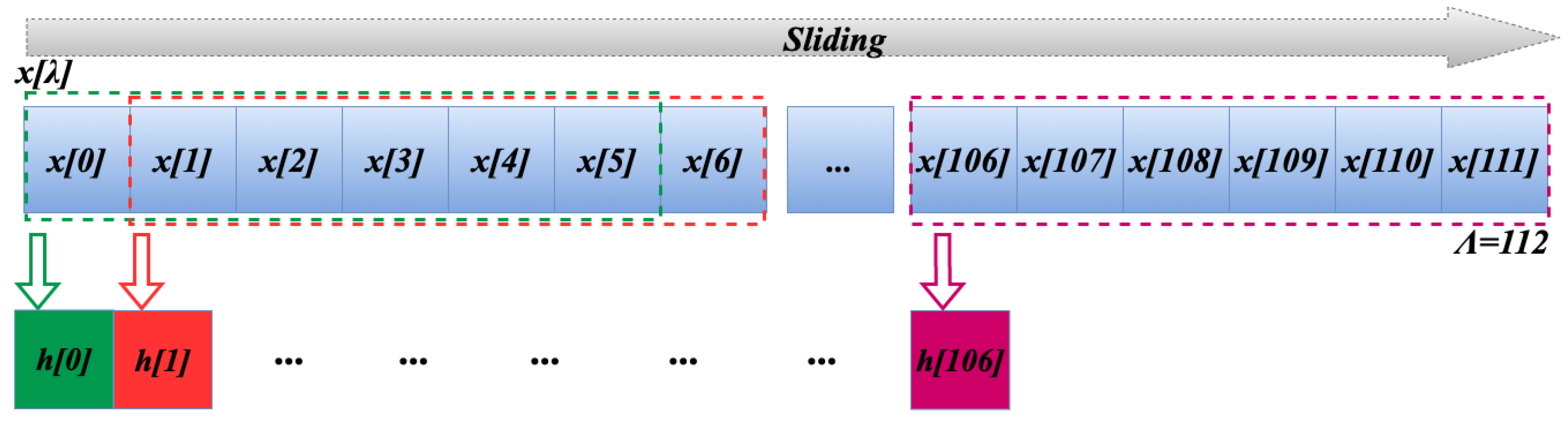

Figure 3. Sliding process with stride over .

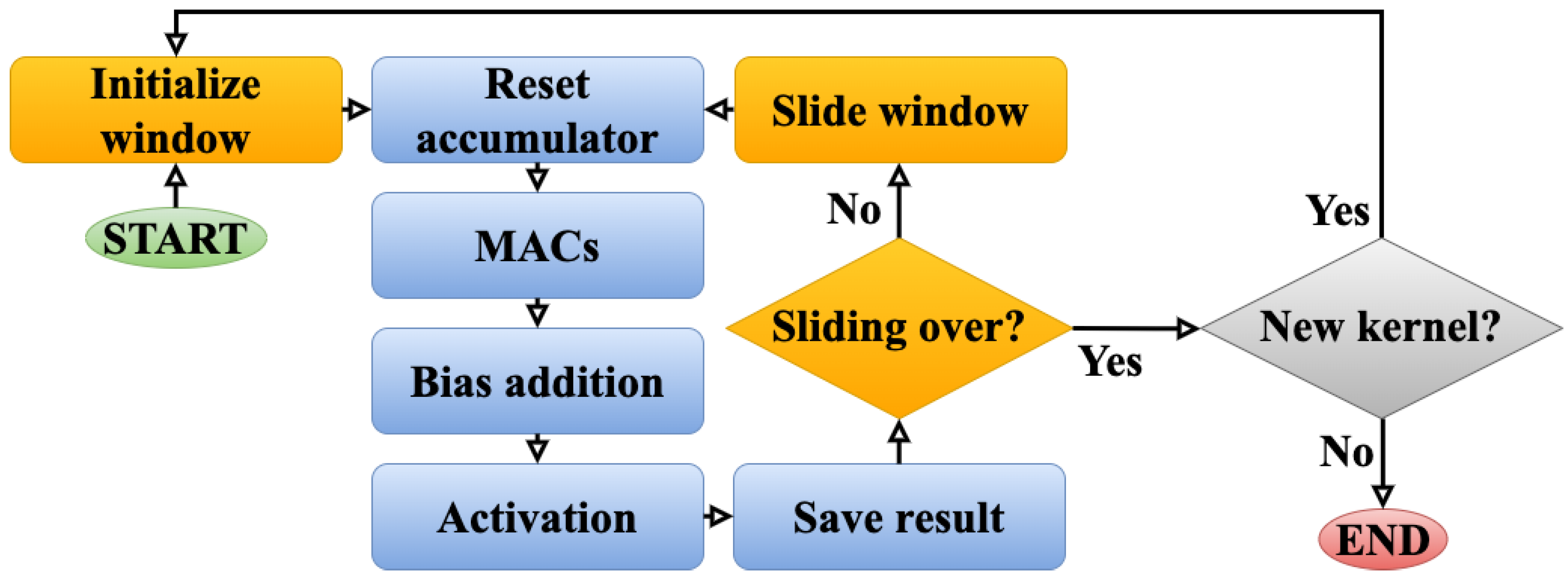

Figure 4. Flow diagram of operations in the convolution layer (implementation 1).

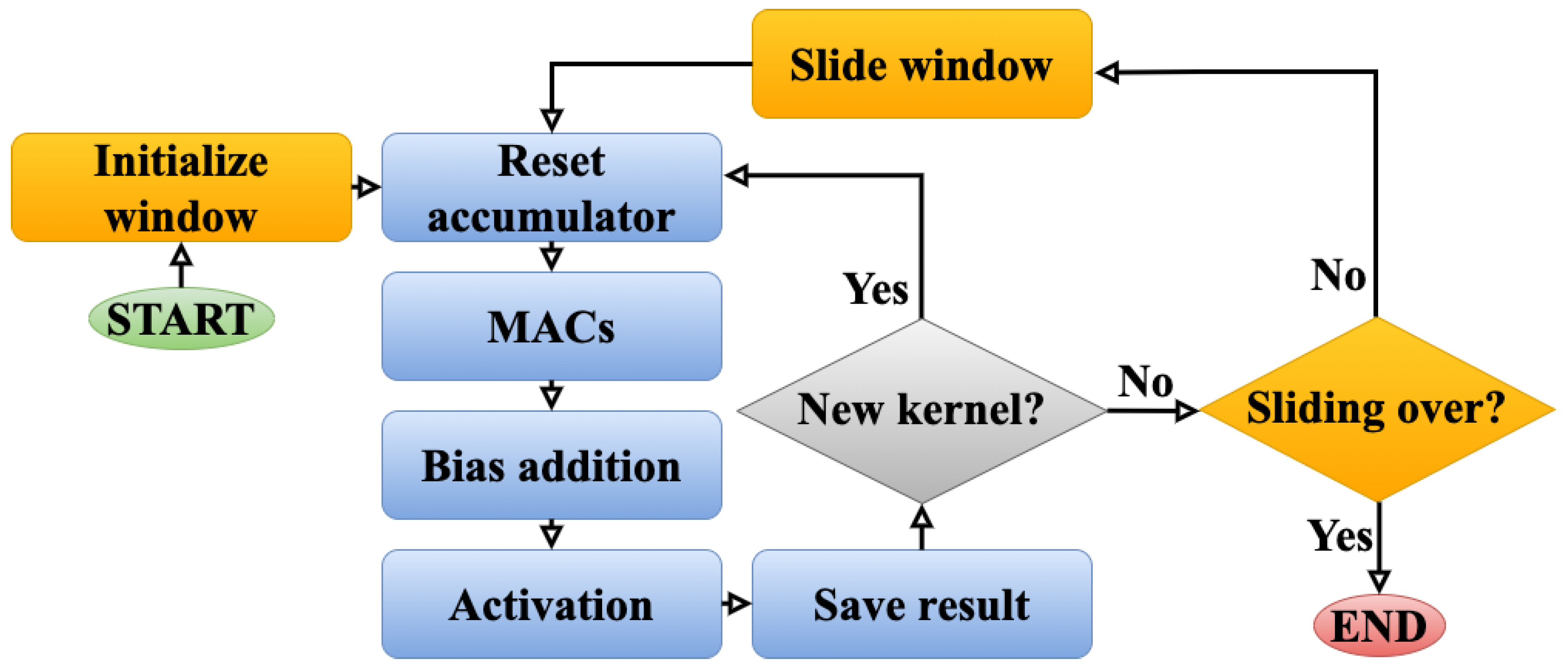

Figure 5. Flow diagram of operations in the convolution layer (implementation 2).

Figure 6. Venice, Italy, Europe, on 22 June 2024 at 09:44 UTC+00. Coordinates: 45.3° latitude and 12.5° longitude. Solar zenith angle: 28.7°, and exposure time: 35 ms.

Figure 7. Venice, Italy, Europe, on 22 June 2024 at 09:44 UTC - retransmitted.

Figure 8. Trondheim, Norway, Europe, on 17 May 2024 at 10:54 UTC. Coordinates: 63.6° latitude and 9.84° longitude. Solar zenith angle: 44.9°, and exposure time: 50 ms.

Figure 10. Grad-CAM explanations for classification of segmented images.

Figure 11. Bermuda Archipelago, British Overseas, Atlantic Ocean, on 16 July 2024 at 14:27 UTC. Coordinates: 32.4° latitude and -64.8° longitude. Solar zenith angle: 32.6°, and exposure time: 30 ms.

Figure 12. Dahlak Archipelago, Eritrea, Africa, on 16 June 2024 at 07:28 UTC. Coordinates: 16.0° latitude and 40.4° longitude. Solar zenith angle: 16.0°, and exposure time: 25 ms.

Figure 13. Aegean Archipelago, Greece, Europe, on 2 May 2024 at 08:44 UTC. Coordinates: 38.5° latitude and 25.2° longitude. Solar zenith angle: 39.0°, and exposure time: 30 ms.

Figure 14. Abu Dhabi and Dubai, United Arab Emirates, Asia, on 9 May 2024 at 06:12 UTC. Coordinates: 25.3° latitude and 54.7° longitude. Solar zenith angle: 29.5°, and exposure time: 40 ms.

Figure 15. Abu Dhabi and Dubai, United Arab Emirates, Asia, on 27 June 2024 at 06:17 UTC. Coordinates: 25.1° latitude and 55.0° longitude. Solar zenith angle: 28.9°, and exposure time: 40 ms.

Figure 16. Namib desert close to Gobabeb, Namibia, Africa, on 13 June 2024 at 08:49 UTC. Coordinates: -23.6° latitude and 15.0° longitude. Solar zenith angle: 56.8°, and exposure time: 20 ms.

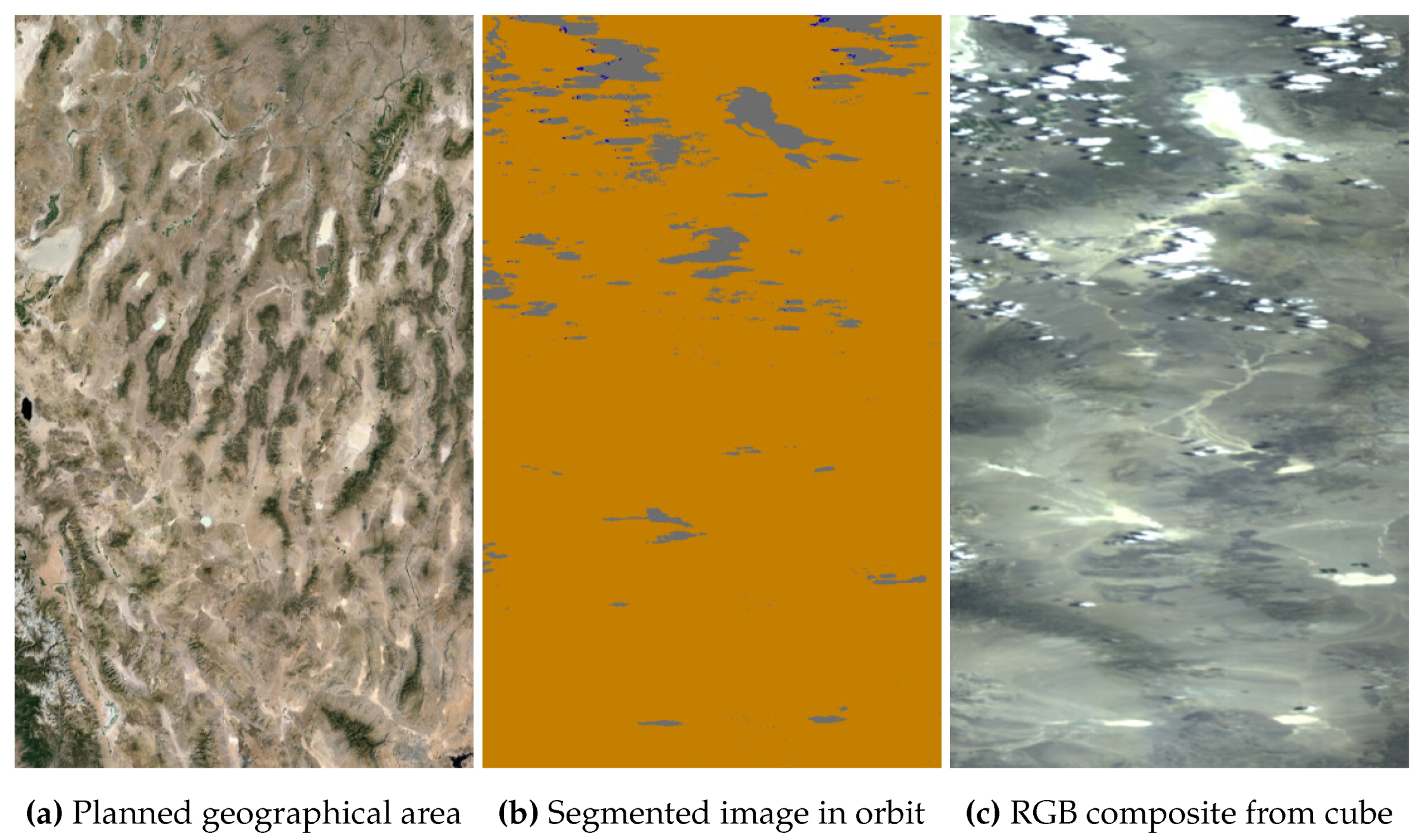

Figure 17. Mojave Desert, USA, North America, on 28 May 2024 at 17:52 UTC. Coordinates: 38.7° latitude and -116.1° longitude. Solar zenith angle: 28.9°, and exposure time: 20 ms.

Figure 18. Lake Assal near Gulf of Tadjoura and the Red Sea, Djibouti, Africa, on 28 May 2024 at 06:57 UTC. Coordinates: 11.6° latitude and 42.8° longitude. Solar zenith angle: 32.6°, and exposure time: 20 ms.

Figure 19. Cape Town, South Africa, Africa, on 13 April 2024 at 08:06 UTC. Coordinates: -34.3° latitude and 18.2° longitude. Solar zenith angle: 58.1°, and exposure time: 30 ms.

Figure 20. Vancouver Island, Canada, North America, on 13 July 2024 at 18:43 UTC. Coordinates: 50.4° latitude and -126.0° longitude. Solar zenith angle: 35.2°, and exposure time: 30 ms.

Figure 21. Caspian Sea, Asia, on 7 July 2024 at 06:58 UTC. Coordinates: 46.2° latitude and 50.4° longitude. Solar zenith angle: 31.4°, and exposure time: 30 ms.

Figure 22. New Orleans (Gulf of Mexico), USA, North America, on 14 June 2024 at 16:04 UTC. Coordinates: 30.6° latitude and -89.4° longitude. Solar zenith angle: 25.8°, and exposure time: 30 ms.

Figure 23. Trondheim, Norway, Europe, on 26 April 2024 at 10:49 UTC. Coordinates: 64.3° latitude and 9.42° longitude. Solar zenith angle: 50.5°, and exposure time: 40 ms.

Figure 24. Long Island - New York, USA, North America, on 16 June 2024 at 15:14 UTC. Coordinates: 41.3° latitude and -73.4° longitude. Solar zenith angle: 27.1°, and exposure time: 35 ms.

Figure 25. Alaska, USA, North America, on 15 April 2024 at 21:08 UTC. Coordinates: 61.3° latitude and -147.1° longitude. Solar zenith angle: 51.1°, and exposure time: 25 ms.

Figure 26. Svalbard, Norway, Europe, on 3 May 2024 at 19:07 UTC. Coordinates: 78.2° latitude and 13.6° longitude. Solar zenith angle: 80.2°, and exposure time: 35 ms.

Figure 27. Finnmark, Norway, Europe, on 21 July 2024 at 18:24 UTC. Coordinates: 70.2° latitude and 22.8° longitude. Solar zenith angle: 79.5°, and exposure time: 35 ms.

Figure 28. Florida, USA, North America, on 21 May 2024 at 15:51 UTC. Coordinates: 27.2° latitude and -82.6° longitude. Solar zenith angle: 22.1°, and exposure time: 30 ms.

Table 1. Notation and descriptions of sequences in 1D-Justo-LiuNet.

| NOTATION | DESCRIPTION OF THE SEQUENCE |
|---|---|
| NETWORK’S INPUT | |
| | Image pixel to be classified, represented by its spectral signature along wavelengths . In this work, each pixel comprises spectral bands. |
| CONVOLUTIONS FOR FEATURE EXTRACTION | |
| | Weight parameters of the kernels in the convolution layer at level X. Each 2D kernel consists of components across the K dimension. The number of kernel components, , corresponds to the number of kernels used in the convolution at the previous level . Since it is common to use multiple kernels at previous levels, the convolution at level X also demands kernels with multiple components, i.e., . Only at level 1, convolution kernels are, however, single-component (1D) across K as there are no previous convolutions and hence weight parameters are given by . In Table 2, we provide the numerical dimensions for , , and K across 1D-Justo-LiuNet. |
| | Bias parameters of the kernels in the convolution at level X. The sequence is always 1D, regardless of whether the kernel weights are multi-component (2D) or single-component (1D). |
| | Output sequence of the convolution layer at level X, including all one-dimensional feature maps with length produced by the respective kernels. Each feature map is always one-dimensional regardless of whether the kernel weights are multi- or single-component. |
| POOLING FOR FEATURE REDUCTION | |
| | Output sequence of pooling layer at level X with feature maps, where their original length is reduced down to . |
| CLASSIFICATION OUTPUT | |
| | Output sequence of flatten layer representing the I-th highest-level extracted features in the latent space relevant for sea-land-cloud classification. |
| | Weight parameters of the C neurons in the dense layer, where C represents the number of classes to detect. Each neuron is fully connected, with I synapses, to the respective features in to calculate the probability that they belong to the neuron’s respective class. |
| | Bias parameters of the C neurons in the dense layer. |
| | Output sequence of the dense layer (i.e., output of the network), consisting of C class probabilities. |

Table 2. Overview of the 1D-CNN 1D-Justo-LiuNet showing sequence dimensions. * N/A: Pooling layers do not contain trainable parameters.

| LAYER | INPUT DIMENSIONS | WEIGHT DIMENSIONS | BIAS DIMENSIONS | OUTPUT DIMENSIONS |
|---|---|---|---|---|
| FEATURE EXTRACTION AND REDUCTION | | | | |
| LEVEL 1: CONVOLUTION ( kernels; ) | 1 x 112 | 6 x 6 | 1 x 6 | 6 x 107 |
| LEVEL 1: POOLING | 6 x 107 | N/A* | N/A | 6 x 53 |
| LEVEL 2: CONVOLUTION ( kernels; ) | 6 x 53 | 12 x 6 x 6 | 1 x 12 | 12 x 48 |
| LEVEL 2: POOLING | 12 x 48 | N/A | N/A | 12 x 24 |
| LEVEL 3: CONVOLUTION ( kernels; ) | 12 x 24 | 18 x 12 x 6 | 1 x 18 | 18 x 19 |
| LEVEL 3: POOLING | 18 x 19 | N/A | N/A | 18 x 9 |
| LEVEL 4: CONVOLUTION ( kernels; ) | 18 x 9 | 24 x 18 x 6 | 1 x 24 | 24 x 4 |
| LEVEL 4: POOLING | 24 x 4 | N/A | N/A | 24 x 2 |
| FLATTENING OF FEATURES | 24 x 2 | N/A | N/A | 1 x 48 |
| CLASSIFICATION OF FEATURES | | | | |
| DENSE ( class neurons to 48-dimensional latent space) | 1 x 48 | 3 x 48 | 1 x 3 | 1 x 3 |
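The dimensions in Table 2 can be reproduced with a short shape trace. The sketch below is only illustrative: it assumes stride-1 convolutions without padding and non-overlapping pooling windows of size 2 that drop a trailing odd sample, which is consistent with the tabulated lengths but is not stated explicitly in the table. The function name `trace_network` and the constants are hypothetical.

```python
KERNEL_SIZE = 6                      # K, read from the weight dimensions in Table 2
KERNELS_PER_LEVEL = (6, 12, 18, 24)  # number of kernels at levels 1 to 4
NUM_BANDS = 112                      # length of the input spectral signature
NUM_CLASSES = 3                      # neurons in the dense layer
POOL_SIZE = 2                        # assumption: non-overlapping windows of 2


def trace_network(num_bands=NUM_BANDS):
    """Return per-level (conv, pool) shapes, the flattened length, and the parameter count."""
    channels, length, params, shapes = 1, num_bands, 0, []
    for kernels in KERNELS_PER_LEVEL:
        conv_length = length - KERNEL_SIZE + 1   # stride 1, no padding
        pool_length = conv_length // POOL_SIZE   # a trailing odd sample is dropped
        params += kernels * channels * KERNEL_SIZE + kernels  # weights + biases
        shapes.append(((kernels, conv_length), (kernels, pool_length)))
        channels, length = kernels, pool_length
    flat = channels * length
    params += NUM_CLASSES * flat + NUM_CLASSES   # dense weights + biases
    return shapes, flat, params


shapes, flat, params = trace_network()
for level, (conv_shape, pool_shape) in enumerate(shapes, start=1):
    print(f"level {level}: convolution {conv_shape}, pooling {pool_shape}")
print(f"flattened features: {flat}, trainable parameters: {params}")
```

With these assumptions, the trace yields the same output dimensions as the table, a 48-dimensional latent space, and 4563 trainable parameters in total.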

Table 3. Classification of segmented images based on confidence probability.

| SEGMENTED IMAGES | PROBABILITY | CATEGORICAL PREDICTION |
|---|---|---|
| Figure 6 (b) | (1.00, 0.00) | no mispointing |
| Figure 9 (b) | (0.00, 1.00) | mispointing |
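The mapping from probability pairs to categorical predictions in Table 3 can be sketched as follows. This is a minimal illustration that assumes the prediction is the class with the highest probability, and that the first entry of each pair corresponds to "no mispointing" and the second to "mispointing" (an ordering inferred from the two rows of the table). The names `LABELS` and `categorical_prediction` are hypothetical.

```python
LABELS = ("no mispointing", "mispointing")  # class order inferred from Table 3


def categorical_prediction(probabilities):
    """Return the label with the highest probability (argmax rule, an assumption)."""
    if len(probabilities) != len(LABELS):
        raise ValueError("expected one probability per class")
    best = max(range(len(LABELS)), key=lambda i: probabilities[i])
    return LABELS[best]


# The two probability pairs reported in Table 3.
print(categorical_prediction((1.00, 0.00)))
print(categorical_prediction((0.00, 1.00)))
```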