Introduction

In recent years, advances in artificial intelligence (AI) have transformed many fields, and medical imaging is one notable application [1,2,3,4,5,6]. AI holds significant potential to reshape medical imaging because it can automate numerous tasks and, in specific areas, even surpass human performance in both diagnostic and interventional applications [7]. For example, the integration of computer-aided diagnosis (CAD) into mammography for automated AI-based breast cancer screening has demonstrated high efficiency and accuracy in detecting suspicious and moderate-risk lesions, reducing turnaround times and workload-related radiologist burnout [8]. AI has also shown strong capability not only in improving automated analysis and data interpretation for computed tomography angiography, but also in performing robot-assisted percutaneous coronary interventions [9].

Integrating AI with ultrasound (US) imaging is particularly compelling. Unlike other imaging modalities, US relies heavily on human operators [10,11]. This dependence on human expertise presents unique challenges, especially with the growing use of portable US devices. These devices are increasingly employed by a diverse range of healthcare providers, including non-radiologists, who may have varying levels of training and experience [12,13]. AI algorithms offer a powerful way to mitigate the challenges associated with operator dependency in US imaging. These algorithms can play a crucial role in the automated detection of anomalies and significant findings, providing not only descriptive analysis but also valuable diagnostic guidance [14,15]. This capability is particularly beneficial for less experienced operators and in regions with limited medical resources, where expert radiologists are not readily available. The integration of AI can lead to more accurate and efficient diagnostic processes, reducing the likelihood of human error and improving patient outcomes. These benefits pave the way for the possible integration of ChatGPT in US imaging.

ChatGPT is an advanced AI natural language processing model developed by OpenAI and designed to comprehend and generate human-like text responses [19]. Having been extensively trained on a diverse corpus of data, ChatGPT can grasp context, acquire knowledge from examples, and produce cohesive responses [20]. Consequently, it has evolved into a versatile tool applicable to a wide array of uses, including healthcare and medical imaging [21,22,23,24,25,26,27]. In healthcare, its capacity to process and interpret vast amounts of information can support medical diagnostics, patient communication, and research. The latest version, GPT-4, extends these capabilities to multimodal interactions, including image processing and potential capabilities in audio and video formats [28,29]. This enhancement is especially beneficial in healthcare, where it can analyze medical imagery, assist in creating educational materials, and offer visually descriptive assistance in patient care. By integrating advanced image analysis and generation, GPT-4 stands poised to transform how AI supports healthcare professionals, offering tools for more accurate diagnoses, treatment planning, and patient engagement through rich, interactive media.

Within this context, we assess the potential applications of ChatGPT in US imaging, specifically through examples of using GPT-4 for quantitative image analysis. These examples include techniques like grayscale texture analysis, which allows for intricate tissue characterization, and the precise assessment of perfusion metrics for contrast-enhanced ultrasound (CEUS). This exploration sheds light on how GPT-4 can be instrumental in research endeavors and future clinical applications by augmenting the accuracy of quantitative image analysis. To the best of our knowledge, the application of GPT-4 in image processing and analysis is yet to be explored. Additionally, we highlight instances where ChatGPT demonstrates its capability to enhance workflow efficiencies, notably in report generation. Intriguingly, it also shows potential in providing real-time image guidance.

Technical Overview of ChatGPT

ChatGPT, developed by OpenAI [30], is a conversational interface built on an advanced Large Language Model (LLM) known as a “Generative Pretrained Transformer” or GPT. It relies on deep learning algorithms and artificial neural networks that are primarily pre-trained in an “unsupervised” manner, that is, on unlabeled text without human annotation [31,32]. It is based on the transformer model architecture, comprising multiple layers of interconnected transformer blocks with self-attention mechanisms and feed-forward neural networks. This architecture makes it highly versatile for natural language processing tasks such as machine translation, language modeling, and text classification [33,34].
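To make the self-attention mechanism mentioned above concrete, the following is a minimal sketch of single-head scaled dot-product self-attention in NumPy. It is an illustration under stated assumptions, not OpenAI's implementation: the function names, the random projection matrices `w_q`, `w_k`, `w_v`, and the toy dimensions are all hypothetical. GPT-style models apply a causal mask so that each token attends only to itself and earlier tokens, which the `causal` flag reproduces.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def self_attention(x, w_q, w_k, w_v, causal=True):
    """Single-head scaled dot-product self-attention.

    x: (n_tokens, d_model) token embeddings.
    w_q, w_k, w_v: (d_model, d_head) projection matrices (hypothetical weights).
    Returns the attended values and the attention weight matrix.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    # Similarity of every query with every key, scaled by sqrt(d_head).
    scores = q @ k.T / np.sqrt(k.shape[-1])
    if causal:
        # Mask out future positions so token i only sees tokens 0..i.
        n = scores.shape[0]
        future = np.triu(np.ones((n, n), dtype=bool), k=1)
        scores = np.where(future, -np.inf, scores)
    weights = softmax(scores, axis=-1)
    return weights @ v, weights


# Toy example: 4 tokens with 8-dimensional embeddings and random weights.
rng = np.random.default_rng(0)
tokens = rng.normal(size=(4, 8))
w_q, w_k, w_v = (rng.normal(size=(8, 8)) for _ in range(3))
output, weights = self_attention(tokens, w_q, w_k, w_v)
```

Each row of `weights` sums to one and describes how strongly a token draws on the tokens before it; a full transformer block would follow this step with a feed-forward network and stack many such blocks.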

ChatGPT undergoes extensive pre-training on diverse datasets, including articles, books, and web pages, enabling it to understand language across various contexts. It can be fine-tuned for specific tasks, making it adaptable to a wide range of applications. As a user-friendly conversational AI model, ChatGPT interacts with users through a chat box, providing relevant responses based on user inputs. Unlike traditional search engines, it generates contextually aligned responses in a conversational manner, which results in more meaningful interactions. It offers specific answers and step-by-step guidance, and can even provide scripts and code for image analysis and processing in various programming languages.

GPT-4, the latest iteration of the GPT series, builds upon the success of its predecessors, incorporating key innovations to enhance its performance and versatility. One of the standout features of GPT-4 is its multimodal capability, which means it can process not only text but also images as input [26,27]. It represents a significant advancement in natural language processing and a substantial departure from earlier GPT models, which primarily focused on text-based tasks. GPT-4’s multimodal capabilities and improved performance make it a powerful tool for various applications. It can handle a wide range of tasks, from natural language understanding and generation to image processing and interpretation. As a result, GPT-4 has the potential to advance the field of AI and enable more sophisticated human-computer interaction.

ChatGPT Example Applications in Ultrasound Imaging

We anticipate that the use of ChatGPT with US imaging technology has the potential to bring transformative changes to both clinical and research applications. Below are some ways that ChatGPT can contribute to this transformation.

Examples for Using GPT-4 for Quantitative Image Analysis

GPT-4 marks a significant evolution in AI, surpassing its predecessors with multimodal capabilities and enhanced performance. This is not a minor upgrade but a major shift from the primarily text-centric focus of earlier GPT models. Its expansion into image processing and interpretation opens new possibilities for quantitative image analysis [35]. Beyond its technical capabilities, GPT-4 stands out for its accessibility and user-friendly interface. It responds effectively to simple prompts and queries, making advanced AI functions more approachable for a broader audience. To our knowledge, ChatGPT has not yet been evaluated for quantitative image analysis, an application that could be valuable for research projects and for future clinical use.

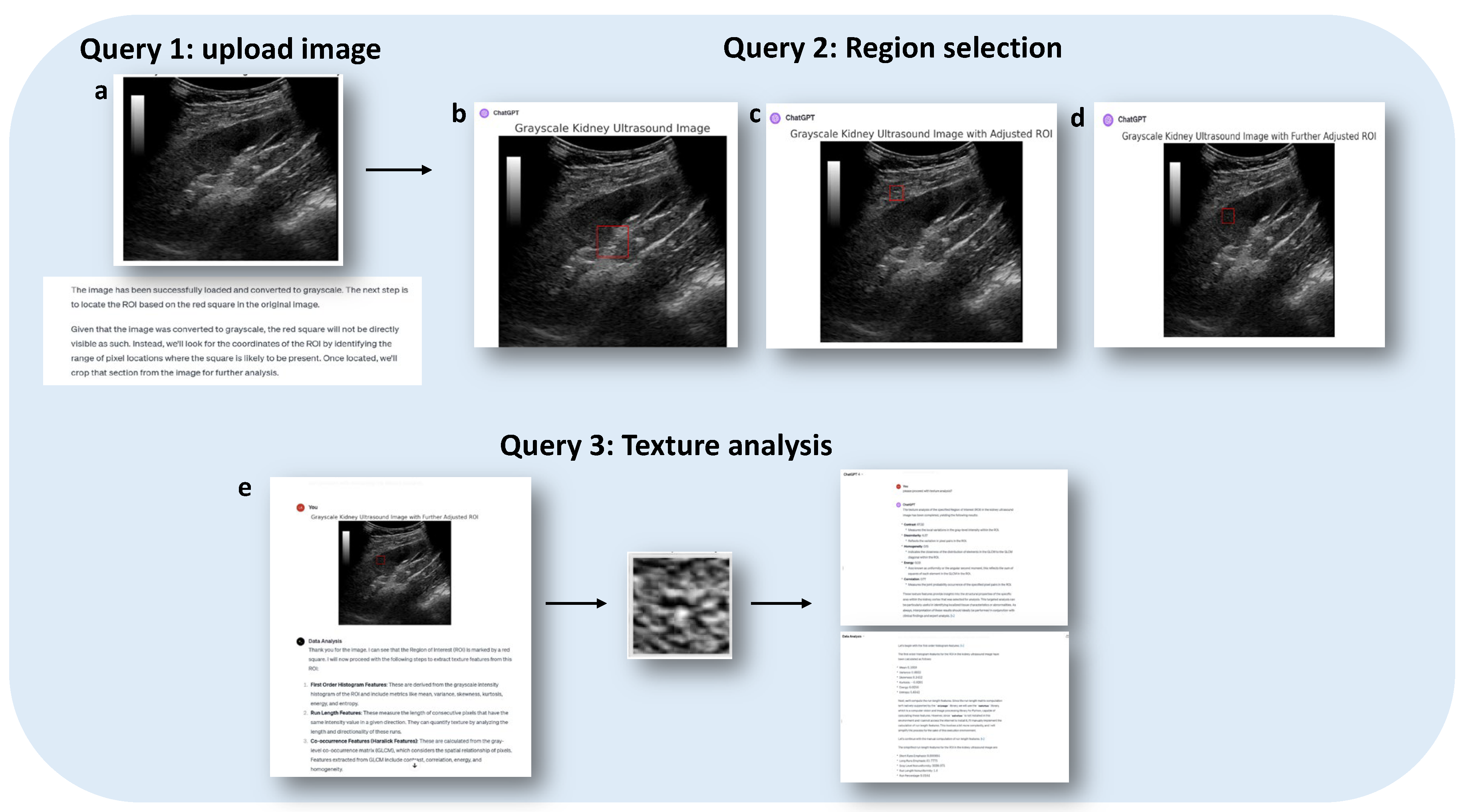

Example 1: Grayscale Texture Analysis Based on B-Mode Ultrasound Images

The field of texture image analysis has shown progress, but available tools are not always open source or in a finalized, validated form [36]. To address this, we sought help from ChatGPT for performing texture analysis on a renal US image (Figure 1). We began our process by uploading an image to GPT-4 and submitting a request for texture analysis of the renal cortex region. The initial challenge involved correctly placing the Region of Interest (ROI) in the cortex. This required several iterations, with multiple queries to accurately position the ROI (Figure 1b).

Once the ROI was correctly situated in the renal cortex, we asked GPT-4 to perform the texture analysis and extract relevant features representing the tissue texture. This analysis yielded several key features, such as contrast, homogeneity, energy, and correlation (Figure 1c). These features are important because they describe the inter-pixel relationships within the image and therefore reflect changes in tissue texture, which previous studies have shown to be significant [37,38].
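The four features named above are commonly derived from a gray-level co-occurrence matrix (GLCM). The sketch below shows one way such features can be computed for a rectangular ROI with NumPy alone. It is an assumption for illustration: the source does not state which algorithm or code GPT-4 actually ran, and the function name `glcm_features`, the 16 gray levels, the one-pixel horizontal offset, and the synthetic speckle-like ROI are all hypothetical choices. Energy is defined here as the square root of the angular second moment.

```python
import numpy as np


def glcm_features(roi, levels=16, dx=1, dy=0):
    """Contrast, homogeneity, energy and correlation from a symmetric GLCM.

    roi: 2-D array of gray values (the region of interest).
    levels: number of gray levels after quantization (assumed value).
    dx, dy: non-negative pixel offset defining the neighbour relation.
    """
    roi = np.asarray(roi, dtype=np.float64)
    lo, hi = roi.min(), roi.max()
    if hi > lo:
        # Rescale to integer gray levels 0 .. levels - 1.
        q = np.floor((roi - lo) / (hi - lo) * (levels - 1) + 0.5).astype(int)
    else:
        q = np.zeros(roi.shape, dtype=int)

    rows, cols = q.shape
    ref = q[0:rows - dy, 0:cols - dx]   # reference pixels
    nbr = q[dy:rows, dx:cols]           # neighbours at offset (dy, dx)

    glcm = np.zeros((levels, levels), dtype=np.float64)
    np.add.at(glcm, (ref.ravel(), nbr.ravel()), 1.0)
    glcm = glcm + glcm.T                # count each pair in both directions
    p = glcm / glcm.sum()               # joint probability of gray-level pairs

    i, j = np.indices(p.shape)
    mu_i, mu_j = np.sum(i * p), np.sum(j * p)
    sd_i = np.sqrt(np.sum(p * (i - mu_i) ** 2))
    sd_j = np.sqrt(np.sum(p * (j - mu_j) ** 2))
    if sd_i > 0 and sd_j > 0:
        correlation = np.sum(p * (i - mu_i) * (j - mu_j)) / (sd_i * sd_j)
    else:
        correlation = 1.0               # perfectly uniform region

    return {
        "contrast": float(np.sum(p * (i - j) ** 2)),
        "homogeneity": float(np.sum(p / (1.0 + (i - j) ** 2))),
        "energy": float(np.sqrt(np.sum(p ** 2))),
        "correlation": float(correlation),
    }


# Synthetic speckle-like ROI standing in for a renal cortex patch.
rng = np.random.default_rng(0)
demo_roi = rng.rayleigh(scale=30.0, size=(32, 32))
demo_features = glcm_features(demo_roi)
```

In practice the ROI would be cropped from the B-mode image at the coordinates agreed with the model, and features are often averaged over several offsets and directions to reduce orientation dependence.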

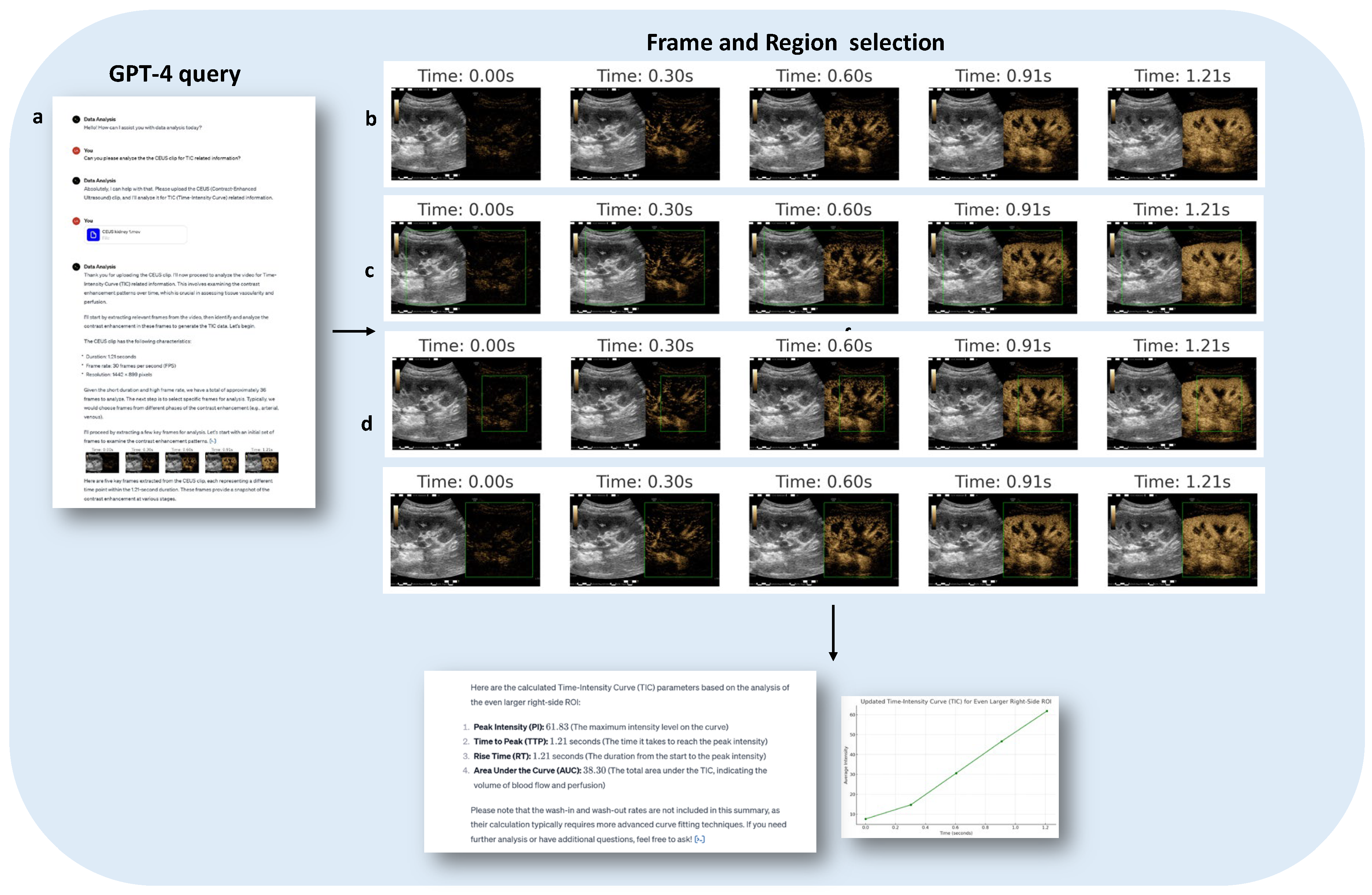

Example 2: Time Intensity Curve Analysis for Contrast Enhanced Ultrasound

The use of Contrast Enhanced Ultrasound (CEUS) has been increasing due to its numerous benefits, yet it requires quantitative analysis to interpret tissue perfusion accurately [39,40]. Currently, such quantitative tools are often unavailable or inaccessible, prompting the exploration of alternative solutions. Using ChatGPT to analyze CEUS dynamics could provide a user-friendly option for immediate analysis during imaging sessions. In this study, we assessed GPT-4’s ability to conduct Time-Intensity Curve (TIC) analysis on kidney CEUS images, as demonstrated in Figure 2. The process began with uploading the ultrasound clip, followed by a request to ChatGPT for TIC analysis. However, we encountered difficulties in accurately placing the Region of Interest (ROI), necessitating multiple queries to adjust the ROI appropriately. Once the correct ROI was confirmed with GPT-4, the analysis proceeded, successfully yielding the curve and quantitative perfusion parameters indicative of renal enhancement with contrast. A further challenge was file size: attempts to upload larger files failed because of limits on the amount of data the system could handle. We therefore had to use a shortened clip that does not show wash-out information. Still, this method holds promise for making CEUS analysis more accessible and efficient.

Figure 2.

Time-Intensity Curve Analysis for Quantitative Contrast Enhanced Ultrasound of Thigh Muscles Using ChatGPT. This figure illustrates the application of GPT-4 in analyzing Contrast Enhanced Ultrasound (CEUS) data from thigh muscles. The process initiates with the upload of an ultrasound clip (a), followed by a request to ChatGPT for Time-Intensity Curve (TIC) analysis. (b-c) The figure highlights the challenges faced in accurately defining the Region of Interest (ROI) and the iterative process of adjusting it through multiple queries. Once the correct ROI is established, GPT-4 processes the data to produce the TIC, effectively demonstrating muscle perfusion parameters post-contrast enhancement.
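The TIC workflow described here can be sketched as follows: average the pixel intensity inside the ROI for every frame, subtract a pre-contrast baseline, and read perfusion parameters off the resulting curve. This is a minimal illustration under assumptions, not the code GPT-4 produced. The source does not list which perfusion parameters were reported, so peak enhancement, time to peak, wash-in slope, and area under the curve are shown as commonly used examples; the function names, the 10 Hz frame rate, and the synthetic clip are hypothetical.

```python
import numpy as np


def time_intensity_curve(frames, roi_mask, frame_rate_hz):
    """Mean ROI intensity per frame.

    frames: (n_frames, height, width) array of CEUS intensities.
    roi_mask: (height, width) boolean mask marking the ROI.
    Returns the time axis in seconds and the TIC.
    """
    frames = np.asarray(frames, dtype=np.float64)
    tic = frames[:, roi_mask].mean(axis=1)
    t = np.arange(frames.shape[0]) / frame_rate_hz
    return t, tic


def perfusion_parameters(t, tic, n_baseline=3):
    """Simple descriptive parameters from a baseline-corrected TIC."""
    baseline = tic[:n_baseline].mean()      # pre-contrast signal level
    enhanced = tic - baseline
    peak_idx = int(np.argmax(enhanced))
    slope = np.gradient(enhanced, t)        # intensity change per second
    # Trapezoidal area under the baseline-corrected curve.
    auc = np.sum((enhanced[1:] + enhanced[:-1]) / 2.0 * np.diff(t))
    return {
        "peak_enhancement": float(enhanced[peak_idx]),
        "time_to_peak_s": float(t[peak_idx] - t[0]),
        "wash_in_slope": float(slope[: peak_idx + 1].max()),
        "auc": float(auc),
    }


# Synthetic clip: linear wash-in over 1 s followed by a plateau (no wash-out).
signal = np.minimum(np.arange(20), 10) * 5.0
frames = signal[:, None, None] * np.ones((1, 8, 8))
roi = np.zeros((8, 8), dtype=bool)
roi[2:6, 2:6] = True

t, tic = time_intensity_curve(frames, roi, frame_rate_hz=10.0)
params = perfusion_parameters(t, tic, n_baseline=1)
```

Because the synthetic clip, like the shortened clip used here, ends before wash-out, parameters such as wash-out rate or mean transit time cannot be estimated from it.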


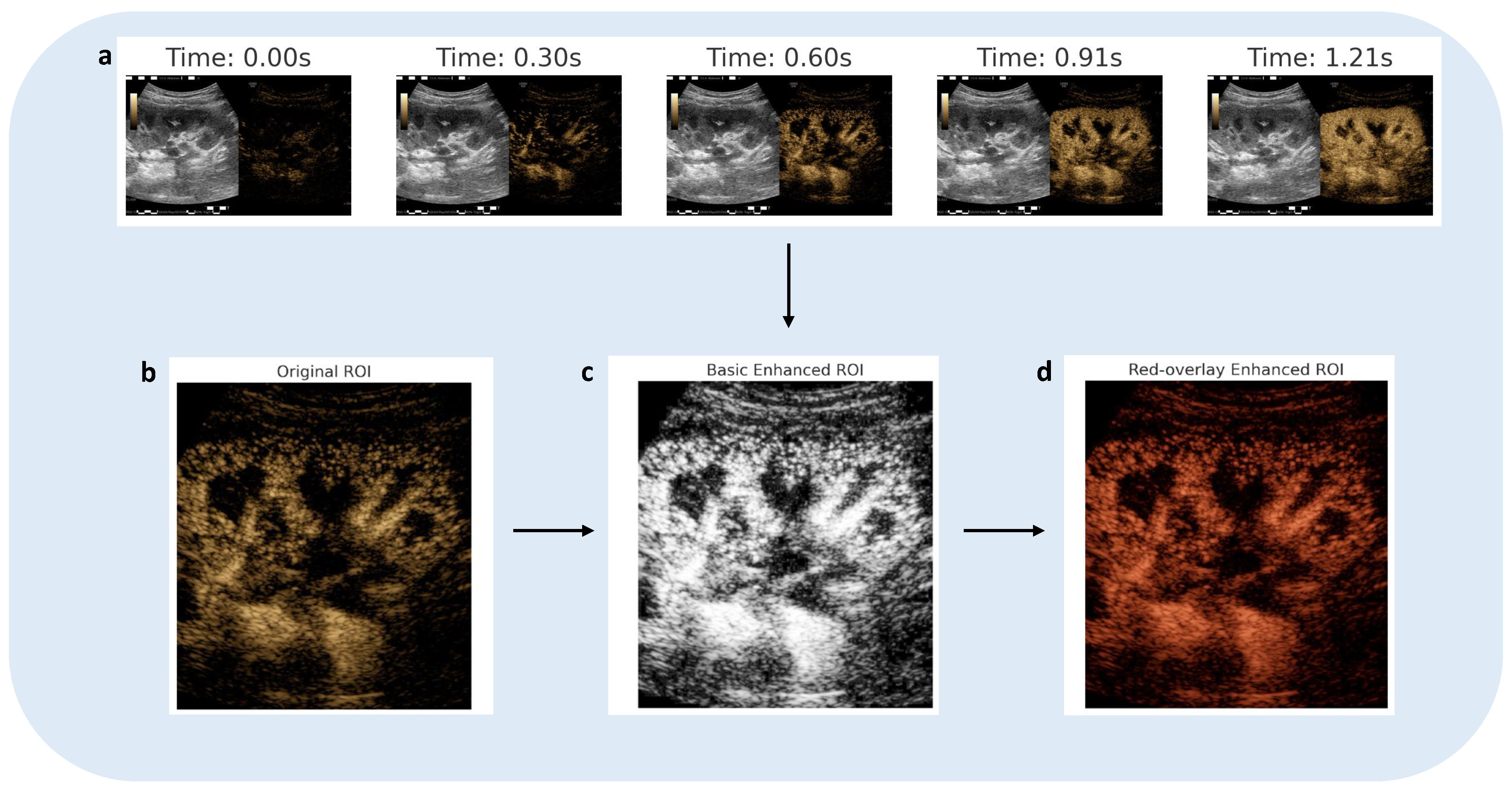

Example 3: Generating Super Resolution Images Based on Vascular Enhancement of CEUS

The development of super-resolution and enhanced imaging using CEUS is a rapidly advancing field, showing significant promise for the detailed examination of tissue microvasculature [41]. In our study, we used the same kidney CEUS data examined previously to evaluate GPT-4’s ability to generate super-resolution images from basic vascular enhancement (Figure 3). GPT-4 produced these images efficiently. However, it faced challenges with more complex tasks such as particle tracking, primarily due to memory allocation issues. These difficulties likely arose from the substantial processing demands of larger, enhanced images. This highlights a current limitation of the computational environment, where the available memory may not be adequate for high-resolution image processing tasks that require multiple enhancement techniques.

Figure 3.

In this illustration, we demonstrate the process of generating super-resolution images through vascular enhancement of Contrast-Enhanced Ultrasound (CEUS). a) Depicts the original CEUS images of the kidney. b) Displays the selected Region of Interest (ROI) chosen for further processing. c) and d) Showcase the processed images, processed using vascular enhancement techniques.
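The source does not specify which vascular enhancement technique GPT-4 applied, so the following is only a hedged sketch of one simple and memory-light possibility: subtract the temporal median as a static-tissue background, keep the positive residual (moving contrast signal), and accumulate it with a maximum-intensity projection over time. The function name `vascular_enhancement_map`, the median background model, and the nearest-neighbour upsampling factor are assumptions. This is not true super-resolution, which requires localizing and tracking individual microbubbles, the step that ran into memory limits here.

```python
import numpy as np


def vascular_enhancement_map(frames, upsample=2):
    """Accumulate moving contrast signal into a single enhancement map.

    frames: (n_frames, height, width) CEUS clip, cropped to the ROI.
    upsample: integer factor for nearest-neighbour enlargement (display only).
    """
    frames = np.asarray(frames, dtype=np.float64)
    # Static tissue changes little over time, so the temporal median
    # approximates the background seen at each pixel.
    background = np.median(frames, axis=0)
    moving = np.clip(frames - background, 0.0, None)
    # Maximum-intensity projection traces the paths taken by the contrast agent.
    mip = moving.max(axis=0)
    if mip.max() > 0:
        mip = mip / mip.max()               # normalize to the range 0 .. 1
    # Enlarge each pixel into an upsample x upsample block.
    return np.kron(mip, np.ones((upsample, upsample)))


# Synthetic clip: one bright "bubble" travelling along row 3 of a 6 x 6 ROI.
clip = np.zeros((5, 6, 6))
for frame_idx in range(5):
    clip[frame_idx, 3, frame_idx] = 1.0

enhanced = vascular_enhancement_map(clip, upsample=2)
```

The projection keeps memory use at a single frame-sized array regardless of clip length, which may matter in an environment with the constraints described above.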


Examples for Using ChatGPT for Improving Workflow

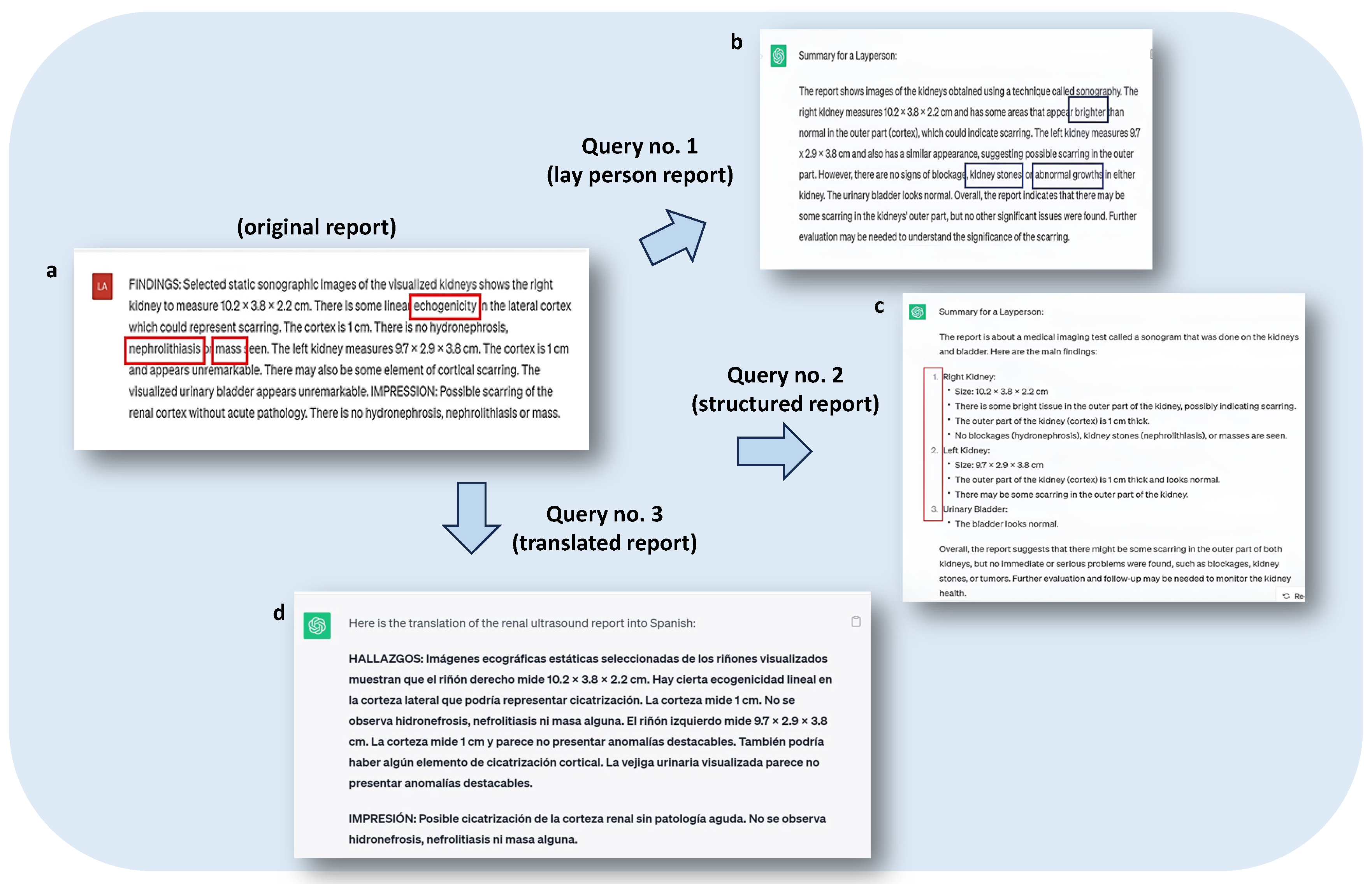

Example 1: Simplifying Report for Better Patient Communication

The integration of ChatGPT in the US imaging reporting system can facilitate better communication between healthcare practitioners and patients. ChatGPT can be utilized to enhance report accuracy and efficiency. It can be employed as a ‘peer-reviewer’ for the initial radiology report to identify potential discrepancies or errors. This can enhance the overall quality of radiology reports and reduce the chances of misdiagnosis. The model can also be used to generate simplified explanations of US findings, enabling healthcare professionals to convey complex medical information in a more accessible manner. It can describe the presence of pathology or the location of an abnormality in simple terms. Tailored layperson reports in multiple languages and education levels, facilitated by ChatGPT, empower patients with relevant health information. This promotes mutual understanding with healthcare providers, enhancing communication, reducing anxiety, and fostering patient-centered care [42,43,44].

In this context, we asked ChatGPT to convert a US report acquired from a public repository [45] into a simpler form. Specifically, we asked ChatGPT to summarize and simplify the US report in layman’s terms (Figure 4a). The resulting report used simple, descriptive wording, was easy to understand, and was judged accurate by our radiologist. We then asked ChatGPT to generate a structured report (Figure 4b) and to translate the report into Spanish to evaluate its accuracy in other languages. The authors with knowledge of Spanish confirmed the high accuracy of the translation (Figure 4c).

Figure 4.

This figure illustrates the interactions with ChatGPT where we tasked the model with the re-writing of a renal ultrasound report (a) into a more suitable format for a layperson’s understanding. b) The first query to ChatGPT resulted in the generation of a concise and simplified version of the original report. The second query (c) involved ChatGPT restructuring the report into a series of bullet points for enhanced clarity and readability. We further challenged ChatGPT to perform translations into Spanish (d) and checked its accuracy.


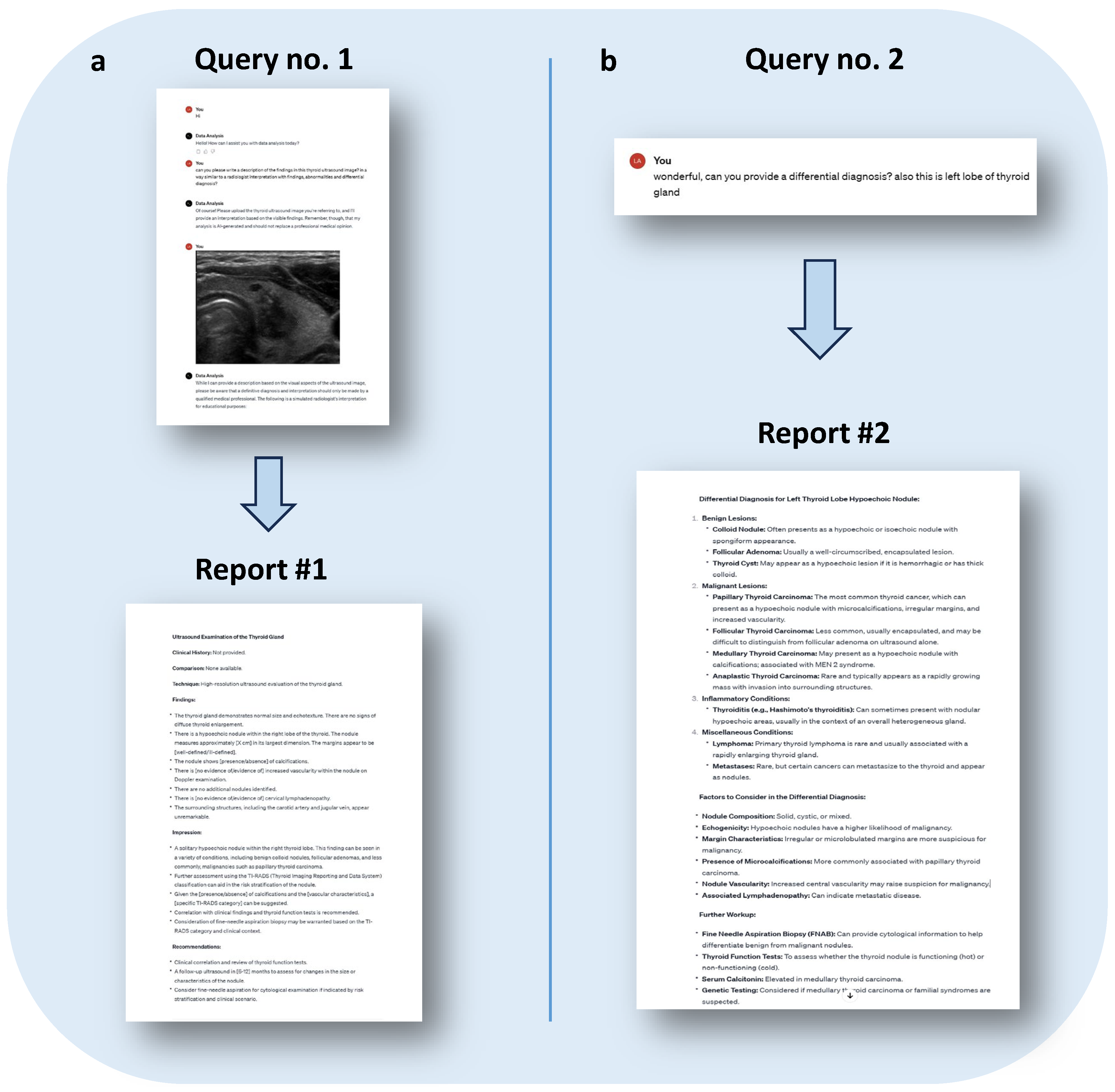

Example 2: Image Interpretation and Diagnosis Report Generation

We evaluated GPT-4’s capability to generate diagnostic reports from US images, aiming to aid radiologists, especially those with less experience. We concentrated on GPT-4’s skill in producing a comprehensive report from a thyroid B-mode US image. We input a grayscale image of a malignant thyroid tumor into GPT-4 for analysis. Our request was for GPT-4 to create a report resembling a radiologist’s interpretation, encompassing descriptions of findings, abnormalities, and differential diagnoses (Figure 5). The report generated by GPT-4 was well-structured and informative (Figure 5b). It commenced with an overview of the findings, specifically identifying a homogeneous echotexture area in the thyroid. It accurately detected a hypoechoic lesion within the thyroid gland, although it erroneously located it on the right side. The report also included comprehensive observations on the surrounding vasculature and noted other potential abnormalities. Additionally, when prompted for a differential diagnosis, GPT-4 provided a range of possible diagnoses for the hypoechoic lesion, including various tumor types (Figure 5d). Furthermore, it proposed a follow-up plan for patient management, enhancing the report’s practical utility.

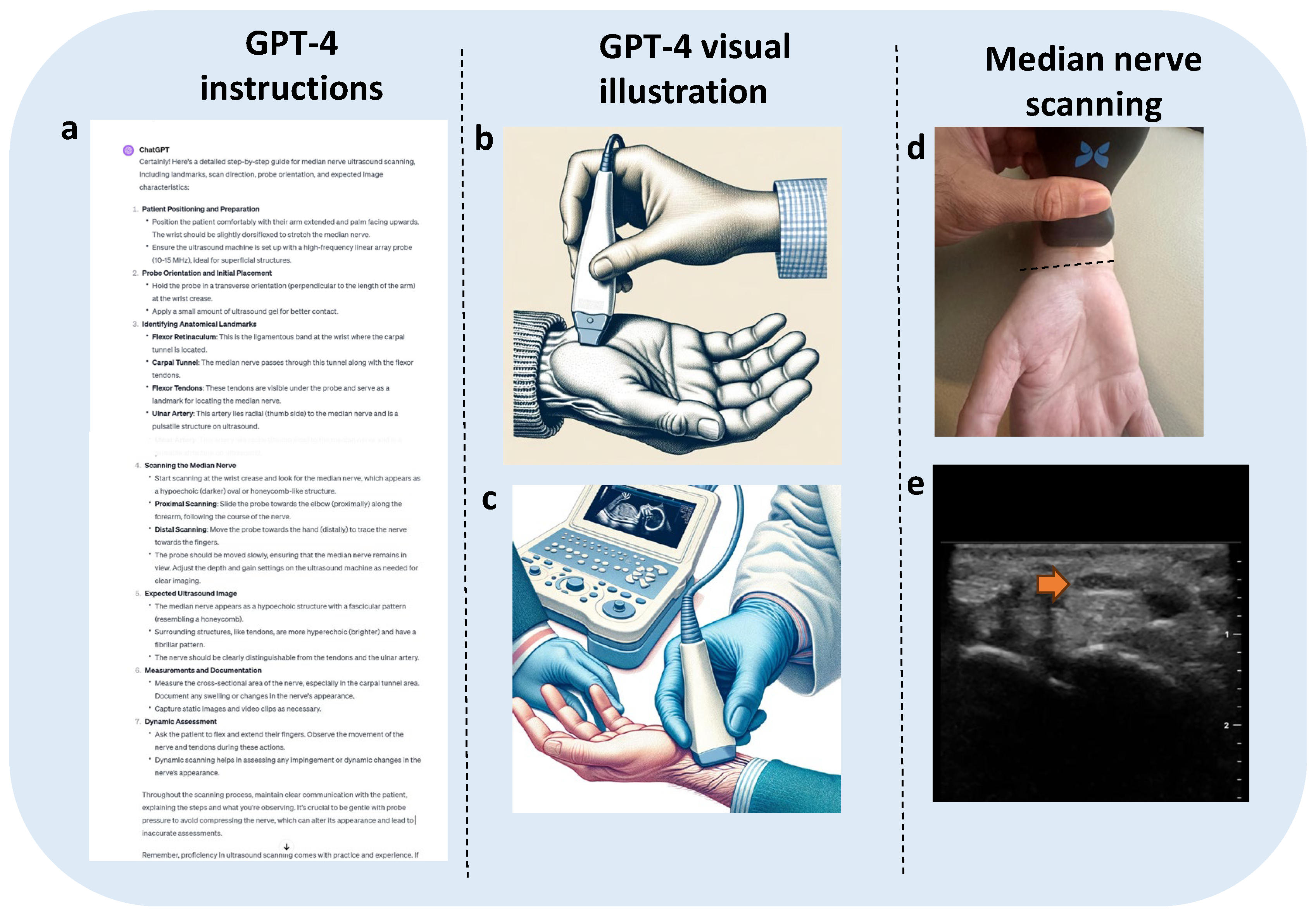

Example 3: ChatGPT Provides Real-Time US Imaging Guidance

The utilization of US technology is on the rise, with the advent of portable US devices that are now being employed worldwide. This expansion in usage extends beyond traditional users like radiologists and sonographers, encompassing a broader spectrum of healthcare providers and trainees from fields such as internal medicine and emergency medicine and even by non-physicians. In this evolving landscape, ChatGPT can serve as a valuable resource, offering real-time guidance to those conducting US imaging. The significance of ChatGPT’s virtual assistance becomes especially pronounced when considering less experienced practitioners who may find real-time support and direction immensely beneficial. This assistance is particularly valuable in situations where US is employed as a portable imaging tool, operated by individuals with varying levels of training and expertise. In the context of complex US procedures, especially in regions with limited expertise or resources, a trainee, for instance, can turn to ChatGPT for guidance during imaging sessions. By interpreting ChatGPT’s instructions and responding to its queries, users can navigate the US probe with precision towards specific target areas, such as tumors or regions of interest. ChatGPT’s contribution lies in providing step-by-step guidance, immediate feedback, precise instructions, and valuable recommendations to healthcare practitioners in real-time. Through ChatGPT’s assistance, practitioners can optimize their probe’s position, angle, and depth, ensuring accurate visualization of the intended target and facilitating precision during procedures. Additionally, in situations involving challenging cases characterized by anatomical variations or hard-to-visualize structures, ChatGPT can suggest alternative strategies to optimize the procedure’s chances of success.

In this experiment, we assessed the feasibility and effectiveness of using ChatGPT as a real-time guidance tool during US scanning procedures. Specifically, we investigated whether ChatGPT could assist in tasks such as suggesting optimal transducer positioning and orientation and identifying critical anatomical landmarks for accurate scanning. We focused on ChatGPT’s real-time guidance for US scanning of the median nerve (Figure 6). US assessment of the median nerve is frequently required for the evaluation of conditions like carpal tunnel syndrome. Although this procedure is commonly performed by medical practitioners, those with limited experience in this specific area may greatly benefit from the precise and tailored instructions provided by ChatGPT. To carry out our experiment, a junior physician researcher with limited experience in US imaging performed the scan. Our US device of choice was the portable Butterfly IQ, which offers dedicated nerve imaging presets. We asked ChatGPT to provide instructions covering anatomical landmarks and specific guidance for the scanning process. In addition to written instructions, ChatGPT provided illustrations showing the transducer orientation and scanning position (Figure 6b,c); however, it did not include a step-by-step illustration of the scanning process. Following the instructions provided by ChatGPT significantly improved our ability to locate crucial landmarks and acquire high-quality images of the median nerve (as depicted in Figure 6d,e). This outcome highlights the potential of ChatGPT as a valuable real-time assistant in US scanning, particularly in scenarios where precise guidance and image optimization are paramount.

Challenges and Considerations for Implementing ChatGPT in Practice

While the integration of ChatGPT with US imaging presents promising opportunities, certain challenges and ethical considerations must be addressed to ensure its responsible use. One of the foremost concerns lies in the need for rigorous data privacy measures. Given that ChatGPT might require access to a substantial volume of patient data, including sensitive information like US images and associated medical records, robust security protocols are non-negotiable. Implementing these measures not only safeguards patient confidentiality but also ensures compliance with data protection regulations governing the healthcare sector. In this preliminary study, we used data that were either acquired from public repositories or image data processed for anonymization with no patient information.

Equally crucial is the aspect of patient consent. Transparency in the use of ChatGPT for US imaging is important. Obtaining informed consent from patients is not only an ethical requirement but becomes particularly nuanced when considering the potential implementation of ChatGPT in generating medical reports. Whether the information is fed anonymously or with explicit patient consent, clarity and openness regarding data usage, the technology’s limitations, and potential risks are imperative. This transparency is pivotal for fostering a relationship of trust between patients, healthcare providers, and technology itself.

Another constraint pertains to the technical challenges we have faced, primarily concerning aspects such as file size, image format compatibility, and limitations associated with available memory. Furthermore, there are technical hurdles in terms of analysis techniques, notably the absence of a manual tool for region-of-interest (ROI) placement. Nonetheless, the utilization of GPT-4 as an analytical tool holds great promise for the future.

Conclusions

The integration of ChatGPT with medical imaging, particularly ultrasound (US) imaging, holds potential for enhancing both clinical workflows and research initiatives. ChatGPT can serve as a valuable resource for image processing and analysis. This integration also promises to boost efficiency through an enhanced reporting system and real-time guidance, and to improve communication with patients in clinical settings. In this study, we explored examples of potential applications of ChatGPT and highlighted the current limitations and challenges of implementing it in the field. While it remains difficult to pinpoint an exact timeline, advances in medical imaging and natural language processing, combined with ongoing research, suggest that intelligent image interpretation using models like ChatGPT could become increasingly viable in the near future.

Author Contributions:

Conceptualization: LRS, MKHM, SA, Methodology: LRS, MKHM, SA, Investigation: LRS, MKHM, SSBV, SA, Resources: LRS, BV, SA, writing review and editing: LRS, MKHM, PR, TMT, BV, SA, Supervision: LRS, BV, SA. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding

Institutional Review Board Statement

Not applicable

Informed Consent Statement

Not applicable

Data Availability Statement

No new data were analyzed in this study. Data sharing is not applicable to this article

Conflicts of Interest

The authors declare no conflict of interest

References

- Oren, O.; Gersh, B.J.; Bhatt, D.L. Artificial intelligence in medical imaging: switching from radiographic pathological data to clinically meaningful endpoints. Lancet Digital Health. 2020, 2, e486–e488. [Google Scholar] [CrossRef] [PubMed]

- Kim, H.E.; Kim, H.H.; Han, B.K.; Ghafoorian, M.; Tan, T.; Andriessen, T.; Vyvere, T.V.; Hauwe, L.v.D.; Romeny, B.t.H.; Goraj, B.; et al. Changes in cancer detection and false-positive recall in mammography using artificial intelligence: a retrospective, multireader study. Lancet Digital Health 2020, 2, e138–48. [Google Scholar] [CrossRef]

- van den Heuvel, T.L.; van der Eerden, A.W.; Manniesing, R.; et al. Automated detection of cerebral microbleeds in patients with traumatic brain injury. Neuroimage Clin. 2016, 12, 241–251. [Google Scholar] [CrossRef]

- Becker, A.S.; Marcon, M.; Ghafoor, S.; Wurnig, M.C.; Frauenfelder, T.; Boss, A. Deep learning in mammography: diagnostic accuracy of a multipurpose image analysis software in the detection of breast cancer. Invest. Radiol. 2017, 52, 434–440. [Google Scholar] [CrossRef] [PubMed]

- Liu, X.; Faes, L.; Kale, A.U.; et al. A comparison of deep learning performance against health-care professionals in detecting diseases from medical imaging: a systematic review and meta-analysis. Lancet Digital Health 2019, 1, e271–97. [Google Scholar] [CrossRef]

- Bello, G.A.; Dawes, T.J.W.; Duan, J.; Wagner, S.K.; Fu, D.J.; Bruynseels, A.; Mahendiran, T.; Moraes, G.; Shamdas, M.; Kern, C.; et al. Deep learning cardiac motion analysis for human survival prediction. Nat. Mach. Intell. 2019, 1, 95–104. [Google Scholar] [CrossRef] [PubMed]

- Hosny, A.; Parmar, C.; Quackenbush, J.; Schwartz, L.H.; Aerts, H.J.W.L. Artificial intelligence in radiology. Nat. Rev. Cancer 2018, 18, 500–510. [Google Scholar] [CrossRef]

- Lauritzen, A.D.; Rodríguez-Ruiz, A.; von Euler-Chelpin, M.C.; Lynge, E.; Vejborg, I.; Nielsen, M.; Karssemeijer, N.; Lillholm, M. An Artificial Intelligence-based Mammography Screening Protocol for Breast Cancer: Outcome and Radiologist Workload. Radiology. 2022, 304, 41–49. [Google Scholar] [CrossRef] [PubMed]

- Subhan, S.; Malik, J.; Haq, A.U.; Qadeer, M.S.; Zaidi, S.M.J.; Orooj, F.; Zaman, H.; Mehmoodi, A.; Majeedi, U. Role of Artificial Intelligence and Machine Learning in Interventional Cardiology. Curr. Probl. Cardiol. 2023, 48, 101698. [Google Scholar] [CrossRef] [PubMed]

- Di Serafino, M.; Iacobellis, F.; Schillirò, M.L.; D’auria, D.; Verde, F.; Grimaldi, D.; Dell’Aversano Orabona, G.; Caruso, M.; Sabatino, V.; Rinaldo, C.; et al. Common and Uncommon Errors in Emergency Ultrasound. Diagnostics 2022, 12, 631. [Google Scholar] [CrossRef]

- Pinto, A.; Pinto, F.; Faggian, A.; Rubini, G.; Caranci, F.; Macarini, L.; Genovese, E.A.; Brunese, L.; et al. Sources of error in emergency ultrasonography. Crit. Ultrasound J. 2013, 5 (Suppl. S1), S1. [Google Scholar] [CrossRef] [PubMed]

- Le, M.P.T.; Voigt, L.; Nathanson, R.; Maw, A.M.; Johnson, G.; Dancel, R.; Mathews, B.; Moreira, A.; Sauthoff, H.; Gelabert, C.; et al. Comparison of four handheld point-of-care ultrasound devices by expert users. Ultrasound J. 2022, 14, 27. [Google Scholar] [CrossRef] [PubMed]

- Malik, A.N.; Rowland, J.; Haber, B.D.; Thom, S.; Jackson, B.; Volk, B.; Ehrman, R.R. The Use of Handheld Ultrasound Devices in Emergency Medicine. Curr. Emerg. Hosp. Med. Rep. 2021, 9, 73–81. [Google Scholar] [CrossRef]

- Kim, Y.H. Artificial intelligence in medical ultrasonography: driving on an unpaved road. Ultrasonography. 2021, 40, 313–317. [Google Scholar] [CrossRef] [PubMed]

- Komatsu, M.; Sakai, A.; Dozen, A.; Shozu, K.; Yasutomi, S.; Machino, H.; Asada, K.; Kaneko, S.; Hamamoto, R. Towards Clinical Application of Artificial Intelligence in Ultrasound Imaging. Biomedicines. 2021, 9, 720. [Google Scholar] [CrossRef]

- Shao, D.; Yuan, Y.; Xiang, Y.; Yu, Z.; Liu, P.; Liu, D.C. Artifacts detection-based adaptive filtering to noise reduction of strain imaging. Ultrasonics. 2019, 98, 99–107. [Google Scholar] [CrossRef]

- Wen, T.; Gu, J.; Li, L.; Qin, W.; Wang, L.; Xie, Y. Nonlocal Total-Variation-Based Speckle Filtering for Ultrasound Images. Ultrason. Imaging. 2016, 38, 254–275. [Google Scholar] [CrossRef]

- Zhang, L.; Zhang, J. Ultrasound image denoising using generative adversarial networks with residual dense connectivity and weighted joint loss. PeerJ Comput. Sci. 2022, 8, e873. [Google Scholar] [CrossRef] [PubMed]

- Else, H. Abstracts written by ChatGPT fool scientists. Nature 2023. [CrossRef]

- Dwivedi, Y.K.; Kshetri, N.; Hughes, L.; Slade, E.L.; Jeyaraj, A.; Kar, A.K.; Baabdullah, A.M.; Koohang, A.; Raghavan, V.; Ahuja, M.; et al. Opinion Paper: “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice, and policy. International Journal of Information Management. 2023, 71, 102642. [Google Scholar] [CrossRef]

- Kitamura, F.C. ChatGPT Is Shaping the Future of Medical Writing but Still Requires Human Judgment. Radiology; 0:230171.

- Jeblick, K.; Johnson, L.; Arora, S.; et al. ChatGPT makes medicine easy to swallow: an exploratory case study on simplified radiology reports. arXiv preprint arXiv:2212.14882. [CrossRef]

- Alhasan, K.; Lee, K.; Kumar, A.; Jamal, A.; Al-Eyadhy, A.; Temsah, M.-H.; Al-Tawfiq, J.A.; et al. Mitigating the burden of severe pediatric respiratory viruses in the post-COVID-19 era: ChatGPT insights and recommendations. Cureus 2023, 15. [Google Scholar] [CrossRef]

- Ali, S.R.; Dobbs, T.D.; Hutchings, H.A.; Whitaker, I.S. Using ChatGPT to write patient clinic letters. Lancet Digit. Health 2023, 5, e179–e181. [Google Scholar] [CrossRef] [PubMed]

- Santandreu-Calonge D, Ortiz-Martinez Y, Agusti-Toro A; et al. Can ChatGPT improve communication in hospitals? Prof. De La Inf. 2023, 32. [Google Scholar] [CrossRef]

- Biswas, S.S. Role of ChatGPT in radiology with a focus on pediatric radiology: proof by examples. Pediatr. Radiol. 2023, 53, 818–822. [Google Scholar] [CrossRef] [PubMed]

- Arya S Rao, John Kim, Meghana Kamineni, Michael Pang, Winston Lie, and Marc Succi. Evaluating chatgpt as an adjunct for radiologic decision-making. medRxiv, pages 2023–02, 2023.

- Wiggers, Kyle. “OpenAI releases GPT-4, a multimodal AI that it claims is state-of-the-art”. TechCrunch. Archived from the original on March 15, 2023. Retrieved March 15, 2023.

- Bubeck, Sébastien; Chandrasekaran, Varun; Eldan, Ronen; Gehrke, Johannes; Horvitz, Eric; Kamar, Ece; Lee, Peter; Lee, Yin Tat; Li, Yuanzhi; Lundberg, Scott; Nori, Harsha; Palangi, Hamid; Ribeiro, Marco Tulio; Zhang, Yi (March 22, 2023). “Sparks of Artificial General Intelligence: Early experiments with GPT-4”. arXiv:2303.12712 [cs.CL].

- “ChatGPT – Release Notes”. Archived from the original on August 4, 2023. Retrieved August 4, 2023.

- Ortiz, Sabrina (February 2, 2023). “What is ChatGPT and why does it matter? Here’s what you need to know”. ZDNET. Archived from the original on January 18, 2023. Retrieved December 18, 2022.

- “Artificial intelligence technology behind ChatGPT was built in Iowa — with a lot of water”. AP News. September 9, 2023. Retrieved September 10, 2023.

- “What’s the next word in large language models?”. Nature Machine Intelligence. 5 (4): 331–332. April 2023. https://doi.org/10.1038/s42256-023-00655-z. ISSN 2522-5839. S2CID 258302563. Archived from the original on June 11, 2023. Retrieved June 10, 2023.

- “What is ChatGPT and why does it matter? Here’s what you need to know”. ZDNET. May 30, 2023. Retrieved June 22, 2023.

- Varghese, B.A.; Cen, S.Y.; Hwang, D.H.; Duddalwar, V.A. ChatGPT-4: a breakthrough in ultrasound image analysis. Radiology Advances 2024, 1, umae006; Varghese, B.A.; Cen, S.Y.; Hwang, D.H.; Duddalwar, V.A. Texture Analysis of Imaging: What Radiologists Need to Know. AJR Am. J. Roentgenol. 2019, 212, 520–528. [Google Scholar] [CrossRef] [PubMed]

- Gabrielsen, D.A.; Carney, M.J.; Weissler, J.M.; Lanni, M.A.; Hernandez, J.; Sultan, L.R.; Enriquez, F.; Sehgal, C.M.; Fischer, J.P.; Chauhan, A. Application of ARFI-SWV in Stiffness Measurement of the Abdominal Wall Musculature: A Pilot Feasibility Study. Ultrasound Med. Biol. 2018, 44, 1978–1985. [Google Scholar] [CrossRef]

- Habibollahi, P.; Sultan, L.R.; Bialo, D.; Nazif, A.; Faizi, N.A.; Sehgal, C.M.; Chauhan, A. Hyperechoic Renal Masses: Differentiation of Angiomyolipomas from Renal Cell Carcinomas using Tumor Size and Ultrasound Radiomics. Ultrasound Med. Biol. 2022, 48, 887–894. [Google Scholar] [CrossRef]

- Wu, M.; Hu, Y.; Hang, J.; Peng, X.; Mao, C.; Ye, X.; Li, A. Qualitative and Quantitative Contrast-Enhanced Ultrasound Combined with Conventional Ultrasound for Predicting the Malignancy of Soft Tissue Tumors. Ultrasound Med. Biol. 2022, 48, 237–247. [Google Scholar] [CrossRef]

- Sultan, L.R.; Karmacharya, M.B.; Al-Hasani, M.; Cary, T.W.; Sehgal, C.M. Hydralazine-augmented contrast ultrasound imaging improves the detection of hepatocellular carcinoma. Med. Phys. 2023, 50, 1728–1735. [Google Scholar] [CrossRef]

- Zhang, Z.; Hwang, M.; Kilbaugh, T.J.; Sridharan, A.; Katz, J. Cerebral microcirculation mapped by echo particle tracking velocimetry quantifies the intracranial pressure and detects ischemia. Nat. Commun. 2022, 13, 666. [Google Scholar] [CrossRef]

- Frosch, D.L.; Elwyn, G. Don’t blame patients, engage them: transforming health systems to address health literacy. J. Health Commun. 2014, 19 (Suppl. 2), 10–14. [Google Scholar] [CrossRef] [PubMed]

- CDC Patient Engagement | Health Literacy | CDC.

- Zarcadoolas, C.; Vaughon, W.L.; Czaja, S.J.; Levy, J.; Rockoff, M.L. Consumers’ perceptions of patient-accessible electronic medical records. J. Med. Internet Res. 2013, 15, e168. [Google Scholar] [CrossRef] [PubMed]

- NDX-Imaging. https://www.ndximaging.com/radiology-reads/sample-reports/.

- “OpenAI API”. platform.openai.com. Archived from the original on March 3, 2023. Retrieved March 3, 2023.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).