1. Introduction

Automatic kinship verification is a biometric application that uses facial images to assess whether two individuals are related by family through an analysis of their facial features. This area holds considerable promise for both research and practical applications [1, 2]. Facial images provide a wealth of information, such as identity, age, race, expression, and gender, which can be leveraged to distinguish individuals. The significance of kinship verification lies in its diverse real-world applications, including enhancing facial recognition systems, supporting searches for missing children, aiding in forensic image annotation, building family trees, and analyzing the multitude of images shared on social media platforms [3].

Despite significant advancements in kinship verification in recent years, several challenges persist. These challenges stem from intrinsic factors like variations in facial appearance, as well as extrinsic factors such as differing imaging conditions. Additionally, there remains a need for more diverse datasets to effectively address these issues [4, 5, 6].

A typical kinship verification system involves three primary stages: feature extraction, subspace learning or dimensionality reduction, and matching. This study focuses on representing sets of facial images as third-order tensors using local histogram features extracted from a novel descriptor, Hist-2D-DWT. This descriptor incorporates four scale coefficients: approximation, horizontal, vertical, and diagonal. The proposed Hist-2D-DWT descriptor offers superior properties when compared to existing local descriptors found in the literature. The wavelet and local histogram features on which it builds have been applied successfully across various domains, including 2D and 3D face recognition, person re-identification, and age estimation [7, 8, 9, 10, 11, 12]. Furthermore, it provides an efficient approach to reducing high-dimensional data while retaining essential features that could otherwise be lost with traditional methods.

The rest of this paper is organized as follows: Section 2 reviews related work. Sections 3 and 4 describe the methodology, including preprocessing and the proposed face descriptor, Hist-2D-DWT. Section 5 covers the experimental setup and analyzes the results obtained. Lastly, Section 6 offers concluding remarks.

2. Related Works

In recent years, a broad spectrum of shallow texture models and algorithms has been developed for kinship verification, which can be broadly categorized into two main approaches. The first approach comprises techniques that employ widely recognized feature descriptors, including Histogram of Oriented Gradients (HOG) [13], Scale-Invariant Feature Transform (SIFT) [14], and the Discriminative Compact Binary Face Descriptor (D-CBFD) [15]. These methods focus primarily on extracting low-level facial features or their combinations to assess kinship links in a straightforward yet effective manner. The second approach focuses on designing simple but highly distinctive metrics to evaluate whether two facial images exhibit a kinship relationship. Key contributions in this area include the Neighborhood Repulsed Metric Learning (NRML) proposed by Lu et al. [14] and Transfer Subspace Learning (TSL) [16].

Recently, the application of Convolutional Neural Networks (CNNs) has made a transformative impact on kinship verification by introducing models uniquely tailored for this task. For instance, Li et al. [17] proposed the Siamese Multi-scale Convolutional Neural Network (SMCNN), which leverages two identical CNNs supervised by a similarity metric-based loss function, specifically designed to optimize kinship verification. Additionally, Zhang et al. [18] introduced a technique termed CNN-points, which has demonstrated promising results by leveraging facial landmark points. Despite these advancements, progress in CNN-based kinship verification has been constrained by limited dataset availability and the evolving understanding of deep convolutional networks' full potential for kinship analysis.

One of the most promising methodologies in kinship verification is Multilinear Subspace Learning (MSL), a machine learning approach adept at extracting discriminative features across various scales and multiple feature extraction methods [19, 20, 21, 22, 23, 24, 25, 26]. MSL excels in identifying complex patterns within large datasets, offering a robust framework for analyzing inter-variable relationships. When integrated with tensor data, MSL has proven to be especially effective for kinship verification applications. Among the prominent algorithms in this domain, Multilinear Side-information-based Discriminant Analysis (MSIDA) [27] is particularly noteworthy. MSIDA projects input tensors into a new multilinear subspace, maximizing class separation while minimizing within-class distance, thereby enhancing discrimination between kinship classes. Another leading approach, Tensor Cross-View Quadratic Discriminant Analysis (TXQDA) [28], preserves the data's intrinsic structure, enhances interclass separation, and addresses the challenges associated with small sample sizes, all while reducing computational overhead.

Collectively, these approaches represent substantial progress in the field of kinship verification. However, challenges related to data scarcity, computational efficiency, and the development of robust deep learning frameworks remain active research areas, underscoring the need for further innovations in this evolving domain.

3. Methodology

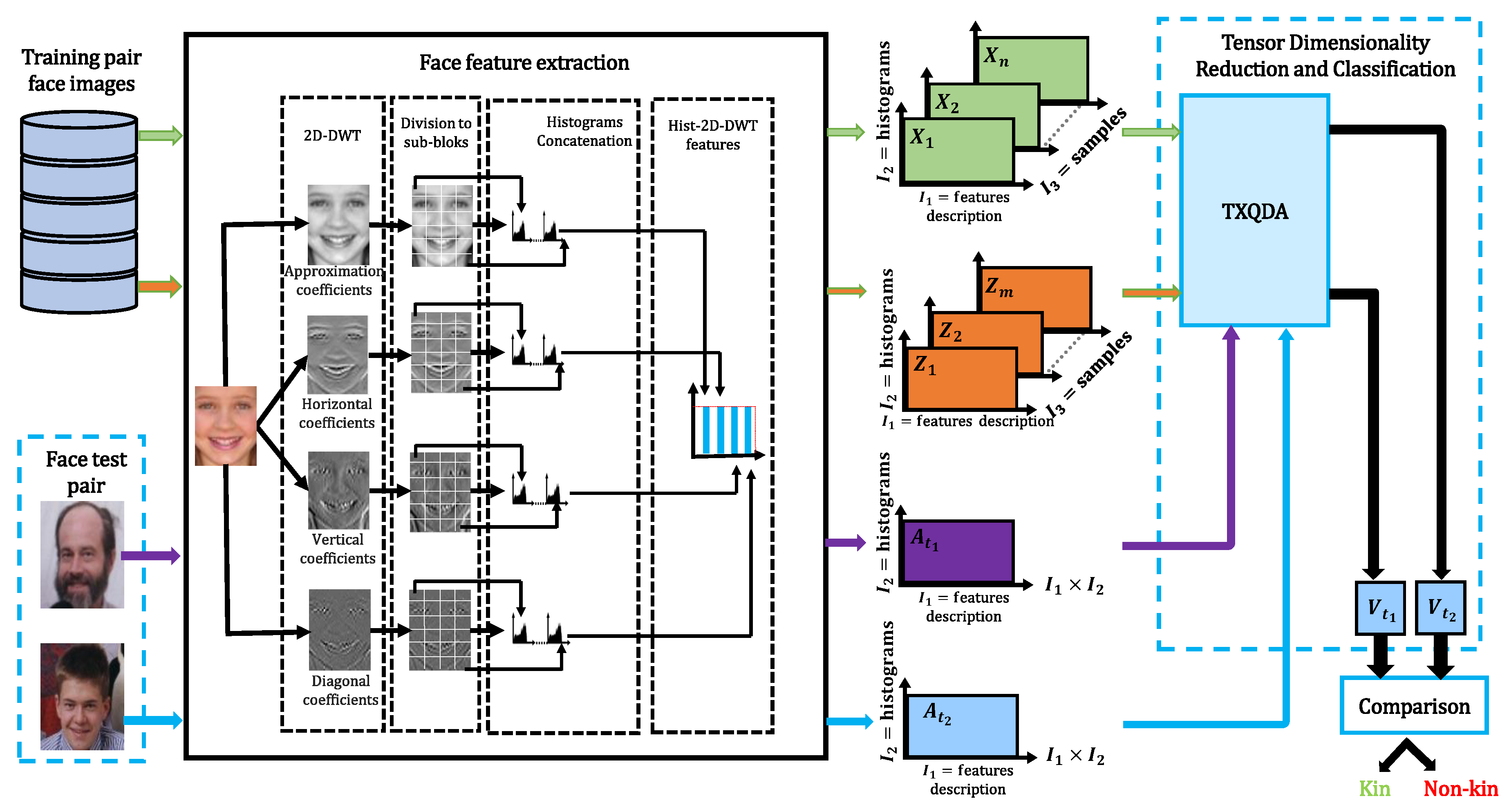

This section outlines the design of the face kinship verification system proposed in our work. As illustrated in Figure 1, the proposed framework consists of four main components: (1) face preprocessing, (2) feature extraction, (3) multilinear subspace learning and dimensionality reduction, and (4) matching. Each of these stages is discussed in detail in the following sections.

4. Preprocessing

Preprocessing is a crucial step in face kinship verification, as it significantly enhances the quality, consistency, and reliability of input data, ultimately supporting more accurate analysis and comparison. In this study, we employ the Multi-Task Cascaded Convolutional Networks (MTCNN) approach [29] to precisely detect and localize facial regions within images, ensuring that each face is correctly aligned and ready for further processing. This method has proven highly effective in isolating facial regions with precision, even under varied conditions.

Following facial detection, we enhance the image quality using the Retinex filter [30], an image enhancement technique known for its ability to improve contrast and correct lighting inconsistencies. This step is particularly beneficial in kinship verification, where subtle visual cues are crucial for accurate verification [24]. The Retinex filter allows for clearer, more consistent facial representations by simulating human color perception under varying lighting conditions, ensuring that even fine-grained features remain distinguishable across diverse images. This preprocessing pipeline, combining MTCNN for robust face detection and Retinex filtering for enhanced image quality, lays a strong foundation for reliable kinship verification.

4.1. Feature Extraction Based on the Hist-2D-DWT Face Descriptor

Feature extraction is a vital component of any face verification system, as it produces a feature vector or matrix that uniquely represents each individual within the dataset. These features serve as a distinctive biometric signature for each person. The two-dimensional discrete wavelet transform (2D-DWT) is a commonly employed technique in image processing that converts an image from the spatial domain to the frequency domain [31]. This method decomposes a 2D facial image into four sub-bands, or scales: the approximation coefficient CA, the horizontal coefficient CH, the vertical coefficient CV, and the diagonal coefficient CD [32].

The mathematical foundation of this process includes the following equations:

With m and n denoting the translation parameters and j the scale, the discrete scaling and translation functions for the 2D-DWT are expressed as:

The transformation of an image can then be represented as:
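For reference, the standard textbook form of these relations is sketched here; it may differ in notation from the authors' own equations. The symbols $\varphi$ (scaling function), $\psi^{i}$ (wavelets, with $i \in \{H, V, D\}$), $f(x, y)$ (the image), $M \times N$ (its size), and $j_0$ (the starting scale) are assumptions taken from the usual 2D-DWT formulation, not from the source.

$$
\varphi_{j,m,n}(x, y) = 2^{j/2}\,\varphi\!\left(2^{j}x - m,\; 2^{j}y - n\right)
$$

$$
\psi^{i}_{j,m,n}(x, y) = 2^{j/2}\,\psi^{i}\!\left(2^{j}x - m,\; 2^{j}y - n\right), \qquad i \in \{H, V, D\}
$$

$$
W_{\varphi}(j_0, m, n) = \frac{1}{\sqrt{MN}} \sum_{x=0}^{M-1} \sum_{y=0}^{N-1} f(x, y)\,\varphi_{j_0,m,n}(x, y)
$$

$$
W^{i}_{\psi}(j, m, n) = \frac{1}{\sqrt{MN}} \sum_{x=0}^{M-1} \sum_{y=0}^{N-1} f(x, y)\,\psi^{i}_{j,m,n}(x, y), \qquad i \in \{H, V, D\}
$$

Under this reading, $W_{\varphi}$ plays the role of the approximation coefficient CA, and $W^{H}_{\psi}$, $W^{V}_{\psi}$, and $W^{D}_{\psi}$ play the roles of CH, CV, and CD, respectively.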

To improve the accuracy of kinship verification, a novel face descriptor called Hist-2D-DWT is proposed. This technique involves dividing the four 2D-DWT coefficients (CA, CH, CV, and CD) into non-overlapping sub-blocks. Each sub-block is represented by a histogram containing 256 bins. These histograms are then concatenated into a single vector, forming a comprehensive feature matrix for the face descriptor, known as Hist-2D-DWT. This feature extraction process is illustrated in Figure 1.
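The descriptor pipeline described above can be sketched in a few lines of pure Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the wavelet is assumed to be a one-level Haar wavelet, each sub-band is assumed to be min-max rescaled to 256 integer levels before histogramming, and the block size and the names `haar_dwt2`, `quantize`, `block_histograms`, and `hist_2d_dwt` are all hypothetical. The source does not specify the wavelet family, the quantization, or the block size.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def haar_dwt2(image: Matrix) -> Tuple[Matrix, Matrix, Matrix, Matrix]:
    """One-level 2D Haar DWT (assumed wavelet) of an image with even height and width.

    Returns the sub-bands (CA, CH, CV, CD), each half the input size.
    """
    ca, ch, cv, cd = [], [], [], []
    for r in range(0, len(image), 2):
        row_a, row_h, row_v, row_d = [], [], [], []
        for c in range(0, len(image[0]), 2):
            a, b = image[r][c], image[r][c + 1]
            d, e = image[r + 1][c], image[r + 1][c + 1]
            row_a.append((a + b + d + e) / 2.0)  # approximation: local average
            row_h.append((a + b - d - e) / 2.0)  # difference between rows (horizontal edges)
            row_v.append((a - b + d - e) / 2.0)  # difference between columns (vertical edges)
            row_d.append((a - b - d + e) / 2.0)  # diagonal difference
        ca.append(row_a)
        ch.append(row_h)
        cv.append(row_v)
        cd.append(row_d)
    return ca, ch, cv, cd


def quantize(band: Matrix, bins: int = 256) -> List[List[int]]:
    """Min-max rescale a sub-band to integer levels 0..bins-1 (an assumption)."""
    flat = [v for row in band for v in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    if span == 0:
        return [[0] * len(row) for row in band]
    return [[min(bins - 1, int((v - lo) / span * bins)) for v in row] for row in band]


def block_histograms(levels: List[List[int]], block: int, bins: int = 256) -> List[List[int]]:
    """Histogram each non-overlapping block x block region of a quantized sub-band."""
    hists = []
    for r0 in range(0, len(levels) - block + 1, block):
        for c0 in range(0, len(levels[0]) - block + 1, block):
            hist = [0] * bins
            for r in range(r0, r0 + block):
                for c in range(c0, c0 + block):
                    hist[levels[r][c]] += 1
            hists.append(hist)
    return hists


def hist_2d_dwt(image: Matrix, block: int = 2, bins: int = 256) -> List[int]:
    """Concatenate the block histograms of CA, CH, CV, and CD into one vector."""
    feature: List[int] = []
    for band in haar_dwt2(image):
        for hist in block_histograms(quantize(band, bins), block, bins):
            feature.extend(hist)
    return feature


if __name__ == "__main__":
    # Toy 8x8 "image"; real inputs would be preprocessed face crops.
    toy = [[float((r * 8 + c) % 256) for c in range(8)] for r in range(8)]
    print(len(hist_2d_dwt(toy, block=2)))
```

In practice, a wavelet library such as PyWavelets (`pywt.dwt2`) would replace the hand-written Haar step; the pure-Python version is used here only to keep the sketch self-contained.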

4.2. Multilinear Subspace Learning and Matching

During the offline training phase, the Tensor Cross-View Quadratic Discriminant Analysis (TXQDA) technique projects the training tensors X and Y into a new discriminant subspace [28]. This projection reduces the mode-1 and mode-2 dimensions of both tensors. It is important to note that the dimension of mode-3, which represents the individuals in the dataset, remains unchanged.
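The effect of such a projection on the tensor shape can be illustrated with a small pure-Python sketch. It shows only the multilinear projection step along mode-1 and mode-2; it does not learn the projection matrices, which TXQDA obtains during training. The function name `project_modes_1_2` and the matrices `u1` and `u2` are hypothetical placeholders.

```python
from typing import List

Tensor3 = List[List[List[float]]]
Matrix = List[List[float]]


def project_modes_1_2(x: Tensor3, u1: Matrix, u2: Matrix) -> Tensor3:
    """Project a third-order tensor along mode-1 and mode-2.

    x  has shape (I1, I2, N), with mode-3 indexing the individuals.
    u1 has shape (I1, d1) and u2 has shape (I2, d2); both are assumed given.
    The result has shape (d1, d2, N): mode-3 is left unchanged.
    """
    i1, i2, n = len(x), len(x[0]), len(x[0][0])
    d1, d2 = len(u1[0]), len(u2[0])
    # Mode-1 product: contract the first index of x with the rows of u1.
    t = [[[sum(u1[i][a] * x[i][j][k] for i in range(i1)) for k in range(n)]
          for j in range(i2)] for a in range(d1)]
    # Mode-2 product: contract the second index with the rows of u2.
    return [[[sum(u2[j][b] * t[a][j][k] for j in range(i2)) for k in range(n)]
             for b in range(d2)] for a in range(d1)]


if __name__ == "__main__":
    # Toy tensor of shape (3, 4, 2) and placeholder projections to (2, 2, 2).
    x = [[[float(100 * i + 10 * j + k) for k in range(2)] for j in range(4)] for i in range(3)]
    u1 = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
    u2 = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0], [0.0, 0.0]]
    y = project_modes_1_2(x, u1, u2)
    print(len(y), len(y[0]), len(y[0][0]))
```

The placeholder matrices simply select the leading rows and columns, which makes the shape change easy to check by hand; learned projections would mix all entries along each mode.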

We use the reduced discriminant features projected through TXQDA to compare pairs of faces. Specifically, cosine similarity [33] is employed to determine whether the pair belongs to the same family, i.e., kin or not-kin. The score between the two face vectors is calculated as a normalized dot product, that is, their dot product divided by the product of their Euclidean norms.
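As a minimal sketch of this matching step, the score can be computed as shown here. The function names and the decision threshold are hypothetical; the source does not state how the kin or not-kin decision is derived from the score.

```python
import math
from typing import Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Normalized dot product of two feature vectors of equal length."""
    if len(u) != len(v):
        raise ValueError("feature vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # convention chosen here for all-zero vectors
    return dot / (norm_u * norm_v)


def is_kin(u: Sequence[float], v: Sequence[float], threshold: float = 0.5) -> bool:
    """Declare a pair kin when the score reaches a threshold (assumed value)."""
    return cosine_similarity(u, v) >= threshold


if __name__ == "__main__":
    parent = [0.2, 0.7, 0.1]
    child = [0.25, 0.65, 0.05]
    print(cosine_similarity(parent, child), is_kin(parent, child))
```

In a real evaluation the threshold would be tuned on held-out training pairs rather than fixed in advance.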

5. Experiments

5.1. Datasets Description

In this work, we utilize two public kinship verification datasets, Cornell KinFace [13] and TSKinFace [34], for the implementation and performance evaluation of the proposed approach. The Cornell KinFace dataset comprises 143 pairs with various demographic attributes. The distribution of kinship pairs is as follows: 40% Father-Son (FS), 22% Father-Daughter (FD), 13% Mother-Son (MS), and 26% Mother-Daughter (MD).

The TSKinFace dataset is organized into two main groups: the Father–Mother–Daughter group and the Father–Mother–Son group. Within the Father–Mother–Daughter group, there are two subsets: Father–Daughter with 502 kinship relationships and Mother–Daughter with 502 kinship relationships. Similarly, within the Father–Mother–Son group, there are two subsets: Father–Son with 513 kinship relationships and Mother–Son with 513 kinship relationships.

5.2. Result Analysis and Discussion

The experimental results on the Cornell KinFace dataset are detailed in Table 1. This table contrasts the mean accuracy of the basic 2D-DWT approach, which does not utilize histograms, with the performance of the proposed Hist-2D-DWT approach across various scales: CA, CH, CV, CD, and all channels combined.

The data clearly illustrate the superior performance of the Hist-2D-DWT approach compared to the basic 2D-DWT method. Specifically, the Hist-2D-DWT descriptors consistently achieve significantly higher accuracy across all tested scales. Notably, the Hist-2D-DWT-CA descriptor reaches an impressive mean accuracy of 93.46%, showcasing the effectiveness of the histogram-based approach in extracting and leveraging discriminative features from facial images. This substantial improvement highlights the advantage of incorporating histogram analysis in the 2D-DWT framework for kinship verification tasks.

The results of our experiments on the TSKinFace dataset are summarized in Table 2. This table evaluates the mean accuracy of both the basic 2D-DWT approach and the proposed Hist-2D-DWT method across four kinship relations: Father-Son (FS), Father-Daughter (FD), Mother-Son (MS), and Mother-Daughter (MD). The analysis is conducted across various scales, including CA, CH, CV, CD, and all channels combined.

The table reveals a consistent and substantial improvement in accuracy with the Hist-2D-DWT approach compared to the basic 2D-DWT method. The advanced histogram-based feature extraction in Hist-2D-DWT significantly enhances performance across all kinship relations, demonstrating its superior ability to capture and leverage discriminative features for kinship verification.

5.3. Comparison with Recent Works

The proposed method was evaluated using the Cornell KinFace and TSKinFace datasets, and its performance was compared with recent kinship verification methodologies. The results are summarized in Table 3. The framework achieved a notable accuracy of 93.46% on the Cornell KinFace dataset and 89.61% on the TSKinFace dataset. As evidenced in Table 3, the Hist-2D-DWT-CA method significantly outperforms other contemporary techniques. Specifically, it demonstrates a 6.59% improvement over the best-performing method reported in [27] for the Cornell KinFace dataset. Furthermore, the results for the TSKinFace dataset, also presented in Table 3, indicate that Hist-2D-DWT-CA enhances kinship verification performance across most relationships. It achieves a substantial mean accuracy improvement compared to methods such as MSIDA [27], RDFSA [35], and BC2DA [36]. Overall, our method notably enhances kinship verification accuracy, particularly on challenging datasets. The superior performance of the Hist-2D-DWT-CA method can be attributed to several factors. Firstly, it effectively utilizes a multidimensional data structure via third-order tensors to extract the maximum discriminative information from input images. Secondly, the incorporation of histograms from facial blocks produces a more refined feature vector, further enhancing the accuracy of kinship verification.

6. Conclusion and Future Direction

This research introduces an advanced kinship verification system that utilizes a highly efficient face description technique based on histograms of the two-dimensional discrete wavelet transform (Hist-2D-DWT). By incorporating tensor subspace learning, this approach achieves significant performance gains over existing methods. The Hist-2D-DWT descriptor effectively captures discriminative features from small facial regions, demonstrating superior accuracy compared to traditional 2D-DWT methods. Although the Hist-2D-DWT descriptor is computationally efficient, it may fall short of the representational power of deep neural networks in more complex scenarios. Future research should aim to address these limitations by integrating larger and more diverse datasets, as well as exploring hybrid models that combine traditional descriptors with deep learning techniques to enhance robustness and adaptability.

References

- W. Wang, S. You, S. Karaoglu, and T. Gevers, “A survey on kinship verification,” Neurocomputing, vol. 525, pp. 1–28, 2023. [CrossRef]

- S. Huang, J. Lin, L. Huangfu, Y. Xing, J. Hu, and D. D. Zeng, “Adaptively weighted k-tuple metric network for kinship verification,” IEEE Transactions on Cybernetics, 2022. [CrossRef]

- M. C. Mzoughi, N. B. Aoun, and S. Naouali, “A review on kinship verification from facial information,” The Visual Computer, pp. 1–21, 2024. [CrossRef]

- X. Wu, X. Feng, X. Cao, X. Xu, D. Hu, M. B. López, and L. Liu, “Facial kinship verification: a comprehensive review and outlook,” International Journal of Computer Vision, vol. 130, no. 6, pp. 1494–1525, 2022. [CrossRef]

- X. Zhu, C. Li, X. Chen, X. Zhang, and X.-Y. Jing, “Distance and direction based deep discriminant metric learning for kinship verification,” ACM Transactions on Multimedia Computing, Communications and Applications, vol. 19, no. 1s, pp. 1–19, 2023. [CrossRef]

- A. Chouchane, M. Bessaoudi, E. Boutellaa, and A. Ouamane, “A new multidimensional discriminant representation for robust person re-identification,” Pattern Analysis and Applications, vol. 26, no. 3, pp. 1191–1204, 2023. [CrossRef]

- T. Ayyavoo and J. John Suseela, “Illumination pre-processing method for face recognition using 2d dwt and clahe,” Iet Biometrics, vol. 7, no. 4, pp. 380–390, 2018. [CrossRef]

- S. Naveen and R. Moni, “A robust novel method for face recognition from 2d depth images using dwt and dct fusion,” Procedia Computer Science, vol. 46, pp. 1518–1528, 2015. [CrossRef]

- H. Ouamane, “3d face recognition in presence of expressions by fusion regions of interest,” in 2014 22nd Signal Processing and Communications Applications Conference (SIU). IEEE, 2014, pp. 2269–2274.

- M. Belahcene, M. Laid, A. Chouchane, A. Ouamane, and S. Bourennane, “Local descriptors and tensor local preserving projection in face recognition,” in 2016 6th European workshop on visual information processing (EUVIP). IEEE, 2016, pp. 1–6.

- C. Ammar, B. Mebarka, O. Abdelmalik, and B. Salah, “Evaluation of histograms local features and dimensionality reduction for 3d face verification,” Journal of information processing systems, vol. 12, no. 3, pp. 468–488, 2016.

- A. Chouchane, “Analyse d’images d’expressions faciales et orientation de la tête basée sur la profondeur,” Ph.D. dissertation, Université Mohamed Khider-Biskra, 2016.

- R. Fang, K. D. Tang, N. Snavely, and T. Chen, “Towards computational models of kinship verification,” in 2010 IEEE International conference on image processing. IEEE, 2010, pp. 1577–1580.

- J. Lu, X. Zhou, Y.-P. Tan, Y. Shang, and J. Zhou, “Neighborhood repulsed metric learning for kinship verification,” IEEE transactions on pattern analysis and machine intelligence, vol. 36, no. 2, pp. 331–345, 2013.

- H. Yan, “Learning discriminative compact binary face descriptor for kinship verification,” Pattern Recognition Letters, vol. 117, pp. 146–152, 2019. [CrossRef]

- S. Xia, M. Shao, and Y. Fu, “Kinship verification through transfer learning,” in Twenty-second international joint conference on artificial intelligence. Citeseer, 2011.

- ——, “Kinship verification through transfer learning,” in Twenty-second international joint conference on artificial intelligence. Citeseer, 2011.

- K. Zhang, Y. Huang, C. Song, H. Wu, and L. Wang, “Kinship verification with deep convolutional neural networks,” in British Machine Vision Conference (BMVC), 2015.

- M. Bessaoudi, M. Belahcene, A. Ouamane, A. Chouchane, and S. Bourennane, “A novel hybrid approach for 3d face recognition based on higher order tensor,” in Advances in Computing Systems and Applications: Proceedings of the 3rd Conference on Computing Systems and Applications 3. Springer, 2019, pp. 215–224.

- H. Ouamane, “3d face recognition in presence of expressions by fusion regions of interest,” in 2014 22nd Signal Processing and Communications Applications Conference (SIU). IEEE, 2014, pp. 2269–2274.

- A. A. Gharbi, A. Chouchane, A. Ouamane, Y. Himeur, S. Bourennane et al., “A hybrid multilinear-linear subspace learning approach for enhanced person re-identification in camera networks,” Expert Systems with Applications, vol. 257, p. 125044, 2024. [CrossRef]

- M. Khammari, A. Chouchane, A. Ouamane, M. Bessaoudi et al., “Kinship verification using multiscale retinex preprocessing and integrated 2dswt-cnn features,” in 2024 8th International Conference on Image and Signal Processing and their Applications (ISPA). IEEE, 2024, pp. 1–8.

- A. Chouchane, M. Bessaoudi, H. Kheddar, A. Ouamane, T. Vieira, and M. Hassaballah, “Multilinear subspace learning for person re-identification based fusion of high order tensor features,” Engineering Applications of Artificial Intelligence, 2024. [CrossRef]

- M. Khammari, A. Chouchane, A. Ouamane, M. Bessaoudi, Y. Himeur, M. Hassaballah et al., “High-order knowledge-based discriminant features for kinship verification,” Pattern Recognition Letters, vol. 175, pp. 30–37, 2023. [CrossRef]

- M. Khammari, A. Chouchane, M. Bessaoudi, A. Ouamane, Y. Himeur, S. Alallal, W. Mansoor et al., “Enhancing kinship verification through multiscale retinex and combined deep-shallow features,” in 2023 6th International Conference on Signal Processing and Information Security (ICSPIS). IEEE, 2023, pp. 178–183.

- A. Chouchane, A. Ouamane, Y. Himeur, A. Amira et al., “Deep learning-based leaf image analysis for tomato plant disease detection and classification,” in 2024 IEEE International Conference on Image Processing (ICIP). IEEE, 2024, pp. 2923–2929.

- M. Bessaoudi, A. Ouamane, M. Belahcene, A. Chouchane, E. Boutellaa, and S. Bourennane, “Multilinear side-information based discriminant analysis for face and kinship verification in the wild,” Neurocomputing, vol. 329, pp. 267–278, 2019.

- O. Laiadi, A. Ouamane, A. Benakcha, A. Taleb-Ahmed, and A. Hadid, “Tensor cross-view quadratic discriminant analysis for kinship verification in the wild,” Neurocomputing, vol. 377, pp. 286–300, 2020. [CrossRef]

- K. Zhang, Z. Zhang, Z. Li, and Y. Qiao, “Joint face detection and alignment using multitask cascaded convolutional networks,” IEEE signal processing letters, vol. 23, no. 10, pp. 1499–1503, 2016.

- L. Meylan and S. Süsstrunk, “Color image enhancement using a retinex-based adaptive filter,” in Conference on Colour in Graphics, Imaging, and Vision, vol. 2. Society of Imaging Science and Technology, 2004, pp. 359–363.

- I. Daubechies, Ten lectures on wavelets. SIAM, 1992.

- Z.-H. Huang, W.-J. Li, J. Shang, J. Wang, and T. Zhang, “Non-uniform patch based face recognition via 2d-dwt,” Image and Vision Computing, vol. 37, pp. 12–19, 2015. [CrossRef]

- H. V. Nguyen and L. Bai, “Cosine similarity metric learning for face verification,” in Computer Vision–ACCV 2010: 10th Asian Conference on Computer Vision, Queenstown, New Zealand, November 8-12, 2010, Revised Selected Papers, Part II 10. Springer, 2011, pp. 709–720.

- X. Qin, X. Tan, and S. Chen, “Tri-subject kinship verification: Understanding the core of a family,” IEEE Transactions on Multimedia, vol. 17, no. 10, pp. 1855–1867, 2015.

- A. Goyal and T. Meenpal, “Robust discriminative feature subspace analysis for kinship verification,” Information Sciences, vol. 578, pp. 507–524, 2021. [CrossRef]

- M. Mukherjee and T. Meenpal, “Binary cross coupled discriminant analysis for visual kinship verification,” Signal Processing: Image Communication, vol. 108, p. 116829, 2022. [CrossRef]

- A. Goyal and T. Meenpal, “Eccentricity based kinship verification from facial images in the wild,” Pattern Analysis and Applications, vol. 24, pp. 119–144, 2021. [CrossRef]

- L. Zhang, Q. Duan, D. Zhang, W. Jia, and X. Wang, “Advkin: Adversarial convolutional network for kinship verification,” IEEE transactions on cybernetics, vol. 51, no. 12, pp. 5883–5896, 2020.

- X. Chen, C. Li, X. Zhu, L. Zheng, Y. Chen, S. Zheng, and C. Yuan, “Deep discriminant generation-shared feature learning for image-based kinship verification,” Signal Processing: Image Communication, vol. 101, p. 116543, 2022. [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).