1. Introduction

Bloom [1] introduced the concept of an uncertainty shock, examining stock market volatility and its impact on broader economic outcomes. In his work, he identifies second-moment shocks (uncertainty shocks) as a primary channel through which stock market volatility affects the economy, observed through both realized and implied option prices. Bloom uses the CBOE Volatility Index (VIX) to quantify this uncertainty, which reflects market expectations of future volatility over the next 30 days based on S&P 500 options prices. However, analysis of S&P 500 returns reveals that these option prices follow a non-normal distribution. As a result, measuring market volatility using only the second moment, or standard deviation, is insufficient, as it assumes a symmetric distribution and fails to account for the “heavy tails” that are known to be present in market data (Cont [2]). This limitation weakens the standard deviation’s ability to capture the full impact of extreme market fluctuations, leading to an underestimate of the true effect of such shocks.

To effectively capture uncertainty, the identification strategy must consider the impact of extreme events on option prices, as these events amplify uncertainty through what is known as tail risk. Kozeniauskas et al. [3] highlight the importance of tail risks in cases where data are non-normally distributed. By introducing "disaster risk" as a factor, they show how uncertainty is magnified through heavy tails. Unlike normal distributions, which have thin tails and suggest a low probability of extreme events, non-normal distributions with heavy tails better represent the likelihood of rare, impactful events. Additionally, the symmetry of a normal distribution implies equal chances of extreme positive or negative outcomes (“miracles” and “disasters”), a pattern not reflected in observed data.

In the context of financial time series data, Cont [2] points out that the non-Gaussian nature of the distribution of asset returns (first moments) makes a strong case for using other measures of dispersion to observe the variance of the returns. The large movements in financial markets, identified via the heavy tails of the distribution, cannot be regarded as simple outliers in the sample. Therefore, amplification by heavy tails motivates us to model the tails of the distribution of asset returns appropriately. In addition, modeling the heavy tails and skewness of the distribution will enable us to examine the magnitude of the uncertainty caused by large movements in the financial market.

Given the emphasis on heavy tails in non-Gaussian stable distributions, using second moments to capture the volatility as a measure of uncertainty will not factor in the extreme tail risks, making the notion of uncertainty insufficient. Moreover, volatility does not account for the directional bias of the uncertainty, rendering uncertainty shocks non-explanatory of large movements in the financial market. Risk–reward ratios (R/R) offer a more nuanced approach, considering that they are widely used as performance measures in financial decision-making. R/R ratios focus on the tail risk to explain the amplification in uncertainty shocks by including the rewards (potential gains on the right tail) and risks (potential losses on the left tail).

Furthermore, since risk–reward measures can help reduce max drawdowns, they offer practical insights into managing financial downturns, which are integral to understanding uncertainty shocks amplified by the heavy tails of the financial time series distribution. In addition, to capture the observed volatility in financial markets, we use a double subordinated Normal Inverse Gaussian (NIG) Lévy process to construct a revised VIX from S&P 500 option prices. This NIG Lévy process explains the skew and fat-tailed properties of index option prices and gives rise to an arbitrage-free, option pricing model which is then used to compute the in-sample, implied volatility of the stock market.

By combining the newly constructed revised VIX with computed R/R ratios, we produce a new series of uncertainty shocks that better explains the variations caused by large market movements. These uncertainty shocks account for the otherwise unexplained amplification of uncertainty through tail risks by combining the direction of the potential gains and losses of the stock market with the heavy tails and skewness of the option (asset) prices. The revised measure of volatility, which identifies uncertainty shocks, has broad economic implications for analyzing market risks and responses. Because the new measure captures intrinsic time volatility, and with it the information needed to correctly capture the skewness and fat tails of the S&P500 index, it can explain the impact of monetary policy tightening (e.g., federal funds rate hikes or forward guidance) on risky assets such as the index itself. It can also capture the effects surrounding policy announcements by the Federal Reserve, both before and after they are made.

In addition, it can explain the shift in the risk-neutral density, implying fat tails, due to geopolitical risk in the form of armed conflicts (e.g., the Russia-Ukraine or the Iran-Israel conflict). The transmission channel reflects the impact of such events on agents’ risk aversion and on their prudence, that is, the third derivative of their utility function, which triggers precautionary savings. Similarly, the impact of other rare disasters on volatility in the financial markets, such as COVID-19, will also be explained by the revised VIX, given that it captures all information about market activity in the event of rare disasters. Moreover, it has implications for sector-specific or firm-level friction in the form of the present value of future cash flows being heavily discounted if firm earnings are lower than market expectations (for instance, tech stocks being sold off en masse in early 2022 after tech firms’ earnings failed to meet market expectations).

This paper aims to contribute to several strands of the literature on macro-volatility in financial markets and the channels of uncertainty. Bloom [4] argued that investors want to be compensated for higher risk, and because greater uncertainty leads to increasing risk premia, this should raise the cost of finance. Hence, an increase in risk premia can be attributed to the intrinsic-time and fat-tail characteristics of uncertainty, which explain the magnitude of extreme events. Kelly and Jiang [5] pointed out that researchers have hypothesized that heavy-tailed shocks to economic fundamentals help explain certain asset pricing behavior that has proved otherwise difficult to reconcile with traditional macrofinance theory.

Rietz [6] was among the first to emphasize the phenomenon of fat tails attributed to the rare disaster hypothesis. Moreover, Bansal and Yaron [7] construct a model of long-run risks that incorporates fat-tailed endowment shocks. Duffie et al. [8] explain the mechanics of modeling extreme events with jump-diffusions to capture heavy-tailed events. Building on this, Shirvani et al. [9] extend the framework of Mehra and Prescott [10] to account for growth rates of consumption and dividends that follow a fat-tailed distribution. The authors assume that the log-growth rates of consumption and dividends follow a NIG distribution and find that the constant relative risk aversion (CRRA) estimate derived from the NIG-fitted model is significantly lower than the estimate obtained using a log-normal distribution fit.

Furthermore, the tail risk mechanism that accentuates uncertainty channels is highlighted in Orlik and Veldkamp [11], who explain the fluctuations in the macroeconomic measure of uncertainty. In addition, Kozlowski et al. [12] show that agents are unaware of the true distribution of shocks but use data to estimate it non-parametrically. As a result, transitory events, especially extreme ones, generate persistent changes in beliefs and macro outcomes. While these studies explain the extreme events channel of uncertainty, the use of intrinsic time volatility to explain heavy tails remains largely unexplored. This paper is the first to explain the subordination process using the intrinsic time volatility and the heavy tails of asset returns to capture the dynamics of the financial market, and to use R/R ratios to estimate uncertainty shocks as macro-volatility in financial markets.

Section 2 presents the double subordinated NIG Lévy process for S&P 500 option prices used to construct the revised VIX.

Section 3 presents the double subordinated NIG Lévy process European call option pricing used to price the S&P500 returns following a Normal Double Inverse Gaussian (NDIG) log-price process.

Section 4 presents the method used for estimating the parameters.

Section 5 demonstrates the robustness of multiple subordinated models of volatility.

Section 6 presents the general families of risk–reward ratios.

Section 7 presents the novel identification strategy for uncertainty shocks.

Section 8 concludes the paper.

2. Double Subordinated NIG Lévy Process for S&P 500 Option Prices

The double subordinator framework in our approach to construct a revised VIX involves a Lévy subordinator process. We define the functional form of the stochastic process following a Lévy process as described by Carr et al. [13] and Shirvani et al. [14]. In dynamic asset pricing theory, the price dynamics explain the behavior of the risky financial asset. Consider the price process

, where

is the time horizon, implying that

is the maturity date of a financial contract. Therefore, a Lévy process

with non-decreasing trajectories or sample-paths is known as a Lévy subordinator. To begin with, we start by modeling S&P500 options in a standard Black–Scholes–Merton option pricing model assuming normality. The price process of the risky asset is

where

is the log-price process and

is a standard Brownian motion. Moreover, knowing that the option prices of the S&P 500 are non-normally distributed (in particular, having heavy tails), we can follow Mandelbrot and Taylor [15] and Clark [16], who suggested the use of a subordinated Brownian motion, where the price process

and thereby, the log price process is defined by

where

is a Lévy subordinator.
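To make the subordination idea concrete, the following minimal sketch simulates a log-price path in which the Brownian motion runs on a random clock. The functional form X_t = mu*t + gamma*T(t) + sigma*B_{T(t)}, the choice of an inverse Gaussian clock at this stage, and all parameter names and values are assumptions made for illustration; they are not taken from the equations of this paper.

```python
import math
import random


def sample_inverse_gaussian(mean, shape, rng):
    """Draw one IG(mean, shape) variate (Michael-Schucany-Haas method).

    The smaller root is written in a cancellation-free form so that the
    draw stays strictly positive even for very small shape parameters.
    """
    y = rng.gauss(0.0, 1.0) ** 2
    a = mean * y / (2.0 * shape)
    x = mean / (1.0 + a + math.sqrt(a * a + 2.0 * a))
    if rng.random() <= mean / (mean + x):
        return x
    return mean * mean / x


def simulate_subordinated_log_price(n_steps, dt, mu, gamma, sigma,
                                    ig_mean, ig_shape, seed=0):
    """Simulate X_t = mu*t + gamma*T(t) + sigma*B_{T(t)} (assumed form)."""
    rng = random.Random(seed)
    x, clock, path = 0.0, 0.0, [0.0]
    for _ in range(n_steps):
        # Increment of an IG Levy process over dt: IG(ig_mean*dt, ig_shape*dt^2).
        d_tau = sample_inverse_gaussian(ig_mean * dt, ig_shape * dt * dt, rng)
        # Brownian increment over the random interval of length d_tau.
        d_b = math.sqrt(d_tau) * rng.gauss(0.0, 1.0)
        x += mu * dt + gamma * d_tau + sigma * d_b
        clock += d_tau
        path.append(x)
    return path, clock


# Hypothetical daily-scale parameters, for illustration only.
path, business_time = simulate_subordinated_log_price(
    n_steps=250, dt=1.0, mu=0.0, gamma=-0.01, sigma=0.01,
    ig_mean=1.0, ig_shape=2.0, seed=1)
```

Because the clock increments are random, quiet and turbulent days arise from the same Brownian motion, which is what produces heavier tails than a fixed-clock model.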

Shirvani et al. [17] describe the properties of various multiple subordinated log-return processes designed to model leptokurtic asset returns, showing that multiple subordinated log-asset return processes can imply heavier tails than single subordinated models, and thus have the ability to capture the third moment (skewness) and the fourth moment (kurtosis). Hence, a double subordination process to model the fat tails of S&P 500 option prices may be an appropriate choice.

Let

denote the price process of the S&P 500 options, where

and the members of the triplet (

) are independent processes generating a stochastic basis

denoting continuous time preferences. We refer to

as a standard Brownian motion along with

and

being the Lévy subordinators. Moreover,

,

, and

are

adapted processes whose trajectories are right-continuous with left limits. Shirvani et al. [17] denote the double subordinated process by

. Therefore, Equation (4) is a double-subordinated log-price process.

Considering the S&P500 options prices are modeled as a subordinated geometric Brownian motion, a multiple subordinated model would sustain the bivariate semi-martingale structure. In addition, Lévy subordinators (volatility intrinsic time and heavy tails) do not disrupt the S&P500’s time-changed process and sustain the bivariate semi-martingale property under a risk-neutral measure consistent with the fundamental asset pricing theorem. Consider the case where

and

are inverse Gaussian Lévy processes, i.e.,

having the pdf

Consider the second subordinator,

. Given the nature of both subordinators, we can regard

in Equation (4) as the Normal Double Inverse Gaussian (NDIG) log-price process. Therefore, the characteristic function (c.f.) of

is defined by

Therefore,

can be expanded and written as:

given

. Hence, the moment generating function (MGF) of

is

, where

. Therefore, the NDIG process modeled in Equation (6), together with the expansion of the c.f. in Equation (8), has eight parameters, i.e.,

. Given the complexity of fitting the modeled processes to the data, we follow the methodology of Shirvani et al. [14], in which six parameters are estimated from the model and the remaining two are computed by taking expectations.
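As a rough illustration of how two nested inverse Gaussian clocks generate heavy tails, the sketch below simulates unit-time increments of a double-subordinated log-price. The assumed form X_t = mu*t + gamma*U(t) + rho*T(U(t)) + sigma*B_{T(U(t))}, the eight parameter names, and the numerical values are hypothetical stand-ins; the paper's own Equations (4) to (8) are not reproduced here.

```python
import math
import random


def ig_draw(mean, shape, rng):
    """One inverse Gaussian IG(mean, shape) draw (Michael-Schucany-Haas)."""
    y = rng.gauss(0.0, 1.0) ** 2
    a = mean * y / (2.0 * shape)
    x = mean / (1.0 + a + math.sqrt(a * a + 2.0 * a))
    return x if rng.random() <= mean / (mean + x) else mean * mean / x


def simulate_ndig_increments(n, mu, gamma, rho, sigma,
                             mean_u, shape_u, mean_t, shape_t, seed=0):
    """Unit-time increments of the assumed double-subordinated process."""
    rng = random.Random(seed)
    increments = []
    for _ in range(n):
        # Outer clock: U(t+1) - U(t) ~ IG(mean_u, shape_u).
        d_u = ig_draw(mean_u, shape_u, rng)
        # Inner clock run over an interval of length d_u.
        d_t = ig_draw(mean_t * d_u, shape_t * d_u * d_u, rng)
        # Brownian motion run over an interval of length d_t.
        d_b = math.sqrt(d_t) * rng.gauss(0.0, 1.0)
        increments.append(mu + gamma * d_u + rho * d_t + sigma * d_b)
    return increments


def excess_kurtosis(xs):
    """Sample excess kurtosis (zero for Gaussian data)."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m4 = sum((x - mean) ** 4 for x in xs) / n
    return m4 / (m2 * m2) - 3.0


# Hypothetical parameters: a symmetric case with both clocks IG(1, 1).
sample = simulate_ndig_increments(20000, 0.0, 0.0, 0.0, 1.0,
                                  1.0, 1.0, 1.0, 1.0, seed=7)
tail_heaviness = excess_kurtosis(sample)
```

In this symmetric case the increments are a normal variance mixture, so the excess kurtosis is positive by construction; nonzero gamma or rho would add skewness.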

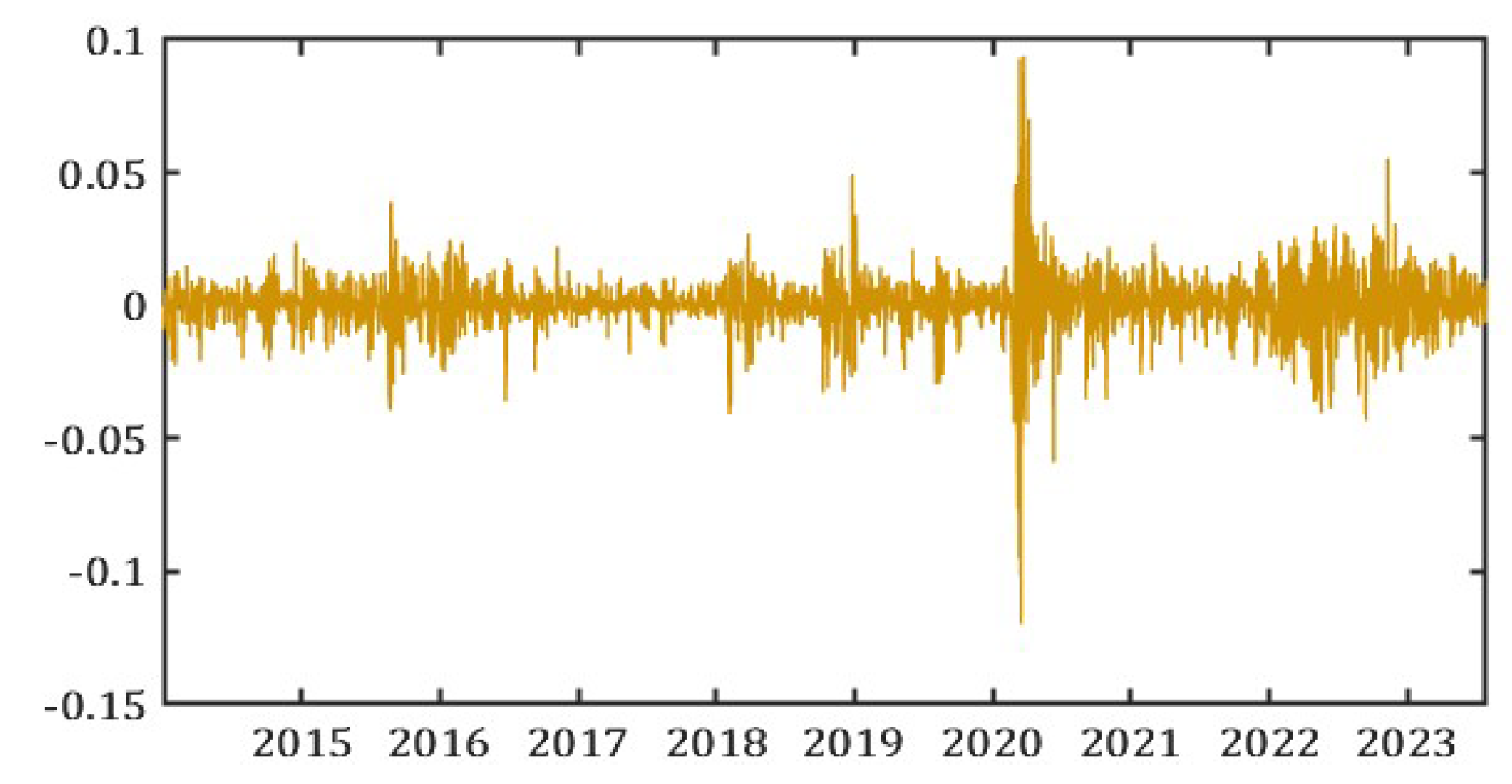

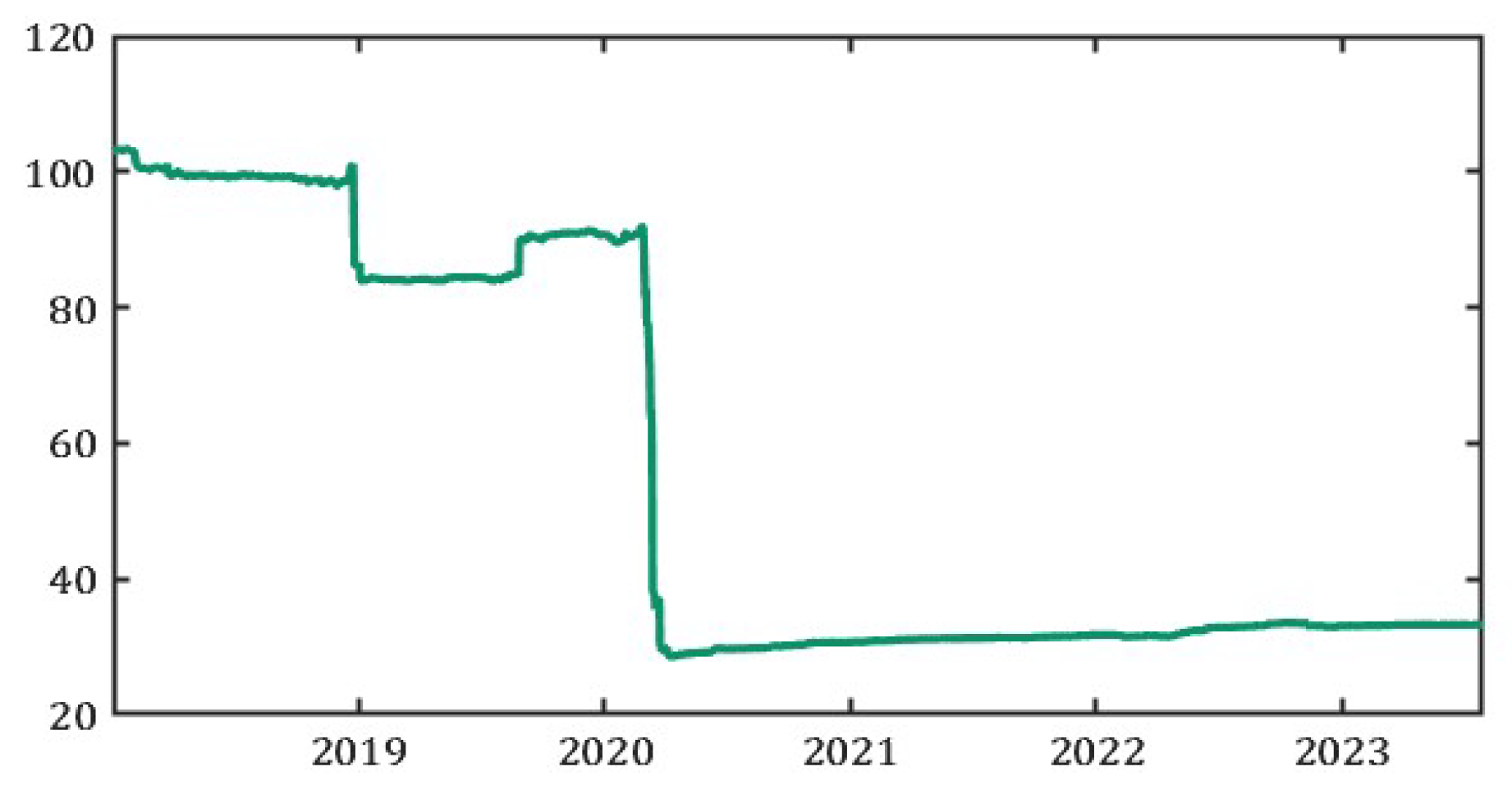

Figure 1. S&P500 Options Prices.

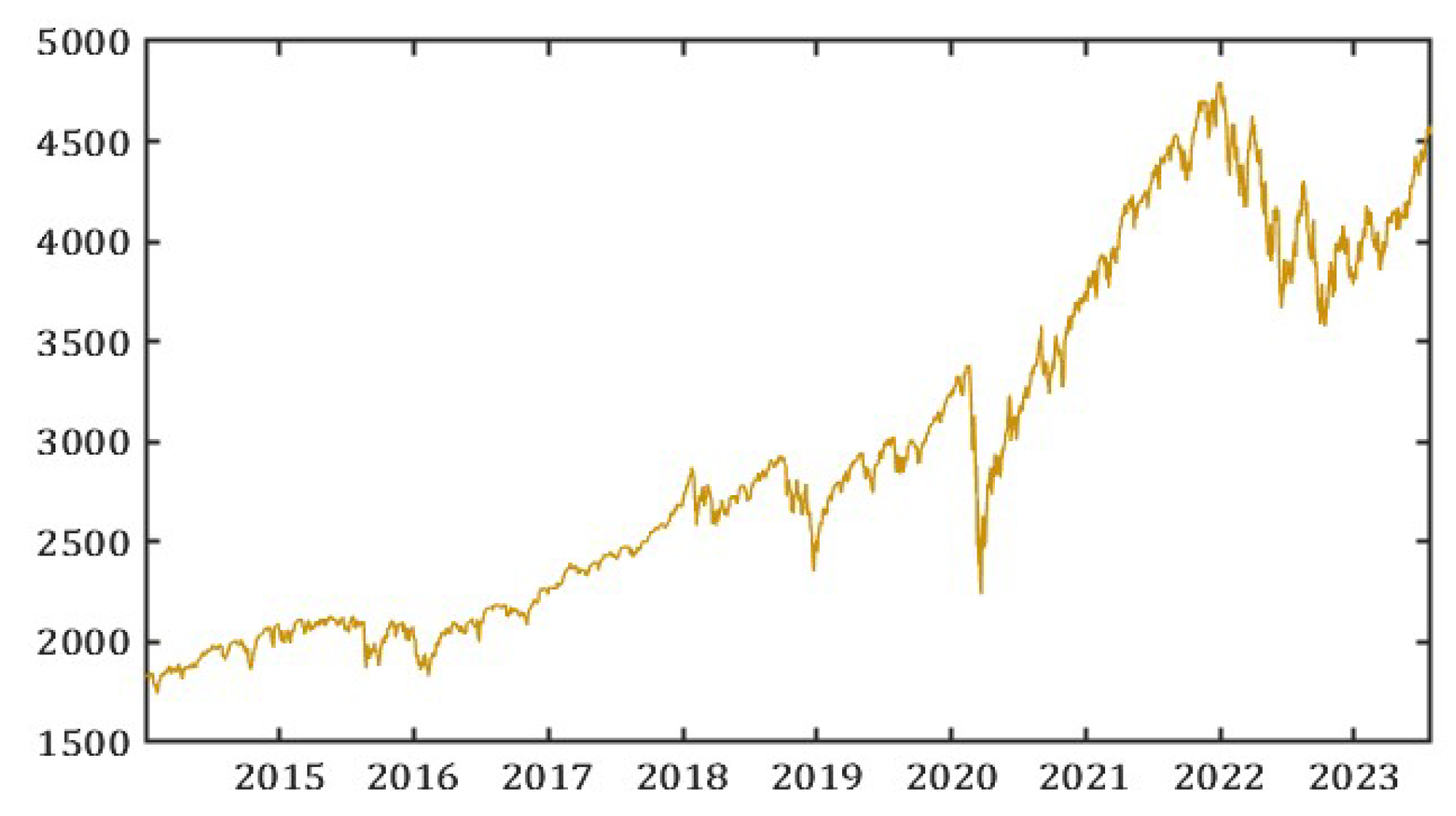

Figure 2. Last Price (S&P500).

3. NDIG Model for European Call Option Pricing

We assume complete markets where agents can continuously trade a risky asset and a risk-free bond. The NDIG model will be used to price a European Call Option,

, with the underlying risky asset,

, being the options prices of the S&P500. The discounted price process

with

being the risk-free rate needs to sustain the martingale structure, therefore, we need to derive an Equivalent Martingale Measure (EMM)

of

on the stochastic base

. Following Shirvani et al. [14], we use a Minimal Conditional Martingale Measure (MCMM); pricing European options under this measure satisfies the no-arbitrage condition.

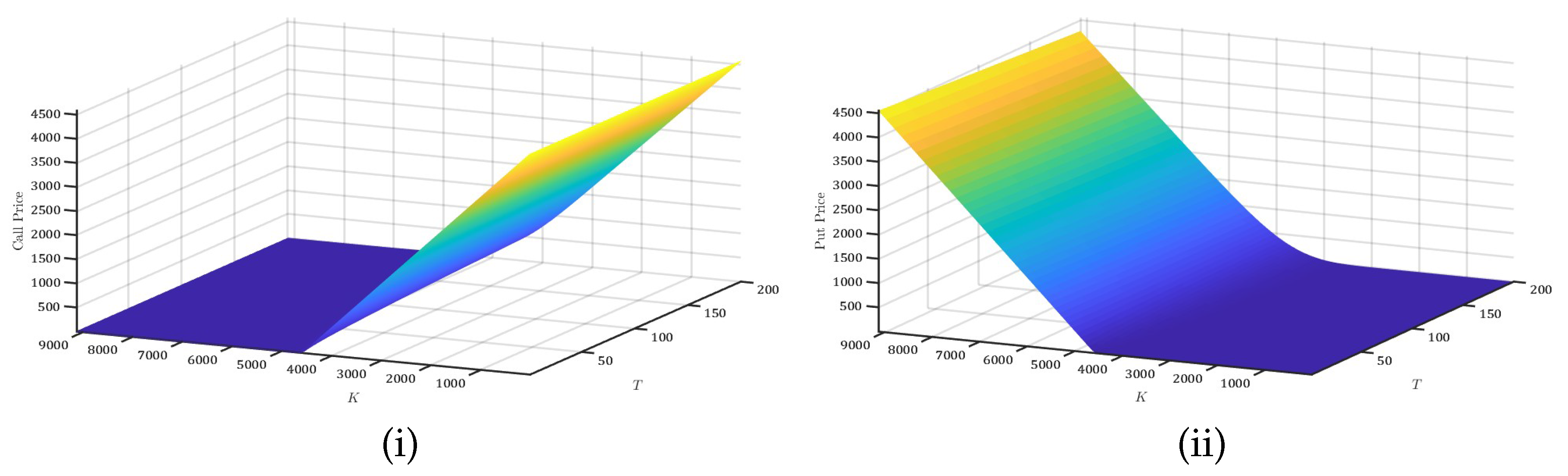

Figure 3. Implied Volatility Surface. (i) Call Price and (ii) Put Price.

In addition, given that the c.f. of

is defined in Equation (8), we follow the Carr and Madan [18] approach of using the Fast Fourier Transform (FFT) to price options in cases where the c.f. of the log-price of the underlying asset is known analytically. Consequently, the initial point for applying the FFT is derived via the following relation:

Carr and Madan [18] show that the numerical solutions yield an “optimum value” for

and control over the error produced by truncating the integral in Equation (

9) over a finite domain

. Furthermore, to pin down

, the upper bound on a, we can follow the process outlined in Equation (10) and compute the upper bounds in moving windows to investigate the behavior of a over time.

Figure 4 analyzes the behavior of

. For instance, it is equipped to describe the large movement in the financial market in March 2020, indicated by a significant drop in the value of the upper bound.

Moreover, option prices for

are determined using the FFT over Equation (9) with multiple values of the strike price K and maturity time T. To maintain stability in the implied volatility surface of the S&P500 options, we place a restrictive condition on a, namely,

.
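The role of the damping parameter a can be seen in a small numerical sketch of the Carr and Madan damped Fourier representation of a call price. For transparency, the integral is evaluated with a plain trapezoidal rule rather than an FFT, and the Black–Scholes characteristic function is used as a stand-in so that the result can be checked against a closed form; the NDIG c.f. of Equation (8) would be substituted in its place. Function names, the truncation point `v_max`, and the value a = 1.5 are illustrative assumptions.

```python
import cmath
import math


def bs_log_price_cf(u, s0, r, sigma, maturity):
    """c.f. of ln(S_T) under Black-Scholes; u may be complex."""
    drift = math.log(s0) + (r - 0.5 * sigma ** 2) * maturity
    return cmath.exp(1j * u * drift - 0.5 * sigma ** 2 * maturity * u * u)


def carr_madan_call(cf, strike, r, maturity, a=1.5, v_max=100.0, n=10000):
    """Call price from the damped Fourier integral (trapezoidal rule)."""
    k = math.log(strike)
    dv = v_max / n
    total = 0.0
    for j in range(n + 1):
        v = j * dv
        denom = a * a + a - v * v + 1j * (2.0 * a + 1.0) * v
        # Fourier transform of the damped call price exp(a*k) * C(k).
        psi = math.exp(-r * maturity) * cf(v - (a + 1.0) * 1j) / denom
        term = (cmath.exp(-1j * v * k) * psi).real
        total += (0.5 if j in (0, n) else 1.0) * term
    return math.exp(-a * k) / math.pi * total * dv


def bs_call(s0, strike, r, sigma, maturity):
    """Closed-form Black-Scholes call, used only as a benchmark."""
    d1 = ((math.log(s0 / strike) + (r + 0.5 * sigma ** 2) * maturity)
          / (sigma * math.sqrt(maturity)))
    d2 = d1 - sigma * math.sqrt(maturity)
    cdf = lambda z: 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return s0 * cdf(d1) - strike * math.exp(-r * maturity) * cdf(d2)


fourier_price = carr_madan_call(
    lambda u: bs_log_price_cf(u, 100.0, 0.05, 0.2, 1.0),
    strike=100.0, r=0.05, maturity=1.0)
closed_form_price = bs_call(100.0, 100.0, 0.05, 0.2, 1.0)
```

The damping only works when the c.f. is finite at v - (a+1)i, which is why a heavy-tailed model places an upper bound on a.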

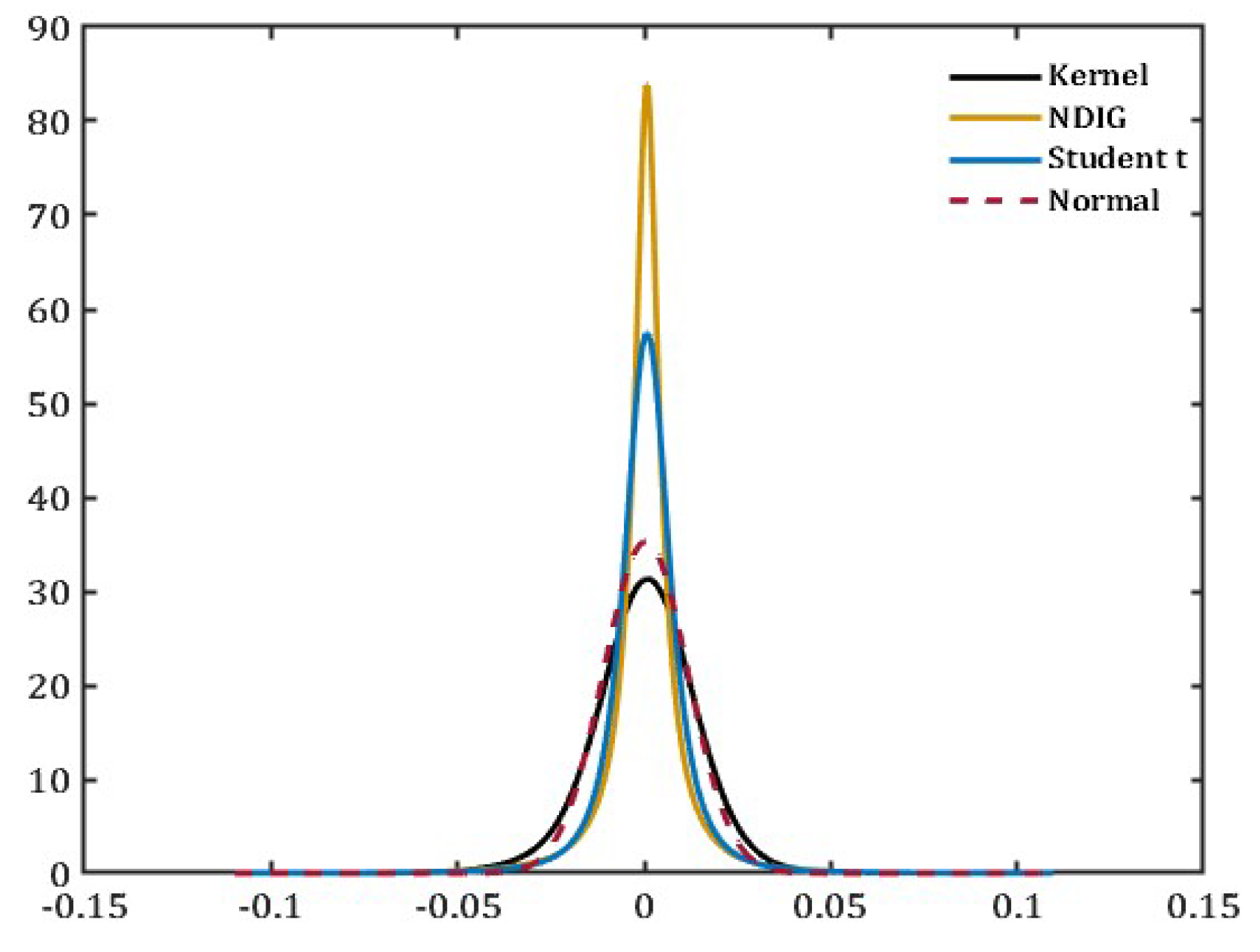

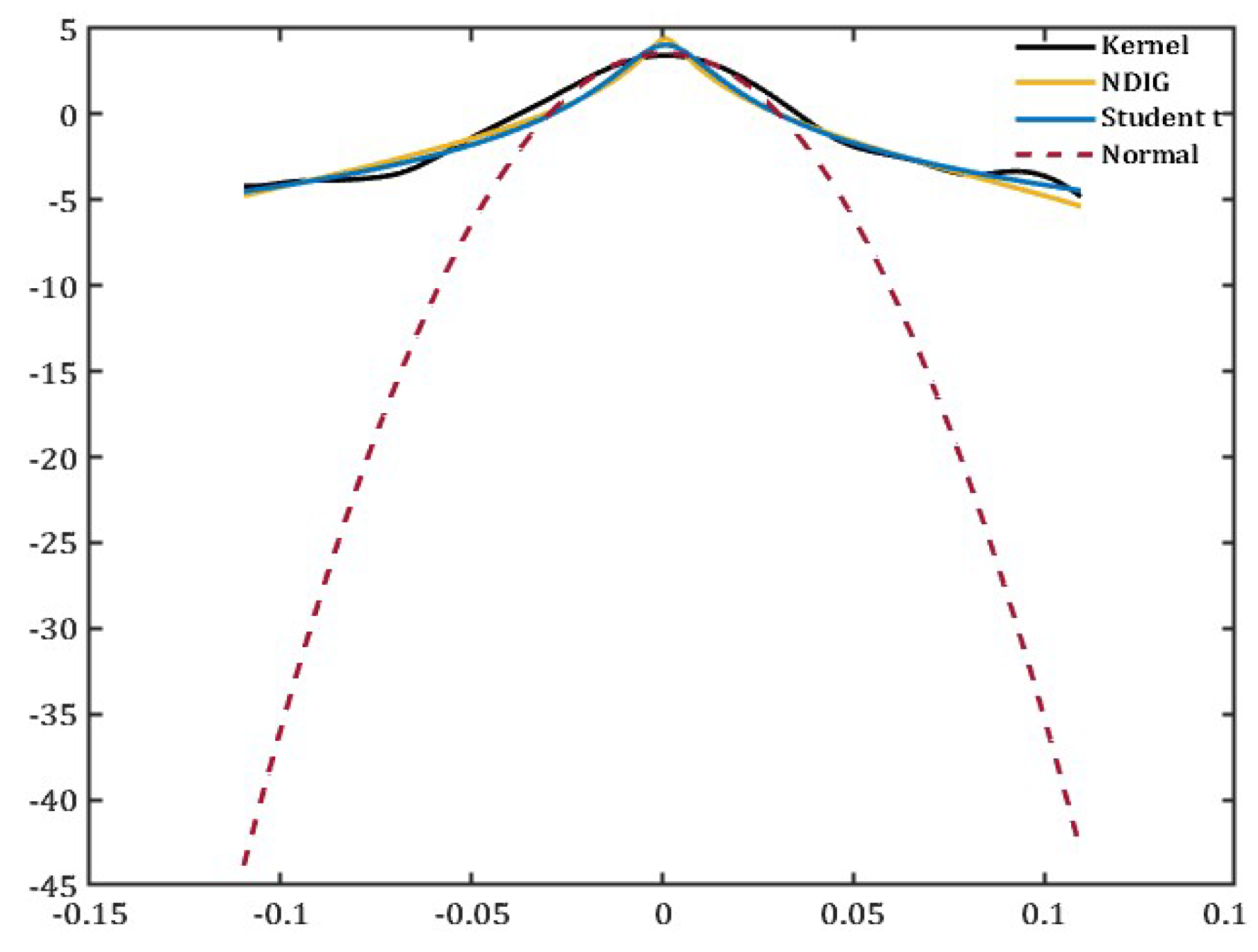

Figure 5 and Figure 6 compare the kernel density fits of the S&P500 options. As hypothesized, the density fit of S&P500 options showcases the fat tails that cannot be captured through a normal distribution. Comparing the NDIG fit to the Student’s t-distribution, we can see that NDIG is better at capturing the heavy tails in the data previously not accounted for when measuring uncertainty. Therefore, the method of using two subordinators to explain the volatility through the intrinsic time of the asset return process and the heavy tails of the data is an appropriate strategy.

4. Parameter Estimation

To estimate the parameters of the double subordinated log-price process, we fit the NDIG model described in the previous two sections to the S&P500 daily returns ranging from January 2, 2014 up to July 28, 2023. The NDIG model is explained in Equations (5), (6), (7), and (8), and is used to estimate six parameters: . We work with the assumption that the subordinators and are used to model the skewness and heavy tails (kurtosis) of the asset return process and the intrinsic time of the asset return time series, respectively.

Therefore, the estimation of the first four moments follows the same method as described in Shirvani et al. [14] to estimate parameters using the NDIG model for Bitcoin log returns.

The given minimization problem in Equation (11) is subject to the following five constraints to calculate the five choice variables or parameters using first-order conditions. The constraint for the first moment is ∼

. The constraint for the second moment is ∼

. The constraint for the third moment is ∼

. The constraint for the fourth moment is ∼

. The constraint for the c.f. is ∼

. Here,

denotes the asset return time series observed from the S&P500 options prices. Moreover,

depends on the one-to-one correspondence between the cdf and the c.f., as noted in Shirvani et al. [14]. We follow the method described in Yu [19] to estimate the integral of the c.f. The parameter estimates, obtained using the method of moments and by empirically fitting the c.f., are presented in Table 1.
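The empirical quantities entering this kind of estimation can be sketched as follows: the first four sample moments, the empirical characteristic function, and a squared distance between the empirical and a model c.f. on a grid. The quadratic distance is an assumed stand-in for the objective in Equation (11), which is not reproduced here, and the toy data and function names are hypothetical.

```python
import cmath
import math


def first_four_moments(xs):
    """Mean, variance, skewness and kurtosis of a sample."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m3 = sum((x - mean) ** 3 for x in xs) / n
    m4 = sum((x - mean) ** 4 for x in xs) / n
    return mean, m2, m3 / m2 ** 1.5, m4 / m2 ** 2


def empirical_cf(xs, v):
    """Empirical characteristic function: average of exp(i*v*x)."""
    return sum(cmath.exp(1j * v * x) for x in xs) / len(xs)


def cf_distance(xs, model_cf, grid):
    """Mean squared distance between empirical and model c.f. on a grid."""
    return sum(abs(empirical_cf(xs, v) - model_cf(v)) ** 2
               for v in grid) / len(grid)


# Toy data: three equally likely returns, for illustration only.
returns = [-1.0, 0.0, 1.0]
mean, variance, skewness, kurtosis = first_four_moments(returns)

# The exact c.f. of this three-point distribution is (1 + 2*cos(v)) / 3.
grid = [0.1 * j for j in range(1, 51)]
fit_error = cf_distance(returns, lambda v: (1 + 2 * math.cos(v)) / 3, grid)
```

In an actual fit, `model_cf` would be the NDIG c.f. evaluated at candidate parameters, and the distance would be minimized subject to the moment constraints.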

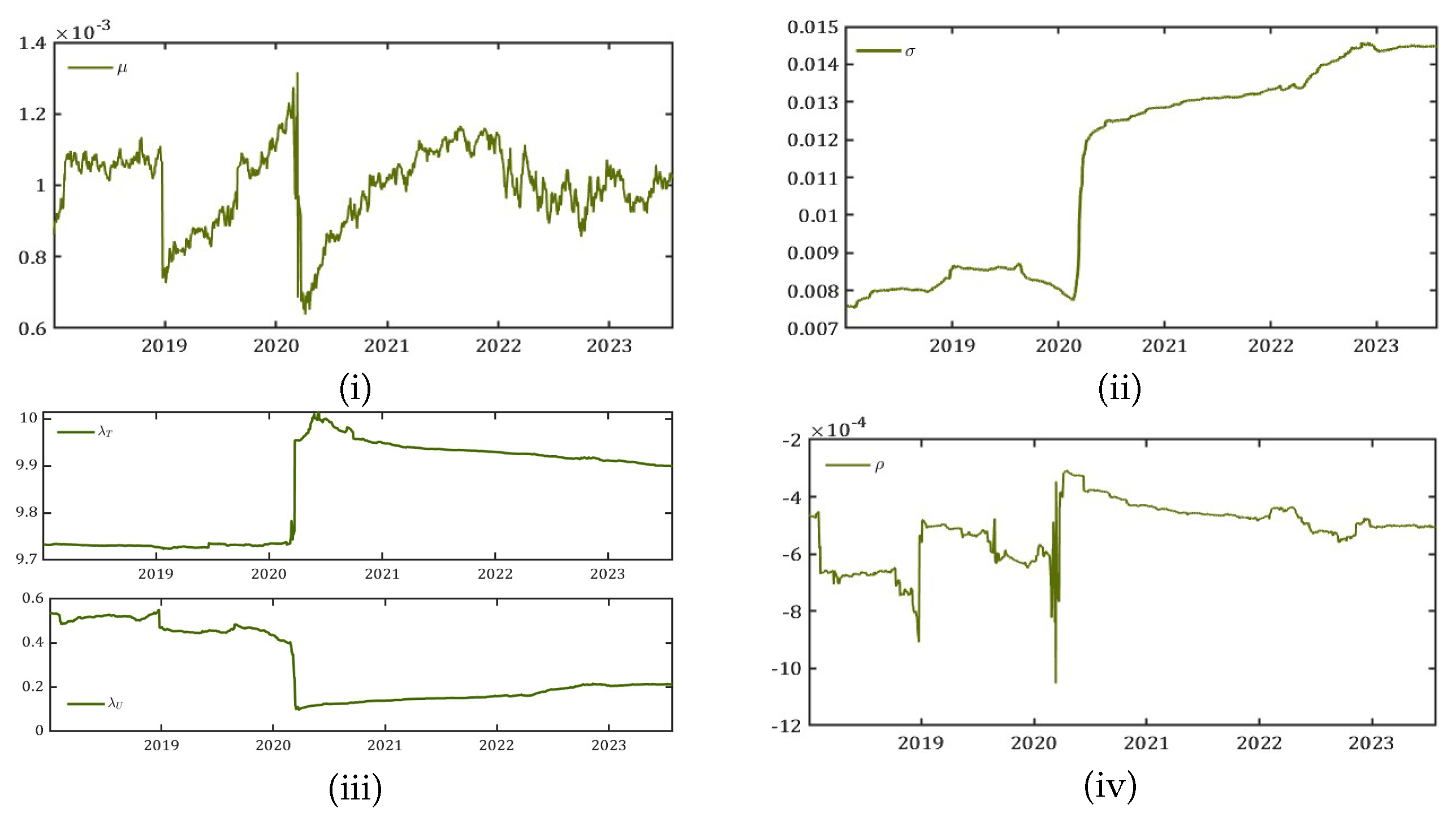

In addition, we estimate the parameters in four-year moving windows to examine the behavior of the first four moments of the data. While

and

remain on a constant path throughout the period used in the estimation of the parameters, we see significant movements in the drift term

, the volatility parameter

,

,

and

.

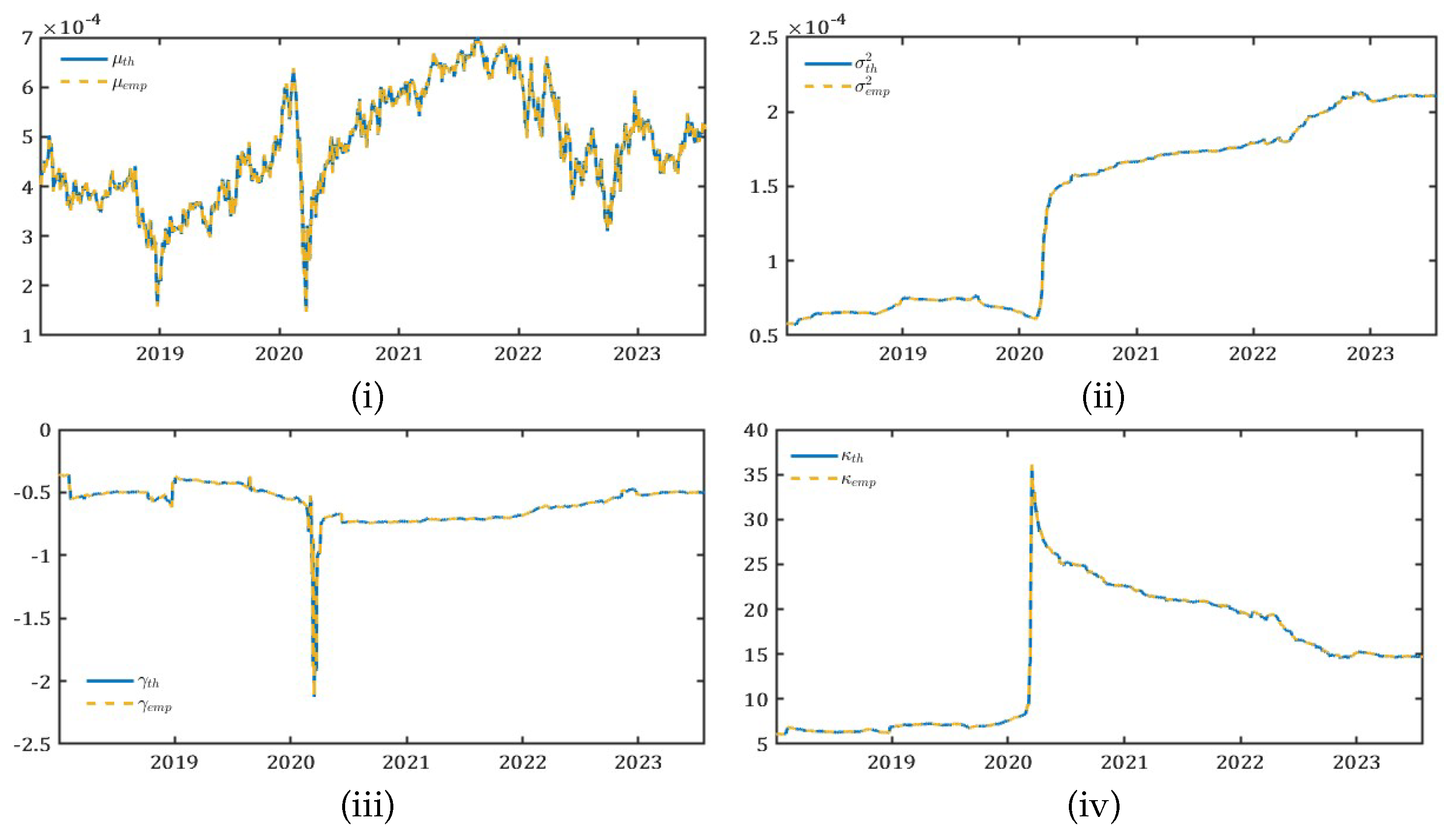

Figure 7 shows the rolling window parameter estimates.

5. Multiple Subordinated Models of Volatility

The VIX, a product of the CBOE, is often called the “fear gauge” of the market and measures the market’s expectation of volatility over the next 30 days implied by S&P500 option prices. Most of the literature on uncertainty shocks captures volatility in the financial market either through the standard deviation of the VIX or by incorporating time-varying volatility clustering as shocks into macroeconomic models. First, using the standard deviation works only when the underlying data follow a symmetric distribution, yet it is evident from Section 2 that the VIX follows a non-normal distribution. This leads to an underestimation of the true magnitude of uncertainty shocks from the VIX. Second, a popular approach to computing uncertainty shocks is to examine the time-varying volatility clustering of the VIX.

Time-varying or stochastic volatility models capture the variability of the VIX over time. Since uncertainty is not constant, models such as the Generalized Autoregressive Conditional Heteroskedasticity (GARCH) model and its variant, the Fractionally Integrated GARCH (FIGARCH) model, are used to capture the time-varying nature of volatility clustering. These models account for the tendency of high-volatility periods to be followed by further high volatility, while low-volatility periods are often followed by low volatility. By analyzing this clustering in the VIX, it is possible to identify periods with increased uncertainty.
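The clustering mechanism can be seen in a minimal GARCH(1,1) recursion, in which today's conditional variance depends on yesterday's squared shock and yesterday's variance. The sketch covers plain GARCH(1,1) only, not FIGARCH, and the parameter values are hypothetical.

```python
import math
import random


def simulate_garch11(n, omega, alpha, beta, seed=0):
    """Simulate returns with var_t = omega + alpha*eps_{t-1}^2 + beta*var_{t-1}."""
    if alpha + beta >= 1.0:
        raise ValueError("alpha + beta must be below 1 for a finite variance")
    rng = random.Random(seed)
    var = omega / (1.0 - alpha - beta)  # start at the unconditional variance
    returns, variances = [], []
    for _ in range(n):
        eps = math.sqrt(var) * rng.gauss(0.0, 1.0)
        returns.append(eps)
        variances.append(var)
        # A large shock today raises tomorrow's conditional variance.
        var = omega + alpha * eps * eps + beta * var
    return returns, variances


# Hypothetical daily parameters with high persistence (alpha + beta = 0.98).
garch_returns, garch_variances = simulate_garch11(
    1000, omega=2e-6, alpha=0.08, beta=0.90, seed=11)
```

With alpha + beta close to one, a single large shock keeps the conditional variance elevated for many periods, which is the clustering described above.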

However, a key issue in computing the volatility surfaces of the VIX is the use of local volatility models. These models treat volatility as a deterministic function of the current asset price and time t and use it to compute the value of the volatility surfaces based on near-term and next-term expirations of the S&P500 options.¹ Because local volatility models are deterministic functions, rather than stochastic functions that account for past observed asset mean returns and volatility, they fail to reconcile with the fundamental theorem of asset pricing, which makes them inconsistent with the dynamics of the financial market. Moreover, there are two reasons why local volatility measures are inferior to multiple subordinated models, which in our case account for stochastic volatility and the heavy tails of the data distribution.

Consider an agent who can continuously trade a risky asset and a risk-free bond in their portfolio, assuming that markets are complete and asset prices clear all markets. There are no financial frictions in the market, and agents observe the information about the assets available at time t. The first reason why local volatility models fail is that, with two sources of uncertainty, namely the time-varying nature of the asset return process and the fat tails and skewness of options prices, the agent trading a risky asset and a riskless bond cannot hedge their risk perfectly (Shirvani et al. [20]), and thus remains exposed to one of the two channels of risk.

The second reason why local volatility models fail is that they are not semi-martingales,² implying that the fundamental theorem of asset pricing fails. Delbaen and Schachermayer [22] exposit the fundamental theorem of asset pricing for unbounded stochastic processes. The theorem states that the absence of arbitrage possibilities for a stochastic process

is equivalent to the existence of an equivalent martingale measure for

. Let

be an

-valued semi-martingale defined on the stochastic basis

. Then

satisfies the condition of “No Free Lunch with Vanishing Risk”³ if and only if there exists a probability measure

such that

is a sigma-martingale with respect to

.

Figure 8. Comparison of the four moments, theoretical vs. empirical, in panels (i) to (iv). “Th”: theoretical moments estimated from fitted parameters. “Emp”: empirical moments computed from the S&P500 option prices.

As a result, the local volatility model does not generally satisfy the semi-martingale property because of its deterministic nature, and so it does not reconcile with the fundamental theorem of asset pricing. Instead, the price process may exhibit characteristics such as explosions, which prevent a decomposition into a local martingale plus a finite variation process. One way to address this failure of local volatility measures is to assume that a proxy for volatility (like the VIX) is a tradable asset. In that case, the market is complete, and the pricing model becomes a bivariate semi-martingale (Shirvani et al. [20]). Therefore, multiple subordinated processes can be applied to a bivariate semi-martingale (for instance, a stochastic process including bivariate cases), and the resulting process will generally retain the semi-martingale property (Barndorff-Nielsen and Shephard [24]), depending on the characteristics of the subordinators.

Furthermore, Shirvani et al. [17] introduced the intrinsic time volatility or volatility subordinator model to reflect the heavy-tail phenomena present in asset returns. They studied the question of whether the VIX is a volatility index that adequately reflects the intrinsic time and showed that the volatility index fails to appropriately capture the intrinsic time for the SPDR S&P 500. The VIX, as a measure of time change, does not reflect all the information required to correctly capture the skewness and the fat tails of the S&P 500 index. Hence, an NDIG Lévy process model with a time-varying volatility subordinator adequately accounts for the measure of intrinsic time.

Kelly and Jiang [5] developed a tail risk measure that is correlated with the tail risk measure extracted from S&P500 options and negatively predicts real economic activity. In their methodology, they correctly explain that dynamic tail risk estimates are infeasible in a univariate time series model because of the infrequent nature of extreme events. However, a major drawback of their paper is that the authors do not explain how to handle the family of extreme negative thresholds,

. For instance, if we estimate the tail parameter and find that the tail is excessively heavy and wish to purchase insurance on the portfolio consisting of assets with returns

, the method falls short. They also fail to distinguish whether the returns considered are logarithmic or arithmetic, which is crucial for tail estimation. In addition, they do not discuss the idea of portfolio insurance (option pricing) when the tails have a Pareto distribution. For instance, the agent cannot estimate the risk using their benchmark model and then buy puts as portfolio insurance instruments using the Black–Scholes–Merton model, since that model assumes Gaussianity (thin tails) and therefore leads to risk estimates that deviate from the true values.

Orlik and Veldkamp [11] and Kozeniauskas et al. [3] provide extensions to tail risk estimations to compute uncertainty shocks. However, they only explain one channel of uncertainty, that of the heavy tails embedded in the idea of the rare disaster hypothesis. Moreover, the idea of a semi-martingale with heavy-tailed behavior raises additional concerns. While symmetric Pareto distributions are infinitely divisible, they do not support option pricing because the Esscher transform⁴ requires an exponential moment. Our approach solves this problem and provides a more robust framework for risk assessment and option pricing. The approach of using NDIG Lévy processes allows us to estimate the risk of a portfolio (or individual assets) consistently with the Fundamental Asset Pricing Theorem (Duffie [26]).

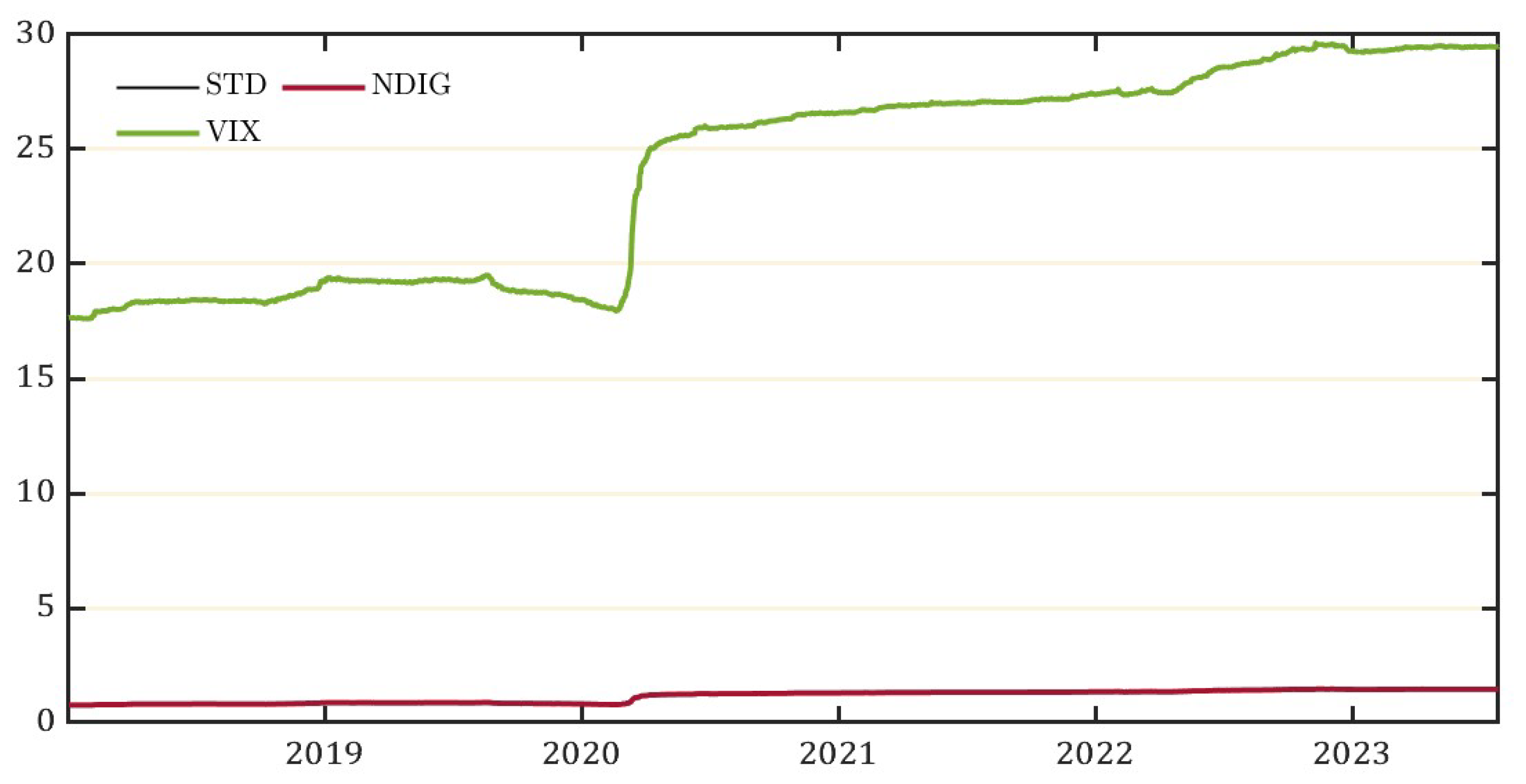

Based on the estimation power of the multiple subordinated models to account for volatility measures, we estimate the historical volatility of the VIX and report it along with the second moment from the NDIG estimates. Figure 9 presents both results in a 1008-day rolling window based on the sample period of the data used for the estimation. To calculate the value of the VIX index from S&P500 option prices, we use the same index formula that the CBOE specifies:

In Equation (12), 1 and 2 refer to near-term and next-term option expiration times. Near-term refers to option contracts expiring in 23–30 days, and next-term to contracts expiring in 31–37 days. The weights specified by

, where

, denote the expiration times post normalization accurate to the minute. We impose the following constraints on the weights:

and

along with

. Shirvani et al. [14] provide the functional form of the weights (which we follow) along with an expression describing the near-term and next-term volatilities captured by

.
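The interpolation step of the index can be sketched as below. The code follows the publicly documented CBOE 30-day interpolation between the near-term and next-term variances; since Equation (12) and the exact form of the weights are not reproduced here, the variable names and the minute-based time convention are assumptions, and the two input variances are taken as given rather than computed from option prices.

```python
import math

MINUTES_PER_DAY = 24 * 60
MINUTES_30_DAYS = 30 * MINUTES_PER_DAY
MINUTES_365_DAYS = 365 * MINUTES_PER_DAY


def interpolate_vix(var_near, var_next, minutes_near, minutes_next):
    """30-day volatility index from near- and next-term variances.

    var_near, var_next : annualized variances for the two expirations
    minutes_near/next  : minutes to each expiration
    """
    t_near = minutes_near / MINUTES_365_DAYS
    t_next = minutes_next / MINUTES_365_DAYS
    span = minutes_next - minutes_near
    # Interpolation weights: non-negative and summing to one whenever
    # minutes_near <= 30 days <= minutes_next.
    w_near = (minutes_next - MINUTES_30_DAYS) / span
    w_next = (MINUTES_30_DAYS - minutes_near) / span
    var_30 = ((t_near * var_near * w_near + t_next * var_next * w_next)
              * MINUTES_365_DAYS / MINUTES_30_DAYS)
    return 100.0 * math.sqrt(var_30)


# Hypothetical inputs: 25 and 35 days to expiration, flat 20% volatility.
index_level = interpolate_vix(0.04, 0.04,
                              25 * MINUTES_PER_DAY, 35 * MINUTES_PER_DAY)
```

When both expirations carry the same variance, the interpolation returns that volatility unchanged (here an index level of 20), which is a convenient sanity check on the weights.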

To compute the values of the revised VIX based on Equation (12), where the option prices are modeled using the NDIG log-price process, we use the multiple subordinated model to calculate the prices of the European call and put options with near- and next-term expirations. Therefore, by using the subordinated method, we derive a revised measure of volatility using the parameters estimated by the method of moments. The volatility of a unit increase of

, the log-price process of S&P500, is the NDIG volatility that accounts for both the geometric Brownian motion and the two Lévy subordinators of the model.

Furthermore, the rolling NDIG parameters are estimated from the S&P500 daily returns from January 2, 2014, up to July 28, 2023. We specify the window size to be 1008 by accounting for 252 trading days in a year and choosing four years as our moving window. Hence, we derive the annualized volatility implied by the NDIG volatility. To match the scale of the estimates of historical volatility and the intrinsic-time volatility implied by the NDIG model, we linearly scale the estimates given in

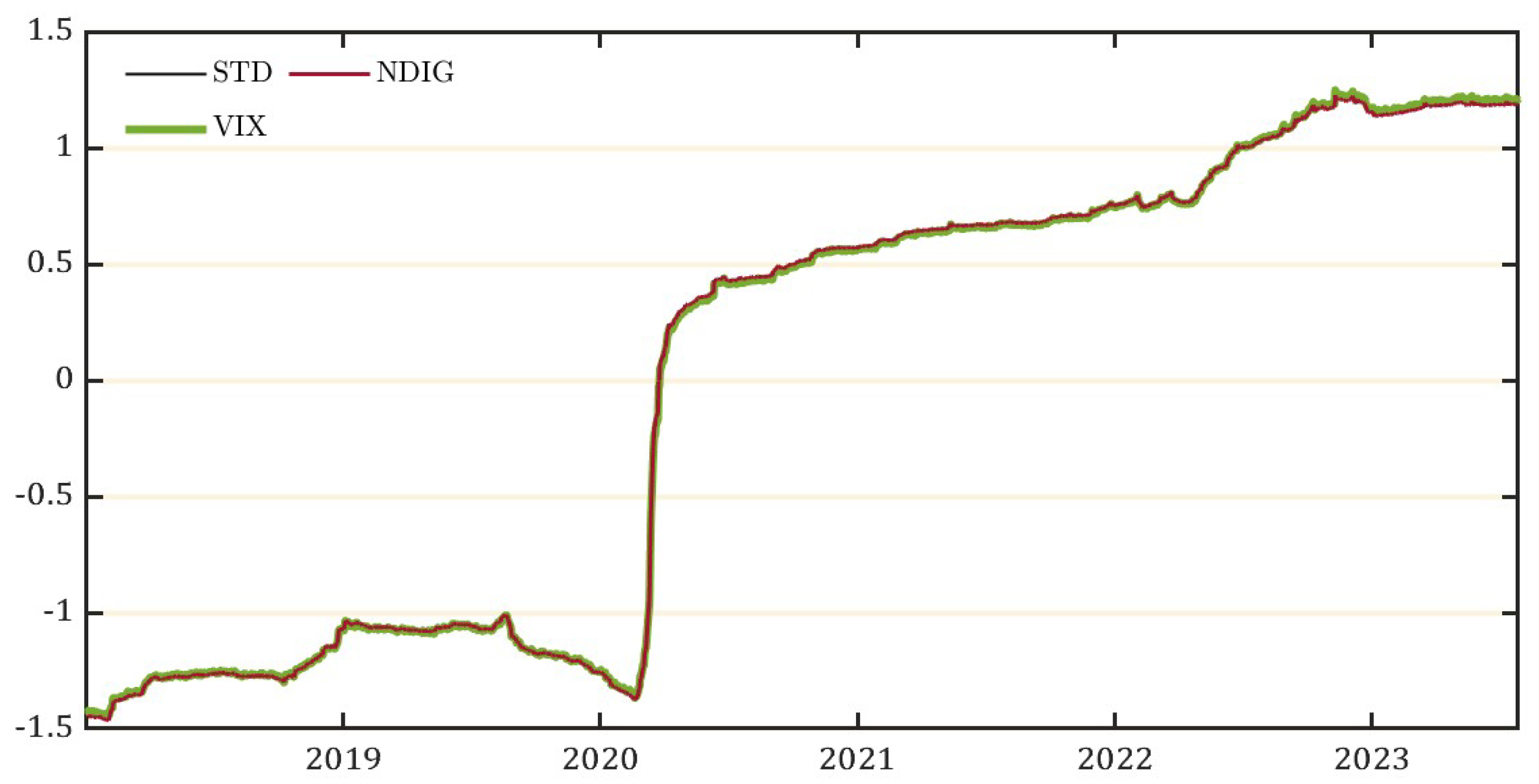

Figure 9 by reducing the mean to zero and scaling the variance to 1.

Figure 10 presents the normalized Volatility of VIX as our revised VIX index that explains the volatility of the financial market using intrinsic time volatility and heavy tails of the distribution.
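The windowing and rescaling steps can be sketched as follows. The sketch computes a rolling annualized standard deviation and then standardizes the resulting series to zero mean and unit variance; it uses the sample standard deviation of returns rather than the NDIG-implied volatility, and the use of the population standard deviation is an assumption.

```python
import math
import statistics


def rolling_annualized_vol(returns, window=1008, periods_per_year=252):
    """Annualized standard deviation over each full trailing window."""
    out = []
    for end in range(window, len(returns) + 1):
        sd = statistics.pstdev(returns[end - window:end])
        out.append(sd * math.sqrt(periods_per_year))
    return out


def standardize(series):
    """Linearly rescale a series to zero mean and unit variance."""
    mean = statistics.fmean(series)
    sd = statistics.pstdev(series)
    return [(x - mean) / sd for x in series]


# Toy example with a short window, for illustration only.
toy_returns = [0.01, -0.01] * 10
toy_vol = rolling_annualized_vol(toy_returns, window=4)
z_scores = standardize([1.0, 2.0, 3.0, 4.0])
```

Standardizing both the historical and the model-implied series in this way puts them on a common scale, so that their jumps can be compared directly.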

Figure 10 compares the historical volatility with the intrinsic time volatility. The revised index captures the jumps and diffusions in the markets that were previously unaccounted for and that are crucial in estimating uncertainty as a macro-volatility in financial markets. There are two key facts to note about the revised VIX index. First, we see a jump in March 2020, which captures the large crash in S&P500 daily returns after uncertainty about economic conditions rose sharply on news of a global pandemic. Considering the impact generated by persistent volatility, the NDIG model preserves the volatility measure implied by the intrinsic time subordinator.

Second, along the path of persistent post-pandemic volatility, we see another jump in volatility in early 2022, when markets plummeted following consecutive hikes in the federal funds rate, fears surrounding the outbreak and continuation of the geopolitical conflict between Russia and Ukraine, and the tech stock selloff triggered by an unexpected fall in tech firms’ reported earnings.

6. Risk–Reward Ratios Over Fractional Time Series

R/R ratios offer a balanced approach to exploring the potential gains and losses in the financial market due to violent market movements. These measures help address the asymmetry in risk perceptions and the potential for large losses, and are thereby helpful in extracting meaningful signals from the volatility noise that are not accounted for when using measures of dispersion over symmetric distributions. Using an axiomatic approach, every performance measure or R/R ratio should satisfy four properties: first, monotonicity, which means that more is better than less; second, quasi-concavity, which leads to preferences that value averages higher than extremes and thus encourages diversification; third, scale invariance; and fourth, being distribution-based.

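Let $\mathcal{X}$ be a convex set of random variables on a probability space $(\Omega, \mathcal{F}, \mathbb{P})$. Each element $X \in \mathcal{X}$ denotes a financial return over a fixed time length. Given these conditions, consider an R/R ratio of the following form:

$$\alpha(X) = \frac{\theta(X)^{+}}{\rho(X)^{+}}$$

for a reward measure $\theta$ and a risk measure $\rho$. In addition, $\theta(X)^{+}$ denotes $\max\{\theta(X), 0\}$ and $\rho(X)^{+}$ denotes $\max\{\rho(X), 0\}$. The ratio $\alpha$ should satisfy the following two conditions:

- (1) (M) Monotonicity: $\alpha(X) \geq \alpha(Y)$ for all $X, Y \in \mathcal{X}$ such that $X \geq Y$.

- (2) (Q) Quasi-Concavity: $\alpha(\lambda X + (1-\lambda) Y) \geq \min\{\alpha(X), \alpha(Y)\}$ for all $X, Y \in \mathcal{X}$ and $\lambda \in [0, 1]$.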

Cheridito and Kromer [27] explain that monotonicity is a minimal requirement that every performance indicator should satisfy. It simply implies that more of a financial return is better than less and preferred by all agents. Moreover, quasi-concavity can explain the aversion to uncertainty. If the ratio $\alpha$ is monotonic and quasi-concave, averages are preferred to extremes and diversification is encouraged. In cases when $\alpha$ does not satisfy quasi-concavity, there are $X, Y \in \mathcal{X}$ and a scalar $\lambda \in (0, 1)$ such that $\alpha(\lambda X + (1-\lambda) Y) < \min\{\alpha(X), \alpha(Y)\}$. In such a case, research on Value-at-Risk (VaR) (Artzner et al. [28]) shows that there will be a concentration of risk.

Moreover, there is a large family of R/R ratios that also satisfy the following conditional properties:

- (1) (S) Scale-Invariance: $\alpha(\lambda X) = \alpha(X)$ for all $X \in \mathcal{X}$ and $\lambda > 0$ such that $\lambda X \in \mathcal{X}$.

- (2) (D) Distribution-based: $\alpha(X)$ only depends on the distribution of $X$ under $\mathbb{P}$.

Given that performance ratios should satisfy the first two mandatory properties and the two conditional properties, we can prove the functional properties of the ratio $\alpha$ to make the ratios micro-founded so as to explain the meaning of the signals contained in $\alpha$.

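Proposition 1: Let the ratio $\alpha = \theta^{+}/\rho^{+}$, with reward measure $\theta$ and risk measure $\rho$, follow the form as described in Equation (7):

- (1) If $\theta(X) \geq \theta(Y)$ and $\rho(X) \leq \rho(Y)$ for all $X, Y \in \mathcal{X}$ such that $X \geq Y$, then $\alpha$ satisfies the monotonicity property (M).

- (2) If $\theta$ is concave and $\rho$ convex, then $\alpha$ satisfies the quasi-concavity property (Q).

- (3) If $\theta(\lambda X) = \lambda\theta(X)$ and $\rho(\lambda X) = \lambda\rho(X)$ for all $X \in \mathcal{X}$ and $\lambda > 0$ such that $\lambda X \in \mathcal{X}$, then $\alpha$ satisfies the scale-invariance property (S).

- (4) If $\theta$ and $\rho$ satisfy the distribution-based property (D), then so does $\alpha$.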

The proof is straightforward and is given in Cheridito and Kromer [27].
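As a concrete instance of a ratio of reward to risk with both parts floored at zero, the sketch below uses the sample mean as the reward measure and the empirical expected shortfall as the risk measure. This particular pair, the tail level, and the conventions 0/0 = 0 and x/0 = infinity for x > 0 are illustrative assumptions, not necessarily the ratios used in this paper.

```python
import math


def expected_shortfall(xs, level=0.05):
    """Average loss over the worst `level` fraction of outcomes."""
    ordered = sorted(xs)
    k = max(1, int(math.floor(level * len(xs))))
    return -sum(ordered[:k]) / k


def reward_risk_ratio(xs, level=0.05):
    """Mean over expected shortfall, each floored at zero."""
    reward = max(sum(xs) / len(xs), 0.0)
    risk = max(expected_shortfall(xs, level), 0.0)
    if risk == 0.0:
        # Assumed conventions: 0/0 = 0 and x/0 = +infinity for x > 0.
        return math.inf if reward > 0.0 else 0.0
    return reward / risk


# Toy returns, for illustration only.
toy = [-0.04, -0.02, 0.0, 0.01, 0.02, 0.03, 0.04, 0.04]
base = reward_risk_ratio(toy, level=0.25)
scaled = reward_risk_ratio([3.0 * x for x in toy], level=0.25)
shifted = reward_risk_ratio([x + 0.01 for x in toy], level=0.25)
```

Scaling every return by the same positive factor leaves the ratio unchanged, illustrating (S), while adding a constant gain to every outcome does not lower it, in line with (M).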

One of the key issues when measuring the R/R ratios over the revised VIX is that while computing performance ratios over a convex set of random variables generates independent and identically distributed (i.i.d.) variables, the financial return itself is not i.i.d., which hinders the identification of uncertainty shocks as i.i.d. To mitigate this, we fit a fractional time series model that takes into account the long memory of the mean and volatility exhibited in the time series data. Baillie et al. [29] introduce the FIGARCH (Fractionally Integrated GARCH) model, demonstrating that traditional GARCH models are inadequate for capturing long memory in volatility. This finding highlights the need for fractional integration in volatility modeling to better reflect persistent effects in financial time series. Similarly, Hyung and Franses [30] show that long memory in both the mean and variance processes is better modeled and captured using Autoregressive Fractionally Integrated Moving Average-Fractionally Integrated GARCH (ARFIMA-FIGARCH) models. Hence, the goal of the present paper is to emphasize the use of fractional time series models to capture the long memory that is explained by the multiple subordinated NIG Lévy process.

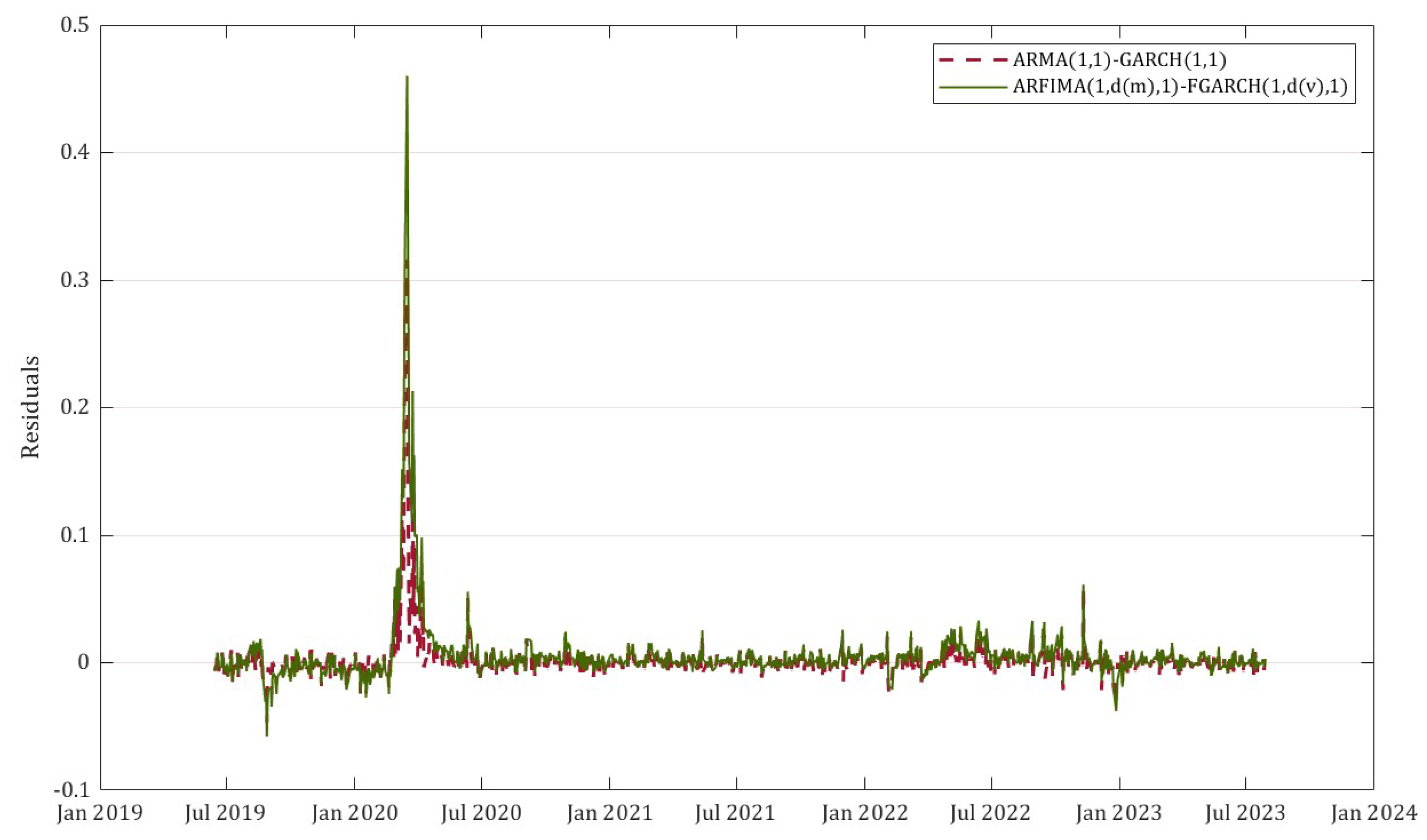

Figure 11 illustrates the difference between the innovations of the ARFIMA(1, $d_m$, 1)-FIGARCH(1, $d_v$, 1) model and those of the Autoregressive Moving Average-GARCH model of lag 1 and order 1 (ARMA(1,1)-GARCH(1,1)), both fitted to the values of the newly constructed normalized VVIX. The fractional time series model is better at capturing the persistent effects created by the shocks implied by the newly constructed volatility index.

Figure 11.

Residuals of the fitted time series models

The long memory in the mean, captured by the ARFIMA model, refers to the persistence with which past values of a time series influence future values over long periods. In financial time series, long memory in the mean implies that past values have a significant, slowly decaying influence on future values. Innovations to the series therefore do not fade away quickly but continue to affect the mean for a long time. Whereas the effect of a shock in a standard ARMA model decays exponentially, ARFIMA models allow a slower, hyperbolic decay, which characterizes long memory. Similarly, a time series has long memory in its volatility when past volatility (variance) persists over time: large shifts in volatility cluster and stay accentuated for long periods before decaying to normal levels. Long memory in volatility is present in financial time series, where periods of high volatility (e.g., during a financial crisis) tend to last for extended periods and generate persistent shocks.
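The contrast between hyperbolic and exponential decay can be made concrete with a small numerical sketch. It computes the weights that the fractional difference operator $(1-L)^d$ places on past observations and compares them with the geometric weights of an AR(1) process. The value 0.268 is the mean long-memory parameter reported in the text; the function name `frac_diff_weights` and the AR(1) coefficient 0.5 are hypothetical choices made only for this illustration, not part of the paper's estimation.

```python
import numpy as np


def frac_diff_weights(d: float, n_lags: int) -> np.ndarray:
    """Coefficients pi_k in (1 - L)^d = sum_k pi_k L^k for k = 0..n_lags."""
    weights = np.empty(n_lags + 1)
    weights[0] = 1.0
    for k in range(1, n_lags + 1):
        # Binomial-series recursion: pi_k = pi_{k-1} * (k - 1 - d) / k
        weights[k] = weights[k - 1] * (k - 1 - d) / k
    return weights


d_mean = 0.268   # fractional differencing parameter reported in the text
phi = 0.5        # hypothetical AR(1) coefficient, used only for comparison
lags = np.arange(201)

frac = np.abs(frac_diff_weights(d_mean, 200))  # hyperbolic (slow) decay
geom = phi ** lags                             # exponential (fast) decay

for k in (1, 10, 50, 200):
    print(f"lag {k:3d}: fractional {frac[k]:.2e}   AR(1) {geom[k]:.2e}")
```

At long lags the fractional weights remain many orders of magnitude larger than the geometric ones, which is the sense in which shocks "do not fade away quickly" under long memory.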

Therefore, to capture the long memory of the mean and volatility exhibited by the time series of the normalized VVIX constructed in this paper, we apply the ARFIMA(1, $d_m$, 1)-FIGARCH(1, $d_v$, 1) model, where $d_m$ is the term describing the long memory of the mean and $d_v$ is the term describing the long memory of the volatility. The time series follows the process:

In Equation (14), L is the lag operator and the fractional differencing parameter of the mean is reported to be 0.268. The remaining terms are the autoregressive polynomial, the MA polynomial, the vector of values of the normalized VVIX, and the vector of white noise error terms.

In Equation (15), the terms are the autoregressive polynomial and the fractional differencing parameter for volatility, reported to be 0.01. The square of the white noise error term captures the conditional variance generating persistent volatility. The equation also contains a constant term, a lag polynomial, and the vector of normal innovations. To allow for long memory in the fractional time series, we require the fractional differencing parameters to be strictly positive. When they are zero, the model is a standard ARMA(1,1)-GARCH(1,1) process.
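For orientation, the textbook forms of the two components can be written as follows. This is a sketch in assumed notation: the symbols $y_t$, $\mu$, $\phi$, $\theta$, $\varphi$, $\beta$, $\omega$, $d_m$ and $d_v$ are labels chosen here, the variance equation follows the FIGARCH form of Baillie et al. [29], and the display may differ in detail from Equations (14) and (15).

```latex
\begin{align}
\phi(L)\,(1-L)^{d_m}\,(y_t-\mu) &= \theta(L)\,\varepsilon_t, \\
\varphi(L)\,(1-L)^{d_v}\,\varepsilon_t^{2} &= \omega + \bigl[1-\beta(L)\bigr]\,\nu_t,
\qquad \nu_t = \varepsilon_t^{2}-\sigma_t^{2}.
\end{align}
```

Setting $d_m = d_v = 0$ removes both fractional difference operators, which is how the specification collapses to the ARMA(1,1)-GARCH(1,1) case when the polynomials are of first order.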

Furthermore, it is essential to determine whether the innovations contain a predictable signal in the noise, as defined by the performance ratios. Such a signal would make markets inefficient, because agents could use the revised VIX to forecast the price of volatility. To detect a predictable signal in the noise, we simulate $S$ scenarios of the normalized VVIX (with NDIG distribution) over the ARFIMA(1, $d_m$, 1)-FIGARCH(1, $d_v$, 1) process defined by Equations (14) and (15). We compute the Rachev ratio and the Stable Tail Adjusted Return ratio (see footnote 5) over the $S$ scenarios to extract predictable signals from the volatility noise.

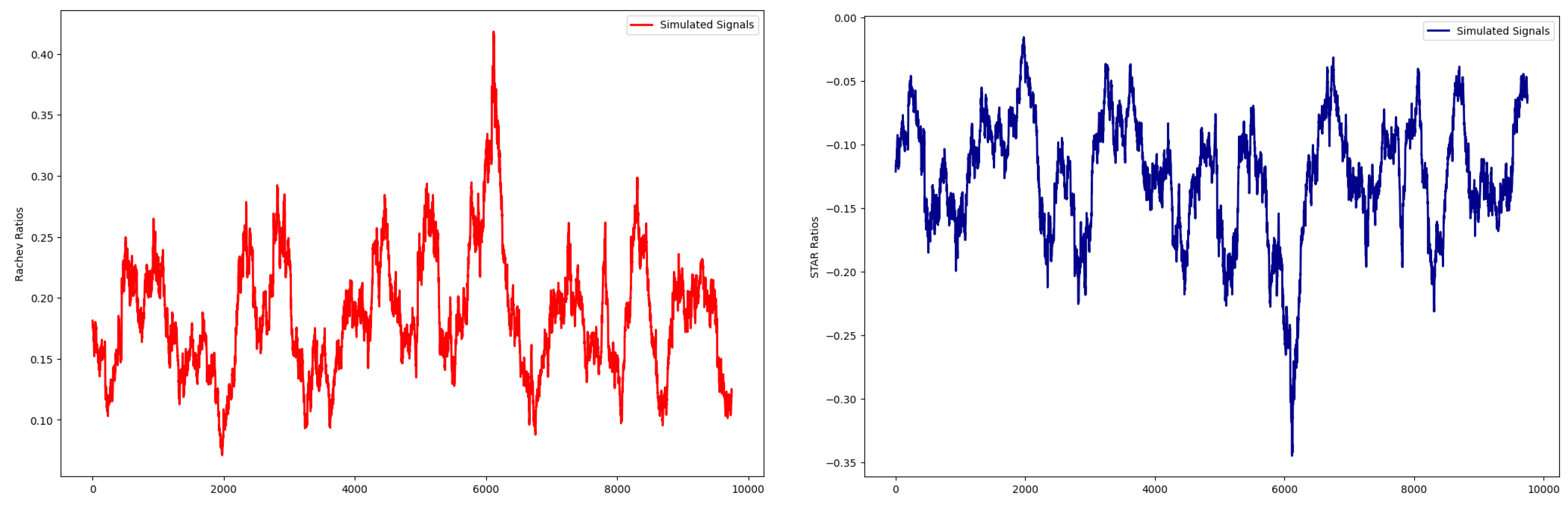

Figure 12 shows the performance ratios computed over the simulated scenarios.

The signals simulated from the fractional time series process show substantial randomness in the volatility noise, with volatility clustering but no predictive power. Because no identifiable pattern in the volatility noise would enable an agent to forecast the price of volatility, we conclude that the revised measure of the VIX generates random volatility noise and is consistent with the Efficient Market Hypothesis.

7. Identification Strategy

To identify i.i.d. shocks from the normal innovations extracted using Equations (14) and (15), we compute R/R ratios over the residuals of the ARFIMA(1, $d_m$, 1)-FIGARCH(1, $d_v$, 1) process. For illustrative purposes, we compute two performance ratios (see footnote 6), namely the Rachev ratio and the STAR ratio, over the normal innovations. The following are the functional forms of the two ratios.

- (1) Rachev Ratio (Equation (16)): the Average Value at Risk (AVaR) of $-X$ divided by the AVaR of $X$, where $X$ is the measure of interest, in this case the normal innovations of the revised VIX. The level in the numerator refers to the confidence level on the right tail, whereas the level in the denominator refers to the confidence level on the left tail. While the Rachev ratio satisfies the properties (M), (S), and (D), it violates (Q) due to a non-concave numerator.

- (2) Stable Tail Adjusted Return Ratio (STAR Ratio, Equation (17)): the expected value of $X$ divided by the Average Value at Risk of $X$ at a given level. STARR satisfies all four axioms, namely (M), (Q), (S), and (D), and is therefore axiomatically robust.
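A minimal sketch of how the two ratios could be estimated from a sample is given below. It assumes the common empirical estimator of AVaR (minus the mean of the worst share of outcomes) and omits the positive-part truncation used in some formal definitions. The function names, the 5% tail levels, and the Student-t sample standing in for the model innovations are all hypothetical choices for illustration, not the paper's implementation.

```python
import numpy as np


def avar(x, level):
    """Empirical Average Value at Risk: minus the mean of the worst
    `level` share of outcomes (assumed estimator, not from the paper)."""
    ordered = np.sort(np.asarray(x, dtype=float))
    k = max(1, int(np.ceil(level * ordered.size)))
    return -ordered[:k].mean()


def rachev_ratio(x, beta=0.05, gamma=0.05):
    """AVaR of -X at level beta (right tail of X) over AVaR of X at
    level gamma (left tail of X)."""
    x = np.asarray(x, dtype=float)
    return avar(-x, beta) / avar(x, gamma)


def star_ratio(x, gamma=0.05):
    """Sample mean of X over AVaR of X at level gamma."""
    x = np.asarray(x, dtype=float)
    return x.mean() / avar(x, gamma)


# Placeholder heavy-tailed sample standing in for the model innovations.
rng = np.random.default_rng(0)
innovations = rng.standard_t(df=4, size=5000)

print("Rachev ratio:", rachev_ratio(innovations))
print("STAR ratio:  ", star_ratio(innovations))
```

Both estimators are undefined when the denominator AVaR is zero, so a production version would need to guard against that case. Multiplying the sample by a positive constant leaves both ratios unchanged, which is the scale-invariance property (S) in numerical form.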

Equations (16) and (17) will be used as the benchmark performance ratios for computing the uncertainty shocks (signals from the volatility noise) that follow the ARFIMA(1, $d_m$, 1)-FIGARCH(1, $d_v$, 1) process.

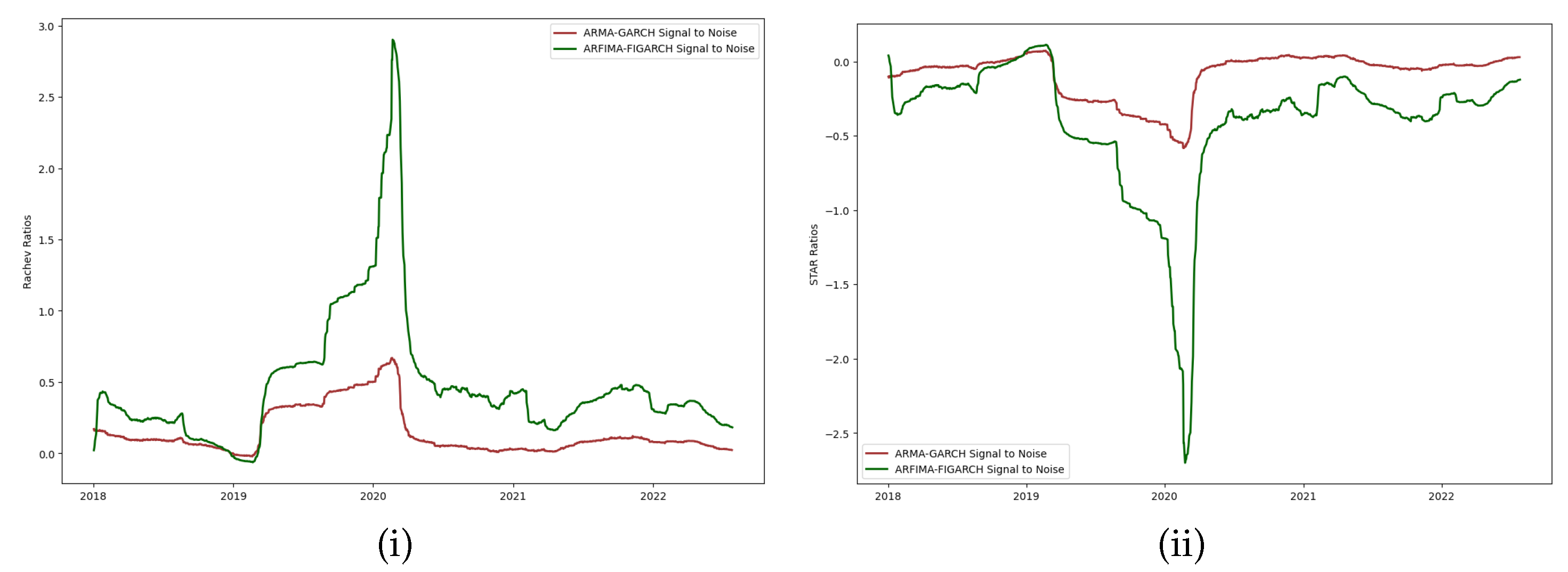

Figure 13 shows the performance ratios computed over the normal innovations of the fitted fractional time series model, which form the new i.i.d. series of uncertainty shocks.

Our uncertainty shocks (ARFIMA-FIGARCH Signal to Noise) are not serially correlated, as confirmed by Figure 12. Hence, the assumption that macroeconomic shocks are uncorrelated over time can be defended on two grounds: the noise contains no identifiable signals about volatility (as demonstrated in the scenario simulation), and the shocks do not depend on any past shocks. Since uncertainty is considered an unexpected and independent event, we can say that these uncertainty shocks are serially uncorrelated. We account for volatility clustering through the long memory of the fractional time series and the integration of the double subordinated NIG Lévy process fit to the option prices of the S&P 500.

Intuitively, it is reasonable to argue that the large steps or jumps in the uncertainty shocks observed are detectable signals, particularly when using R/R ratios to detect the signal-to-noise ratio from the time series. Moreover, performance ratios might capture underlying financial market dynamics differently from standard methods, making these shocks more prominent or close to the true magnitude of financial market volatility as a proxy for uncertainty. These jumps or steps could reflect the underlying market conditions more robustly, particularly while measuring the impact of uncertainty or volatility on the behavior of the financial market.

Performance ratios 1 and 2 focus on the aspect of the signal-to-noise ratio, which allows us to highlight fluctuations in the financial market that traditional local volatility models might miss. The robustness comes from the ability of our model to capture these dynamics with more sensitivity to changes that directly affect market participants’ risk–reward trade-offs. In addition, this identification is compelling because the innovations (using ARFIMA-FIGARCH) account for persistent and long-memory effects, which are often observed in financial market data. Therefore, these larger jumps could be indicative of shifts in the market’s perception of risk and volatility in response to significant events or structural changes in the economy.

Furthermore, these uncertainty shocks can explain several key events, alluded to in Section 1, in the sample period over which they have been computed. In Figure 13 (i,ii), we can see the shock occurring in March 2020, which explains the sharp fall of the S&P 500 index following the news about COVID-19 forcing lockdowns across the United States and other major developed countries. During this period the financial market crashed, heightening uncertainty about economic activity and triggering risk-averse behavior among agents. Moreover, it is intuitively reasonable to assume that these uncertainty shocks reflect risk-averse agents, in complete markets, liquidating their holdings in risky assets and transferring wealth into risk-free assets to enable precautionary savings, consistent with the consumption risk-sharing hypothesis.

Our strategy for identifying uncertainty shocks, particularly during early 2022, leverages significant movements in the S&P 500 index driven by multiple interrelated factors. This period marks the second major event in recent financial history where elevated volatility and uncertainty shocks are observable, as captured by our model. The key drivers of these shocks include macroeconomic conditions, geopolitical risks, and sector-specific factors, which align with both theoretical frameworks and empirical observations.

First, rising inflationary pressures in early 2022, exacerbated by supply chain disruptions and a post-pandemic rebound in global demand, led the Federal Reserve to signal an aggressive tightening of monetary policy. This response, aimed at fulfilling the Fed’s dual mandate of stabilizing prices and maximizing employment, introduced heightened uncertainty into financial markets. The market response was reflected in sharp declines in the S&P 500, particularly as the trajectory of interest rates became uncertain. This aligns with the established literature on monetary policy uncertainty, where market participants react strongly to the ambiguity surrounding future rate hikes and their potential impact on risky assets (see, e.g., Bloom [4] and Jurado et al. [31]). The forward guidance provided by the Federal Reserve increased volatility as markets began to price in the risks of a more restrictive policy environment, leading to higher risk premia and a greater sensitivity to macroeconomic news.

Second, the geopolitical shock arising from Russia’s invasion of Ukraine in February 2022 serves as a clear catalyst for heightened uncertainty. Geopolitical events, such as armed conflicts, are known to produce large, exogenous shocks to the economy, often characterized as rare disaster events within the framework of tail risk (see Barro [32] and Routledge and Zin [33]). These events significantly impact asset prices due to the sudden and unpredictable nature of the disruptions they cause to global trade, energy markets, and investor sentiment. Our analysis demonstrates that these geopolitical shocks are captured by the fat-tailed behavior of the S&P 500 distribution, which reflects a shift in the risk-neutral density, consistent with the rare disaster hypothesis. Such tail risks are not adequately captured by traditional measures of market volatility alone but are crucial for understanding the full scope of uncertainty shocks in periods of geopolitical crisis.

Third, the sharp correction in high-growth technology stocks during early 2022 provides another dimension to the uncertainty shocks identified in our model. Many of these firms had experienced meteoric rises during the pandemic due to favorable liquidity conditions and investor expectations of continued high growth. However, as inflation and interest rates increased, the present value of these firms’ future cash flows was discounted more heavily, leading to sharp declines in their valuations. This sectoral shock was compounded by low earnings reports from several Fortune 500 technology companies, which introduced additional uncertainty at the firm level. Firm-level uncertainty, particularly in sectors like technology, is often driven by earnings volatility and future profitability concerns, as outlined by Bloom et al. [34]. The declines in these stocks reflected broader concerns about the sustainability of growth in the face of rising costs and tightening monetary conditions, further amplifying the aggregate uncertainty in financial markets.

Our identification strategy highlights the multifaceted nature of the uncertainty shocks in financial markets by identifying effects arising out of macroeconomic shocks, geopolitical risk, and sectoral disruptions. The use of the S&P 500 as a proxy for these shocks is well-supported by its role as a barometer of overall market sentiment and risk appetite. Additionally, by incorporating insights from the rare disaster literature and firm-level uncertainty frameworks, our analysis captures both systemic and idiosyncratic factors contributing to market-wide uncertainty during this period.

8. Conclusion

This paper presents a novel approach to identifying uncertainty shocks in financial markets, focusing on the heavy-tailed, non-Gaussian nature of asset returns. By fitting a double-subordinated Normal Inverse Gaussian (NIG) Lévy process to S&P 500 option prices, we constructed a more robust measure of volatility, the Volatility of VIX (VVIX). This revised VIX captures large market movements incorporating features like skewness and heavy tails commonly observed in financial data.

Our methodology extends the traditional framework for measuring uncertainty by introducing a general family of R/R ratios. These ratios, computed on the fitted fractional time series of the revised VIX, offer a more nuanced understanding of the relationship between risk and return in the presence of extreme tail risks. This approach not only improves the identification of uncertainty shocks but also enhances our ability to model macroeconomic volatility and its impact on financial markets.

In summary, our findings suggest that standard second-moment measures of volatility, and local volatility models used to compute volatility surfaces of the VIX, are insufficient for capturing the full extent of volatility (implying uncertainty) in financial markets, especially during periods of extreme tail risk. The proposed VVIX, derived from a double-subordinated NIG Lévy process, provides a more accurate representation of market volatility and its associated risks, making it a valuable tool for both researchers and practitioners in economics and finance to explore new avenues concerning the impact of uncertainty shocks. Lastly, future research may explore the application of this method to other asset classes and its implications for portfolio management and risk mitigation strategies and, more importantly, may help to better understand the true effect of uncertainty on macroeconomic indicators.

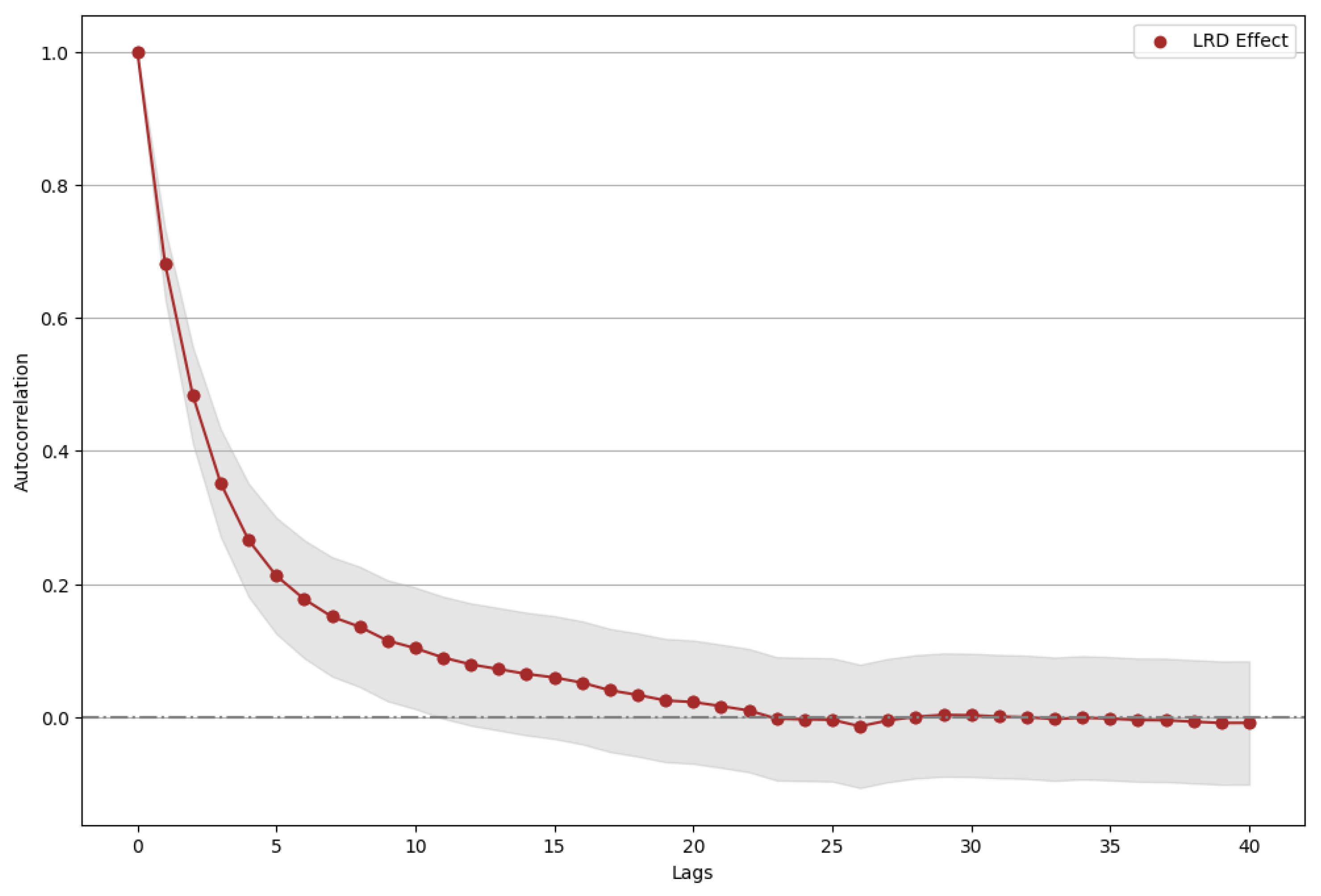

Appendix

Figure A1 displays the autocorrelation function of the residuals from an ARFIMA-FIGARCH model fitted to the revised VIX, revealing long-range dependence. The slow decay of the autocorrelation indicates that volatility is highly persistent, as the residuals show significant autocorrelation even beyond the initial lags. The decay begins sharply but gradually flattens, with the autocorrelation remaining positive up to about 22 lags before approaching zero. This suggests that the ARFIMA-FIGARCH model captures the persistent volatility patterns inherent in the VIX, effectively modeling the heavy-tailed nature and memory effects associated with market uncertainty. The lag at which the autocorrelation converges close to zero implies that the model successfully accounts for the long memory in the volatility, which is crucial for understanding the dynamics of financial market stress.

Figure A1.

Long range dependences: Decay of the autocorrelation.
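The decay of an autocorrelation function of this kind can be inspected with a short sketch. It computes the sample autocorrelation up to a chosen lag and flags lags that fall outside the approximate 95% band for white noise. The function name `sample_acf`, the maximum lag of 30, and the simulated Gaussian series standing in for the model residuals are hypothetical; the paper's own residuals are not reproduced here.

```python
import numpy as np


def sample_acf(x, max_lag):
    """Sample autocorrelation of x at lags 0..max_lag."""
    x = np.asarray(x, dtype=float)
    centered = x - x.mean()
    denom = np.dot(centered, centered)
    acf = np.empty(max_lag + 1)
    acf[0] = 1.0
    for k in range(1, max_lag + 1):
        # Lag-k autocovariance divided by the lag-0 autocovariance
        acf[k] = np.dot(centered[:-k], centered[k:]) / denom
    return acf


# Placeholder series standing in for the fitted-model residuals.
rng = np.random.default_rng(1)
residuals = rng.standard_normal(2000)

acf = sample_acf(residuals, 30)
band = 1.96 / np.sqrt(residuals.size)          # approximate 95% white-noise band
outside = np.flatnonzero(np.abs(acf[1:]) > band) + 1

print("lags outside the white-noise band:", outside.tolist())
```

For a persistent series, many consecutive low lags would fall outside the band and shrink slowly, whereas for an uncorrelated series only a few isolated lags are expected to do so by chance.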

Figure A2 displays the STAR ratio computed over the normal innovations of the current VIX (using ARMA(1,1)-GARCH(1,1)) and the normal innovations of the revised VIX (VVIX) (using ARFIMA(1, $d_m$, 1)-FIGARCH(1, $d_v$, 1)). The new measure of uncertainty shocks captures more pronounced signals about financial market volatility than the measure extracted from the current VIX. Given that fitting an ARFIMA(1, $d_m$, 1)-FIGARCH(1, $d_v$, 1) model to the current VIX has not been explored in the literature, we revert to the standard ARMA(1,1)-GARCH(1,1), which is obtained when the fractional differencing parameters are zero.

Figure A2.

Signal to Noise: Current VIX v/s Revised VIX (VVIX).

References

1. Bloom, N. The Impact of Uncertainty Shocks. Econometrica 2009, 77, 623–685.

2. Cont, R. Empirical properties of asset returns: Stylized facts and statistical issues. Quantitative Finance 2000, 1, 223–236.

3. Kozeniauskas, N.; Orlik, A.; Veldkamp, L. What are Uncertainty Shocks? Journal of Monetary Economics 2018, 100, 1–15.

4. Bloom, N. Fluctuations in Uncertainty. Journal of Economic Perspectives 2014, 28, 153–176.

5. Kelly, B.; Jiang, H. Tail Risk and Asset Prices. The Review of Financial Studies 2014, 27, 2841–2871.

6. Rietz, T.A. The Equity Risk Premium: A Solution. Journal of Monetary Economics 1988, 22, 117–131.

7. Bansal, R.; Yaron, A. Risks for the Long Run: A Potential Resolution of Asset Pricing Puzzles. The Journal of Finance 2004, 59, 1481–1509.

8. Duffie, D.; Pan, J.; Singleton, K. Transform analysis and asset pricing for affine jump-diffusions. Econometrica 2000, 68, 1343–1376.

9. Shirvani, A.; Stoyanov, S.; Fabozzi, F.; Rachev, S. Equity premium puzzle or faulty economic modeling? Review of Quantitative Finance and Accounting 2021, 56, 1329–1342.

10. Mehra, R.; Prescott, E.C. Chapter 14: The equity premium in retrospect. In Handbook of the Economics of Finance; 2003; Vol. 1B, pp. 889–938.

11. Orlik, A.; Veldkamp, L. Understanding Uncertainty Shocks and the Role of Black Swans. Technical Report 20445, National Bureau of Economic Research, 2014.

12. Kozlowski, J.; Veldkamp, L.; Venkateswaran, V. The Tail That Wags the Economy: Beliefs and Persistent Stagnation. Journal of Political Economy 2020, 128, 2839–3284.

13. Carr, P.; Geman, H.; Madan, D.B.; Yor, M. Stochastic Volatility for Levy Processes. Mathematical Finance 2003, 13, 345–382.

14. Shirvani, A.; Mittnik, S.; Lindquist, W.B.; Rachev, S.T. Bitcoin Volatility and Intrinsic Time Using Double-Subordinated Lévy Processes. Risks (MDPI) 2024, 12, 1–21.

15. Mandelbrot, B.; Taylor, H. On the distribution of stock price differences. Operations Research 1967, 15, 1057–1062.

16. Clark, P. A subordinated stochastic process model with fixed variance for speculative prices. Econometrica 1973, 41, 135–156.

17. Shirvani, A.; Rachev, S.; Fabozzi, F. Multiple subordinated modeling of asset returns: Implications for option pricing. Econometric Reviews 2021, 40(3), 290–319.

18. Carr, P.P.; Madan, D. Option Valuation Using the Fast Fourier Transform. Journal of Computational Finance 2001, 2.

19. Yu, J. Empirical characteristic function estimation and its applications. Econometric Reviews 2003, 23, 93–123.

20. Shirvani, A.; Stoyanov, S.V.; Rachev, S.T.; Fabozzi, F.J. A New Set of Financial Instruments. Frontiers in Applied Mathematics and Statistics 2020, 6.

21. Meyer, P.; Dellacherie, C. Probabilities and Potential B: Theory of Martingales; North Holland, 1978.

22. Delbaen, F.; Schachermayer, W. The fundamental theorem of asset pricing for unbounded stochastic processes. Report Series SFB "Adaptive Information Systems and Modelling in Economics and Management Science", WU Vienna University of Economics and Business, 1999.

23. Delbaen, F.; Schachermayer, W. A general version of the fundamental theorem of asset pricing. Mathematische Annalen 1994, 300, 463–520.

24. Barndorff-Nielsen, O.; Shephard, N. Non-Gaussian Ornstein–Uhlenbeck-based models and some of their uses in financial economics. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 2001, 63, 167–241.

25. Esscher, F. On the Probability Function in the Collective Theory of Risk. Scandinavian Actuarial Journal 1932, 15, 175–195.

26. Duffie, D. Dynamic Asset Pricing Theory (3rd ed.); Princeton University Press, 2001.

27. Cheridito, P.; Kromer, E. Reward–Risk Ratios. Journal of Investment Strategies 2013.

28. Artzner, P.; Delbaen, F.; Eber, J.M.; Heath, D. Coherent Measures of Risk. Mathematical Finance 1999, 9, 203–228.

29. Baillie, R.; Bollerslev, T.; Mikkelsen, H. Fractionally integrated generalized autoregressive conditional heteroskedasticity. Journal of Econometrics 1996, 74, 3–30.

30. Hyung, N.; Franses, P. Modeling seasonality and long memory in time series. Journal of Econometrics 2002, 109, 241–263.

31. Jurado, K.; Ludvigson, S.C.; Ng, S. Measuring Uncertainty. American Economic Review 2015, 105, 1177–1216.

32. Barro, R.J. Rare Disasters and Asset Markets in the Twentieth Century. The Quarterly Journal of Economics 2006, 121, 823–866.

33. Routledge, B.R.; Zin, S.E. Generalized Disappointment Aversion and Asset Prices. The Journal of Finance 2010, 65, 1303–1332.

34. Bloom, N.; Bond, S.; Van Reenen, J. Uncertainty and Investment Dynamics. The Review of Economic Studies 2007, 74, 391–415.

Footnotes

1. Refer to Equation (12), which describes the expiration times and the methodology for computing the value of the VIX.

2. A semi-martingale is a type of stochastic process that plays a central role, particularly in the modeling of asset prices. Semi-martingales are general enough to include many important classes of processes (such as Brownian motion and Lévy processes) while still allowing the use of stochastic calculus. See Meyer and Dellacherie [21].

3. This condition essentially states that in a well-functioning financial market, it is impossible to construct a trading strategy that yields a risk-free profit with zero initial investment and no risk of loss. This concept is closely related to the absence of arbitrage opportunities in the market. See Delbaen and Schachermayer [23].

4. The Esscher transform is used to price risky assets and derivatives. See Esscher [25].

5. The functional forms of these performance ratios are described in Section 7.

6. The phrases “R/R ratios” and “performance ratios” are used interchangeably.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).