1. Introduction

After Simon Newcomb’s public note [

1] and Benford’s statement [

2] that small things are more numerous than large things, and there is a tendency for the step between sizes to be equal to a fixed fraction of the last preceding phenomenon or event, many scientists [

3] tried to explicate the strange high frequency of the micro in nature, the rarity of the macro, and the ebbing progression of the gaps in between.

Nature pivots on exponential powers. Benford underlined that the geometric series has long been recognized as a common phenomenon in factual literature and in the ordinary affairs of life. Nevertheless, human functions are often arithmetic-centric. Will there be a natural coding system to convert these realms into one another, the observable into our inner world’s models, and vice versa? In other words, does nature count on a conformal transformation mechanism [

4]?

In modern terms, Newcomb-Benford law (NBL) states that the first digits of randomly chosen original data typically outline a logarithmic curve in an impressive diversity of fields regardless of their physical units. Equivalently, the law remarks that raw natural data usually belong to nearly scale-invariant geometric series. Among its manifestations, it is fascinating that linear coefficients represented by mathematical and physical constants [

5] (e.g., proportionality parameters or scalar potentials) adhere to the law.

Although this scenario suggests that NBL might account for an elementary principle, we have yet to clarify its origin, realize a theoretical basis, or encounter a convincing reason [

6]. Berger [

7] laments that There is no known back-of-the-envelope argument, not even a heuristic one, that explains the appearance of Benford’s law across the board in data that is pure or mixed, deterministic or stochastic, discrete or continuous-time, real-valued or multidimensional.

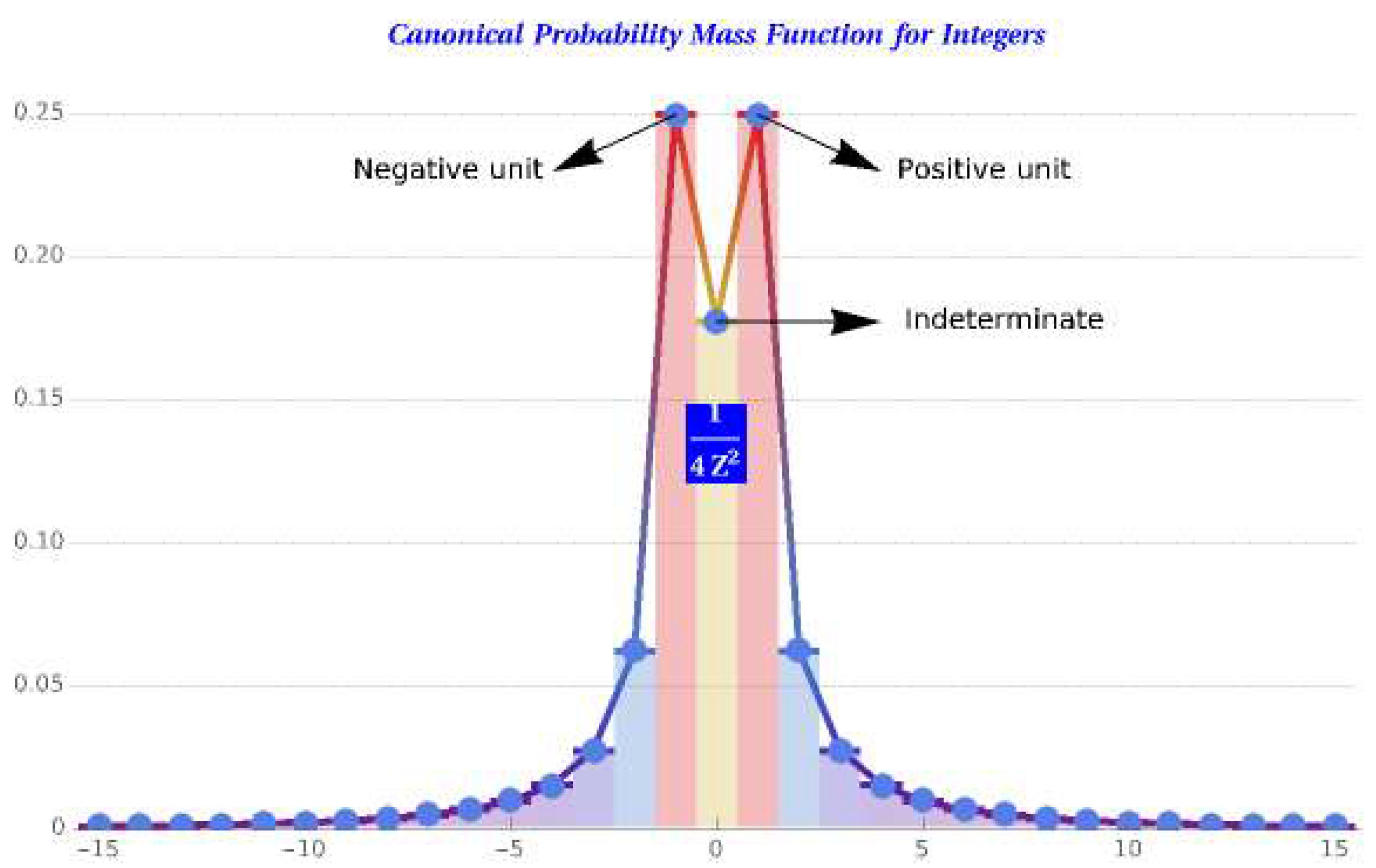

We claim a primordial probability inverse-square law (ISL) is at NBL’s root. This canonical probability mass function (PMF) has a double fundamental effect, namely the NBL for the discrete (global and harmonic) and continuous (local and logarithmic) domains. We prefer to anticipate these three laws’ properties and affiliated terminology in

Table 1, indicating their scope and character, baseline set, physical incarnation, scale, formula, information function, cardinality, and how we will denominate the corresponding item, an item list, and an item range.

Are these laws naturally predetermined probability distributions? We champion the view that the canonical PMF is a brute fact and, consequently, the global and local versions of NBL are inescapable. For one thing, their mode is one. This number is the base case for almost all proofs by mathematical induction, statistically the most probable cardinal of a natural set (e.g., one cosmos, one black hole at the center of a galaxy, one star ruling an orbital planetary system, one heart pumping a body’s blood, one nucleus regulating the cellular activity, et cetera), and seed in the majority of recursive computational processes. We read in [

8] that numbers close to the multiplicative unit are not preferably rooted in mathematics, but a simple glance at the Table of Constants in [

9] points in the opposite direction. Small leading digits and, in general, small significands (mantissae) of coefficients and magnitudes are the most common in sciences, albeit, of course, we can find cardinalities of all sizes.

That the universe is prone to favor slightness is particularly blatant in physics and chemistry. For instance, following the standard cosmological model [

10], the abundance of hydrogen and helium is roughly

and

of all baryonic matter, respectively [

11]. Higher atomic numbers than 26 (iron) are progressively more and more infrequent. Nevertheless, the universe’s heaviest elements can comparatively produce the most remarkable galactic phenomena despite their shortage [

12] (e.g., the necessary metals to form the Milky Way represent only about

of the galaxy’s disk mass). Why do accessibility and reactivity maintain a hyperbolic relationship?

Notwithstanding that NBL assumes standard positional notation (PN) in its fiducial form, our logic also permits obtaining the formulae for non-standard place-value numeral systems. In particular, every NBL’s PMF for standard PN has a bijective numeration [

13] peer. For example, the standard and bijective decimal system global and local laws are similar but different. These results show that the precision of NBL is nonessential, while the support positional scale is what matters.

This article’s field of study is mathematical and computational physics, delving into philosophy, theoretical physics, information theory, probability theory, and number theory. We have organized it as follows. We first examine the challenges researchers historically faced in deducing NBL and the state of the art in this field. Afterward, we present a one-parameter inverse-square PMF for the natural numbers with positive probabilities summing to one, extensible to the integers, and diverging mean (no bias). Next, we deduce the fiducial NBL passing through the global NBL; this two-phase derivation clarifies why the tendency for the minor numbers revealed by the natural sciences can be regular only if we assume that an all-encompassing base exists. To support this view, we substantiate that the set of Kempner’s curious series conforms to the global NBL for bijective numeration. Further, we surmise a PN resolution, i.e., the prospect of a natural position threshold ascribed to a place-value number system.

Information theory [

14] comes into play when we discover that information is prior to probability in the context of NBL. Likewise, a unit fraction is the harmonic likelihood of an elemental quantum gap, and a digit of a numeral written in PN is a bin that covers a proportion of the available logarithmic likelihood.

The odds between two events is a correlation measure whose entropic contribution to a positional scale ushers in Bayes’ rule [

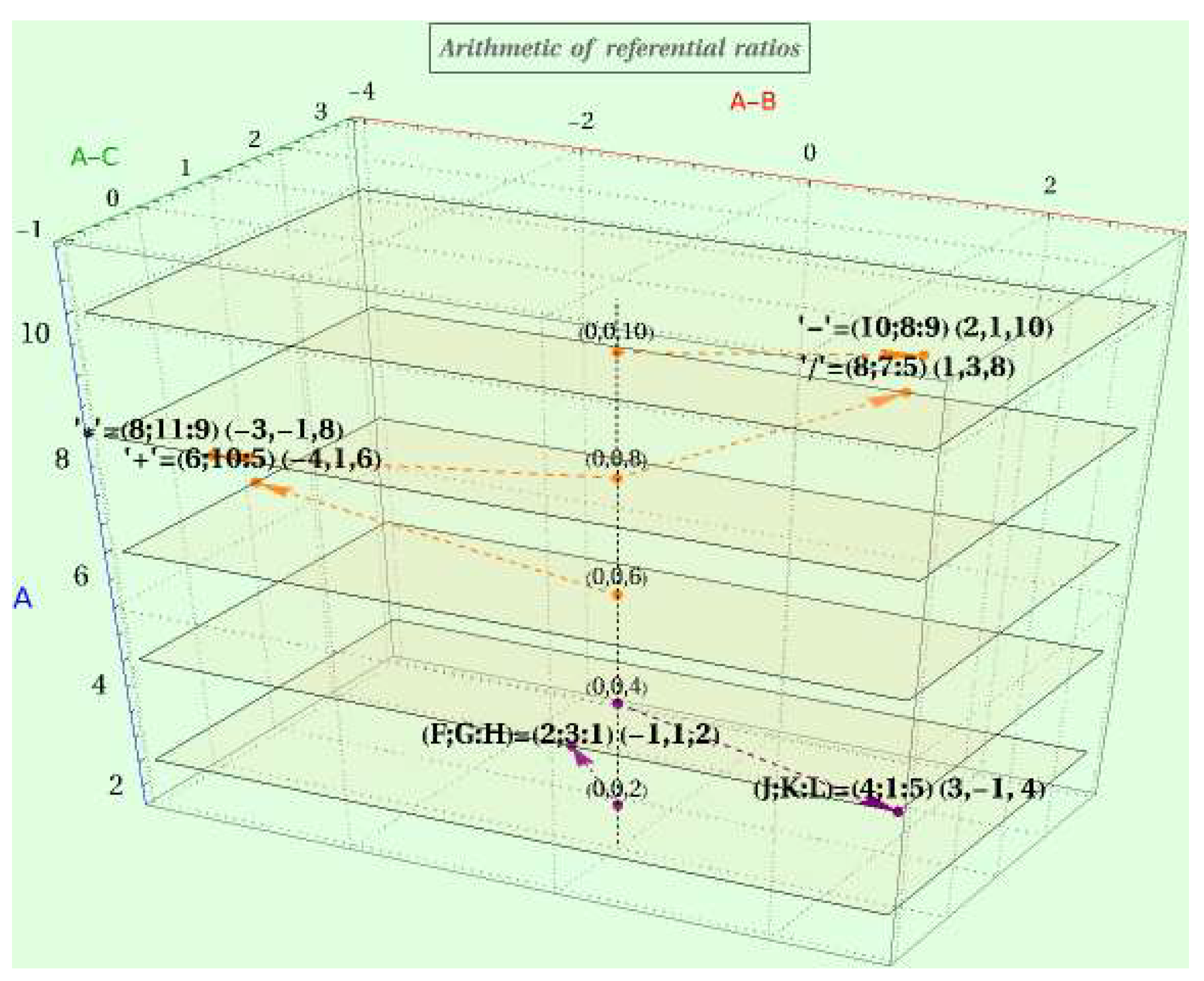

15], namely the product of two factors, a rational prior and a rational likelihood, precisely the NBL probability of the numeric range involved. This structure is recurrent under arithmetic operations and gives place to the algebraic field of referential ratios, the ground for Lorentz covariance, and the cross-ratio, a central instrument of conformality. Then, we determine the conformal metric and iterative coding functions that preserve the local Bayesian information and are compatible with a multiscale complex system [

16]. Finally, we resolve the canonical PMF’s parameter, the proportionality constant that ensures the divisibility of the probability mass for naturals and integers. In the epilogue, we comment on the results and conjecture some ideas that open the door to future research.

The primary motivation of this work is seeking a reason for NBL rather than describing how it works [

17] or elucidating its pervasiveness. Although Newcomb was an astronomer and Benford was an electrical engineer and physicist, basic research on NBL has usually been the territory of mathematicians; physics must reconsider NBL. Finding a rational version of the law was also a goal of our investigation, given that real numbers are physically unfeasible, mere mathematical abstractions.

fits in a relational world ruled by proportions and approximations, contrasting with the continuum’s absolute density and the ultra-accuracy of

. Another motivation is disclosing how a coding source manipulates information in PN. NBL says nothing about the coding process that leads to a digit’s probability of occurrence.

What falls outside our purview? Applications of NBL (e.g., financial) that are irrelevant to computation, information theory, or physics. Neither are we interested in particular virtues of NBL, e.g., the exactness of the law (uncanny, to tell the truth [

18]), because they deviate our attention from the critical topics to tackle, to wit, what makes the minor numbers mostly probable, the link to Bayes’ rule, and the efficacy and universality of the conformal coding spaces (see

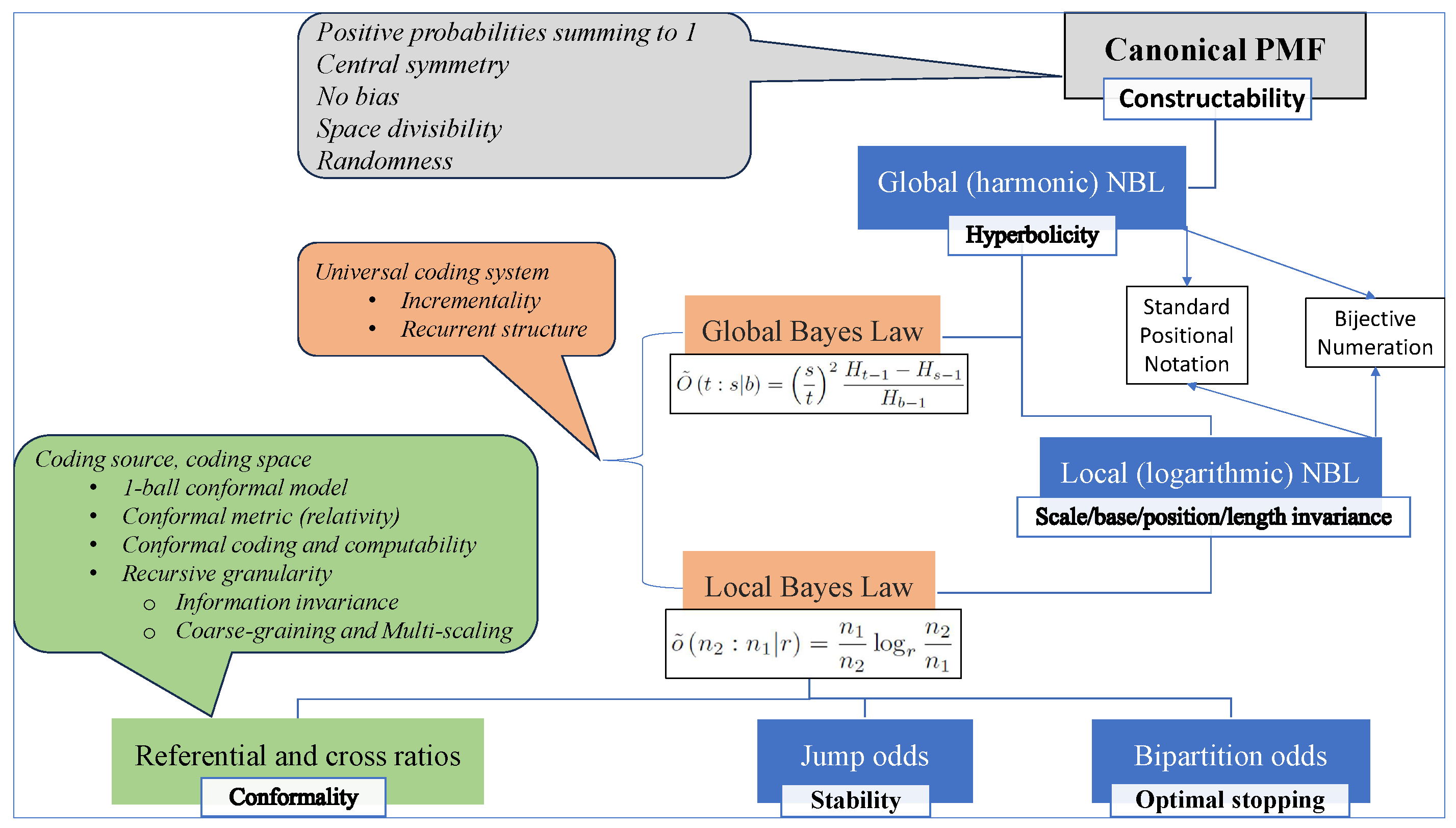

Figure 1). Despite the title, this essay is not about cryptographic protocols or codes enabling source compression and decompression or error detection and correction for data storage or transmission across noisy channels; it is about a source’s system of rules for converting global information into local information.

How did this research develop? Our original rationale was acknowledging that a connection between an NBL and an ISL exists. The rate of change of a significand’s probability drops quadratically, i.e.,

According to this expression, a numeral’s occurrence differential is inversely proportional to the square of its distance (plus its distance) from the coding source. Therefore, we could expect this spatial arrangement around the origin based on an ISL for the natural numbers. If a genuine inverse-square PMF exists, we should arrive at it from just a few essentials. We confirmed that three preconditions, namely positive probabilities summing to one, no bias, and central symmetry, unambiguously define a PMF, except for a proportionality constant. Moreover, requiring probability mass compartmentalization fixes such a constant and completely specifies the canonical PMF for the natural and integer numbers. Because the resulting probability for counting numbers is a unit fraction, a rational version of NBL should accompany the logarithmic counterpart. We ultimately gleaned how to calculate the probability of a quantum in a given base as a value in .

We have encountered that information has a relational character primally conveyed by the likelihood concept, either harmonic (, i.e., harmonic units of information) or logarithmic (). Likelihood is not the information obtained by picking an item from a range but the space allocated to encode an item between the range’s ends. An NBL probability is a proportion of the information total (likelihood density), and an NBL entropy is the weighted mean of the information total (average likelihood). Moreso, odds, referential ratios, and cross-ratios measure likelihood correlations. Because algebra grows on these rational data, geometry embodies algebraic structures, and physics reflects geometrical rules, information turns out to be physical.

Another high-level achievement was finding a hidden connection between NBL and Bayes’ law. This rudimentary rule codes the strength of the relationship between a pair of items normalized in a particular base b or radix r. The global Bayes’ rule, in odds form and b-ary harmonic information units, is the product of a prior, the ratio between the probability of two numbers t and s according to the canonical PMF, by a likelihood factor, the global NBL probability of the bucket in base b. The local Bayes’ rule, in odds form and r-ary logarithmic information units, is the product of the prior, a ratio between the global NBL probability of two quanta j and i on b’s harmonic scale, by a likelihood factor, the local NBL probability of the bin in radix r. Further, Bayesian data conformally encoded constitute normalized likelihood information. Bayes’ rule also recodes information after a change of base or radix, a foundation for incremental computation. Lastly, we learned how a source recursively encodes the observable as Bayesian data and decodes these back into the information of the external world. This Bayesian outlook unifies the frequentist, subjective, likelihoodist, and information-theory interpretations.

We have verified that likelihood, probability masses, entropy, and odds are measurable information, the common factor for the universality of the harmonic and logarithmic patterns appearing in real-life raw numerical series. We have even inferred that information divergence is impossible. In the first place, the entropy of the canonical PMF for the natural and integer numbers converges. Likewise, we have defined global and local Bayesian data supported by confined harmonic and logarithmic scales. The jump odds between consecutive quanta or numerals are also delimited. Physically, the entropic cost of crossing entirely the universe or its local copy agrees with the Bekenstein bound [

19].

Effectively, information occupies finite space. This essay introduces various examples of how a law, PMF, concept, or formula supports our theory that the cosmos is a hyperbolic, thrifty, and relational information system at a fundamental level. The notion of conformality implemented into a source’s coding space subsumes these hallmarks. It employs the NBL invariance of scale, base, length, and position in the Bayes’ rule to calculate the entropic contribution of a range of items. This synergy reinforces the thesis that mathematics begets physics and that information is a form of energy. The universe is a natural positional system that rules how a body’s local quantum-mechanical degrees of freedom carve the information of its consubstantial properties, backing the Computable Universe Hypothesis [

20].

2. Results

We enumerate the research’s concrete results and answer what this study adds to human knowledge.

2.1. Specific Achivements

We have found a roundabout but intuitive argument to explain the appearance of NBL in the vast array of contexts in which its effect manifests; NBL issues from an ISL of probability.

When choosing a natural number at random, nature follows a particular PMF where zero is possible and interpretable as indeterminate, e.g., not-a-number or inaction. We require zero’s probability to be , where is a proportionality constant, and is the probability of picking a counting number, i.e., . We also need this one-parameter PMF to have no bias so that no number is prominent (up to its probability), i.e., any number can appear. Moreover, the mass of a counting number N is necessarily if we want the probability function extensible to integer numbers, i.e., a number with the same probability regardless of the sign. Thus, the universe weighs the cost of choosing as growing quadratically with N.

We have obtained the global and local NBL from this predetermined PMF. Under its tail, the probability that a natural number exceeds

N is proportional to the trigamma function at

N. Likewise, the probability of a natural variable’s second-order cumulative function falling into

is a harmonic likelihood ratio that cancels out the constant

, namely the bucket’s width

relative to the base’s support width

, where

and

. The base

b is a global referent that changes the status of a number to a computable elemental entity we call a quantum. When the bucket is

, we obtain the global NBL of a generic quantum

q,

, an exact and separable function where

,

, and

is the

nth harmonic number. The global NBL represents in information theory the likelihood

q encloses concerning the likelihood total, geometrically a share of the surface area swept by

q, and physically a scalar potential harmonically diminishing as

q moves away from the origin. The odds-version of this PMF (

21), also exact and separable, defines the stability of a quantum jump.

We can handle quanta as real variable values when the global base b is giant. Because a coding source does not know the value of b, it must establish a local referent to normalize its information separated from the surrounding environment, changing the status of a quantum to a locally computable elemental entity we call a digit. This scenario involves the canonical PMF’s third-order cumulative distribution; the probability of a quantum falling into is a logarithmic likelihood ratio that cancels out , precisely the bin’s width relative to the radix support’s width . When the bin is , we arrive at the fiducial NBL, i.e., , where is a digit such that . This PMF represents in information theory the likelihood d encloses regarding the likelihood r embraces. It is geometrically a hyperbolic sector equivalent to the surface area swept by d relative to that swept by r and physically a scalar potential r-logarithmically diminishing as d moves away from the origin.

In general, NBL probabilities consider the cutoffs PN imposes as a proportion of the total information. The global and local versions of NBL for standard PN give probability masses similar to a degree. For comparison purposes, 1 in standard ternary occupies () and (), while 2 occupies and , respectively. Likewise, 1 in standard decimal occupies () and (), while 9 occupies and , respectively.

Furthermore, we provide NBL for bijective numeration to reinforce the thesis that this law is comprehensively universal. All the formulas of standard PN are translatable to bijective numeration. The NBL with standard radix corresponds to the NBL with bijective radix , which is length- and position-invariant in addition to other well-known invariances. Regardless of the numeral system, we must conceive of positional scales as hyperbolic spaces in a broad sense, harmonic in the first place, and logarithmic in the second place.

The sums of Kempner’s curious harmonic series [

21] echo the bijective harmonic scale traced by the global NBL. This outcome is absolute because every Kempner series is infinite, and the calculations consider every possible numerical chain; extended numerals are increasingly unimportant. For example, in decimal, while removing the terms including less than

of 5’s in the denominator makes a harmonic series converge, missing the terms including

of 5’s does not impede the divergence of the depleted harmonic series. We also figure that the natural span of a positional system in base

b is

, a measure of the physical quantity of numerals PN can inherently manage. Beyond this computational resolution, quanta or digits could be haphazard for practical purposes.

NBL, a synonym of PN, a subsidiary of the canonical PMF, describes an information field where probability correlates with accessibility, whence, with concentration and durability. Smaller significands occupy more room and enclose less information than greater significands. In other words, the space is denser and more stable near the coding source, while numerals dilute the space and become more reactive as we move away from the origin.

The analysis of NBL from the odds angle drives us to a rudimentary Bayesian framework. The Bayesian view of objectivistic or subjectivistic probability allegedly requires a reasoner to admit ignorance and imperfection expressed by a prior and its likelihood, respectively. The reasoner also accounts for counterhypotheses by considering the product of the prior and its likelihood in the posterior calculation. Natural Bayesianism works similarly but merely involves a coding source supporting PN.

Bayesian encoding, recoding, and decoding are elemental computing routines that handle odds. The Bayesian encoding of the relation between two numbers is the entropic allocation of their correlation for a harmonic scale, i.e., their ratio squared multiplied by the probability of the associated interval in the chosen base. The Bayesian encoding of the relation between two quanta is the entropic contribution of their correlation for a logarithmic scale, i.e., their ratio multiplied by the probability of the associated bucket in the chosen radix. Therefore, we can interpret a Bayesian rule as the formula to encode the rational point or the corresponding range of integers; this duality principle asserting that points and lines are interchangeable is endemic to the cosmos.

The global Bayes’ law bridges numbers with information. We measure global Bayesian data in harmonic units of information that depend on the base. The natural harmonic scale uses bucket as a reference. We measure local global Bayesian data in logarithmic units of information that depend on the radix. The natural logarithmic scale uses bin as a reference, where e is Euler’s number. However, the arithmetic of Bayesian data generally does not refer to the global base or the local radix; it works on natural scales.

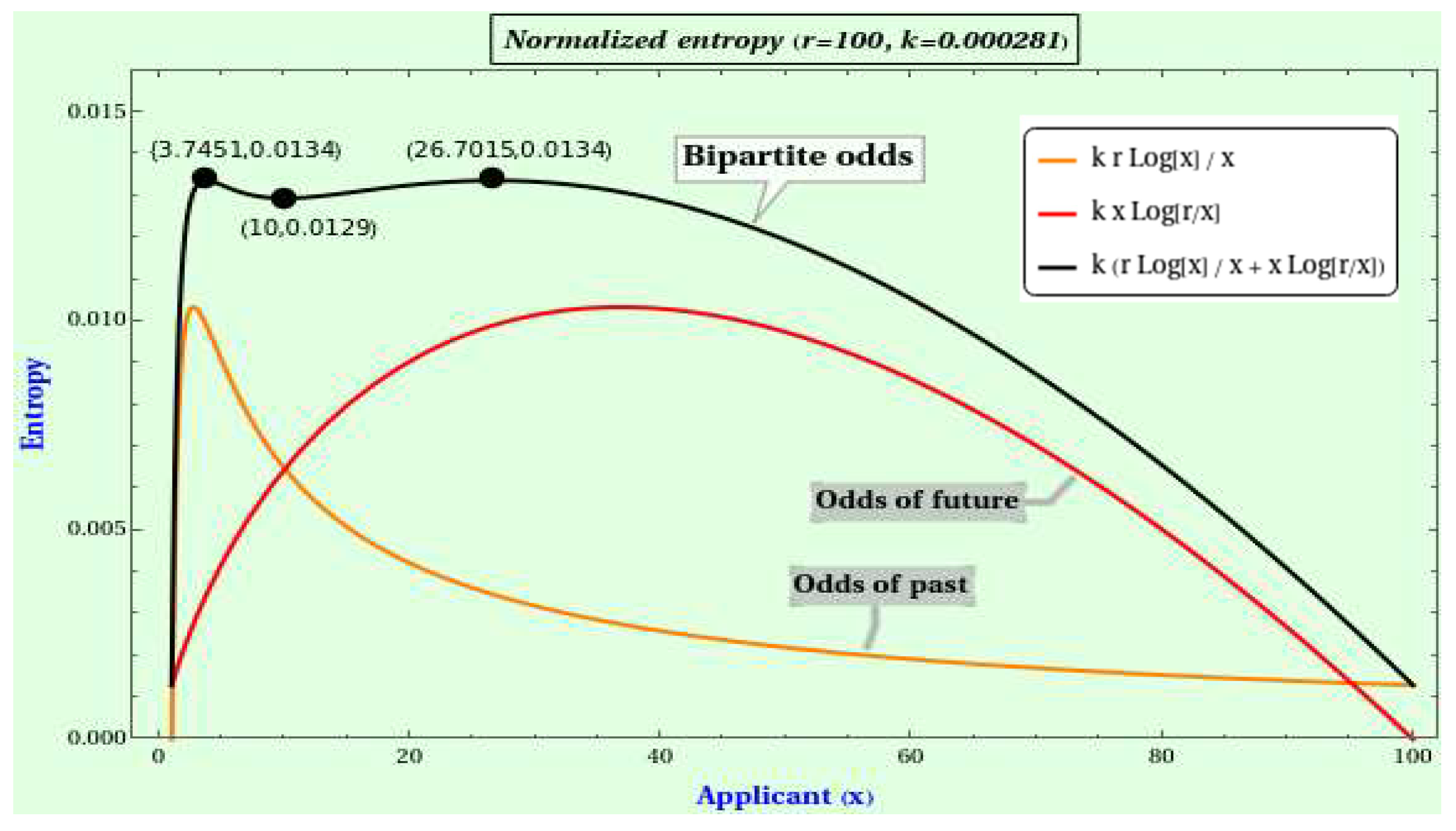

The global Bayesian rule allows for calculating a quantum jump probability, with masses decaying similarly to the global NBL as we move away from the source. Likewise, the local version of Bayesian coding drives us to the PMF of a domain’s bipartition, an information function applicable to stopping problems. Specifically, we deduce the information gained from splitting a radix’s digit set. If we take these digits as generic elements to be processed sequentially, our bipartite odds formula reaches a pair of information maxima involving e. The square root of the radix gives a minimum between the two maxima. We fix ideas by focussing on a variation of the secretary problem pursuing a good instead of the optimal solution. This problem’s representativeness joins the overwhelming evidence supporting the overarching character of the NBL.

A kicky discovery is that the structure of Bayesian data whose prior factor is the unit is recurrent under arithmetic operations, giving rise to the algebraic field of referential ratios . Moreover, a ratio of referential ratios is a cross-ratio, and the logarithm of a cross-ratio locally provides us with the metric of a conformal space reflecting the observable world and consolidating the universal proclivity towards littleness, lightness, brevity, or shortness.

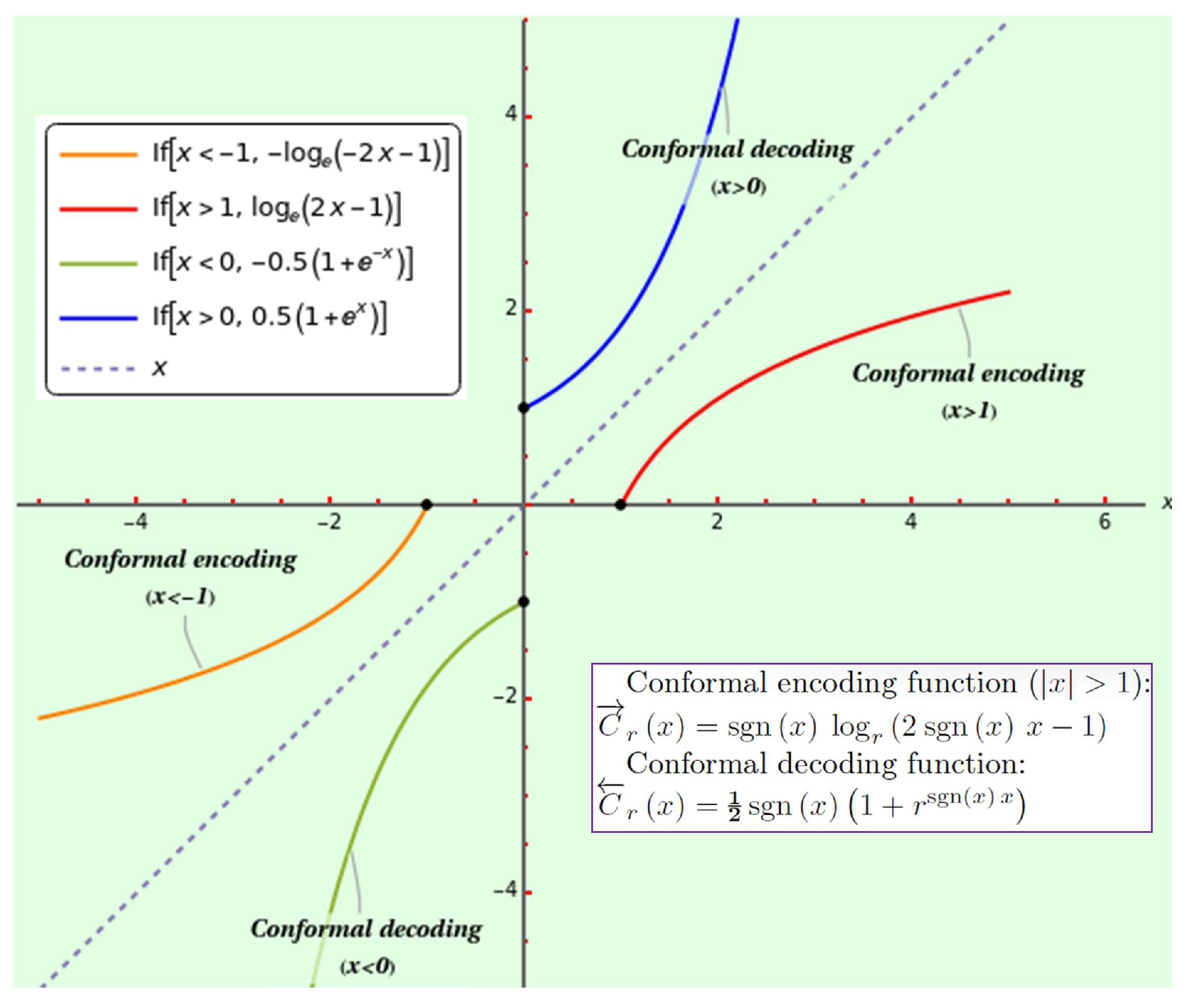

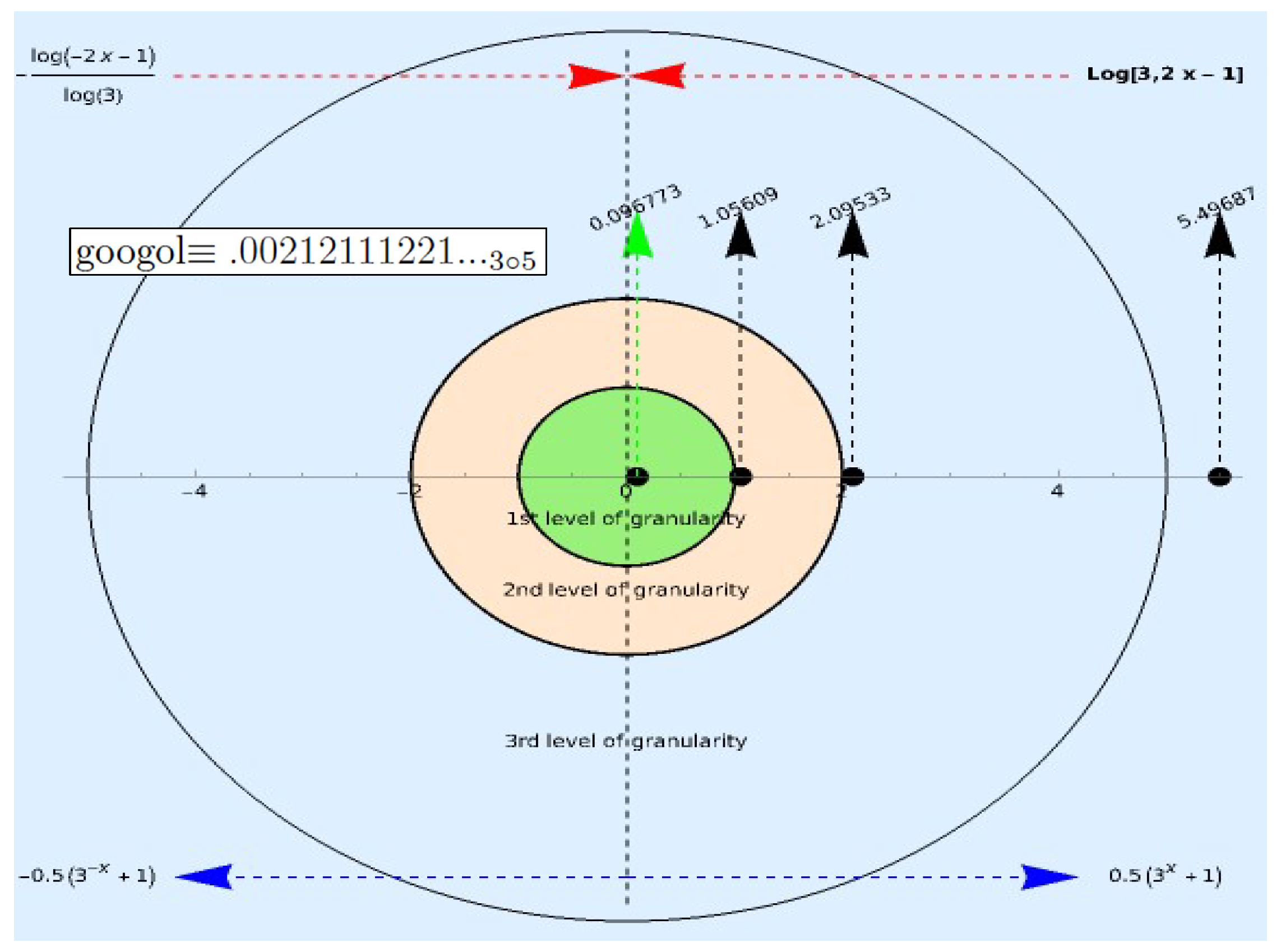

The coding source calculates the conformal distance from the origin as , where , , is the sign function, and P is the observed Euclidean distance to the point where an external object is. The coding space is the ball , with constant curvature of , where r is the radix used to normalize the information; the harmonic (outside) and logarithmic (inside) scales have a common origin and are separated by the boundary when . The conformal encoding function using the logarithm is , with inverse conformal decoding function . Since the metric ranges between and ∞, the source can repeat the encoding process inwards until the external object’s hyperbolic distance falls within the local coding space, halting the recursion. Likewise, every 1-ball with a radius given by the iterated decoding of outwards corresponds to a granularity level.

The results of this research stem all from the canonical PMF for the integer numbers, whose characteristics are fundamental and generative, imaging the essence of the cosmos. Physically, positive probabilities summing to one translates into unitarity, central reflection symmetry into parity invariance, fair mean and variance into uncertainty, holistic rationality into discreteness and relationalism, and utmost randomness in picking the number one into the principle of maximum entropy. Likewise, the global NBL (hence Zipf’s law [

22] with exponent 1), as well as the local NBL (supported by the logarithmic scale), are arguably physical. More generally, our descriptions and derivations introduce diverse instances of how mathematical functions, rules, or algebraic structures emerge as observable dynamics.

2.2. Hyperbolic World

Hyperbolic geometry is non-Euclidean in that it accepts the first four axioms of Euclidean geometry but not the fifth postulate. The

n-dimensional hyperbolic space

is the unique, simply-connected, and complete Riemannian manifold of constant sectional curvature (equal to

[

23]). For instance, saddle surfaces resemble the hyperbolic plane

in a neighborhood of a (saddle) point. These are typical ways to introduce the notion of hyperbolicity.

Instead, we prefer to identify a hyperbolic space with a domain whose geometry pivots on the hyperbola, contrasting with flat and elliptic spaces, which are parabola-based and circle-based, respectively. Harmonic scales are part of this world because a logarithmic scale results from summing over a harmonic series with vanishing steps between the values of a rational variable. The computational implementation of this hyperbolic world is PN, i.e., representing numeric entities on a positional scale, either harmonic or logarithmic.

Various combinations of exponential forms define the hyperbolic functions, so logarithms characterize the corresponding inverse (or area) hyperbolic functions. In geometry, the extent of the hyperbolic angle about the origin between the rays to and , where , is the sector . The natural logarithmic scale, factually , rules the cosmos to a great degree, developing systems whose properties echo a scale-invariant and base-invariant frequency.

Physics ties an ISL with a geometric dilution corresponding to point-source radiation into three-dimensional space [

24]. Math shapes an ISL within a two-dimensional setting [

25]. Nonetheless, our brute ISL of probability drives us to various versions of NBL all in one dimension, from which nature can expand the logarithmic scale upon hyperbolic spaces of all ranks to avoid the curse of dimensionality [

26]. Remarkably, forming a hyperbolic triangle is more than four times as probable as a non-hyperbolic one. We daresay that hyperbolic geometry beats at the universe’s core.

In information theory, we consider that a hyperbolic space is a coding space within which likelihoodepitomize the physicality of the positional number system. A global NBL probability is a harmonic likelihood ratio, and a local NBL probability is a logarithmic likelihood ratio. A ratio of NBL probabilities determines the relative odds between two buckets of quanta globally or between two bins of digits locally. Typically, a coding source calculates the odds between two numeric events considering the information of the range they embrace regarding the entire informational support provided by the global base or the local radix. These normalized odds are likelihood ratios.

Decoded (prior) odds between two events are correlations that a coding source translates to a positional scale multiplied by a likelihood ratio. This product is Bayes’ rule to encode and transform the information. The shock is that first, addition, subtraction, multiplication, and division reproduce this coding pattern, and second, under certain conditions, it collapses into the algebraic field of referential ratios

. A quotient of referential ratios is a cross-ratio, the linear fractional transformation’s invariant over rings via the action of the modular group upon the real projective plane [

27]. Restricted to one dimension, the cross-ratio’s logarithm in radix

r determines the coding space’s metric with curvature

. The canonical encoding function

and the canonical decoding function

are the unique conformal transformations (i.e., preserving orientation and angles) that, if applied iteratively, map

to the coding space’s positive side in accord with the minimal information principle. For the same reason, the hyperbolic distance

between points

A and

B inside the local coding space is also unique. We conclude that Poincar invariance ultimately stems from the algebraic field of referential ratios.

2.3. Thrifty World

To improve tractability, one can feel tempted to cut the unit uniformly into equal parts. A constant probability distribution assigns the same expected frequency to all the domain values. However, whereas the uniform distribution of probabilities is, in principle, fair and provides maximum entropy, it does not fit well into an open (infinite) outcome space.

Contrariwise, it is noteworthy that [

28] the frequency with which objects occur in ’nature’ is an inverse function of their size, indicating that oddity and magnitude usually correlate and conform to a Benford distribution. NBL says the cosmos displays a progressive aversion to larger and larger numbers, somewhat implementing the parsimonae lex [

29], a principle of frugality [

30] that stimulates economy and effectiveness as universal prime movers, drivers of nascent physics, particularly the spacetime geometry.

The canonical PMF exhibits nature’s bet on the shortest numbers, but NBL provides further precision, pointing to a conservative policy of significands. For instance, the law favors

against

because 12345 is less probable than 1234. For the same reason, the law favors

against those. The last digits might provide negligible, even arbitrary, information [

31]. This innate tendency amounts to restricting the resolution of the representational system to preclude unnecessary precision. Carrying long tails of digits from operation to operation is neither intelligent nor evolutionary. Information is gold, much like energy.

Interestingly, the probability that a randomly chosen natural number between 1 and

N is prime is inversely proportional to

N’s number of digits, whence to the length of its significand [

32], i.e., to its logarithm or equivalently its likelihood. Therefore, primality and information are nearly interrelated. Why is finding a big prime so tricky [

33]? Because it demands logarithmically growing energy.

NBL denotes productivity. Radix economy

measures the price of a numeral

N using radix

r as a parameter. Cost-saving number systems will employ an efficient coding radix; the optimal radix economy corresponds to Euler’s number e, another sign of the preeminence of small numbers. The wider the gap between the economy of consecutive numbers relative to the radix, the higher the expected frequency. Thrifty numbers making a difference are winning, meaning that the probability of a number coded with radix

r showing up is the rate of change, or derivative, of its economy concerning the radix, specifically

This expression indicates the occurrence probability of the numeral N, not necessarily a digit, with radix r. For example, is the probability of running into a decimal number starting with 22, such as or 2237. The logarithmic scale knits the linear space toward the coding source; the closer, the higher the spatial density. A large numeral is less likely due to its representational magnitude, so its space is less contracted than that occupied by a numeral with more probability mass. NBL reflects how PN encodes numbers in agreement with this economic criterium.

Therefore, the radix economy establishes a scalar field where the gap between the potential energies [

34] of two objects only depends on their position as perceived from the source. Thus, the canonical PMF and NBL subsidiaries are fundamentally efficient, balancing probability mass against notation size. Minor numbers are accessible at a lower cost, while spatial dilution and the prospect of likelihood increase, although deceleratingly, as we climb to infinity.

NBL maps (the minor numbers of) the linear frequency onto (the least costly digits) of the logarithmic frequency through the harmonic frequency. How does a harmonic scale exhibit its austere nature? The study of constrained harmonic series mainly teaches us that the specific digits involved in the restraining chain do not matter, whereas its length does. Long chains or high densities of quanta are rare and deliver slender harmonic terms that hardly occupy space. In contrast, short chains or low quantum densities are regular and cheap, producing heavy harmonic terms that occupy much space, leading to convergence of the series if eliminated. In other words, only usual and economic constraints can impede the divergence of a harmonic series. More generally, increasingly bigger numbers on a linear scale require hyperbolically less and less attention in accord with the room they take up. Nature builds physics upon proximity because almost all large numbers are expensive and indiscernible [

35].

Our theory also associates efficiency with entropy. We can interpret NBL probabilities as degrees of stability or coherence. The lowest digits maintain distinctness from the surroundings thanks to their solid entropic support. The more significant digits are vulnerable and give rise to more transitions, physically translating into higher reactivity or less resistance to integration with the environment.

Parsimonious management of computational resources is crucial, as optimal stopping problems reveal. In the secretary problem, selecting the best applicant is pragmatically less sensible than simply a good one, which requires maximizing the bipartite entropy. The past partition emphasizes the information gathered, while the future partition deals with the information we can obtain from forthcoming aspirants. As the number of examined applicants grows, the past information increases, but the future information decreases. In contrast, the probability of taking advantage of both types of information decreases and increases, respectively. The best applicant implies exclusively focusing on the future partition, but a balanced decision also implies contemplating the past.

Information economy enables cosmological evolution. That the universe optimizes computability follows from NBL embracing several invariances. Base invariance ensures even interaction with the environment because changes in the radix value will imply only incremental updates (recoding), keeping the internal metric up to the curvature. Scale invariance provides the means to recursively perform geometric calculations on nested levels of domain granularity, like a fractal. Rescaling implies only obtaining the powers of any radix using straightforward Moessner’s construction [

36]. Length and position invariance ensure fault tolerance. Ultimately, PN is effective because it makes the most expected data readily accessible for iterative coding functions.

Because a thrifty world refuses the continuum, computing hyperbolic spaces requires rationality to be feasible.

2.4. Relational World

Real numbers are unattainable mathematical objects [

37], artificial, mere abstractions; hence,

-oriented physical laws and principles are suspicious. In contrast, relative odds, i.e., proportions between two numbers, quanta, or digits, are tractable. Rational numbers are the fitting choice in an inaccurate and defective [

38] world, where relations are as important as individual entities [

39] and comparative quantities predominate over absolute values. A universe built upon the rational setting facilitates divisibility, discreteness, and operability. Calculus of rational information relies on a harmonic scale and uses harmonic numbers. Regardless, we need rational models of reality to prove that the

underpins the universe’s computational machinery.

Presuming the minimal information principle, we require a fundamental PMF with positive probabilities summing to one, no bias, and central symmetry. To ensure divisibility of the probability space, which enables the operability of the information, the mass distribution we obtain for the natural numbers must be if N is nonzero and otherwise. Next, we calculate from this PMF the probability of a natural number being odd or even and prime or composite. We also calculate the probability of getting an elliptic, parabolic, or hyperbolic two-dimensional tiling by examining the triangle group. Similarly, the occurrence probability for a nonzero integer Z is . Despite being excluded from the scope of this essay, we can even extend the canonical PMF to rational and algebraic numbers, the computable version of complex numbers. All these laws are rational and inverse square, fulfilling identical requirements.

We underline that the probability mass of a nonzero integer is a unit fraction. Real numbers only appear (in terms of the Riemann zeta function at 2) when the probability of occurrence involves zero or infinity, a sign that these limiting values are virtual. From the canonical PMF, we derive a discrete (global) counterpart of the continuous (local) NBL, where the probability of a significand in a given base is rational. The continuous (local) NBL emanates precisely from the rational (global) NBL by compartmentalizing a one-dimensional hyperbolic space of colossal extent. More generally, whereas the universe originates globally from , it is perceived locally as .

The concept of information is fundamentally rational. Harmonic likelihood is global information defined as

, whereas logarithmic likelihood is local information defined as

. A harmt is the global (harmonic) unit of information, peering the local (logarithmic) unit of information, the nat. Likewise, NBL PMFs represent normalized information regarding the global base

b,

, or the local radix locally,

. Likelihood is space on a harmonic (global) or logarithmic (local) scale; for example, if we assume that the bit (

possible states) is the minimal (unit) length [

40], one byte (

possible states) has length eight. If our world is positional, likelihood and entropy would have metric units of length for all practical purposes, meaning that information is a physical and manageable resource.

Rationality in its purest form appears as the NBL probability of a quantum or a jump , with masses separable as the product of a function of b and a function of q. However, the relational character of the universal rational setting pops up in all its splendor when we address probability ratios. The odds value between between a pair of numbers, quanta, or numerals is the quotient of their picking probabilities, quantifying the strength of their association. Assuming , estimates how uncorrelated a and b are; if , both events are mutually dependent.

Then, a source encodes, recodes, and decodes odds using Bayes’ law, reminding us that ratios are the atoms of a coding process. The global Bayes’ rule says that the odds of quantum s against t in base b are the odds of the number s versus t times the probability of the bucket in base b. The local Bayes’ rule says that the odds of digit i against j with radix r are the global odds of the quantum i versus j times the probability of the bin with radix r. Both represent the entropic contribution of the items in a range to a positional scale, confirming that information is relational.

Exceptional cases of Bayesian data are the cross-ratio, a conformality invariant, and the referential ratios

, the basis for relativity. Despite the conformal coding functions using the logarithm and the exponential function, power (infinite) series by definition, the coding source adds or multiplies incrementally a finite series of referential ratio powers to throw a rational result at any time, bettering the approximation with the number of iterates. Rationality is intricately intertwined with decidability in polynomial time and interruptible algorithms in evolving scenarios [

41].

Numeric values do not contain information per se, while a common property makes two entities commensurable, with the global base and the local radix as main referents. We can take global Bayesian data as rational quanta, computable numbers, and local Bayesian data as observable correlations of numerals. In the end, mathematics is -based, and physics is relational.

3. The Whole Story of NBL

We comment on the aspects of the academic story of the fiducial NBL most relevant to our essay and then traverse the deductive road to it. We have discovered many findings on the run related to the nature of the information at a fundamental level.

First, we introduce an inverse-square law as the origin of NBL. This PMF subsumes a probability law of rational masses, giving place to a normalized universal PN system to manage a hyperbolic, thrifty, and relational world. This harmonic scale system employs a global base as a fundamental referent. When the global base is immense, the scale’s rational setting approaches a domain of real variables and functions ruled by small radices in local settings. In other words, we prove that the local NBL, as everybody knows it, assumes that a prior all-encompassing base exists. Eventually, the interplay between the global base and the local radix will enable us to determine the canonical metric ascribed to a coding source’s conformal space containing an image of the world.

3.1. The Tortuous Road to NBL

The first digits of the numerals found in data series of the most varied sources of natural phenomena [

42] do not display a uniform distribution but rather exhibit that the minor ones are the more likely (see [

43] for a detailed bibliography and [

44,

45] for a general overview). Specifically, this law of anomalous numbers claims that the universe obeys an exponential distribution to a greater or lesser extent.

Newcomb’s insight was, The law of probability of the occurrence of numbers is such that all mantissae of their logarithms are equally probable. (What Newcomb refers to as mantissa is what we will call significand.) More than half a century later, Benford defined the exact formula of every random variable satisfying the first-digit (and other digits) law [

2]. He could not derive it formally, although seeded a line of research asserting that The basic operation

or

in converting from the linear frequency of the natural numbers to the logarithmic frequency of natural phenomena and human events can be interpreted as meaning that, on the average, these things proceed on a logarithmic or geometric scale.

However, this transition from

to

, when the baseline set is unlimited, implies tackling the problem of picking an integer at random [

46], and then mathematical difficulties arise. To commence, numerals beginning with a specific digit do not have a natural density. The decimal sequence

that groups the first digits does not converge (e.g., oscillates). Moreover, suppose each natural occurs with equal probability. In that case, the whole space must have probability 0 or

∞, violating countable additivity (by which the measure of a set must be nonzero, finite, and equal to the sum of the measures of the disjoint subsets); hence, we cannot construct a viable discrete probability distribution. The attempt to choose

fails because it diverges in the limit; it is not countably additive. Furthermore, a universal law such as NBL is supposed to be scale-invariant. However, there are no scale-invariant probability distributions on the Borel (measurable) subsets of the positive reals because the probability of the sets

and

would be equal for every scale

, disobeying once more countable additivity [

47].

Hill [

48] resumed Newcomb’s idea; logarithm’s significands of sequences conformant to NBL trace a uniform distribution. He identified an appropriate domain for the natural probability space and, based on the decimal mantissa ςv-algebra (where countable unions and intersections of subsets can be assigned a gauge), formally deduced the law for the first digit and joint distribution of the leading digits. He also provided a new statistical log-limit central-limit-like significant-digit law theorem that stated the scale-invariance, base-invariance, sum-invariance, and uniqueness of NBL. The cumulative distribution function is

, where

and

r is the radix.

Since Hill’s publication in 1995, more derivations have come to light, one of the subtlest appearing in [

49] (section

). Nonetheless, they all ignore foundational causes.

3.2. Properties of the Distribution

A vehicle of NBL is how different measurement records spread and repositories aggregate data. For one thing, the significant-digit frequencies of random samples from random distributions converge to conform to NBL, even though some of the individual distributions selected may not [

50]. Besides, many real-world examples of NBL arise from multiplicative fluctuations [

51]. What happens is that the absorptive property, exclusive of the fiducial NBL, kicks in [

52]; if X obeys Benford’s law, and Y is any positive statistic independent of X, then the product XY also obeys Benford’s law – even if Y did not obey this law. To boot, variable multipliers (or variable growth rates) not only preserve Benford’s law but stabilize it by averaging out the errors.

Which standard probability distributions obey NBL? Rarely does a distribution of distributions disagree with NBL [

53]. The ratio distribution of two uniform, two exponential, and two half-normal distributions approximately stick to NBL. The Pareto distribution enjoys the scale-invariance property as long as we move from discrete to continuous variables, and Zipf’s law (

with

) satisfies the abovementioned absorptive property if one stays over the median number of digits [

52]. More generally, right-tailed distributions putting most mass on small values of the random variable (i.e., survival or monotonically decreasing like the log-logistic distribution) are just about compliant with NBL [

28] (e.g., the tail of the Yule–Simon distribution [

54]). The Log-normal distribution fits NBL, and the Weibull and Inverse Gamma distributions are close to NBL under certain conditions [

55]. In short, NBL embraces an ample range of statistical models and mixtures of probability distributions.

Empirical testing of random numerals generated according to the exponential and the generalized normal distributions reveals adherence to NBL [

56]. More precisely, almost every exponentially increasing positive sequence is Benford (e.g., sequences of power

, where

), and every super-exponentially increasing or decreasing positive sequence (e.g., the factorial) is Benford for almost every starting point [

57]. Further, an NBL-compliant data series is inherently sturdy because of its invariance to changes concerning sign, base, and scale [

58]; for instance, mining data about the lifetime of mesons or antimesons in microseconds in decimal or seconds in binary results in strict observance of the law.

All these mathematical circumstances we have summarized about NBL explain why it is so widespread but not its reason. Failure to comprehend this distinction has generated confusion and is a typical scientific misunderstanding [

59]. In other cases, authors have deemed specific remarks about NBL its cause when they are indeed consequences [

60].

We will explain why discrete distributions decaying as with are indirectly NBL-compliant. The common factor of all the quasi-NBL distributions is that proportional data intervals approximately fit their heavy tail (the fatter, the better). Notably, this work does not deal with the NBL invariances as presumed properties but derives them from basic requirements demanded from the canonical PMF, producing a subsidiary global NBL and, thereon, the fiducial NBL. The appearance of NBL in power sequences indeed concerns how PN codes probability ratios (odds), where the logarithm and the exponential constitute a fundamental functional duality. The intricate and critical linkage of the law with the rational numbers jumps out.

3.3. A Fundamental Probability Law

We seek a well-defined PMF, i.e., positive probabilities summing to 1. Not all Zipfian distributions [

61] can do the job, for

eludes divergence only if

. In particular, linear forms for the denominator of a natural’s probability cannot fulfill countable additivity.

We assume that

is an inductively constructible set from which all physical phenomena can crop up from the source outward, a basis of reductionism and weak emergency [

62]. By including nil, we also ponder infinity as its reciprocal. However, both projective concepts are only potential and limiting numbers in the offing; employing the successor and predecessor as symmetric constructors, we must be able to choose any number strictly between 0 and

∞ so that no counting number is extraordinary. Again, many Zipfian distributions cannot do the job, for

has a diverging mean only if

. For instance, cubic or higher polynomials lead to convergent expected values.

Additionally, we require a sound and dependable extension to the integers. Zipfian distributions where

a is an even natural do the job, but in the range

defined by the two previous requirements,

is the fitting choice, the only value assuring central reflection symmetry. To cap it all,

agrees with the minimal information principle [

63]; considering other quadratic polynomials for the denominator of a natural’s probability does not yield a better law because it would introduce unwarranted assumptions in vain. For instance, the Zipf–Mandelbrot law [

64]

deals with unexplained coefficients and is not centrally symmetric.

Therefore, the PMF of a random variable

X taking natural numbers is

We will suppose the proportionality parameter

to comply again with the minimal information principle.

is the value of the Riemann zeta function at 2, brewing gently as a factor of endless aggregation of occurrence probabilities. Because the else (null) case is possible, this PMF is not a pure zeta distribution [

65].

Countable additivity holds; the probabilities sum to 1 owing to

The picking event

X is fair owing to the indeterminacy of the expected value of a natural number, i.e.,

Indeed, the nth-order moment diverges for all nonzero .

This PMF does not assume the law of large numbers or the law of rare events. On the contrary, it works under the statistical assumption of independence of occurrences and no bias. Outcomes of the picking event are unpredictable, even considering an indefinite trail of repetitions. No predetermined constant mean exists in space or time, nor is there an absolute measure of rarity; the relative frequency between two events solely depends on their probability mass. We can regard it as a brute law.

Let us leave the rational

unfixed for the time being, given that it is unimportant for the derivation of NBL. Remember that

holds the constraint

(i.e.,

and

), and we will return to it in sub

Section 7.1.

3.4. The Rational (Global) Version of NBL

In analytic number theory, the mesmerizing Euler-Mascheroni constant

([

66], section

) is the limiting difference between the harmonic series and the logarithm, i.e.,

where

is the

Nth harmonic number. If our universe is as harmonic as logarithmic [

49], the discrete version of the NBL must exist connected to but separated from the continuous (fiducial) one.

The cumulative distribution function of a random variable

X obeying (

1) is

which tells us how often the random variable

X is below

N. We call its complementary function natural exceedance probability, quantifying how often

X is on level

N or above. This dwindling distribution function is

where

is the generalized

Nth harmonic number in power 2.

We can express this probability in terms of the second derivative of the gamma function

’s logarithm, i.e., the digamma function’s first normal derivative, defined as

Since

and

, the natural exceedance of

N is

Numbers lack physicality. If numbers were frequencies, the trigamma function would represent a probability fractal signal such that the occurrence probability density (i.e., per frequency range) decays proportionally with the signal’s frequency.

Regardless of the scale, let us divide the natural line into concatenated strings of numbers of the same length, which we name quanta. Then, the second-order cumulative function arrives on the scene for global computability. The plot of , the natural exceedance’s antiderivative, has an informational flavor. A significant value of the quantum q is more unpredictable and influential than a minor one; this harmonic surprise needs a medium to reify the event occurrence, and the extent of the resulting log note is its only measure.

So, how likely is the event

to fall into bucket

, assuming a harmonic scale underneath? The natural harmonic likelihood

depends on the bucket’s extent, namely

in natural harmonic units of (global) information, where we have considered the generalized recurrence relation

. Note that (

2) is a proportion, canceling the constant

.

The natural harmonic likelihood is neither the probability of a quantum falling into nor the probability that is the truth given the observation . It is the information obtained by picking a quantum from the bucket or the information that gives when , i.e., the space allocated to encode a quantum between the bucket’s ends, which is why it does not refer to q.

The harmonic number function (interpolated to cope with rational arguments) parallels the continuous world’s logarithmic function in information theory, like in analytic number theory.

represents the harmt (a portmanteau of harmonic unit), just as the natural local information unit, the nat, corresponds to

by

. Thus, natural harmonic and logarithmic likelihoods are analogous, as we will explain in

Section 3.6. In particular,

implies that

q’s reciprocal denotes information, precisely the natural likelihood of an elemental quantum gap.

A global base

b marks the boundary between the mathematical and physical world. We define the probability mass of bucket

regarding

b’s support as the harmonic likelihood ratio

where

and

. This probability is separable as a product of

’s and

b’s functions, expressing a part of the information total that is the

b-normalized rational quantum

’s length or bucket

’s width.

The reader can object that the concept of likelihood is unnecessary to define (

3) since we can directly define the probability of a bucket as

. However, we aim to stress that we get information regardless of the base, only relative to the natural harmonic bucket

. Because

gives the maximum likelihood estimate,

is the relative likelihood function [

67] of the bucket

given

.

When

and

, we obtain

We measure this PMF in

b-ary harmonic information units. It is the simplest case of Zipf’s law, geometrically an embryonic form of progressive one-dimensional circle inversion. Further, if

q represented a frequency, we could understand the probability of a quantum with a given base as a (physical) potential diminishing hyperbolically with the distance from the source, i.e., a flicker [

68] or pink [

69] noise.

We have described how the harmonic series bridges equations (

1) and (

4). Both laws point to minor numbers as the most frequent significands, amassing more probability around the source to increase accessibility. However, we find three main differences between them:

(

1)’s probability masses are rational numbers. Instead, a quantum’s probability represents an area ratio measured through the digamma function; hence, a quantum’s probability is a quota of information.

The global NBL outlines a hyperbola instead of an ISL. Thus, while the probability of a number is inversely proportional to its norm (the number’s square), the probability assigned to a quantum is inversely proportional to its modulus (the quantum’s absolute value).

(

4) gives us the thing-as-it-appears (perceived potential) stemming from the thing-in-itself (field per se) [

70] expressed by (

1), two sides of the same property or object, the dual essence of the world.

3.5. Analysis of the Global NBL

The global (-based) NBL’s average probability for the decimal system is , which is equal to the local (-based) NBL’s average probability (due to ). The mean value for the quanta 1 to 9 following the global NBL is (from ), whereas it is (from ) for the local NBL. The harmonic mean value for the quanta 1 to 9 following the global NBL is .

As expected, Equation (

4) brings

, i.e., 1 occupies

of the space in binary. In base 3, the appearance probabilities of 1 and 2 as the first quantum are

and

, respectively, a 2/1 sharing out. We deem this Pareto rule so rudimentary that it might be fundamental in physics. The corresponding Pareto rule is 6337 if we utilize the local NBL. Quantum 1 in decimal occupies

, while it is

using the local NBL.

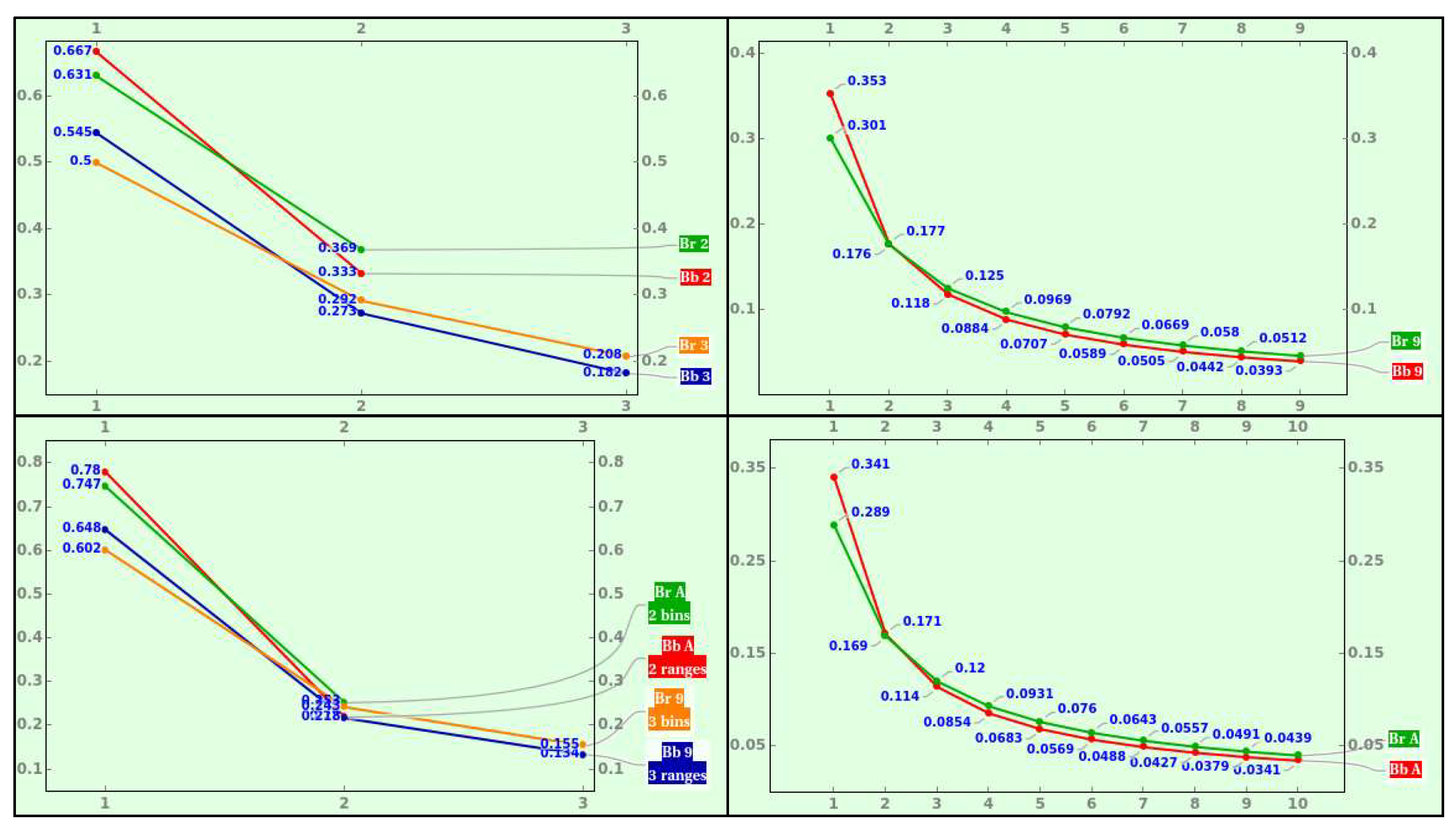

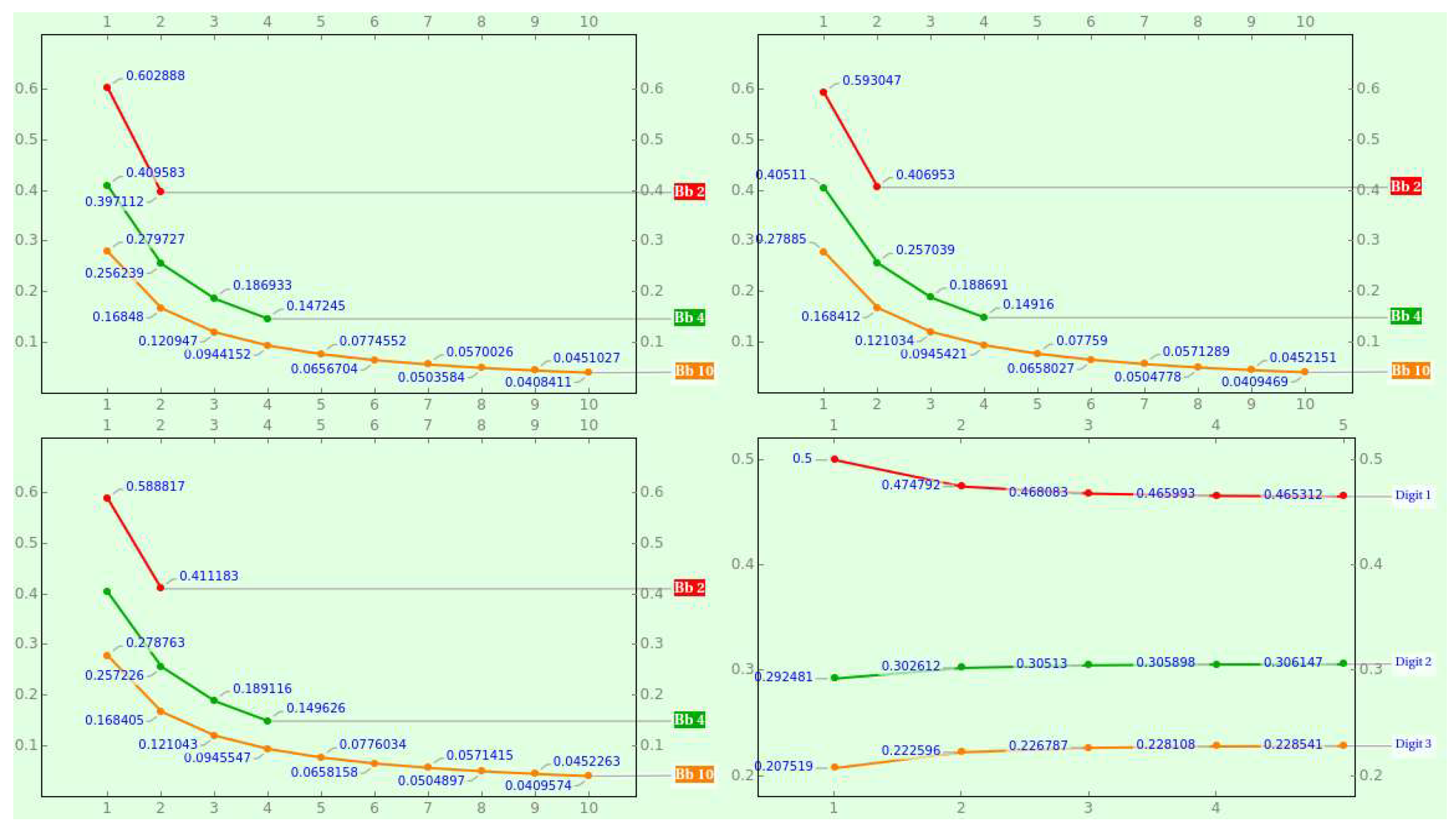

Figure 2 compares the probability of a decimal datum’s first position value between the global, discrete, rational, countable, harmonic NBL and the local, continuous, real, uncountable, logarithmic one. Regardless of the cardinality, the former is always steeper.

The unit bucket a quantum represents can be of any size, so we can recursively perform the integration and normalization process that gave rise to (

4) within every quantum attributed to base

b, obtaining a chain of nested quanta. The probability of getting the leading chain

c of quanta with any length in

b-ary is simply

It represents

c’s likelihood in

b-ary harmonic units and becomes (

4) when

c is a base’s quantum. For example, the probability masses that a decimal chain starts with 10 (e.g.,

) and 99 (e.g., 992) are

and

.

3.6. The Fiducial (Local) NBL

The global NBL furnishes the frame for constructing a sheer logarithmic system that conserves base and scale. To achieve such a pursuit, we must turn to the local context of a coding source and analyze how it represents a numeral in PN.

We call a bin of digits to a bucket of quanta in the source’s proximity. The third-order cumulative function of (

1) arrives on the scene to facilitate local computability. When the base

b is enormous, we can handle digits like real values to calculate the antiderivative of (

4),

, which outlines how unexpected and momentous digit

d is. Large values locally transmit more information than small ones; for whom? Logarithmic surprise needs an observer to reify the event occurrence. The harmonic information perceived by a receiving system, a coding source, becomes local information with extension

. Consequently, broad bins are more likely than narrow ones as supporting evidence.

Assuming a logarithmic scale underneath, we define the natural logarithmic likelihood

of the event

to fall into bin

as the ratio

Note that this proportion no longer refers to base b; a coding source is unaware of the global setting for calculation purposes.

The natural logarithmic likelihood is neither the probability of a digit falling into

nor the probability that

is the truth given the observation

. It is the information obtained by picking a digit from the bin

or the information that

gives when

, i.e., the space allocated to encode a digit between the bin’s ends, which is why it does not refer to

d. However, it has nothing to do with surprisal [

71];

ℓ denotes informative space rather than information content. Indeed, we can take it as the natural positional length of

or the natural width of

. We can also take (

5) as the differential entropy of the uniform probability density function

.

We measure the natural logarithmic likelihood in natural units (nats) because of

. It is manifestly scale-invariant; since the area of a hyperbolic sector (in standard position) from

to

is

, another way to define invariance of scale is that a squeeze (geometrical) mapping boosts the logarithmic likelihood up or down arithmetically (see [

49] chapter I).

The domain of a digit

d spans from the unit to

, where

is the cardinality of the local coding space, precisely the source’s radix. We define the

r-ary probability mass of bin

relative to the radix’s support as the logarithmic likelihood ratio

with

. We can take it as the representation length of

or the width of

in

r-ary logarithmic information units, in correspondence with equation (

3), reckoning the probability of a bucket as a normalized harmonic likelihood. Therefore, in PN, the probability is a quota of the available space, a view we will develop in sub

Section 5.1 and

Section 5.2.

Geometrically, the probability of event conditioned to r is the ratio between the areas under the hyperbola delimited by bins and , equivalent to the area enclosed by the rays and relative to the span of the hyperbolic angle r. Because the hyperbola preserves scale changes, the logarithm uniformly distributes the significant digits of a geometrical sequence, as Newcomb underlined in his note; implies that, for example, x must drop to to divide the natural likelihood by three ().

By setting in (

6)

and

, we fit the

Y’s occurrences into the digits of a standard PN system with radix

r, obtaining

The original natural random variable and the underlying global base b are absent. This expression is the local (fiducial) NBL, which tells us the PMF of a r-ary numeral’s first digit.

A coding system (observer or source) that uses standard PN handles the unit range as a concatenation of the sub-bins , , ... , covering intervals of , , ... units of space, and corresponding to the symbols 1, 2, ... and , respectively; the addition of these areas is the unit.

More fundamentally, common digits are near the coding source, i.e., the probability of a digit correlates with its accessibility and declines logarithmically. If we liken probability mass to space, smaller digits induce more density than significant digits. In other words, accessibility concentrated around the origin progressively dilutes as we move away, contrasting with the linear scale that distributes the space evenly.

We can generalize (

6) to cope with bins outside the radix. The resulting expression is not generally a probability anymore, given that we can have bins of any size, but it is again an

r-normalized likelihood that retains the geometric interpretation. In other words,

is the

r-normalized

’s length or

’s width. We can regard it as a fractal dimension where

r is the scaling factor,

is the number of measurement units, and

is the number of fractal copies. For instance, (

8) might explain the Weber-Fechner law [

72] in psychophysics, where

is the intensity of human sensation,

is a perception- and stimulus-dependent proportionality constant,

is the strength of the stimulus, and

is the zeroing strength threshold.

When

and

, we can again interpret this likelihood as the probability of getting a leading

r-ary numeral

of any length, i.e.,

The efficiency of a

r-ary numeral system worsens as

or

[

73] because

r diverges from the optimal radix economy, namely Euler’s number e, destroying the information. In the former case, we encounter the unary system, which boils down to a linear frequency. In the latter case, the numerals

that only use the first position increase limitlessly. Both are no-coding cases.

4. A Curious Effect

We prove that the Kempner distribution reflects the rational version of NBL for bijective numeration, allows figuring a natural resolution in PN, and confirms a global tendency towards smallness.

Watch the notation; we display the base and the radix underlined to denote bijective numeration rather than standard notation.

4.1. NBL for Bijective Numeration

Suspicion about the authenticity of the number cero [

74] suggests that bijective PN is likely more natural than standard PN, the number system we use daily. Various curious series we will analyze in the following subsection, specifically the Kempner distribution, append additional evidence that NBL for bijective numeration [

75] is foundational and universal.

Every formula about the NBL for standard PN has a bijective peer. Following the same plot thread we developed in

Section 3.4, a sample of chains encoded using bijective

-ary satisfies the global NBL if the leading quantum falls in bucket

relative to the area swept by base

with probability

where

and

. When

and

we obtain the probability with base

of leading quantum

q,

Thus, NBL for the standard PN in base corresponds to NBL for bijective -ary numeration. For example, we obtain , , , , , and , where symbolizes the bijective decimal base. Owing to and , the odds constitute an essential sharing out.

The entropy of PMF (

9),

, is the expected value (weighted arithmetic mean) of the harmonic likelihood function (

) evaluated at the probability mass reciprocal, i.e.,

For example,

,

,

,

, and

. When

acquires a gargantuan value, we can take the summation as an integral and the harmonic number function as the natural logarithm, so that the differential entropy [

76] of the global NBL approximately tends to

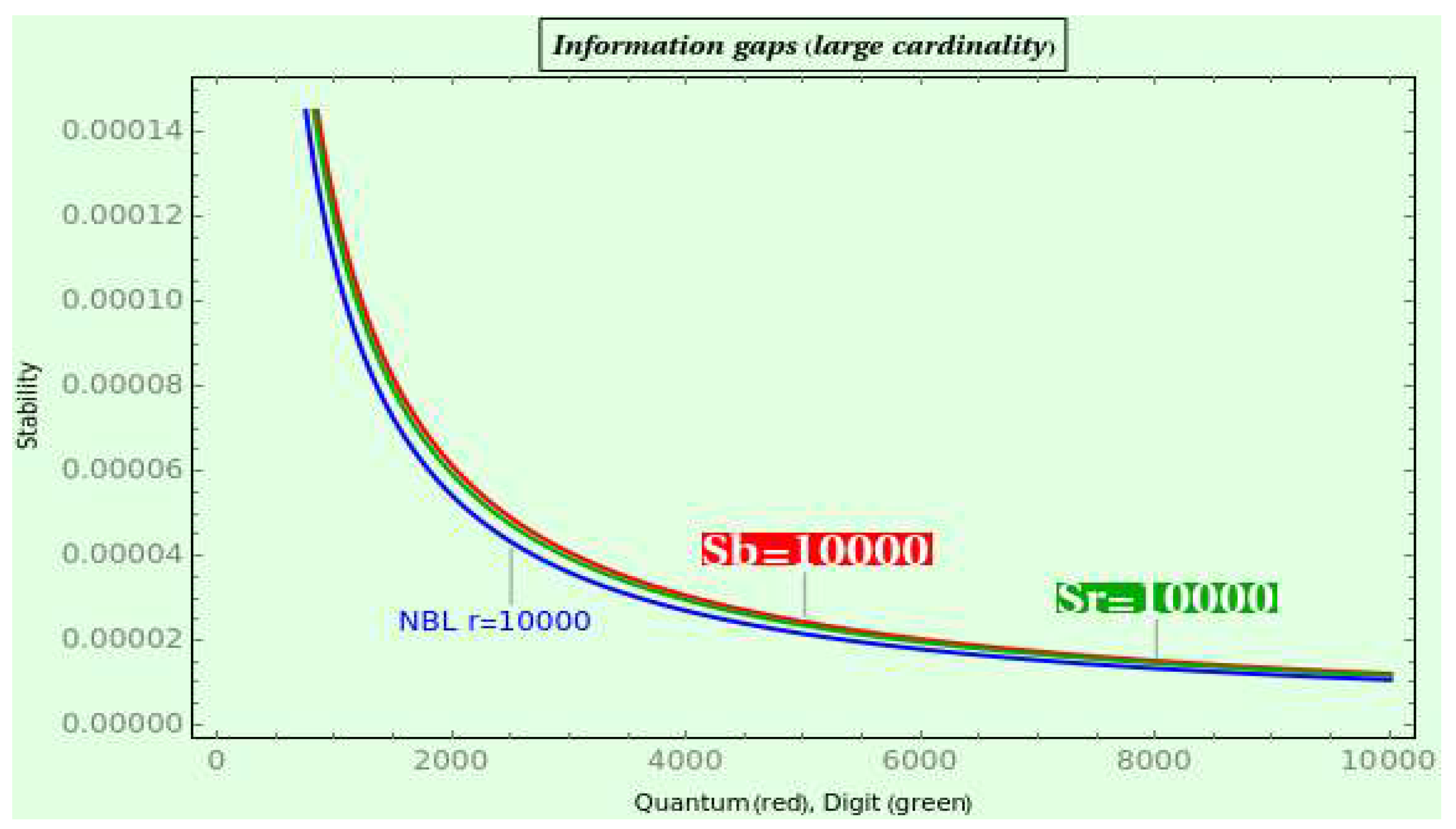

Thus, the global entropy is finite, which agrees with the Bekenstein bound in physics.

The probability of picking a chain of any length starting with

c is the likelihood gap it induces on the

-ary harmonic scale, i.e.,

which becomes (

9) when

c is a base’s quantum. For example, the probability that a bijective decimal chain starts with 11 (e.g.,

) and

(e.g.,

) is

and

, respectively.

This result allows us to derive the probability of picking a length-

l bijective

-ary chain starting with the quantum

q,

where

,

, and

. For instance, the probability of running into 1 to 3 as the first quantum of a bijective ternary chain with length 5 is

, and the chances of choosing 1 to

as the first quantum of a bijective decimal chain with length 2 is

. Watch that this equation boils down to (

9) if

.

Too, the probability that we run into

q as the

p-th quantum of a bijective

-ary chain is

where

,

, and

. For instance, the probability of getting 1 to 3 as the fifth quantum of a bijective ternary chain is

, and the chances of encountering 1 to

as the second quantum of a bijective decimal chain is

. Watch that this equation reduces to (

9) if

.

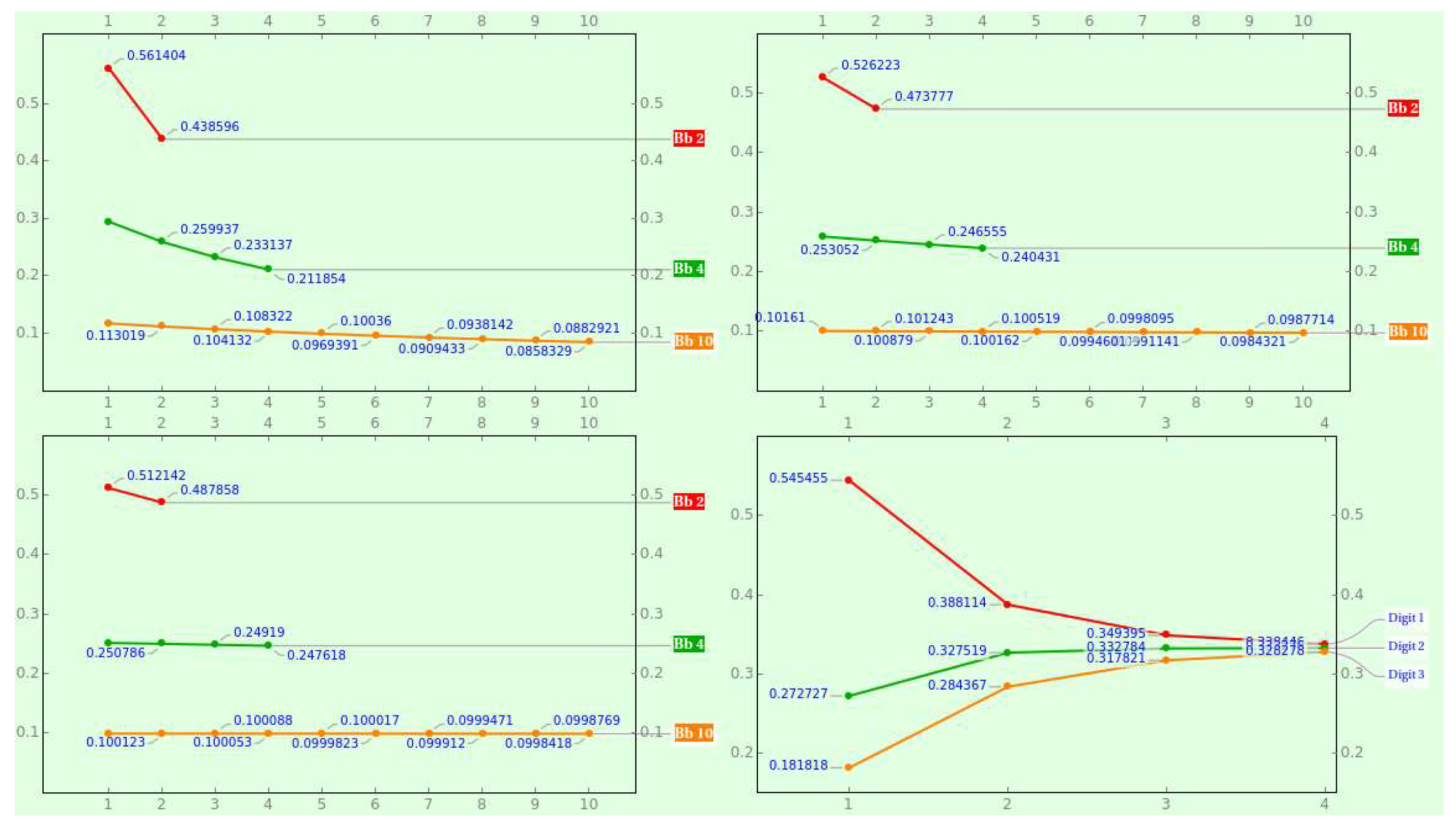

Figure 3 shows the PMF of various bijective bases for consecutive positions and the hyperbolic progression of the bijective ternary digits as the position increases.

Following the plot thread we developed in

Section 3.6, the ratio between the area under the hyperbola delimited by the bin

and the radix support

is

We arrive at the NBL for bijective notation by putting

and

. A sample of numerals expressed in bijective

-ary PN satisfies the local NBL if the leading digit

d occurs with probability

The NBL with radix corresponds to the bijective -ary numeration’s NBL; for example, the standard ternary system assigns to 1 and 2 the probabilities and , which is the PMF of bijective binary numeration. In the usual case where the radix is , the standard decimal system assigns to digits 1 and 9 probabilities of and . In contrast, the bijective decimal numeration assigns to digits 1 and probabilities of and . Likewise, the local bijective ternary numeration assigns to 1, 2, and 3 the probabilities , , and , contrasting with the percentages , , and the global bijective ternary numeration assigns.

The entropy of PMF (

11) for radix

,

, is the expected value (weighted arithmetic mean) of the likelihood function (

) evaluated at the probability mass reciprocal, i.e.,

For example, , , , , and . Because and we assume that is a positive natural number, the local entropy is finite, in agreement with the Bekenstein bound.

Note that

is also valid for the unitary system (

), unlike (

7) in standard PN; bijective unary assigns the probability of

to 1. A system encoding data in bijective unary has no curvature and keeps a linear scale. In bijective numeration, (re)coding from unary into

-ary means summing the number of ones and executing an iterative procedure based on Euclidean division.

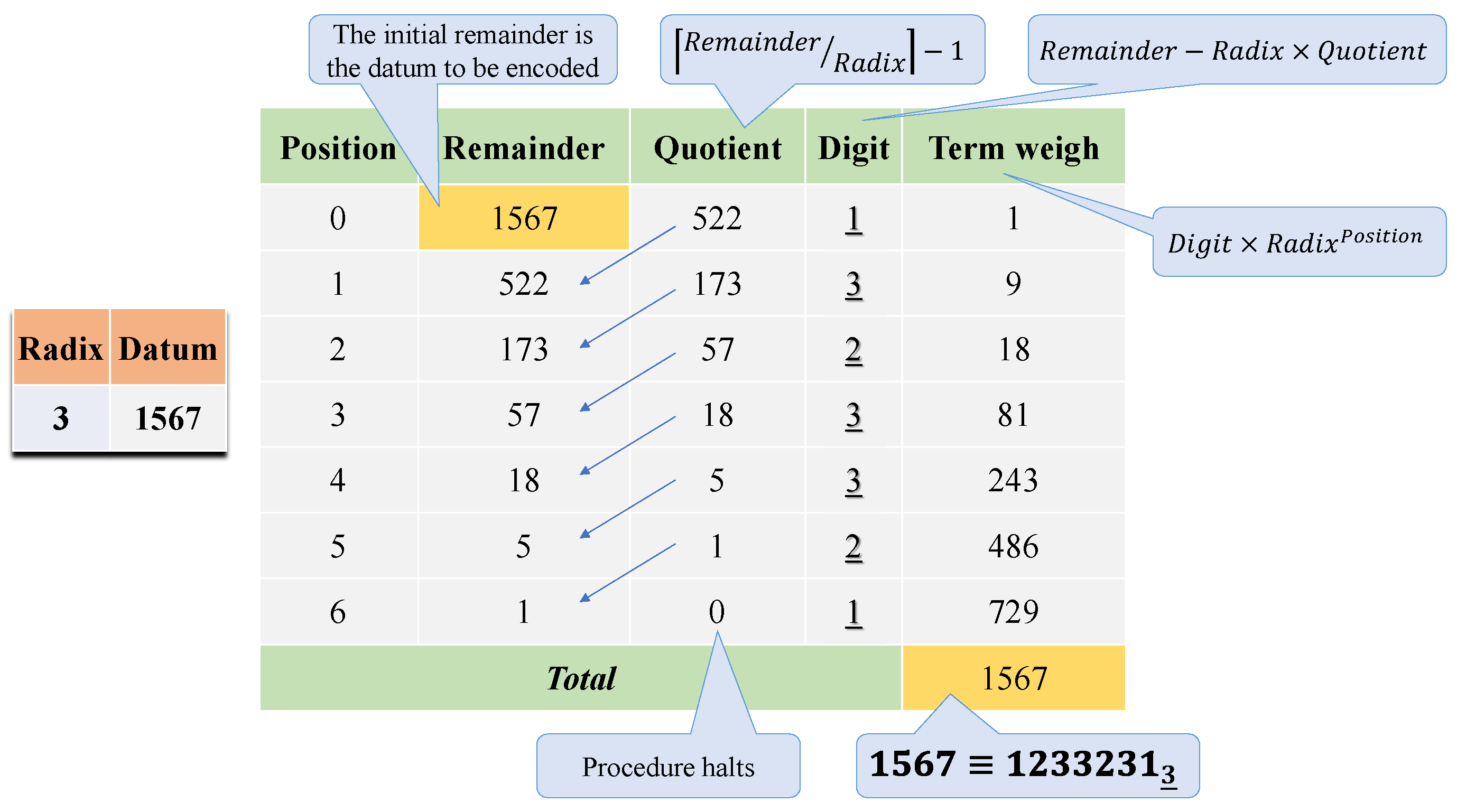

Figure 4 describes the encoding algorithm; e.g., it converts the representation of 1567 into

.

We can generalize the PMF given by (

11) to the probability of getting a leading

-ary numeral

of any length. It is the likelihood gap it induces on the logarithmic scale, i.e.,

For example, the probability that a bijective decimal numeral starts with , say or , is .

This result allows us to derive the probability of picking a bijective

-ary numeral with length

l starting with the digit

d,

where

,

, and

. For instance, the probability of picking 1 to 3 as the first digit of a bijective ternary numeral with length 5 is

, and the probability of choosing 1 to

as the first digit of a bijective decimal numeral with length 2 is

. Owing to this equation boils down to (

11) if

, the local NBL is length-invariant!

Figure 5 shows the PMF of various bijective radices for consecutive lengths and the hyperbolic progression of the bijective ternary digits as the numeral’s length expands.

Likewise, Equation (

12) allows us to derive the law for digits beyond the first; the probability of getting a

-ary digit

d at position

p is

where

,

, and

. Because this equation reduces to (

11) if

, the local NBL is position-invariant! For instance, the chance of picking 1 to 3 as the fifth digit of a bijective ternary numeral is

, and the probability of choosing 1 to

as the second digit of a bijective decimal numeral is

.

4.2. Depleted and Constrained Harmonic Series

The global NBL for bijective numeration suddenly appears in the set of Kempner’s curious series. We say a series is curious when the infinite summation of a harmonic series, divergent, is depleted by constraining its terms to satisfy specific convergence conditions. For example, consider the harmonic series missing the terms where 66 appears in their denominator. Most researchers in this fieldwork use decimal representation, but we can generalize the results to any base. Although their terminology refers to the items of a unit fraction’s denominator as digits, for us, these are quanta of a chain because we are handling terms of a harmonic series.

The point is that most depletions result in an absolute mass because a harmonic series is on the verge of divergence. In particular, a harmonic series becomes convergent by omitting a single quantum. For example, the shrunk harmonic series without the terms in which 4 appears anywhere in the decimal representation of the denominator is of the Kempner series. Offhand, convergence comes up because we withdraw most of the terms; 110 of the terms contain a 4 if the random variable ranges from 0 to 9, have at least one 4 if the random variable ranges from 0 to 99, and eventually, most of the terms of any random chain with 100 quanta will contain at least one 4 and will not sum. However, this explanation needs to be corrected.

A

series converges slowly [

77]. We will reason that this property is due to large numerical chains’ relative and geometrically short contribution to the total.

Table 2 summarizes the outcomes of approximated calculations from 1 (

) to

(

). Nonetheless, the most stunning feature of the Kempner summations (third column) is that they outline a curve that decreases harmonically.

Every quantum eliminates the same number of terms. means not that 1 is in more terms than 2 or 3 but a heavier mass attributed to the terms with the minor quanta; if we take out 11, the resulting summation is smaller than when we take out 12 or 13, and is the quantum that contributes less to the total. (Although is taken as 0 for calculation purposes, the value of proves that bijective numeration is underneath.) Considering that a Kempner series is infinite and the set of Kempner series embraces all quanta q represented in bijective decimal, how could we find a better proof that a default probability potential outlines a hyperbolically decreasing function of q?

Since a curious series converges by default of unit fraction terms, the mass share of a quantum globally depends on the reciprocals of the Kempner summations; the third column of the table includes ’s reciprocals normalized to (e.g., ’s relative mass is ). We must underline the relevance of these summations and percentages, reflecting the mass of every quantum irrespective of where it is, in contrast with the global NBL, which indicates the probability mass of a quantum at a given position in a given base.

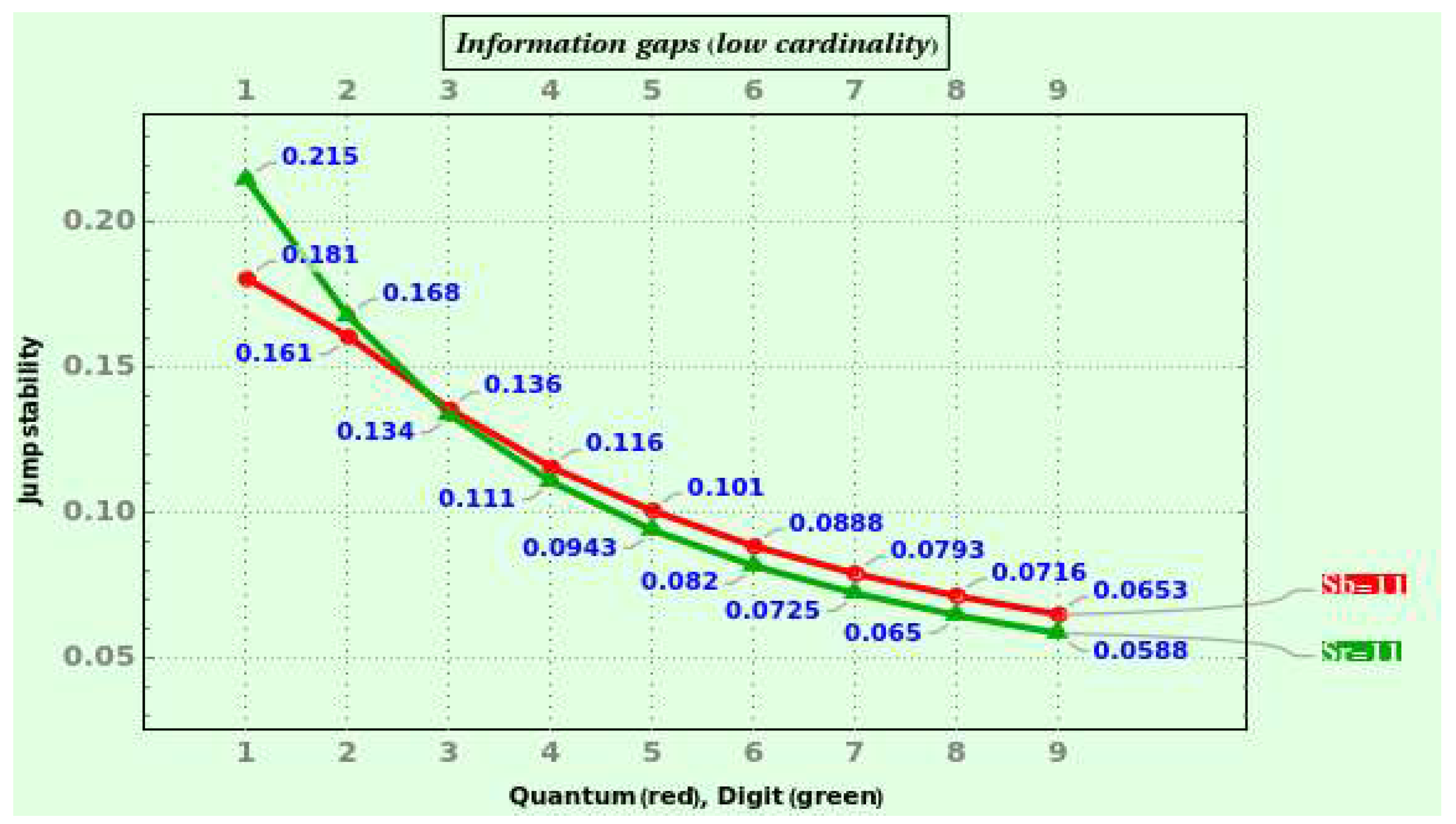

We introduce two caveats to analyze the NBL weights (fourth column). First, the Kempner distribution conforms with NBL via the average of NBL distributions for different positions, which is NBL, too. For instance,

is, in principle, the average of quantum 1’s probabilities at first (

), second (

), third (

), fourth (

), et cetera position according to (

10). Second, because the distribution of the

nth quantum quickly tends to be uniform (

for each of the ten quanta from the fifth position), we must suspect that there exists a threshold position above which the contributions to the quantum’s weight do not count; otherwise, the resulting mean distribution will end up reaching uniformity despite the differences that the Benford distribution makes at the first positions. Consequently, the last column calculates

as the NBL frequency averaged only over the first nine positions. Averaging ten positions also gives an excellent approximation (with a mean error of

) to the distribution of Kempner masses, but nine positions deliver the minimal total mean error of

.

Can we extrapolate this result in

to any value of

? If affirmative, PN would ignore a natural significand’s quanta from the

th place, agreeing with claims often made by mathematicians [

78], physicists [

79,

80], and engineers [

81] about the illogicality of a PN system carrying excessive digits in calculations of any type, regardless of the discipline.

We surmise that a bijective

b-ary chain

c that fulfills

is physically elusive. The universe in base

would cope with at most

nesting levels, each distinguishing between

b possible quanta. The physical resolution

would estimate the scope of quanta a computational system like the cosmos can naturally operate, much as a native resolution describes the number of pixels a screen can display.

In [

82], the author contrives an efficient algorithm for summing a series of harmonic numbers whose denominator contains no occurrences of a particular numerical chain. As a result of the calculations, a harmonic series in base

b omitting a chain of length

n (regardless of its specific quanta) might converge approximately to

.

This conjecture means that the contribution of linearly more extended chains to an endless series is geometrically lesser. For instance, the harmonic series where we impede the occurrence of the decimal numeral 314159 is about , whereas the same sum omitting only 3 is , times as low. Thus, large numerical chains would be exponentially inconsequential.

More general constraints allow several occurrences of a given quantum to calculate summations positively. Let

be the sums of the

b-base reciprocals of naturals that have precisely

n instances of the quantum

q. For example, omitting the terms whose denominator in decimal representation contains one or more 6 is the particular case

. The sequence of values

S decreases and tends to

regardless of

q [

83].

Except for the gap from to , where the total increases, the summation falls as we raise the constraining quantity of quanta. What is the reason? It is not that we get more terms with nqs than terms containing qs, but that the longer the chain, the lighter the contribution. Furthermore, when , , whereas if , , i.e., increments of n near the origin produce significant drops and vice versa, increments of n far from the origin produce negligible drops. Although we have not statistically tested the number of quanta for compliance with NBL, we can again conclude that while small is a synonym for solid and discernible, huge numerical chains are fragile and hardly convey differences.

Instead of imposing absolute constraints, we can allow in a term arbitrarily many quanta

q irrespective of the position and number so long as the proportion of

qs remains below a fixed parameter

. In [

84], the authors prove that the series converges if and only if

. In decimal, while Kempner’s original series implies

, where no term containing a given quantum contributes to the summation, the complete harmonic series means

, where any density is allowed, i.e., we keep all the reciprocals.

For instance, if we consider the constraint allow a rate of of 7s at most, the term 198765432109876543210 disappears ( of 7s), but neither 198654321098 (no 7s) nor 198865432109876543210 ( of 7s) does. While the series converges in , it no longer converges above the threshold . Note that the archetype of the Pareto law appears naturally; on average, of the unit fractions, those with the highest quantum density, offset the remaining . Moreover, this result engages with our surmise concerning the physical resolution of a universal computational system. Again, densities of b quanta or more are intractable. A PN system must restrict itself to chains with less than b quanta to guarantee the operability of coded data and avoid overflow conditions.

5. Odds

Although odds typically appear in gambling and statistics, this section illustrates how they are central to the computational processes of a coding source, including an application to physics and another to decision theory.

We usually define the odds of an outcome as the ratio of the number of events that generate that particular result to those that do not. In this sense, odds constitute another measure of the chance of a result. Likewise, the ratio between the probabilities of two events determines their relative odds; the higher the odds of an outcome compared with another, the more informative the latter’s occurrence is.