Submitted: 11 November 2024

Posted: 12 November 2024


Abstract

Keywords:

1. Introduction

1.1. Modern and Future Home Energy Management Systems – Complexity and Advancements

1.2. An Original Contribution and the Paper Structure

- Synergy between RL and IoT for real-time smart home systems. While RL and IoT have individually shown promise in home and building automation [43,52,53,54], this review is among the first to analyze in depth how RL can be combined with IoT networks to achieve real-time monitoring and adaptive control in energy management. It also highlights the potential for more efficient and autonomous building operation when IoT sensors feed RL systems with real-time data on energy usage, occupancy, and environmental conditions;

- Innovative approaches to DSR optimization. This review identifies novel applications of RL that enhance DSR programs, enabling homes, and prosumers in particular, to respond dynamically to fluctuations in energy prices and grid conditions. With RL, homes and buildings can autonomously learn optimal strategies for shifting or reducing energy loads, contributing to grid stability and energy cost savings, particularly during peak demand periods. The ability of RL to adapt to varying demand response signals and building-specific constraints is a significant advancement over traditional rule-based approaches;

- Advanced scheduling for energy and resource optimization. A distinctive focus of this review is the application of RL to scheduling algorithms for home automation systems, particularly with respect to energy consumption, occupancy prediction, and appliance usage. The review explores how RL and DRL can solve multiobjective scheduling problems that balance comfort, energy efficiency, and operational costs. Such applications are critical for flexible home and prosumer systems that must respond to dynamic energy demands and changing occupant needs;

- Integration of RL and DRL with RES and energy storage systems. A particularly novel aspect of this review is its examination of how RL and DRL techniques can manage RES, such as solar and wind, together with energy storage systems, which is especially important for modern and future prosumer applications. By enabling intelligent decisions about when to store, use, or sell generated energy, RL and DRL algorithms can help maximize the self-consumption of renewables and support grid or microgrid independence. This is particularly important for homes and buildings aiming at net-zero energy performance, since RL-driven strategies can optimize the use of intermittent RES in real time;

- Bridging the gap between theory and practice. While much of the existing research on RL in building automation remains theoretical or simulation-based, this review places particular emphasis on the need for practical case studies and real-world implementations. It identifies key challenges, such as scalability, data availability, and the integration of heterogeneous systems, and offers insights into how these challenges can be overcome when deploying RL-based systems in operational environments.
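The load-shifting idea in the DSR bullet above can be illustrated with tabular Q-learning. The sketch below is a minimal illustration under stated assumptions, not a method taken from any cited study: a single deferrable appliance must run once within a hypothetical six-hour time-of-use window, each run draws a fixed (assumed) amount of energy, and missing the deadline incurs an assumed penalty. The agent learns, from price-based rewards alone, to postpone the run to the cheapest hour.

```python
import random

# Hypothetical time-of-use tariff (price per kWh) over a short 6-hour window.
PRICES = [0.30, 0.25, 0.10, 0.20, 0.35, 0.40]
ENERGY_KWH = 2.0        # assumed energy drawn by one run of the deferrable appliance
MISSED_PENALTY = -10.0  # assumed reward if the deadline passes without running
WAIT, RUN = 0, 1


def step(hour, action):
    """Return (reward, next_hour, done) for one deferrable-load decision."""
    if action == RUN:
        return -PRICES[hour] * ENERGY_KWH, None, True
    if hour == len(PRICES) - 1:  # waited past the last allowed hour
        return MISSED_PENALTY, None, True
    return 0.0, hour + 1, False


def train(episodes=3000, alpha=0.5, epsilon=0.2, seed=0):
    """Epsilon-greedy tabular Q-learning; q[hour][action] estimates return."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in PRICES]
    for _ in range(episodes):
        hour = rng.randrange(len(PRICES))  # random start so every hour is explored
        done = False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice((WAIT, RUN))
            else:
                action = RUN if q[hour][RUN] >= q[hour][WAIT] else WAIT
            reward, nxt, done = step(hour, action)
            # Undiscounted episodic target: terminal reward, or bootstrap from next hour.
            target = reward if done else reward + max(q[nxt])
            q[hour][action] += alpha * (target - q[hour][action])
            if not done:
                hour = nxt
    return q


def greedy_run_hour(q):
    """Follow the learned greedy policy from hour 0 and report when the load runs."""
    for hour, (q_wait, q_run) in enumerate(q):
        if q_run >= q_wait:
            return hour
    return None
```

With the assumed prices, `greedy_run_hour(train())` returns 2, the cheapest hour. In a real HEMS the state would also carry IoT measurements such as occupancy or PV output, which is where DRL function approximation replaces the lookup table.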

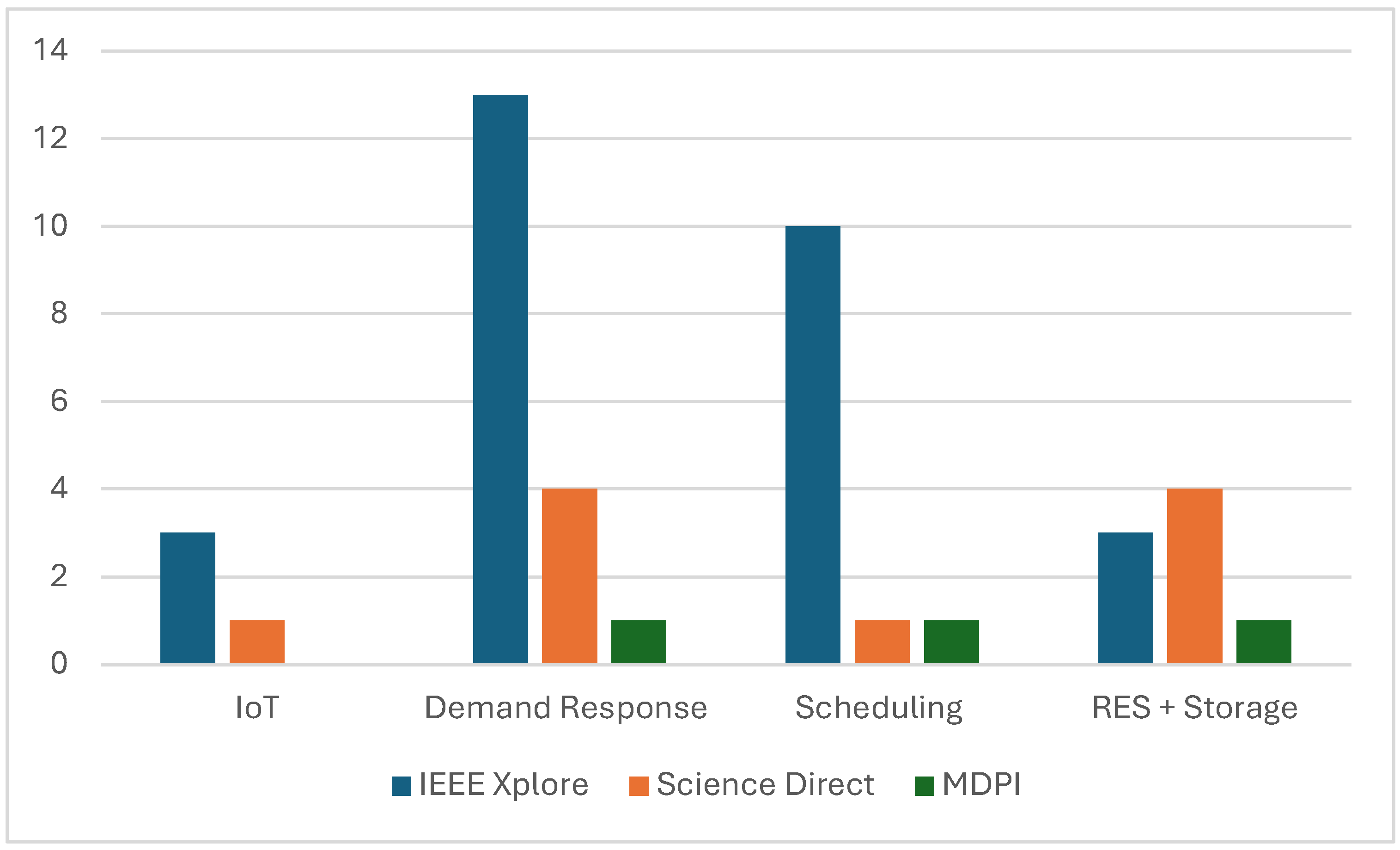

2. Methodology of the Review

3. State of the Art and Practice

- IoT applications
  - Algorithms used: DRL, Deep Q-learning, Q-learning, DDPG
  - Objectives: optimizing cost and comfort, with additional consideration of autonomy, personalization, and privacy
  - Verification: all experiments and models are verified through simulations

- DSR applications
  - Algorithms used: a variety, including MORL, Q-learning (and its variants combined with fuzzy reasoning), DQN, MARL, PPO, and Actor-Critic methods, among others
  - Objectives: primarily cost and comfort optimization
  - Verification: simulations, plus some evaluations using real-world data or physical test setups (e.g., MATLAB and Arduino Uno)

- Scheduling applications
  - Algorithms used: Q-learning, DQN, PPO, MADDPG, among others
  - Objectives: cost and comfort optimization, with several studies targeting cost alone
  - Verification: predominantly simulations, with some studies using practical data from real-world networks

- Data security and privacy
  - Algorithms used: TRPO, SAC, Q-learning, PPO, DDPG, among others
  - Objectives: optimizing cost and comfort, with a specific focus on energy systems integrating renewable sources and storage
  - Verification: all studies verify their findings through simulations, some using real-world data from energy markets and PV profiles.
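Most of the studies summarized above optimize cost and comfort jointly. One common way to express this is a scalarized (weighted-sum) reward; MORL approaches may instead keep the objectives separate. The sketch below assumes a linear weighting, a ±1 °C comfort band, and a hypothetical first-order (1R1C) thermal model; all parameter values are illustrative and not drawn from the cited works. It only shows how the weights shift the preferred heating action in a single step, whereas an RL agent would maximize the cumulative reward over an episode.

```python
from dataclasses import dataclass


@dataclass
class Weights:
    cost: float     # weight on electricity cost (assumed)
    comfort: float  # weight on thermal discomfort in degC outside the band (assumed)


def discomfort(indoor_c, setpoint_c, band_c=1.0):
    """Degrees outside a comfort band centred on the setpoint (0 inside the band)."""
    return max(0.0, abs(indoor_c - setpoint_c) - band_c)


def reward(energy_kwh, price, indoor_c, setpoint_c, w):
    """Scalarized cost-comfort reward: higher is better, both terms are penalties."""
    return -(w.cost * price * energy_kwh + w.comfort * discomfort(indoor_c, setpoint_c))


def next_indoor_temp(indoor_c, outdoor_c, heat_kw, r=2.0, c=5.0, dt_h=1.0):
    """Hypothetical 1R1C model, one explicit Euler step (r in degC/kW, c in kWh/degC)."""
    return indoor_c + dt_h / c * ((outdoor_c - indoor_c) / r + heat_kw)


def best_action(indoor_c, outdoor_c, price, setpoint_c, w, heater_kw=3.0):
    """Greedy one-step choice between heater off (0) and on (1), with 1-hour steps."""
    scores = {}
    for on in (0, 1):
        heat_kw = heater_kw * on
        t_next = next_indoor_temp(indoor_c, outdoor_c, heat_kw)
        # Energy over a 1-hour step equals the heater power in kW.
        scores[on] = reward(heat_kw, price, t_next, setpoint_c, w)
    return max(scores, key=scores.get)
```

For example, at 18 °C indoors and 0 °C outdoors with a price of 0.5 per kWh, a comfort-heavy weighting (cost 1, comfort 10) selects heating on, while a cost-heavy weighting (cost 10, comfort 0.1) selects heating off.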

4. Applications of Reinforcement Learning for Home Automation

4.1. Problems, Gaps and Challenges

5. Opportunities for the Application of Reinforcement Learning in Home Automation and Home and Building Energy Management

- Adaptability and optimization. The use of DRL models in HEMS enables energy management strategies (storage and consumption) to be dynamically realigned with fluctuating market and weather conditions, as well as with evolving user preferences. This approach has the potential to significantly increase energy and cost savings [138,139];

- Integration with renewable energy sources. RL-based HEMS facilitate the integration of renewable energy sources, such as photovoltaic panels and wind turbines, thereby enhancing energy independence and reducing reliance on the power grid [138];

- Data security and privacy. Implementing DRL in HEMS requires sophisticated data protection and security methods to guarantee the confidentiality of user data and to protect the integrity of the system against cyberattacks [29].
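To make the store/use/sell decision for RES and storage concrete, the sketch below shows the kind of environment step an RL agent for a PV-battery home interacts with. It is an illustration under stated assumptions, not a model from the cited works: battery size, power limit, efficiency, tariffs, and the hourly profile are hypothetical, charging is restricted to PV surplus, and discharging only covers the household deficit. The reward is the negative electricity bill; two fixed policies are compared to show the signal a learning agent would exploit.

```python
from dataclasses import dataclass


@dataclass
class Battery:
    capacity_kwh: float = 5.0  # assumed usable capacity
    max_power_kw: float = 2.5  # assumed charge/discharge power limit
    efficiency: float = 0.95   # assumed one-way efficiency
    soc_kwh: float = 0.0       # stored energy


def step(batt, pv_kw, load_kw, action, buy_price, sell_price, dt_h=1.0):
    """Settle one interval for action +1 (charge), 0 (idle) or -1 (discharge).

    Returns (reward, import_kwh, export_kwh); reward = -(net electricity bill).
    """
    net_kwh = (pv_kw - load_kw) * dt_h  # > 0 surplus, < 0 deficit
    limit_kwh = batt.max_power_kw * dt_h
    if action > 0 and net_kwh > 0:
        # Charge from PV surplus only (simplifying assumption: no grid charging).
        room_kwh = (batt.capacity_kwh - batt.soc_kwh) / batt.efficiency
        drawn = min(limit_kwh, room_kwh, net_kwh)
        batt.soc_kwh += drawn * batt.efficiency
        net_kwh -= drawn
    elif action < 0 and net_kwh < 0:
        # Discharge only to cover the household deficit (no battery-to-grid export).
        delivered = min(limit_kwh, batt.soc_kwh * batt.efficiency, -net_kwh)
        batt.soc_kwh -= delivered / batt.efficiency
        net_kwh += delivered
    import_kwh, export_kwh = max(0.0, -net_kwh), max(0.0, net_kwh)
    return -(import_kwh * buy_price - export_kwh * sell_price), import_kwh, export_kwh


def run(policy, pv, load, buy=0.30, sell=0.05):
    """Total bill and total PV export for a policy mapping (pv_kw, load_kw) -> action."""
    batt, bill, exported = Battery(), 0.0, 0.0
    for p, l in zip(pv, load):
        r, _, e = step(batt, p, l, policy(p, l), buy, sell)
        bill -= r
        exported += e
    return bill, exported
```

With the profile `pv = [0, 3, 3, 0, 0]` kW and `load = [1, 1, 1, 2, 2]` kW, always idling exports 4 kWh of PV at the low feed-in price, whereas a simple self-consumption rule (charge on surplus, discharge on deficit) exports nothing and lowers the bill. An RL agent would learn such a policy, or a better one under time-varying prices, from the reward alone.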

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Nomenclature

| Abbreviation | Definition |
|---|---|
| A2C | Advantage Actor-Critic |
| ANN | Artificial Neural Networks |
| BACS | Building Automation and Control Systems |
| BMS | Building Management Systems |
| DDPG | Deep Deterministic Policy Gradient |
| DDQN | Double Deep Q-learning |
| DERs | Distributed Energy Resources |
| DQN | Deep Q-network |
| DRL | Deep Reinforcement Learning |
| DSM | Demand Side Management |
| DSO | Distribution System Operator |
| DSR | Demand Side Response |
| DTA | Dual Targeting Algorithm |
| EPBD | Energy Performance of Buildings Directive |
| HEMS | Home Energy Management Systems |
| HVAC | Heating, Ventilation and Air Conditioning |
| IoT | Internet of Things |
| LSTM | Long Short-Term Memory |
| MADDPG | Multi-agent Deep Deterministic Policy Gradient |
| MARL | Multi-Agent Reinforcement Learning |
| MORL | Multi-Objective Reinforcement Learning |
| PPO | Proximal Policy Optimization |
| PV | Photovoltaic |
| RES | Renewable Energy Sources |
| RL | Reinforcement Learning |
| SAC | Soft Actor-Critic |
| SRI | Smart Readiness Indicator |
| TD3 | Twin Delayed Deep Deterministic Policy Gradient |
| TRPO | Trust Region Policy Optimization |
| V2G | Vehicle-to-Grid |

References

- Filho, G.P.R.; Villas, L.A.; Gonçalves, V.P.; Pessin, G.; Loureiro, A.A.F.; Ueyama, J. Energy-Efficient Smart Home Systems: Infrastructure and Decision-Making Process. Internet of Things 2019, 5, 153–167.

- Pratt, A.; Krishnamurthy, D.; Ruth, M.; Wu, H.; Lunacek, M.; Vaynshenk, P. Transactive Home Energy Management Systems: The Impact of Their Proliferation on the Electric Grid. IEEE Electrification Magazine 2016, 4, 8–14.

- Diyan, M.; Silva, B.N.; Han, K. A Multi-Objective Approach for Optimal Energy Management in Smart Home Using the Reinforcement Learning. Sensors 2020, 20, 3450.

- Pau, G.; Collotta, M.; Ruano, A.; Qin, J. Smart Home Energy Management. Energies (Basel) 2017, 10, 382.

- Umair, M.; Cheema, M.A.; Afzal, B.; Shah, G. Energy Management of Smart Homes over Fog-Based IoT Architecture. Sustainable Computing: Informatics and Systems 2023, 39.

- Deanseekeaw, A.; Khortsriwong, N.; Boonraksa, P.; Boonraksa, T.; Marungsri, B. Optimal Load Scheduling for Smart Home Energy Management Using Deep Reinforcement Learning. In Proceedings of the 2024 12th International Electrical Engineering Congress (iEECON); IEEE, March 6 2024; pp. 1–4.

- Ożadowicz, A.; Grela, J. An Event-Driven Building Energy Management System Enabling Active Demand Side Management. In Proceedings of the 2016 Second International Conference on Event-based Control, Communication, and Signal Processing (EBCCSP); IEEE, June 2016; pp. 1–8.

- Verschae, R.; Kato, T.; Matsuyama, T. Energy Management in Prosumer Communities: A Coordinated Approach. Energies (Basel) 2016, 9, 562.

- European Parliament. Directive (EU) 2024/1275 of the European Parliament and of the Council on the Energy Performance of Buildings; EU: Strasbourg, France, 2024.

- European Commission. Energy Roadmap 2050; 2012.

- Fokaides, P.A.; Panteli, C.; Panayidou, A. How Are the Smart Readiness Indicators Expected to Affect the Energy Performance of Buildings: First Evidence and Perspectives. Sustainability 2020, 12, 9496.

- Märzinger, T.; Österreicher, D. Extending the Application of the Smart Readiness Indicator—A Methodology for the Quantitative Assessment of the Load Shifting Potential of Smart Districts. Energies (Basel) 2020, 13, 3507.

- Ożadowicz, A. A Hybrid Approach in Design of Building Energy Management System with Smart Readiness Indicator and Building as a Service Concept. Energies (Basel) 2022, 15, 1432.

- ISO/TC 205. ISO 52120-1:2021 Energy Performance of Buildings: Contribution of Building Automation, Controls and Building Management; ISO: Geneva, Switzerland, 2021.

- Favuzza, S.; Ippolito, M.; Massaro, F.; Musca, R.; Riva Sanseverino, E.; Schillaci, G.; Zizzo, G. Building Automation and Control Systems and Electrical Distribution Grids: A Study on the Effects of Loads Control Logics on Power Losses and Peaks. Energies (Basel) 2018, 11, 667.

- Mahmood, A.; Baig, F.; Alrajeh, N.; Qasim, U.; Khan, Z.; Javaid, N. An Enhanced System Architecture for Optimized Demand Side Management in Smart Grid. Applied Sciences 2016, 6, 122.

- Hou, P.; Yang, G.; Hu, J.; Douglass, P.J.; Xue, Y. A Distributed Transactive Energy Mechanism for Integrating PV and Storage Prosumers in Market Operation. Engineering 2022, 12, 171–182.

- Kato, T.; Ishikawa, N.; Yoshida, N. Distributed Autonomous Control of Home Appliances Based on Event Driven Architecture. In Proceedings of the 2017 IEEE 6th Global Conference on Consumer Electronics (GCCE); IEEE, October 2017; pp. 1–2.

- Charbonnier, F.; Morstyn, T.; McCulloch, M.D. Scalable Multi-Agent Reinforcement Learning for Distributed Control of Residential Energy Flexibility. Appl Energy 2022, 314, 118825.

- Delsing, J. Local Cloud Internet of Things Automation: Technology and Business Model Features of Distributed Internet of Things Automation Solutions. IEEE Industrial Electronics Magazine 2017, 11, 8–21.

- Yassine, A.; Singh, S.; Hossain, M.S.; Muhammad, G. IoT Big Data Analytics for Smart Homes with Fog and Cloud Computing. Future Generation Computer Systems 2019, 91, 563–573.

- Machorro-Cano, I.; Alor-Hernández, G.; Paredes-Valverde, M.A.; Rodríguez-Mazahua, L.; Sánchez-Cervantes, J.L.; Olmedo-Aguirre, J.O. HEMS-IoT: A Big Data and Machine Learning-Based Smart Home System for Energy Saving. Energies (Basel) 2020, 13, 1097.

- Bawa, M.; Caganova, D.; Szilva, I.; Spirkova, D. Importance of Internet of Things and Big Data in Building Smart City and What Would Be Its Challenges. In Smart City 360°; Leon-Garcia, A., Lenort, R., Holman, D., Staš, D., Krutilova, V., Wicher, P., Cagáňová, D., Špirková, D., Golej, J., Nguyen, K., Eds.; Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering; Springer International Publishing: Cham, 2016; Vol. 166, pp. 605–616 ISBN 978-3-319-33680-0.

- Lawal, K.N.; Olaniyi, T.K.; Gibson, R.M. Leveraging Real-World Data from IoT Devices in a Fog–Cloud Architecture for Resource Optimisation within a Smart Building. Applied Sciences 2023, 14, 316.

- Akter, M.N.; Mahmud, M.A.; Oo, A.M.T. A Hierarchical Transactive Energy Management System for Microgrids. In Proceedings of the 2016 IEEE Power and Energy Society General Meeting (PESGM); IEEE, July 2016; Vol. 2016-Novem; pp. 1–5.

- Taghizad-Tavana, K.; Ghanbari-Ghalehjoughi, M.; Razzaghi-Asl, N.; Nojavan, S.; Alizadeh, A. An Overview of the Architecture of Home Energy Management System as Microgrids, Automation Systems, Communication Protocols, Security, and Cyber Challenges. Sustainability 2022, 14, 15938.

- Kiehbadroudinezhad, M.; Merabet, A.; Abo-Khalil, A.G.; Salameh, T.; Ghenai, C. Intelligent and Optimized Microgrids for Future Supply Power from Renewable Energy Resources: A Review. Energies (Basel) 2022, 15, 3359.

- Chamana, M.; Schmitt, K.E.K.; Bhatta, R.; Liyanage, S.; Osman, I.; Murshed, M.; Bayne, S.; MacFie, J. Buildings Participation in Resilience Enhancement of Community Microgrids: Synergy Between Microgrid and Building Management Systems. IEEE Access 2022, 10, 100922–100938.

- Al-Ani, O.; Das, S. Reinforcement Learning: Theory and Applications in HEMS. Energies (Basel) 2022, 15, 6392.

- Wang, Z.; Hong, T. Reinforcement Learning for Building Controls: The Opportunities and Challenges. Appl Energy 2020, 269, 115036.

- Benjamin, A.; Badar, A.Q.H. Reinforcement Learning Based Cost-Effective Smart Home Energy Management. In Proceedings of the 2023 IEEE 3rd International Conference on Sustainable Energy and Future Electric Transportation (SEFET); IEEE, August 9 2023; pp. 1–5.

- Yu, L.; Qin, S.; Zhang, M.; Shen, C.; Jiang, T.; Guan, X. A Review of Deep Reinforcement Learning for Smart Building Energy Management. IEEE Internet Things J 2021, 8, 12046–12063.

- Wei, T.; Wang, Y.; Zhu, Q. Deep Reinforcement Learning for Building HVAC Control. In Proceedings of the 54th Annual Design Automation Conference 2017; ACM: New York, NY, USA, June 18 2017; pp. 1–6.

- Yu, L.; Xie, W.; Xie, D.; Zou, Y.; Zhang, D.; Sun, Z.; Zhang, L.; Zhang, Y.; Jiang, T. Deep Reinforcement Learning for Smart Home Energy Management. IEEE Internet Things J 2020, 7, 2751–2762.

- Kodama, N.; Harada, T.; Miyazaki, K. Home Energy Management Algorithm Based on Deep Reinforcement Learning Using Multistep Prediction. IEEE Access 2021, 9, 153108–153115.

- Perez, K.X.; Baldea, M.; Edgar, T.F. Integrated Smart Appliance Scheduling and HVAC Control for Peak Residential Load Management. In Proceedings of the 2016 American Control Conference (ACC); IEEE, July 2016; Vol. 2016-July; pp. 1458–1463.

- Tekler, Z.D.; Low, R.; Yuen, C.; Blessing, L. Plug-Mate: An IoT-Based Occupancy-Driven Plug Load Management System in Smart Buildings. Build Environ 2022, 223, 109472.

- Fambri, G.; Badami, M.; Tsagkrasoulis, D.; Katsiki, V.; Giannakis, G.; Papanikolaou, A. Demand Flexibility Enabled by Virtual Energy Storage to Improve Renewable Energy Penetration. Energies (Basel) 2020, 13, 5128.

- Mancini, F.; Lo Basso, G.; de Santoli, L. Energy Use in Residential Buildings: Impact of Building Automation Control Systems on Energy Performance and Flexibility. Energies (Basel) 2019, 12, 2896.

- Liu, Z.; Zhang, X.; Sun, Y.; Zhou, Y. Advanced Controls on Energy Reliability, Flexibility and Occupant-Centric Control for Smart and Energy-Efficient Buildings. Energy Build 2023, 297, 113436.

- Babar, M.; Grela, J.; Ożadowicz, A.; Nguyen, P.; Hanzelka, Z.; Kamphuis, I. Energy Flexometer: Transactive Energy-Based Internet of Things Technology. Energies (Basel) 2018, 11, 568.

- Chen, Y.; Yang, Y.; Xu, X. Towards Transactive Energy: An Analysis of Information-related Practical Issues. Energy Conversion and Economics 2022, 3, 112–121.

- Sheshalani Balasingam; Zapiee, M. K.; Mohana, D. Smart Home Automation System Using IOT. International Journal of Recent Technology and Applied Science 2022, 4, 44–53.

- Yar, H.; Imran, A.S.; Khan, Z.A.; Sajjad, M.; Kastrati, Z. Towards Smart Home Automation Using IoT-Enabled Edge-Computing Paradigm. Sensors 2021, 21, 4932.

- Almusaylim, Z.A.; Zaman, N. A Review on Smart Home Present State and Challenges: Linked to Context-Awareness Internet of Things (IoT). Wireless Networks 2019, 25, 3193–3204.

- Sun, H.; Yu, H.; Fan, G.; Chen, L. Energy and Time Efficient Task Offloading and Resource Allocation on the Generic IoT-Fog-Cloud Architecture. Peer Peer Netw Appl 2020, 13, 548–563.

- García-Monge, M.; Zalba, B.; Casas, R.; Cano, E.; Guillén-Lambea, S.; López-Mesa, B.; Martínez, I. Is IoT Monitoring Key to Improve Building Energy Efficiency? Case Study of a Smart Campus in Spain. Energy Build 2023, 285, 112882.

- Arif, S.; Khan, M.A.; Rehman, S.U.; Kabir, M.A.; Imran, M. Investigating Smart Home Security: Is Blockchain the Answer? IEEE Access 2020, 8, 117802–117816.

- Graveto, V.; Cruz, T.; Simões, P. Security of Building Automation and Control Systems: Survey and Future Research Directions. Comput Secur 2022, 112, 102527.

- Parikh, S.; Dave, D.; Patel, R.; Doshi, N. Security and Privacy Issues in Cloud, Fog and Edge Computing. Procedia Comput Sci 2019, 160, 734–739.

- Abed, S.; Jaffal, R.; Mohd, B.J. A Review on Blockchain and IoT Integration from Energy, Security and Hardware Perspectives. Wirel Pers Commun 2023, 129, 2079–2122.

- Ożadowicz, A. Generic IoT for Smart Buildings and Field-Level Automation—Challenges, Threats, Approaches, and Solutions. Computers 2024, 13, 45.

- Yu, J.; Kim, M.; Bang, H.C.; Bae, S.H.; Kim, S.J. IoT as a Applications: Cloud-Based Building Management Systems for the Internet of Things. Multimed Tools Appl 2016, 75, 14583–14596.

- Kastner, W.; Kofler, M.; Jung, M.; Gridling, G.; Weidinger, J. Building Automation Systems Integration into the Internet of Things. The IoT6 Approach, Its Realization and Validation. In Proceedings of the Emerging Technology and Factory Automation (ETFA), 2014 IEEE; 2014; pp. 1–9.

- Verbeke, S.; Aerts, D.; Reynders, G.; Ma, Y.; Waide, P. Final Report on the Technical Support to the Development of a Smart Readiness Indicator for Buildings; Brussels, 2020.

- European Parliament. Directive (EU) 2018/844 of the European Parliament and of the Council on the Energy Performance of Buildings; EU, 2018.

- Ramezani, B.; da Silva, M.G.; Simões, N. Application of Smart Readiness Indicator for Mediterranean Buildings in Retrofitting Actions. Energy Build 2021, 249, 111173.

- Janhunen, E.; Pulkka, L.; Säynäjoki, A.; Junnila, S. Applicability of the Smart Readiness Indicator for Cold Climate Countries. Buildings 2019, 9.

- Ożadowicz, A.; Grela, J. Impact of Building Automation Control Systems on Energy Efficiency — University Building Case Study. In Proceedings of the 2017 22nd IEEE International Conference on Emerging Technologies and Factory Automation (ETFA); IEEE, September 2017; pp. 1–8.

- Ożadowicz, A.; Grela, J. Energy Saving in the Street Lighting Control System—a New Approach Based on the EN-15232 Standard. Energy Effic 2017, 10, 563–576.

- Laroui, M.; Nour, B.; Moungla, H.; Cherif, M.A.; Afifi, H.; Guizani, M. Edge and Fog Computing for IoT: A Survey on Current Research Activities & Future Directions. Comput Commun 2021, 180, 210–231.

- Genkin, M.; McArthur, J.J. B-SMART: A Reference Architecture for Artificially Intelligent Autonomic Smart Buildings. Eng Appl Artif Intell 2023, 121, 106063.

- Seitz, A.; Johanssen, J.O.; Bruegge, B.; Loftness, V.; Hartkopf, V.; Sturm, M. A Fog Architecture for Decentralized Decision Making in Smart Buildings. In Proceedings of the 2017 2nd International Workshop on Science of Smart City Operations and Platforms Engineering, in partnership with Global City Teams Challenge (SCOPE 2017); Association for Computing Machinery, Inc; 2017; pp. 34–39.

- Mansour, M.; Gamal, A.; Ahmed, A.I.; Said, L.A.; Elbaz, A.; Herencsar, N.; Soltan, A. Internet of Things: A Comprehensive Overview on Protocols, Architectures, Technologies, Simulation Tools, and Future Directions. Energies (Basel) 2023, 16, 3465.

- Yousefpour, A.; Fung, C.; Nguyen, T.; Kadiyala, K.; Jalali, F.; Niakanlahiji, A.; Kong, J.; Jue, J.P. All One Needs to Know about Fog Computing and Related Edge Computing Paradigms: A Complete Survey. Journal of Systems Architecture 2019, 98, 289–330.

- Kastner, W.; Jung, M.; Krammer, L. Future Trends in Smart Homes and Buildings. In Industrial Communication Technology Handbook, Second Edition; Zurawski, R., Ed.; CRC Press Taylor & Francis Group, 2015; pp. 59-1-59–20 ISBN 978-1-4822-0732-3.

- Lobaccaro, G.; Carlucci, S.; Löfström, E. A Review of Systems and Technologies for Smart Homes and Smart Grids. Energies (Basel) 2016, 9, 1–33.

- Bouchabou, D.; Nguyen, S.M.; Lohr, C.; LeDuc, B.; Kanellos, I. A Survey of Human Activity Recognition in Smart Homes Based on IoT Sensors Algorithms: Taxonomies, Challenges, and Opportunities with Deep Learning. Sensors 2021, 21, 6037.

- Grela, J.; Ożadowicz, A. Building Automation Planning and Design Tool Implementing EN 15 232 BACS Efficiency Classes. In Proceedings of the 2016 IEEE 21st International Conference on Emerging Technologies and Factory Automation (ETFA); IEEE, September 2016; pp. 1–4.

- Sharda, S.; Sharma, K.; Singh, M. A Real-Time Automated Scheduling Algorithm with PV Integration for Smart Home Prosumers. Journal of Building Engineering 2021, 44, 102828.

- Sangoleye, F.; Jao, J.; Faris, K.; Tsiropoulou, E.E.; Papavassiliou, S. Reinforcement Learning-Based Demand Response Management in Smart Grid Systems With Prosumers. IEEE Syst J 2023, 17, 1797–1807.

- Ożadowicz, A. A New Concept of Active Demand Side Management for Energy Efficient Prosumer Microgrids with Smart Building Technologies. Energies (Basel) 2017, 10, 1771.

- Sierla, S.; Pourakbari-Kasmaei, M.; Vyatkin, V. A Taxonomy of Machine Learning Applications for Virtual Power Plants and Home/Building Energy Management Systems. Autom Constr 2022, 136, 104174.

- Razghandi, M.; Zhou, H.; Erol-Kantarci, M.; Turgut, D. Smart Home Energy Management: Sequence-to-Sequence Load Forecasting and Q-Learning. In Proceedings of the 2021 IEEE Global Communications Conference (GLOBECOM); IEEE, December 2021; pp. 1–6.

- Zhang, H.; Wu, D.; Boulet, B. A Review of Recent Advances on Reinforcement Learning for Smart Home Energy Management. In Proceedings of the 2020 IEEE Electric Power and Energy Conference (EPEC); IEEE, November 9 2020; pp. 1–6.

- Lu, R.; Hong, S.H.; Yu, M. Demand Response for Home Energy Management Using Reinforcement Learning and Artificial Neural Network. IEEE Trans Smart Grid 2019, 10, 6629–6639.

- Radhamani, R.; Karthick, S.; Kishore Kumar, S.; Gokulraj, M. Deployment of an IoT-Integrated Home Energy Management System Employing Deep Reinforcement Learning. In Proceedings of the 2024 2nd International Conference on Artificial Intelligence and Machine Learning Applications Theme: Healthcare and Internet of Things (AIMLA); IEEE, March 15 2024; pp. 1–4.

- Dhayalan, V.; Raman, R.; Kalaivani, N.; Shrirvastava, A.; Reddy, R.S.; Meenakshi, B. Smart Renewable Energy Management Using Internet of Things and Reinforcement Learning. In Proceedings of the 2024 2nd International Conference on Computer, Communication and Control (IC4); IEEE, February 8 2024; pp. 1–5.

- Wang, Y.; Xiao, R.; Wang, X.; Liu, A. Constructing Autonomous, Personalized, and Private Working Management of Smart Home Products Based on Deep Reinforcement Learning. Procedia CIRP 2023, 119, 72–77.

- Chen, S.-J.; Chiu, W.-Y.; Liu, W.-J. User Preference-Based Demand Response for Smart Home Energy Management Using Multiobjective Reinforcement Learning. IEEE Access 2021, 9, 161627–161637.

- Angano, W.; Musau, P.; Wekesa, C.W. Design and Testing of a Demand Response Q-Learning Algorithm for a Smart Home Energy Management System. In Proceedings of the 2021 IEEE PES/IAS PowerAfrica; IEEE, August 23 2021; pp. 1–5.

- Amer, A.A.; Shaban, K.; Massoud, A.M. DRL-HEMS: Deep Reinforcement Learning Agent for Demand Response in Home Energy Management Systems Considering Customers and Operators Perspectives. IEEE Trans Smart Grid 2023, 14, 239–250.

- Liu, W.; Wang, Y.; Jiang, F.; Cheng, Y.; Rong, J.; Wang, C.; Peng, J. A Real-Time Demand Response Strategy of Home Energy Management by Using Distributed Deep Reinforcement Learning. In Proceedings of the 2021 IEEE 23rd Int Conf on High Performance Computing & Communications; 7th Int Conf on Data Science & Systems; 19th Int Conf on Smart City; 7th Int Conf on Dependability in Sensor, Cloud & Big Data Systems & Application (HPCC/DSS/SmartCity/DependSys); IEEE, December 2021; pp. 988–995.

- Alfaverh, F.; Denai, M.; Sun, Y. Demand Response Strategy Based on Reinforcement Learning and Fuzzy Reasoning for Home Energy Management. IEEE Access 2020, 8, 39310–39321.

- Li, H.; Wan, Z.; He, H. A Deep Reinforcement Learning Based Approach for Home Energy Management System. In Proceedings of the 2020 IEEE Power & Energy Society Innovative Smart Grid Technologies Conference (ISGT); IEEE, February 2020; pp. 1–5.

- Mathew, A.; Roy, A.; Mathew, J. Intelligent Residential Energy Management System Using Deep Reinforcement Learning. IEEE Syst J 2020, 14, 5362–5372.

- Ding, H.; Xu, Y.; Chew Si Hao, B.; Li, Q.; Lentzakis, A. A Safe Reinforcement Learning Approach for Multi-Energy Management of Smart Home. Electric Power Systems Research 2022, 210, 108120.

- Chu, Y.; Wei, Z.; Sun, G.; Zang, H.; Chen, S.; Zhou, Y. Optimal Home Energy Management Strategy: A Reinforcement Learning Method with Actor-Critic Using Kronecker-Factored Trust Region. Electric Power Systems Research 2022, 212, 108617.

- Lissa, P.; Deane, C.; Schukat, M.; Seri, F.; Keane, M.; Barrett, E. Deep Reinforcement Learning for Home Energy Management System Control. Energy and AI 2021, 3, 100043.

- Liu, Y.; Zhang, D.; Gooi, H.B. Optimization Strategy Based on Deep Reinforcement Learning for Home Energy Management. CSEE Journal of Power and Energy Systems 2020, 6, 572–582.

- Kumari, A.; Tanwar, S. Reinforcement Learning for Multiagent-Based Residential Energy Management System. In Proceedings of the 2021 IEEE Globecom Workshops (GC Wkshps); IEEE, December 2021; pp. 1–6.

- Kumari, A.; Kakkar, R.; Tanwar, S.; Garg, D.; Polkowski, Z.; Alqahtani, F.; Tolba, A. Multi-Agent-Based Decentralized Residential Energy Management Using Deep Reinforcement Learning. Journal of Building Engineering 2024, 87, 109031.

- Amer, A.; Shaban, K.; Massoud, A. Demand Response in HEMSs Using DRL and the Impact of Its Various Configurations and Environmental Changes. Energies (Basel) 2022, 15, 8235.

- Roslann, A.; Asuhaimi, F.A.; Ariffin, K.N.Z. Energy Efficient Scheduling in Smart Home Using Deep Reinforcement Learning. In Proceedings of the 2022 IEEE International Conference on Artificial Intelligence in Engineering and Technology (IICAIET); IEEE, September 13 2022; pp. 1–6.

- Xiong, L.; Tang, Y.; Liu, C.; Mao, S.; Meng, K.; Dong, Z.; Qian, F. Meta-Reinforcement Learning-Based Transferable Scheduling Strategy for Energy Management. IEEE Transactions on Circuits and Systems I: Regular Papers 2023, 70, 1685–1695.

- Kahraman, A.; Yang, G. Home Energy Management System Based on Deep Reinforcement Learning Algorithms. In Proceedings of the 2022 IEEE PES Innovative Smart Grid Technologies Conference Europe (ISGT-Europe); IEEE, October 10 2022; Vol. 2022-October; pp. 1–5.

- Aldahmashi, J.; Ma, X. Real-Time Energy Management in Smart Homes Through Deep Reinforcement Learning. IEEE Access 2024, 12, 43155–43172.

- Seveiche-Maury, Z.; Arrubla-Hoyos, W. Proposal of a Decision-Making Model for Home Energy Saving through Artificial Intelligence Applied to a HEMS. In Proceedings of the 2023 IEEE Colombian Caribbean Conference (C3); IEEE, November 22 2023; pp. 1–6.

- Wei, G.; Chi, M.; Liu, Z.-W.; Ge, M.; Li, C.; Liu, X. Deep Reinforcement Learning for Real-Time Energy Management in Smart Home. IEEE Syst J 2023, 17, 2489–2499.

- Jiang, F.; Zheng, C.; Gao, D.; Zhang, X.; Liu, W.; Cheng, Y.; Hu, C.; Peng, J. A Novel Multi-Agent Cooperative Reinforcement Learning Method for Home Energy Management under a Peak Power-Limiting. In Proceedings of the 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC); IEEE, October 11 2020; pp. 350–355.

- Diyan, M.; Khan, M.; Zhenbo, C.; Silva, B.N.; Han, J.; Han, K.J. Intelligent Home Energy Management System Based on Bi-Directional Long-Short Term Memory and Reinforcement Learning. In Proceedings of the 2021 International Conference on Information Networking (ICOIN); IEEE, January 13 2021; Vol. 2021-January; pp. 782–787.

- Zenginis, I.; Vardakas, J.; Koltsaklis, N.E.; Verikoukis, C. Smart Home’s Energy Management Through a Clustering-Based Reinforcement Learning Approach. IEEE Internet Things J 2022, 9, 16363–16371.

- Haq, E.U.; Lyu, C.; Xie, P.; Yan, S.; Ahmad, F.; Jia, Y. Implementation of Home Energy Management System Based on Reinforcement Learning. Energy Reports 2022, 8, 560–566.

- Thattai, K.; Ravishankar, J.; Li, C. Consumer-Centric Home Energy Management System Using Trust Region Policy Optimization- Based Multi-Agent Deep Reinforcement Learning. In Proceedings of the 2023 IEEE Belgrade PowerTech; IEEE, June 25 2023; pp. 1–6. [Google Scholar]

- Langer, L.; Volling, T. A Reinforcement Learning Approach to Home Energy Management for Modulating Heat Pumps and Photovoltaic Systems. Appl Energy 2022, 327, 120020.

- Xiong, S.; Liu, D.; Chen, Y.; Zhang, Y.; Cai, X. A Deep Reinforcement Learning Approach Based Energy Management Strategy for Home Energy System Considering the Time-of-Use Price and Real-Time Control of Energy Storage System. Energy Reports 2024, 11, 3501–3508.

- Lee, S.; Choi, D.-H. Reinforcement Learning-Based Energy Management of Smart Home with Rooftop Solar Photovoltaic System, Energy Storage System, and Home Appliances. Sensors 2019, 19, 3937.

- Abedi, S.; Yoon, S.W.; Kwon, S. Battery Energy Storage Control Using a Reinforcement Learning Approach with Cyclic Time-Dependent Markov Process. International Journal of Electrical Power & Energy Systems 2022, 134, 107368.

- Härtel, F.; Bocklisch, T. Minimizing Energy Cost in PV Battery Storage Systems Using Reinforcement Learning. IEEE Access 2023, 11, 39855–39865.

- Xu, G.; Shi, J.; Wu, J.; Lu, C.; Wu, C.; Wang, D.; Han, Z. An Optimal Solutions-Guided Deep Reinforcement Learning Approach for Online Energy Storage Control. Appl Energy 2024, 361, 122915.

- Wang, B.; Zha, Z.; Zhang, L.; Liu, L.; Fan, H. Deep Reinforcement Learning-Based Security-Constrained Battery Scheduling in Home Energy System. IEEE Transactions on Consumer Electronics 2024, 70, 3548–3561.

- Markiewicz, M.; Skała, A.; Grela, J.; Janusz, S.; Stasiak, T.; Latoń, D.; Bielecki, A.; Bańczyk, K. The Architecture for Testing Central Heating Control Algorithms with Feedback from Wireless Temperature Sensors. Energies (Basel) 2023, 16, 5584.

- Arun, S.L.; Selvan, M.P. Intelligent Residential Energy Management System for Dynamic Demand Response in Smart Buildings. IEEE Syst J 2017, 1–12.

- Pan, Y.; Shen, Y.; Qin, J.; Zhang, L. Deep Reinforcement Learning for Multi-Objective Optimization in BIM-Based Green Building Design. Autom Constr 2024, 166, 105598.

- Shaqour, A.; Hagishima, A. Systematic Review on Deep Reinforcement Learning-Based Energy Management for Different Building Types. Energies (Basel) 2022, 15, 8663.

- Qi, T.; Ye, C.; Zhao, Y.; Li, L.; Ding, Y. Deep Reinforcement Learning Based Charging Scheduling for Household Electric Vehicles in Active Distribution Network. Journal of Modern Power Systems and Clean Energy 2023, 11, 1890–1901.

- Deanseekeaw, A.; Khortsriwong, N.; Boonraksa, P.; Boonraksa, T.; Marungsri, B. Optimal Load Scheduling for Smart Home Energy Management Using Deep Reinforcement Learning. In Proceedings of the 2024 12th International Electrical Engineering Congress (iEECON); IEEE, March 6 2024; pp. 1–4.

- Jendoubi, I.; Bouffard, F. Multi-Agent Hierarchical Reinforcement Learning for Energy Management. Appl Energy 2023, 332, 120500.

- Qin, Y.; Ke, J.; Wang, B.; Filaretov, G.F. Energy Optimization for Regional Buildings Based on Distributed Reinforcement Learning. Sustain Cities Soc 2022, 78, 103625.

- Anvari-Moghaddam, A.; Rahimi-Kian, A.; Mirian, M.S.; Guerrero, J.M. A Multi-Agent Based Energy Management Solution for Integrated Buildings and Microgrid System. Appl Energy 2017, 203, 41–56.

- Kumar Nunna, H.S.V.S.; Srinivasan, D. Multi-Agent Based Transactive Energy Framework for Distribution Systems with Smart Microgrids. IEEE Trans Industr Inform 2017, 3203, 1–1.

- Vamvakas, D.; Michailidis, P.; Korkas, C.; Kosmatopoulos, E. Review and Evaluation of Reinforcement Learning Frameworks on Smart Grid Applications. Energies (Basel) 2023, 16, 5326.

- Yu, L.; Xie, W.; Xie, D.; Zou, Y.; Zhang, D.; Sun, Z.; Zhang, L.; Zhang, Y.; Jiang, T. Deep Reinforcement Learning for Smart Home Energy Management. IEEE Internet Things J 2020, 7, 2751–2762.

- Zhang, L.; Gao, Y.; Zhu, H.; Tao, L. A Distributed Real-Time Pricing Strategy Based on Reinforcement Learning Approach for Smart Grid. Expert Syst Appl 2022, 191, 116285.

- Huang, X.; Zhang, D.; Zhang, X. Energy Management of Intelligent Building Based on Deep Reinforced Learning. Alexandria Engineering Journal 2021, 60, 1509–1517.

- Wang, Z.; Xiao, F.; Ran, Y.; Li, Y.; Xu, Y. Scalable Energy Management Approach of Residential Hybrid Energy System Using Multi-Agent Deep Reinforcement Learning. Appl Energy 2024, 367, 123414.

- Knap, P.; Gerding, E. Energy Storage in the Smart Grid: A Multi-Agent Deep Reinforcement Learning Approach. In Trends in Clean Energy Research: Selected Papers from the 9th International Conference on Advances on Clean Energy Research (ICACER 2024); Chen, L., Ed.; Springer Nature Switzerland: Cham, 2024; pp. 221–235.

- Sobhani, A.; Khorshidi, F.; Fakhredanesh, M. DeePLS: Personalize Lighting in Smart Home by Human Detection, Recognition, and Tracking. SN Comput Sci 2023, 4, 773.

- Safaei, D.; Sobhani, A.; Kiaei, A.A. DeePLT: Personalized Lighting Facilitates by Trajectory Prediction of Recognized Residents in the Smart Home. International Journal of Information Technology 2024, 16, 2987–2999.

- Manganelli, M.; Consalvi, R. Design and Energy Performance Assessment of High-Efficiency Lighting Systems. In Proceedings of the 2015 IEEE 15th International Conference on Environment and Electrical Engineering (EEEIC); IEEE, June 2015; pp. 1035–1040.

- Liu, J.; Chen, H.-M.; Li, S.; Lin, S. Adaptive and Energy-Saving Smart Lighting Control Based on Deep Q-Network Algorithm. In Proceedings of the 2021 6th International Conference on Control, Robotics and Cybernetics (CRC); IEEE, October 9 2021; pp. 207–211.

- Suman, S.; Rivest, F.; Etemad, A. Toward Personalization of User Preferences in Partially Observable Smart Home Environments. IEEE Transactions on Artificial Intelligence 2023, 4, 549–561.

- Almilaify, Y.; Nweye, K.; Nagy, Z. SCALEX: SCALability EXploration of Multi-Agent Reinforcement Learning Agents in Grid-Interactive Efficient Buildings. In Proceedings of the 10th ACM International Conference on Systems for Energy-Efficient Buildings, Cities, and Transportation; ACM: New York, NY, USA, November 15 2023; pp. 261–264.

- Khan, M.A.; Saleh, A.M.; Waseem, M.; Sajjad, I.A. Artificial Intelligence Enabled Demand Response: Prospects and Challenges in Smart Grid Environment. IEEE Access 2023, 11, 1477–1505.

- Gao, Y.; Li, S.; Xiao, Y.; Dong, W.; Fairbank, M.; Lu, B. An Iterative Optimization and Learning-Based IoT System for Energy Management of Connected Buildings. IEEE Internet Things J 2022, 9, 21246–21259.

- Malagnino, A.; Montanaro, T.; Lazoi, M.; Sergi, I.; Corallo, A.; Patrono, L. Building Information Modeling and Internet of Things Integration for Smart and Sustainable Environments: A Review. J Clean Prod 2021, 312, 127716.

- Anvari-Moghaddam, A.; Rahimi-Kian, A.; Mirian, M.S.; Guerrero, J.M. A Multi-Agent Based Energy Management Solution for Integrated Buildings and Microgrid System. Appl Energy 2017, 203, 41–56.

- Pinthurat, W.; Surinkaew, T.; Hredzak, B. An Overview of Reinforcement Learning-Based Approaches for Smart Home Energy Management Systems with Energy Storages. Renewable and Sustainable Energy Reviews 2024, 202, 114648.

- Sheng, R.; Mu, C.; Zhang, X.; Ding, Z.; Sun, C. Review of Home Energy Management Systems Based on Deep Reinforcement Learning. In Proceedings of the 2023 38th Youth Academic Annual Conference of Chinese Association of Automation (YAC 2023); Institute of Electrical and Electronics Engineers Inc., 2023; pp. 1239–1244.

- Daneshvar, M.; Pesaran, M.; Mohammadi-ivatloo, B. Transactive Energy in Future Smart Homes. In The Energy Internet; Su, W., Huang, A.Q., Eds.; Woodhead Publishing, 2019; pp. 153–179; ISBN 978-0-08-102207-8.

- Rodrigues, S.D.; Garcia, V.J. Transactive Energy in Microgrid Communities: A Systematic Review. Renewable and Sustainable Energy Reviews 2023, 171, 112999.

- Nizami, S.; Tushar, W.; Hossain, M.J.; Yuen, C.; Saha, T.; Poor, H.V. Transactive Energy for Low Voltage Residential Networks: A Review. Appl Energy 2022, 323, 119556.

| Database | Publication Type | Building Automation | Home Automation | Reinforcement Learning | Building Automation + Reinforcement Learning | Home Automation + Reinforcement Learning |
|---|---|---|---|---|---|---|
| Web of Science | Articles | 13,770 | 2,368 | 46,764 | 164 | 20 |
| | Reviews | 888 | 179 | 2,007 | 13 | 3 |
| Scopus | Articles | 11,628 | 8,481 | 51,883 | 103 | 101 |
| | Reviews | 967 | 622 | 3,206 | 9 | 3 |
| Google Scholar | Any type | 3,150,000 | 3,170,000 | 4,680,000 | 250,000 | 204,000 |
| | Reviews | 172,000 | 191,000 | 63,200 | 24,600 | 21,500 |
| Springer | Articles | 36,848 | 15,339 | 64,648 | 2,434 | 1,073 |
| | Reviews | 2,898 | 1,297 | 5,232 | 462 | 173 |
| Science Direct | Articles | 70,247 | 25,541 | 83,149 | 3,760 | 1,312 |
| | Reviews | 8,619 | 4,046 | 12,9646 | 1,323 | 579 |
| MDPI | Articles | 1,346 | 379 | 3,797 | 17 | 2 |
| | Reviews | 133 | 47 | 261 | 7 | 1 |
| IEEE Xplore | Conferences | 28,815 | 8,194 | 31,831 | 430 | 65 |
| | Journals | 5,861 | 1,032 | 876 | 202 | 24 |
| Taylor and Francis | Articles | 154,033 | 54,445 | 310,108 | 16,794 | 9,081 |
| | Reviews | 4,498 | 1,737 | 5,397 | 512 | 228 |
| ACM Digital Library | All types | 149,403 | 28,663 | 47,165 | 13,237 | 4,325 |
| | Reviews | 201 | 43 | 62 | 18 | 4 |
| Wiley Online Library | Journals | 236,565 | 71,939 | 223,645 | 18,307 | 10,196 |
| | Books | 45,956 | 18,855 | 36,651 | 5,294 | 3,001 |

| Reference / Year | Application | Algorithm / Method | Objectives | Verification |
|---|---|---|---|---|
| [77] 2024 | IoT | Deep Reinforcement Learning (DRL) | Cost and Comfort | Simulation |
| [78] 2024 | IoT | Deep Q-learning | Cost and Comfort | Simulation |
| [79] 2023 | IoT | Q-learning | Other (Autonomy, Personalization, and Privacy) | Simulation |
| [34] 2020 | IoT | Deep Deterministic Policy Gradient (DDPG) | Cost and Comfort | Simulation |
| [80] 2021 | Demand Response | Multi-Objective Reinforcement Learning (MORL) | Cost and Comfort | Simulation |
| [81] 2021 | Demand Response | Q-learning | Cost and Comfort | Real (physical system testing using MATLAB and Arduino Uno) |
| [82] 2023 | Demand Response | Deep Q-Network (DQN) | Cost and Comfort | Simulation (evaluated using real-world data) |
| [83] 2021 | Demand Response | Multi-Agent Twin Delayed Deep Deterministic Policy Gradient (MATD3) | Cost and Comfort | Simulation (evaluated using real-world data) |
| [84] 2020 | Demand Response | Q-learning combined with fuzzy reasoning | Cost | Simulation |
| [76] 2019 | Demand Response | Multi-Agent Reinforcement Learning (MARL) combined with Artificial Neural Networks (ANN) | Cost and Comfort | Simulation |
| [85] 2020 | Demand Response | Proximal Policy Optimization (PPO) | Cost | Simulation |
| [31] 2023 | Demand Response | Q-learning combined with fuzzy reasoning | Cost and Comfort | Simulation |
| [35] 2021 | Demand Response | DDPG with Dual Targeting Algorithm (DTA) | Cost and Comfort | Simulation |
| [86] 2020 | Demand Response | DQN | Cost | Simulation |
| [87] 2022 | Demand Response | Primal-Dual Deep Deterministic Policy Gradient (PD-DDPG) | Cost | Simulation |
| [88] 2022 | Demand Response | Actor-Critic using Kronecker-Factored Trust Region (ACKTR) | Cost and Comfort | Simulation (evaluated using real-world data) |
| [89] 2021 | Demand Response | DRL | Cost and Comfort | Simulation |
| [90] 2020 | Demand Response | DQN and Double Deep Q-learning (DDQN) | Cost and Comfort | Simulation (validated using a real-world database combined with a household energy storage model) |
| [91] 2021 | Demand Response | Q-learning | Cost | Simulation |
| [92] 2024 | Demand Response | DQN | Cost and Comfort | Simulation |
| [74] 2021 | Demand Response | Q-learning | Cost | Simulation |
| [93] 2022 | Demand Response | DQN | Cost and Comfort | Simulation |
| [6] 2024 | Scheduling | DQN, Advantage Actor-Critic (A2C), and PPO | Cost | Simulation |
| [94] 2022 | Scheduling | Q-learning | Cost and Comfort | Simulation |
| [95] 2023 | Scheduling | Meta-Reinforcement Learning (Meta-RL) with Long Short-Term Memory (LSTM) | Cost | Simulation (using practical data from Australia’s electricity network) |
| [96] 2022 | Scheduling | DQN, DDPG, and Twin Delayed Deep Deterministic Policy Gradient (TD3) | Cost | Simulation |
| [97] 2024 | Scheduling | PPO | Cost | Simulation (using real-world datasets) |
| [98] 2023 | Scheduling | DQN | Cost and Comfort | Real (using real-time data from a test bench with household devices) |
| [99] 2023 | Scheduling | PPO | Cost and Comfort | Simulation (based on real-world data) |
| [100] 2020 | Scheduling | Multi-Agent Deep Deterministic Policy Gradient (MADDPG) | Cost | Simulation |
| [101] 2021 | Scheduling | Q-learning | Cost and Comfort | Simulation |
| [102] 2022 | Scheduling | DDPG | Cost and Comfort | Simulation |
| [103] 2022 | Scheduling | Q-learning | Cost and Comfort | Simulation |
| [3] 2020 | Scheduling | Q-learning | Cost and Comfort | Simulation |
| [104] 2023 | RES + Storage | Multi-agent DRL based on Trust Region Policy Optimization (TRPO) | Cost and Comfort | Simulation (using real-world data from the Australian National Electricity Market and PV profiles) |
| [105] 2022 | RES + Storage | DDPG | Cost and Comfort | Simulation |
| [106] 2024 | RES + Storage | Soft Actor-Critic (SAC) | Cost | Simulation |
| [107] 2019 | RES + Storage | Q-learning | Cost and Comfort | Simulation |
| [108] 2022 | RES + Storage | Q-learning | Cost | Simulation |
| [109] 2023 | RES + Storage | PPO with LSTM networks | Cost | Simulation |
| [110] 2024 | RES + Storage | DRL (DDPG and PPO) | Cost | Simulation |
| [111] 2024 | RES + Storage | Actor-Critic-based RL with a distributional critic network | Cost | Simulation |

| Opportunity | Home Automation | Building Automation |
|---|---|---|
| Demand Response and Load Shifting | RL shifts energy-intensive activities to off-peak hours based on dynamic pricing or renewable energy availability [32]. Methods such as PPO and A2C optimize the timing of energy use in home devices [116,117]. | RL enables buildings to participate in demand response programs by shifting large loads (e.g., elevators, HVAC) to off-peak periods or to times of high renewable generation [118]. The larger scale calls for more complex energy-balancing strategies [30,119]. |
| Integration with Renewable Energy | RL can optimize the use of rooftop solar panels and home batteries by learning when to store energy and when to sell it back to the grid. A key opportunity lies in coordinating solar generation with storage for maximum efficiency [107]. | RL manages larger-scale renewable energy systems (e.g., building-integrated PV, wind turbines), optimizing when to use, store, or sell energy to the grid [120,121]. RL models also handle interactions with smart grids and microgrids [122]. |
| Energy Storage Management | RL optimizes home battery usage by learning when to store solar energy and when to discharge it during peak demand periods [89,106,123]. Future opportunities include real-time adaptation to energy prices and household consumption patterns [124,125]. | Large buildings with energy storage systems require RL to balance stored energy against grid demand, renewable generation, and internal consumption [115,126]. RL agents coordinate multiple storage units and energy systems [118,121,127]. |
| Smart Lighting and Occupancy-Based Control | RL-based lighting systems learn from occupancy sensors and adjust lighting schedules to save energy while maintaining comfort. Personalized lighting control based on user habits is a key development area [128,129]. | Adaptive RL lighting control in large buildings reduces energy waste by adjusting lighting zone by zone according to occupancy [130]. Deep Q-learning has been applied to energy-efficient lighting control in commercial spaces [131]. |
| Scalability and Complexity | Home automation systems involve fewer devices and simpler control structures, which makes RL models easier to deploy and faster to optimize. Future work will focus on personalization and on adapting RL to individual preferences [3,132]. | Building automation systems are more complex and require multi-agent RL to handle diverse, multi-zone environments [133]. Scaling RL models to multi-objective optimization across large buildings remains an open research challenge [40]. |
| Integration with Smart Grids and IoT | IoT devices in smart homes provide real-time data that RL systems use for energy optimization and appliance control [134]. RL agents can integrate with home microgrids, managing energy flows between renewable sources, storage, and consumption [19,107]. | In large buildings, RL facilitates smart-grid participation by managing energy exchange, load balancing, and interactions with external energy markets [122,124]. Enhanced IoT connectivity improves RL performance in coordinating building subsystems [135,136]. |
| Renewable Energy Prosumers | Homes with solar panels and energy storage can act as “prosumers,” with RL optimizing generation, consumption, and the sale of surplus energy to the grid [70,71,72]. | Buildings with integrated renewable systems participate as prosumers in energy markets, and RL manages each building’s contribution to local energy grids and microgrids [28,137]. |
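The energy-storage opportunity in the table above (learning when to store energy and when to discharge it during peak periods) can be illustrated with a minimal sketch of tabular Q-learning for a single home battery under a time-of-use tariff. Every specific here is a hypothetical assumption chosen for illustration, not a setup taken from any cited study: the three-level tariff, a constant 1 kWh hourly load, a 4 kWh battery moved in 1 kWh steps, no export to the grid, and a reward equal to the negative hourly grid cost. The names `step`, `train`, and `greedy_day` are likewise illustrative.

```python
import random

# Hypothetical time-of-use tariff (currency units per kWh), indexed by hour 0..23:
# 0.10 overnight (23:00-07:00), 0.30 in the evening peak (17:00-21:00), 0.20 otherwise.
PRICES = [0.10 if h < 7 or h == 23 else 0.30 if 17 <= h < 21 else 0.20 for h in range(24)]
LOAD_KWH = 1.0        # assumed constant household demand per hour
CAPACITY_KWH = 4      # assumed battery capacity, modelled in 1 kWh steps
ACTIONS = (-1, 0, 1)  # discharge 1 kWh, stay idle, charge 1 kWh


def step(hour, soc, action):
    """Apply one action; return (next state of charge, reward = -grid cost this hour)."""
    next_soc = min(max(soc + action, 0), CAPACITY_KWH)
    moved = next_soc - soc                 # energy actually charged (+) or discharged (-)
    grid_kwh = max(LOAD_KWH + moved, 0.0)  # energy bought from the grid (no export modelled)
    return next_soc, -PRICES[hour] * grid_kwh


def train(episodes=10_000, alpha=0.1, epsilon=0.1, seed=0):
    """Tabular Q-learning over states (hour, state of charge) with one-day episodes."""
    rng = random.Random(seed)
    q = {(h, s, a): 0.0 for h in range(24) for s in range(CAPACITY_KWH + 1) for a in ACTIONS}
    for _ in range(episodes):
        soc = 0  # each simulated day starts with an empty battery
        for hour in range(24):
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                action = max(ACTIONS, key=lambda a: q[(hour, soc, a)])  # exploit
            next_soc, reward = step(hour, soc, action)
            # Undiscounted finite horizon: the last hour of the day has no future value.
            future = 0.0 if hour == 23 else max(q[(hour + 1, next_soc, a)] for a in ACTIONS)
            q[(hour, soc, action)] += alpha * (reward + future - q[(hour, soc, action)])
            soc = next_soc
    return q


def greedy_day(q):
    """Roll out the greedy policy for one day; return (total grid cost, hourly actions)."""
    soc, cost, plan = 0, 0.0, []
    for hour in range(24):
        action = max(ACTIONS, key=lambda a: q[(hour, soc, a)])
        soc, reward = step(hour, soc, action)
        cost -= reward
        plan.append(action)
    return cost, plan


if __name__ == "__main__":
    q_table = train()
    day_cost, day_plan = greedy_day(q_table)
    print(f"no battery: {sum(PRICES) * LOAD_KWH:.2f}, learned policy: {day_cost:.2f}")
    print("hourly actions:", day_plan)
```

Under these assumptions a day without the battery costs 4.40, and no schedule can cost less than 3.60 (charge 4 kWh overnight, discharge it during the evening peak), so the learned greedy plan can be checked against that bound. Including the hour in the state keeps the problem Markovian only because the tariff and load are fixed; with dynamic prices or variable demand, those signals would have to enter the state, which is where the DRL methods listed earlier replace the table with a function approximator.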

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).