1. Introduction

Digital twins are rapidly transforming various sectors, offering a powerful means to create digital replicas of real-world objects and systems. In their broadest sense, digital twins can be seen as virtual representations of physical systems that mirror their real-world counterparts. These representations are kept up to date through a continuous flow of information between the physical and digital realms. The concept of digital twins extends beyond a simple one-to-one mapping of physical attributes, encompassing the system’s environment, processes, and their interconnections.

Digital twins are finding applications across a wide range of industries and research fields, demonstrating their versatility in representing and managing complex real-world systems. While initially prominent in industrial settings for testing and simulating the behaviour of machinery and devices, digital twin technology has expanded into diverse sectors. For instance, the European Commission has proposed the ambitious “Destination Earth” initiative [1], which aims to create a comprehensive environmental model of the Earth for forecasting and mitigating the impacts of climate change. In architecture and urban planning, digital twins, particularly within the framework of Building Information Modelling (BIM), are used for documenting historical structures, designing new buildings, and supporting large-scale urban planning projects. The increasing use of sensors and the Internet of Things (IoT) has further broadened the applications of digital twins, enabling real-time monitoring and data analysis in fields such as cultural heritage preservation and restoration.

In the ever-evolving landscape of digital innovation, data are the lifeblood of every digital twin. As faithful digital replicas of physical systems, digital twins are not merely static representations but dynamic entities that depend on the continuous flow and integration of data. The complexity and diversity of these data call for a robust management framework, and it is here that ontologies emerge as fundamental tools. Designed for the rational and coherent organization of data, ontologies provide a suitable structure for managing the intricate web of information that constitutes a digital twin: they enable the classification, interlinking, and querying of data, thereby facilitating a deeper understanding and more effective use of the information.

Recent advances in artificial intelligence add a new dimension to this digital ecosystem. AI technologies offer advanced tools for data analysis, pattern recognition, and predictive modelling, enhancing the capabilities of both digital twins and ontologies. The synergy between digital twins, ontologies, and AI can constitute a new pillar of modern data management, in which digital twins provide the virtual canvas, ontologies the structured framework, and AI the analytical capabilities, together enabling new insights and better-informed decision-making. As digital twins evolve, ontologies keep the data organized and accessible, while AI leverages these data to drive innovation and efficiency. Together, these elements form the foundation upon which future data-driven systems can be built, promising a new era of technological advancement and human understanding.

In this paper, we explore the role that digital twins are assuming in the context of cultural heritage, with particular emphasis on the critical aspect of data management. We offer an overview of the Heritage Digital Twin model that we are defining and examine the relationship between data, ontologies, and artificial intelligence. We highlight how these elements form the cornerstones of future data-driven cultural digital twin systems and how, together, they are advancing our ability to manage, preserve, and understand the knowledge of our shared cultural legacy.

2. Digital Twins and Cultural Heritage

Digital twins have become increasingly relevant in various research and application domains, reflecting the growing complexity of their role as digital counterparts of real-world entities and phenomena. As explored in many reviews across disciplines [2,3], the digital twin concept not only serves to capture the intricacies of digitized entities in both industrial and scientific settings but also reflects a broader philosophical engagement with how we represent and interact with reality. The term is often used loosely, sometimes as a generic descriptor for any digital replica of real-world objects, and at other times to denote a more rigorous, structured information system that interfaces with the physical world. The Destination Earth initiative [1], promoted by the European Commission and aimed at creating a complete and accurate digital twin of the planet and its environment, demonstrates that the concepts and terms of this technology are resonating even within high-profile regulatory bodies.

Originally, digital twins served the industrial sector’s needs for production optimization, performance enhancement, product innovation, and predictive maintenance. Over time, however, the potential of this technology has expanded into various fields, such as environmental engineering and urban planning. Notable examples include the digital twin of Zurich, aimed at improving urban planning [4], and the digital twin of Singapore, which integrates data from multiple sources, such as sensors, satellites, and drones, to create a virtual replica of the city for urban growth management and environmental monitoring [5]. In architecture and construction, digital twins are transforming design and building processes by enabling accurate simulations of how structures interact with their surrounding environment before they are physically constructed. This allows design flaws to be detected early, reducing the need for later maintenance interventions. Furthermore, scientific research has begun to harness digital twins to create virtual laboratories, which simulate complex natural phenomena and deliver reliable results in shorter timeframes, minimizing the risks and costs associated with real-world experimentation. NASA, for instance, has long embraced digital twin technology [6] and has recently applied it to its Mars 2020 mission, for which digital twins of the Perseverance rover and the Ingenuity helicopter were created to simulate their physical counterparts in real time, allowing remote control and monitoring of their interaction with the Martian environment.

In light of these numerous and significant examples, the time seems ripe for applying this transformative technology to the realm of cultural heritage.

2.1. Defining Digital Twins for Cultural Heritage

The definition of what a digital twin actually is varies depending on its intended use and the context in which it is applied. A recent survey by Semeraro et al. [3] lists about thirty different definitions of digital twin, including the well-known (and in some ways foundational) one formulated in 2014 by Grieves [7]. When it comes to cultural heritage, however, a deeper methodological reflection is required to define a model capable of ensuring an accurate and faithful representation of real-world cultural entities. In many other fields, digital twins are created either before the real object exists, as in predictive industrial production or urban planning, or simultaneously with it, as in the case of NASA’s space missions. In contrast, cultural heritage objects are typically complex entities that have existed long before their digital reproductions, with lifespans that extend across multiple temporal and spatial dimensions. Defining the nature and identity of cultural objects is therefore a necessary precondition for formulating an appropriate paradigm for their digital representation. A “Heritage Digital Twin”, as we have defined it, must certainly be understood as a “duplicate” of a real cultural entity. However, it would be overly simplistic to reduce this duplication to physical and material aspects alone, overlooking the intricate network of information that constitutes the essential nature of a cultural object. Cultural entities, moreover, may be immaterial, as in the case of events and cultural manifestations such as the Palio of Siena [8,9,10] or the Calcio Storico in Florence [11,12]. These intangible heritage assets, which exist beyond any physical form, persist through documentation, serving as testimony to past events or as formulations for future activities.

While initial approaches to the digital twin in cultural heritage, especially in archaeology, were closely tied to the notion of 3D visualizations as mere “digital replicas”, this has evolved significantly in recent years. Early visual reconstructions, dating back to the late 20th century, were motivated by the need to reproduce the imagined pristine appearance of ruins and artifacts, replacing traditional methods such as drawings and physical casts. This approach rapidly expanded to include the documentation of artifacts, monuments, and sites, leading to an extensive production of 3D models of varying quality and detail. As the field matured, it became clear that shape alone was insufficient for advanced research or practical applications. The 3D-COFORM project (2008-2012) [13] had the merit of demonstrating that comprehensive documentation linked to 3D models is essential for conservation and restoration purposes, and that information about materials, construction methods, and interventions can be embedded directly into the 3D models. This principle is further extended in Heritage BIM (HBIM) [14], a specialized adaptation of Building Information Modelling for historic buildings, in which information is structured according to a standard data model, the Industry Foundation Classes (IFC) [15], adapted to integrate concepts unique to cultural heritage. Yet both the 3D-based approach and the HBIM methodology have inherent limitations: they treat the 3D model as the root of all documentation, making any additional data a mere appendage and impeding large-scale comparative analyses across different assets.

A more robust solution lies in a semantic approach, where information is organized in classes and interlinked through meaningful relationships, enabling efficient querying across various heritage objects. For example, by linking materials (such as wood) to heritage assets via properties (such as “is made of”), one can efficiently retrieve all assets constructed using wood. The core of a Cultural Digital Twin, then, is not merely its 3D visualization but the aggregation of various forms of documentation: literary sources, photographs, archival material, scientific analyses, and more. 3D models can indeed be powerful and versatile tools for rendering the tangible aspects of cultural heritage (such as the size, shape, colour, and texture of objects and monuments), but they are far less suited to representing the historical narratives, artistic values, social relevance, and other intangible elements that are crucial to fully understanding cultural objects. These aspects require expression through more advanced semantic modelling frameworks. This is where ontologies come into play, allowing for the structured organization of knowledge in a way that goes beyond simple shape or geometry.
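To make the wood example concrete, the following minimal Python sketch stores a handful of statements as subject-predicate-object triples and retrieves every asset linked to a material through an “is made of” relationship. The asset names and the plain-string predicate are illustrative assumptions; a real system would rely on an RDF store and on the ontology’s own property identifiers.

```python
from typing import NamedTuple

class Triple(NamedTuple):
    subject: str
    predicate: str
    obj: str

# Hypothetical toy graph: asset and material names are illustrative only.
GRAPH = {
    Triple("choir_stalls", "is made of", "wood"),
    Triple("choir_stalls", "is located in", "cathedral"),
    Triple("painted_panel", "is made of", "wood"),
    Triple("painted_panel", "is made of", "gesso"),
    Triple("marble_statue", "is made of", "marble"),
}

def assets_made_of(graph, material):
    """Return, in sorted order, every subject linked to `material` via 'is made of'."""
    return sorted({t.subject for t in graph
                   if t.predicate == "is made of" and t.obj == material})

print(assets_made_of(GRAPH, "wood"))  # ['choir_stalls', 'painted_panel']
```

Because the material is a node in the graph rather than a note attached to a single 3D model, the same query works unchanged across any number of assets.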

2.2. Heritage Digital Twins

In light of these considerations, we defined [16,17,18] the concept of Heritage Digital Twin (HDT) as the complex of all available digital information concerning a real-world heritage asset, whether movable (e.g., cultural objects), immovable (e.g., monuments and sites), or intangible (e.g., cultural manifestations or traditions). Unlike 3D-centric systems, this paradigm encompasses all available digital cultural information about a heritage asset, including visual representations, reports, documents, scientific analyses, conservation data, and historical research. This perspective emphasises the interconnectedness of the various data and moves beyond the limitations of 3D models as the primary source of information.

Moreover, the implementation of digital twins relies on several interconnected components that work together to ensure the effective monitoring and preservation of cultural heritage assets, making digital twins “reactive”. These components include sensors, i.e., physical devices strategically positioned on or near the heritage asset to measure specific physical quantities such as temperature, humidity, or vibration, and deciders, which compare incoming sensor data against established thresholds or rules to determine whether any action is required. Further components are the activators, which carry out real-world actions based on the decisions made by the deciders. The digital twin thus creates a reactive loop that continuously monitors and responds to changing conditions in support of the preservation of cultural heritage, and whose functioning relies heavily on aggregated data.
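The sensor, decider, and activator loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the RHDTO specification: the `Reading` and `Rule` structures, the action names, and the threshold values are all hypothetical, and the activator is stood in for by a callback that simply logs what it would do.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class Reading:
    """A value measured by a sensor (hypothetical structure)."""
    sensor_id: str
    quantity: str
    value: float

@dataclass
class Rule:
    """Trigger `action` when `quantity` goes above (or below) `threshold`."""
    quantity: str
    threshold: float
    above: bool
    action: str

def decide(reading: Reading, rules: List[Rule]) -> List[str]:
    """Decider: compare one reading against the rules and return the actions required."""
    actions = []
    for rule in rules:
        if rule.quantity != reading.quantity:
            continue
        crossed = reading.value > rule.threshold if rule.above else reading.value < rule.threshold
        if crossed:
            actions.append(rule.action)
    return actions

def run_loop(readings: List[Reading], rules: List[Rule],
             activate: Callable[[str, Reading], None]) -> None:
    """Reactive loop: each reading goes to the decider; each resulting action goes to an activator."""
    for reading in readings:
        for action in decide(reading, rules):
            activate(action, reading)

# Illustrative thresholds only, not conservation guidelines.
RULES = [
    Rule("relative_humidity", 60.0, True, "start_dehumidifier"),
    Rule("relative_humidity", 40.0, False, "start_humidifier"),
    Rule("vibration_mm_s", 5.0, True, "alert_conservator"),
]

log = []
readings = [
    Reading("rh-01", "relative_humidity", 65.2),
    Reading("rh-01", "relative_humidity", 50.0),
    Reading("vib-02", "vibration_mm_s", 7.1),
]
run_loop(readings, RULES, lambda action, r: log.append((action, r.sensor_id)))
print(log)  # [('start_dehumidifier', 'rh-01'), ('alert_conservator', 'vib-02')]
```

Keeping the rules as data rather than hard-coded conditions means they can themselves be documented in the knowledge graph, which is the kind of contextual record the following sections attribute to ontologies.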

Data therefore constitute the backbone of the digital twin system and serve as a comprehensive repository of knowledge about a heritage asset, supporting informed decision-making in all aspects of its management. From this perspective, our digital twin paradigm becomes instrumental in advancing data management and prescribes the use of efficient data organization tools, such as ontologies, to improve the effectiveness of heritage documentation.

2.3. Digital Twins and Ontologies

Over time, ontologies have proven to be extremely powerful tools for dealing with intricate and multifaceted data such as those produced within the cultural heritage domain. They provide a structured, formal framework that enables the organization, interlinking, and querying of diverse data types, thereby facilitating a deeper understanding and more effective use of complex information. Additionally, ontologies provide a formal description of all digital twin operations, preserving the context in which its components operate and creating a sort of real-time “digital memory” that makes it possible, at any time, to retrieve, understand, and reuse all the information completely and accurately. The use of standardized vocabularies further ensures the consistency and uniformity of data, which is essential for interoperability among different systems and platforms. Thanks to these characteristics and their ability to orchestrate the use of aggregated documentation effectively, ontologies can serve as the “engine” of digital twins throughout every phase of their operational life [19].

Ontologies also offer benefits for the long-term preservation of digital twin information, particularly in terms of data durability and context preservation [20]. Ontological data can be stored permanently in plain text files, a highly durable format that can be easily read and interpreted by both humans and machines, and thus an ideal means of preserving over time both the information and the context in which digital twins operated.
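The point about plain text can be illustrated with a minimal Python sketch that writes a few statements to a `.nt` file in a simplified subset of the W3C N-Triples syntax (IRIs and plain string literals only; no blank nodes, language tags, or datatypes) and reads them back unchanged. The namespace `http://example.org/hdt/` and the `has_digital_twin` and `note` predicates are hypothetical; only the `rdfs:label` IRI is a real vocabulary term. A production system would use an established RDF library rather than this hand-written serializer.

```python
import re
import tempfile
from dataclasses import dataclass
from pathlib import Path

@dataclass(frozen=True)
class IRI:
    value: str

@dataclass(frozen=True)
class Literal:
    value: str

def _fmt(term):
    """Render one term in N-Triples syntax (IRIs and plain string literals only)."""
    if isinstance(term, IRI):
        return f"<{term.value}>"
    escaped = term.value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")
    return f'"{escaped}"'

def to_ntriples(triples):
    """One statement per line, each terminated by ' .'."""
    return "".join(f"{_fmt(s)} {_fmt(p)} {_fmt(o)} .\n" for s, p, o in triples)

_LINE = re.compile(r'^<([^>]*)> <([^>]*)> (?:<([^>]*)>|"((?:[^"\\]|\\.)*)") \.$')

def _unescape(text):
    """Undo the escapes produced by _fmt."""
    return re.sub(r"\\(.)", lambda m: "\n" if m.group(1) == "n" else m.group(1), text)

def from_ntriples(text):
    triples = []
    for line in text.splitlines():
        m = _LINE.match(line)
        if m is None:
            raise ValueError(f"unsupported line: {line!r}")
        s, p, o_iri, o_lit = m.groups()
        obj = IRI(o_iri) if o_iri is not None else Literal(_unescape(o_lit))
        triples.append((IRI(s), IRI(p), obj))
    return triples

EX = "http://example.org/hdt/"  # hypothetical namespace
RDFS_LABEL = "http://www.w3.org/2000/01/rdf-schema#label"
TRIPLES = [
    (IRI(EX + "palio_of_siena"), IRI(RDFS_LABEL), Literal("Palio di Siena")),
    (IRI(EX + "palio_of_siena"), IRI(EX + "has_digital_twin"), IRI(EX + "palio_hdt")),
    (IRI(EX + "palio_hdt"), IRI(EX + "note"), Literal('A "hypothetical" note\nspanning two lines')),
]

# Write to a plain UTF-8 text file and read it back.
with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "palio_hdt.nt"
    path.write_text(to_ntriples(TRIPLES), encoding="utf-8")
    restored = from_ntriples(path.read_text(encoding="utf-8"))

print(restored == TRIPLES)  # True
```

Each line of the resulting file is a self-contained statement that can be inspected with any text editor, which is what makes the format attractive for long-term preservation.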

We formalized all the above concepts and definitions in two seminal papers [16,17], where these considerations are explored in depth. In the same works, we also proposed some solutions that seem particularly suited to the scenario we have outlined. In particular, we introduced the Reactive Heritage Digital Twin Ontology (RHDTO) [18], a compatible extension of the widely used CIDOC CRM conceptual reference model [21] (henceforth “the CRM”), which provides a standardized framework for organizing and interlinking diverse heritage data within digital twins and enables interoperability with other CRM-compliant systems. The first release of RHDTO was met with considerable enthusiasm from the scientific community, and the paper in which it is described [16] quickly gained significant popularity, winning the Data 2022 Best Paper Award. Notably, digital twins will also constitute the conceptual foundation of ECHOES, the recently started initiative launched by the European Commission to implement the future European Collaborative Cloud for Cultural Heritage (ECCCH) [22]. The RHDTO is also the backbone of the forthcoming large-scale ARTEMIS project, due to start in 2025, which will develop virtual restoration tools. The next section of this paper provides a general overview of this new ontology and its features.

3. The RHDTO Model

The Reactive Heritage Digital Twin Ontology (RHDTO) we defined [18] is a model developed to manage, and ensure interoperability among, the vast array of data that constitute the backbone of the heritage digital twin. Its development is grounded in the experience gained from major data aggregation and integration projects, such as ARIADNE (harmonization of archaeological data) [23,24,25] and 4CH (study and preservation of cultural heritage) [26]. The RHDTO is constructed as an extension of the CRM, a reference model for cultural heritage data, and proposes a series of entities designed to express all available cultural and scientific documentation in a standardized format. The classes we introduced, such as HC4 Intangible Aspect, HC5 Digital Representation, and HC9 Heritage Activity, are employed to model cultural entities and their documentation semantically, while purpose-designed properties express the complex relationships with the events, people, places, and actions that constitute their history and cultural value.

The RHDTO represents an innovative solution for managing and interconnecting the broad spectrum of data that form the informational core of the digital twin of a heritage asset. The methodological choice to design it as an extension of the CRM stems from the novelty of the cultural digital twin concept and its logical structure. Moreover, the CRM is an ISO standard widely used for cultural heritage documentation, and compatibility with it guarantees broad interoperability with existing documentation. The ontology defines ex novo the entities necessary to describe the specific elements of this domain and to model them appropriately, while remaining consistent with the primary model (also called CRMbase to distinguish it from its extensions) and with the extensions used to model entities such as times, places, events, agents, and physical objects, and their mutual correlations. This synergy ensures a high degree of interoperability in the documentation and analysis of cultural heritage, as it allows the RHDTO structure to be aligned with those used in other cultural heritage research domains (such as archaeology, art history, and architecture). For instance, it enables the identification and classification of architectural elements according to specific historical periods, styles, construction techniques, and materials employed, and the definition of the diachronic destinations and uses of specific spaces or elements of a particular building.

Table 1 lists the abbreviations used for referencing the CRM model and its extensions in this paper.

3.1. RHDTO General Classes

The RHDTO model is primarily conceived as a tool to capture the dual nature of cultural heritage (both tangible and intangible) and to provide, through specific classes and properties, a mechanism for dynamically documenting and analysing their mutual relationships. It is also designed to model their digital counterparts and the mechanisms that render them interoperable as interconnected elements of the cultural digital twin. The classes and properties of this ontology are identified by a code formed by the letters HC for classes and HP for properties, followed by a number.

The main class of the model, HC1 Heritage Entity, represents real-world entities relevant for their contribution to society, knowledge, and culture. It is a general-level class that includes both material and immaterial entities, which are modelled through its two subclasses, HC3 Tangible Aspect and HC4 Intangible Aspect. The latter is specifically intended for modelling historical and cultural events, traditions, cults, and practices typical of intangible heritage. The class HC2 Heritage Digital Twin represents cultural digital twins as the network of information that characterizes them, effectively configuring them as informative digital replicas of real-world entities.
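The class hierarchy just described can be illustrated with a minimal Python sketch of subclass inference: an instance asserted as HC4 Intangible Aspect is also retrieved as an HC1 Heritage Entity, while a digital twin (HC2) remains a separate kind of entity. Only the HC3/HC4 to HC1 links stated above are encoded; the instance identifiers are hypothetical, and a production system would delegate this reasoning to an RDFS/OWL reasoner.

```python
# Subclass links stated in the text: HC3 and HC4 are subclasses of HC1.
SUBCLASS_OF = {
    "HC3 Tangible Aspect": {"HC1 Heritage Entity"},
    "HC4 Intangible Aspect": {"HC1 Heritage Entity"},
}

# Hypothetical instances and their asserted classes.
INSTANCE_OF = {
    "piazza_del_campo": "HC3 Tangible Aspect",
    "palio_tradition": "HC4 Intangible Aspect",
    "palio_hdt": "HC2 Heritage Digital Twin",
}

def superclasses(cls):
    """Return `cls` together with all its direct and indirect superclasses."""
    seen, stack = {cls}, [cls]
    while stack:
        for parent in SUBCLASS_OF.get(stack.pop(), ()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen

def instances_of(cls):
    """Instances whose asserted class is `cls` or one of its subclasses."""
    return sorted(i for i, c in INSTANCE_OF.items() if cls in superclasses(c))

print(instances_of("HC1 Heritage Entity"))        # ['palio_tradition', 'piazza_del_campo']
print(instances_of("HC2 Heritage Digital Twin"))  # ['palio_hdt']
```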

The ontology also provides classes for modelling digital iconographic and multimedia representations (HC5 Digital Representation, HC7 Digital Visual Object, HC8 3D Model) used as additional components of heritage digital twins. A specific class (HC6 Digital Heritage Document) expresses elements of the digital documentation that contribute to defining the identity and history of the described cultural objects. A dedicated class (HC9 Heritage Activity) represents activities related to the study, scientific investigation, and digital reproduction of cultural entities. The same class can be used for any other activity relevant to the creation and management of the digital twin.

To model stories, i.e., fragments of knowledge derived directly from documentation or oral tradition and presented in narrative form to enrich the intangible heritage of the described cultural objects, we used some classes defined in the Narrative Ontology (NOnt) [16,17,30], an ontological model developed within the MINGEI project [31] that is also compatible with the CRM. The Narrative class of this ontology closely matches the concept of story we have formulated, while the Narration class is used to render storytelling, i.e., the modalities in which the facts constituting a story are presented and shared.

The RHDTO model ensures that instances of all the classes used can always be mutually correlated. This is achieved through properties inherited from the CRM, as well as through new properties specifically designed to express the complex links between cultural entities, their history, related documentation, and their digital representation. Among the new properties introduced are HP1 has digital twin, which relates cultural entities (HC1) to their digital twins (HC2); HP5 has intangible aspect, which associates material (HC3) and immaterial (HC4) aspects of cultural entities; HP3 has story, which links cultural entities (HC1) to the stories (Narrative) in which they are mentioned; and HP4 is narrated through, which indicates the storytelling modalities (Narration) through which a story (Narrative) is told. This enables the creation of detailed and extensive knowledge graphs capable of representing every aspect of the cultural entity for which the digital twin is built, and compatible with any other archive constructed according to the same criteria.
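How these properties chain together can be sketched as a small path query in Python: starting from a physical place, one follows HP5 to its intangible aspect, HP3 to the stories about it, and HP4 to the ways those stories are told. The instance identifiers are hypothetical, and property labels are plain strings rather than the ontology’s actual identifiers; a real deployment would express the same traversal as a SPARQL property path over an RDF store.

```python
# Hypothetical instance graph using the four RHDTO properties named in the text.
EDGES = [
    ("piazza_del_campo", "HP5 has intangible aspect", "palio_tradition"),
    ("palio_tradition", "HP1 has digital twin", "palio_hdt"),
    ("palio_tradition", "HP3 has story", "story_1"),
    ("story_1", "HP4 is narrated through", "narration_text_1"),
    ("story_1", "HP4 is narrated through", "narration_video_1"),
]

# Index outgoing edges by (subject, property) for quick lookup.
INDEX = {}
for s, p, o in EDGES:
    INDEX.setdefault((s, p), []).append(o)

def follow(start, *path):
    """Follow a chain of properties from `start`, returning every node reached."""
    frontier = [start]
    for prop in path:
        frontier = [o for node in frontier for o in INDEX.get((node, prop), [])]
    return frontier

print(follow("piazza_del_campo", "HP5 has intangible aspect",
             "HP3 has story", "HP4 is narrated through"))
# ['narration_text_1', 'narration_video_1']
```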

3.2. Reactive Aspects of Heritage Digital Twins

As mentioned in the previous section, the reactive part of the digital twin is represented by a network of sensors, deciders, actuators, and other devices capable of complex interactions with the real world. The main classes introduced in RHDTO to model these components are HC9 Sensor, a specialization of CRMdig:D8 Digital Device, and HC10 Decider, a subclass of the CRMpe:PE1 Service class of the Parthenos Entities Model, another CRM-compatible ontology. As we outline in the following sections of this paper, deciders are very peculiar components, since they are responsible for analysing input from a variety of sources and generating output instructions directed toward other components or human operators.

The actions performed by deciders are modelled as events through the HC14 Activation Event class. It is in these events that the decision-making process takes place, and decision-making is one of the areas in which artificial intelligence can bring the greatest benefits to the evolution of digital twins. Activators (HC11 Activator), in turn, are the components that execute actions based on the instructions received from deciders.

RHDTO also provides specific classes and properties to model aspects such as the placement of sensors on cultural objects or in their surrounding spaces (HP15 is positioned on), the software through which the sensors are operated (HP11 is operated by), the measurements they perform (HC13 Sensor Measurement), and the signals (HC12 Signal) generated by the sensors and sent to the digital twin (HP12 was transmitted to). Human actors responsible for ensuring the safety of cultural assets are represented with the CRM:E39 Actor class. Their inclusion underscores the importance of collaboration between the digital system and human operators in effectively managing risks.
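As a minimal illustration of how these reactive elements can be recorded and queried, the Python sketch below describes two sensors and one signal as triples and asks which digital twins received signals from sensors positioned on a given asset. Class and property labels follow the codes named above, but all instance identifiers are hypothetical, and the `generated_by` link between a signal and its sensor is an assumption made for this example, not an RHDTO property.

```python
# Hypothetical instance data. Class and property labels follow the codes named
# in the text; "generated_by" is an assumed link, not an RHDTO property.
TRIPLES = [
    ("rh_sensor_01", "type", "HC9 Sensor"),
    ("rh_sensor_01", "HP15 is positioned on", "fresco_wall_north"),
    ("rh_sensor_01", "HP11 is operated by", "logger_software_v2"),
    ("vib_sensor_02", "type", "HC9 Sensor"),
    ("vib_sensor_02", "HP15 is positioned on", "bell_tower"),
    ("signal_0001", "type", "HC12 Signal"),
    ("signal_0001", "generated_by", "rh_sensor_01"),
    ("signal_0001", "HP12 was transmitted to", "chapel_hdt"),
]

def subjects(prop, obj):
    """All subjects linked to `obj` through `prop`."""
    return sorted(s for s, p, o in TRIPLES if p == prop and o == obj)

def objects(subj, prop):
    """All objects reached from `subj` through `prop`."""
    return sorted(o for s, p, o in TRIPLES if s == subj and p == prop)

def twins_fed_by(asset):
    """Digital twins that received signals from sensors positioned on `asset`."""
    sensors = subjects("HP15 is positioned on", asset)
    signals = [sig for sensor in sensors for sig in subjects("generated_by", sensor)]
    return sorted({twin for sig in signals for twin in objects(sig, "HP12 was transmitted to")})

print(twins_fed_by("fresco_wall_north"))               # ['chapel_hdt']
print(objects("rh_sensor_01", "HP11 is operated by"))  # ['logger_software_v2']
```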

A detailed description of all the classes and properties of the RHDTO model, along with illustrative case studies that demonstrate its application, is provided in [18].

4. Artificial Intelligence and Cultural Heritage

The way in which groundbreaking technological advancements increasingly influence our daily lives is now evident to all. In the case of artificial intelligence, although it may be premature to speak of a revolution, this technology is clearly and rapidly becoming part of our world, even in sectors seemingly distant from the futuristic scenarios in which it is usually imagined, such as cultural heritage and museums. Whether completing an unfinished symphony by a legendary composer [32], reconstructing and understanding ancient texts written in extinct languages [33], recreating the appearance of lost environments [34,35], identifying archaeological sites from aerial or satellite images [35,36], or simply providing guidance on how to preserve [37] or restore [38] damaged artwork, new AI systems are proving to be powerful tools, enriching the arsenal of digital tools available to scholars in unprecedented ways.

In recent years, Europe has taken the lead in releasing a series of recommendations that demonstrate a significant commitment to regulating artificial intelligence in an ethical and responsible manner, including the first-ever legal framework on AI. The 2020 White Paper on Artificial Intelligence [39,40] laid the groundwork by outlining a balanced approach to promoting excellence and trust in AI, while the recent AI Act Regulation [41] places a strong emphasis on ethics, classifies AI systems by risk, imposes stringent requirements on high-risk systems, and prohibits uses of AI that violate fundamental rights. However, the European Commission seemed to underestimate the benefits that AI can bring to the cultural heritage sector. Scholars and other operators in the sector, by contrast, immediately recognized the importance of AI technologies at the European level and swiftly put them into action, developing innovative AI-based solutions to enrich and preserve cultural legacy [42,43], aware that this legacy is the living testament to the evolution of human civilization and not just a collection of artifacts and documents. The digital transformation of cultural heritage presents an unparalleled opportunity for AI to flourish as a research domain and to accelerate advanced access to this invaluable resource [44].

4.1. Artificial Intelligence for Processing Cultural Documentation

One of the fields in which artificial intelligence clearly excels is the analysis of cultural documentation, where it brings many advantages that significantly enhance the preservation, accessibility, and understanding of cultural heritage. For instance, AI technologies can automate the collection and analysis of vast amounts of data and streamline the digitization and archiving of cultural information, thereby reducing costs and time. Additionally, they can improve accessibility through automated translation and transcription, reducing the manual work involved in traditional documentation methods while ensuring a more comprehensive capture of content and meaning.

In our past activities, we made many attempts to replicate some of these processes, for instance by trying to extract knowledge from textual documentation using NLP and machine learning techniques [45,46,47]. However, these efforts were often limited by the complexity and variability of the data, as well as by the extensive manual annotation and preprocessing required to train the NLP tools. With the advent of recent AI technologies, this process has become significantly more efficient and effective, since current AI models can handle large volumes of textual data, automatically identifying and extracting relevant information without extensive manual intervention.

By processing vast amounts of unstructured data, AI can unveil relationships and contextual insights hidden within the textual tapestry, detecting, for instance, patterns that aid in understanding the historical conditions and transformations of cultural heritage entities. AI systems can identify connections and patterns that may elude human cognition, thereby catalysing the creation of new and unexpected knowledge. This capability goes beyond mere data processing and challenges our traditional notions of knowledge generation, suggesting that the pursuit of understanding, if approached and guided in the right way, can become a fruitful co-creative human-machine process in the near future. Human supervision nonetheless remains essential in all phases of this process, to guarantee the accuracy and relevance of the extracted knowledge and to guide interpretation in line with domain-specific expertise.

4.2. Artificial Intelligence and Ontologies

As is widely acknowledged, emerging AI technologies are not without challenges, particularly regarding data accuracy and the reliability of outcomes. The opaque nature of AI systems often makes it difficult to understand the reasoning behind their outputs, and therefore to judge whether those outputs can be trusted. This poses significant challenges in cultural heritage, where trust and accountability are paramount, since these issues may lead to the misrepresentation of cultural narratives and the distortion of historical facts. Careful verification and validation are thus essential to ensure the integrity and authenticity of the knowledge that is derived or created and eventually integrated into Heritage Digital Twins.

Ontologies can become essential tools for overcoming issues related to the transparency, interpretability, and accountability of information, and can serve as verifiable records of the data and decision-making criteria used by AI systems. Since ontologies excel at capturing domain-specific knowledge and causal relationships in a structured format, they can provide AI systems with unambiguous representations of the analysed and generated data and their context, in a “language” that allows them to “reason” formally and produce clean and contextually relevant outputs [48]. Semantic knowledge graphs, typically implemented through ontologies, therefore have the potential to help reduce the risk of “hallucinations”, i.e., the typical scenario in which AI systems generate unreliable or erroneous information not grounded in the actual data or context.
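One concrete, if limited, way in which an ontology can constrain AI output is schema validation: before a statement proposed by an AI extraction step is added to the knowledge graph, its property must exist and its subject and object must belong to the classes the property expects. The Python sketch below is a minimal illustration using the domain and range pairs given in Section 3.1 for HP1, HP3, HP4, and HP5 (with HC3 and HC4 as subclasses of HC1). The entity identifiers, the candidate statements, and the `HP99` property are hypothetical. Note that such a check only guarantees that a statement is well-formed with respect to the model; it cannot confirm that the statement is historically true, which still requires human verification.

```python
from typing import Dict, List, Optional, Set, Tuple

Triple = Tuple[str, str, str]

# Subclass links and (domain, range) pairs as described in Section 3.1.
SUBCLASS_OF: Dict[str, Set[str]] = {"HC3": {"HC1"}, "HC4": {"HC1"}}
SIGNATURES: Dict[str, Tuple[str, str]] = {
    "HP1 has digital twin": ("HC1", "HC2"),
    "HP5 has intangible aspect": ("HC3", "HC4"),
    "HP3 has story": ("HC1", "Narrative"),
    "HP4 is narrated through": ("Narrative", "Narration"),
}
# Hypothetical entities already in the knowledge graph, with their classes.
TYPES: Dict[str, str] = {
    "piazza_del_campo": "HC3", "palio_tradition": "HC4", "palio_hdt": "HC2",
    "story_1": "Narrative", "narration_1": "Narration",
}

def is_a(cls: str, target: str) -> bool:
    """True if `cls` equals `target` or is a (transitive) subclass of it."""
    stack, seen = [cls], set()
    while stack:
        c = stack.pop()
        if c == target:
            return True
        if c not in seen:
            seen.add(c)
            stack.extend(SUBCLASS_OF.get(c, ()))
    return False

def violation(triple: Triple) -> Optional[str]:
    """Return why a candidate statement conflicts with the model, or None if it fits."""
    s, p, o = triple
    if p not in SIGNATURES:
        return "unknown property"
    if s not in TYPES or o not in TYPES:
        return "unknown entity"
    domain, rng = SIGNATURES[p]
    if not is_a(TYPES[s], domain):
        return "domain violation"
    if not is_a(TYPES[o], rng):
        return "range violation"
    return None

# Candidate statements as an AI extraction step might propose them (hypothetical).
candidates: List[Triple] = [
    ("palio_tradition", "HP3 has story", "story_1"),
    ("story_1", "HP1 has digital twin", "palio_hdt"),
    ("piazza_del_campo", "HP5 has intangible aspect", "story_1"),
    ("palio_tradition", "HP99 invented property", "story_1"),
]
for c in candidates:
    print(c, "->", violation(c) or "accepted")
```

Rejected statements, together with the reason for rejection, can themselves be logged, giving the kind of verifiable record of AI-produced content discussed above.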

For the same reasons, ontologies can also facilitate the development of XAI (Explainable Artificial Intelligence) systems, an evolution now considered necessary for making AI decisions and processes understandable and transparent, enabling users to comprehend how models work [49]. The integration of ontological knowledge not only promotes the development of more explainable and trustworthy tools, but also aligns these technologies with ethical standards and human expectations, contributing to a responsible advancement of AI [50,51].

4.3. Artificial Intelligence and Heritage Digital Twins

AI and ontologies provide even greater advantages when employed together to implement the Heritage Digital Twin model, a system that operates on top of semantically rich knowledge graphs. Particularly with regard to the documentation and preservation of historical sites, artifacts, and intangible heritage, the combination of these technologies significantly enhances the functionality of digital twins, transforming them from static models into dynamic systems capable of enriching, simulating, predicting, and optimizing real-world processes, often in real time and in completely new ways.

Some recent attempts to employ AI in digital twins have produced very encouraging results in different research fields, highlighting several present benefits and leaving room for significant future developments [52,53]. In cultural heritage, where traditional digital twins may focus on documenting and preserving physical objects or sites, AI-powered components can expand computational features by offering dynamic insights through continuous learning and adaptation. For example, an AI relying on the rich knowledge bases of Heritage Digital Twins can hypothesize and simulate how an ancient building may have been used, predict the long-term effects of restoration techniques on delicate artifacts, or even assist in recovering lost historical knowledge hidden in ancient documents. AI can also assist digital twins in the continuous monitoring and analysis of data coming from sensors and other external digital sources, helping to detect changes in the condition of heritage objects, whether due to environmental factors, deterioration, or human intervention. Additionally, AI-driven Heritage Digital Twins can speed up predictive analysis and increase its efficiency, allowing conservators and cultural heritage professionals to foresee potential risks such as environmental degradation or structural damage before they actually occur. Such a predictive capability would be essential for safeguarding assets that are sensitive to climate change, pollution, and other environmental changes over time. Moreover, AI can assist in simulating various conservation strategies within the digital twin, creating virtual environments in which experts can plan and test interventions without endangering the original artifact or site.

The structured nature of the Heritage Digital Twin knowledge graph, implemented through ontologies, can further help ensure that all AI-driven decision-making processes remain transparent and explainable, allowing AI-empowered digital twins to provide clear motivations for all operations performed and decisions taken. Additionally, AI can enrich and expand the digital twin knowledge graph by providing explanations along the whole decision chain, ensuring transparency and accountability in future operations as well. This technological synergy is also defining a new paradigm, the Cognitive Digital Twin (CDT), which represents a significant evolution in digital twin technology, leveraging advanced AI capabilities not only to replicate physical entities but also to simulate cognitive processes, potential reactions, and behaviours [54].

5. Implementing AI-Powered Heritage Digital Twins

Building on the theoretical considerations discussed in the previous sections, we have refined our Heritage Digital Twin model, identifying scenarios where AI could effectively contribute, in combination with the other components of the digital twin, to enhancing its capabilities: the extraction and semantic encoding of information from heritage documentation, the continuous updating and enrichment of the knowledge graph, and the strengthening of analytical and predictive capabilities for identifying and preventing risk situations.

5.1. Artificial Intelligence and Documentation

In light of the above discussion, the most immediate way to harness the power of AI is the analysis of the historical and archival documentation included in the Heritage Digital Twin. In this scenario, AI processes historical documents, research papers, and even multimedia content to extract relevant data, such as dates, locations, people, objects, and events, and structures them according to the classes and properties of the RHDTO. AI can also handle multilingual documents, using translation models to process texts in various languages and derive information from them. This capability is particularly useful in cultural heritage, where documentation may span different languages and historical periods. The extracted data can then be used to populate the knowledge graph of the Heritage Digital Twin, enriching its informational and historical context.
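As a minimal sketch of the structuring step, assume that an extraction model has already been applied and that its hits only need to be mapped onto CRM classes. Below, a regular expression and a small gazetteer (both hypothetical stand-ins for a real named-entity recognition or language-model pipeline) tag people, places, objects, and year ranges with the CRM class they would instantiate; the class labels follow CRM naming, while the mapping itself is illustrative.

```python
import re

# Hypothetical stand-in for an AI extraction model: a tiny gazetteer plus a
# regular expression for year ranges. Each hit is tagged with the CRM class
# it would instantiate in the knowledge graph.
GAZETTEER = {
    "Arnolfo di Cambio": "E21_Person",
    "Florence": "E53_Place",
    "Palazzo Vecchio": "E22_Human-Made_Object",
}
YEAR_RANGE = re.compile(r"\b(1\d{3})\s*(?:-|to|and)\s*(1\d{3})\b")


def extract(text: str) -> list[tuple[str, str]]:
    """Return (surface form, CRM class) pairs found in `text`."""
    hits = [(name, cls) for name, cls in GAZETTEER.items() if name in text]
    for start, end in YEAR_RANGE.findall(text):
        hits.append((f"{start}-{end}", "E52_Time-Span"))
    return hits


sentence = ("The tower of Palazzo Vecchio in Florence was built by "
            "Arnolfo di Cambio between 1298 and 1310.")
print(extract(sentence))
```

A production pipeline would replace both lookups with a trained model, but the output contract (surface form plus target class) is what the knowledge-graph population step consumes.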

AI can also support the continuous updating of the knowledge graph as new documentation becomes available. For instance, AI algorithms can be set to periodically scan for new sources, extract information, and update the knowledge base, ensuring that the Heritage Digital Twin remains up to date and comprehensive over time. This automated process significantly reduces the manual effort required for knowledge curation and management, making it more efficient and scalable.
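One simple way to make the periodic scan incremental, assuming sources can be fetched as text, is to fingerprint each source and hand only unseen content to the extraction step. The sketch below uses SHA-256 content hashes; the function name and the in-memory `seen` set are hypothetical, and a deployed system would persist the hashes alongside the knowledge graph.

```python
import hashlib


def new_sources(sources: dict[str, str], seen: set[str]) -> list[str]:
    """Return the IDs of sources whose content has not been processed yet.

    `sources` maps a (hypothetical) source ID to its text; `seen` holds the
    SHA-256 digests of content already handed to extraction and is updated
    in place, so a revised document is picked up again. Sources with
    byte-identical content are processed only once.
    """
    fresh = []
    for source_id, text in sources.items():
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            fresh.append(source_id)
    return fresh


seen: set[str] = set()
docs = {"villani": "Villani chronicle excerpt", "vasari": "Vasari Vite excerpt"}
print(new_sources(docs, seen))  # first scan: both sources are new
print(new_sources(docs, seen))  # second scan: nothing to re-extract
```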

The enriched knowledge graph can, in turn, be used to further train the AI, refining its understanding and improving its ability to retrieve relevant information. This makes the AI increasingly “informed” about the most relevant facts concerning the cultural entity reproduced by the digital twin and its historical and cultural context.

5.2. Artificial Intelligence and Decider Components

The use of AI can also significantly enhance the reactive components of Heritage Digital Twins. In a previous work [18], we defined the Decider as an intelligent component able to process incoming sensor data and information stored in the digital twin knowledge base to detect potential risks, and to execute predefined algorithms or apply decision-making rules to determine appropriate actions for preserving cultural heritage entities. AI technologies can improve the efficiency of this essential component [55], especially in scenarios where real-time decisions are required. In particular, AI would allow the Decider to refine its decision-making process, enabling faster and more effective responses to critical issues detected by the digital twin. AI tools, in fact, excel at quickly processing vast amounts of data, identifying complex patterns, and making predictions based on evolving information. Additionally, AI can efficiently combine and analyse data coming from various sources, including historical records, real-time sensor data, and scientific data, enabling more comprehensive and accurate decisions based on a wide range of inputs.

The AI can also record the decisions made by the Decider together with the context in which they were made, creating in the knowledge graph historical records of single decisions or decision chains. By providing a comprehensive view of past actions and their outcomes, these records can be used to improve future decision-making. Moreover, the predictive analytics typical of AI improve the ability of the digital twin to anticipate future trends and potential risks, allowing the Decider to adopt a proactive rather than reactive approach and thereby helping to prevent issues before they arise.
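The following sketch illustrates, under simplifying assumptions, how a Decider might combine a predefined rule with a statistical check derived from historical readings, and return a record of the decision and its rationale that could be stored in the knowledge graph. The `DecisionRecord` fields, the z-score test (a stand-in for a trained model), and the limits are hypothetical choices, not part of RHDTO.

```python
from dataclasses import dataclass
from statistics import mean, stdev
from typing import Optional


@dataclass
class DecisionRecord:
    """Hypothetical record of one Decider decision and the context behind it."""
    sensor_id: str
    value: float
    z_score: float
    action: str
    rationale: str


def decide(sensor_id: str, history: list[float], value: float,
           z_limit: float = 3.0, hard_limit: Optional[float] = None) -> DecisionRecord:
    """Combine a predefined rule (hard_limit) with a statistical anomaly check.

    The z-score against past readings stands in for a trained model;
    `history` must contain at least two readings. A constant history gives
    no statistical signal, so only the rule can trigger in that case.
    """
    mu, sigma = mean(history), stdev(history)
    z = (value - mu) / sigma if sigma > 0 else 0.0
    if hard_limit is not None and value >= hard_limit:
        action, why = "alert", f"value {value} >= rule limit {hard_limit}"
    elif abs(z) >= z_limit:
        action, why = "inspect", f"|z| = {abs(z):.1f} >= {z_limit}"
    else:
        action, why = "log", "reading within expected range"
    return DecisionRecord(sensor_id, value, z, action, why)


history = [1.0, 1.1, 0.9, 1.0, 1.05]  # invented past readings
print(decide("sensor_top", history, 5.0))
```

Keeping the rationale string next to the action is what makes each record usable later as an explanation in the decision chain.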

To optimize these features, the AI can additionally be trained to recognize aspects critical to conservation and preservation, for instance through the analysis of reports, protocols, and recommendations produced by conservation and restoration experts. This targeted training helps the AI focus on critical elements and make more informed decisions. Once trained, the AI can be further tested through simulated risk scenarios, allowing researchers to assess and refine its decision-making responses under controlled conditions. The responses can then be fed back into the training process to further improve the AI’s decision-making abilities. This iterative approach aims to ensure that the AI not only makes accurate decisions but also continuously improves its performance based on new data and expert feedback.
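The test-and-refine loop can be pictured with a deliberately small example: a single alert threshold (standing in for the parameters of a trained model) is re-selected against simulated risk scenarios labelled by experts. The scenario values, labels, and candidate thresholds below are invented for illustration.

```python
def accuracy(threshold: float, scenarios: list[tuple[float, bool]]) -> float:
    """Share of scenarios where 'alert if value >= threshold' matches the expert label."""
    hits = sum((value >= threshold) == is_risk for value, is_risk in scenarios)
    return hits / len(scenarios)


def refine_threshold(candidates: list[float], scenarios: list[tuple[float, bool]]) -> float:
    """Pick the candidate that best reproduces the experts' judgement (first wins on ties)."""
    return max(candidates, key=lambda t: accuracy(t, scenarios))


# Invented simulated scenarios: (simulated risk indicator, expert says "risk")
scenarios = [(0.2, False), (0.5, False), (0.9, False),
             (1.2, True), (1.5, True), (2.0, True)]
print(refine_threshold([0.5, 1.0, 1.5], scenarios))
```

Each new batch of expert-labelled scenarios can be appended to `scenarios` and the threshold re-selected, mirroring the iterative feedback described above.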

5.3. Embedding Physics-Based Models

As already underlined, the effectiveness of a Heritage Digital Twin lies in its ability to continuously update its information, although this may not be feasible for every aspect of the reproduced objects or under all conditions. Indeed, while the information available today on cultural objects is very extensive, relying solely on acquired data for complex systems can prove difficult and, in many cases, unreliable. For instance, in the preservation of historical buildings, the internal condition of a centuries-old wall cannot be constantly assessed by breaking it open; instead, inferences must be made from limited sensor data. Similarly, in analysing ancient artefacts, conservators must rely on sparse, noisy, and indirect observations to infer internal conditions and material composition [56].

A possible solution to this challenge could lie in integrating, directly into the AI, predictive physics-based models, which encode the governing laws of nature, together with scalable methods for data simulation, optimization, and decision-making [57]. This would allow AI-driven Deciders to provide consistent and accurate decisions based on predefined criteria and algorithms, reducing the risk of human error and bias and helping to ensure that decisions are made objectively and reliably.

Ontologies, which provide the semantic mechanisms to describe this type of information and to orient AI reasoning, make it possible to fill the gaps in observational data with inferences derived from the physical and mathematical laws that commonly regulate natural processes. This approach, which belongs to the field of computational science, aims to predict complex phenomena such as the deterioration of historical buildings, the response of ancient artifacts to different conservation treatments, and the structural integrity of archaeological sites under various environmental conditions.
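As a hedged illustration of how a physics-based model can fill observational gaps, the sketch below assumes that moisture content at the two faces of a wall is measured by sensors and propagates those values into the unobservable interior with the one-dimensional diffusion equation du/dt = alpha * d2u/dx2, solved by an explicit finite-difference (FTCS) scheme. The diffusivity, grid spacing, and time step are hypothetical; real masonry requires calibrated, typically nonlinear, coupled heat-and-moisture models.

```python
def diffuse(profile: list[float], left: float, right: float,
            alpha: float, dx: float, dt: float, steps: int) -> list[float]:
    """Explicit finite-difference (FTCS) solution of du/dt = alpha * d2u/dx2.

    `left` and `right` are the (hypothetical) sensor readings held fixed at
    the two wall faces; interior nodes are inferred from the physics.
    """
    r = alpha * dt / dx ** 2
    if r > 0.5:  # the explicit scheme is unstable beyond this limit
        raise ValueError(f"unstable time step: r = {r:.3f} > 0.5")
    u = [left] + list(profile[1:-1]) + [right]
    for _ in range(steps):
        # u_i <- u_i + r * (u_{i+1} - 2 u_i + u_{i-1}) on interior nodes
        u = [left] + [u[i] + r * (u[i + 1] - 2 * u[i] + u[i - 1])
                      for i in range(1, len(u) - 1)] + [right]
    return u


# Wall discretized into 5 nodes, initially dry; one face wetted (values invented).
print([round(x, 3) for x in diffuse([0.0] * 5, 1.0, 0.0,
                                    alpha=1.0, dx=1.0, dt=0.25, steps=2000)])
```

With fixed boundary values the profile converges to a straight line between them, the expected steady state of linear diffusion; the stability check guards against the known instability of the explicit scheme for r > 1/2.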

6. Extending the RHDTO Model

To document all the proposed scenarios of AI support for the Heritage Digital Twin, we extended the RHDTO to model the components involved and capture their complex interactions. In particular, we introduced a new class, HC15 AI Component, representing the AI component within the digital twin system and describing its role in automating and enhancing processes such as data analysis, decision-making, and predictive modelling. These processes are represented by means of the new HC16 Simulation or Prediction class, so that they can be recorded in the digital twin’s knowledge base and examined and reused by the AI in future scenarios. A tentative definition of these classes follows:

This class (HC15 AI Component) is intended to model artificial intelligence systems integrated into the Heritage Digital Twin. AI Components provide advanced capabilities for extracting and semantically encoding information from heritage documentation, continuously updating and enriching the digital twin’s knowledge base. Additionally, instances of this class perform tasks such as data analysis, decision-making, and pattern recognition, driven by algorithms or machine learning models, enhancing the analytical and predictive capabilities of digital twins to provide deeper insights into cultural entities and their historical and cultural context. Unlike conventional software, instances of HC15 simulate intelligent behaviour, learning from data and adapting to new conditions while making informed decisions. AI Components are continuously trained using data stored in the knowledge graph, real-time data coming from sensors, and scientific documentation and protocols produced by experts, refining their responses through iterative feedback to prioritize key preservation factors. They also leverage physics-based models to infer conditions that cannot be directly observed, such as the material degradation of cultural objects.

This class (HC16 Simulation or Prediction) is used to represent events of analysis, simulation, and prediction performed by the AI Component within the digital twin system, providing a structured way to document the AI’s analytical activities. Simulations and predictions are critical components of the digital twin system, as they allow for the anticipation of future trends, the identification of potential issues, and the optimization of decision-making processes. By performing simulations, the AI can test various scenarios and evaluate their impacts without real-world experimentation. This is particularly useful where real-time decisions are critical, such as in emergency response or dynamic system optimization. Predictions, on the other hand, enable the AI to forecast future states or events based on historical data and current conditions, providing valuable insights for proactive maintenance and conservation.

To enhance the functionality and transparency of the AI Component, we also defined new properties that describe, in ontological (and thus formal) terms, the nature and functionalities of the AI Component and the results of the operations it performs. The new properties include:

This property describes the specific algorithm or model employed by the AI Component within the digital twin system to process documentation and draw meaningful conclusions from the analysed data. By recording this information, the property documents the methodological foundation of the AI Component’s functionality and its reliance on algorithmic approaches to achieve its objectives.

This property (HP17 was trained using) specifies the documentation, protocols, or datasets used to train the AI Component, indicating that the AI has undergone a training process that enables it to learn patterns, make predictions, and improve its performance in tasks related to cultural heritage documentation. The property is also essential for identifying the source and nature of the training data, which is critical for understanding the capabilities and limitations of the AI.

This property (HP18 analysed) expresses the relationship between the AI Component and the documentation or data being examined, stating that the AI has examined the specified documentation and extracted relevant information, patterns, and insights from it. The analysis may involve techniques such as natural language processing, data mining, or pattern recognition to interpret and contextualize the data. The property is also essential for keeping precise and transparent records of the provenance of the extracted knowledge.

This property (HP19 extracted knowledge) refers to the information or insights derived from the data processing performed by AI Components within the context of digital twins. It captures the significant historical data that AI algorithms analyse and interpret, transforming raw information into structured graphs. The extracted knowledge can encompass various types of data, including but not limited to historical facts, relationships, and context, which are essential for creating a comprehensive semantic graph.

This property (HP20 performed) establishes a direct link between an AI Component and a specific HC16 Simulation or Prediction event, indicating the analysis, simulation, or prediction performed by the AI Component and thus ensuring traceability, accountability, and transparency in all of the AI’s operations.

This property (HP21 relies on) models the connection between the Decider and the AI Component, indicating that the Decider has used the AI Component’s support in its decision-making processes. The property is fundamental for documenting the symbiotic relationship between the two components, underscoring the role of AI in augmenting the decision-making abilities of the Decider. Additionally, it is particularly valuable for understanding the context and rationale behind the Decider’s decisions, enabling users to validate and interpret its actions effectively.

Examples of how these classes and properties can be used to semantically model AI-empowered Heritage Digital Twins are provided in Section 7.

6.1. Why Artificial Intelligences Cannot Be (E39) Actors (and Probably Never Should)

Readers familiar with ontologies and the CRM world may have noticed that the names of some of the entities we have defined, such as HC16 Simulation or Prediction or HP20 performed, closely resemble those of classes and properties already existing in the CRM ecosystem, and may wonder why these were not used directly in RHDTO. The reason is that these CRM entities usually refer to concepts within the sphere of the CRM:E7 Activity class, namely events carried out by instances of CRM:E39 Actor. For example, the CRMsci:S7 Simulation or Prediction class is placed in the Activity branch (E7) of the CRM, and therefore among those “actions intentionally carried out by (P14) instances of E39 Actor”, as stated in the E7 scope note.

Even the CRMdig:D7 Digital Machine Event class, which at first glance might seem optimal for representing the reasoning typically performed by AIs, is described in its scope note to “comprise events that happen on physical digital devices following a human activity that intentionally caused its immediate or delayed initiation and results in the creation of a new instance of D1 Digital Object on behalf of the human actor”.

The question of whether an AI should be classified as a CRM:E39 Actor in an ontological framework such as CRM raises significant philosophical and conceptual issues. At the heart of the matter is the distinction between human and machine agency. In the CRM, the E39 Actor class encompasses entities capable of intentional actions, i.e., humans, organizations, and groups that act with purpose, self-awareness, and often within complex socio-cultural contexts. In contrast, AIs, despite their increasing sophistication, operate without true intentionality. AI components, no matter how advanced, remain tools executing algorithms, based on rules and models designed by human agents, to perform tasks or simulate decision-making processes. While AI can mimic certain aspects of human cognition and agency, such as decision-making or adaptive learning, it lacks the intrinsic properties of autonomy, moral responsibility, and consciousness that define actors in a human sense.

Thus, classifying AI as a CRM:E39 Actor would suggest a level of equivalence with human beings or institutions, which raises concerns about anthropomorphizing technology. Such a classification could generate a misleading understanding of AI’s capabilities, implying that AI might possess the self-determined agency and social roles traditionally ascribed to human actors. While AI can perform actions, these are fundamentally reactive, governed by predefined logic or learning models. Its “decisions” are calculative rather than reflective, lacking the contextual and ethical reasoning that characterizes human decision-making. Furthermore, from an ontological perspective, Actors in the CRM are embedded in cultural, historical, and social systems in ways that an AI component cannot be. AI operates within a technical framework, and while it can engage with cultural heritage data, it does so as an instrumental tool, not as an agent with a cultural or ethical background.

Another reason why AI cannot be considered an E39 Actor lies in the nature of human decision-making. Unlike AI, which relies on algorithms and data processing, humans often make instinctive decisions, guided by intuition or “gut feelings” that sometimes turn out to be correct even without consciously knowing why. A well-known example comes from chess, where grandmasters such as Garry Kasparov have described making extremely complex decisions in mere seconds based on intuition rather than detailed analysis. This kind of decision-making draws on a blend of experience and tacit knowledge, something AI cannot replicate.

Therefore, it seems philosophically and ontologically more appropriate to place AI Components in our ontology within the category of CRMdig:D14 Software, acknowledging their role as advanced digital tools, and to define HC16 Simulation or Prediction as a direct subclass of CRM:E5 Event rather than of E7 Activity. This preserves the distinction between human and machine agency, recognizing the instrumental power of AI without attributing to it qualities it does not possess. In doing so, we maintain a clear boundary between human actors and AI components, which, however powerful, remain in essence computational tools programmed to serve human purposes.
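The practical consequence of this placement can be checked mechanically. The sketch encodes a simplified excerpt of the CRM, CRMsci, and CRMdig class hierarchies as child-to-parent edges (our reading of those hierarchies, reduced to single inheritance) and, since the argument is that HC16 should sit outside the E7 Activity branch, places it directly under E5 Event. Because P14 carried out by has E7 Activity as its domain, S7 instances admit an E39 Actor while HC16 instances do not, and HC15 never becomes an E39 Actor.

```python
from typing import Optional

# Simplified child -> parent edges (single inheritance only), as we read the
# CRM, CRMsci and CRMdig hierarchies, plus the two new RHDTO classes.
SUBCLASS_OF = {
    "E7_Activity": "E5_Event",
    "E5_Event": "E4_Period",
    "S7_Simulation_or_Prediction": "E7_Activity",
    "HC16_Simulation_or_Prediction": "E5_Event",
    "D1_Digital_Object": "E73_Information_Object",
    "D14_Software": "D1_Digital_Object",
    "HC15_AI_Component": "D14_Software",
    "E39_Actor": "E77_Persistent_Item",
}


def is_a(cls: Optional[str], ancestor: str) -> bool:
    """Walk up the (simplified) hierarchy looking for `ancestor`."""
    while cls is not None:
        if cls == ancestor:
            return True
        cls = SUBCLASS_OF.get(cls)
    return False


def admits_p14(cls: str) -> bool:
    """P14 carried out by (range E39 Actor) has domain E7 Activity."""
    return is_a(cls, "E7_Activity")


for c in ("S7_Simulation_or_Prediction", "HC16_Simulation_or_Prediction"):
    print(c, "admits P14:", admits_p14(c))
```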

7. Case Study: Arnolfo di Cambio’s Tower in Florence

To showcase the applicability of our model, and especially of the newly defined AI classes and properties, we selected the Tower of Arnolfo di Cambio, one of the most iconic monuments of Florence (Italy). This is the prominent clock tower of Palazzo Vecchio, the historic building that now houses the Florence city council, designed and built in the late 13th century by Arnolfo di Cambio, a notable Italian architect and sculptor, to serve as a symbol of the city’s civic power [58,59].

In ontological terms, the Tower, being a monument and thus a physical cultural object, can be represented in RHDTO by means of the HC1 Heritage Entity class. The HC2 Digital Twin class can be instantiated to define the Heritage Digital Twin of the Tower within the semantic space of our system.

Historically, the Tower has served as a site of public announcements and as a prison, reflecting its multifaceted role in Florence’s medieval and Renaissance history. Its significance in the history and cultural imagination of Florence is further underscored by extensive historical and archival documentation, including historical maps, city records, and construction documents, which provide insights into the Tower’s origins, modifications, and enduring significance to the city. Additionally, the Tower is extensively represented in various media, including 3D models, paintings, prints, and photographs, which depict it in its urban context and showcase its architectural grandeur and central role in the Florentine skyline (see Figure 1).

7.1. Extracting Knowledge from Documentation

All the above documentation can be integrated into the Heritage Digital Twin, both in digital format (i.e., by digitizing it and incorporating it into the HDT system) and as a semantic representation of its nature and content in the knowledge graph, by means of the analytical capabilities provided by AI algorithms, thus enhancing the informational richness and historical significance of the Heritage Digital Twin’s information layer.

As an example of how this mechanism could work, the AI Component can be used to derive relevant information from the historical documentation concerning the monument. The Tower is in fact mentioned in many important sources on the history of Florence, including the Istorie Fiorentine by Giovanni Villani [60], an author who directly witnessed the historical circumstances surrounding its construction in the late medieval period. Additionally, Giorgio Vasari, in his Vite [61], discussed Arnolfo’s work and highlighted the technical and artistic aspects of his Tower, offering a Renaissance perspective on its architectural significance.

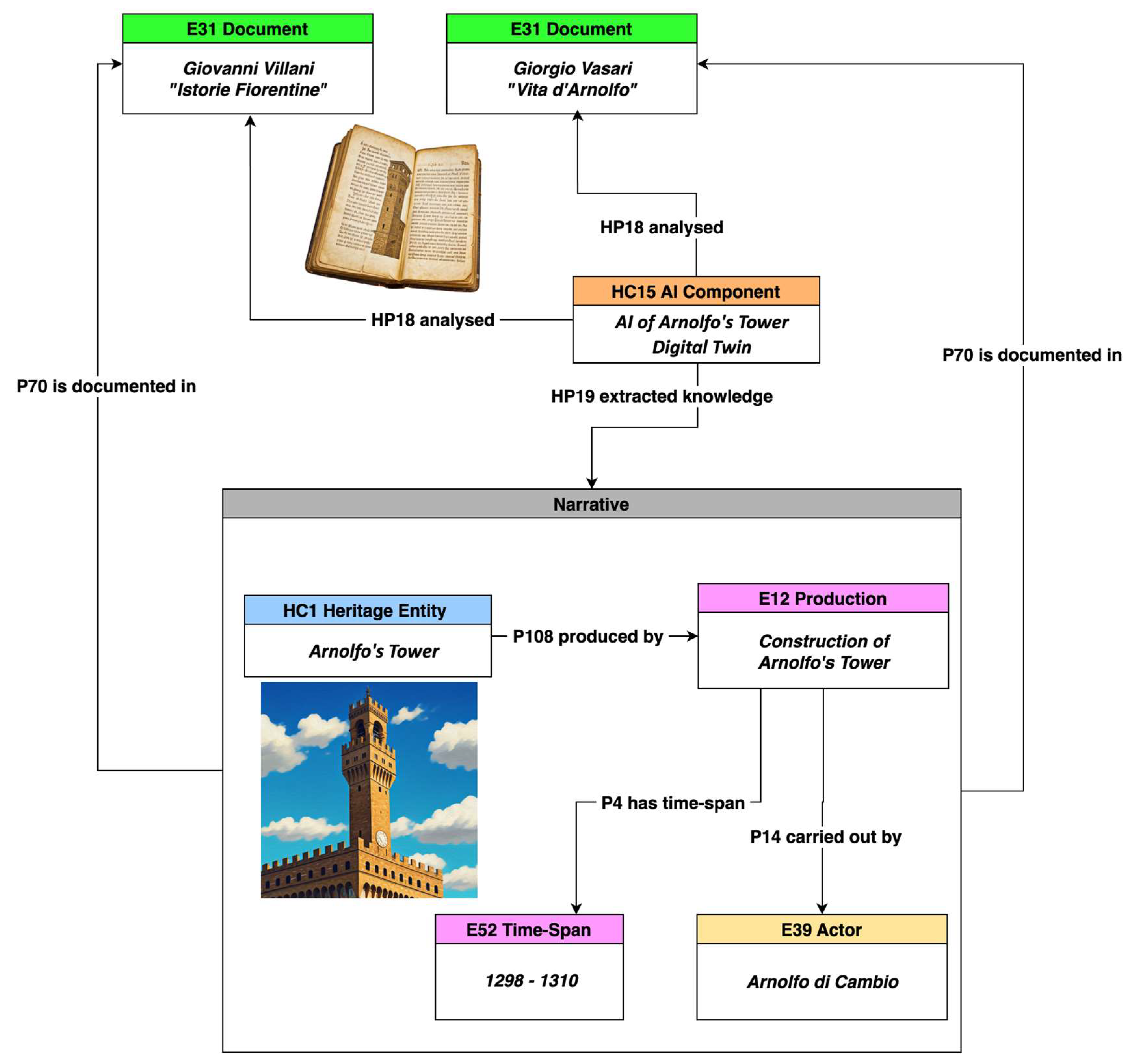

In ontological terms, the CRM:E31 Document class can be used to represent these documents within the semantic space of our system. AI can be employed to “read” these works, analysing and processing the texts to extract relevant content. The extracted historical information can then be “transcribed” into fragments of semantic knowledge using the classes and properties of the RHDTO and the CRM ecosystem, thereby contributing new data to the history of the cultural object within the digital twin’s knowledge base. The extraction process itself can be documented through the new HC15 AI Component class and its properties, namely HP18 analysed and HP19 extracted knowledge, which also provide details on the provenance of the newly generated information fragments. Properties such as CRM:P70 is documented in, appropriately instantiated by the AI, can also be employed to maintain a consistent relationship between the derived knowledge and its source.

From a technical point of view, the way in which the semantic fragments created by the AI enrich the knowledge graph is rooted in the technique of named graphs, which provides a powerful mechanism for organizing and representing data. Using this technique, AI components can generate specific contexts or subsets of data within the broader graph structure and label them with appropriate names, allowing for more structured interpretation and analysis of their content. Each named graph is in fact a “semantic box” that encapsulates a distinct layer of information and whose provenance can always be precisely traced, enabling the AI to work with insights that are both relevant and context-aware. This approach not only enriches the overall knowledge representation but also facilitates dynamic interactions and relationships among the various sections of the information stored in the knowledge graph, ultimately enhancing the system’s capacity for intelligent reasoning.
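A minimal in-memory model of this mechanism is sketched below: triples are grouped under graph names, and each graph carries a provenance entry recording which AI component produced it from which source. The class, the shortened property labels, and the identifiers are hypothetical; in practice this role would be played by a quad store queried with SPARQL `GRAPH` patterns.

```python
from collections import defaultdict

Triple = tuple[str, str, str]


class NamedGraphStore:
    """Toy quad store: triples grouped by graph name, with per-graph provenance."""

    def __init__(self) -> None:
        self.graphs: dict[str, set[Triple]] = defaultdict(set)
        self.provenance: dict[str, tuple[str, str]] = {}  # graph -> (AI component, source)

    def add_graph(self, name: str, triples: list[Triple], ai: str, source: str) -> None:
        self.graphs[name].update(triples)
        self.provenance[name] = (ai, source)

    def derived_from(self, source: str) -> set[Triple]:
        """All triples held in graphs that were extracted from `source`."""
        return {t for g, (_, src) in self.provenance.items()
                if src == source for t in self.graphs[g]}

    def origin(self, triple: Triple) -> list[tuple[str, str, str]]:
        """(graph, AI component, source) for every graph asserting `triple`."""
        return [(g, *self.provenance[g]) for g, ts in self.graphs.items() if triple in ts]


store = NamedGraphStore()
store.add_graph("g:villani", [("tower", "P14", "arnolfo"), ("tower", "P4", "span1")],
                ai="ai_1", source="villani")
store.add_graph("g:vasari", [("tower", "P14", "arnolfo")], ai="ai_1", source="vasari")
print(store.origin(("tower", "P14", "arnolfo")))
```

The same statement asserted in two graphs keeps both provenance trails, which is exactly what allows corroborating sources (here, two chroniclers) to be told apart.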

Figure 2 illustrates an example of this process, showing the extraction and semantic encoding, performed by AI, of the information on the time span of the construction of the Tower, carried out between 1298 and 1310 by Arnolfo, as reported by Villani and Vasari. The named graph encapsulating this knowledge is rendered by instantiating the Narrative class of the NOnt model.
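For concreteness, the sketch below serializes a knowledge fragment in the spirit of Figure 2 as N-Quads, the W3C line-based format in which an optional fourth term names the graph. CRM terms use the CIDOC CRM RDFS namespace; all instance IRIs, and the IRIs for the RHDTO property HP19 and the NOnt Narrative class, are hypothetical placeholders under `http://example.org/`, and the modelling choices (E12 Production, P82a/P82b year bounds) are our illustration, not necessarily those of Figure 2.

```python
from typing import Optional

CRM = "http://www.cidoc-crm.org/cidoc-crm/"
EX = "http://example.org/hdt/"  # hypothetical namespace for instances, RHDTO and NOnt terms
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
XSD_GYEAR = "http://www.w3.org/2001/XMLSchema#gYear"


def iri(value: str) -> str:
    return f"<{value}>"


def year(value: int) -> str:
    return f'"{value}"^^<{XSD_GYEAR}>'


def statement(s: str, p: str, o: str, graph: Optional[str] = None) -> str:
    """One N-Quads line; without a graph term it belongs to the default graph."""
    terms = [s, p, o] + ([graph] if graph else [])
    return " ".join(terms) + " ."


def construction_narrative() -> list[str]:
    g = iri(EX + "graph/tower-construction")
    prod = iri(EX + "tower-construction")
    span = iri(EX + "tower-construction-span")
    inside = [  # extracted knowledge, encapsulated in the named graph
        (prod, iri(RDF_TYPE), iri(CRM + "E12_Production")),
        (prod, iri(CRM + "P108_has_produced"), iri(EX + "arnolfo-tower")),
        (prod, iri(CRM + "P14_carried_out_by"), iri(EX + "arnolfo-di-cambio")),
        (prod, iri(CRM + "P4_has_time-span"), span),
        (span, iri(CRM + "P82a_begin_of_the_begin"), year(1298)),
        (span, iri(CRM + "P82b_end_of_the_end"), year(1310)),
    ]
    about = [  # statements about the graph itself, kept in the default graph
        (g, iri(RDF_TYPE), iri(EX + "nont/Narrative")),
        (iri(EX + "ai-component-1"), iri(EX + "rhdto/HP19_extracted_knowledge"), g),
        (g, iri(CRM + "P70i_is_documented_in"), iri(EX + "doc/villani-istorie-fiorentine")),
        (g, iri(CRM + "P70i_is_documented_in"), iri(EX + "doc/vasari-vite")),
    ]
    return [statement(*t, g) for t in inside] + [statement(*t) for t in about]


print("\n".join(construction_narrative()))
```

Keeping the provenance statements outside the named graph lets the extracted fragment be accepted, revised, or retracted as a unit without touching the record of who produced it and from which sources.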

The knowledge extraction process described above focuses primarily on textual documentation. However, as AI technologies continue to advance, this capability will extend to images, 3D models, maps, audio-visual material, and other forms of documentation, making this approach (and thus this conceptual model) applicable to other use cases.

7.2. Artificial Intelligence and the Reactive Components of Heritage Digital Twins

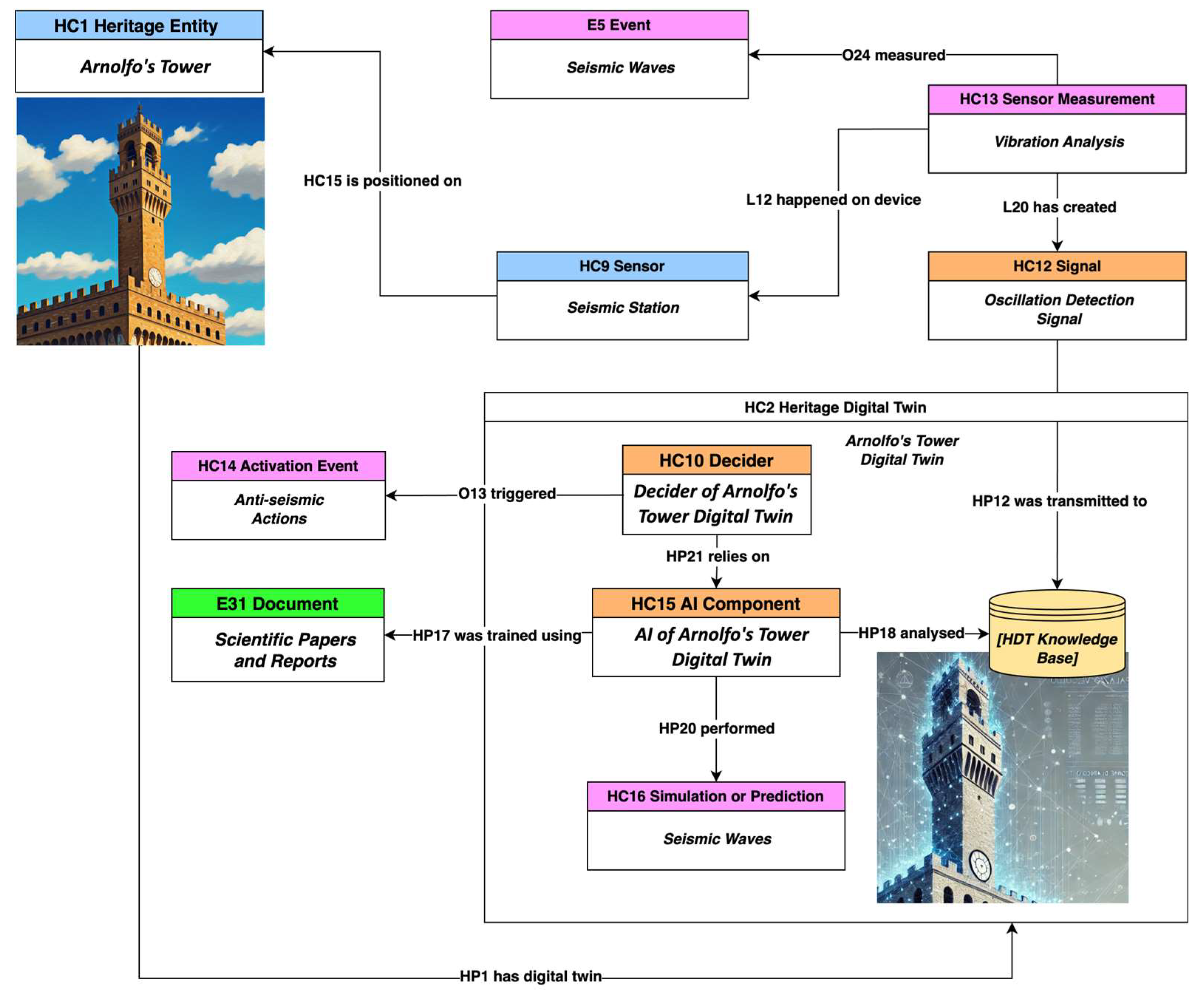

To illustrate how AI can assist the reactive components of the digital twin, and in particular the Decider, in processing sensor signals and making informed decisions on the actions needed to ensure the safety of cultural objects, we consider a recent paper on the seismic monitoring of Arnolfo’s Tower, describing a still ongoing activity set up by researchers from the Italian National Institute of Geophysics and Volcanology (INGV) and the Department of Architecture of the University of Florence [62]. The aim of this seismic study is to deepen the understanding of the Tower’s dynamic characteristics and evaluate its response to various vibration sources. To this end, two seismic stations were installed at the base and at the top of the Tower, enabling the identification of its primary modal frequencies through free vibration analysis. During the investigation period considered in the study, the monitoring system detected a heightened sensitivity to low-frequency seismic waves matching the Tower’s natural frequencies of oscillation, suggesting that the Tower may be more affected by distant seismic events than by nearby ones, even though the latter are clearly perceptible within the city. The study also highlighted the impact of “cultural noise”, i.e., vibrations caused by human activities, on the Tower’s high-frequency oscillations. These sources include the ringing of the Tower’s bell, the movement of visitors, and general urban activity. The researchers plan to extend the monitoring network by adding more sensors within the Tower and the neighbouring Palazzo Vecchio. This expansion will allow a more detailed analysis of the modal shapes and of the dynamic interactions between the structures. Further investigation is also needed to clarify the differences in the Tower’s responses to nearby versus distant earthquakes, which could have important implications for assessing the seismic vulnerability of historical structures. Such continuous monitoring would provide the Heritage Digital Twin of Arnolfo’s Tower with crucial data for refining numerical models and improving the accuracy of structural response predictions, as demonstrated by other relevant studies in this field [63]. It would also allow the assessment of the impact of both natural and anthropogenic vibrations on the long-term integrity of the Tower, along with other valuable insights that can guide future conservation and preservation strategies for this and similar heritage structures.

AI could contribute significantly in this context by analysing and classifying seismic events according to their intensity and distance, thereby identifying those that may pose a greater threat to the stability of the Tower, and distinguishing them from anthropogenic vibrations, such as those generated by large crowds of tourists visiting Palazzo Vecchio or the adjacent Piazza della Signoria. To enhance its effectiveness, scientific reports, including the one detailing this study, could be used to train the AI, equipping it with an understanding of the specific challenges and risks associated with the Tower’s structure and condition. This approach would be akin to having the AI “study” to become a “digital seismologist”, i.e., an “expert” capable of analysing and distinguishing relevant data and operating more effectively based on specialized knowledge. Naturally, all the processes performed and decisions made by the Decider and the AI will be encoded using the RHDTO model, thereby becoming an integral part of the Heritage Digital Twin’s knowledge base. This provenance information will allow experts to evaluate the quality of the decisions made, correct any inaccuracies, and further refine the AI training.
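A toy version of this classification step is sketched below, assuming a single acceleration channel sampled at a fixed rate. It estimates the dominant frequency with a naive discrete Fourier transform and applies the qualitative pattern reported in the study: low-frequency motion near the Tower’s natural frequency is the concern, while high-frequency content points to cultural noise. The natural frequency, tolerance, and noise cutoff are hypothetical parameters, not values from [62]; classifying by intensity and distance would need further features, and a trained classifier would replace the hand-written rules.

```python
import math


def dominant_frequency(samples: list[float], rate_hz: float) -> float:
    """Frequency (Hz) of the strongest non-DC bin of a naive DFT."""
    n = len(samples)
    best_k, best_power = 1, -1.0
    for k in range(1, n // 2 + 1):
        real = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(samples))
        imag = -sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(samples))
        power = real * real + imag * imag
        if power > best_power:
            best_k, best_power = k, power
    return best_k * rate_hz / n


def classify(samples: list[float], rate_hz: float, natural_hz: float,
             tolerance_hz: float = 0.5, noise_cutoff_hz: float = 5.0) -> str:
    """Rule-based stand-in for a trained classifier (all thresholds hypothetical)."""
    f = dominant_frequency(samples, rate_hz)
    if abs(f - natural_hz) <= tolerance_hz:
        return "near natural frequency: escalate to the Decider"
    if f >= noise_cutoff_hz:
        return "high-frequency vibration: likely cultural noise"
    return "other low-frequency motion: log and monitor"


def sine(freq_hz: float, rate_hz: float = 50.0, seconds: float = 2.0) -> list[float]:
    """Synthetic test signal (invented, not monitoring data)."""
    return [math.sin(2 * math.pi * freq_hz * i / rate_hz) for i in range(int(rate_hz * seconds))]


print(classify(sine(1.0), 50.0, natural_hz=1.0))
print(classify(sine(10.0), 50.0, natural_hz=1.0))
```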

To semantically represent this scenario, we again leverage the CRM ecosystem and the existing entities of RHDTO, together with the newly defined entities of our ontology. To describe the seismic sensors installed on the Tower, we instantiate the HC9 Sensor class. The placement of each sensor on the monument (HC1 Heritage Entity) is modelled through the HC15 is positioned on property. HC13 Sensor Measurement, in combination with the properties CRMsci:O24 measured and CRMdig:L12 happened on device, can be instantiated to model the events monitored by each sensor, such as seismic movements and the “cultural noise” generated by human actions, including tourist movements, bell ringing, urban traffic, local events, and daily operational tasks. The events themselves are modelled using the CRM:E5 Event class. The resulting seismic signals are represented by HC12 Signal, and their transmission to the digital twin of Arnolfo’s Tower (HC2) is modelled through the HP12 was transmitted to property. The integrated monitoring system receiving these signals is represented by an instance of the HC10 Decider class.

The interaction between the Decider (HC10) and the AI Component of the Heritage Digital Twin, rendered by instantiating the new HC15 AI Component class, implements a system designed to acquire the transmitted values, analyse the Tower’s physical and environmental conditions, integrate information from other sensors, and enrich the Heritage Digital Twin’s Knowledge Base. The role of AI in conducting simulations and predictive tasks is rendered through the property HP20 performed, while the operations themselves are modelled through instances of the HC16 Simulation or Prediction class. The interaction between the Decider and the AI Component for enhanced decision-making is captured by the HP21 relies on property.
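The links among the Decider, the AI Component and the operations it performs can be sketched in the same triple form. The following Python fragment is a hedged illustration: the ex: instance names are hypothetical, and reading HP21 relies on as pointing from the Decider to the AI Component is an assumption drawn from the property label. The helper follows HP21 and then HP20 to list the simulations or predictions available to a given Decider.

```python
# A minimal sketch, assuming (subject, predicate, object) tuples in a set.
# "ex:" names are hypothetical; HP21 is assumed to run Decider -> AI Component.

RDF_TYPE = "rdf:type"
PERFORMED = "rhdto:HP20_performed"
RELIES_ON = "rhdto:HP21_relies_on"


def build_ai_graph():
    """Link the Decider, the AI Component and one prediction it performed."""
    decider = "ex:monitoringSystem"      # HC10 Decider
    ai = "ex:aiComponent"                # HC15 AI Component
    forecast = "ex:prediction_001"       # HC16 Simulation or Prediction
    return {
        (decider, RDF_TYPE, "rhdto:HC10_Decider"),
        (ai, RDF_TYPE, "rhdto:HC15_AI_Component"),
        (forecast, RDF_TYPE, "rhdto:HC16_Simulation_or_Prediction"),
        (ai, PERFORMED, forecast),
        (decider, RELIES_ON, ai),
    }


def predictions_available_to(graph, decider):
    """Follow HP21, then HP20: operations performed by components the Decider relies on."""
    components = {o for s, p, o in graph if s == decider and p == RELIES_ON}
    return {o for s, p, o in graph if s in components and p == PERFORMED}


if __name__ == "__main__":
    g = build_ai_graph()
    print(predictions_available_to(g, "ex:monitoringSystem"))
```

The two-hop traversal mirrors the kind of question an expert might ask when reviewing a decision: which AI-generated simulations or predictions were available to the Decider at that point.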

Finally, the process by which the AI analyses the digital twin’s Knowledge Base, critical for accessing and assessing relevant information for informed decision-making, is represented by the HP18 analysed property, while the HP17 was trained using property is employed to specify the scientific papers and other resources used for training the AI, thereby enhancing its capabilities and “expertise” in the field of seismology.
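The HP17 and HP18 links make the AI’s “education” queryable as provenance. In the following Python sketch, which is illustrative only, the knowledge base and the training resources are hypothetical ex: instances. Typing the resources as crm:E31_Document is our assumption, since the text does not state how scientific papers are classified. The helper lists the resources an AI Component was trained on.

```python
# A minimal sketch, assuming (subject, predicate, object) tuples in a set.
# "ex:" names are hypothetical; typing training resources as crm:E31_Document
# is an assumption, not something stated in the model description.

RDF_TYPE = "rdf:type"
TRAINED_USING = "rhdto:HP17_was_trained_using"
ANALYSED = "rhdto:HP18_analysed"


def build_training_graph():
    """Record what the AI Component analysed and what it was trained on."""
    ai = "ex:aiComponent"
    kb = "ex:towerKnowledgeBase"            # the twin's knowledge base
    report = "ex:towerSeismicReport"        # a scientific report on the Tower
    paper = "ex:seismologyPaper_A"          # another training resource
    return {
        (ai, RDF_TYPE, "rhdto:HC15_AI_Component"),
        (ai, ANALYSED, kb),
        (ai, TRAINED_USING, report),
        (ai, TRAINED_USING, paper),
        (report, RDF_TYPE, "crm:E31_Document"),   # assumed typing
        (paper, RDF_TYPE, "crm:E31_Document"),    # assumed typing
    }


def training_sources(graph, component):
    """List, in sorted order, the resources a component was trained using."""
    return sorted(o for s, p, o in graph if s == component and p == TRAINED_USING)


if __name__ == "__main__":
    g = build_training_graph()
    print(training_sources(g, "ex:aiComponent"))
```

Sorting the result only makes the output deterministic; the underlying relation is unordered.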

The ontological representation of all these processes is illustrated in Figure 3.

As in the case of knowledge extraction, both the decisions made by the Decider and the contributions of the AI, such as the specific insights and analyses it provided, are captured in a named graph. This named graph enriches the overall knowledge graph by documenting the decision-making process, the rationale behind the actions taken, the relevant environmental data, and the AI’s analytical contributions, thereby facilitating future reference, analysis, and potential learning for similar situations.
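The role of a named graph can be illustrated with a small store that keeps triples grouped by graph name, so every statement retains its origin while all graphs together form the overall knowledge graph. This Python sketch is a simplification under stated assumptions: the graph names and the ex:madeBy, ex:informedBy and ex:rationale predicates are hypothetical placeholders, not RHDTO or CRM terms, and the rationale literal is dummy text.

```python
# A minimal sketch of named graphs, assuming triples grouped by graph name.
# Graph names and "ex:" predicates are hypothetical placeholders, not RHDTO terms.

from collections import defaultdict


class NamedGraphStore:
    """Triples partitioned into named graphs, so each statement keeps its origin."""

    def __init__(self):
        self._graphs = defaultdict(set)  # graph name -> set of (s, p, o)

    def add(self, s, p, o, graph):
        self._graphs[graph].add((s, p, o))

    def graph(self, name):
        """Return a copy of the triples asserted in one named graph."""
        return set(self._graphs.get(name, set()))

    def union(self):
        """The overall knowledge graph: all triples from all named graphs."""
        merged = set()
        for triples in self._graphs.values():
            merged |= triples
        return merged

    def provenance(self, triple):
        """Names of the graphs in which a given triple was asserted."""
        return {name for name, triples in self._graphs.items() if triple in triples}


def build_demo_store():
    store = NamedGraphStore()
    # Existing modelling of the monitoring set-up.
    store.add("ex:seismicSensor01", "rdf:type", "rhdto:HC9_Sensor", "ex:graph/monitoring")
    # One decision record, kept in its own named graph.
    g = "ex:graph/decision_001"
    store.add("ex:decision_001", "ex:madeBy", "ex:monitoringSystem", g)
    store.add("ex:decision_001", "ex:informedBy", "ex:prediction_001", g)
    store.add("ex:decision_001", "ex:rationale", "example rationale text", g)
    return store


if __name__ == "__main__":
    store = build_demo_store()
    print(len(store.union()))  # 4
```

Keeping each decision in its own graph lets experts review, correct or retract a single decision record without touching the rest of the knowledge graph.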

8. Conclusions

This paper is a first step in showing how the integration of artificial intelligence into Heritage Digital Twins, based on extensive semantic knowledge graphs and empowered by ontologies, already represents a significant advancement in the field of cultural heritage data management. These three components, when well orchestrated, offer unprecedented capabilities for the preservation and analysis of cultural objects and for the decision-making processes related to them. However, the successful integration of these elements requires careful consideration to avoid the pitfalls of the well-known “Three-Body Problem” [64], namely the complexity and potentially chaotic consequences that can arise from the interaction of multiple dynamic systems.

What we illustrate in this paper is only a glimpse into the transformative potential of AI within the digital twin landscape, where these advanced tools reveal new dimensions for enhancing documentation, analysis, and interpretation. We stand at the dawn of a new technological era that promises to reshape our understanding of interaction, intelligence, and the very fabric of knowledge itself. Yet it is inevitable that AI applications will, in time, extend far beyond these initial boundaries, unlocking possibilities across diverse domains, both within and outside the digital twin framework.

In parallel, another significant paradigm shift is already underway. While many of today’s most prominent AI tools are semi-commercial, proprietary products, i.e., powerful yet closed systems that limit adaptability and transparency, we are also witnessing the rise of very promising open-source alternatives, which not only democratize access to advanced AI capabilities but also offer enhanced transparency and customization. As this new era unfolds, it invites us to engage thoughtfully with the possibilities and responsibilities embedded in these evolving tools.

We will continue to explore these technologies as they emerge, experimenting with their potential to shape increasingly sophisticated digital twins. At the same time, we will deepen our use of ontologies to govern and refine the power of these tools, ensuring that AI-driven processes align with structured and meaningful frameworks. We firmly believe that, in a landscape where the complexity of algorithms can render decisions inscrutable, only traceability can transform the opaque nature of AI into a more comprehensible narrative, enhancing accountability and fostering a collaborative dialogue between human intuition and artificial reasoning. We also hold that the use of AI always requires the involvement of human experts, who are called upon to provide guidance, validation, and supervision. Only human oversight can ensure that the decisions made by the AI are grounded in reality, that the data stored in the knowledge graphs are accurate and remain relevant, and that Heritage Digital Twins, today and in the future, remain faithful representations of cultural entities, bridging the gap between machine representation and human understanding.

By framing data within a coherent ontological context, we elevate the AI’s output from mere black-box results to a rich tapestry of interrelated concepts and insights, which is paramount for the implementation of effective Heritage Digital Twins. This transparency fosters deeper trust in the AI’s outcomes, as scholars can discern not only the results but also the underlying logic that informed them. Only in this way can AI become a participant in a shared epistemological journey, where the interplay of logic and data is made visible, paving the way for more informed and responsible decisions. This approach not only increases the reliability of AI outputs but also aligns with the EU’s vision of an AI landscape that prioritizes human values, safeguards against biases, and promotes social well-being; it is a vital step toward ensuring that AI technologies are developed and deployed in a manner that is ethical, responsible, and worthy of public trust.

Author Contributions

All authors have equally contributed to the present paper. All authors have read and agreed to the published version of the manuscript.

Funding

This paper received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created in this study.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- European Commission, Destination Earth. Available online: https://destination-earth.eu (accessed on 21 October 2024).

- Jones, D.; Snider, C.; Nassehi, A.; Yon, J.; Hicks, B. Characterising the Digital Twin: A Systematic Literature Review. CIRP Journal of Manufacturing Science and Technology 2020, 29, 36–52. [CrossRef]

- Semeraro, C.; Lezoche, M.; Panetto, H.; Dassisti, M. Digital Twin Paradigm: A Systematic Literature Review. Computers in Industry 2021, 130, 103469. [CrossRef]

- Schrotter, G.; Hürzeler, C. The Digital Twin of the City of Zurich for Urban Planning. PFG—Journal of Photogrammetry, Remote Sensing and Geoinformation Science 2020, 88, 99–112. [CrossRef]

- Caprari, G.; Castelli, G.; Montuori, M.; Camardelli, M.; Malvezzi, R. Digital Twin for Urban Planning in the Green Deal Era: A State of the Art and Future Perspectives. Sustainability 2022, 14, 6263. [CrossRef]

- Li, L.; Aslam, S.; Wileman, A.; Perinpanayagam, S. Digital Twin in Aerospace Industry: A Gentle Introduction. IEEE Access 2022, 10, 9543–9562. [CrossRef]

- Grieves, M. Digital Twin: Manufacturing Excellence Through Virtual Factory Replication. White paper 2014, 1, 1–7.

- Drechsler, W. The Contrade, the Palio and the Ben Comune: Lessons from Siena. Trames 2006, 10, 99–125.

- Palio di Siena. Available online: https://en.wikipedia.org/wiki/Palio_di_Siena (accessed on 21 November 2024).

- Paradis, T. W. Living the Palio: A Story of Community and Public Life in Siena, Italy; Paradis: Indianapolis, USA, 2019.

- Artusi, L. Calcio Fiorentino. History, art and memoirs of the historical game. From its origins to the present day. Scribo Edizioni: Florence, Italy, 2016.

- Calcio Storico Fiorentino. Available online: https://en.wikipedia.org/wiki/Calcio_storico_fiorentino (accessed on 21 November 2024).

- Niccolucci, F. Technologies, Standards and Business Models for the Formation of Virtual Collections of 3D Replicas of Museum Objects: The 3D-COFORM Project. In 2010 IST-Africa Conference; Durban, South Africa, 2010; pp. 1–8.

- Lovell, L.J.; Davies, R.J.; Hunt, D.V.L. The Application of Historic Building Information Modelling (HBIM) to Cultural Heritage: A Review. Heritage 2023, 6, 6691–6717. [CrossRef]

- Industry Foundation Classes (IFC). Available online: https://www.buildingsmart.org/standards/bsi-standards/industry-foundation-classes/ (accessed on 21 November 2024).

- Niccolucci, F.; Felicetti, A.; Hermon, S. Populating the Data Space for Cultural Heritage with Heritage Digital Twins. Data 2022, 7, 105. [CrossRef]

- Niccolucci, F.; Markhoff, B.; Theodoridou, M.; Felicetti, A.; Hermon, S. The Heritage Digital Twin: A Bicycle Made for Two. The Integration of Digital Methodologies into Cultural Heritage Research. arXiv 2023. [CrossRef]

- Niccolucci, F.; Felicetti, A. Digital Twin Sensors in Cultural Heritage Ontology Applications. Sensors 2024, 24, 3978. [CrossRef]

- Boje, C.; Guerriero, A.; Kubicki, S.; Rezgui, Y. Towards a Semantic Construction Digital Twin: Directions for Future Research. Automation in Construction 2020, 114, 103179. [CrossRef]

- Albers, L.; Große, P.; Wagner, S. Semantic Data-Modeling and Long-Term Interpretability of Cultural Heritage Data—Three Case Studies. In Digital Cultural Heritage; Kremers, H., Ed.; Springer International Publishing: Cham, 2020; pp. 239–253 ISBN 978-3-030-15198-0.

- CIDOC CRM. Available online: https://cidoc-crm.org/ (accessed on 21 November 2024).

- ECHOES—European Cloud for Heritage OpEn Science. Available online: https://www.echoes-eccch.eu (accessed on 21 November 2024).

- The Ariadne Impact; Richards, J.D., Niccolucci, F., Eds.; Archeolingua: Budapest, Hungary, 2019; ISBN 978-615-5766-31-2. [CrossRef]

- International Data Aggregation for Archaeological Research and Heritage Management: The ARIADNE Experience; Aspöck, E., Richards, J., Eds.; Internet Archaeology, Special Issue 64; 2023. Available online: https://intarch.ac.uk/journal/issue64/index.html.

- ARIADNE Research Infrastructure. Available online: https://www.ariadne-research-infrastructure.eu (accessed on 21 November 2024).

- Competence Centre for the Conservation of Cultural Heritage (4CH). Available online: https://www.4ch-project.eu (accessed on 21 November 2024).

- Doerr, M.; Stead, S.; Theodoridou, M. Definition of the CRMdig. An Extension of CIDOC-CRM to Support Provenance Metadata. 2022. Available online: https://cidoc-crm.org/sites/default/files/CRMdigv4.0.pdf (accessed on 21 November 2024).

- Kritsotaki, A.; Hiebel, G.; Theodoridou, M.; Doerr, M.; Rousakis, Y. Definition of the CRMsci. 2017. Available online: https://cidoc-crm.org/crmsci/ModelVersion/version-1.2.3 (accessed on 21 November 2024).

- Bruseker, B.; Doerr, M.; Theodoridou, M. Report on the Common Semantic Framework. PARTHENOS Project, 2017. Available online: https://www.parthenos-project.eu/Download/Deliverables/D5.1_Common_Semantic_Framework_Appendices.pdf (accessed on 21 November 2024).

- Meghini, C.; Bartalesi, V.; Metilli, D. Representing Narratives in Digital Libraries: The Narrative Ontology. Semantic Web 2021, 12, 241–264. Available online: https://www.semantic-web-journal.net/content/representing-narratives-digital-libraries-narrative-ontology (accessed on 21 November 2024).

- The MINGEI Project. Available online: https://www.mingei-project.eu/ (accessed on 21 November 2024).

- Brandt, A.K. Beethoven’s Ninth and AI’s Tenth: A Comparison of Human and Computational Creativity. Journal of Creativity 2023, 33, 100068. [CrossRef]

- Rizk, R.; Rizk, D.; Rizk, F.; Kumar, A. A Hybrid Capsule Network-Based Deep Learning Framework for Deciphering Ancient Scripts with Scarce Annotations: A Case Study on Phoenician Epigraphy. In Proceedings of the 2021 IEEE International Midwest Symposium on Circuits and Systems (MWSCAS); IEEE: Lansing, MI, USA, 9 August 2021; pp. 617–620. [CrossRef]

- Magnani, M.; Clindaniel, J. Artificial Intelligence and Archaeological Illustration. Advances in Archaeological Practice 2023, 11, 452–460. [CrossRef]

- Cobb, P.J. Large Language Models and Generative AI, Oh My!: Archaeology in the Time of ChatGPT, Midjourney, and Beyond. Advances in Archaeological Practice 2023, 11, 363–369. [CrossRef]

- Argyrou, A.; Agapiou, A. A Review of Artificial Intelligence and Remote Sensing for Archaeological Research. Remote Sensing 2022, 14, 6000. [CrossRef]

- Mishra, M.; Barman, T.; Ramana, G.V. Artificial Intelligence-Based Visual Inspection System for Structural Health Monitoring of Cultural Heritage. Journal of Civil Structural Health Monitoring 2024, 14, 103–120. [CrossRef]

- Cao, J.; Zhang, Z.; Zhao, A.; Cui, H.; Zhang, Q. Ancient Mural Restoration Based on a Modified Generative Adversarial Network. Heritage Science 2020, 8, 7. [CrossRef]

- White Paper on Artificial Intelligence: A European Approach to Excellence and Trust; European Commission, Ed.; COM(2020) 65 final; European Commission: Brussels, Belgium, 2020.

- Nikolinakos, N. Th. A European Approach to Excellence and Trust: The 2020 White Paper on Artificial Intelligence. In EU Policy and Legal Framework for Artificial Intelligence, Robotics and Related Technologies—The AI Act; Law, Governance and Technology Series; Springer International Publishing: Cham, 2023; Vol. 53, pp. 211–280 ISBN 978-3-031-27952-2.

- Regulation (EU) 2024/1689 of the European Parliament and of the Council of 13 June 2024 Laying Down Harmonised Rules on Artificial Intelligence and Amending Regulations (Artificial Intelligence Act); European Parliament: Brussels, Belgium, 2024. Available online: http://data.europa.eu/eli/reg/2024/1689/oj.

- Pasikowska-Schnass, M.; Lim, Y.-S. Artificial Intelligence in the Context of Cultural Heritage and Museums: Complex Challenges and New Opportunities. Technical Report PE 747.120; European Parliamentary Research Service: Brussels, Belgium, 2023. Available online: https://www.europarl.europa.eu/thinktank/en/document/EPRS_BRI(2023)747120.

- Münster, S.; Maiwald, F.; Di Lenardo, I.; Henriksson, J.; Isaac, A.; Graf, M.M.; Beck, C.; Oomen, J. Artificial Intelligence for Digital Heritage Innovation: Setting up a R&D Agenda for Europe. Heritage 2024, 7, 794–816. [CrossRef]