Submitted: 04 December 2024

Posted: 05 December 2024


Abstract

Keywords:

1. Introduction

- (1) The paper systematically categorizes 215 research works (2012–present) into ten application areas, offering a holistic perspective on AIoT advancements, challenges, and opportunities in aquaculture. This organization offers a breadth of coverage not found in prior reviews.

- (2) To the best of our knowledge, this work is the most extensive review of AIoT applications in aquaculture. It integrates diverse insights to highlight the transformative potential of these technologies and serves as a foundational resource for multidisciplinary research and innovation.

- (3) The review addresses critical adoption barriers, such as high costs, data privacy concerns, and scalability issues. It also explores future directions, including hybrid AI models, blockchain for secure data management, and edge computing for real-time remote operations.

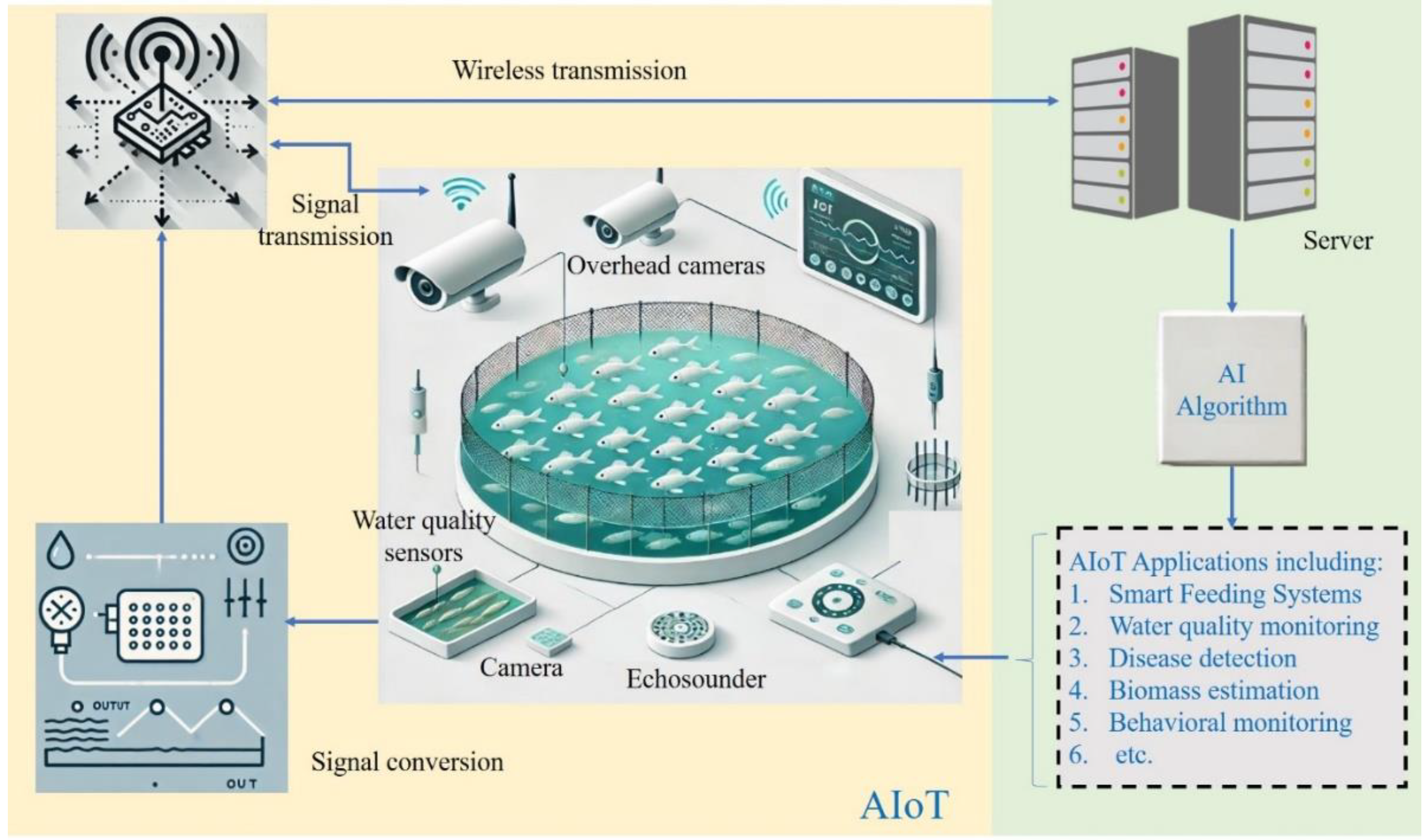

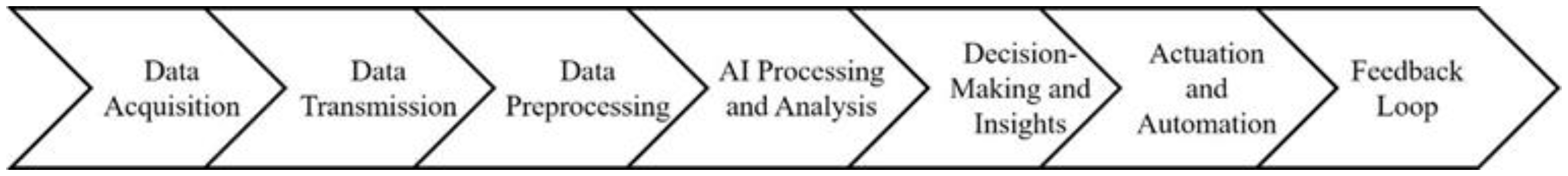

2. AIoT Components in Aquaculture

2.1. IoT Sensors

2.1.1. Water Quality Sensors

2.1.2. Optical Sensors

2.1.3. Motion Sensors

2.1.4. Deployment Strategies

2.2. AI Algorithms

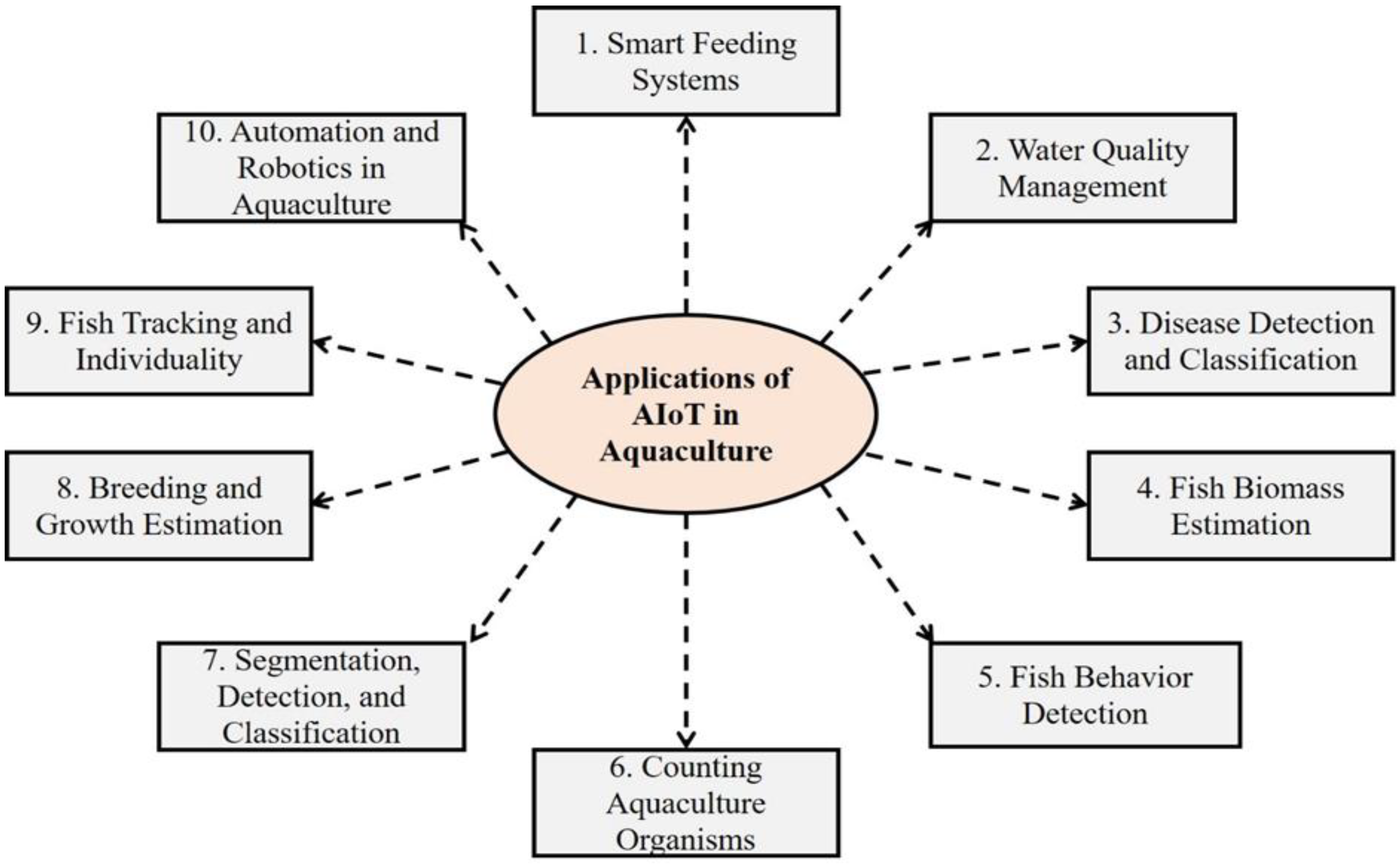

3. AIoT Applications in Aquaculture

3.1. Smart Feeding Systems

3.1.1. Feeding Frequency and Nutrient Requirements

3.1.2. Automated Detection of Feeding Behavior

3.1.3. Acoustic-Based Monitoring and Behavioral Analysis

3.1.4. AI-Enhanced Precision Feeding Systems

3.1.5. Integrating Multimodal Data for Enhanced Monitoring

3.2. Water Quality Management

3.2.1. Water Temperature

3.2.3. Salinity

3.2.4. Dissolved Oxygen (DO)

3.2.5. Turbidity

3.2.6. Chlorophyll

3.2.7. Ammonia

3.3. Disease Detection and Classification

3.3.1. Computer Vision-Based Disease Detection

3.3.2. Water Quality-Linked Disease Prediction

3.3.3. Cross-Modal and Zero-Shot Learning for Disease Identification

3.3.4. Ensemble and Hybrid Models for Disease Classification

3.3.5. Adaptive Neural Fuzzy Systems

3.3.6. Mobile and IoT-Enabled Disease Monitoring Systems

3.3.7. Biosensors for Pathogen Detection

3.4. Fish Biomass Estimation

3.4.1. Computer Vision and Deep Learning for Biomass Estimation

3.4.2. Smart Scales for Real-Time Biomass Monitoring

3.4.3. Sonar and Acoustic Methods for Biomass Estimation

3.4.4. Structure from Motion (SfM) and 3D Modeling for Biomass Estimation

3.4.5. Acoustic Signal Processing for Non-Invasive Biomass Estimation

3.5. Fish Behavior Detection

3.5.1. Abnormal Behavior Detection

3.5.2. Swimming Speed and Locomotion Analysis

3.5.3. Disease Detection Through Behavior Analysis

3.5.4. Shoaling and Social Behavior Modeling

3.5.5. Acoustic and Echogram-Based Behavior Detection

3.6. Counting Aquaculture Organisms

3.6.1. Shrimp Larvae and Seed Counting

3.6.2. Fish Counting in Controlled and Natural Environments

3.6.3. Echosounder and Acoustic Counting Methods

3.6.4. Counting Holothurians (Sea Cucumbers)

3.6.5. Automated Scale Counting and Phenotypic Feature Detection

3.7. Segmentation, Detection, and Classification of Aquaculture Species

3.7.1. Fish Species Classification

3.7.2. Underwater Species Detection in Complex Environments

3.7.3. Segmentation in Underwater and SAR Images

3.7.4. Detection and Classification of Aquaculture Structures

3.7.5. Image Processing for Fish Quality Assessment

3.8. Breeding and Growth Estimation

3.8.1. Automated Breeding and Spawning Detection

3.8.2. Fish Population Estimation Using Echosounders and Digital Twins

3.8.3. Growth Estimation

3.8.4. Environmental Monitoring for Growth and Health

3.9. Fish Tracking and Individuality

3.9.1. Individual Fish Identification

3.9.2. Multi-Fish Tracking in Controlled and Unconstrained Environments

3.9.3. Activity Segmentation and Tracking in Sonar and Echogram Data

3.9.4. Fish Group Activity and Behavior Recognition

3.9.5. Tracking Fish Around Marine Structures and Moving Cameras

3.9.6. Individuality and Behavior Tracking for Aquaculture Monitoring

3.10. Automation and Robotics in Aquaculture

3.10.1. Remote Sensing and Monitoring Systems

3.10.2. Robotic and Autonomous Systems for Underwater Monitoring

3.10.3. UAV and AUV-Based Aquaculture Inspections

3.10.4. Hybrid and Smart Monitoring Systems

3.10.5. Advanced Imaging and Sensor Technologies

3.10.6. Digital Twin and Predictive Modeling

3.10.7. Biologically Inspired Robotics

3.10.9. Specialized Aquaculture Applications

4. Advantages of AIoT in Aquaculture

5. Challenges and Limitations of AIoT in Aquaculture

5.1. High Initial Costs and Infrastructure Requirements

5.2. Environmental Variability and Model Adaptability

5.3. Data Privacy and Security Concerns

5.4. Scalability Challenges

5.5. Complexity and Technical Expertise Requirements

5.6. Limitations in Model Generalization Across Species and Applications

5.7. Environmental and Ecological Impact

6. Future Directions of AIoT in Aquaculture

6.1. Real-Time Adaptability and Decision-Making

6.2. Species-Specific and Environmental Adaptability

6.3. Scalability and Cost-Effectiveness for Broader Adoption

6.4. Integrated Multimodal Systems for Comprehensive Monitoring

6.5. Enhanced Disease Detection and Prevention Through Real-Time Biosensing

6.6. Advanced Automation and Robotics for Efficient Operations

6.7. Sustainable Energy Solutions for Remote Aquaculture

6.8. Digital Twins and Predictive Analytics for Proactive Management

7. Conclusion and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- The State of World Fisheries and Aquaculture 2022; Food and Agriculture Organization of the United Nations (FAO): Rome, Italy, 2022.

- Huang, Y.-P.; Khabusi, S.P. A CNN-OSELM Multi-Layer Fusion Network With Attention Mechanism for Fish Disease Recognition in Aquaculture. IEEE Access 2023, 11, 58729–58744.

- Araujo, G.S.; da Silva, J.W.A.; Cotas, J.; Pereira, L. Fish Farming Techniques: Current Situation and Trends. J. Mar. Sci. Eng. 2022, 10, 1598.

- Sun, M.; Yang, X.; Xie, Y. Deep learning in aquaculture: A review. Journal of Computers 2020, 31, 294–319.

- Yue, G.H.; Tay, Y.X.; Wong, J.; Shen, Y.; Xia, J. Aquaculture species diversification in China. Aquac. Fish. 2023, 9, 206–217.

- Liu, D.; Xu, B.; Cheng, Y.; Chen, H.; Dou, Y.; Bi, H.; Zhao, Y. Shrimpseed_Net: Counting of Shrimp Seed Using Deep Learning on Smartphones for Aquaculture. IEEE Access 2023, 11, 85441–85450.

- Vembarasi, K.; Thotakura, V.P.; Senthilkumar, S.; Ramachandran, L.; Praba, V.L.; Vetriselvi, S.; Chinnadurai, M. White Spot Syndrome Detection in Shrimp using Neural Network Model. In Proceedings of the 2024 11th International Conference on Computing for Sustainable Global Development (INDIACom), India; pp. 212–217.

- Shen, Y.; Arablouei, R.; de Hoog, F.; Xing, H.; Malan, J.; Sharp, J.; Shouri, S.; Clark, T.D.; Lefevre, C.; Kroon, F.; et al. In-Situ Fish Heart-Rate Estimation and Feeding Event Detection Using an Implantable Biologger. IEEE Trans. Mob. Comput. 2021, 22, 968–982.

- Tricas, T.; Boyle, K. Acoustic behaviors in Hawaiian coral reef fish communities. Mar. Ecol. Prog. Ser. 2014, 511, 1–16.

- Kim, H.; Koo, J.; Kim, D.; Jung, S.; Shin, J.-U.; Lee, S.; Myung, H. Image-Based Monitoring of Jellyfish Using Deep Learning Architecture. IEEE Sensors J. 2016, 16, 2215–2216.

- Weihong, B.; Yun, J.; Jiaxin, L.; Lingling, S.; Guangwei, F.; Wa, J. In-Situ Detection Method of Jellyfish Based on Improved Faster R-CNN and FP16. IEEE Access 2023, 11, 81803–81814.

- Dunker, S.; Boho, D.; Wäldchen, J.; Mäder, P. Combining high-throughput imaging flow cytometry and deep learning for efficient species and life-cycle stage identification of phytoplankton. BMC Ecol. 2018, 18, 1–15.

- Collos, Y.; Harrison, P.J. Acclimation and toxicity of high ammonium concentrations to unicellular algae. Mar. Pollut. Bull. 2014, 80, 8–23.

- Pedraza, A.; Bueno, G.; Deniz, O.; Cristóbal, G.; Blanco, S.; Borrego-Ramos, M. Automated Diatom Classification (Part B): A Deep Learning Approach. Appl. Sci. 2017, 7, 460.

- Ubina, N.A.; Lan, H.-Y.; Cheng, S.-C.; Chang, C.-C.; Lin, S.-S.; Zhang, K.-X.; Lu, H.-Y.; Cheng, C.-Y.; Hsieh, Y.-Z. Digital twin-based intelligent fish farming with Artificial Intelligence Internet of Things (AIoT). Smart Agric. Technol. 2023, 5.

- Singh, M.; Sahoo, K.S.; Nayyar, A. Sustainable IoT Solution for Freshwater Aquaculture Management. IEEE Sensors J. 2022, 22, 16563–16572.

- Petkovski, A.; Ajdari, J.; Zenuni, X. IoT-based solutions in aquaculture: A systematic literature review. In Proceedings of the 44th International Convention on Information, Communication and Electronic Technology (MIPRO), Opatija, Croatia, September 2021; pp. 1358–1363.

- Hu, W.-C.; Chen, L.-B.; Wang, B.-H.; Li, G.-W.; Huang, X.-R. An AIoT-based water quality inspection system for intelligent aquaculture. In Proceedings of the IEEE 11th Global Conference on Consumer Electronics (GCCE), Osaka, Japan, October 2022; pp. 551–552.

- Hu, W.-C.; Chen, L.-B.; Wang, B.-H.; Li, G.-W.; Huang, X.-R. Design and Implementation of a Full-Time Artificial Intelligence of Things-Based Water Quality Inspection and Prediction System for Intelligent Aquaculture. IEEE Sensors J. 2023, 24, 3811–3821.

- Rastegari, H.; Nadi, F.; Lam, S.S.; Ikhwanuddin, M.; Kasan, N.A.; Rahmat, R.F.; Mahari, W.A.W. Internet of Things in aquaculture: A review of the challenges and potential solutions based on current and future trends. Smart Agric. Technol. 2023, 4.

- B B. D., T. Bodaragama, E. H. A. D. M. Miyurangana, Y. T. W. S. L. Jayakod, D. M. H. D. Vipulasiri, U. U. S. Rajapaksha, and J. Krishara, “IoT-Based Solution for Fish Disease Detection and Controlling a Fish Tank Through a Mobile Application,” in Proc. of IEEE 9th Int. Conf. for Convergence in Technology (I2CT), Pune, India, pp.1-6, Apr. 2024.

- Li, T.; Rong, S.; Chen, L.; Zhou, H.; He, B. Underwater Motion Deblurring Based on Cascaded Attention Mechanism. IEEE J. Ocean. Eng. 2022, 49, 262–278.

- Hu, W.-C.; Chen, L.-B.; Huang, B.-K.; Lin, H.-M. A Computer Vision-Based Intelligent Fish Feeding System Using Deep Learning Techniques for Aquaculture. IEEE Sensors J. 2022, 22, 7185–7194.

- Nagothu, S.K.; Sri, P.B.; Anitha, G.; Vincent, S.; Kumar, O.P. Advancing aquaculture: fuzzy logic-based water quality monitoring and maintenance system for precision aquaculture. Aquac. Int. 2024, 33.

- Adegboye, M.A.; Aibinu, A.M.; Kolo, J.G.; Aliyu, I.; Folorunso, T.A.; Lee, S.-H. Incorporating Intelligence in Fish Feeding System for Dispensing Feed Based on Fish Feeding Intensity. IEEE Access 2020, 8, 91948–91960.

- Haq, K.P.R.A.; Harigovindan, V.P. Water Quality Prediction for Smart Aquaculture Using Hybrid Deep Learning Models. IEEE Access 2022, 10, 60078–60098.

- Khan, P.W.; Byun, Y.C. Optimized Dissolved Oxygen Prediction Using Genetic Algorithm and Bagging Ensemble Learning for Smart Fish Farm. IEEE Sensors J. 2023, 23, 15153–15164.

- Liu, J.; Zhang, T.; Han, G.; Gou, Y. TD-LSTM: Temporal Dependence-Based LSTM Networks for Marine Temperature Prediction. Sensors 2018, 18, 3797.

- Khabusi, S.P.; Huang, Y.-P.; Lee, M.-F.; Tsai, M.-C. Enhanced U-Net and PSO-Optimized ANFIS for Classifying Fish Diseases in Underwater Images. Int. J. Fuzzy Syst. 2024, 1–18.

- Kumaar, A.S.; Vignesh, A.V.; Deepak, K. FishNet Freshwater Fish Disease Detection using Deep Learning Techniques. In Proceedings of the 2024 2nd International Conference on Advancement in Computation & Computer Technologies (InCACCT), India; pp. 368–373.

- Pham, T.-N.; Nguyen, V.-H.; Kwon, K.-R.; Kim, J.-H.; Huh, J.-H. Improved YOLOv5 based Deep Learning System for Jellyfish Detection. IEEE Access 2024, PP, 1–1.

- Zhao, Z.; Liu, Y.; Sun, X.; Liu, J.; Yang, X.; Zhou, C. Composited FishNet: Fish Detection and Species Recognition From Low-Quality Underwater Videos. IEEE Trans. Image Process. 2021, 30, 4719–4734.

- Anjum, S.S.; M, S.K.; S, N.F.H.; N, A.M. Ensemble Neural Network Based Fish Species Identification for Emerging Aquaculture Application. In Proceedings of the 2023 International Conference on Recent Advances in Science and Engineering Technology (ICRASET), India; pp. 1–6.

- Rasmussen, C.; Zhao, J.; Ferraro, D.; Trembanis, A. Deep Census: AUV-Based Scallop Population Monitoring. In Proceedings of the 2017 IEEE International Conference on Computer Vision Workshops (ICCVW), Venice, Italy, 22–29 October 2017; pp. 2865–2873.

- Qiu, C.; Wu, Z.; Wang, J.; Tan, M.; Yu, J. Multiagent-Reinforcement-Learning-Based Stable Path Tracking Control for a Bionic Robotic Fish With Reaction Wheel. IEEE Trans. Ind. Electron. 2023, 70, 12670–12679.

- Persson, D.; Nødtvedt, A.; Aunsmo, A.; Stormoen, M. Analysing mortality patterns in salmon farming using daily cage registrations. J. Fish Dis. 2021, 45, 335–347.

- Tavares-Dias, M.; Martins, M.L. An overall estimation of losses caused by diseases in the Brazilian fish farms. J. Parasit. Dis. 2017, 41, 913–918.

- Muhammed, D.; Ahvar, E.; Ahvar, S.; Trocan, M.; Montpetit, M.-J.; Ehsani, R. Artificial intelligence of things (AIoT) for smart agriculture: A review of architectures, technologies and solutions. J. Netw. Comput. Appl. 2024, 228, 1–27.

- Nguyen, T.; Nguyen, H.; Nguyen Gia, T. Exploring the integration of edge computing and blockchain IoT: Principles, architectures, security, and applications. J. Netw. Comput. Appl. 2024, 226, 1–24.

- Pasika, S.; Gandla, S.T. Smart water quality monitoring system with cost-effective using IoT. 6, e04096.

- Liu, S.; Yin, B.; Sang, G.; Lv, Y.; Wang, M.; Xiao, S.; Yan, R.; Wu, S. Underwater Temperature and Salinity Fiber Sensor Based on Semi-Open Cavity Structure of Asymmetric MZI. IEEE Sensors J. 2023, 23, 18219–18233.

- Parra, L.; Lloret, G.; Lloret, J.; Rodilla, M. Physical Sensors for Precision Aquaculture: A Review. IEEE Sensors J. 2018, 18, 3915–3923.

- Akindele, A.A.; Sartaj, M. The toxicity effects of ammonia on anaerobic digestion of organic fraction of municipal solid waste. Waste Manag. 2018, 71, 757–766.

- Matos, T.; Pinto, V.; Sousa, P.; Martins, M.; Fernández, E.; Henriques, R.; Gonçalves, L.M. Design and In Situ Validation of Low-Cost and Easy to Apply Anti-Biofouling Techniques for Oceanographic Continuous Monitoring with Optical Instruments. Sensors 2023, 23, 605.

- Chiang, C.-T.; Chen, T.-Y.; Wu, Y.-T. Design of a Water Salinity Difference Detector for Monitoring Instantaneous Salinity Changes in Aquaculture. IEEE Sensors J. 2020, 20, 3242–3248.

- Chiang, C.-T.; Chang, C.-W. Design of a Calibrated Salinity Sensor Transducer for Monitoring Salinity of Ocean Environment and Aquaculture. IEEE Sensors J. 2015, 15, 5151–5157.

- Chen, F.; Qiu, T.; Xu, J.; Zhang, J.; Du, Y.; Duan, Y.; Zeng, Y.; Zhou, L.; Sun, J.; Sun, M. Rapid Real-Time Prediction Techniques for Ammonia and Nitrite in High-Density Shrimp Farming in Recirculating Aquaculture Systems. Fishes 2024, 9, 386.

- Wang, Y.; Xu, D.; Li, X.; Wang, W. Prediction Model of Ammonia Nitrogen Concentration in Aquaculture Based on Improved AdaBoost and LSTM. Mathematics 2024, 12, 627.

- Delgado, A.; Briciu-Burghina, C.; Regan, F. Antifouling Strategies for Sensors Used in Water Monitoring: Review and Future Perspectives. Sensors 2021, 21, 389.

- Wang, J.-H.; Lee, S.-K.; Lai, Y.-C.; Lin, C.-C.; Wang, T.-Y.; Lin, Y.-R.; Hsu, T.-H.; Huang, C.-W.; Chiang, C.-P. Anomalous Behaviors Detection for Underwater Fish Using AI Techniques. IEEE Access 2020, 8, 224372–224382.

- Chen, P.; Wang, F.; Liu, S.; Yu, Y.; Yue, S.; Song, Y.; Lin, Y. Modeling Collective Behavior for Fish School With Deep Q-Networks. IEEE Access 2023, 11, 36630–36641.

- Rosell-Moll, E.; Piazzon, M.; Sosa, J.; Ferrer, M.; Cabruja, E.; Vega, A.; Calduch-Giner, J.; Sitjà-Bobadilla, A.; Lozano, M.; Montiel-Nelson, J.; et al. Use of accelerometer technology for individual tracking of activity patterns, metabolic rates and welfare in farmed gilthead sea bream (Sparus aurata) facing a wide range of stressors. Aquaculture 2021, 539, 736609.

- Chai, Y.; Yu, H.; Xu, L.; Li, D.; Chen, Y. Deep Learning Algorithms for Sonar Imagery Analysis and Its Application in Aquaculture: A Review. IEEE Sensors J. 2023, 23, 28549–28563.

- Huang, M.; Zhou, Y.-G.; Yang, X.-G.; Gao, Q.-F.; Chen, Y.-N.; Ren, Y.-C.; Dong, S.-L. Optimizing feeding frequencies in fish: A meta-analysis and machine learning approach. Aquaculture 2024, 595.

- Li, H.; Chatzifotis, S.; Lian, G.; Duan, Y.; Li, D.; Chen, T. Mechanistic model based optimization of feeding practices in aquaculture. Aquac. Eng. 2022, 97.

- Cao, Y.; Liu, S.; Wang, M.; Liu, W.; Liu, T.; Cao, L.; Guo, J.; Feng, D.; Zhang, H.; Hassan, S.G.; et al. A Hybrid Method for Identifying the Feeding Behavior of Tilapia. IEEE Access 2023, 12, 76022–76037.

- Wei, M.; Lin, Y.; Chen, K.; Su, W.; Cheng, E. Study on Feeding Activity of Litopenaeus Vannamei Based on Passive Acoustic Detection. IEEE Access 2020, 8, 156654–156662.

- Catarino, M.M.R.S.; Gomes, M.R.S.; Ferreira, S.M.F.; Gonçalves, S.C. Optimization of feeding quantity and frequency to rear the cyprinid fish Garra rufa (Heckel, 1843). Aquac. Res. 2019, 50, 876–881.

- Atoum, Y.; Srivastava, S.; Liu, X. Automatic Feeding Control for Dense Aquaculture Fish Tanks. IEEE Signal Process. Lett. 2014, 22, 1089–1093.

- Zhou, C.; Xu, D.; Chen, L.; Zhang, S.; Sun, C.; Yang, X.; Wang, Y. Evaluation of fish feeding intensity in aquaculture using a convolutional neural network and machine vision. 507.

- Noda, T.; Kawabata, Y.; Arai, N.; Mitamura, H.; Watanabe, S. Monitoring escape and feeding behaviors of cruiser fish by inertial and magnetic sensors. PLoS One 2013, 8, 1–13.

- Zeng, Y.; Yang, X.; Pan, L.; Zhu, W.; Wang, D.; Zhao, Z.; Liu, J.; Sun, C.; Zhou, C. Fish school feeding behavior quantification using acoustic signal and improved Swin Transformer. Comput. Electron. Agric. 2022, 204.

- Adegboye, M.A.; Aibinu, A.M.; Kolo, J.G.; Folorunso, T.A.; Aliyu, I.; Ho, L.S. Intelligent fish feeding regime system using vibration analysis. World Journal of Wireless Devices and Engineering 2019, 3, 1–8.

- Huang, Y.-P.; Vadloori, S. Optimizing Fish Feeding with FFAUNet Segmentation and Adaptive Fuzzy Inference System. Processes 2024, 12, 1580.

- Chen, H.; Nan, X.; Xia, S. Data Fusion Based on Temperature Monitoring of Aquaculture Ponds With Wireless Sensor Networks. IEEE Sensors J. 2022, 23, 6–20.

- Adhikary, A.; Roy, J.; Kumar, A.G.; Banerjee, S.; Biswas, K. An Impedimetric Cu-Polymer Sensor-Based Conductivity Meter for Precision Agriculture and Aquaculture Applications. IEEE Sensors J. 2019, 19, 12087–12095.

- Liu, W.; Liu, S.; Hassan, S.G.; Cao, Y.; Xu, L.; Feng, D.; Cao, L.; Chen, W.; Chen, Y.; Guo, J.; et al. A Novel Hybrid Model to Predict Dissolved Oxygen for Efficient Water Quality in Intensive Aquaculture. IEEE Access 2023, 11, 29162–29174.

- Li, D.; Wang, X.; Sun, J.; Feng, Y. Radial Basis Function Neural Network Model for Dissolved Oxygen Concentration Prediction Based on an Enhanced Clustering Algorithm and Adam. IEEE Access 2021, 9, 44521–44533.

- Li, D.; Sun, J.; Yang, H.; Wang, X. An Enhanced Naive Bayes Model for Dissolved Oxygen Forecasting in Shellfish Aquaculture. IEEE Access 2020, 8, 217917–217927.

- C. Yingyi; C. Qianqian; F. Xiaomin; Y. Huihui; Li Daoliang. Principal component analysis and long short-term memory neural network for predicting dissolved oxygen in water for aquaculture. Trans. Chin. Soc. Agric. Eng. 2018, 34, 183–191.

- Li, Z.; Peng, F.; Niu, B.; Li, G.; Wu, J.; Miao, Z. Water Quality Prediction Model Combining Sparse Auto-encoder and LSTM Network. IFAC-PapersOnLine 2018, 51, 831–836.

- Da Silva, Y.F.; Freire, R.C.S.; Neto, J.V.D.F. Conception and Design of WSN Sensor Nodes based on Machine Learning, Embedded Systems and IoT approaches for Pollutant Detection in Aquatic Environments. IEEE Access 2023, PP, 1–1.

- Liu, S.; Xu, L.; Li, Q.; Zhao, X.; Li, D. Fault Diagnosis of Water Quality Monitoring Devices Based on Multiclass Support Vector Machines and Rule-Based Decision Trees. IEEE Access 2018, 6, 22184–22195.

- Park, Y.; Cho, K.H.; Park, J.; Cha, S.M.; Kim, J.H. Development of early-warning protocol for predicting chlorophyll-a concentration using machine learning models in freshwater and estuarine reservoirs, Korea. Sci. Total. Environ. 2015, 502, 31–41.

- Lee, G.; Bae, J.; Lee, S.; Jang, M.; Park, H. Monthly chlorophyll-a prediction using neuro-genetic algorithm for water quality management in Lakes. Desalination Water Treat. 2016, 57, 26783–26791.

- Gambin, A.F.; Angelats, E.; Gonzalez, J.S.; Miozzo, M.; Dini, P. Sustainable Marine Ecosystems: Deep Learning for Water Quality Assessment and Forecasting. IEEE Access 2021, 9, 121344–121365.

- Cho, H.; Choi, U.J.; Park, H. Deep Learning Application to Time Series Prediction of Daily Chlorophyll-a Concentration. WIT Trans. Ecol. Environ. 2018, 215, 157–163.

- Lee, S.; Lee, D. Four major South Korea’s rivers using deep learning models. Int. J. Environ. Res. Public Health 2018, 15, 1–15.

- John, E.M.; Krishnapriya, K.; Sankar, T. Treatment of ammonia and nitrite in aquaculture wastewater by an assembled bacterial consortium. 526, 735390.

- Yu, H.; Yang, L.; Li, D.; Chen, Y. A hybrid intelligent soft computing method for ammonia nitrogen prediction in aquaculture. Inf. Process. Agric. 2020, 8, 64–74.

- Karri, R.R.; Sahu, J.N.; Chimmiri, V. Critical review of abatement of ammonia from wastewater. 261, 31.

- Nagaraju, T.V.; B. M., S.; Chaudhary, B.; Prasad, C.D.; R, G. Prediction of ammonia contaminants in the aquaculture ponds using soft computing coupled with wavelet analysis. Environ. Pollut. 2023, 331, 121924.

- Vaswani, A.; Shazeer, N.; Parmar, N.; et al. Attention is all you need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, December 2017; pp. 1–11.

- Ahmed, M.S.; Aurpa, T.T.; Azad, M.A.K. Fish disease detection using image based machine learning technique in aquaculture. J. King Saud Univ. Comput. Inf. Sci. 2022, 34, 5170–5182.

- Mia, M.J.; Mahmud, R.B.; Sadad, M.S.; Asad, H.A.; Hossain, R. An in-depth automated approach for fish disease recognition. J. King Saud Univ. Comput. Inf. Sci. 2022, 34, 7174–7183.

- Khabusi, S.P.; Huang, Y.-P.; Lee, M.-F. Attention-based mechanism for fish disease classification in aquaculture. In Proceedings of the International Conference on System Science and Engineering (ICSSE), Ho Chi Minh, Vietnam, July 2023; pp. 95–100.

- Waleed, A.; Medhat, H.; Esmail, M.; Osama, K.; Samy, R.; Ghanim, T.M. Automatic Recognition of Fish Diseases in Fish Farms. In Proceedings of the 2019 14th International Conference on Computer Engineering and Systems (ICCES), Egypt; pp. 201–206.

- Sujatha, K.; Mounika, P. Evaluation of ML Models for Detection and Prediction of Fish Diseases: A Case Study on Epizootic Ulcerative Syndrome. In Proceedings of the 2023 Second International Conference on Electrical, Electronics, Information and Communication Technologies (ICEEICT), India; pp. 1–7.

- Malik, S.; Kumar, T.; Sahoo, A.K. Fish disease detection using HOG and FAST feature descriptor. International Journal of Computer Science and Information Security (IJCSIS) 2017, 15, 216–221.

- Nayan, A.-A.; Saha, J.; Mozumder, A.N.; Mahmud, K.R.; Al Azad, A.K.; Kibria, M.G. A Machine Learning Approach for Early Detection of Fish Diseases by Analyzing Water Quality. Trends Sci. 2021, 18, 351–351.

- Moni, J.; Jacob, P.M.; Sudeesh, S.; Nair, M.J.; George, M.S.; Thomas, M.S. A Smart Aquaculture Monitoring System with Automated Fish Disease Identification. In Proceedings of the 2024 1st International Conference on Trends in Engineering Systems and Technologies (ICTEST), India; pp. 01–06.

- Mendieta, M.; Romero, D. A cross-modal transfer approach for histological images: A case study in aquaculture for disease identification using zero-shot learning. In Proceedings of the 2017 IEEE Second Ecuador Technical Chapters Meeting (ETCM); pp. 1–6.

- Vijayalakshmi, M.; Sasithradevi, A.; Prakash, P. Transfer Learning Approach for Epizootic Ulcerative Syndrome and Ichthyophthirius Disease Classification in Fish Species. In Proceedings of the 2023 International Conference on Bio Signals, Images, and Instrumentation (ICBSII), India; pp. 1–5.

- Al Maruf, A.; Fahim, S.H.; Bashar, R.; Rumy, R.A.; Chowdhury, S.I.; Aung, Z. Classification of Freshwater Fish Diseases in Bangladesh Using a Novel Ensemble Deep Learning Model: Enhancing Accuracy and Interpretability. IEEE Access 2024, 12, 96411–96435.

- Vasumathi, A.; Singh, R.P.; Bharathi, E.S.; Vignesh, N.; Harsith, S. Fish disease detection using machine learning. In Proceedings of the International Conference on Science Technology Engineering and Management (ICSTEM), Coimbatore, India, April 2024; pp. 1–4.

- Gu, J.; Deng, C.; Lin, X.; Yu, D. Expert system for fish disease diagnosis based on fuzzy neural network. In Proceedings of the 2012 Third International Conference on Intelligent Control and Information Processing (ICICIP), China; pp. 146–149.

- Darapaneni, N.; Sreekanth, S.; Paduri, A.R.; Roche, A.S.; Murugappan, V.; Singha, K.K.; Shenwai, A.V. AI Based Farm Fish Disease Detection System to Help Micro and Small Fish Farmers. In Proceedings of the 2022 Interdisciplinary Research in Technology and Management (IRTM), India; pp. 1–5.

- Prządka, M.P.; Wojcieszak, D.; Pala, K. Optimization of Au Electrode Parameters for Pathogen Detection in Aquaculture. IEEE Sensors J. 2024, 24, 5785–5796.

- Zhang, T.; Yang, Y.; Liu, Y.; Liu, C.; Zhao, R.; Li, D.; Shi, C. Fully automatic system for fish biomass estimation based on deep neural network. Ecol. Informatics 2023, 79.

- Gao, Z.; Jiang, W.; Man, X.; Zheng, R.; Ma, X. A Method for Estimating Fish Biomass Based on Underwater Binocular Vision. In Proceedings of the 2024 36th Chinese Control and Decision Conference (CCDC), China; pp. 2656–2660.

- S, S.; E, S.; Nandini, T.S.; T, S. Fish Biomass Estimation Based on Object Detection Using YOLOv7. In Proceedings of the 2023 4th International Conference for Emerging Technology (INCET), India; pp. 1–6.

- Rossi, L.; Bibbiani, C.; Fronte, B.; Damiano, E.; Di Lieto, A. Application of a smart dynamic scale for measuring live-fish biomass in aquaculture. In Proceedings of the 2021 IEEE International Workshop on Metrology for Agriculture and Forestry (MetroAgriFor), Italy; pp. 248–252.

- Rossi, L.; Bibbiani, C.; Fronte, B.; Damiano, E.; Di Lieto, A. Validation campaign of a smart dynamic scale for measuring live-fish biomass in aquaculture. In Proceedings of the 2022 IEEE International Workshop on Metrology for Agriculture and Forestry (MetroAgriFor), Italy; pp. 111–115.

- Damiano, E.; Bibbiani, C.; Fronte, B.; Di Lieto, A. Smart and cheap scale for estimating live-fish biomass in offshore aquaculture. In Proceedings of the 2020 IEEE International Workshop on Metrology for Agriculture and Forestry (MetroAgriFor), Italy; pp. 160–164.

- Pargi, M.K.; Bagheri, E.; F, R.S.; Huat, K.E.; Shishehchian, F.; Nathalie, N. Improving Aquaculture Systems using AI: Employing predictive models for Biomass Estimation on Sonar Images. In Proceedings of the 2022 21st IEEE International Conference on Machine Learning and Applications (ICMLA), Bahamas; pp. 1629–1636.

- Sthapit, P.; Kim, M.; Kang, D.; Kim, K. Development of Scientific Fishery Biomass Estimator: System Design and Prototyping. Sensors 2020, 20, 6095.

- Vasile, G.; Petrut, T.; D'Urso, G.; De Oliveira, E. IoT Acoustic Antenna Development for Fish Biomass Long-Term Monitoring. In Proceedings of the OCEANS 2018 MTS/IEEE Charleston; pp. 1–4.

- Tang, N.T.; Lim, K.G.; Yoong, H.P.; Ching, F.F.; Wang, T.; Teo, K.T.K. Non-Intrusive Biomass Estimation in Aquaculture Using Structure from Motion Within Decision Support Systems. In Proceedings of the 2024 IEEE International Conference on Artificial Intelligence in Engineering and Technology (IICAIET), Malaysia; pp. 682–686.

- Hossain, S.A.; Hossen, M. Biomass Estimation of a Popular Aquarium Fish Using an Acoustic Signal Processing Technique with Three Acoustic Sensors. In Proceedings of the 2018 International Conference on Advancement in Electrical and Electronic Engineering (ICAEEE), Bangladesh; pp. 1–4.

- Hu, W.-C.; Chen, L.-B.; Lin, H.-M. A Method for Abnormal Behavior Recognition in Aquaculture Fields Using Deep Learning. IEEE Can. J. Electr. Comput. Eng. 2024, 47, 118–126.

- Chen, L.; Yin, X. Recognition Method of Abnormal Behavior of Marine Fish Swarm Based on In-Depth Learning Network Model. J. Web Eng. 2021, 20, 575–596.

- Zhao, J.; Bao, W.; Zhang, F.; et al. Modified motion influence map and recurrent neural network-based monitoring of the local unusual behaviors for fish school in intensive aquaculture. Aquaculture 2018, 493, 165–175.

- Hassan, W.; Fore, M.; Pedersen, M.O.; Alfredsen, J.A. A New Method for Measuring Free-Ranging Fish Swimming Speed in Commercial Marine Farms Using Doppler Principle. IEEE Sensors J. 2020, 20, 10220–10227.

- Le Quinio, A.; Martignac, F.; Girard, A.; Guillard, J.; Roussel, J.-M.; de Oliveira, E. Fish as a Deformable Solid: An Innovative Method to Characterize Fish Swimming Behavior on Acoustic Videos. IEEE Access 2024, 12, 134486–134497.

- Osterloff, J.; Nilssen, I.; Jarnegren, J.; Buhl-Mortensen, P.; Nattkemper, T.W. Polyp Activity Estimation and Monitoring for Cold Water Corals with a Deep Learning Approach. 2016 ICPR 2nd Workshop on Computer Vision for Analysis of Underwater Imagery (CVAUI). LOCATION OF CONFERENCE, MexicoDATE OF CONFERENCE; pp. 1–6.

- Måløy, H. EchoBERT: A Transformer-Based Approach for Behavior Detection in Echograms. IEEE Access 2020, 8, 218372–218385. [Google Scholar] [CrossRef]

- Hu, W.-C.; Chen, L.-B.; Hsieh, M.-H.; Ting, Y.-K. A Deep-Learning-Based Fast Counting Methodology Using Density Estimation for Counting Shrimp Larvae. IEEE Sensors J. 2022, 23, 527–535. [Google Scholar] [CrossRef]

- Pai, K.M.; Shenoy, K.B.A.; Pai, M.M.M. A Computer Vision Based Behavioral Study and Fish Counting in a Controlled Environment. IEEE Access 2022, 10, 87778–87786. [Google Scholar] [CrossRef]

- Sthapit, P.; Teekaraman, Y.; MinSeok, K.; Kim, K. Algorithm to Estimation Fish Population using Echosounder in Fish Farming Net. 2019 International Conference on Information and Communication Technology Convergence (ICTC). LOCATION OF CONFERENCE, KoreaDATE OF CONFERENCE; pp. 587–590.

- Yu, H.; Wang, Z.; Qin, H.; Chen, Y. An Automatic Detection and Counting Method for Fish Lateral Line Scales of Underwater Fish Based on Improved YOLOv5. IEEE Access 2023, 11, 143616–143627. [Google Scholar] [CrossRef]

- Zhou, Y.; Yu, H.; Wu, J.; Cui, Z.; Pang, H.; Zhang, F. Fish density estimation with multi-scale context enhanced convolutional neural network. Journal of Communications and Information Networks 2019, 4, 80–89.

- Chen, H.; Cheng, Y.; Dou, Y.; et al. Fry counting method in high-density culture based on image enhancement algorithm and attention mechanism. IEEE Access 2024, 12, 41734–41749.

- Zhang, X.; Zeng, H.; Liu, X.; Yu, Z.; Zheng, H.; Zheng, B. In Situ Holothurian Noncontact Counting System: A General Framework for Holothurian Counting. IEEE Access 2020, 8, 210041–210053.

- Kim, K.; Myung, H. Autoencoder-Combined Generative Adversarial Networks for Synthetic Image Data Generation and Detection of Jellyfish Swarm. IEEE Access 2018, 6, 54207–54214.

- Desai, N.P.; Baluch, M.F.; Makrariya, A.; MusheerAziz, R. Image processing model with deep learning approach for fish species classification. Turkish Journal of Computer and Mathematics Education 2022, 13, 85–99.

- Zhang, X.; Huang, B.; Chen, G.; Radenkovic, M.; Hou, G. WildFishNet: Open Set Wild Fish Recognition Deep Neural Network With Fusion Activation Pattern. IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens. 2023, 16, 7303–7314.

- Chuang, M.-C.; Hwang, J.-N.; Williams, K. A Feature Learning and Object Recognition Framework for Underwater Fish Images. IEEE Trans. Image Process. 2016, 25, 1–1.

- Slonimer, A.L.; Dosso, S.E.; Albu, A.B.; Cote, M.; Marques, T.P.; Rezvanifar, A.; Ersahin, K.; Mudge, T.; Gauthier, S. Classification of Herring, Salmon, and Bubbles in Multifrequency Echograms Using U-Net Neural Networks. IEEE J. Ocean. Eng. 2023, 48, 1236–1254.

- Paraschiv, M.; Padrino, R.; Casari, P.; Bigal, E.; Scheinin, A.; Tchernov, D.; Anta, A.F. Classification of Underwater Fish Images and Videos via Very Small Convolutional Neural Networks. J. Mar. Sci. Eng. 2022, 10, 736.

- Tejaswini, H.; Pai, M.M.M.; Pai, R.M. Automatic Estuarine Fish Species Classification System Based on Deep Learning Techniques. IEEE Access 2024, 12, 140412–140438.

- Liu, Z. Soft-shell Shrimp Recognition Based on an Improved AlexNet for Quality Evaluations. J. Food Eng. 2019, 266, 109698.

- Yu, X.; Tang, L.; Wu, X.; Lu, H. Nondestructive Freshness Discriminating of Shrimp Using Visible/Near-Infrared Hyperspectral Imaging Technique and Deep Learning Algorithm. Food Anal. Methods 2017, 11, 768–780.

- Xuan, Q.; Fang, B.; Liu, Y.; Wang, J.; Zhang, J.; Zheng, Y.; Bao, G. Automatic Pearl Classification Machine Based on a Multistream Convolutional Neural Network. IEEE Trans. Ind. Electron. 2017, 65, 6538–6547.

- Elawady, M.E. Sparse coral classification using deep convolutional neural network. Thesis, University of Burgundy, June 2014; pp. 1–51.

- Riabchenko, E.; Meissner, K.; Ahmad, I.; Iosifidis, A.; Tirronen, V.; Gabbouj, M.; Kiranyaz, S. Learned vs. engineered features for fine-grained classification of aquatic macroinvertebrates. In Proceedings of the 2016 23rd International Conference on Pattern Recognition (ICPR), Mexico; pp. 2276–2281.

- Wu, F.; Cai, Z.; Fan, S.; Song, R.; Wang, L.; Cai, W. Fish Target Detection in Underwater Blurred Scenes Based on Improved YOLOv5. IEEE Access 2023, 11, 122911–122925.

- Qin, X.; Yu, C.; Liu, B.; Zhang, Z. YOLO8-FASG: A High-Accuracy Fish Identification Method for Underwater Robotic System. IEEE Access 2024, PP, 1–1.

- Fan, J.; Zhou, J.; Wang, X.; Wang, J. A Self-Supervised Transformer With Feature Fusion for SAR Image Semantic Segmentation in Marine Aquaculture Monitoring. IEEE Trans. Geosci. Remote. Sens. 2023, 61, 1–15.

- Wang, J.; Fan, J.; Wang, J. MDOAU-Net: A lightweight and robust deep learning model for SAR image segmentation in aquaculture raft monitoring. IEEE Geosci. Remote. Sens. Lett. 2022, 19, 1–5.

- Yu, C.; Liu, Y.; Xia, X.; Lan, D.; Liu, X.; Wu, S. Precise and Fast Segmentation of Offshore Farms in High-Resolution SAR Images Based on Model Fusion and Half-Precision Parallel Inference. IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens. 2022, 15, 4861–4872.

- Wang, X.; Zhou, J.; Fan, J. IDUDL: Incremental Double Unsupervised Deep Learning Model for Marine Aquaculture SAR Images Segmentation. IEEE Trans. Geosci. Remote. Sens. 2022, 60, 1–12.

- Sánchez, J.S.; Lisani, J.-L.; Catalán, I.A.; Álvarez-Ellacuría, A. Leveraging Bounding Box Annotations for Fish Segmentation in Underwater Images. IEEE Access 2023, 11, 125984–125994.

- Qin, G.; Wang, S.; Wang, F.; Zhou, Y.; Wang, Z.; Zou, W. U_EFF_NET: High-Precision Segmentation of Offshore Farms From High-Resolution SAR Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens. 2022, 15, 8519–8528.

- Fan, J.; Zhao, J.; An, W.; Hu, Y. Marine Floating Raft Aquaculture Detection of GF-3 PolSAR Images Based on Collective Multikernel Fuzzy Clustering. IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens. 2019, 12, 2741–2754.

- Fan, J.; Deng, Q. RSC-APMN: Random Sea Condition Adaptive Perception Modulating Network for SAR-Derived Marine Aquaculture Segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens. 2024, PP, 1–17.

- Issac, A.; Dutta, M.K.; Sarkar, B.; Burget, R. An efficient image processing based method for gills segmentation from a digital fish image. In Proceedings of the 2016 3rd International Conference on Signal Processing and Integrated Networks (SPIN), India; pp. 645–649.

- Ulucan, O.; Karakaya, D.; Turkan, M. A large-scale dataset for fish segmentation and classification. In Proceedings of the Innovations in Intelligent Systems and Applications Conference (ASYU), Istanbul, Turkey, October 2020; pp. 1–5.

- Hsieh, Y.-Z.; Meng, Y.-H. A Video Surveillance System for Determining the Sexual Maturity of Cobia. IEEE Trans. Consum. Electron. 2023, 70, 484–495.

- Ahmed, H.; Ushirobira, R.; Efimov, D.; Tran, D.; Sow, M.; Payton, L.; Massabuau, J.-C. A Fault Detection Method for Automatic Detection of Spawning in Oysters. IEEE Trans. Control. Syst. Technol. 2015, 24, 1140–1147.

- Sthapit, P.; Teekaraman, Y.; MinSeok, K.; Kim, K. Algorithm to Estimation Fish Population using Echosounder in Fish Farming Net. In Proceedings of the 2019 International Conference on Information and Communication Technology Convergence (ICTC), Korea; pp. 587–590.

- Le, N.-B.; Woo, H.; Lee, D.; Huh, J.-H. AgTech: A Survey on Digital Twins based Aquaculture Systems. IEEE Access 2024, PP, 1–1.

- Hsieh, Y.-Z.; Lee, P.-Y. Analysis of Oplegnathus Punctatus Body Parameters Using Underwater Stereo Vision. IEEE Trans. Emerg. Top. Comput. Intell. 2023, 8, 879–891.

- Sun, N.; Li, Z.; Luan, Y.; Du, L. Enhanced YOLO-Based Multi-Task Network for Accurate Fish Body Length Measurement. In Proceedings of the 2024 5th International Conference on Artificial Intelligence and Electromechanical Automation (AIEA), China; pp. 334–339.

- Chen, X.; N’Doye, I.; Aljehani, F.; Laleg-Kirati, T.-M. Fish weight prediction using empirical and data-driven models in aquaculture systems. In Proceedings of the IEEE Conference on Control Technology and Applications (CCTA), Newcastle upon Tyne, United Kingdom, August 2024; pp. 369–374.

- Voskakis, D.; Makris, A.; Papandroulakis, N. Deep learning based fish length estimation. An application for the Mediterranean aquaculture. In Proceedings of the OCEANS 2021: San Diego – Porto, United States.

- Pérez, D.; Ferrero, F.J.; Alvarez, I.; Valledor, M.; Campo, J.C. Automatic measurement of fish size using stereo vision. In Proceedings of the IEEE International Instrumentation and Measurement Technology Conference (I2MTC), Houston, TX, USA, May 2018; pp. 1–6.

- Gorpincenko, A.; French, G.; Knight, P.; Challiss, M.; Mackiewicz, M. Improving Automated Sonar Video Analysis to Notify About Jellyfish Blooms. IEEE Sensors J. 2020, 21, 4981–4988.

- Schraml, R.; Hofbauer, H.; Jalilian, E.; Bekkozhayeva, D.; Saberioon, M.; Cisar, P.; Uhl, A. Towards Fish Individuality-Based Aquaculture. IEEE Trans. Ind. Informatics 2020, 17, 4356–4366.

- Liu, X.; Yue, Y.; Shi, M.; Qian, Z.-M. 3-D Video Tracking of Multiple Fish in a Water Tank. IEEE Access 2019, 7, 145049–145059.

- Gupta, S.; Mukherjee, P.; Chaudhury, S.; Lall, B.; Sanisetty, H. DFTNet: Deep Fish Tracker With Attention Mechanism in Unconstrained Marine Environments. IEEE Trans. Instrum. Meas. 2021, 70, 1–13.

- Shreesha, S.; Pai, M.M.M.; Verma, U.; Pai, R.M. Fish Tracking and Continual Behavioral Pattern Clustering Using Novel Sillago Sihama Vid (SSVid). IEEE Access 2023, 11, 29400–29416.

- Winkler, J.; Badri-Hoeher, S.; Barkouch, F. Activity Segmentation and Fish Tracking From Sonar Videos by Combining Artifacts Filtering and a Kalman Approach. IEEE Access 2023, 11, 96522–96529.

- Marques, T.P.; Cote, M.; Rezvanifar, A.; Slonimer, A.; Albu, A.B.; Ersahin, K.; Gauthier, S. U-MSAA-Net: A Multiscale Additive Attention-Based Network for Pixel-Level Identification of Finfish and Krill in Echograms. IEEE J. Ocean. Eng. 2023, 48, 853–873.

- Xu, X.; Hu, J.; Yang, J.; Ran, Y.; Tan, Z. A Fish Detection and Tracking Method based on Improved Inter-Frame Difference and YOLO-CTS. IEEE Trans. Instrum. Meas. 2024, PP, 1–1.

- Zhao, Z.; Yang, X.; Liu, J.; Zhou, C.; Zhao, C. GCVC: Graph Convolution Vector Distribution Calibration for Fish Group Activity Recognition. IEEE Trans. Multimedia 2023, 26, 1776–1789.

- Zhao, X.; Yan, S.; Gao, Q. An Algorithm for Tracking Multiple Fish Based on Biological Water Quality Monitoring. IEEE Access 2019, 7, 15018–15026.

- Williamson, B.J.; Fraser, S.; Blondel, P.; Bell, P.S.; Waggitt, J.J.; Scott, B.E. Multisensor Acoustic Tracking of Fish and Seabird Behavior Around Tidal Turbine Structures in Scotland. IEEE J. Ocean. Eng. 2017, 42, 948–965.

- Chuang, M.-C.; Hwang, J.-N.; Ye, J.-H.; Huang, S.-C.; Williams, K. Underwater Fish Tracking for Moving Cameras Based on Deformable Multiple Kernels. IEEE Trans. Syst. Man, Cybern. Syst. 2016, 47, 1–11.

- Zhang, Y.; Ning, Y.; Zhang, X.; Glamuzina, B.; Xing, S. Multi-Sensors-Based Physiological Stress Monitoring and Online Survival Prediction System for Live Fish Waterless Transportation. IEEE Access 2020, 8, 40955–40965.

- Le, N.-B.; Huh, J.-H. AgTech: Building Smart Aquaculture Assistant System Integrated IoT and Big Data Analysis. IEEE Trans. AgriFood Electron. 2024, 2, 471–482.

- Xu, Y.; Lu, L. An Attention-Fused Deep Learning Model for Accurately Monitoring Cage and Raft Aquaculture at Large-Scale Using Sentinel-2 Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens. 2024, 17, 9099–9109.

- Liu, J.; Lu, Y.; Guo, X.; Ke, W. A Deep Learning Method for Offshore Raft Aquaculture Extraction Based on Medium-Resolution Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens. 2023, 16, 6296–6309.

- Soltanzadeh, R.; Hardy, B.; Mcleod, R.D.; Friesen, M.R. A Prototype System for Real-Time Monitoring of Arctic Char in Indoor Aquaculture Operations: Possibilities & Challenges. IEEE Access 2019, 8, 180815–180824.

- Ai, B.; Xiao, H.; Xu, H.; Yuan, F.; Ling, M. Coastal Aquaculture Area Extraction Based on Self-Attention Mechanism and Auxiliary Loss. IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens. 2022, 16, 2250–2261.

- Kim, T.; Hwang, K.-S.; Oh, M.-H.; Jang, D.-J. Development of an Autonomous Submersible Fish Cage System. IEEE J. Ocean. Eng. 2013, 39, 702–712.

- Abdurohman, M.; Putrada, A.G.; Deris, M.M. A Robust Internet of Things-Based Aquarium Control System Using Decision Tree Regression Algorithm. IEEE Access 2022, 10, 56937–56951.

- Xia, J.; Ma, T.; Li, Y.; Xu, S.; Qi, H. A Scale-Aware Monocular Odometry for Fishnet Inspection With Both Repeated and Weak Features. IEEE Trans. Instrum. Meas. 2023, 73, 1–11.

- Nguyen, N.T.; Matsuhashi, R. An Optimal Design on Sustainable Energy Systems for Shrimp Farms. IEEE Access 2019, 7, 165543–165558.

- Luna, F.D.V.B.; Aguilar, E.d.l.R.; Naranjo, J.S.; Jaguey, J.G. Robotic System for Automation of Water Quality Monitoring and Feeding in Aquaculture Shadehouse. IEEE Trans. Syst. Man, Cybern. Syst. 2016, 47, 1575–1589.

- Kim, J.; Song, S.; Kim, T.; Song, Y.-W.; Kim, S.-K.; Lee, B.-I.; Ryu, Y.; Yu, S.-C. Collaborative Vision-Based Precision Monitoring of Tiny Eel Larvae in a Water Tank. IEEE Access 2021, 9, 100801–100813.

- Yang, M.-D.; Huang, K.-S.; Wan, J.; Tsai, H.P.; Lin, L.-M. Timely and quantitative damage assessment of oyster racks using UAV images. IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens. 2018, 11, 2862–2868.

- Gaya, J.O.; Goncalves, L.T.; Duarte, A.C.; Zanchetta, B.; Drews, P.; Botelho, S.S.C. Vision-based obstacle avoidance using deep learning. In Proceedings of the XIII Latin American Robotics Symposium and IV Brazilian Robotics Symposium (LARS/SBR), Recife, Brazil, October 2016; pp. 7–12.

- Tun, T.T.; Huang, L.; Preece, M.A. Development and High-Fidelity Simulation of Trajectory Tracking Control Schemes of a UUV for Fish Net-Pen Visual Inspection in Offshore Aquaculture. IEEE Access 2023, 11, 135764–135787.

- Xanthidis, M.; Skaldebø, M.; Haugaløkken, B.; Evjemo, L.; Alexis, K.; Kelasidi, E. ResiVis: A Holistic Underwater Motion Planning Approach for Robust Active Perception Under Uncertainties. IEEE Robot. Autom. Lett. 2024, 9, 9391–9398.

- Ouyang, B.; Wills, P.S.; Tang, Y.; Hallstrom, J.O.; Su, T.-C.; Namuduri, K.; Mukherjee, S.; Rodriguez-Labra, J.I.; Li, Y.; Ouden, C.J.D. Initial Development of the Hybrid Aerial Underwater Robotic System (HAUCS): Internet of Things (IoT) for Aquaculture Farms. IEEE Internet Things J. 2021, 8, 14013–14027.

- Lin, F.-S.; Yang, P.-W.; Tai, S.-K.; Wu, C.-H.; Lin, J.-L.; Huang, C.-H. A Machine-Learning-Based Ultrasonic System for Monitoring White Shrimps. IEEE Sensors J. 2023, 23, 23846–23855.

- Xu, L.; Yu, H.; Qin, H.; et al. Digital twin for aquaponics factory: Analysis, opportunities, and research challenges. IEEE Trans. Ind. Informatics 2024, 20, 5060–5073.

- Misimi, E.; Øye, E.R.; Sture, Ø.; Mathiassen, J.R. Robust classification approach for segmentation of blood defects in cod fillets based on deep convolutional neural networks and support vector machines and calculation of gripper vectors for robotic processing. Comput. Electron. Agric. 2017, 139, 138–152.

- Chen, W.; Li, X. Deep-learning-based marine aquaculture zone extractions from dual-polarimetric SAR imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens. 2024, PP, 1–16.

- Lu, Y.; Zhao, Y.; Yang, M.; Zhao, Y.; Huang, L.; Cui, B. BI²Net: Graph-Based Boundary–Interior Interaction Network for Raft Aquaculture Area Extraction From Remote Sensing Images. IEEE Geosci. Remote. Sens. Lett. 2024, 21, 1–5.

- Zhang, X.; Ma, S.; Su, C.; Shang, Y.; Wang, T.; Yin, J. Coastal Oyster Aquaculture Area Extraction and Nutrient Loading Estimation Using a GF-2 Satellite Image. IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens. 2020, 13, 4934–4946.

- Zhang, Y.; Yamamoto, M.; Suzuki, G.; Shioya, H. Collaborative Forecasting and Analysis of Fish Catch in Hokkaido From Multiple Scales by Using Neural Network and ARIMA Model. IEEE Access 2022, 10, 7823–7833.

- Kristmundsson, J.; Patursson, Ø.; Potter, J.; Xin, Q. Fish Monitoring in Aquaculture Using Multibeam Echosounders and Machine Learning. IEEE Access 2023, 11, 108306–108316.

- Cheng, W.K.; Khor, J.C.; Liew, W.Z.; Bea, K.T.; Chen, Y.L. Integration of Federated Learning and Edge-Cloud Platform for Precision Aquaculture. IEEE Access 2024, PP, 1–1.

- Abid, M.A.; Amjad, M.; Munir, K.; Siddique, H.U.R.; Jurcut, A.D. IoT-based smart Biofloc monitoring system for fish farming using machine learning. IEEE Access 2024, 12, 86333–86345.

- Han, Y.; Huang, J.; Ling, F.; et al. Dynamic mapping of inland freshwater aquaculture areas in Jianghan plain, China. IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens. 2023, 16, 4349–4361.

- Singh, S.; Ahmad, S.; Amrr, S.M.; Khan, S.A.; Islam, N.; Gari, A.A.; Algethami, A.A. Modeling and Control Design for an Autonomous Underwater Vehicle Based on Atlantic Salmon Fish. IEEE Access 2022, 10, 97586–97599.

- Dyrstad, J.S.; Mathiassen, J.R. Grasping virtual fish: A step towards robotic deep learning from demonstration in virtual reality. In Proceedings of the 2017 IEEE International Conference on Robotics and Biomimetics (ROBIO); pp. 1181–1187.

- Zhong, Y.; Chen, Y.; Wang, C.; Wang, Q.; Yang, J. Research on Target Tracking for Robotic Fish Based on Low-Cost Scarce Sensing Information Fusion. IEEE Robot. Autom. Lett. 2022, 7, 6044–6051.

- Milich, M.; Drimer, N. Design and Analysis of an Innovative Concept for Submerging Open-Sea Aquaculture System. IEEE J. Ocean. Eng. 2018, 44, 707–718.

- Yang, R.-Y.; Tang, H.-J.; Huang, C.-C. Numerical Modeling of the Mooring System Failure of an Aquaculture Net Cage System Under Waves and Currents. IEEE J. Ocean. Eng. 2020, 45, 1396–1410.

- Liu, X.; Zhu, H.; Song, W.; Wang, J.; Yan, L.; Wang, K. Research on Improved VGG-16 Model Based on Transfer Learning for Acoustic Image Recognition of Underwater Search and Rescue Targets. IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens. 2024, 17, 18112–18128.

- Kuang, L.; Shi, P.; Hua, C.; Chen, B.; Zhu, H. An Enhanced Extreme Learning Machine for Dissolved Oxygen Prediction in Wireless Sensor Networks. IEEE Access 2020, 8, 198730–198739.

- Singh, M.; Sahoo, K.S.; Gandomi, A.H. An intelligent-IoT-based data analytics for freshwater recirculating aquaculture system. IEEE Internet Things J. 2024, 11, 4206–4217.

- Cao, S.; Zhou, L.; Zhang, Z. Corrections to “Prediction of Dissolved Oxygen Content in Aquaculture Based on Clustering and Improved ELM”. IEEE Access 2021, 9, 135508–135512.

- Mendieta, M.; Romero, D. A cross-modal transfer approach for histological images: A case study in aquaculture for disease identification using Zero-Shot learning. In Proceedings of the IEEE Second Ecuador Technical Chapters Meeting (ETCM), Salinas, Ecuador, October 2017; pp. 1–6.

- Waleed, A.; Medhat, H.; Esmail, M.; Osama, K.; Samy, R.; Ghanim, T.M. Automatic Recognition of Fish Diseases in Fish Farms. In Proceedings of the 2019 14th International Conference on Computer Engineering and Systems (ICCES), Egypt; pp. 201–206.

- Wambura, S.; Li, H. Deep and Confident Image Analysis for Disease Detection. In Proceedings of the VSIP '20: 2020 2nd International Conference on Video, Signal and Image Processing, Indonesia; pp. 91–99.

- Shephard, S.; Reid, D.G.; Gerritsen, H.D.; Farnsworth, K.D. Estimating biomass, fishing mortality, and “total allowable discards” for surveyed non-target fish. ICES J. Mar. Sci. 2014, 72, 458–466.

- Han, F.; Zhu, J.; Liu, B.; Zhang, B.; Xie, F. Fish Shoals Behavior Detection Based on Convolutional Neural Network and Spatiotemporal Information. IEEE Access 2020, 8, 126907–126926.

- Chicchon, M.; Bedon, H.; Del-Blanco, C.R.; Sipiran, I. Semantic Segmentation of Fish and Underwater Environments Using Deep Convolutional Neural Networks and Learned Active Contours. IEEE Access 2023, 11, 33652–33665.

- Pravin, S.C.; Rohith, G.; Kiruthika, V.; Manikandan, E.; Methelesh, S.; Manoj, A. Underwater Animal Identification and Classification Using a Hybrid Classical-Quantum Algorithm. IEEE Access 2023, 11, 141902–141914.

- Huang, T.-W.; Hwang, J.-N.; Romain, S.; Wallace, F. Fish Tracking and Segmentation From Stereo Videos on the Wild Sea Surface for Electronic Monitoring of Rail Fishing. IEEE Trans. Circuits Syst. Video Technol. 2019, 29, 3146–3158.

- Ren, W.; Wang, X.; Tian, J.; Tang, Y.; Chan, A.B. Tracking-by-Counting: Using Network Flows on Crowd Density Maps for Tracking Multiple Targets. IEEE Trans. Image Process. 2020, 30, 1439–1452.

- Ubina, N.A.; Lan, H.-Y.; Cheng, S.-C.; Chang, C.-C.; Lin, S.-S.; Zhang, K.-X.; Lu, H.-Y.; Cheng, C.-Y.; Hsieh, Y.-Z. Digital twin-based intelligent fish farming with Artificial Intelligence Internet of Things (AIoT). Smart Agric. Technol. 2023, 5.

| Application | Dataset | AI Algorithms | Studies | Results | Limitations | Future Directions |
|---|---|---|---|---|---|---|
| Smart Feeding Systems | Fish activity data, water quality parameters, and environmental factors from various sources | CNN, ResNet, OSELM, SVM, RNN, Gradient Boosting, Naive Bayes | [8,23,25,54,55,56,57,58,59,60,61,62,63,64,188] | Improved feeding efficiency, reduced feed waste, and enhanced fish growth rates | Specific to controlled environments; limited species diversity | Covering more species, real-time IoT-enabled feeding systems, edge computing |
| Water Quality Management | Water quality metrics (DO, pH, temperature, turbidity), historical data, environmental sensors, and WSNs across various aquaculture and reservoir environments | LSTM, ANN, SVM, CNN, LightGBM, PSO, Gradient Boosting, PCA-LSTM, SAE-LSTM | [13,16,18,26,27,28,40,41,43,45,46,47,48,65,66,67,68,69,70,71,72,73,74,75,76,77,79,80,81,82,176,203,204,205] | High accuracy in water quality prediction; improved sustainability measures | Variable generalizability across aquaculture environments | Real-time monitoring, scaling to larger environments, incorporating additional water quality factors |
| Disease Detection, Classification, and Prevention | Fish images, histological images, water quality data, and environmental factors collected across aquaculture sites | CNN, ResNet, SVM, AlexNet, Random Forest, Transfer Learning, Decision Trees | [2,7,21,29,30,84,85,86,88,89,90,91,92,93,94,95,96,97,98,206,207,208] | High classification accuracy; effective early disease intervention | Few disease types covered; reliance on images captured under controlled conditions | Expanding datasets to more diseases, mobile applications for real-time diagnosis |
| Fish Biomass Estimation | High-resolution images, stereo vision, sonar imaging, and underwater acoustic signals from various fish farming environments | YOLOv5, Mask R-CNN, DL-YOLO, 3D reconstruction, LAR, SfM | [99,100,101,102,103,104,105,106,107,108,109,209] | High biomass estimation accuracy, supporting resource management and sustainable production | Sensitive to environmental variables (e.g., water clarity, lighting) | Real-time monitoring, broader species adaptability, IoT integration for continuous tracking |
| Fish Behavior Detection | Video, echogram, and telemetry data from controlled and natural aquaculture environments | ResNeXt3×1D, LeNet-5, EchoBERT, FR-CNN, deformable models, DQN, CNN, RNN | [50,51,110,111,112,113,114,115,116,210] | Effective identification of abnormal behaviors and collective behavior patterns | Dependent on environmental conditions such as lighting and noise | Real-time monitoring, more behavior categories, edge computing deployment |
| Counting Aquaculture Organisms | Images, videos, and acoustic data for fish, shrimp larvae, fry, and holothurian populations across aquaculture sites | YOLOv2, YOLOv5, Faster R-CNN, Multi-Scale CNN, MCNN, SGDAN, ShrimpCountNet, GAN | [6,34,117,118,119,120,121,122,123,124] | High counting accuracy, fast execution, practical for commercial applications | Reduced accuracy at very high population densities; dependence on specific environmental conditions | Optimizing for diverse aquaculture species, reducing hardware requirements for mobile deployment |
| Segmentation, Detection, and Classification of Aquaculture Species | SAR images, echograms, and underwater images and videos from secondary sources (e.g., GaoFen-3, NOAA, Fish4Knowledge) and primary datasets | YOLOv5, ANN, Mask R-CNN, Cascade R-CNN, SegNet, SVM, EfficientNet, U-Net, CNN, Transformer-based models, SAEs–LR | [10,11,14,31,32,33,125,126,127,128,129,130,131,132,133,134,135,136,137,138,139,140,141,142,143,144,145,146,211,212] | High segmentation and classification accuracy; effective monitoring and species recognition | Limited generalization to other species and varied underwater conditions | Expanding datasets and models to new species and environmental settings, optimizing models for mobile use |
| Breeding and Growth Estimation | Cobia images, oyster valve activity, fish length and weight data, and simulated growth data | YOLOv8, OpenPose, FHRCNN, CNN, LSTM, stereo vision, ResNetv2 | [12,32,33,128,136,138,141,146,147,148,149,150,151,152,153,154,155,156,157] | High accuracy in growth and breeding-stage prediction; non-invasive measurement | Limited adaptability to varying environmental conditions; accuracy affected by underwater factors | Extending to more species, integrating real-time sensors, improving model robustness to environmental change |
| Fish Tracking and Individuality | Atlantic salmon iris images, sonar recordings, Fish4Knowledge, and in situ videos from tidal and aquaculture environments | CNN, Kalman Filter, YOLO-CTS, Deep-SORT, DFTNet, GMM, GCVC, DMK, ARIMA | [158,159,160,161,162,163,164,165,166,167,168,169,213,214] | High tracking and identification accuracy; effective behavior analysis in complex environments | Limited long-term identification stability for iris-based systems; sensitivity to environmental noise | Improving biometric stability, integrating additional sensing (e.g., sonar), refining detection in complex scenes |
| Automation and Robotics | Primary and secondary datasets, including satellite images (Sentinel, GF-1, GF-2), underwater video, sensor data from aquaculture sites, and simulation data for autonomous systems | YOLOv5, Mask R-CNN, LSTM, Digital Twins, RNN, CNN, Transformer-based models, multi-agent RL, image processing, federated learning, genetic optimization, 3DCNN | [10,15,35,66,170,171,172,173,174,175,176,177,178,179,180,181,182,183,184,185,186,187,189,190,191,192,193,194,195,196,197,198,199,200,201,202] | Improved monitoring, management, and operational efficiency through autonomous and robotic systems | Limited robustness in complex or variable environmental conditions; dependence on reliable connectivity and energy supply | Improving environmental adaptability, extending to new species and settings, integrating multi-source data and IoT |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).