INTRODUCTION

The rapid evolution of technology has brought forth unprecedented opportunities and challenges in this digital era. Ethical issues in responsible data research have become a principal concern among these challenges necessitating thoughtful consideration and proactive management (Esmer & Arıbaş, 2022). As data becomes a pivotal asset for organizations and individuals alike, the methodologies and practices encompassing its collection, analysis, and storage must be scrutinized to ensure ethical compliance (Edquist et al., 2022). The importance of ethics in responsible data research has been amplified within the realm of cybersecurity where researchers are tasked with securing sensitive information and protecting the privacy of individuals and organizations. Cybersecurity is not just about defending against malicious attacks but also about maintaining the trust of stakeholders through ethical conduct and moral lapses in cybersecurity can result in ramifications such as breaches of confidentiality, loss of data integrity, and violation of public trust (Macnish & Van der Ham, 2020). This implies providing a comprehensive understanding of the ethical deliberations cybersecurity researchers must navigate to balance security and moral standards in an effective manner. The examination of case studies, regulatory frameworks, and best practices can equip cybersecurity practitioners with the knowledge and tools required to uphold ethical standards in their work alongside underscoring the need for continuous ethical vigilance in a rapidly evolving digital landscape while ensuring that the data protection standards align with the broader societal values of fairness, privacy, and respect for individuals' rights (Miller & Bossomaier, 2024).

The objective of this paper is to delve into the exploration of the ethical dimensions of responsible data research while emphasizing the integration of cybersecurity perspectives such as privacy and confidentiality, informed consent, data integrity & manipulation, and misuse of technology. The convergence of ethics in responsible data research and cybersecurity creates a complex landscape and broad overview of how the researchers must navigate legal, technical, and moral challenges (Pattison, 2020). This also highlights the importance of integrating ethical principles into cybersecurity practices to protect confidentiality and maintain trust in digital systems.

Several information technology organizations including the Association for Computing Machinery (ACM) and the Institute of Electrical and Electronics Engineers (IEEE) have established a code of ethics and list of behavioral requirements for researchers. There are a list of activities that organizations can perform to maintain ethical behaviors of personnel as they conduct responsible data research for meeting business needs. Ethical concerns are commonly experienced cybersecurity researchers during the performance of their job functions with each having potential negative implications and coinciding responsible actions which should be taken to safeguard morale behavior (Raul, 2021). Cybersecurity practitioners are routinely exposed to ethical issues due to their responsibility of securing and protecting the data and livelihood of individuals and organizations in a technological environment which is experiencing unprecedented growth, data proliferation to a variety of devices, significant design complexity, and an ever-expanding threat landscape (Dunn-Cavelty, 2018). In addition to considering risk and expense of controls while designing security solutions, cybersecurity researchers must also consider the ethical consequences of architectural decisions as well as decisions to accept risks. Cybersecurity researchers have responsibilities to protect the organizations including employees, investors, customers, and stakeholders to which they are aligned but also possess social accountability to protect the society itself (Richards et al., 2020).

BACKGROUND

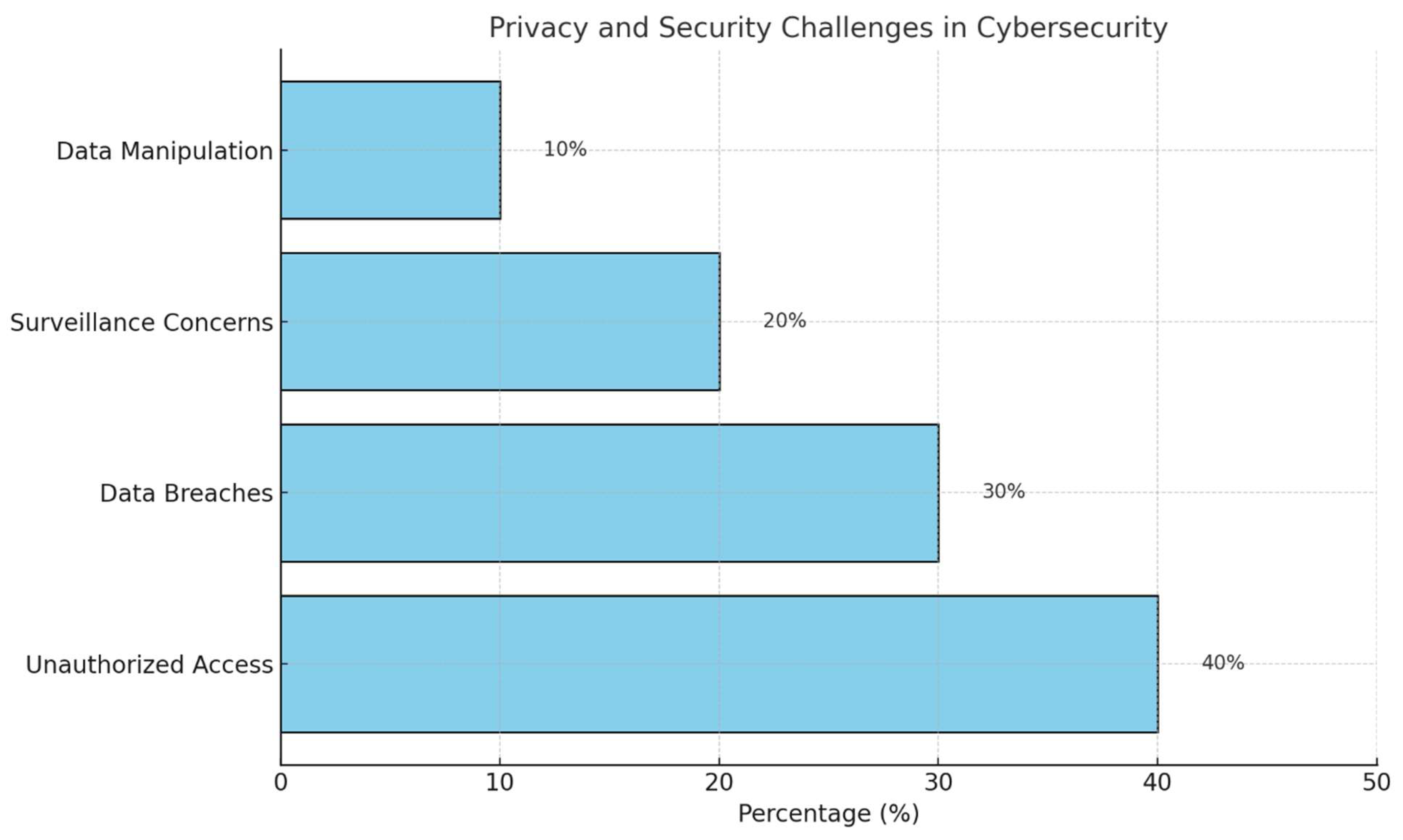

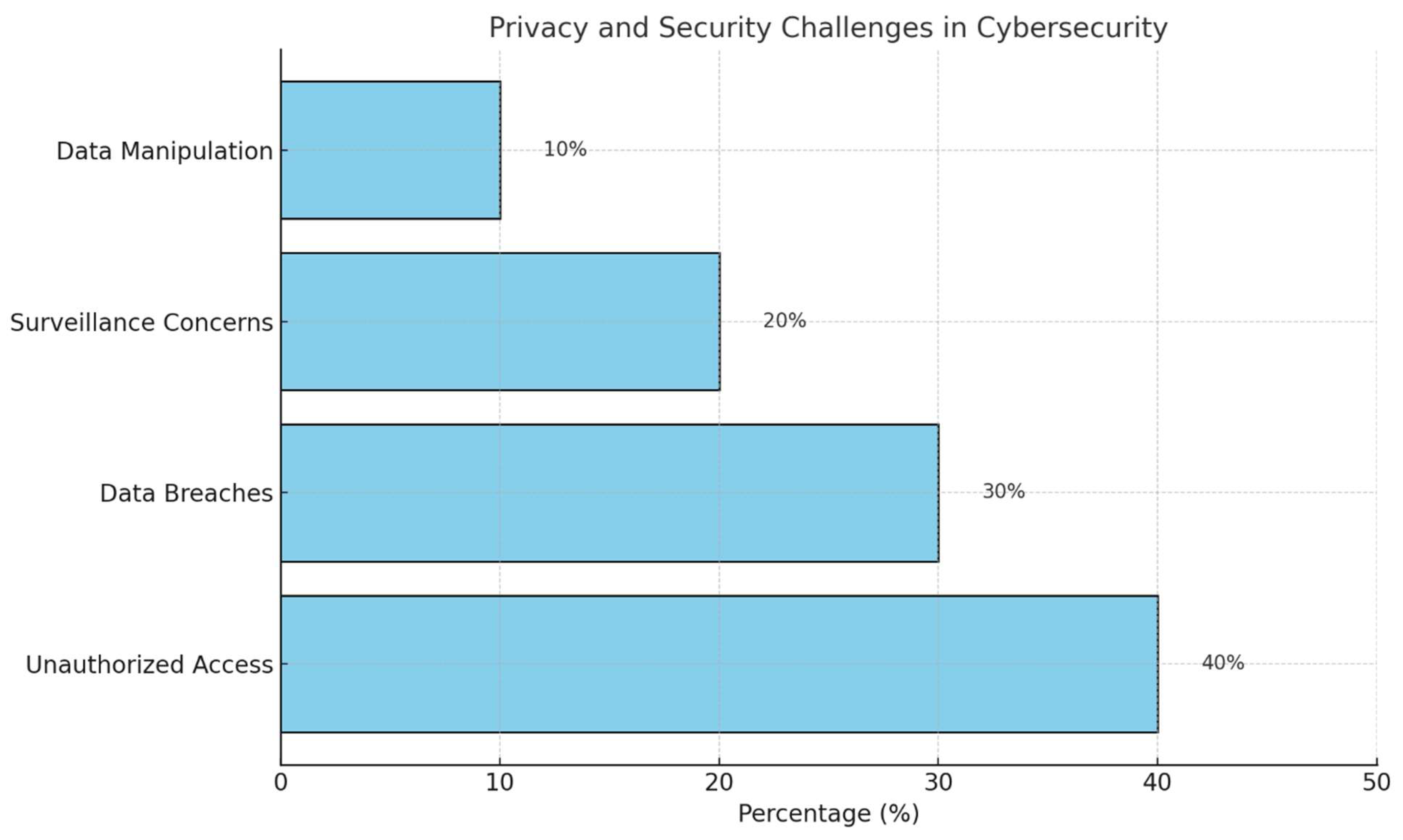

For nearly three decades, the digital age is characterized by significant technological advancements that have transformed various aspects of human life. These advancements have improved the various facets of life including the standard of living, enhanced communication, and facilitated global connectivity ranging from cloud computing and artificial intelligence (Ademola, 2020). The technology has permeated every sector by creating massive amounts of data that drive both innovation and efficiency. Education, Entertainment, Finance, and Healthcare are just a few of these areas that have been revolutionized by digital technologies leading towards creating more personalized and efficient services. As digital devices started becoming more integrated into daily life, they generate and transmit large volumes of data and this data while valuable for improving services and fostering innovation also poses significant privacy and security risks (Aderibigbe, 2021). Some of the critical concerns arising from this extensive amounts of data proliferation can lead to unauthorized access to personal data, data breaches, and information misuse.

This speedy digital transformation has also introduced complex ethical dilemmas specifically in responsible data research in cybersecurity. The proliferation of data across multiple devices combined with the increasing sophistication of cyber threats demands a robust ethical framework to guide best cybersecurity practices (Allahrakha, 2023). Cybercriminals use sophisticated techniques to exploit vulnerabilities in systems resulting in potentially devastating consequences for individuals, businesses, and governments. These threats range from identity theft and financial fraud to large-scale attacks on critical infrastructure. Due to the evolving nature of these threats, cybersecurity measures must constantly adapt to safeguard sensitive information and maintain public trust (Artz, 2008).

A robust ethical framework is critical to provide for an orderly conduct of cybersecurity practices. Ethical guidelines will help ensure that the collection, storage, and data usage are conducted in a manner that respects individuals' privacy rights and conforms with the legal standards (Atapour-Abarghouei et al., 2020). They also provide a groundwork for developing policies and procedures that address the ethical challenges posed by new technologies and cyber threats. Establishing and maintaining such a robust ethical framework requires a multifaceted approach which involves creating policies that promote transparency and accountability in managing information, ensuring that individuals are informed about how their data is being utilized and protected (Bauer et al., 2020). Additionally, it calls for the implementation of security measures that prevent unauthorized access and mitigate the impact of cyber-attacks. Continuous education and training awareness for cybersecurity researchers are also critical as they must stay updated on the latest threats and ethical standards (Bynum, 2001). Ultimately, the goal is to balance the benefits of digital transformation with the need to protect individuals' rights and maintain the integrity of digital systems. Organizations can navigate the complexities of the digital age and build a safer and trustworthy digital environment for all by fostering a culture of ethical responsibility (Chen et al., 2023).

MAIN FOCUS OF THE PAPER

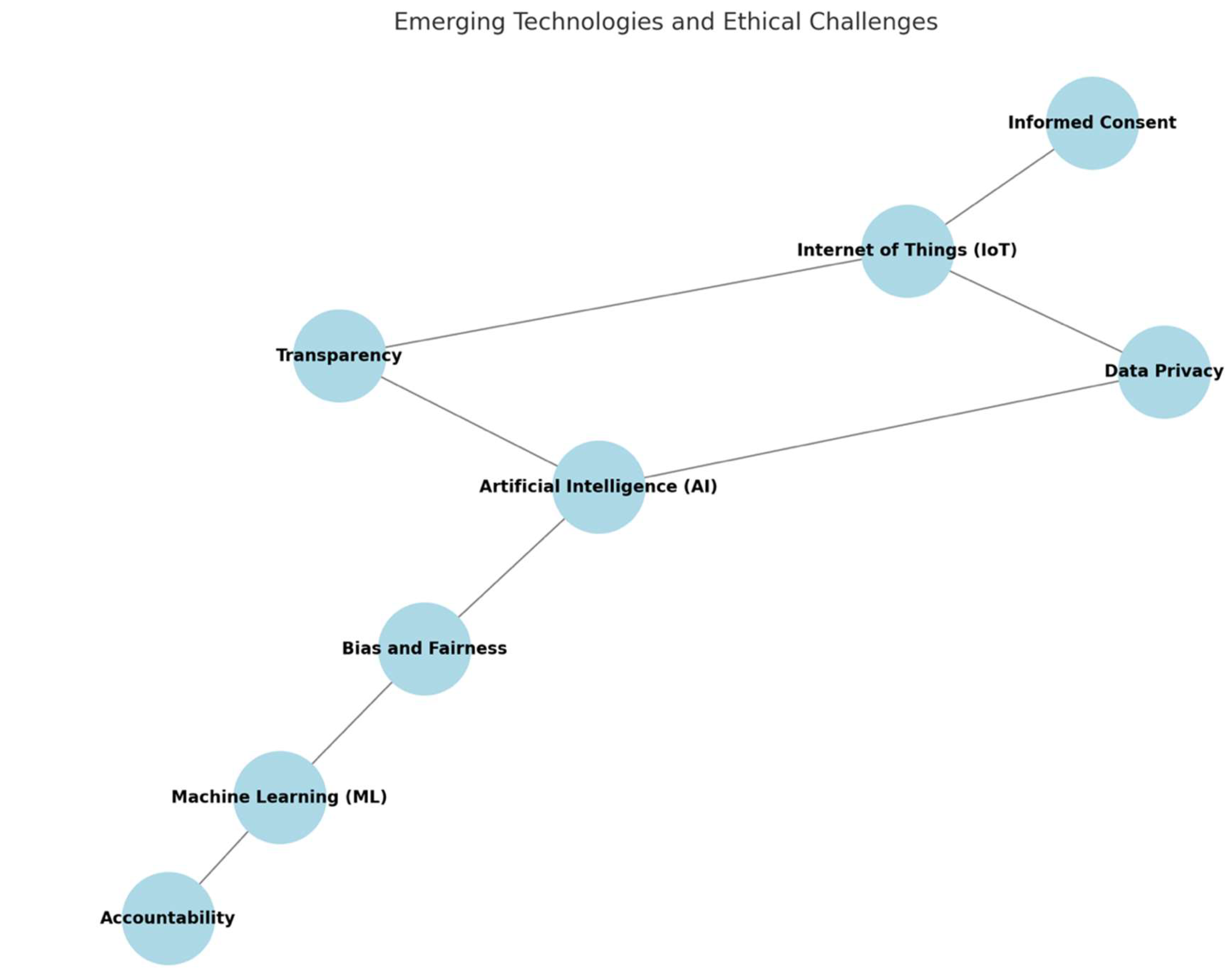

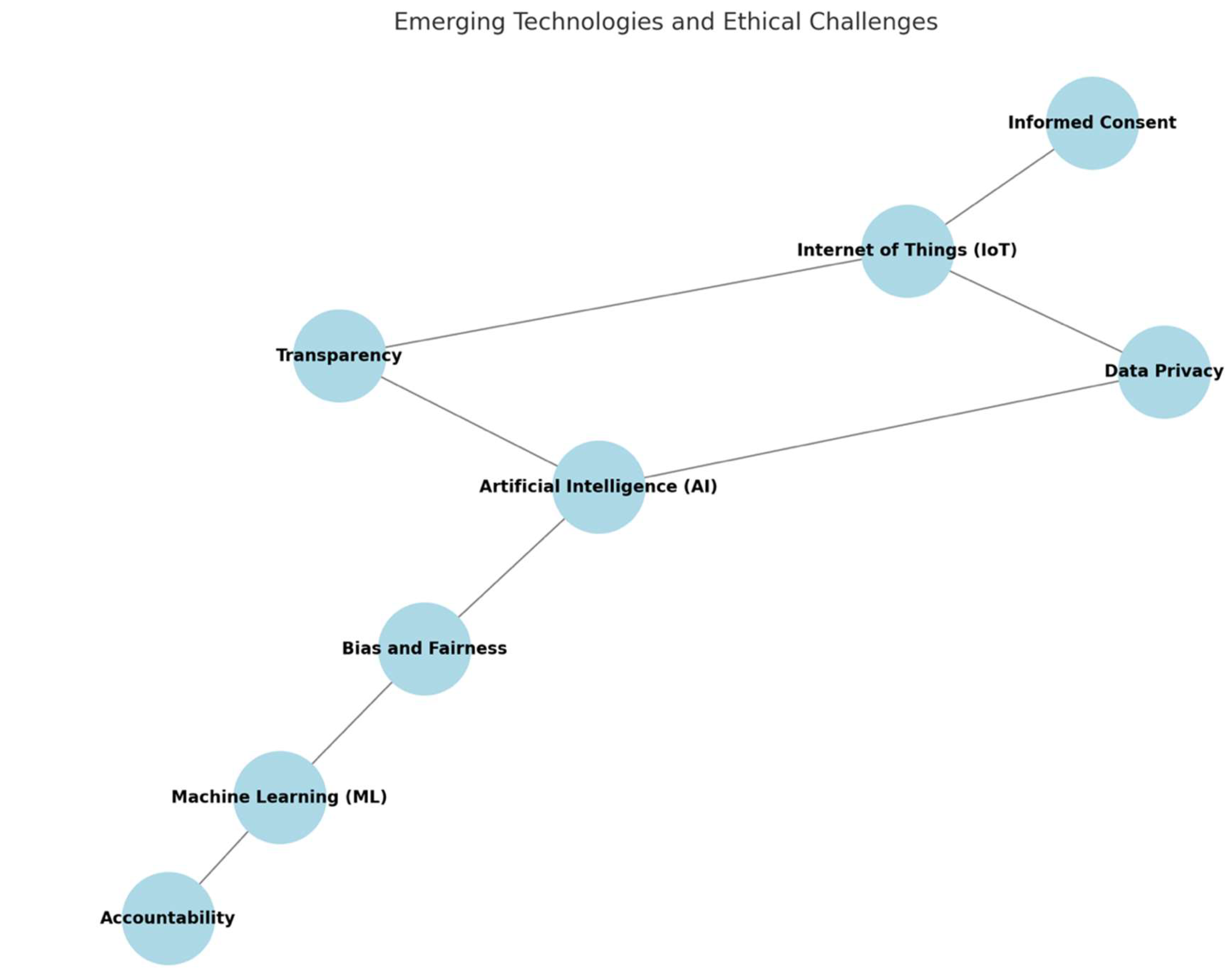

Cybersecurity researchers are positioned at the confluence of technology and ethics. They experience ethical challenges that curtail from their dual responsibilities which includes protecting sensitive information and safeguarding the security of technological systems (Christen et al., 2020). These challenges are multifaceted and require a nuanced understanding of both technological capabilities and ethical principles including but not limited to issues related to consent, data manipulation and integrity, privacy, and the potential technology misuse. Ethical failures in cybersecurity can lead to severe consequences such as infringement of trust, financial damages, and individual harm (Edquist et al., 2022).

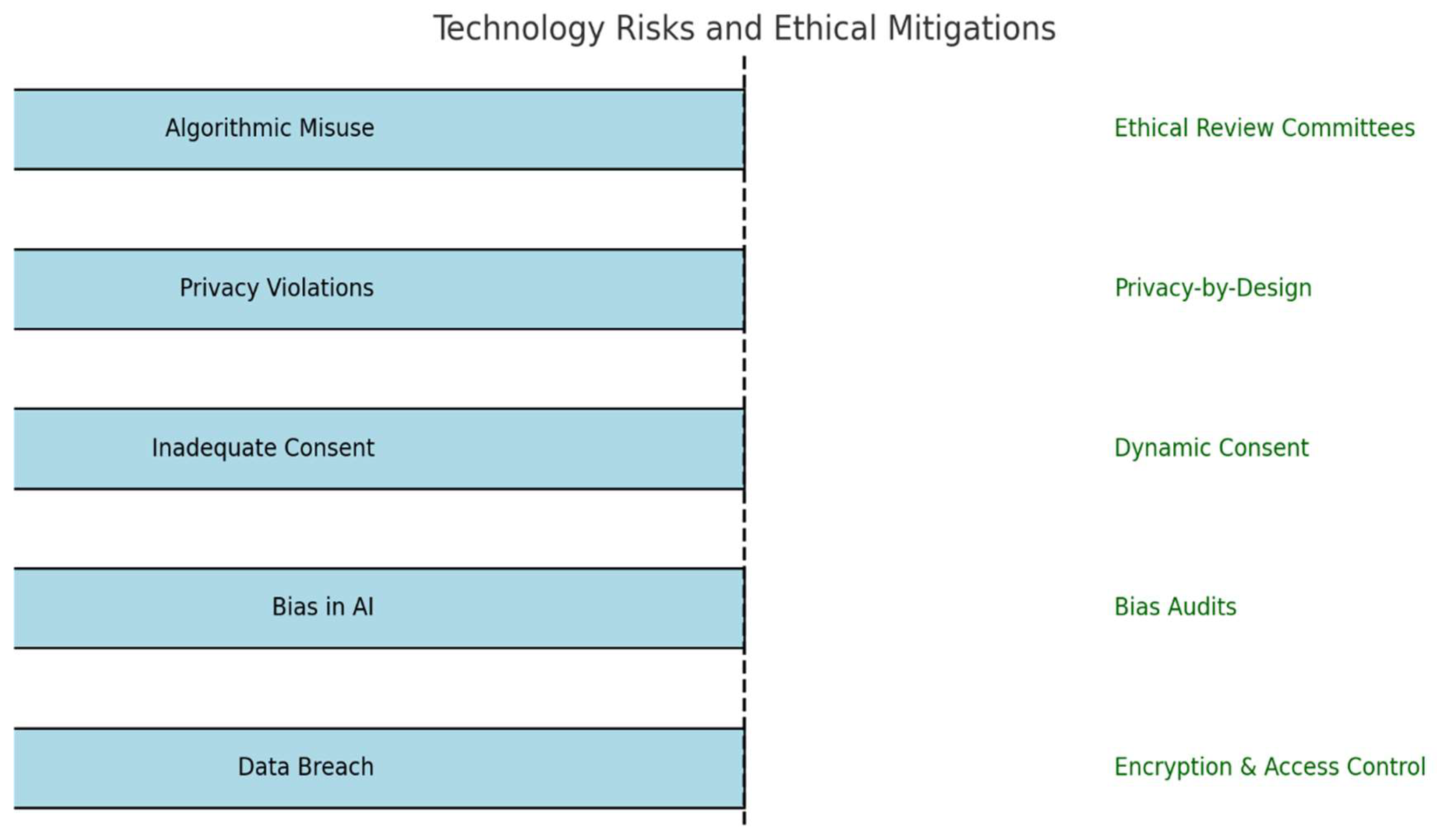

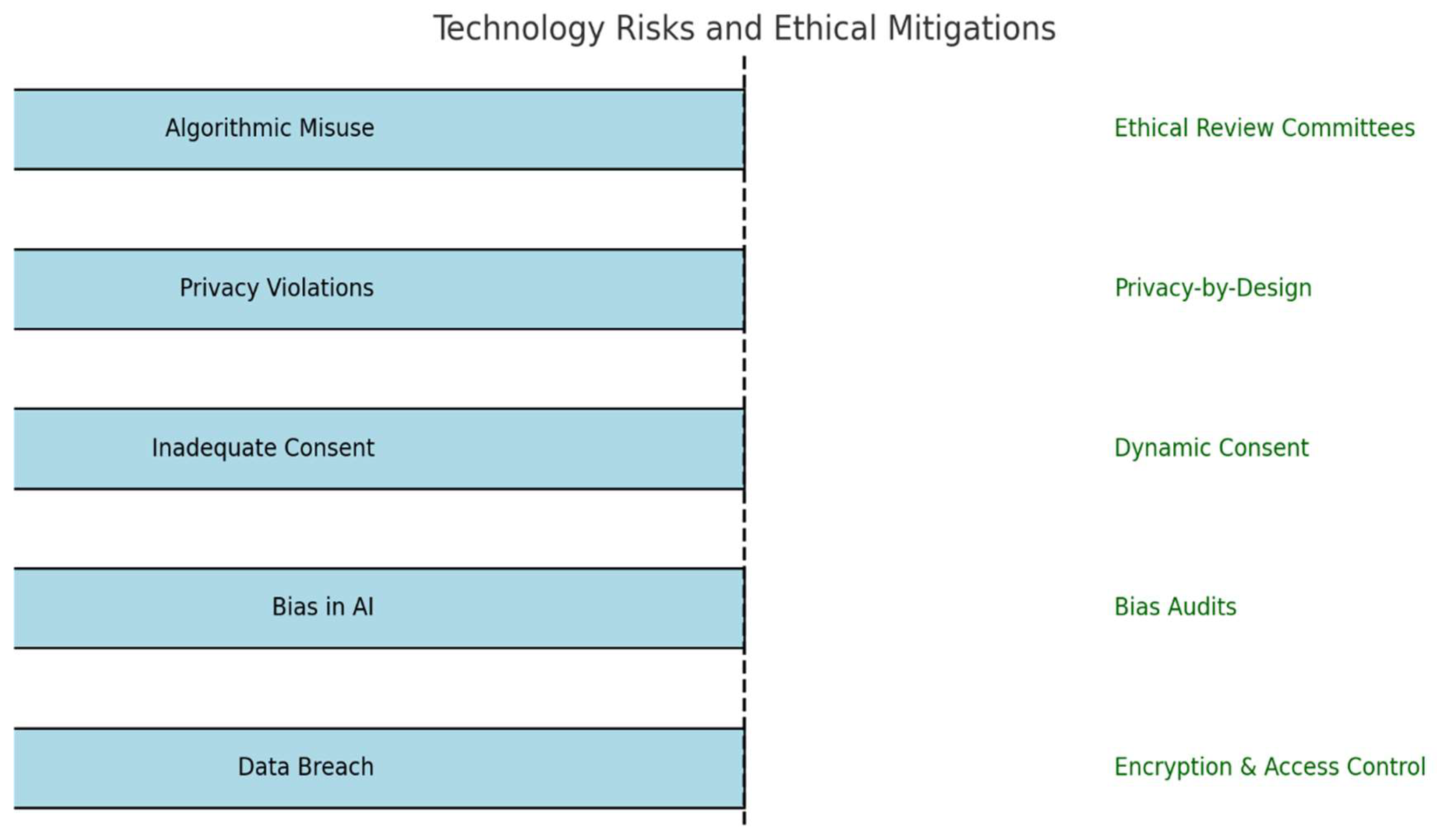

One of the primary ethical challenges in cybersecurity is consent. Data is collected and used without the explicit consent of the individuals concerned in many cases (Ademola, 2020). This lack of transparency can lead to mistrust and ethical breaches. Cybersecurity researchers must strive to implement security practices that ensure informed consent where individuals are fully aware of what data is being collected, how it will be used, and the measures in place to protect it (Dupuis & Renaud, 2021). Data manipulation and integrity can pose added ethical challenges. The accuracy and reliability of data is crucial as manipulated or corrupted data can lead to misinformation and potentially harmful choices. Cybersecurity researchers must uphold stringent standards to prevent unauthorized modifications to data and ensure its integrity throughout its lifecycle (Esmer & Arıbaş, 2022). Privacy is another critical ethical issue. Cybersecurity researchers frequently possess access to vast amounts of personal and sensitive information. Ensuring the confidentiality of this data is paramount and yet the appropriate measures taken to secure it can sometimes disregard on individual privacy (Herrmann & Pridöhl, 2020). For instance, intrusive monitoring and surveillance practices can be effective for security purposes but they can also violate privacy rights if not properly regulated and justified. The potential technology misuse can also present significant ethical problems (Familoni, 2024)). Innovative technologies such as artificial intelligence and machine learning can be powerful in defending against cyber threats. However, these same technologies can be misused for malicious purposes such as crafting sophisticated malware or conducting intrusive reconnaissance. Cybersecurity researchers must navigate the ethical implications of developing and deploying these technologies in order to ensure that these technologies are used in a responsible and ethical way (Formosa et al., 2021). Ethical failures in cybersecurity can also lead to severe consequences since security is everyone’s responsibility. Breaches of trust can occur when organizations fail to protect sensitive information resulting in significant reputational damage and loss of customer confidence (Frohmann, 2000). Financial losses can be substantial for individuals whose data is compromised and for organizations experiencing the counteract and recovering from data breaches. Furthermore, the individual harm can be profound including identity theft, financial fraud, and other forms of cybercrime (Esmer & Arıbaş, 2022).

The ethical challenges in cybersecurity are complex and multifaceted often requiring a delicate balance between technological capabilities and ethical principles (Hawamleh et al., 2020; Joanna, 2021). In order to address these ethical challenges, cybersecurity researchers must adhere to established ethical frameworks and continuously update their knowledge and skills to keep pace with evolving threats and technologies. This includes fostering a culture of ethical awareness and responsibility within their organizations and ensuring that the ethical considerations are integral to all cybersecurity practices and decisions (Bauer et al., 2020).

Cybersecurity researchers can protect sensitive data and technological systems while maintaining public trust and safeguarding individual rights by upholding high ethical standards and continuously adapting to new challenges.

CODE OF ETHICS

Code of Ethics provide a foundation for addressing these issues by offering guidelines for ethical decision-making and help delineate acceptable behavior from unethical practices. By adhering to these guidelines, cybersecurity researchers can navigate complex ethical dilemmas, make informed decisions that balance technical requirements with ethical considerations, and align their actions with moral standards (Lee, 2020).

Two prominent organizations including the Association for Computing Machinery (ACM) and the Institute of Electrical and Electronics Engineers (IEEE) have established codes of ethics and behavioral requirements for researchers in various fields. These frameworks provide comprehensive guidelines for ethical conduct related to fairness, honesty, and respect for privacy. Despite these guidelines, the dynamic nature of the digital landscape requires continuous evaluation and adaptation of ethical standards to address the emerging challenges around responsible data research (Loi & Christen, 2020). The ACM Code of Ethics and Professional Conduct guidelines document was designed to inspire and guide the ethical conduct of all computing researchers. It highlights the importance of contributing to society and human well-being, avoiding harm to others, being honest and trustworthy, and respecting privacy and confidentiality (Anderson, 2018). These principles guide researchers in making decisions that prioritize and uphold ethical standards in their work. For instance, the ACM code emphasizes the need for transparency in data practices and the protection of users' personal information. Similarly, the IEEE Code of Ethics (2020) outlines the commitment of engineers to ethical practices. It includes tenets such as improving the understanding of technology and its potential consequences, maintaining and improving technical competence, and avoiding real or perceived conflicts of interest (IEEE, 2020). For instance, when confronted with a decision about whether to implement a particular security measure or not, researchers can refer to these codes to evaluate the potential impact on privacy and fairness. The IEEE (2020) code also stresses the importance of treating all persons fairly and with respect, which includes safeguarding the privacy and dignity of individuals in the digital realm. Also, the IEEE Code of Ethics stresses the importance of transparency and accountability in professional activities encouraging engineers to act in ways that are honest, impartial, and fair.

Despite the robust guidelines provided by these leading organizations, the dynamic nature of the digital landscape requires continuous evaluation and adaptation of ethical standards. The rapid development of technological advancements mean that the new ethical dilemmas are constantly emerging (Lehtonen, 2021). For instance, the advent of artificial intelligence and machine learning introduces questions about culpability, bias, and the ethical use of autonomous systems. Ethical frameworks must be flexible and responsive and require ongoing dialogue among researchers, ethicians, policymakers, and the general public to ensure that the ethical guidelines remain pertinent and current in order to address these emerging challenges (Macnish & Van der Ham, 2020). Continuous professional development and education are crucial for cybersecurity researchers to stay abreast of the latest ethical issues and best practices (Lonsdale, 2020). Organizations must foster a culture of ethical awareness and accountability. This involves not only adhering to established codes of ethics but also encouraging open discussions about ethical dilemmas and supporting ethical decision-making processes. Internal policies and procedures should reflect the organization's commitment to ethical standards and support procedures should be implemented to address and resolve ethical concerns (Lucas, 2017).

Code of Ethics provide a structured approach to addressing ethical dilemmas by ensuring that the decisions are well-reasoned, fair, and aligned with societal values. This approach not only protects individuals and organizations but also fosters a culture of ethical responsibility within the cybersecurity profession (Mahfood et al., 2005). By adhering to these ethical principles, cybersecurity researchers can make informed decisions that not only meet technical requirements but also uphold the highest standards of ethical conduct.

ETHICAL ISSUES IN CYBERSECURITY RESEARCH

Ethical issues highlight the need for fairness and non-discrimination. The researcher should analyze whether the technology disproportionately affects certain groups or individuals and take steps to mitigate any biases (Manjikian, 2017). They must ensure the technology is applied rightfully and does not unjustly target or exclude specific population. They also stress the importance of transparency and accountability in research activities. The researcher would document their decision-making process, provide clear rationales for their choices, and be open to scrutiny (Mbinjama-Gamatham & Olivier, 2020). This transparency helps build trust with stakeholders and demonstrates a commitment to ethical standards. A key principle in ethical guidelines is to avoid harm (Onyancha, 2015). For instance, a researcher would need to weigh the potential security benefits of the surveillance technology against the possible harm to individuals' privacy and civil liberties. They should strive to find a solution that enhances security without causing undue harm or infringing on rights (Miller & Bossomaier, 2024).

Intersection of Cybersecurity and Research with Human Subjects

The intersection of cybersecurity and research with human subjects is a critical area where ethical considerations overlap while presenting unique challenges and opportunities. Research involving human subjects often requires collecting, storing, and analyzing sensitive personal data making it susceptible to cyber threats such as data breaches, unauthorized access, and misuse. Cybersecurity principles and codes of ethics were developed to protect data and digital infrastructure and this can be extended to enhance the ethical framework governing research with human subjects (Fiesler et al., 2024). Institutions can better protect participants’ privacy, uphold their rights, and ensure the responsible use of data by integrating cybersecurity standards into research practices. The integration of cybersecurity practices into human subjects research is not just a technical requirement but a fundamental ethical obligation that helps ensure the responsible use of data in an increasingly digital world.

Applying Ethical Codes in Real-World Scenarios

Research with human subjects frequently involves the collection of sensitive data including personal identifiers, health records, and behavioral information which are valuable targets for cyber attackers (Fiesler et al., 2024). This intersection necessitates a dual focus on safeguarding the data and protecting the individuals behind it. Cybersecurity codes such as the Association for Computing Machinery (ACM) and the Institute of Electrical and Electronics Engineers (IEEE) focus on principles like fairness, transparency, accountability, and avoiding harm which align closely with the ethical guidelines for human subjects research.

The IEEE Code of Ethics outlines the commitment to “protect privacy and respect confidentiality” and to “avoid real or perceived conflicts of interest” which are principles that directly apply to managing human subjects data ethically in the context of cybersecurity (IEEE, 2020). These principles can be operationalized in research settings by implementing robust data protection measures and maintaining transparency about how data is stored and secured.

APPLICATION OF CYBERSECURITY CODES IN RESEARCH WITH HUMAN SUBJECTS

Data Protection and Privacy for Safeguarding Personal Data

One of the most significant intersections between cybersecurity and human subjects research is the shared commitment to data protection and privacy (Andrews et al., 2024). Cybersecurity codes advocate for the implementation of strong encryption methods, secure data storage solutions, and access controls to prevent unauthorized access (Maalem Lahcen et al., 2020). Institutions conducting research must adopt these cybersecurity measures to prevent data breaches and unauthorized disclosures (Dalkıran, 2024). This can be applied in real-world scenarios by using secure platforms for data collection that employ end-to-end encryption to ensure that data is protected from the point of collection to storage and analysis. One scenario would be researchers making use of secure web forms with built-in encryption features when conducting surveys or interviews that involve collecting sensitive information. In another instance cybersecurity principles suggest performing regular audits of data security practices to ensure they are up-to-date and resilient against emerging cyber threats to reinforce the need for continual improvement of security measures in research settings.

Accountability and Ethical Data Use for Preventing Misuse of Data

Cybersecurity codes emphasize accountability in data handling and require that data is used ethically to maintain researcher accountability for any actions that compromise data security (Macnamara, 2024). Institutions should implement strict access controls and ensure that data is used solely for the purposes outlined in the research (Parthasarathy et al., 2023). This directly applies to research with human subjects where the misuse of data can lead to significant ethical breaches. Accountability can be reinforced in real-world applications by implementing role-based access controls that limit data access to authorized personnel only ensuring that sensitive data cannot be accessed or used by individuals not directly involved in the research (Andrews et al., 2024). Employing logging and monitoring systems that track who accesses data and when the access occurred helps maintain accountability and provides a record that can be reviewed if any ethical or security concerns arise.

Avoiding Harm and Mitigating Cybersecurity Risks to Protect Participants

Avoiding harm is a shared principle in both cybersecurity and human subjects research. This means implementing measures that prevent data loss, unauthorized access, and other cyber threats that could harm research participants. The critical need for robust cybersecurity protocols to protect participant data from being compromised prevents potential psychological, financial, or social harm (McKee, 2024; Stegenga et al., 2023). Institutions can operationalize this by conducting regular risk assessments to identify potential vulnerabilities in their data handling and storage practices. For instance, researchers should select platforms with strong security credentials and configure settings to maximize data protection when using cloud storage solutions (Gunathilake, 2024). Also, employing data minimization techniques to collect only the data necessary for the study can reduce the risk of harm in case of a data breach.

SOLUTIONS AND RECOMMENDATIONS

The following principles are fundamental to ethics for responsible data research in cybersecurity. These principles ensure that researchers conduct their work with integrity, fairness, and a strong commitment to protecting individuals' rights (Pattison, 2020). By embedding these values into their daily practices, cybersecurity researchers can navigate ethical challenges effectively and uphold the highest standards of ethical conduct in their field.

- Addressing Current Ethical Challenges: This provides researchers with the tools to effectively address current ethical challenges(Rajasekharaiah et al., 2020). In the face of issues like data breaches, privacy concerns, and the ethical use of emerging technologies, having a robust ethical foundation helps researchers make informed and principled decisions (Morgan & Gordijn, 2020). For instance, when faced with a decision about using a new technology that could enhance security but also raise privacy concerns, ethical guidelines provide a basis for weighing the benefits and risks in a balanced manner.

- Alignment with Societal Values: Cybersecurity practices must be aligned with broader societal values such as justice, fairness, and respect for individual rights. By grounding their actions in these values, researchers can navigate ethical challenges in a way that respects societal norms and expectations (Raul, 2021). For instance, prioritizing the protection of user privacy and data security reflects the societal value placed on personal privacy and trust in digital systems.

Accountability: Cybersecurity and research are closely tied to transparency and involve holding researchers responsible for their actions and decisions throughout the research lifecycle. Accountability can be operationalized by establishing clear roles and responsibilities for each team member to implement regular ethical audits and maintain adherence to established ethical standards (Dalkıran, 2024). It is essential to have mechanisms in place such as peer review and ethical oversight committees that actively monitor research activities and intervene when ethical breaches are identified. These structures help ensure that researchers are not only aware of ethical norms but also consistently apply them in their work.

Accountability in research means that researchers are answerable for their actions and decisions. Operationalizing accountability involves setting up structures and processes that monitor and evaluate research activities. Ethical review boards, regular audits, and clear reporting lines within research teams are critical mechanisms that enforce accountability. Regular training on ethical standards and peer review processes helps researchers remain vigilant about their responsibilities and remain compliant with best practices (Stegenga et al., 2023).

Accountability requires researchers to uphold the principles of responsible authorship and data stewardship. This includes acknowledging the contributions of all team members accurately and ensuring that data is stored securely and handled according to ethical guidelines. Accountability mechanisms should facilitate prompt corrective actions including retractions, public disclosures, and disciplinary measures when ethical breaches occur.

- Consistency: This offers a set of standardized guidelines that researchers can follow in order to ensure consistency in decision-making across various scenarios. This consistency helps in maintaining a uniform approach to ethical issues and reducing the risk of arbitrary or biased decisions (Richards et al., 2020). For instance, when dealing with data breaches, researchers can rely on established protocols that emphasize prompt disclosure, mitigation efforts, and transparency, while making sure that the responses are predictable and fair.

- Fairness: Cybersecurity practices should not disproportionately harm or disadvantage any group. Fairness guides researchers to consider the broader societal implications of their actions and strive for justifiable outcomes (Sakka & Spyrou, 2015). This requires implementing bias checks at various stages including data collection, model development, and data interpretation. Fairness can be enhanced through standardized protocols that minimize the potential for bias by ensuring that all groups are equitably represented in the data (Pirani, 2024). Research teams must regularly audit their methodologies and algorithms to identify any systemic biases and adjust their processes accordingly to avoid unjust outcomes.

Fairness in research entails ensuring that all participants and groups are treated equitably and without bias (Miteu, 2024). This requires deliberate efforts to include diverse populations in studies to avoid the exclusion or overrepresentation of any group which can lead to skewed results and unethical outcomes. Fairness can be operationalized by establishing clear criteria for participant selection and consistently reviewing these criteria to prevent discrimination (Pirani, 2024). Researchers should employ randomized sampling techniques and carefully monitor their methodologies to avoid biases that might affect vulnerable or underrepresented groups. Fairness also extends to the equitable distribution of research benefits. This involves ensuring that the outcomes of research, such as medical treatments or technological innovations, are accessible to all groups rather than only benefiting certain demographics or geographic locations (Karunarathna et al., 2024; Richards et al., 2020). Implementing community engagement strategies and feedback loops with study populations can help researchers understand the needs and expectations of different groups by tailoring their approaches to be more inclusive and fairer. Researchers help prevent biases and ensure that their actions promote justice and equality contributing to a more inclusive and equitable digital environment by striving for the principle of fairness (Schlehahn, 2020; Miteu, 2024).

- Honesty: Ethics for responsible data research must emphasize the need for honesty in all research activities (Schultz, 2005). This principle requires cybersecurity researchers to be truthful about the capabilities and limitations of cybersecurity measures. This includes transparent communication of potential risks and vulnerabilities to stakeholders which would ensure them to have an accurate understanding of the security landscape (Sharan & Boruah, 2016). Honesty also involves accurately reporting findings and incidents without exaggeration and/or omission. By adhering to the principle of honesty, researchers build trust with clients, colleagues, and the public thereby fostering a culture of integrity and reliability.

- Preparation for Future Developments: Shou (2012) states that as technology and cyber threats evolve, so do the ethical challenges associated with them. Ethical frameworks prepare researchers to adapt to these changes by promoting continuous learning and ethical vigilance (Shou, 2012). This proactive approach ensures that researchers are not only reactive to ethical issues but also prepared to anticipate and address new challenges as they arise. For instance, staying informed about the ethical implications of artificial intelligence and machine learning in cybersecurity allows researchers to integrate these technologies responsibly and ethically.

- Respect for Privacy: Respecting privacy is a core tenet of ethical conduct in cybersecurity. Ethics of conduct guide researchers to implement measures that protect individuals' personal information and uphold confidentiality (Wolf et al., 2024). This involves adopting practices that minimize data collection in order to make sure that only necessary information is gathered and stored in a secure fashion (Shukla et al., 2022). Researchers must also be vigilant in protecting data from unauthorized access, breaches, or misuse. Respect for privacy requires ongoing diligence to uphold privacy rights and balancing the need for security with the imperative to protect individuals' personal information (Van den Hoven, 2010). By prioritizing the principle of privacy, cybersecurity researchers help maintain public trust and confidence in digital systems.

Institutions conducting research with human subjects that leverage data on humans face significant challenges in preserving privacy and protections in the digital age. The proliferation of digital technologies and vast data collection capabilities has intensified the need for robust ethical standards and protective measures. Institutions must navigate complex legal, technical, and ethical landscapes to safeguard participants' rights, privacy, and data security. Implementing comprehensive frameworks that address these challenges can help institutions maintain trust, compliance, and ethical integrity.

Informed consent is a cornerstone of ethical research involving human subjects. Institutions must ensure that participants are fully aware of how their data will be used, stored, and shared. This process must extend beyond traditional consent forms to include detailed information on data security, potential risks, and how data privacy will be maintained. It is important to emphasize clear and accessible consent forms that outline the scope of data use, potential risks, and the measures taken to protect participant privacy (De Roche & Silver, 2024). Digital consent platforms can enhance transparency by allowing participants to view and control their consent status dynamically and to adapt their permissions as needed. Institutions should also consider incorporating ongoing consent mechanisms to periodically inform participants about how their data is being used and provide the opportunity to withdraw consent if desired. This approach allows the participants to maintain control over their data throughout the research lifecycle by aligning with ethical standards that prioritize individual autonomy and privacy.

Institutions must employ advanced data anonymization techniques that prevent the identification of individuals from datasets to protect the privacy of human subjects. Data anonymization involves removing or encrypting personal identifiers and implementing techniques such as data aggregation and differential privacy to further obscure individual data points (Parthasarathy et al., 2023). Differential privacy adds statistical noise to datasets to help prevent re-identification of individuals while preserving the overall utility of the data for analysis. Institutions must also regularly audit anonymization practices for effectiveness against evolving re-identification risks as computational techniques for data mining become more sophisticated. Researchers should avoid collecting more personal information than necessary and adopt a “privacy by design” approach to integrate privacy considerations into the research process from the outset.

Data security is paramount when handling sensitive information about human subjects. Institutions must implement robust cybersecurity measures including encryption, secure data storage, and stringent access controls to protect data from unauthorized access, breaches, and misuse. Institutions should employ multi-layered security protocols such as two-factor authentication, regular security audits, and intrusion detection systems to safeguard data (Dalkıran, 2024). Access to sensitive data should be restricted to authorized personnel who are adequately trained in data security and ethical handling of human subjects’ information. Role-based access controls (RBACs) enforce that individuals only have access to the data necessary for their specific research tasks. Institutions should implement logging and monitoring systems that track data access and usage allowing for prompt detection and response to unauthorized activities.

Institutions must establish comprehensive data governance policies that outline standards for data collection, use, sharing, and retention. These policies should be aligned with legal requirements such as the General Data Protection Regulation (GDPR) and other relevant data protection laws. The importance of data governance frameworks that prioritize ethical considerations, such as the minimization of data collection and the protection of participant confidentiality (Schneble Butz, 2024). Data governance policies should also specify the protocols for data sharing with third parties to ascertain that any shared data remains secure and that recipients adhere to the same ethical and legal standards. Institutions can implement data use agreements that clearly define the permissible uses of shared data and the responsibilities of all parties involved in maintaining data security and privacy.

Preserving privacy and protections for human subjects also requires ongoing training for researchers and staff on data protection best practices and ethical standards. Institutions should provide regular training sessions that cover the latest developments in data privacy laws, cybersecurity measures, and ethical research practices. Continuous education helps researchers stay informed about the evolving ethical landscape and reinforces the importance of protecting participant data (Pirani, 2024). Institutional Review Boards (IRBs) play a critical role in monitoring research involving human subjects. These boards should conduct regular reviews of research protocols to ensure compliance with privacy standards and assess whether the measures taken to protect data are adequate. Enhanced oversight mechanisms including post-approval monitoring and audits can help identify potential ethical violations early and ensure that corrective actions are implemented quickly.

Privacy-Enhancing Technologies (PETs) such as encryption, secure multi-party computation, and homomorphic encryption provide additional layers of protection for data used in research. These technologies enable researchers to perform data analysis without directly accessing sensitive information to reduce the risk of data breaches and privacy violations (McKee, 2024). Institutions can enhance data security and ensure that privacy protections are maintained even when data is shared or analyzed by adding PETs into their data handling processes.

- Avoiding Harm: This requires proactive measures to protect research participants from physical, psychological, and data-related risks. The importance of informed consent and data anonymization is highlighted as a means to prevent harm (De Roche & Silver, 2024). Researchers must assess the potential impacts of their studies and take steps to mitigate risks such as securing data against misuse and providing participants with clear information about how their data will be used. The principle of avoiding harm assures that findings are not misused in ways that could disadvantage specific groups or lead to harmful policy decisions. This principle can be operationalized through rigorous risk assessments and the implementation of safety protocols that minimize potential harms. Acquiring informed consent is important and involves clearly communicating the risks, benefits, and purpose of the research to participants allowing them to make fully informed decisions about their involvement (Pirani, 2024).

Researchers must maintain that data privacy and confidentiality are upheld throughout the research process (Wolf et al., 2024). This includes using secure data storage solutions, anonymizing datasets, and restricting access to sensitive information. Researchers are also advised to consider the broader societal implications of their findings because misuse of data or misinterpretation of results can lead to harmful consequences beyond the immediate study context. Developing clear guidelines for data use and regularly reviewing these policies helps mitigate the risk of harm, particularly in areas involving sensitive or high-stakes data (Mbinjama-Gamatham & Oliver, 2020).

- Transparency: Transparency is crucial for maintaining trust between researchers and participants. Institutions should provide participants with clear information about how their data will be used, the measures in place to protect their privacy, and their rights regarding data access and withdrawal. Open communication and the provision of easy-to-understand privacy notices can significantly enhance participants' confidence in the research process (Mozersky et al., 2020). Institutions can also consider implementing participant portals that allow individuals to view their consent status, access information about ongoing studies, and control the use of their data. Such platforms empower participants and promote a collaborative relationship between researchers and the communities that are studied. Key operational steps include openly sharing information about research goals, methodologies, data handling procedures, and potential conflicts of interest. Transparency can be enhanced through comprehensive documentation and the public sharing of research protocols and data analysis plans, allowing others to scrutinize and replicate the research (Parthasarathy et al., 2024). This level of openness helps to demystify research processes and fosters accountability by making researchers’ actions observable and subject to evaluation.

Transparency should also extend to the results and limitations of the study. Researchers are encouraged to report negative results and disclose uncertainties in their findings to maintain transparency in reporting to avoid misinterpretation and misuse of research data while protecting public interests (Dalkıran, 2024). Creating clear, accessible summaries of research outcomes and sharing data responsibly through open-access platforms also contribute to greater transparency.

Organizations can adopt several strategies to maintain ethical conduct and integrate ethical principles into everyday operations and decision-making processes. These strategies include developing clear policies, conducting regular audits and assessments, and providing whistleblower protections (Wylde et al., 2022). Developing clear policies involves establishing comprehensive and actionable guidelines that outline ethical standards and expectations for all employees, and covering key areas such as conflict of interest, data privacy, informed consent, and responsible use of technology (Van de Poel, 2020). Some essential steps include creation of procedures where policies are easily accessible to all employees, implementing training sessions to educate employees about these policies, and regularly reviewing and updating policies in conjunction with evolving ethical standards and emerging challenges (Yaokumah et al., 2020)). Regular audits and assessments including compliance audits to assess adherence to ethical guidelines and risk assessments to identify potential ethical risks help organizations address issues in a proactive manner. Utilizing audit and assessment results to identify areas for improvement and sharing findings with relevant stakeholders to maintain transparency and accountability further demonstrate the organization’s commitment to ethical conduct. Providing whistleblower protections such as secure and confidential reporting mechanisms, strong anti-retaliation policies, support for whistleblowers, and clear follow-up procedures for handling reports of unethical behavior encourages reporting and supports ethical behavior (Zhang & Ghorbani, 2021).

Enhancing Ethical Awareness

Cultivating ethical behavior among cybersecurity researchers is crucial to raise awareness about the ethical implications of their work. Comprehensive training programs that cover beyond technical skills to encompass ethical principles and their real-world applications are essential alongside addressing topics such as data privacy, informed consent, and the ethical use of emerging technologies (Wiafe et al., 2020). Continuous learning through workshops, seminars, and online courses or webinars keeps researchers well-versed on evolving ethical standards and new challenges while case studies and simulations illustrate ethical dilemmas and appropriate responses underlining the consequences of decisions and the importance of ethical behavior (Loi & Christen, 2020). Ethical leadership within organizations is vital with senior management modeling integrity and ethical decision-making, developing and enforcing clear policies, and fostering open communication about ethical concerns without fear of retribution. Community engagement involving diverse stakeholders, underrepresented and underprivileged communities is critical in relation to ethical cybersecurity practices, conducting public awareness campaigns, and creating collaborative platforms for dialogue between researchers, policymakers, and community members (Joanna, 2021; Lee, 2020). Organizations can significantly enhance ethical awareness among cybersecurity researchers by implementing these strategies in order to ensure that cybersecurity practices not only protect data and systems but also uphold the highest standards of integrity and respect for individual rights.

Ethical Decision Making

Adopting ethical decision-making models helps researchers evaluate the ethical dimensions of their actions and well-considered decisions aligned with ethical principles. These models provide a structured approach to navigating complex ethical dilemmas involving three key steps of identifying ethical issues, evaluating alternatives, and making informed decisions (Lucas, 2017). The first step involves recognizing and clearly defining the ethical issues involved, understanding the context in which they arise, and formulating the ethical dilemma by considering the interests of all relevant stakeholders (Formosa et al., 2021). The second step entails generating a range of possible actions, analyzing the potential outcomes and impacts on stakeholders, and weighing these alternatives against established ethical principles, such as integrity, transparency, and respect for individual rights (Anderson, 2018; IEEE, 2020). The final step requires choosing the action that best aligns with ethical principles and promotes consistency, developing a clear implementation plan, transparent communication of the decision and its rationale, and reviewing and reflecting on the outcomes to improve future ethical decision-making (Hawamleh et al., 2020).

Organizations can effectively implement ethical policies and practices, create a robust ethical framework that promotes integrity, transparency, and accountability within the organization and significantly enhance ethical awareness among cybersecurity researchers while upholding the highest standards of integrity and trust (Hermann & Pridöhl, 2020) by adopting these strategies.

REGULATORY AND LEGAL FRAMEWORKS GOVERNING CYBERSECURITY AND HUMAN RESEARCH PROTECTIONS

Several key regulatory and legal frameworks play a critical role in shaping how data is managed, protected, and ethically used. These frameworks set standards that help institutions and researchers safeguard participant data, maintain compliance with ethical norms, and navigate complex legal landscapes. Understanding the impact of these regulations on ethical decision-making and the measures necessary for compliance is essential for researchers conducting studies that involve human data.

The ethical and legal frameworks governing human research protections are essential for safeguarding the rights, dignity, and welfare of research participants. These frameworks provide guidelines that researchers must follow to ensure that human subjects are treated ethically, their data is handled responsibly, and their privacy is protected. Understanding these frameworks is crucial for researchers who handle sensitive personal data. This section provides a detailed discussion of key ethical and legal frameworks such as the Common Rule, HIPAA, and GDPR, and explores how these regulations apply to cybersecurity and research involving human subjects.

General Data Protection Regulation (GDPR)

The GDPR is one of the most comprehensive data protection laws enacted by the European Union (EU) to regulate the processing of personal data and protect the privacy rights of individuals. It applies to any organization that handles data of EU citizens regardless of where the organization is located. The GDPR emphasizes principles such as data minimization, consent, transparency, lawfulness, fairness, and accountability which align closely with ethical standards in both cybersecurity and human research protections (Bakare et al., 2024). The GDPR impacts ethical decision-making by requiring researchers to justify their data collection practices and maintain neutrality to the intended research outcomes. Researchers must obtain explicit consent from participants while detailing how their data will be used and stored (De Roche & Silver, 2024). This regulation also enforces the right to data access, adjustment, and removal compelling researchers to establish clear processes for responding to participants' data requests.

GDPR places stringent requirements on obtaining informed consent, ensuring data subject rights, and implementing strong data protection measures for research involving human subjects. Researchers must provide clear information about how personal data will be used, stored, and protected. The emphasis of GDPR on data minimization means that researchers should only collect data that is necessary for the specific research purpose and avoid excessive data collection. GDPR compliance in research settings requires the implementation of technical measures such as data anonymization, encryption, and pseudonymization to protect personal data from unauthorized access (Dupuis & Renaud, 2021). Researchers must also conduct Data Protection Impact Assessments (DPIAs) when data processing is likely to result in high risks to data subjects, particularly when using new technologies or handling sensitive data.

Researchers must establish transparent data handling practices including clear data processing agreements and participant rights management processes to comply with GDPR. Institutions should designate Data Protection Officers (DPOs) responsible for overseeing compliance and conducting regular audits of data security practices. GDPR also mandates that researchers report data breaches to authorities within 72 hours which requires the development of robust incident response plans (Bakare et al., 2024). GDPR compliance is not just a legal requirement but an ethical imperative to protect participants’ data for IRBs and researchers. GDPR mandates that data collection must be lawful, transparent, and limited to what is necessary for the research purpose. Researchers are also required to obtain explicit consent from participants detailing the specific purposes for which their data will be used.

IRBs can ensure GDPR compliance by implementing robust consent processes that are clear and specific about data usage. Consent forms should outline data protection measures detailing how data will be stored, who will have access, and how long the data will be retained. IRBs should regularly review consent procedures and data protection practices to ensure they meet GDPR standards (Bakare et al., 2024). IRBs can provide researchers with templates and guidelines that include GDPR compliant language to reduce the burden on researchers navigating these complex requirements independently.

Health Insurance Portability and Accountability Act (HIPAA)

HIPAA is a U.S. law that sets national standards for the protection of health information. It governs how healthcare providers, insurers, and researchers handle sensitive patient data. The Privacy Rule of HIPAA addresses how personally identifiable health information must be protected and mandates safeguards to prevent unauthorized access (Tangudu et al., 2024). The law’s Privacy Rule and Security Rule are particularly relevant to research as they establish guidelines for how personal health information (PHI) must be protected during and after data collection. The stringent requirements of HIPAA for data security and privacy have direct implications for ethical decision-making for researchers. The Security Rule requires that researchers must implement administrative, physical, and technical safeguards to ensure that health data is protected from breaches (Bakare et al., 2024). HIPAA requires that researchers obtain waivers of authorization if full consent cannot be obtained which will involve rigorous review by IRBs.

Researchers and institutions must develop comprehensive data management and security plans that outline how PHI will be protected throughout the research process (Dalkıran, 2024). These plans must include protocols for data anonymization, secure data storage, and restricted access to sensitive information in digital environments where data breaches are a significant concern. Researchers can adhere to HIPAA by conducting regular risk assessments and implementing risk management strategies that address potential vulnerabilities in data handling practices. Training staff on data protection policies and maintaining audit trails of data access are crucial steps for meeting the security requirements. The establishment of data use agreements that define the permissible use of PHI in research further strengthens compliance efforts and aligns with HIPAA’s focus on minimizing unauthorized data use.

Common Rule (45 CFR 46)

The Common Rule governs research involving human subjects in the U.S. It establishes the requirements for IRB review, informed consent, and the protection of vulnerable populations. The revised version introduced new provisions for informed consent that emphasizes greater transparency and includes concise explanations of risks and benefits. Its primary purpose is to ensure that research is conducted ethically, with respect for persons, beneficence, and justice as its core principles.

The Common Rule directly impacts how ethical decisions are made in research involving human subjects concerning the adequacy of consent and the protection of participants' privacy. It mandates that IRBs must review and approve the adequacy of privacy protections and data security measures in research proposals to align closely with cybersecurity principles. This integration underscores the need for researchers to be vigilant about both ethical and legal obligations to guarantee that all aspects of data management are compliant and protective of participants’ rights (Bakare et al., 2024).

The Common Rule applies to all federally funded research involving human subjects requiring IRB review to assess the ethical implications of research proposals. This regulation is relevant in research settings involving cybersecurity where sensitive data about human subjects is often collected, stored, and analyzed. The Common Rule mandates that IRBs evaluate the adequacy of privacy protections and data security measures to minimize risks to participants. Researchers are required to implement robust data protection protocols such as encryption and access controls to comply with these ethical standards. Compliance with the Common Rule involves preserving data privacy measures align with the principles of respect for persons and beneficence, protecting participants from harm while preserving their autonomy (Pirani, 2024). This includes employing additional safeguards such as anonymizing data and securing informed consent specifically for data collection and security risks in cybersecurity research.

IMPACT OF REGULATIONS ON ETHICAL DECISION-MAKING

The regulations previously discussed impose rigorous requirements that shape the ethical landscape of research involving human subjects as they enforce accountability requiring researchers to actively consider the potential impacts of their work on participants' rights and welfare.

One of the central ethical dilemmas influenced by the regulations is balancing the need for data utility with the obligation to protect participant privacy. The emphasis of GDPR on data minimization requires researchers to collect only the data necessary for their studies which challenges traditional research practices that often prioritize comprehensive data collection (Capili & Anastasi, 2024). This regulation requires ethical decision-making that prioritizes participants' privacy while still achieving meaningful research outcomes. Regulations such as the GDPR and the Common Rule mandate transparent and comprehensive informed consent processes. This has significant ethical implications as researchers must attest that participants are fully aware of how their data will be used and what risks are involved. The requirement for clear communication not only fulfills legal obligations but also aligns with ethical standards that emphasize respect for participant autonomy (Chen et al., 2023). Regulations foster accountability by mandating rigorous oversight through mechanisms such as IRB reviews, data protection impact assessments (DPIAs), and security audits. Ethical oversight ensures that researchers are held responsible for the ethical implications of their work which promotes a culture of transparency and integrity. The need for regular assessments encourages researchers to reflect continuously on their practices and adjust them to meet evolving standards (Christen et al., 2020).

Ensuring Compliance and Addressing Ethical and Legal Challenges

Researchers must develop detailed data management plans (DMPs) that outline how data will be collected, stored, analyzed, and shared to adhere to compliance with regulatory requirements. These plans should specify security measures such as encryption, access controls, and data anonymization techniques. DMPs are essential for operationalizing both ethical and legal obligations as they serve as living documents that guide researchers in their daily practices (Parthasarathy et al., 2024). Researchers and institutional staff must receive ongoing training on current regulatory requirements and ethical standards. Regular workshops and seminars can help keep researchers updated on changes to laws like GDPR and HIPAA, as well as best practices for data security and privacy. Education is critical for fostering a culture of compliance and ethical awareness among research teams (Resnik et al., 2024)

Proactive engagement with IRBs and data protection officers (DPOs) is essential for navigating the ethical and legal complexities of research involving human subjects. These oversight bodies provide valuable guidance on compliance issues and can help researchers identify potential ethical challenges early in the research process. Researchers can ensure that their projects align with both regulatory standards and ethical best practices by fostering open communication and collaboration. Researchers must have clear response protocols in place in the event of a data breach or ethical violation. Immediate actions should include notifying affected participants, conducting thorough investigations, and implementing corrective measures to prevent future incidents. Ethical decision-making in such scenarios requires transparency, accountability, and a commitment to rectifying harm (Edquist, 2022).

The Role of Institutional Review Boards (IRBs) in Human Research Protections

IRBs are critical oversight bodies that evaluate research proposals to ensure that ethical and legal standards are met. They actively assess the adequacy of data privacy and security measures to ensure that the participant data is handled responsibly and ethically.

IRBs review research proposals to manage data privacy concerns which includes examining how informed consent will be obtained, the types of data collected, the security measures in place, and how data will be stored and shared. IRBs often require researchers to implement specific privacy-enhancing technologies such as secure data storage and anonymization techniques to minimize risks to participants (Friesen et al., 2023). IRBs also evaluate cybersecurity risks in research involving human subjects when digital data collection or online platforms are used. They assess whether the proposed data security measures align with current cybersecurity standards and whether the research team is adequately prepared to handle potential data breaches (Dalal et al., 2022). IRBs may request additional safeguards or modifications to research protocols if the proposed cybersecurity measures are deemed insufficient (Peled-Raz et al., 2021).

Intersection of Cybersecurity Ethics and Human Research Protections

The integration of cybersecurity ethics into human research protections is becoming increasingly vital as research methodologies evolve to incorporate advanced digital technologies. IRBs serve as critical gatekeepers to make sure that research involving human subjects is conducted ethically with robust measures in place to protect participants' data from cyber threats. The unique challenges posed by cybersecurity in research settings need a clearer understanding of how IRBs specifically address these concerns. A better understanding of how IRBs operate within this evolving landscape can be developed by recognizing the safety and rights of participants. IRBs are traditionally responsible for evaluating the ethical dimensions of research involving human subjects including aspects such as informed consent, risk minimization, and the protection of vulnerable populations. As digital data collection has become more prevalent, IRBs have had to expand their oversight to include cybersecurity-related concerns in recent years. This shift reflects the growing recognition that data security is not just a technical issue but a fundamental ethical consideration in human research.

One of the primary ways IRBs address cybersecurity concerns is by rigorously evaluating the data security measures proposed in research protocols. IRBs require researchers to outline comprehensive data management plans that include technical safeguards such as encryption, secure data storage, and access controls to protect sensitive information from unauthorized access (White, 2020). IRBs assess whether these measures are sufficient to prevent data breaches and other cyber threats by handling participant data responsibly throughout the research lifecycle. IRBs often mandate the use of encryption protocols to secure data both in transit and at rest to reduce the risk of data interception during online data collection or storage. Access to sensitive data is restricted to authorized personnel and IRBs may require role-based access controls to limit exposure to the minimum necessary individuals involved in the study.

Cybersecurity concerns extend to how data privacy and security risks are communicated to participants during the informed consent process. IRBs require informed consent documents to include clear information about data security measures and potential cybersecurity risks to allow participants to make fully informed decisions about their involvement in the research (De Roche & Silver, 2024). This transparency is vital in building trust between researchers and participants in studies involving sensitive data such as health information or behavioral patterns. IRBs guarantee that consent forms are not only comprehensive but also accessible often recommending layered consent models where detailed information about cybersecurity measures is provided in a clear and non-technical language.

CONNECTING CYBERSECURITY ETHICS TO HUMAN RESEARCH PROTECTIONS

The ethical principles of cybersecurity such as confidentiality, integrity, and availability intersect with the core tenets of human research protections. IRBs play a pivotal role in operationalizing these principles within research settings to align cybersecurity ethics with broader ethical standards in human research. One of the moral obligations in cybersecurity is the prevention of unauthorized data use and misuse which aligns with the mandate of Common Rule to minimize risks to participants by affirming that data is only used for the purposes outlined in the research protocol (Kavak et al., 2021). IRBs actively review the data handling procedures proposed by researchers to reinforce compliance with this principle. The importance of IRBs scrutinizing data sharing agreements, ensuring that data is not shared with third parties without explicit consent and appropriate data security measures (Barnes et al., 2020). IRBs also require that researchers implement audit trails and monitoring systems that track data access to enable prompt detection of any unauthorized activities. This approach not only protects participants but also upholds the ethical integrity of the research by preventing data from being exploited in ways that could cause harm.

AI and ML technologies pose unique ethical challenges in research related to bias and fairness. IRBs have started to address these issues by incorporating ethical reviews of AI and ML models into their standard evaluation processes. IRBs now require researchers to demonstrate how they will assess and mitigate biases in AI-driven analyses to assure that outcomes do not unfairly disadvantage specific groups (McKee, 2024). This involves scrutinizing the datasets used to train AI models and not perpetuate existing biases. IRBs also evaluate whether researchers are employing explainable AI techniques which make the decision-making processes of AI systems more transparent and understandable to both researchers and participants (Davison et al., 2024).

Implementing Failsafes for Enhancing Protections Against Cybersecurity Threats

IRBs can implement a range of failsafes that enhance protections against cybersecurity threats. These measures help establish that both institutions and researchers adhere to ethical standards even as digital technologies continue to evolve (Stoudt et al., 2024). IRBs can encourage the use of PETs which allow data to be analyzed without exposing sensitive information. These technologies align with ethical standards that prioritize participant privacy and reduce the risk of data breaches. PETs are valuable in research settings that involve large datasets and complex data sharing arrangements (Knight et al., 2024) Dynamic consent models offer a more adaptive approach to managing participant consent that allows individuals to update their consent preferences as the research evolves (Stoudt et al., 2024). IRBs can recommend these models to enhance participant autonomy and certify that the consent remains relevant as data use changes over time (Yaokumah et al., 2020). This is important in studies involving AI and ML where new data applications may emerge after the initial consent is obtained.

IRBs should implement continuous monitoring of research project by conducting post-approval audits to verify compliance with data security standards and consent agreements. This ongoing oversight helps identify potential cybersecurity vulnerabilities early and stipulates that corrective actions can be taken (Zhang & Ghorbani, 2021). The value of continuous monitoring in maintaining the integrity of research involving human subjects in data-centric and technologically complex studies (Wylde et al., 2022).

HUMAN RESEARCH PROTECTIONS, DATA SECURITY, PRIVACY, AND AI CHALLENGES

AI and ML technologies offer powerful tools for analyzing large datasets and generating insights that were previously unattainable (Ajwang & Ikoha, 2024). These technologies pose significant ethical challenges when they are used in research involving human subjects. The use of AI-driven models can raise issues of data misuse, privacy violations, and biases that may result in harmful or discriminatory outcomes. There is a need for IRBs to closely examine the development and application of AI and ML models within research protocols to ensure they do not inadvertently harm participants or reinforce existing biases (Ajwang & Ikoha; McKee, 2024).

The rapid integration of AI & ML into research practices has transformed how data is collected, analyzed, and applied that created both opportunities and new ethical challenges (Buchanan, 2020). This technological shift has amplified concerns about data security, privacy, and the protection of human research participants. IRBs play a crucial role in overseeing these emerging complexities directing that the research involving human subjects adheres to ethical and legal standards. There is a critical need for human research protections alongside robust data security measures while growing challenges posed by AI and ML need to be addressed (Sontan & Samuel, 2024). IRBs can effectively manage these aspects and outlines potential failsafes to safeguard human subjects codifying that institutions and researchers maintain the highest standards of ethical conduct. IRBs must expand their focus to include the ethical implications of data security, privacy, and algorithmic decision-making. IRBs are now required to evaluate not only the traditional aspects of human subject protections but also the complex data flows and potential risks associated with modern research methodologies (Friesen et al., 2023).

Data Security and Privacy Oversight

As research increasingly relies on digital tools that collect and store sensitive data, data security and privacy are foundational to ethical research involving human subjects. IRBs must enforce that researchers have implemented comprehensive security measures including encryption, secure access controls, and regular audits to prevent unauthorized data access and protect participant confidentiality. IRBs should rigorously assess data management plans to confirm that they meet regulatory standards like GDPR and HIPAA which set stringent requirements for data security and privacy protections (Dalkıran, 2024). IRBs must scrutinize how researchers obtain informed consent by establishing that participants are fully aware of data privacy risks and the measures taken to mitigate them. Transparent communication about data handling, storage, and potential cybersecurity threats is essential to uphold participants’ trust and autonomy (Dalal et al., 2022).

Navigating the Ethical Complexities of AI and ML in Human Research

One of the major ethical concerns with AI is algorithmic bias where models trained on biased datasets produce skewed results that can disproportionately affect certain groups (Sontan & Samuel, 2024). IRBs should mandate that researchers conduct bias assessments and validation checks to validate that AI models are fair and representative of diverse populations. This aligns with the ethical principle of justice which requires equitable treatment of all research participants.

The opaque nature of many AI and ML models complicates ethical oversight because it can be challenging to understand how decisions are made (Traianou & Hammersley, 2024). IRBs should encourage researchers to adopt explainable AI techniques that offer insights into the decision-making processes of their models (Lapid et al., 2023). This approach promotes transparency and allows participants and stakeholders to better understand the potential impacts of AI-driven research. IRBs help maintain that the research is accountable and ethically sound by requiring clear documentation of AI methodologies.

Data minimization refers to collecting only the necessary data for the research purpose which is a key strategy to reduce privacy risks. Research proposals must prioritize data minimization and employ de-identification techniques such as anonymization and pseudonymization to protect participants’ identities as outlined by IRB (Miller & Bossmaier, 2024). These approaches are essential for maintaining privacy in large-scale data analysis and for safeguarding sensitive information, particularly when data sharing is involved (Barnes et al., 2020).

Traditional consent models may not fully capture the complexities of AI and data-driven research as the uses of data can evolve over time (Familoni, 2024). IRBs should recommend dynamic consent models to allow participants to update their consent preferences as the study progresses and new uses of data emerge. Layered consent approaches where information is presented in stages based on participants’ needs and interests can enhance understanding and engagement (Davison et al., 2024). This adaptive approach aligns with ethical standards that prioritize respect for participants’ autonomy and informed decision-making.

IRBs should implement continuous monitoring and post-approval audits of research projects to maintain ongoing compliance with ethical standards. This proactive approach allows IRBs to review data security practices, assess compliance with consent agreements, and verify that researchers adhere to privacy protocols throughout the study. Ongoing oversight is critical for identifying potential ethical violations early and maintaining the integrity of the research (Pirani, 2024).

IRBs can benefit from establishing AI ethics committees that include experts in data science, cybersecurity, ethics, and law. These committees can provide specialized guidance on the ethical challenges associated with AI and ML to help IRBs make informed decisions about complex research proposals (Knight et al., 2024). Engaging with experts affirms that IRBs remain up-to-date with technological advancements and are equipped to address the unique risks posed by AI in human research (Koali et al., 2024).

Institutional and Researcher Accountability

IRBs must enforce strict accountability measures to direct institutions and researchers to comply with ethical standards and legal regulations. This involves verification of research protocols that align with the requirements of key regulations such as the Common Rule, HIPAA, and GDPR (Bakare et al., 2024). Researchers must provide detailed data management plans that outline the security measures in place to protect participant data and preserve privacy. Institutions should support IRBs by providing access to training resources that enhance IRB members’ understanding of AI, data security, and ethical research practices. Continuous education for IRB members is critical and enables them to evaluate the ethical implications of cutting-edge research methodologies (Stegenga et al., 2023).

Enhancing Practical Guidance for IRBs for Implementing Ethical Frameworks in Data-Driven Research

IRBs require practical tools and actionable strategies to effectively manage ethical issues in research involving human subjects in the realm of cybersecurity (Lapid et al., 2023). IRBs play a pivotal role in protecting participants by maintaining that research adheres to ethical standards and regulatory requirements. The rapidly evolving nature of data security, privacy, and AI technologies requires practical guidance on how to apply these frameworks in real-world scenarios. There are practical guidelines, tools, and examples that IRBs can use to address cybersecurity and ethical concerns in research to enhance their ability to safeguard human subjects.

IRBs can benefit from standardized checklists that help assess the adequacy of cybersecurity measures and ethical considerations in research protocols. These checklists serve as practical tools to assure that all critical aspects of data protection and participant safety are addressed.

Data Security Measures:

Is data encrypted both in transit and at rest? (Yes/No)

Are access controls implemented to restrict data access to authorized personnel only? (Yes/No)

Are data storage solutions compliant with regulatory standards such as GDPR and HIPAA? (Yes/No)

Does the consent form clearly outline data privacy risks and security measures? (Yes/No)

Are participants informed of how their data will be used, stored, and shared? (Yes/No)

Are potential biases in AI and ML models assessed and mitigated? (Yes/No)

Is the AI model explainable, allowing participants to understand how their data contributes to outcomes? (Yes/No)

This checklist aligns with recommendations which emphasize the need for systematic evaluations to identify and mitigate cybersecurity risks in research involving human subjects.

IRBs can employ decision-making frameworks that guide ethical evaluations when dealing with complex cybersecurity and AI-related concerns. These frameworks provide a structured approach for assessing the ethical implications of research protocols to help IRBs navigate challenging decisions.

Identify Ethical and Cybersecurity Concerns:

Define the ethical issues related to data security, privacy, and AI.

Assess the potential risks to participants and data integrity.

Reference relevant ethical frameworks, such as the Common Rule, GDPR, and HIPAA, to ensure compliance.

Use guidance which provides standards for assessing data privacy and security measures.

Involve researchers, data security experts, and legal advisors in discussions to explore ethical concerns.

Consult with participants when feasible to understand their perspectives on data security and privacy.

IRBs can learn from successful policies and practices implemented at other institutions. Incorporating dynamic consent models allows participants to adjust their consent preferences as the study evolves which will enhance participant autonomy and engagement. Dynamic consent models are adaptable and responsive to participants’ evolving needs while enhancing ethical oversight in data-centric research (Davison et al., 2024). Such models provide an additional layer of protection for participants to remain informed and in control of their data throughout the research lifecycle.

IRBs must be equipped with the knowledge and skills necessary to evaluate cybersecurity and ethical concerns effectively (Lapid et al., 2023). Continuous training and access to specialized resources can enhance IRB members’ ability to address complex issues in research involving digital technologies. IRBs should participate in targeted training programs that cover key areas such as data security protocols, AI ethics, and regulatory compliance. Training modules should include cybersecurity best practices, AI and ML ethics, and regulatory compliance. Cybersecurity best practices should cover encryption, secure data storage, and access control measures (Formosa et al., 2021). AI and ML ethics should include assessing algorithmic bias, transparency, and the implications of automated decision-making (Familoni, 2024). Regulatory compliance should be comprised of navigating GDPR, HIPAA, and other relevant legal frameworks (Bakare et al., 2024). IRBs can enhance their decision-making capabilities by collaborating with cybersecurity experts, data scientists, and legal advisors who can provide specialized insights into the ethical implications of research technologies (Frohmann, 2000). Establishing AI ethics committees within IRBs can also provide valuable support to prepare IRBs to evaluate the complexities of data-driven research (Haneef & Agarwal, 2024).

CONCLUSION