1. Introduction

The core of phone GUI automation is to simulate human interactions with phone interfaces programmatically, thereby accomplishing a series of complex tasks. Phone automation is widely applied in areas such as application testing and shortcut instructions, aiming to enhance operational efficiency or free up human resources Azim and Neamtiu (2013); Degott et al. (2019); Koroglu et al. (2018); Li et al. (2019); Pan et al. (2020). Traditional phone automation often relies on predefined scripts and templates, which, while effective, tend to be rigid and inflexible when facing complex and variable user interfaces and dynamic environments Arnatovich and Wang (2018); Deshmukh et al. (2023); Nass (2024); Nass et al. (2021); Tramontana et al. (2019). These methods can be viewed as early forms of agents, designed to perform specific tasks in a predetermined manner.

An agent, in the context of computer science and artificial intelligence, is an entity that perceives its environment through sensors and acts upon that environment through actuators to achieve specific goals Guo et al. (2024); Jin et al. (2024); Li et al. (2024c); Wang et al. (2024c). Agents can range from simple scripts that execute fixed sequences of actions to complex systems capable of learning, reasoning, and adapting to new situations Huang et al. (2024); Jin et al. (2024); Wang et al. (2024c). Traditional agents in phone automation are limited by their reliance on static scripts and lack of adaptability, making it challenging for them to handle the dynamic and complex nature of modern mobile interfaces.
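The perceive-then-act definition above can be made concrete with a minimal sketch. Everything in it is an illustrative assumption rather than part of any surveyed system: `ToyScreen` is a hypothetical stand-in for a phone interface, and `scripted_policy` plays the role of the simplest kind of agent, a fixed script.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ToyScreen:
    """Hypothetical environment: a counter the agent must raise to a goal value."""
    value: int = 0

    def observe(self) -> int:
        # Plays the role of the "sensor": returns the current state.
        return self.value

    def apply(self, action: str) -> None:
        # Plays the role of the "actuator": changes the state.
        if action == "increment":
            self.value += 1


def run_agent(env: ToyScreen, policy: Callable[[int, int], str],
              goal: int, max_steps: int = 20) -> List[str]:
    """Perceive, decide, act; stop when the policy says so or steps run out."""
    trace: List[str] = []
    for _ in range(max_steps):
        observation = env.observe()
        action = policy(observation, goal)
        if action == "stop":
            break
        env.apply(action)
        trace.append(action)
    return trace


def scripted_policy(observation: int, goal: int) -> str:
    """A fixed rule, the simplest end of the agent spectrum."""
    return "increment" if observation < goal else "stop"
```

Swapping `scripted_policy` for a learned or LLM-backed function leaves the loop unchanged, which is one way to read the progression from scripts to adaptive agents described in this section.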

Building intelligent autonomous agents with abilities in task planning, decision-making, and action execution has been a long-term goal of artificial intelligence Albrecht and Stone (2018). As artificial intelligence technologies have advanced, the development of agents has progressed from these traditional agents Anscombe (2000); Dennett (1988); Shoham (1993) to AI agents Gao et al. (2018); Inkster et al. (2018); Poole and Mackworth (2010) that incorporate machine learning and decision-making capabilities. These AI agents can learn from data, make decisions based on probabilistic models, and adapt to changes in the environment to some extent. However, they still face limitations in understanding complex user instructions Amershi et al. (2014); Luger and Sellen (2016) and managing highly dynamic environments Christiano et al. (2017); Köhl et al. (2019).

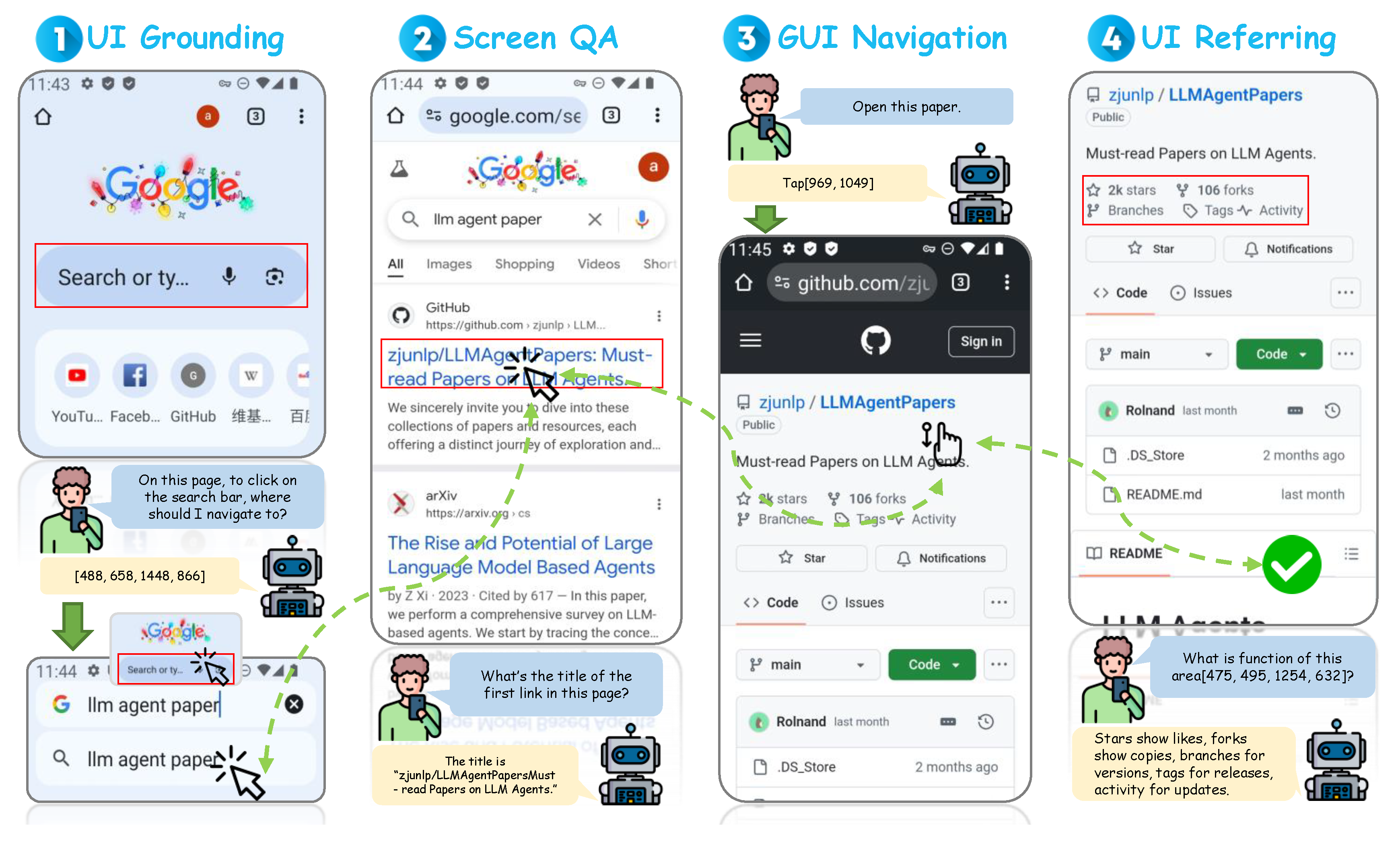

With the rapid development of LLMs like the GPT series Achiam et al. (2023); Brown (2020); Radford (2018a); Radford et al. (2019), agents based on these models have exhibited powerful capabilities in numerous fields Boiko et al. (2023); Dasgupta et al. (2023); Dong et al. (2024); Hong et al. (2023); Li et al. (2023a); Park et al. (2023); Qian et al. (2023, 2024a); Wang et al. (2023c); Xia et al. (2023). As illustrated in Figure 1, there are key differences between conversational LLMs and LLM-based agents. While conversational LLMs primarily focus on understanding and generating human language (engaging in dialogue, answering questions, summarizing information, and translating language), LLM-based agents extend beyond these capabilities by integrating perception and action components. This integration enables them to interact with the external environment through multimodal inputs, such as visual data from user interfaces, and perform actions that alter environmental states Hong et al. (2023); Qian et al. (2024a); Wang et al. (2023c). By combining perception, reasoning, and action, these agents can parse intricate instructions, formulate operational commands, and autonomously perform highly complex tasks, bridging the gap between language understanding and real-world interactions Guo et al. (2024); Li et al. (2024c); Xi et al. (2023).

Applying LLM-based agents to phone automation has brought a new paradigm to traditional automation, making operations on phone interfaces more intelligent Hong et al. (2024); Song et al. (2023b); Zhang et al. (2023a); Zheng et al. (2024). LLM-powered phone GUI agents are intelligent systems that leverage large language models to understand, plan, and execute tasks on mobile devices by integrating natural language processing, multimodal perception, and action execution capabilities. On smartphones, these agents can recognize and analyze user interfaces, understand natural language instructions, perceive interface changes in real time, and respond dynamically. Unlike traditional script-based automation that relies on coding fixed operation paths, these agents can autonomously plan complex task sequences through multimodal processing of language instructions and interface information. They have strong adaptability and flexible pathways, greatly improving user experience by understanding human intentions, performing complex long-chain planning, and executing tasks automatically, thereby improving efficiency in a wide range of scenarios, including not only phone automated testing but also executing complex tasks such as configuring intricate phone settings Wen et al. (2024), navigating maps Wang et al. (2024a,b), and facilitating online shopping Zhang et al. (2023a).

Clarifying the development trajectory of phone GUI agents is crucial. On one hand, with the support of large language models Achiam et al. (2023); Brown (2020); Radford (2018a); Radford et al. (2019), phone GUI agents can significantly enhance the efficiency of phone automation scenarios, making operations more intelligent and no longer limited to coding fixed operation paths. This enhancement not only optimizes phone automation processes but also expands the application scope of automation. On the other hand, phone GUI agents can understand and execute complex natural language instructions, transforming human intentions into specific operations such as automatically scheduling appointments, booking restaurants, summoning transportation, and even achieving functionalities similar to autonomous driving in advanced automation. These capabilities demonstrate the potential of phone GUI agents in executing complex tasks, providing convenience to users and laying practical foundations for AI development.

With the increasing research on large language models in phone automation Liu et al. (2024c); Lu et al. (2024b); Wang et al. (2024a,b); Wen et al. (2024, 2023); Zhang et al. (2024b), the research community’s attention to this field has grown rapidly. However, there is still a lack of dedicated systematic surveys in this area, especially comprehensive explorations of phone automation from the perspective of large language models. Given the importance of phone GUI agents, the purpose of this paper is to fill this gap by systematically summarizing current research achievements, reviewing relevant literature, analyzing the application status of large language models in phone automation, and pointing out directions for future research.

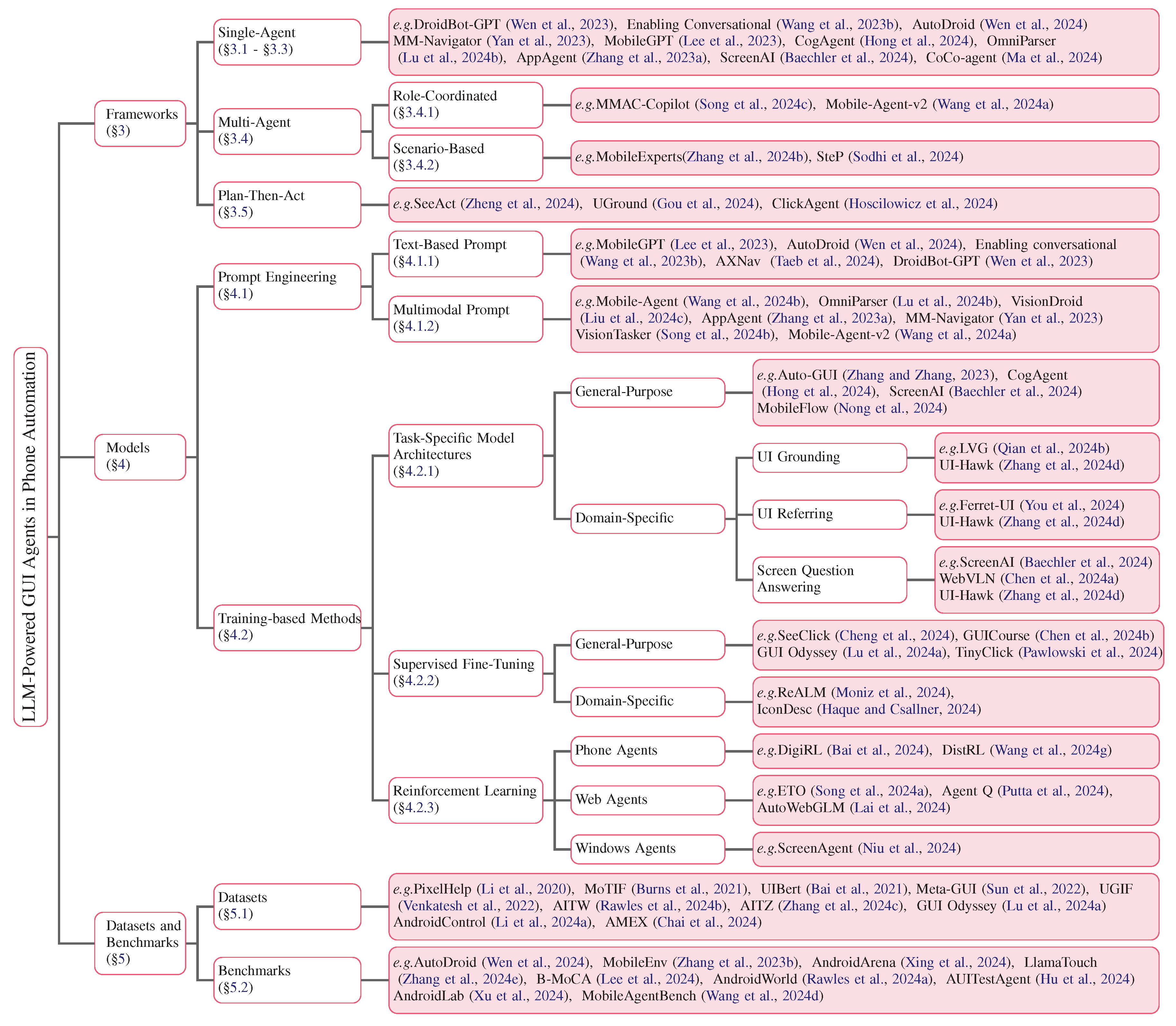

To provide a comprehensive overview of the current state and future prospects of LLM-powered GUI agents in phone automation, we present a taxonomy that categorizes the field into three main areas: Frameworks of LLM-powered phone GUI agents, Large Language Models for Phone Automation, and Datasets and Evaluation Methods (Figure 2). This taxonomy highlights the diversity and complexity of the field, as well as the interdisciplinary nature of the research involved.

Previous literature reviews primarily focus on traditional phone automated testing methods, and most emphasize manual scripting or rule-based automation approaches without leveraging LLMs Arnatovich and Wang (2018); Deshmukh et al. (2023); Nass (2024); Nass et al. (2021); Tramontana et al. (2019). These traditional methods face significant challenges in coping with dynamic changes, complex user interfaces, and the scalability required for modern applications. Recent surveys have explored the broader areas of multimodal agents and foundation models for GUI automation, such as Foundations and Recent Trends in Multimodal Mobile Agents: A Survey Wu et al. (2024), GUI Agents with Foundation Models: A Comprehensive Survey Wang et al. (2024f), and Large Language Model-Brained GUI Agents: A Survey Zhang et al. (2024a), but these works primarily cover general GUI-based automation and multimodal applications rather than phone automation specifically.

However, a dedicated and focused survey on the role of large language models in phone GUI automation remains absent in the existing literature. This paper addresses the above-mentioned gap by systematically reviewing the latest developments, challenges, and opportunities in LLM-powered phone GUI agents, thereby offering a more targeted exploration of this emerging domain. Our main contributions can be summarized as follows:

A Comprehensive and Systematic Survey of LLM-Powered Phone GUI Agents. We provide an in-depth and structured overview of recent literature on LLM-powered phone automation, examining its developmental trajectory, core technologies, and real-world application scenarios. By comparing LLM-driven methods to traditional phone automation approaches, this survey clarifies how large models transform GUI-based tasks and enable more intelligent, adaptive interaction paradigms.

Methodological Framework from Multiple Perspectives. Leveraging insights from existing studies, we propose a unified methodology for designing LLM-driven phone GUI agents. This encompasses framework design (e.g., single-agent vs. multi-agent vs. plan-then-act frameworks), LLM model selection and training (prompt engineering vs. training-based methods), data collection and preparation strategies (GUI-specific datasets and annotations), and evaluation protocols (benchmarks and metrics). Our systematic taxonomy and method-oriented discussion serve as practical guidelines for both academic and industrial practitioners.

In-Depth Analysis of Why LLMs Empower Phone Automation. We delve into the fundamental reasons behind LLMs’ capacity to enhance phone automation. By detailing their advancements in natural language comprehension, multimodal grounding, reasoning, and decision-making, we illustrate how LLMs bridge the gap between user intent and GUI actions. This analysis elucidates the critical role of large models in tackling issues of scalability, adaptability, and human-like interaction in real-world mobile environments.

Insights into Latest Developments, Datasets, and Benchmarks. We introduce and evaluate the most recent progress in the field, highlighting innovative datasets that capture the complexity of modern GUIs and benchmarks that allow reliable performance assessment. These resources form the backbone of LLM-based phone automation, enabling systematic training, fair evaluation, and transparent comparisons across different agent designs.

Identification of Key Challenges and Novel Perspectives for Future Research. Beyond discussing mainstream hurdles (e.g., dataset coverage, on-device constraints, reliability), we propose forward-looking viewpoints on user-centric adaptations, security and privacy considerations, long-horizon planning, and multi-agent coordination. These novel perspectives shed light on how researchers and developers might advance the current state of the art toward more robust, secure, and personalized phone GUI agents.

By addressing these aspects, our survey not only provides an up-to-date map of LLM-powered phone GUI automation but also offers a clear roadmap for future exploration. We hope this work will guide researchers in identifying pressing open problems and inform practitioners about promising directions to harness LLMs in designing efficient, adaptive, and user-friendly phone GUI agents.

2. Development of Phone Automation

The evolution of phone automation has been marked by significant technological advancements Kong et al. (2018), particularly with the emergence of LLMs Achiam et al. (2023); Brown (2020); Radford (2018a); Radford et al. (2019). This section explores the historical development of phone automation, the challenges faced by traditional methods, and how LLMs have revolutionized the field.

2.1. Phone Automation Before the LLM Era

Before the advent of LLMs, phone automation was predominantly achieved through traditional technical methods Amalfitano et al. (2014); Azim and Neamtiu (2013); Kirubakaran and Karthikeyani (2013); Kong et al. (2018); Linares-Vásquez et al. (2017); Zhao et al. (2024). This subsection delves into the primary areas of research and application during that period, including automation testing, shortcuts, and Robotic Process Automation (RPA), highlighting their methodologies and limitations.

2.1.1. Automation Testing

Phone applications (apps) have become extremely popular, with approximately 1.68 million apps in the Google Play Store¹. The increasing complexity of apps Hecht et al. (2015) has raised significant concerns about app quality. Moreover, due to rapid release cycles and limited human resources, developers find it challenging to manually construct test cases. Therefore, various automated phone app testing techniques have been developed and applied, making phone automation testing the main application of phone automation before the era of large models Kirubakaran and Karthikeyani (2013); Kong et al. (2018); Linares-Vásquez et al. (2017); Zein et al. (2016). Test cases for phone apps are typically represented by a sequence of GUI events Jensen et al. (2013) to simulate user interactions with the app. The goal of automated test generators is to produce such event sequences to achieve high code coverage or detect bugs Zhao et al. (2024).

In the development history of phone automation testing, we have witnessed several key breakthroughs and advancements. Initially, random testing (e.g., Monkey Testing Machiry et al. (2013)) was used as a simple and fundamental testing method, detecting application stability and robustness by randomly generating user actions. Although this method could cover a wide range of operational scenarios, its testing process lacked focus and was difficult to reproduce and pinpoint specific issues Kong et al. (2018).
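As a rough illustration of random testing, the sketch below generates a stream of random GUI events. It is not the Monkey tool itself: the event vocabulary and the `random_events` helper are hypothetical. It also shows one common way to address the reproducibility concern, namely seeding the generator so that a failing run can be replayed exactly.

```python
import random
from typing import List, Tuple

# (event kind, x, y): a hypothetical, simplified event format.
Event = Tuple[str, int, int]

EVENT_KINDS = ("tap", "long_press", "swipe_start")


def random_events(n: int, width: int, height: int, seed: int) -> List[Event]:
    """Generate n random events inside a width x height screen.

    A dedicated Random instance keeps the sequence a pure function of the seed.
    """
    rng = random.Random(seed)
    return [
        (rng.choice(EVENT_KINDS), rng.randrange(width), rng.randrange(height))
        for _ in range(n)
    ]
```

Because the events are chosen without any knowledge of the app, long runs are typically needed before deep screens are reached, which matches the lack of focus noted above.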

Subsequently, model-based testing Amalfitano et al. (2012, 2014); Azim and Neamtiu (2013) became a more systematic testing approach. It establishes a user interface model of the application, using predefined states and transition rules to generate test cases. This method improved testing coverage and efficiency, but the construction and maintenance of the model required substantial manual involvement, and updating the model became a challenge for highly dynamic applications.
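A minimal sketch of the model-based idea follows, assuming the GUI model is a hand-written dictionary from screens to outgoing events. The screen names, event names, and the transition-coverage criterion are all illustrative choices, not taken from the cited tools.

```python
from collections import deque
from typing import Dict, List

# screen -> {event: next screen}; a hypothetical hand-built GUI model.
Model = Dict[str, Dict[str, str]]


def shortest_paths(model: Model, start: str) -> Dict[str, List[str]]:
    """Breadth-first search: shortest event sequence reaching each screen."""
    paths: Dict[str, List[str]] = {start: []}
    queue = deque([start])
    while queue:
        screen = queue.popleft()
        for event, nxt in model.get(screen, {}).items():
            if nxt not in paths:
                paths[nxt] = paths[screen] + [event]
                queue.append(nxt)
    return paths


def transition_tests(model: Model, start: str) -> List[List[str]]:
    """One test per reachable transition: walk to its source, then fire it."""
    paths = shortest_paths(model, start)
    return [
        paths[screen] + [event]
        for screen in paths
        for event in model.get(screen, {})
    ]


TOY_MODEL: Model = {
    "login": {"submit_credentials": "home"},
    "home": {"open_settings": "settings", "logout": "login"},
    "settings": {"back": "home"},
}
```

The generated tests are only as good as `TOY_MODEL`: if the app adds or renames a screen, the dictionary must be edited by hand, which is the maintenance burden described above.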

With the development of machine learning techniques, learning-based testing methods began to emerge Degott et al. (2019); Koroglu et al. (2018); Li et al. (2019); Pan et al. (2020). These methods generate test cases by analyzing historical data to learn user behavior patterns. For example, Humanoid Li et al. (2019) uses deep learning to mimic human tester interaction behavior and uses the learned model to guide test generation like a human tester. However, this method relies on human-generated datasets to train the model and needs to combine the model with a set of predefined rules to guide testing.

Recently, reinforcement learning Ladosz et al. (2022) has shown great potential in the field of automated testing. DinoDroid Zhao et al. (2024) is an example that uses Deep Q-Network (DQN) Fan et al. (2020) to automate testing of Android applications. By learning behavior models of existing applications, it automatically explores and generates test cases, not only improving code coverage but also enhancing bug detection capabilities. Deep reinforcement learning methods can handle more complex state spaces and make more intelligent decisions but also face challenges such as high training costs and poor model generalization capabilities Luo et al. (2024).
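To make the reinforcement-learning idea concrete, here is a small sketch that uses tabular Q-learning in place of a Deep Q-Network. It is not DinoDroid's implementation. The toy app (four screens in a chain, with a reward for reaching the last one), the reward design, and all hyperparameters are assumptions chosen for illustration.

```python
import random
from typing import List, Tuple

N_SCREENS = 4                 # toy app: screens 0..3; screen 3 is the target
ACTIONS = ("back", "next")    # action index 0 = back, 1 = next


def step(screen: int, action: int) -> Tuple[int, float, bool]:
    """Deterministic toy transition: returns (next_screen, reward, done)."""
    if action == 1:
        nxt = min(screen + 1, N_SCREENS - 1)
    else:
        nxt = max(screen - 1, 0)
    done = nxt == N_SCREENS - 1
    return nxt, (1.0 if done else 0.0), done


def train(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
          epsilon: float = 0.3, seed: int = 0) -> List[List[float]]:
    """Epsilon-greedy tabular Q-learning on the toy app."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_SCREENS)]
    for _ in range(episodes):
        screen = 0
        for _ in range(50):   # cap on episode length
            if rng.random() < epsilon or q[screen][0] == q[screen][1]:
                action = rng.randrange(2)   # explore, or break ties randomly
            else:
                action = 0 if q[screen][0] > q[screen][1] else 1
            nxt, reward, done = step(screen, action)
            target = reward if done else reward + gamma * max(q[nxt])
            q[screen][action] += alpha * (target - q[screen][action])
            screen = nxt
            if done:
                break
    return q


def greedy_policy(q: List[List[float]]) -> List[str]:
    """Best learned action for every non-terminal screen."""
    return [ACTIONS[0 if row[0] > row[1] else 1] for row in q[:-1]]
```

A table indexed by screen works only because the toy app has four states; a Deep Q-Network replaces the table with a neural network so that large GUI state spaces can be handled, at the training cost noted above.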

2.1.2. Shortcuts

Shortcuts on mobile devices refer to predefined rules or trigger conditions that enable users to execute a series of actions automatically Bridle and McCreath (2006); Guerreiro et al. (2008); Kennedy and Everett (2011). These shortcuts are designed to streamline interaction by reducing repetitive manual input. For instance, the Tasker app on the Android platform² and the Shortcuts feature on iOS³ allow users to automate tasks like turning on Wi-Fi, sending text messages, or launching apps under specific conditions such as time, location, or events. These implementations leverage simple IF-THEN and manually designed logic but are inherently limited in scope and flexibility.
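The IF-THEN logic behind such shortcuts can be sketched as a small rule engine. This is not how Tasker or iOS Shortcuts are implemented; the `Rule` structure, the context keys (`location`, `hour`), and the action names are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Context = Dict[str, Any]


@dataclass
class Rule:
    name: str
    condition: Callable[[Context], bool]   # the IF part
    actions: List[str]                     # the THEN part


def fire(rules: List[Rule], context: Context) -> List[str]:
    """Return the actions of every rule whose condition holds, in rule order."""
    triggered: List[str] = []
    for rule in rules:
        if rule.condition(context):
            triggered.extend(rule.actions)
    return triggered


RULES = [
    Rule("home_wifi", lambda c: c.get("location") == "home", ["enable_wifi"]),
    Rule("night_silence", lambda c: c.get("hour", 12) >= 22, ["mute_ringer"]),
]
```

Every condition and action has to be written out in advance, so a situation the rule author did not anticipate simply triggers nothing.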

2.1.3. Robotic Process Automation

Robotic Process Automation (RPA) applications on phones aim to simulate human users performing repetitive tasks across applications Agostinelli et al. (2019). Phone RPA tools generate repeatable automation processes by recording user action sequences. These tools are used in enterprise environments to automate tasks such as data entry and information gathering, reducing human errors and improving efficiency, but they struggle with dynamic interfaces and require frequent script updates Pramod (2022); Syed et al. (2020).
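The record-and-replay pattern, and why it breaks when an interface changes, can be sketched as follows. The example assumes a recorder that stores raw tap coordinates; real RPA tools may record richer selectors, and the screen layouts here are invented for illustration.

```python
from typing import Dict, List, Tuple

Point = Tuple[int, int]
Screen = Dict[str, Point]   # control id -> tap position (hypothetical layout)


def record(screen: Screen, control_ids: List[str]) -> List[Point]:
    """Record a user session as the raw coordinates that were tapped."""
    return [screen[control_id] for control_id in control_ids]


def replay(screen: Screen, taps: List[Point]) -> List[str]:
    """Replay taps on a screen; report which control each tap hits, or 'MISS'."""
    by_position = {position: control_id for control_id, position in screen.items()}
    return [by_position.get(tap, "MISS") for tap in taps]


# Version 1 of a form, and version 2 where a banner pushed the button down.
FORM_V1: Screen = {"name_field": (100, 200), "submit": (100, 400)}
FORM_V2: Screen = {"name_field": (100, 200), "promo_banner": (100, 400), "submit": (100, 600)}
```

Replaying a script recorded on `FORM_V1` against `FORM_V2` taps the banner instead of the submit button, so the script must be re-recorded after the layout change.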

2.2. Challenges of Traditional Methods

Despite the advancements made, traditional phone automation methods faced significant challenges that hindered further development. This subsection analyzes these challenges, including lack of generality and flexibility, high maintenance costs, difficulty in understanding complex user intentions, and insufficient intelligent perception, highlighting the need for new approaches.

2.2.1. Limited Generality

Traditional automation methods are often tailored to specific applications and interfaces, lacking adaptability to different apps and dynamic user environments Asadullah and Raza (2016); Clarke et al. (2016); Li et al. (2017); Patel and Pasha (2015). For example, automation scripts designed for a specific app may not function correctly if the app updates its interface or if the user switches to a different app with similar functionality. This inflexibility makes it difficult to extend automation across various usage scenarios without significant manual reconfiguration.

These methods typically follow predefined sequences of actions and cannot adjust their operations based on changing contexts or user preferences. For instance, if a user wants an automation to send a customized message to contacts who have birthdays on a particular day, traditional methods struggle because they cannot dynamically access and interpret data from the contacts app, calendar, and messaging app simultaneously. Similarly, automating tasks that require conditional logic—such as playing different music genres based on the time of day or weather conditions—poses a challenge for traditional automation tools, as they lack the ability to integrate real-time data and make intelligent decisions accordingly Liu et al. (2023); Majeed et al. (2020).

2.2.2. High Maintenance Costs

Writing and maintaining automation scripts require professional knowledge and are time-consuming and labor-intensive Kodali and Mahesh (2017); Kodali et al. (2019); Lamberton et al. (2017); Meironke and Kuehnel (2022); Moreira et al. (2023). Taking RPA as an example, as applications continually update and iterate, scripts need frequent modifications. When an application’s interface layout changes or functions are updated, RPA scripts originally written for the old version may not work properly, requiring professionals to spend considerable time and effort readjusting and optimizing the scripts Agostinelli et al. (2022); Ling et al. (2020); Tripathi (2018).

The high entry barrier also limits the popularity of some automation features Le et al. (2020); Roffarello et al. (2024). For example, Apple’s Shortcuts⁴ can combine complex operations, such as starting an Apple Watch fitness workout, recording training data, and sending statistical data to the user’s email after the workout. However, setting up such a complex shortcut often requires the user to perform a series of complicated operations on the phone following fixed rules. This is challenging for ordinary users, leading many to abandon usage due to the complexity of manual script writing.

2.2.3. Poor Intent Comprehension

Rule-based and script-based systems can only execute predefined tasks or engage in simple natural language interactions Cowan et al. (2017); Kepuska and Bohouta (2018). Simple instructions like "open the browser" can be handled using traditional natural language processing algorithms, but complex instructions like "open the browser, go to Amazon, and purchase a product" cannot be completed. These traditional systems are based on fixed rules and lack in-depth understanding and parsing capabilities for complex natural language Anicic et al. (2010); Kang et al. (2013); Karanikolas et al. (2023).

They require users to manually write scripts to interact with the phone, greatly limiting the application of intelligent assistants that can understand complex human instructions. For example, when a user wants to check flight information for a specific time and book a ticket, traditional systems cannot accurately understand the user’s intent and automatically complete the series of related operations, necessitating manual script writing with multiple steps, which is cumbersome and requires high technical skills.

2.2.4. Weak Screen GUI Perception

Different applications present a wide variety of GUI elements, making it challenging for traditional methods like RPA to accurately recognize and interact with diverse controls Banerjee et al. (2013); Brich et al. (2017); Chen et al. (2018); Fu et al. (2024). Traditional automation often relies on fixed sequences of actions targeting specific controls or input fields, and this weak screen GUI perception limits its ability to adapt to variations in interface layouts and component types. For example, in an e-commerce app, the product details page may include dynamic content like carousels, embedded videos, or interactive size selection menus, which differ significantly from the simpler layout of a search results page. Traditional methods may fail to accurately identify and interact with the "Add to Cart" button or select product options, leading to unsuccessful automation of purchasing tasks.

Moreover, traditional automation struggles with understanding complex screen information such as dynamic content updates, pop-up notifications, or context-sensitive menus that require adaptive interaction strategies. Without the ability to interpret visual cues like icons, images, or contextual hints, these methods cannot handle tasks that involve navigating through multi-layered interfaces or responding to real-time changes. For instance, automating the process of booking a flight may involve selecting dates from a calendar widget, choosing seats from an interactive seat map, or handling security prompts—all of which require sophisticated perception and interpretation of the interface Zhang et al. (2024e).

These limitations significantly impede the widespread application and deep development of traditional phone automation technologies. Without intelligent perception capabilities, automation cannot adapt to the complexities of modern app interfaces, which are increasingly dynamic and rich in interactive elements. This underscores the urgent need for new methods and technologies that can overcome these bottlenecks and achieve more intelligent, flexible, and efficient phone automation.

2.3. LLMs Boost Phone Automation

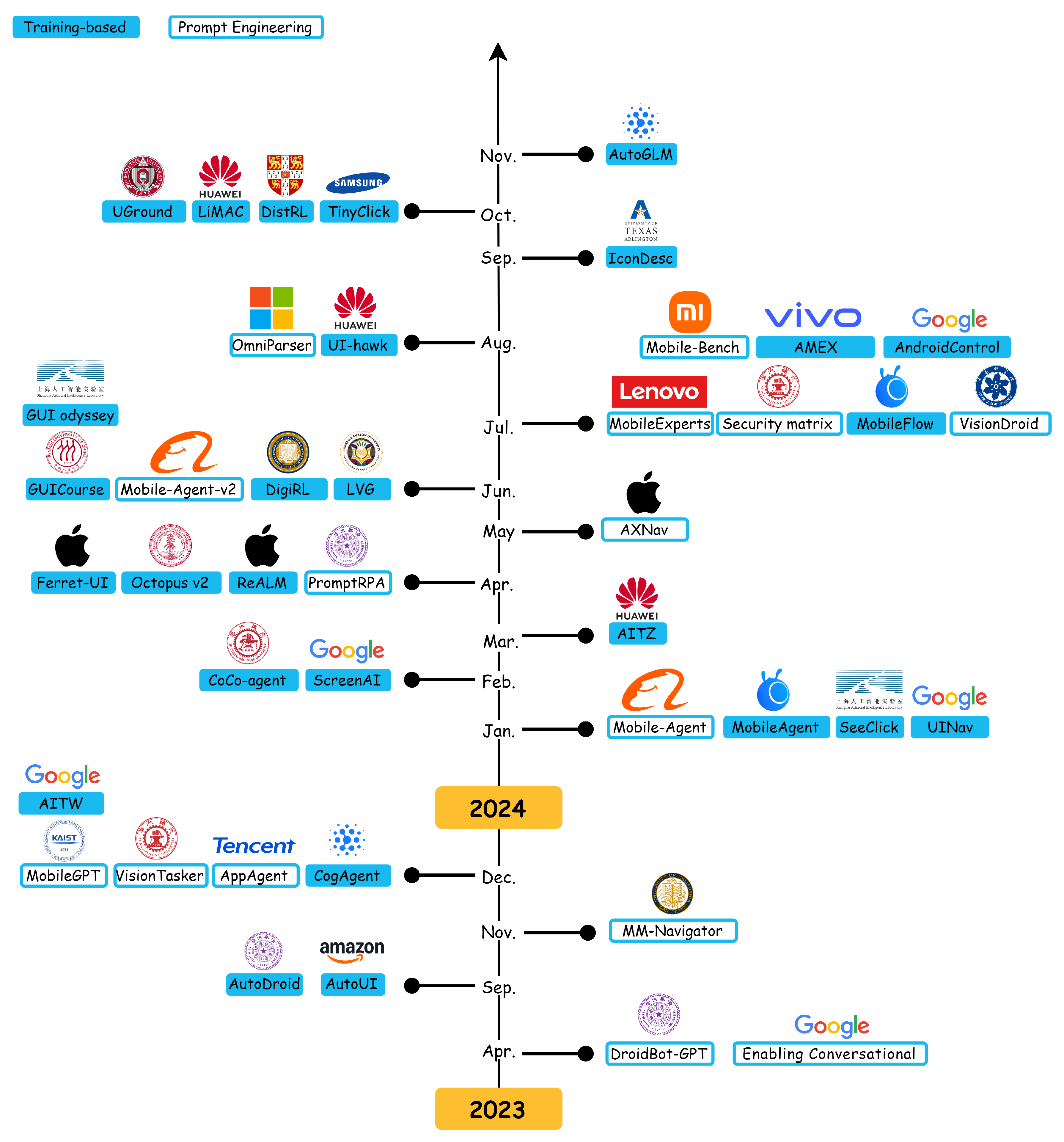

The advent of LLMs has marked a significant shift in the landscape of phone automation, enabling more dynamic, context-aware, and sophisticated interactions with mobile devices. As illustrated in Figure 3, the research on LLM-powered phone GUI agents has progressed through pivotal milestones, where models become increasingly adept at interpreting multimodal data, reasoning about user intents, and autonomously executing complex tasks. This section clarifies how LLMs address traditional limitations and examines why scaling laws can further propel large models in phone automation. As will be detailed in §4 and §5, LLM-based solutions for phone automation generally follow two routes: (1) Prompt Engineering, where pre-trained models are guided by carefully devised prompts, and (2) Training-Based Methods, where LLMs undergo additional optimization on GUI-focused datasets. The following subsections illustrate how LLMs mitigate the core challenges of traditional phone automation, ranging from contextual semantic understanding and GUI perception to reasoning and decision making, and briefly highlight the role of scaling laws in enhancing these capabilities.
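The prompt-engineering route mentioned above can be sketched without committing to any particular model or API. In the sketch below, the prompt wording, the JSON action schema, and the helper names (`build_prompt`, `parse_action`, `next_action`) are assumptions made for illustration and do not reproduce any surveyed system. The model is passed in as a plain function so that a stub can stand in for a real LLM.

```python
import json
from typing import Any, Callable, Dict, List

ALLOWED_ACTIONS = {"tap", "type", "back"}   # hypothetical action vocabulary


def build_prompt(task: str, elements: List[Dict[str, str]]) -> str:
    """Serialize the task and the visible UI elements into a text prompt."""
    lines = [f"[{i}] {e['type']}: {e['text']}" for i, e in enumerate(elements)]
    return (
        "You control a phone. Task: " + task + "\n"
        "Visible elements:\n" + "\n".join(lines) + "\n"
        'Reply with JSON such as {"action": "tap", "index": 0}.'
    )


def parse_action(reply: str, n_elements: int) -> Dict[str, Any]:
    """Validate the model reply before anything is executed on the device."""
    action = json.loads(reply)
    if action.get("action") not in ALLOWED_ACTIONS:
        raise ValueError(f"unsupported action: {action.get('action')!r}")
    if action["action"] != "back":
        index = action.get("index")
        if not isinstance(index, int) or not 0 <= index < n_elements:
            raise ValueError(f"index out of range: {index!r}")
    return action


def next_action(task: str, elements: List[Dict[str, str]],
                model: Callable[[str], str]) -> Dict[str, Any]:
    """One decision step: prompt the model, then parse and check its answer."""
    return parse_action(model(build_prompt(task, elements)), len(elements))
```

Validating the reply before acting is a deliberate choice here: a pre-trained model guided only by a prompt may return malformed or out-of-range actions, and rejecting them early keeps such errors away from the device.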

Scaling Laws in LLM-Based Phone Automation. Scaling laws—originally observed in general-purpose LLMs, where increasing model capacity and training data yields emergent capabilities Brown et al. (2020); Hagendorff (2023); Kaplan et al. (2020)—have similarly begun to manifest in phone GUI automation. As datasets enlarge and encompass more diverse apps, usage scenarios, and user behaviors, recent findings Chen et al. (2024b); Cheng et al. (2024); Lu et al. (2024a); Pawlowski et al. (2024) show consistent gains in step-by-step automation tasks such as clicking buttons or entering text. This data scaling not only captures broader interface layouts and device contexts but also reveals latent “emergent” competencies, allowing LLMs to handle more abstract, multi-step instructions. Empirical evidence from in-domain scenarios Li et al. (2024a) further underscores how expanded coverage of phone apps and user patterns systematically refines automation accuracy. In essence, as model sizes and data complexity grow, phone GUI agents exploit these scaling laws to bridge the gap between user intent and real-world GUI interactions with increasing efficiency and sophistication.

Contextual Semantic Understanding. LLMs have transformed natural language processing for phone automation by learning from extensive textual corpora Brown et al. (2020); Devlin (2018); Radford (2018b); Vaswani (2017); Wen et al. (2024); Zhang et al. (2023a). This training captures intricate linguistic structures and domain knowledge Karanikolas et al. (2023), allowing agents to parse multi-step commands and generate context-informed responses. MobileAgent Wang et al. (2024b), for example, interprets user directives like scheduling appointments or performing transactions with high precision, harnessing the Transformer architecture Vaswani (2017) for efficient encoding of complex prompts. Consequently, phone GUI agents benefit from stronger natural language grounding, bridging user-intent gaps once prevalent in script-based systems.

Screen GUI with Multi-Modal Perception. Screen GUI perception in earlier phone automation systems typically depended on static accessibility trees or rigid GUI element detection, which struggled to adapt to changing app interfaces. Advances in LLMs, supported by large-scale multimodal datasets Chang et al. (2024); Minaee et al. (2024); Zhao et al. (2023), allow models to unify textual and visual signals in a single representation. Systems like UGround Gou et al. (2024), Ferret-UI You et al. (2024), and UI-Hawk Zhang et al. (2024d) excel at grounding natural language descriptions to on-screen elements, dynamically adjusting as interfaces evolve. Moreover, SeeClick Cheng et al. (2024) and ScreenAI Baechler et al. (2024) demonstrate that learning directly from screenshots—rather than purely textual metadata—can further enhance adaptability. By integrating visual cues with user language, LLM-based agents can respond more flexibly to a wide range of UI designs and interaction scenarios.

Reasoning and Decision Making. LLMs also enable advanced reasoning and decision-making by combining language, visual context, and historical user interactions. Pre-training on broad corpora equips these models with the capacity for complex reasoning Wang et al. (2023a); Yuan et al. (2024), multi-step planning Song et al. (2023a); Valmeekam et al. (2023), and context-aware adaptation Koike et al. (2024); Talukdar and Biswas (2024). MobileAgent-V2 Wang et al. (2024a), for instance, introduces a specialized planning agent to track task progress while a decision agent optimizes actions. AutoUI Zhang and Zhang (2023) applies a multimodal chain-of-action approach that accounts for both previous and forthcoming steps, and SteP Sodhi et al. (2024) uses stacked LLM modules to solve diverse web tasks. Similarly, MobileGPT Lee et al. (2023) leverages an app memory system to minimize repeated mistakes and bolster adaptability. Such architectures demonstrate higher success rates in complex phone operations, reflecting a new level of autonomy in orchestrating tasks that previously demanded handcrafted scripts.

Overall, LLMs are transforming phone automation by reinforcing semantic understanding, expanding multimodal perception, and enabling sophisticated decision-making strategies. The scaling laws observed in datasets like AndroidControl Li et al. (2024a) reinforce the notion that a larger volume and diversity of demonstrations consistently elevate model accuracy. As these techniques mature, LLM-driven phone GUI agents continue to redefine how users interact with mobile devices, ultimately paving the way for a more seamless and user-centric automation experience.

2.4. Emerging Commercial Applications

The integration of LLMs has enabled novel commercial applications that leverage phone automation, offering innovative solutions to real-world challenges. This subsection highlights several prominent cases, presented in roughly chronological order of their release dates, where LLM-based GUI agents are reshaping user experiences, improving efficiency, and providing personalized services.

Apple Intelligence. On June 11, 2024, Apple introduced its personal intelligent system, Apple Intelligence⁵, seamlessly integrating AI capabilities into iOS, iPadOS, and macOS. It enhances communication, productivity, and focus features through intelligent summarization, priority notifications, and context-aware replies. For instance, Apple Intelligence can summarize long emails, transcribe and interpret call recordings, and generate personalized images or “Genmoji.” A key aspect is on-device processing, which ensures user privacy and security. By enabling the system to operate directly on the user’s device, Apple Intelligence safeguards personal information while providing an advanced, privacy-preserving phone automation experience.

vivo PhoneGPT. On October 10, 2024, vivo unveiled OriginOS 5⁶, its newest mobile operating system, featuring an AI agent ability named PhoneGPT. By harnessing large language models, PhoneGPT can understand user instructions, preferences, and on-screen information, autonomously engaging in dialogues and detecting GUI states to operate the smartphone. Notably, it allows users to order coffee or takeout with ease and can even carry out a full phone reservation process at a local restaurant through extended conversations. By integrating the capabilities of large language models with native system states and APIs, PhoneGPT illustrates the great potential of phone GUI agents.

Honor YOYO Agent. Released on October 24, 2024, the Honor YOYO Agent⁷ exemplifies a phone automation assistant that adapts to user habits and complex instructions. With just one voice or text command, YOYO can automate multi-step processes, such as comparing prices to secure discounts when shopping, automatically filling out forms, ordering beverages aligned with user preferences, or silencing notifications during online meetings. By learning from user behaviors, YOYO reduces the complexity of human-device interaction, offering a more effortless and intelligent phone experience.

Anthropic Claude Computer Use. On October 22, 2024, Anthropic unveiled the Computer Use feature for its Claude 3.5 Sonnet model.⁸ This feature allows an AI agent to interact with a computer as if a human were operating it, observing screenshots, moving the virtual cursor, clicking buttons, and typing text. Instead of requiring specialized environment adaptations, the AI can “see” the screen and perform actions that humans would, bridging the gap between language-based instructions and direct computer operations. Although initial performance is still far below human proficiency, this represents a paradigm shift in human-computer interaction. By teaching AI to mimic human tool usage, Anthropic reframes the challenge from “tool adaptation for models” to “model adaptation to existing tools.” Achieving balanced performance, security, and cost-effectiveness remains an ongoing endeavor.

Zhipu.AI AutoGLM. On October 25, 2024, Zhipu.AI introduced AutoGLM Liu et al. (2024b), an intelligent agent that simulates human operations on smartphones. With simple text or voice commands, AutoGLM can like and comment on social media posts, purchase products, book train tickets, or order takeout. Its capabilities extend beyond mere API calls—AutoGLM can navigate interfaces, interpret visual cues, and execute tasks that mirror human interaction steps. This approach streamlines daily tasks and demonstrates the versatility and practicality of LLM-driven phone automation in commercial applications.

These emerging commercial applications—from Apple’s privacy-focused on-device intelligence to vivo’s PhoneGPT, Honor’s YOYO agent, Anthropic’s Computer Use, and Zhipu.AI’s AutoGLM—showcase how LLM-based agents are transcending traditional user interfaces. They enable more natural, efficient, and personalized human-device interactions. As models and methods continue to evolve, we can anticipate even more groundbreaking applications, further integrating AI into the fabric of daily life and professional workflows.

3. Frameworks and Components of Phone GUI Agents

LLM-powered phone GUI agents can be designed using different architectural paradigms and components, ranging from straightforward single-agent systems Wang et al. (2023b, 2024b); Wen et al. (2024, 2023); Zhang et al. (2023a) to more elaborate multi-agent Wang et al. (2024a); Zhang et al. (2024b,f) or multi-stage Gou et al. (2024); Hoscilowicz et al. (2024); Zheng et al. (2024) approaches. A fundamental scenario involves a single agent that operates incrementally, without precomputing an entire action sequence from the outset. Instead, the agent continuously observes the dynamically changing mobile environment, in which the available UI elements, device states, and relevant contextual factors may shift in unpredictable ways and cannot be exhaustively enumerated in advance. As a result, the agent must adapt its strategy step by step, making decisions based on the current situation rather than following a fixed plan. This iterative decision-making process can be effectively modeled using a Partially Observable Markov Decision Process (POMDP), a well-established framework for handling sequential decision-making under uncertainty Monahan (1982); Spaan (2012). By modeling the task as a POMDP, we capture its dynamic nature, the impossibility of pre-planning all actions, and the necessity of adjusting the agent’s approach at each decision point.

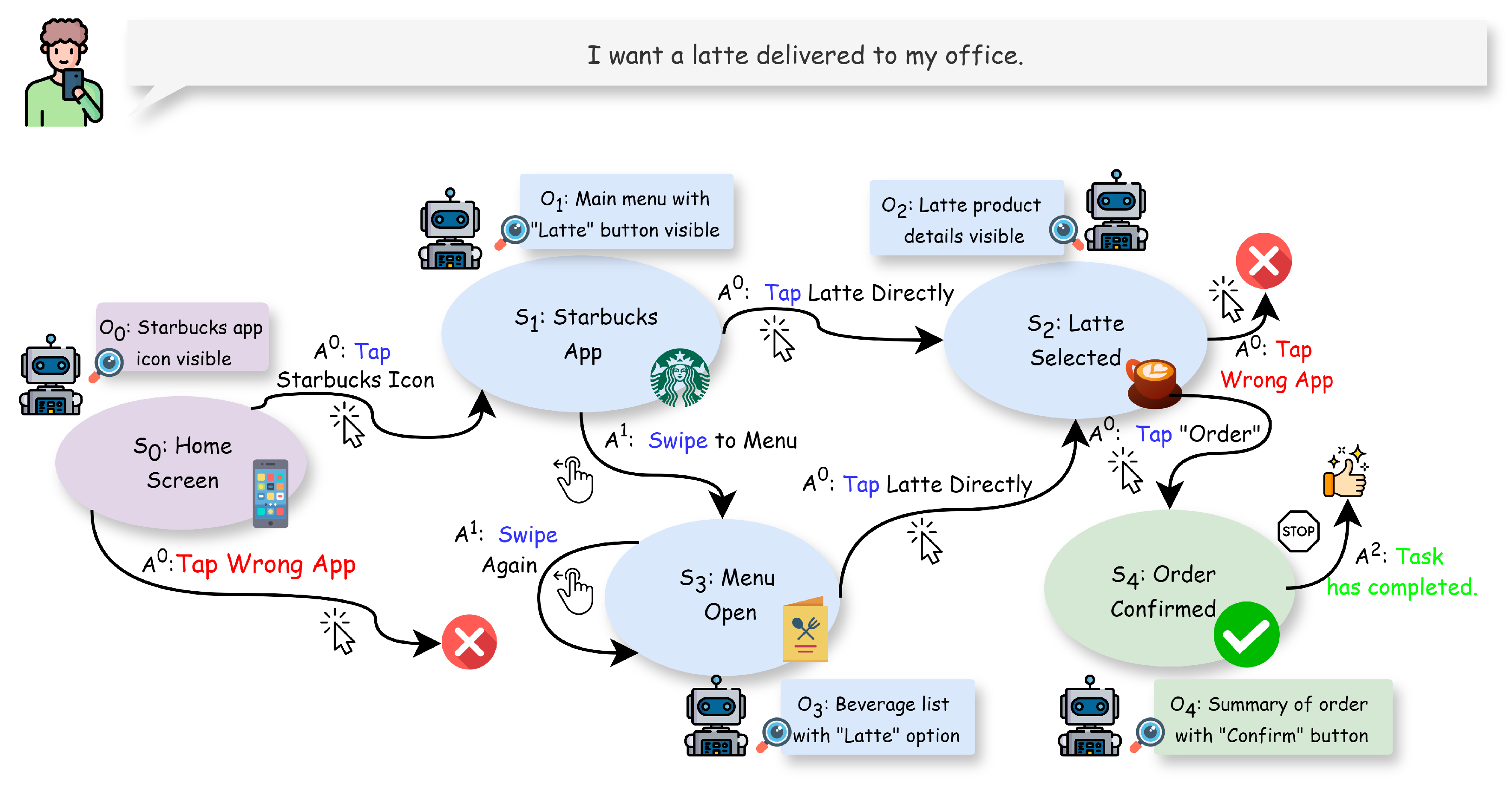

As illustrated in Figure 4, consider a simple example: the agent’s goal is to order a latte through the Starbucks app. The app’s interface may vary depending on network latency, promotions displayed, or the user’s last visited screen. The agent cannot simply plan all steps in advance; it must observe the current screen, identify which UI elements are present, and then choose an action (like tapping the Starbucks icon, swiping to a menu, or selecting the latte). After each action, the state changes, and the agent re-evaluates its options. This dynamic, incremental decision-making is precisely why POMDPs are a suitable framework. In the POMDP formulation for phone automation:

States (S). At each decision point, the agent’s perspective is described as a state, a comprehensive snapshot of all relevant information that could potentially influence the decision-making process. This state encompasses the current UI information (e.g., screenshots, UI trees, OCR-extracted text, icons), the phone’s own status (network conditions, battery level, location), and the task context (the user’s goal—“order a latte”—and the agent’s progress toward it). The state represents the complete, underlying situation of the environment at time t, which may not be directly observable in its entirety.

Actions (A). Given the state $s_t$ at time t, the agent selects from available actions (taps, swipes, typing text, launching apps) that influence the subsequent state. The details of how phone GUI agents make decisions are introduced in §3.2, and the design of the action space is discussed in §3.3.

Transition Dynamics ($T$). When the agent executes an action $a_t$ at time t, it leads to a new state $s_{t+1}$. Some transitions may be deterministic (e.g., tapping a known button reliably opens a menu), while others are uncertain (e.g., network delays, unexpected pop-ups). Mathematically, we have the transition probability $T(s_{t+1} \mid s_t, a_t)$, which describes the likelihood of transitioning from state $s_t$ to state $s_{t+1}$ given action $a_t$.

Observations (O). The agent receives observations $o_t$ at time t which are partial and imperfect reflections of the true state $s_t$. In the phone automation context, these observations could be, for example, a glimpse of the visible UI elements (not the entire UI tree), a brief indication of the network status (such as a signal icon without detailed connection parameters), or a partial view of the battery level indicator. These observations $o_t$ provide the agent with some, but not all, of the information relevant to the state $s_t$. The agent must infer and make decisions based on these limited observations, attempting to reach the desired goal state despite the partial observability. The details of phone GUI agent perception are discussed in §3.1.

Under this POMDP-based paradigm, the agent aims to make decisions that lead to the goal state by observing the current state and choosing appropriate actions. It continuously re-evaluates its strategy as conditions evolve, promoting real-time responsiveness and dynamic adaptation. The agent observes the state $s_t$ at time t, chooses an action $a_t$, and then, based on the resulting observation $o_{t+1}$ and new state $s_{t+1}$, refines its strategy.
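The observe-act-transition cycle described above can be sketched as a toy simulation. This is only an illustrative sketch under stated assumptions: the screen names, the action names, the pop-up model, and the helper functions (`observe`, `policy`, `transition`, `run_episode`) are all hypothetical and do not come from any surveyed system. The hidden state holds the true screen and whether a pop-up is showing; the agent only sees the observation and picks one action at a time.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class State:
    screen: str  # true screen: "home", "menu", "latte_page", or "done"
    popup: bool  # whether an unexpected pop-up covers the screen


# Hypothetical happy path for the "order a latte" example.
NEXT_SCREEN = {"home": "menu", "menu": "latte_page", "latte_page": "done"}
ACTION_FOR = {
    "home": "tap_starbucks_icon",
    "menu": "select_latte",
    "latte_page": "confirm_order",
}


def observe(state: State) -> str:
    """o_t: the agent only sees what is on top of the screen."""
    return "popup" if state.popup else state.screen


def policy(observation: str) -> str:
    """Choose a_t from the current observation only (no fixed plan)."""
    if observation == "popup":
        return "dismiss_popup"
    return ACTION_FOR[observation]


def transition(state: State, action: str, rng: random.Random,
               popup_prob: float) -> State:
    """Sample s_{t+1} given (s_t, a_t); pop-ups make the outcome uncertain."""
    if state.popup:
        # While a pop-up is shown, only dismissing it changes the state.
        return State(state.screen, popup=(action != "dismiss_popup"))
    if action != ACTION_FOR.get(state.screen):
        return state  # a wrong action leaves the screen unchanged
    nxt = NEXT_SCREEN[state.screen]
    popup = nxt != "done" and rng.random() < popup_prob
    return State(nxt, popup)


def run_episode(popup_prob: float, seed: int = 0, max_steps: int = 50):
    """Run the observe -> act -> transition loop until the goal or a step cap."""
    rng = random.Random(seed)
    state, trace = State("home", False), []
    for _ in range(max_steps):
        if state.screen == "done":
            break
        action = policy(observe(state))
        trace.append(action)
        state = transition(state, action, rng, popup_prob)
    return state, trace
```

With `popup_prob=0.0` the episode needs three actions; with pop-ups after every screen change the agent interleaves `dismiss_popup` actions, which is the kind of adjustment a precomputed script could not make.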

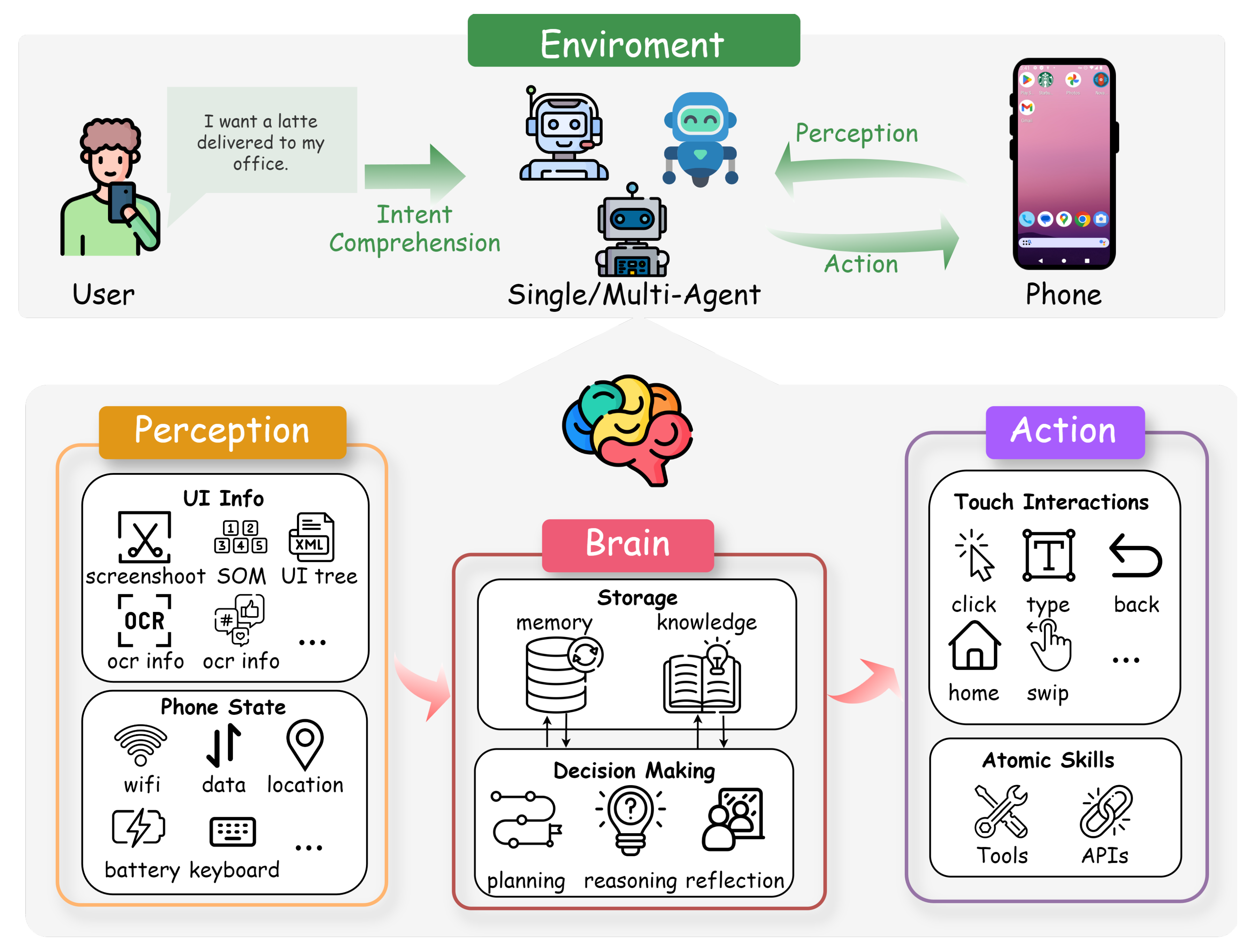

As illustrated in Figure 5, frameworks of phone GUI agents aim to integrate perception, reasoning, and action capabilities into cohesive agents that can interpret user intentions, understand complex UI states, and execute appropriate operations within mobile environments. By examining these frameworks, we can identify best practices, guide future advancements, and choose the right approach for various applications and contexts.

To address limitations in adaptability and scalability, §3.4 introduces multi-agent frameworks, where specialized agents collaborate, enhance efficiency, and handle more diverse tasks in parallel. Finally, §3.5 presents the Plan-Then-Act Framework, which explicitly separates the planning phase from the execution phase. This approach allows agents to refine their conceptual plans before acting, potentially improving both accuracy and robustness.

3.1. Perception in Phone GUI Agents

Perception is a fundamental component of the basic framework for LLM-powered phone GUI agents. It is responsible for capturing and interpreting the state of the mobile environment, enabling the agent to understand the current context and make informed decisions. In the overall pipeline, perception serves as the initial step in the POMDP, providing the necessary input for the reasoning and action modules to operate effectively.

3.1.1. UI Information Perception

UI information is crucial for agents to interact seamlessly with the mobile interface. It can be further categorized into UI tree-based and screenshot-based approaches, supplemented by techniques like Set-of-Mark (SoM) and Icon & OCR enhancements.

A UI tree is a structured, hierarchical representation of the UI elements on a mobile screen Medhi et al. (2013); Räsänen and Saarinen (2015). Each node in the UI tree corresponds to a UI component, containing attributes such as class type, visibility, and resource identifiers.⁹ Early datasets like PixelHelp Li et al. (2020), MoTIF Burns et al. (2021), and UIBert Bai et al. (2021) utilized UI tree data to enable tasks such as mapping natural language instructions to UI actions and performing interactive visual environment interactions. DroidBot-GPT Wen et al. (2023) was the first work to investigate how pre-trained language models can be applied to app automation without modifying the app or the model. DroidBot-GPT uses the UI tree as its primary perception information. The challenge lies in converting the structured UI tree into a format that LLMs can process effectively. DroidBot-GPT addresses this by transforming the UI tree into natural language sentences. Specifically, it extracts all user-visible elements, generates prompts like “A view <name> that can...” for each element, and combines them into a cohesive description of the current UI state. This approach mitigates the issue of excessively long and complex UI trees by presenting the information in a more natural and concise format suitable for LLMs. Subsequent developments, such as Enabling Conversational Interaction Wang et al. (2023b) and AutoDroid Wen et al. (2024), further refined this approach by representing the view hierarchy as HTML. Enabling Conversational Interaction introduces a method to convert the view hierarchy into HTML syntax, mapping Android UI classes to corresponding HTML tags and preserving essential attributes such as class type, text, and resource identifiers. This representation aligns closely with the training data distribution of LLMs, enhancing their ability to perform few-shot learning and improving overall UI understanding. AutoDroid extends this work by developing a GUI parsing module that converts the GUI into a simplified HTML representation using specific HTML tags like <button>, <checkbox>, <scroller>, <input>, and <p>. Additionally, AutoDroid implements automatic scrolling of scrollable components to ensure that comprehensive UI information is available to the LLM, thereby enhancing decision-making accuracy and reducing computational overhead.
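The idea of turning a view hierarchy into simplified HTML can be illustrated with a minimal sketch. It is not the parser of AutoDroid or of Enabling Conversational Interaction: the nested-dict input format, the class-to-tag table `TAG_FOR_CLASS`, and the function `ui_tree_to_html` are assumptions made for this example. Invisible nodes are dropped, unmapped containers are skipped while their children are kept, and every emitted element receives an index the LLM could refer to.

```python
from html import escape
from itertools import count

# Assumed mapping from Android widget classes to the simplified tag set.
TAG_FOR_CLASS = {
    "android.widget.Button": "button",
    "android.widget.CheckBox": "checkbox",
    "android.widget.ScrollView": "scroller",
    "android.widget.EditText": "input",
    "android.widget.TextView": "p",
}


def ui_tree_to_html(root: dict) -> str:
    """Flatten a UI tree (nested dicts) into one simplified HTML line per element."""
    ids = count()
    lines = []

    def visit(node: dict) -> None:
        if not node.get("visible", True):
            return  # skip invisible nodes together with their subtree
        tag = TAG_FOR_CLASS.get(node.get("class", ""))
        if tag is not None:
            text = escape(node.get("text", ""))
            lines.append(f"<{tag} id={next(ids)}>{text}</{tag}>")
        for child in node.get("children", []):
            visit(child)

    visit(root)
    return "\n".join(lines)
```

A real view hierarchy would typically come from an accessibility dump and carry many more attributes; the sketch keeps only class, text, and visibility to show how the description handed to the model becomes shorter than the raw tree.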

Screenshots provide a visual snapshot of the current UI, capturing the appearance and layout of UI elements. Unlike UI trees, which require API access and can become unwieldy with complex hierarchies, screenshots offer a more flexible and often more comprehensive representation of the UI. Additionally, UI trees present challenges such as missing or overlapping controls and the inability to directly reference UI elements programmatically, making screenshots a more practical and user-friendly alternative for quickly assessing and sharing the state of a user interface. AutoUI Zhang and Zhang (2023) introduced a multimodal agent that relies on screenshots for GUI control, eliminating the dependency on UI trees. This approach allows the agent to interact with the UI directly through visual perception, enabling more natural and human-like interactions. AutoUI employs a chain-of-action technique that uses both previously executed actions and planned future actions to guide decision-making, achieving high action type prediction accuracy and efficient task execution. Following AutoUI, a series of multimodal solutions emerged, including MM-Navigator Yan et al. (2023), CogAgent Hong et al. (2024), AppAgent Zhang et al. (2023a), VisionTasker Song et al. (2024b), and MobileGPT Lee et al. (2023). These frameworks leverage screenshots in combination with supplementary information to enhance UI understanding and interaction capabilities.

Set-of-Mark (SoM) is a prompting technique used to annotate screenshots with OCR, icon, and UI tree information, thereby enriching the visual data with textual descriptors Yang et al. (2023). For example, MM-Navigator Yan et al. (2023) uses SoM to label UI elements with unique identifiers, allowing the LLM to reference and interact with specific components more effectively. This method has been widely adopted in subsequent works such as AppAgent Zhang et al. (2023a), VisionDroid Liu et al. (2024c), and OmniParser Lu et al. (2024b), which utilize SoM to enhance the agent’s ability to interpret and act upon UI elements based on visual, textual, and structural cues.
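The bookkeeping behind SoM-style prompting can be sketched without any image processing. The sketch below assumes that element detection has already produced labels and bounding boxes; the functions `assign_marks`, `describe_marks`, and `resolve` are hypothetical helpers, and drawing the marks onto the screenshot is deliberately left out.

```python
from typing import Dict, List, Tuple


def assign_marks(elements: List[dict]) -> Dict[int, dict]:
    """Give each detected UI element a numeric mark and a tap point."""
    marks = {}
    for mark, element in enumerate(elements, start=1):
        left, top, right, bottom = element["bounds"]
        marks[mark] = {
            "label": element["label"],
            "center": ((left + right) // 2, (top + bottom) // 2),
        }
    return marks


def describe_marks(marks: Dict[int, dict]) -> str:
    """Textual legend that accompanies the annotated screenshot in the prompt."""
    return "\n".join(f"[{mark}] {info['label']}" for mark, info in marks.items())


def resolve(marks: Dict[int, dict], mark: int) -> Tuple[int, int]:
    """Translate the mark chosen by the model back into screen coordinates."""
    return marks[mark]["center"]
```

The model then only has to answer with a mark number, and the agent maps that number back to a coordinate, which avoids asking the model to predict pixel positions directly.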

Icon & OCR enhancements provide additional layers of information that complement the visual data, enabling more precise action decisions. For instance, Mobile-Agent-v2 Wang et al. (2024a) integrates OCR and icon data with screenshots to provide a richer context for the LLM, allowing it to interpret and execute more complex instructions that require understanding both text and visual icons. Icon & OCR enhancements are employed in various works, including VisionTasker Song et al. (2024b), MobileGPT Lee et al. (2023), and OmniParser Lu et al. (2024b), to improve the accuracy and reliability of phone GUI agents.

3.1.2. Phone State Perception

Phone state information, such as keyboard status and location data, further contextualizes the agent’s interactions. For example, Mobile-Agent-v2 Wang et al. (2024a) uses keyboard status to determine when text input is required. Location data, while not currently utilized, represents a potential form of phone state information that could be used to recommend nearby services or navigate to specific addresses. This additional state information enhances the agent’s ability to perform context-aware actions, making interactions more intuitive and efficient.

The perception information gathered through UI trees, screenshots, SoM, OCR, and phone state is converted into prompt tokens that the LLM can process. This conversion is crucial for enabling seamless interaction between the perception module and the reasoning and action modules. Detailed methodologies for transforming perception data into prompt formats are discussed in §4.1.

3.2. Brain in Phone GUI Agents

The brain of an LLM-based phone automation agent is its cognitive core, primarily constituted by an LLM. The LLM serves as the agent’s reasoning and decision-making center, enabling it to interpret inputs, generate appropriate responses, and execute actions within the mobile environment. Leveraging the extensive knowledge embedded within LLMs, agents benefit from advanced language understanding, contextual awareness, and the ability to generalize across diverse tasks and scenarios.

3.2.1. Storage

Storage encompasses both memory and knowledge, which are critical for maintaining context and informing the agent’s decision-making processes.

Memory refers to the agent’s ability to retain information from past interactions with users and the environment Xi et al. (2023). This is particularly useful for cross-application operations, where continuity and coherence are essential for completing multi-step tasks. For example, Mobile-Agent-v2 Wang et al. (2024a) integrates a memory unit that records task-related focus content from historical screens. This memory is accessed by the decision-making module when generating operations, ensuring that the agent can reference and update relevant information dynamically.

Knowledge pertains to the agent’s understanding of phone automation tasks and the functionalities of various apps. This knowledge can originate from multiple sources:

Pre-trained Knowledge. LLMs are inherently equipped with a vast amount of general knowledge, including common-sense reasoning and familiarity with programming and markup languages such as HTML. This pre-existing knowledge allows the agent to interpret and generate meaningful actions based on the UI representations.

Domain-Specific Training. Some agents enhance their knowledge by training on phone automation-specific datasets. Works such as AutoUI Zhang and Zhang (2023), CogAgent Hong et al. (2024), ScreenAI Baechler et al. (2024), CoCo-agent Ma et al. (2024), and Ferret-UI You et al. (2024) have trained LLMs on datasets tailored for mobile UI interactions, thereby improving their capability to understand and manipulate mobile interfaces effectively. For a more detailed discussion of knowledge acquisition through model training, see §4.2.

Knowledge Injection. Agents can enhance their decision-making by incorporating knowledge derived from exploratory interactions and stored contextual information. This involves utilizing data collected during offline exploration phases or from observed human demonstrations to inform the LLM’s reasoning process. For instance, AutoDroid Wen et al. (2024) explores app functionalities and records UI transitions in a UI Transition Graph (UTG) memory, which are then used to generate task-specific prompts for the LLM. Similarly, AppAgent Zhang et al. (2023a) compiles knowledge from autonomous interactions and human demonstrations into structured documents, enabling the LLM to make informed decisions based on comprehensive UI state information and task requirements.
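A UI transition memory of the kind mentioned above can be pictured as a small directed graph. The following is a minimal sketch, not AutoDroid's actual data structure: the class `UITransitionGraph`, its methods, and the use of breadth-first search to recover an action sequence are assumptions chosen for illustration.

```python
from collections import defaultdict, deque
from typing import Dict, List, Optional


class UITransitionGraph:
    """Records which action led from one screen to another during exploration."""

    def __init__(self) -> None:
        # screen -> {action: next_screen}
        self.edges: Dict[str, Dict[str, str]] = defaultdict(dict)

    def record(self, src: str, action: str, dst: str) -> None:
        self.edges[src][action] = dst

    def path_to(self, start: str, goal: str) -> Optional[List[str]]:
        """Breadth-first search for a shortest known action sequence."""
        queue = deque([(start, [])])
        seen = {start}
        while queue:
            screen, actions = queue.popleft()
            if screen == goal:
                return actions
            for action, nxt in self.edges.get(screen, {}).items():
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append((nxt, actions + [action]))
        return None  # the goal was never reached during exploration
```

A path recovered this way could be rendered as text and placed in the prompt as a hint, so that the LLM reasons with knowledge collected offline rather than from the current screen alone.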

3.2.2. Decision Making

Decision Making is the process by which the agent determines the appropriate actions to perform based on the current perception and stored information Xi et al. (2023). The LLM processes the input prompts, which include the current UI state, historical interactions from memory, and relevant knowledge, to generate action sequences that accomplish the assigned tasks.

Planning involves devising a sequence of actions to achieve a specific task goal Song et al. (2023a); Xi et al. (2023). Effective planning is essential for decomposing complex tasks into manageable steps and adapting to changes in the environment. For instance, Mobile-Agent-v2 Wang et al. (2024a) incorporates a planning agent that generates task progress based on historical operations, ensuring effective operation generation by the decision agent. Additionally, approaches like Dynamic Planning of Thoughts (D-PoT) have been proposed to dynamically adjust plans based on environmental feedback and action history, significantly improving accuracy and adaptability in task execution Zhang et al. (2024f).

Reasoning enables the agent to interpret and analyze information to make informed decisions Chen et al. (2024e); Gandhi et al. (2024); Plaat et al. (2024). It involves understanding the context, evaluating possible actions, and selecting the most appropriate ones to achieve the desired outcome. By leveraging chain-of-thought (CoT) prompting Wei et al. (2022), LLMs enhance their reasoning capabilities, allowing them to think step by step and handle intricate decision-making processes. This structured approach facilitates the generation of coherent and logical action sequences, ensuring that the agent can navigate complex UI interactions effectively.

Reflection allows the agent to assess the outcomes of its actions and make necessary adjustments to improve performance Shinn et al. (2024). It involves evaluating whether the executed actions meet the expected results and identifying any discrepancies or errors. For example, Mobile-Agent-v2 Wang et al. (2024a) includes a reflection agent that evaluates whether the decision agent’s operations align with the task goals. If discrepancies are detected, the reflection agent generates appropriate remedial measures to correct the course of action. This continuous feedback loop enhances the agent’s reliability and ensures that it can recover from unexpected states or errors during task execution.

By integrating robust planning, advanced reasoning, and reflective capabilities, the Decision Making component of the Brain ensures that LLM-powered phone GUI agents can perform tasks intelligently and adaptively. These mechanisms enable the agents to handle a wide range of scenarios, maintain task continuity, and improve their performance over time through iterative learning and adjustment.

3.3. Action in Phone GUI Agents

The Action component is a critical part of LLM-powered phone GUI agents, responsible for executing decisions made by the Brain within the mobile environment. By bridging high-level commands generated by the LLM with low-level device operations, the agent can effectively interact with the phone’s UI and system functionalities. Actions encompass a wide variety of operations, ranging from simple interactions like tapping a button to complex tasks such as launching applications or modifying device settings. Execution mechanisms leverage tools like Android’s UI Automator Patil et al. (2016), iOS’s XCTest Lodi (2021), or popular automation frameworks such as Appium Singh et al. (2014) and Selenium Gundecha (2015); Sinclair to send precise commands to the phone. Through these mechanisms, the agent ensures that decisions are translated into tangible, reliable operations on the device.

The types of actions in phone GUI agents are diverse and can be broadly categorized based on their functionalities. Table 1 summarizes these actions, providing a clear overview of the operations agents can perform.

The above categories reflect the key interactions required for phone automation. Touch interactions form the foundation of UI navigation, while gesture-based actions add flexibility for dynamic control. Typing and input enable text-based operations, whereas system operations and media controls extend the agent’s capabilities to broader device functionalities. By combining these actions, phone GUI agents can achieve high accuracy and adaptability in executing user tasks, ensuring a seamless experience even in complex and dynamic environments.
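One way to picture the bridge from high-level decisions to low-level device operations is a typed action space that is translated into device commands. The sketch below assumes an Android device driven through adb's `input` shell command, which is a choice made for this example and not a mechanism named in the text; the dataclasses and `to_adb_args` are hypothetical. The function only builds argument lists and does not run anything.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class Tap:  # touch interaction
    x: int
    y: int


@dataclass
class Swipe:  # gesture-based action
    x1: int
    y1: int
    x2: int
    y2: int
    duration_ms: int = 300


@dataclass
class TypeText:  # typing and input
    text: str


@dataclass
class KeyEvent:  # system or media key, e.g. "KEYCODE_HOME"
    keycode: str


Action = Union[Tap, Swipe, TypeText, KeyEvent]


def to_adb_args(action: Action) -> List[str]:
    """Translate one abstract action into an `adb shell input ...` argument list."""
    base = ["adb", "shell", "input"]
    if isinstance(action, Tap):
        return base + ["tap", str(action.x), str(action.y)]
    if isinstance(action, Swipe):
        values = [action.x1, action.y1, action.x2, action.y2, action.duration_ms]
        return base + ["swipe"] + [str(v) for v in values]
    if isinstance(action, TypeText):
        # `input text` expects spaces to be written as %s.
        return base + ["text", action.text.replace(" ", "%s")]
    if isinstance(action, KeyEvent):
        return base + ["keyevent", action.keycode]
    raise TypeError(f"unsupported action: {action!r}")
```

Passing the result to `subprocess.run(..., check=True)` would send the command to a connected device. Keeping the abstract action separate from its translation means the same action space could also be mapped onto UI Automator or Appium calls.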

3.4. Multi-Agent Framework

While single-agent frameworks based on LLMs have achieved significant progress in screen understanding and reasoning, they operate as isolated entities Dorri et al. (2018); Torreno et al. (2017). This isolation limits their flexibility and scalability in complex tasks that may require diverse, coordinated skills and adaptive capabilities. Single-agent systems may struggle with tasks that demand continuous adjustments based on real-time feedback, multi-stage decision-making, or specialized knowledge in different domains. Furthermore, they lack the ability to leverage shared knowledge or collaborate with other agents, reducing their effectiveness in dynamic environments Song et al. (2024c); Tan et al.; Wang et al. (2024a); Xi et al. (2023).

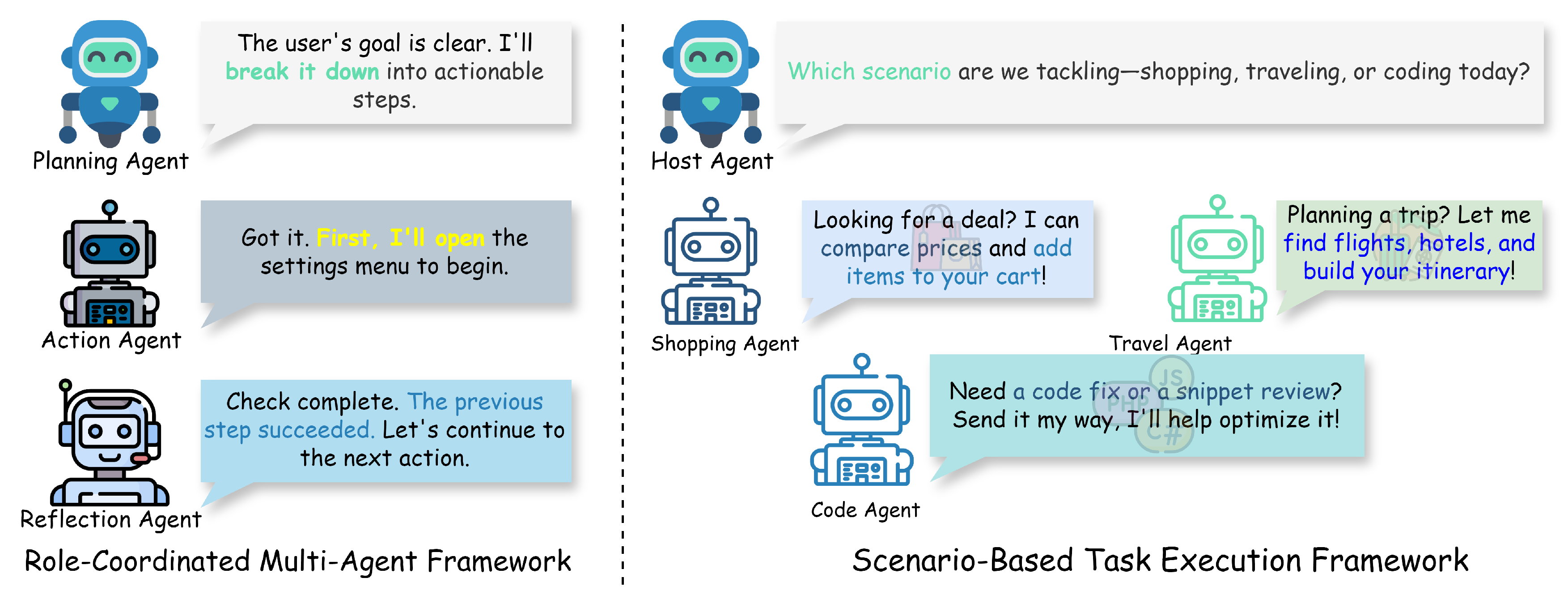

Multi-agent frameworks address these limitations by facilitating collaboration among multiple agents, each with specialized functions or expertise Chen et al. (2019); Li et al. (2024b). This collaborative approach enhances task efficiency, adaptability, and scalability, as agents can perform tasks in parallel or coordinate their actions based on their specific capabilities. As illustrated in Figure 6, multi-agent frameworks in phone automation can be categorized into two primary types: the Role-Coordinated Multi-Agent Framework and the Scenario-Based Task Execution Framework. These frameworks enable more flexible, efficient, and robust solutions in phone automation by either organizing agents based on general functional roles or dynamically assembling specialized agents according to specific task scenarios.

3.4.1. Role-Coordinated Multi-Agent

In the Role-Coordinated Multi-Agent Framework, agents are assigned general functional roles such as planning, decision-making, memory management, reflection, or tool invocation. These agents collaborate through a predefined workflow, with each agent focusing on its specific function to collectively achieve the overall task. This approach is particularly beneficial for tasks that require a combination of these general capabilities, allowing each agent to specialize and optimize its role within the workflow.

For example, in MMAC-Copilot Song et al. (2024c), multiple agents with distinct general functions collaborate as an OS copilot. The Planner strategically manages and allocates tasks to other agents, optimizing workflow efficiency. Meanwhile, the Librarian handles information retrieval and provides foundational knowledge, and the Programmer is responsible for coding and executing scripts, directly interacting with the software environment. The Viewer interprets complex visual information and translates it into actionable commands, while the Video Analyst processes and analyzes video content. Additionally, the Mentor offers strategic oversight and troubleshooting support. Each agent contributes its specialized function to the collaborative workflow, thereby enhancing the system’s overall capability to handle complex interactions with the operating system.

Similarly, in Mobile-Agent-v2 Wang et al. (2024a), three agents with general roles are utilized: a planning agent, a decision agent, and a reflection agent. The planning agent compresses historical actions and state information to provide a concise representation of task progress. The decision agent uses this information to navigate the task effectively, while the reflection agent monitors the outcomes of actions and corrects any errors, ensuring accurate task completion. This role-based collaboration reduces context length, improves task progression, and enhances focus content retention through a memory unit managed by the decision agent.

In general computer automation, Cradle Tan et al. leverages foundational agents with general roles to achieve versatile computer control. Agents specialize in functions like command generation or state monitoring, enabling Cradle to tackle general-purpose tasks across multiple software environments.

3.4.2. Scenario-Based Task Execution

In the Scenario-Based Task Execution Framework, tasks are dynamically assigned to specialized agents based on specific task scenarios or application domains. Each agent is endowed with capabilities tailored to a particular scenario, such as shopping, code editing, or navigation. By assigning tasks to agents specialized in the relevant domain, the system improves task success rates and efficiency.

For instance, MobileExperts Zhang et al. (2024b) forms different expert agents through an Expert Exploration phase. In the exploration phase, each agent receives tailored tasks broken down into sub-tasks to streamline the exploration process. Upon completion of a sub-task, the agent extracts three types of memories from its trajectory: interface memories, procedural memories (tools), and insight memories for use in subsequent execution phases. When a new task arrives, the system dynamically forms an expert team by selecting agents whose expertise matches the task requirements, enabling them to collaboratively execute the task more effectively. Similarly, in the SteP Sodhi et al. (2024) framework, agents are specialized based on specific web scenarios such as shopping, GitLab, maps, Reddit, or CMS platforms. Each scenario agent possesses specific capabilities and knowledge relevant to its domain. When a task is received, it is dynamically assigned to the appropriate scenario agent, which executes the task leveraging its specialized expertise. This approach enhances flexibility and adaptability, allowing the system to handle a wide range of tasks across different domains more efficiently.
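Dynamic assignment of a task to a scenario agent can be sketched as a small router. This is a simplified illustration and not the mechanism of MobileExperts or SteP: the keyword table stands in for whatever task recognition a real system would use (most likely an LLM), and the agent functions and names are hypothetical stubs.

```python
from typing import Callable, Dict, Tuple


def shopping_agent(task: str) -> str:
    return f"[shopping] {task}"


def maps_agent(task: str) -> str:
    return f"[maps] {task}"


def reddit_agent(task: str) -> str:
    return f"[reddit] {task}"


# Registry of scenario agents (stubs standing in for specialized agents).
AGENTS: Dict[str, Callable[[str], str]] = {
    "shopping": shopping_agent,
    "maps": maps_agent,
    "reddit": reddit_agent,
}

# Assumed keyword lists; a stand-in for learned task recognition.
KEYWORDS: Dict[str, Tuple[str, ...]] = {
    "shopping": ("buy", "cart", "price"),
    "maps": ("route", "navigate", "directions"),
    "reddit": ("post", "subreddit", "comment"),
}


def select_scenario(task: str) -> str:
    """Pick the scenario whose keywords overlap most with the task words."""
    words = set(task.lower().split())
    scores = {name: len(words & set(kws)) for name, kws in KEYWORDS.items()}
    best = max(scores, key=scores.get)
    if scores[best] == 0:
        raise ValueError(f"no scenario agent matches task: {task!r}")
    return best


def dispatch(task: str) -> str:
    """Route the task to the selected scenario agent and return its result."""
    return AGENTS[select_scenario(task)](task)
```

The explicit failure case for unmatched tasks hints at why agent selection is itself a design problem: a router that guesses wrongly sends the task to an agent without the relevant expertise.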

Through dynamic task assignment and specialization, the Scenario-Based Task Execution Framework optimizes multi-agent systems to adapt to diverse and evolving contexts, significantly enhancing both the efficiency and effectiveness of task execution. As illustrated in Figure 6, the Role-Coordinated Framework relies on agents with general functional roles collaborating through a fixed workflow, suitable for tasks requiring a combination of general capabilities. In contrast, the Scenario-Based Framework dynamically assigns tasks to specialized agents tailored to specific scenarios, providing a flexible structure that adapts to the varying complexity and requirements of real-world tasks.

Despite the potential of multi-agent frameworks in phone automation, several challenges remain. In the Role-Coordinated Framework, coordinating agents with general functions requires efficient workflow design and may introduce overhead in communication and synchronization. In the Scenario-Based Framework, maintaining and updating a diverse set of specialized agents can be resource-intensive, and dynamically assigning tasks requires effective task recognition and agent selection mechanisms. Future research could explore hybrid frameworks that combine the strengths of both approaches, leveraging general functional agents while also incorporating specialized scenario agents as needed. Additionally, developing advanced algorithms for agent collaboration, learning, and adaptation can further enhance the intelligence and robustness of multi-agent systems. Integrating external knowledge bases, real-time data sources, and user feedback can also improve agents’ decision-making capabilities and adaptability in dynamic environments.

3.5. Plan-Then-Act Framework

While single-agent and multi-agent frameworks enhance adaptability and scalability, some tasks benefit from explicitly separating high-level planning from low-level execution. This leads to what we term the Plan-Then-Act Framework. In this paradigm, the agent first formulates a conceptual plan—often expressed as human-readable instructions—before grounding and executing these instructions on the device’s UI.

The Plan-Then-Act approach addresses a fundamental challenge: although LLMs and multimodal LLMs (MLLMs) excel at interpreting instructions and reasoning about complex tasks, they frequently struggle to precisely map their textual plans to concrete UI actions. By decoupling these stages, the agent can focus on what should be done (planning) and then handle how to do it on the UI (acting). Recent works highlight the effectiveness of this approach:

• SeeAct Zheng et al. (2024) demonstrates that GPT-4V(ision) Achiam et al. (2023) can generate coherent plans for navigating websites. However, bridging the gap between textual plans and underlying UI elements remains challenging. By clearly delineating planning from execution, the system can better refine its plan before finalizing actions.

• UGround Gou et al. (2024) and related efforts You et al. (2024); Zhang et al. (2024d) emphasize advanced visual grounding. Under a Plan-Then-Act framework, the agent first crafts a task solution plan, then relies on robust visual grounding models to locate and manipulate UI components. This modular design enhances performance across diverse GUIs and platforms, as the grounding model can evolve independently of the planning mechanism.

• LiMAC (Lightweight Multi-modal App Control) Christianos et al. (2024) also embodies a Plan-Then-Act spirit. LiMAC’s Action Transformer (AcT) determines the required action type (the plan), and a specialized VLM is invoked only for natural language needs. By structuring decision-making and text generation into distinct stages, LiMAC improves responsiveness and reduces compute overhead, ensuring that reasoning and UI interaction are cleanly separated.

• ClickAgent Hoscilowicz et al. (2024) similarly employs a two-phase approach. The MLLM handles reasoning and action planning, while a separate UI location model pinpoints the relevant coordinates on the screen. Here, the MLLM’s plan of which element to interact with is formed first, and only afterward is the element’s exact location identified and the action executed.

The Plan-Then-Act Framework offers several advantages. Modularity allows improvements in planning without requiring changes to the UI grounding and execution modules, and vice versa. Error Mitigation enables the agent to revise its plan before committing to actions; if textual instructions are ambiguous or infeasible, they can be corrected, reducing wasted actions and improving reliability. Additionally, improved visual grounding models, OCR enhancements, and scenario-specific knowledge can further refine the Plan-Then-Act approach, making agents more adept at handling intricate, real-world tasks. In summary, the Plan-Then-Act Framework represents a natural evolution in designing LLM-powered phone GUI agents. By separating planning from execution, agents can achieve clearer reasoning, improved grounding, and ultimately more effective and reliable task completion.
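The separation of planning from grounding can be made concrete with a two-stage sketch. Everything here is an illustrative assumption: the planner is passed in as a plain callable standing in for an LLM, the grounder is a toy word-overlap matcher rather than a visual grounding model, and the screen is treated as static even though a real agent would re-observe it after every action.

```python
from typing import Callable, Dict, List, Optional, Tuple

Element = Dict[str, object]  # {"text": str, "center": (x, y)}


def ground(step: str, elements: List[Element]) -> Optional[Tuple[int, int]]:
    """Toy grounder: pick the element whose text shares the most words with the step."""
    step_words = set(step.lower().split())
    best, best_score = None, 0
    for element in elements:
        score = len(step_words & set(str(element["text"]).lower().split()))
        if score > best_score:
            best, best_score = element, score
    return best["center"] if best else None


def plan_then_act(task: str,
                  planner: Callable[[str], List[str]],
                  elements: List[Element]) -> List[Tuple[str, Tuple[int, int]]]:
    """Stage 1 produces textual steps; stage 2 grounds each step to coordinates."""
    actions = []
    for step in planner(task):
        point = ground(step, elements)
        if point is None:
            # Surfacing the failure lets the plan be revised before acting.
            raise LookupError(f"cannot ground step: {step!r}")
        actions.append(("tap", point))
    return actions
```

Because `planner` and `ground` only meet through plain-text steps, either one can be replaced, for example by a stronger grounding model, without touching the other, which is the modularity argument made above.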

4. LLMs for Phone Automation

LLMs Achiam et al. (2023); Brown (2020); Radford (2018a); Radford et al. (2019) have emerged as a transformative technology in phone automation, bridging natural language inputs with executable actions. By leveraging their advanced language understanding, reasoning, and generalization capabilities, LLMs enable agents to interpret complex user intents, dynamically interact with diverse mobile applications, and effectively manipulate GUIs.

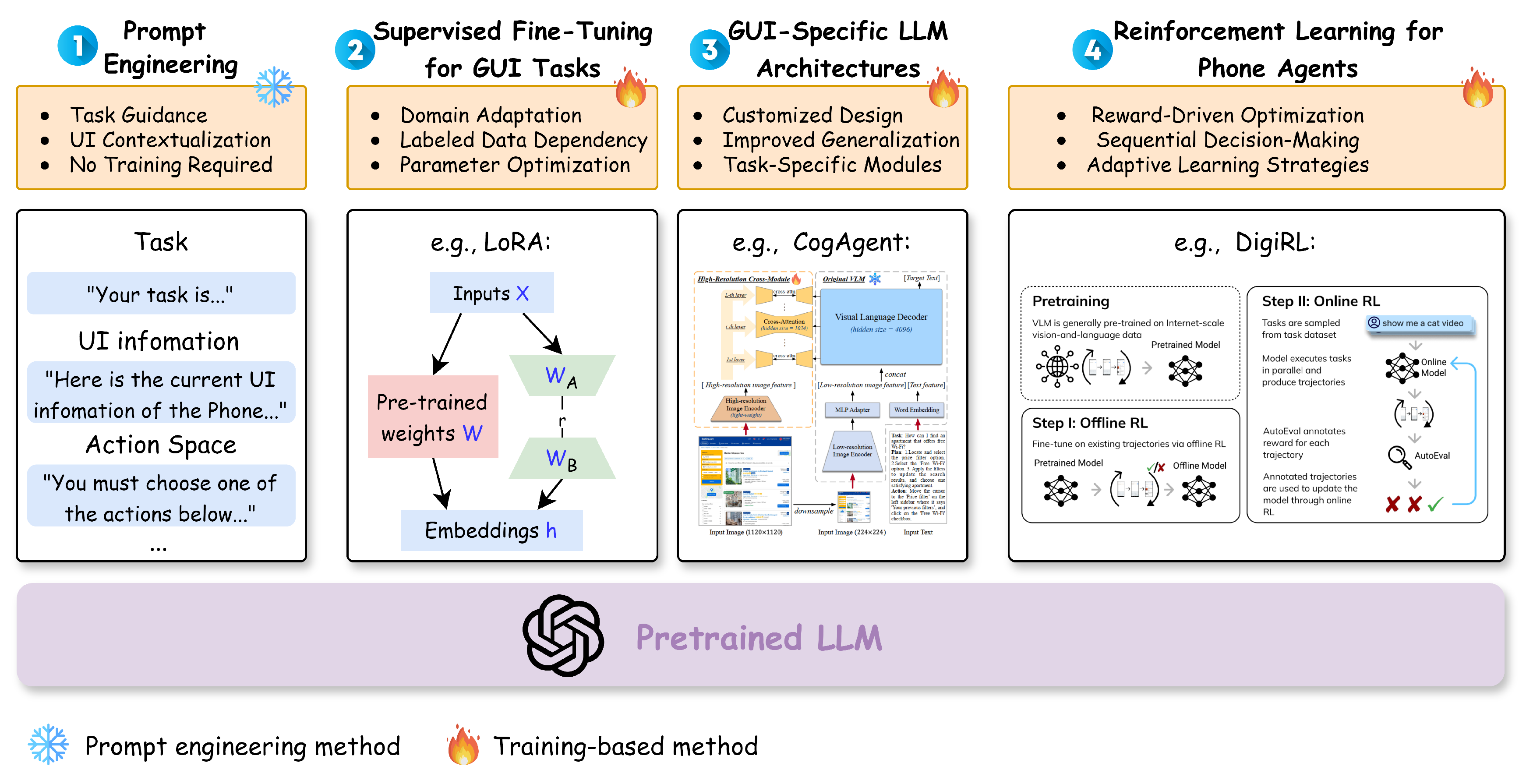

In this section, we explore two primary approaches to leveraging LLMs for phone automation: Training-Based Methods and Prompt Engineering. Figure 7 illustrates the differences between these two approaches in the context of phone automation.

Training-Based Methods involve adapting LLMs specifically for phone automation tasks through techniques like supervised fine-tuning Chen et al. (2024b); Cheng et al. (2024); Lu et al. (2024a); Pawlowski et al. (2024) and reinforcement learning Bai et al. (2024); Song et al. (2024a); Wang et al. (2024g). These methods aim to enhance the models’ capabilities by training them on GUI-specific data, enabling them to understand and interact with GUIs more effectively.

Prompt Engineering, on the other hand, focuses on designing input prompts to guide pre-trained LLMs to perform desired tasks without additional training Chen et al. (2022); Wei et al. (2022); Yao et al. (2024). By carefully crafting prompts that include relevant information such as task descriptions, interface states, and action histories, users can influence the model’s behavior to achieve specific automation goals Song et al. (2023b); Wen et al. (2023); Zhang et al. (2023a).

4.1. Prompt Engineering

LLMs like the GPT series Brown (2020); Radford (2018a); Radford et al. (2019) have demonstrated remarkable capabilities in understanding and generating human-like text. These models have revolutionized natural language processing by leveraging massive amounts of data to learn complex language patterns and representations.

Prompt engineering is the practice of designing input prompts to effectively guide LLMs to produce desired outputs for specific tasks Chen et al. (2022); Wei et al. (2022); Yao et al. (2024). By carefully crafting the prompts, users can influence the model’s behavior without the need for additional training or fine-tuning. This approach allows for leveraging the general capabilities of pre-trained models to perform a wide range of tasks by simply providing appropriate instructions or examples in the prompt.

In the context of phone automation, prompt engineering enables general-purpose LLMs to perform automation tasks on mobile devices. Many recent works apply prompt engineering to phone automation Liu et al. (2024c); Lu et al. (2024b); Song et al. (2023b); Taeb et al. (2024); Wang et al. (2024a,b); Wen et al. (2023); Yan et al. (2023); Yang et al. (2024); Zhang et al. (2023a, 2024b). These works leverage the strengths of LLMs in natural language understanding and reasoning to interpret user instructions and generate corresponding actions on mobile devices.

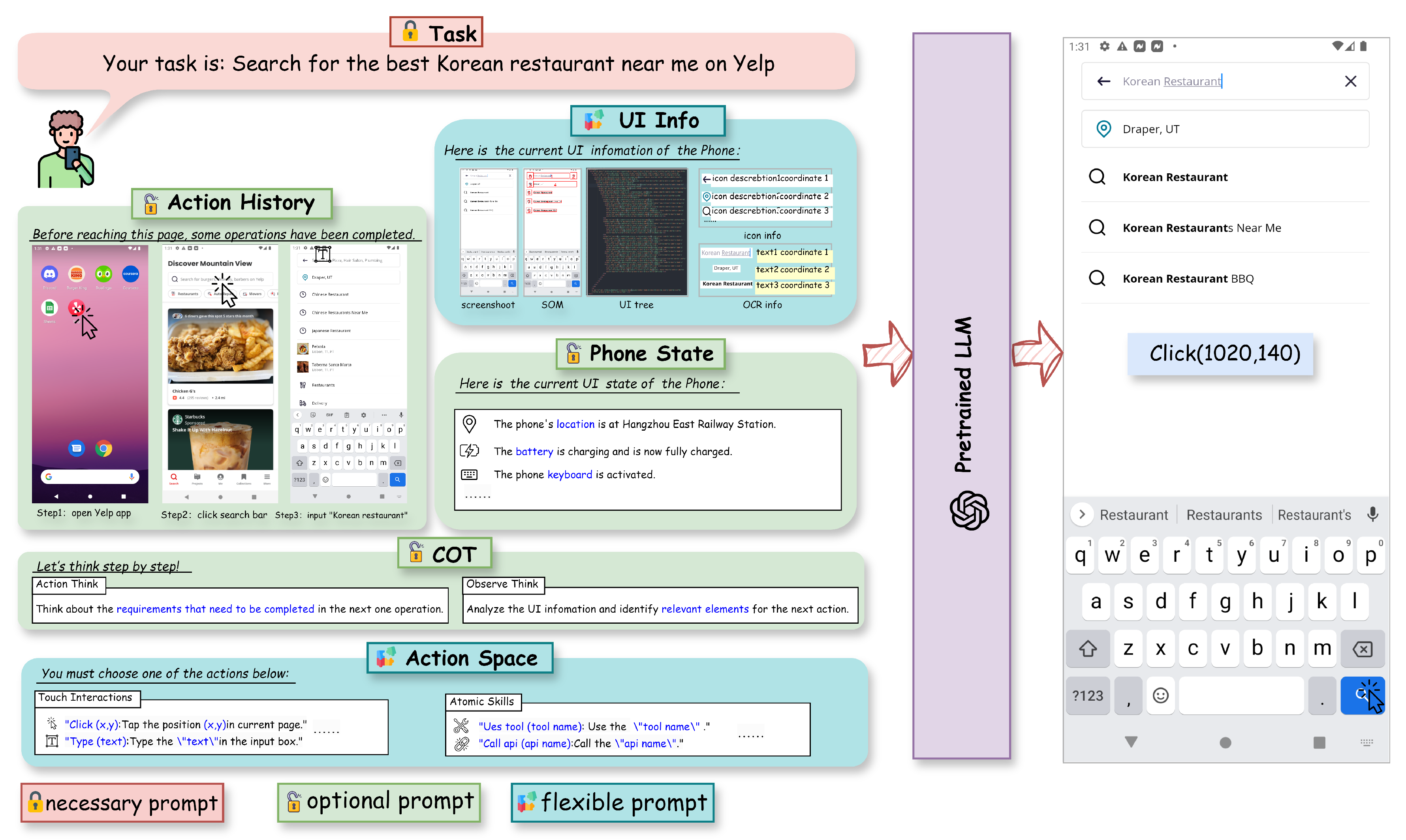

The fundamental approach to phone automation through prompt engineering is to construct prompts that carry a comprehensive set of information. These prompts include a detailed task description, such as searching for the best Korean restaurant on Yelp. They also integrate the current UI information of the phone, which may encompass screenshots, SoM annotations, UI tree structures, icon details, and OCR data. Additionally, the prompts account for the phone’s real-time state, including its location, battery level, and keyboard status, as well as any pertinent action history and the range of possible actions (the action space). A CoT prompt Wei et al. (2022) is also a crucial component, guiding the model’s reasoning about the next operation. The LLM then analyzes this prompt and determines the next action to execute. This process is depicted in Figure 8.
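As a rough illustration of how such a prompt could be assembled, the sketch below concatenates the components listed above (task, UI information, device state, action history, action space, and a CoT instruction) into one text prompt. The function name `build_prompt`, the field layout, and the wording of the CoT line are assumptions made for this example; individual systems use their own templates.

```python
from typing import Dict, List


def build_prompt(task: str,
                 ui_elements: List[str],
                 device_state: Dict[str, str],
                 history: List[str],
                 action_space: List[str]) -> str:
    """Assemble a single text prompt from the usual components."""
    lines = [f"Task: {task}", "", "Current UI elements:"]
    # Index every element so the model can refer to it unambiguously.
    lines += [f"  [{i}] {e}" for i, e in enumerate(ui_elements)]
    lines.append("")
    lines.append("Device state: "
                 + ", ".join(f"{k}={v}" for k, v in device_state.items()))
    lines.append("Action history: "
                 + ("; ".join(history) if history else "none"))
    lines.append("Available actions: " + ", ".join(action_space))
    lines.append("")
    # Chain-of-thought style instruction (wording is illustrative only).
    lines.append("Think step by step about the next operation, "
                 "then output one action.")
    return "\n".join(lines)


prompt = build_prompt(
    task="Search for the best Korean restaurant on Yelp",
    ui_elements=["Button: Search", "EditText: (empty)"],
    device_state={"keyboard": "hidden", "battery": "80%"},
    history=[],
    action_space=["tap(index)", "type(index, text)", "back()"],
)
```

For multimodal agents the screenshot would be passed alongside this text rather than serialized into it.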

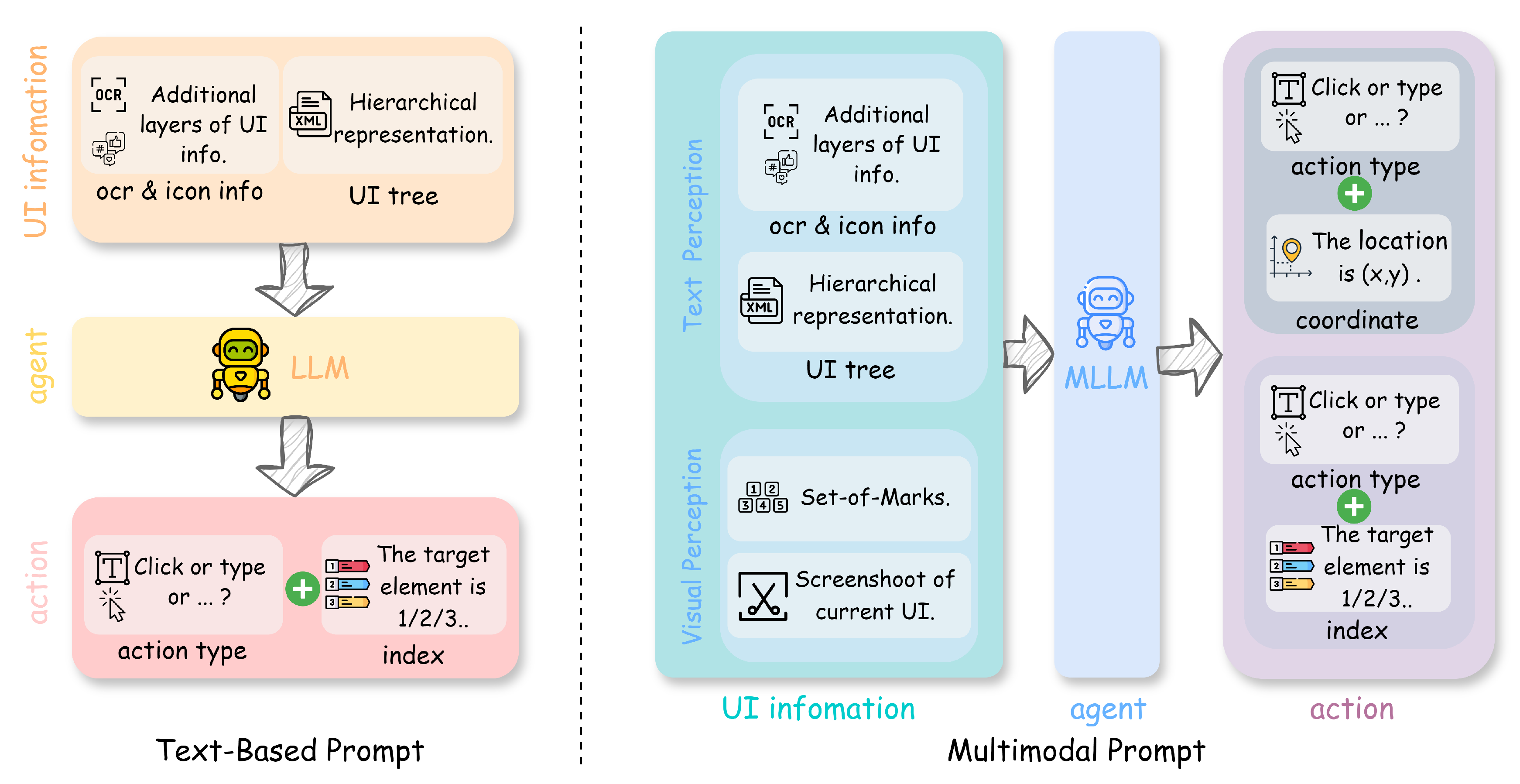

This section explores the application of prompt engineering in phone automation, categorizing related works based on the type of prompts used: Text-Based Prompt and Multimodal Prompt. As illustrated in Figure 9, the approach to automation significantly diverges between these two prompt types.

Table 2 summarizes notable methods, highlighting their main UI information, the type of model used, and other relevant details such as task types and grounding strategies.

4.1.1. Text-Based Prompt

In text-based prompt automation, the primary architecture involves a single text-modal LLM serving as the agent for mobile device automation. This agent operates by interpreting UI information presented in the form of a UI tree. Notably, the approaches discussed here have primarily used UI tree data and have not extensively incorporated OCR text and icon information. We believe that relying solely on OCR and icon information is insufficient to fully represent the screen UI; instead, as demonstrated in Mobile-Agent-v2 Wang et al. (2024a), they are best used as auxiliary information alongside screenshots. Text-based prompt agents make decisions by selecting elements from a list of candidates based on the textual description of the UI elements. For instance, to initiate a search, the LLM identifies and selects the search button by its index within the UI tree rather than by its screen coordinates, as depicted in Figure 9.
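Index-based selection from a UI tree can be sketched as follows. The tree format (nested dictionaries with `class`, `text`, `clickable`, and `children` keys) and the helper names are assumptions for illustration; real agents typically obtain the tree from the platform's accessibility or UI dump facilities and use their own filtering rules.

```python
import re
from typing import Any, Dict, List, Optional

Node = Dict[str, Any]


def flatten_ui_tree(node: Node, out: Optional[List[Node]] = None) -> List[Node]:
    """Collect clickable nodes in depth-first (pre-order) order."""
    if out is None:
        out = []
    if node.get("clickable"):
        out.append(node)
    for child in node.get("children", []):
        flatten_ui_tree(child, out)
    return out


def describe(candidates: List[Node]) -> str:
    """Render the candidate list that goes into the text prompt."""
    return "\n".join(
        f"[{i}] {c.get('class', 'View')}: {c.get('text', '')}"
        for i, c in enumerate(candidates)
    )


def parse_choice(reply: str, n: int) -> Optional[int]:
    """Extract the first integer from the reply; reject out-of-range picks."""
    m = re.search(r"\d+", reply)
    if m is None:
        return None
    idx = int(m.group())
    return idx if 0 <= idx < n else None


tree = {
    "class": "FrameLayout",
    "children": [
        {"class": "Button", "text": "Search", "clickable": True},
        {"class": "TextView", "text": "Title"},
        {"class": "LinearLayout", "children": [
            {"class": "EditText", "text": "Query", "clickable": True},
        ]},
    ],
}
candidates = flatten_ui_tree(tree)
listing = describe(candidates)
choice = parse_choice("I choose element 1", len(candidates))
```

The agent never needs pixel coordinates here: the chosen index is resolved back to a node, and the platform performs the click on that node.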

Enabling Conversational Wang et al. (2023b) marked a significant step in this field. It explored the use of task descriptions, action spaces, and UI trees to map instructions to UI actions. However, it focused solely on executing individual instructions and did not address sequential decision-making. DroidBot-GPT Wen et al. (2023) is a landmark in applying pre-trained language models to app automation. It is the first to explore the use of LLMs for app automation without requiring modifications to the app or the model. DroidBot-GPT perceives UI trees, which are structural representations of the app’s UI, and integrates user-provided tasks along with action spaces and output requirements. This allows the model to engage in sequential decision-making and automate tasks effectively. AutoDroid Wen et al. (2024) takes this concept further. It employs a UI Transition Graph (UTG) generated through random exploration to create an App Memory. This memory, combined with the commonsense knowledge of LLMs, enhances decision-making and significantly advances the capabilities of phone GUI agents. MobileGPT Lee et al. (2023) introduces a hierarchical decision-making process. It simulates human cognitive processes (exploration, selection, derivation, and recall) to improve the efficiency and reliability of LLMs in mobile task automation. Lastly, AXNav Taeb et al. (2024) applies prompt engineering to accessibility testing. AXNav interprets natural language instructions and executes them through an LLM, streamlining the testing process and improving the detection of accessibility issues, thus aiding the manual testing workflows of QA professionals.
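To make the idea of a UI Transition Graph concrete, the sketch below stores explored transitions as a graph from screens to actions and looks up a shortest action sequence between two screens. This is only an illustrative data structure under assumed names (`record`, `find_path`, string screen identifiers); it is not AutoDroid's implementation, and how such a memory is combined with the LLM is not shown.

```python
from collections import deque
from typing import Dict, List, Optional

# screen name -> {action label: resulting screen name}
UTG = Dict[str, Dict[str, str]]


def record(utg: UTG, src: str, action: str, dst: str) -> None:
    """Store one transition observed during exploration."""
    utg.setdefault(src, {})[action] = dst
    utg.setdefault(dst, {})  # make sure the destination is a known screen


def find_path(utg: UTG, start: str, goal: str) -> Optional[List[str]]:
    """Breadth-first search for a shortest action sequence start -> goal."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        screen, path = queue.popleft()
        if screen == goal:
            return path
        for action, nxt in utg.get(screen, {}).items():
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, path + [action]))
    return None  # goal was never reached during exploration


utg: UTG = {}
record(utg, "home", "tap search", "search")
record(utg, "search", "type query", "results")
record(utg, "home", "tap settings", "settings")
route = find_path(utg, "home", "results")
```

A graph like this lets an agent recall how a screen was reached before, instead of rediscovering the route on every task.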

Each of these contributions, while unique in their approach, is united by the common thread of Prompt Engineering. They demonstrate the versatility and potential of text-based prompt automation in enhancing the interaction between LLMs and mobile applications.

4.1.2. Multimodal Prompt

With the advancement of large pre-trained models, Multimodal Large Language Models (MLLMs) have demonstrated exceptional performance across various domains Achiam et al. (2023); Bai et al. (2023); Chen et al. (2024c,d); Li et al. (2023b); Liu et al. (2024a); Wang et al. (2024e, 2023d); Ye et al. (2023), significantly contributing to the evolution of phone automation. Unlike text-only models, multimodal models integrate visual and textual information, addressing limitations such as the inability to access UI trees, missing control information, and inadequate global screen representation. By leveraging screenshots for decision-making, multimodal models facilitate a more natural simulation of human interactions with mobile devices, enhancing both accuracy and robustness in automated operations.

The fundamental framework for multimodal phone automation is illustrated in Figure 9. Multimodal prompts integrate visual perception (e.g., screenshots) and textual information (e.g., UI tree, OCR, and icon data) to guide MLLMs in generating actions. The action outputs can be categorized into two methods: SoM-Based Indexing Methods and Direct Coordinate Output Methods. These methods define how the agent identifies and interacts with UI elements, either by referencing annotated indices or by pinpointing precise coordinates.

SoM-Based Indexing Methods. SoM-based methods involve annotating UI elements with unique identifiers within the screenshot, allowing the MLLM to reference these elements by their indices when generating actions. This approach mitigates the challenges associated with direct coordinate outputs, such as precision and adaptability to dynamic interfaces. MM-Navigator Yan et al. (2023) represents a breakthrough in zero-shot GUI navigation using GPT-4V Achiam et al. (2023). By employing SoM prompting Yang et al. (2023), MM-Navigator annotates screenshots through OCR and icon recognition, assigning unique numeric IDs to actionable widgets. This enables GPT-4V to generate indexed action descriptions rather than precise coordinates, enhancing action execution accuracy. Building upon the SoM-based approach, AppAgent Zhang et al. (2023a) integrates autonomous exploration and human demonstration observation to construct a comprehensive knowledge base. This framework allows the agent to navigate and operate smartphone applications through simplified action spaces, such as tapping and swiping, without requiring backend system access. Tested across 10 different applications and 50 tasks, AppAgent showcases superior adaptability and efficiency in handling diverse high-level tasks, further advancing multimodal phone automation. OmniParser Lu et al. (2024b) enhances the SoM-based method by introducing a robust screen parsing technique. It combines fine-tuned interactive icon detection models and functional captioning models to convert UI screenshots into structured elements with bounding boxes and labels. This comprehensive parsing significantly improves GPT-4V’s ability to generate accurately grounded actions, ensuring reliable operation across multiple platforms and applications.
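The indexing step behind SoM-style prompting can be sketched as below: detected widget boxes receive numeric marks, and a mark chosen by the model is resolved to a tap point. The reading-order sort, the 1-based numbering, and the function names are assumptions for this example; drawing the marks onto the screenshot and the detection step itself are omitted.

```python
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def assign_marks(boxes: List[Box]) -> Dict[int, Box]:
    """Give each box a numeric ID in reading order (top, then left)."""
    ordered = sorted(boxes, key=lambda b: (b[1], b[0]))
    return {i + 1: b for i, b in enumerate(ordered)}


def mark_to_tap(marks: Dict[int, Box], mark_id: int) -> Tuple[int, int]:
    """Resolve a mark chosen by the model to the center of its box."""
    left, top, right, bottom = marks[mark_id]
    return ((left + right) // 2, (top + bottom) // 2)


# Hypothetical detections, e.g. from OCR and icon recognition.
boxes = [(100, 400, 300, 500), (0, 0, 200, 100), (220, 0, 420, 100)]
marks = assign_marks(boxes)
tap_point = mark_to_tap(marks, 2)
```

The model only has to output a small integer, so pixel-level precision is handled by the detector rather than by the MLLM.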

Direct Coordinate Output Methods. Direct coordinate output methods enable MLLMs to determine the exact (x, y) positions of UI elements from screenshots, facilitating precise interactions without relying on indexed references. This approach leverages the advanced visual grounding capabilities of MLLMs to interpret and interact with the UI elements directly. VisionTasker Song et al. (2024b) introduces a two-stage framework that combines vision-based UI understanding with LLM task planning. Utilizing models like YOLOv8 Varghese and Sambath (2024) and PaddleOCR Du et al. (2020), VisionTasker parses screenshots to identify widgets and textual information, transforming them into natural language descriptions. This structured semantic representation allows the LLM to perform step-by-step task planning, enhancing the accuracy and practicality of automated mobile task execution. The Mobile-Agent series Wang et al. (2024a,b) leverages visual perception tools to accurately identify and locate both visual and textual UI elements within app screenshots. Mobile-Agent-v1 utilizes coordinate-based actions, enabling precise interaction with UI elements. Mobile-Agent-v2 extends this by introducing a multi-agent architecture comprising planning, decision, and reflection agents. MobileExperts Zhang et al. (2024b) advances the direct coordinate output method by incorporating tool formulation and multi-agent collaboration. This dynamic, tool-enabled agent team employs a dual-layer planning mechanism to efficiently execute multi-step operations while reducing reasoning costs by approximately 22%. By dynamically assembling specialized agents and utilizing reusable code block tools, MobileExperts demonstrates enhanced intelligence and operational efficiency in complex phone automation tasks. VisionDroid Liu et al. (2024c) applies MLLMs to automated GUI testing, focusing on detecting non-crash functional bugs through vision-based UI understanding. By aligning textual and visual information, VisionDroid enables the MLLM to comprehend GUI semantics and operational logic, employing step-by-step task planning to enhance bug detection accuracy. Evaluations across multiple datasets and real-world applications highlight VisionDroid’s superior performance in identifying and addressing functional bugs.
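When a model emits coordinates directly, the agent has to parse and validate them before acting. The sketch below assumes a hypothetical output convention, `tap(x, y)` with coordinates normalized to the range 0 to 1, and converts it to pixel positions; the convention, the regular expression, and the function name `parse_tap` are illustrative choices, since each system defines its own output format.

```python
import re
from typing import Optional, Tuple

# Matches e.g. "tap(0.5, 0.25)"; an assumed format, not a standard.
_TAP = re.compile(r"tap\(\s*([0-9]*\.?[0-9]+)\s*,\s*([0-9]*\.?[0-9]+)\s*\)")


def parse_tap(reply: str, width: int, height: int) -> Optional[Tuple[int, int]]:
    """Turn a normalized tap(x, y) in the reply into pixel coordinates."""
    m = _TAP.search(reply)
    if m is None:
        return None                      # no tap action in the reply
    nx, ny = float(m.group(1)), float(m.group(2))
    if not (0.0 <= nx <= 1.0 and 0.0 <= ny <= 1.0):
        return None                      # reject off-screen predictions
    # Clamp so that 1.0 maps to the last valid pixel, not one past it.
    x = min(int(nx * width), width - 1)
    y = min(int(ny * height), height - 1)
    return x, y


point = parse_tap("Action: tap(0.5, 0.25)", 1080, 2400)
```

Rejecting malformed or out-of-range outputs is one simple guard against the grounding errors that direct coordinate methods are prone to.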

While multimodal prompt strategies have significantly advanced phone automation by integrating visual and textual data, they still face notable challenges. Approaches that do not utilize SoM maps and instead directly output coordinates rely heavily on the MLLM’s ability to accurately ground UI elements for precise manipulation. Although recent innovations Liu et al. (2024c); Wang et al. (2024a); Zhang et al. (2024b) have made progress in addressing the limitations of MLLMs’ grounding capabilities, there remains considerable room for improvement. Enhancing the robustness and accuracy of UI grounding is essential to achieve more reliable and scalable phone automation.

4.2. Training-Based Methods

The following subsections examine training-based methods, covering the development of task-specific model architectures, supervised fine-tuning strategies, and reinforcement learning techniques in both general-purpose and domain-specific scenarios.

4.2.1. Task-Specific LLM-based Agents

To advance AI agents for phone automation, significant efforts have been made to develop Task-Specific Model Architectures that are tailored to understand and interact with GUIs by integrating visual perception with language understanding. These models address unique challenges posed by GUI environments, such as varying screen sizes, complex layouts, and diverse interaction patterns. A summary of notable Task-Specific Model Architectures is presented in