1. Introduction

Optimal control synthesis plays a critical role in managing the dynamics of controlled objects or processes, particularly when the dynamics are described by Itô stochastic differential equations (SDEs). Itô SDEs are chosen because they are widely used as applied models in biology, chemistry, telecommunications, and other fields. The main type of random perturbation in SDEs is the Wiener process, whose symmetry is well characterized by the reflection property of its trajectories. The symmetry of the solution of an SDE is also illustrated by the example in this work, where the solution trajectories are symmetric with respect to the so-called averaged trajectory, and the optimal control does not violate this property.

The paper [1] considers the optimal control of general linear SDEs perturbed by a random process with independent increments, with a quadratic quality functional. It is shown that, under certain conditions, the optimal control is linear and can be determined by solving an additional vector quadratic problem; the optimal solution of the deterministic feedback problem is also obtained. In [2], the theory of optimal control is developed for stochastic systems whose performance is measured by the exponential of an integral form. In [3], the existence of an optimal control is established for stochastic systems with a nonlinear quality functional. The main model in [4] is a linear autonomous SDE of the form (1). The results of [4] concern a quadratic quality functional and necessary and sufficient conditions for the stability of system (1), where the stability conditions are formulated in terms of the properties of Riccati-type jump operators. Note that the linear case (1) is most often considered as a first approximation of the dynamics of a real phenomenon, since the optimal control can then be found in closed form and approximations of the optimal control can be compared with the exact values.

In [5], the main attention is focused on SDEs with external switches, and a number of important remarks are made on the calculation of the generating (infinitesimal) operator of a random process given by an SDE with Markov switches.

In [6], the main attention is paid to SDEs with external switches and Poisson perturbations. Using the Bellman equation, sufficient conditions for the existence of an optimal control are found for a general quality functional, and the optimal control is obtained in closed form for the linear case with a quadratic quality functional.

It should be noted that the transition from difference equations to differential equations complicates the synthesis of optimal control, since it requires solving more complex problems, such as the analog of the Lyapunov equation for nonautonomous systems. On the other hand, the presence of random variables of different structures requires the use of the infinitesimal operator [7,8], whose calculation depends on the nature of these random variables.

In this paper, we focus on semi-Markov processes [8] as the main source of external random disturbances. The use of semi-Markov processes significantly extends the range of applications of the theoretical results, since the requirement of an exponentially distributed residence time in each state, inherent in continuous-time Markov processes, is too restrictive for many applied problems. For example, a Markov description is incorrect if the residence time in a state is assumed to be bounded from below by some positive minimum value. In this case, semi-Markov processes are more suitable, since the semi-Markov kernel [8] allows us to specify the properties of the residence time, the size of the jump, and the dependence between them. On the other hand, Markov processes greatly simplify the study of the system, since only the intensities of the Markov process need to be estimated, and when studying systems with external Markov disturbances the standing assumptions are usually formulated in terms of these intensities.

In this study, we do not focus on the asymptotic properties of the semi-Markov process; instead, we consider the problem of synthesizing optimal control on a finite interval. Therefore, we do not impose the additional conditions on the semi-Markov process that ensure its ergodicity [7,9,10]. The main attention is paid to an elementary study of the dynamics of the main process for a fixed value of the external perturbation, which allows us to study the optimal control effectively using the methods proposed for Markov processes.

In this paper, we also consider a model example illustrating the steps of optimal control synthesis when the residence time in each state has a prescribed distribution; in this example, the residence time is discrete and, with probability 1, exceeds a fixed positive value.
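The argument about a minimum residence time can be illustrated numerically. The Python sketch below is not part of the paper's method: it samples residence times from two hypothetical laws with the same mean, an exponential law (the Markov case) and an exponential law shifted by a minimum value `tau_min` (one simple semi-Markov choice). The names `sample_sojourn_times` and `tau_min` and all numbers are illustrative assumptions.

```python
import numpy as np


def sample_sojourn_times(n, mean, tau_min, rng):
    """Draw n residence times from two laws with the same mean.

    Markov case: exponential with the given mean, so arbitrarily short stays occur.
    Semi-Markov case (hypothetical choice): tau_min + exponential with mean
    (mean - tau_min), so every stay lasts at least tau_min with probability 1.
    """
    if not 0.0 <= tau_min < mean:
        raise ValueError("need 0 <= tau_min < mean")
    markov = rng.exponential(scale=mean, size=n)
    semi_markov = tau_min + rng.exponential(scale=mean - tau_min, size=n)
    return markov, semi_markov


rng = np.random.default_rng(0)
tau_min = 0.5
markov, semi = sample_sojourn_times(100_000, mean=1.0, tau_min=tau_min, rng=rng)
print("share of stays shorter than tau_min:",
      np.mean(markov < tau_min), np.mean(semi < tau_min))
print("sample means:", markov.mean(), semi.mean())
```

For the exponential law, the share of stays shorter than `tau_min = 0.5` is close to 1 − e^(−0.5) ≈ 0.39, while for the shifted law it is exactly zero, although both laws have mean 1.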

2. Problem Statement

Consider a stochastic dynamical system defined on a probability basis [7,11]. The system is governed by the stochastic differential equation (SDE) (2) with the initial condition (3).

Here, the switching parameter is a semi-Markov process with values in a set of states y, characterized by the generator (4) [9], where one component specifies the distribution of jumps of the embedded Markov chain [8] and the other is the conditional distribution of the residence time in state y. The representation of the generator based on the splitting (4) greatly simplifies the main calculations in the proofs of the theoretical results of this paper, and it admits a generalization to the case of a general semi-Markov kernel. Furthermore, w is a standard Wiener process; the control u is a measurable function from the set of admissible controls U [12]; and the Wiener process w and the semi-Markov process are independent [7,11].

As in [11,13], we assume that the coefficient functions of (2), measurable with respect to all variables, satisfy the boundedness condition (5) and the Lipschitz condition (6).

The semi-Markov process affects the trajectories of the process x as follows. Suppose that on the interval up to the first switching moment the semi-Markov process takes a fixed value. Then the motion is governed by system (7), that is, by equation (2) with the parameter frozen at this value. According to [10,11], if conditions (5) and (6) are met, system (7) has a unique solution with finite second moment on this interval, up to stochastic equivalence.

Then, at the switching moment, the value of the semi-Markov process changes, and on the next interval the motion is governed by system (8). According to [10,11], if conditions (5) and (6) are met, system (8) has a unique solution with finite second moment on this interval.

Thus, conditions (5), (6) guarantee the existence of a unique solution of the Cauchy problem (2), (3) with finite second moment on each interval between consecutive switching moments. To guarantee the existence of a solution on the whole control interval, we assume that the semi-Markov process is defined on this entire interval.
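The interval-by-interval construction above translates directly into a simulation: between consecutive switching moments the parameter is frozen and (2) is an ordinary Itô SDE. The Python sketch below uses the Euler–Maruyama scheme under stated assumptions: the scalar coefficients, the two-state embedded chain `P`, and the residence-time sampler are hypothetical, and the control is omitted (it could be folded into `drift`). The function name `simulate_switching_sde` is illustrative.

```python
import numpy as np


def simulate_switching_sde(x0, y0, T, dt, drift, diffusion, P, sample_sojourn, rng):
    """Euler-Maruyama sketch of dx = drift(t, x, y) dt + diffusion(t, x, y) dw,
    where y is a semi-Markov process given by an embedded chain P[y, z] and a
    residence-time sampler. Between switching moments y is frozen, so each piece
    is integrated as an ordinary Ito SDE."""
    n_steps = int(round(T / dt))
    t = np.linspace(0.0, n_steps * dt, n_steps + 1)
    x = np.empty(n_steps + 1)
    y = np.empty(n_steps + 1, dtype=int)
    x[0] = x0
    state = y0
    next_switch = sample_sojourn(state, rng)
    for i in range(n_steps):
        # Apply every jump whose moment has been reached by the current grid time.
        while t[i] >= next_switch:
            state = rng.choice(len(P), p=P[state])
            next_switch += sample_sojourn(state, rng)
        y[i] = state  # state in force on [t_i, t_{i+1})
        dw = rng.normal(0.0, np.sqrt(dt))
        x[i + 1] = x[i] + drift(t[i], x[i], state) * dt + diffusion(t[i], x[i], state) * dw
    y[-1] = state
    return t, x, y


# Hypothetical two-state example: stable state 0, unstable state 1.
rng = np.random.default_rng(1)
a = np.array([-1.0, 0.5])    # drift coefficient in each state (assumed)
b = np.array([0.2, 0.4])     # diffusion coefficient in each state (assumed)
P = np.array([[0.0, 1.0], [1.0, 0.0]])  # embedded chain always switches
tau_min = 0.25
sojourn = lambda y, rng: tau_min + rng.exponential(0.25)  # every stay lasts >= tau_min
t, x, y = simulate_switching_sde(
    1.0, 0, 2.0, 0.01,
    drift=lambda t, x, y: a[y] * x,
    diffusion=lambda t, x, y: b[y] * x,
    P=P, sample_sojourn=sojourn, rng=rng,
)
switch_idx = np.flatnonzero(np.diff(y) != 0) + 1
print("switching times on the grid:", t[switch_idx])
```

Because jumps are only detected at grid times, the recorded switching moments are accurate up to one step `dt`; a finer grid or exact jump-time stepping would remove this bias.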

3. Sufficient Conditions for Optimality

We introduce a sequence of functions and a class V of such functions.

On these functions, we define the weak infinitesimal operator (WIO) (9), where the process involved is the strong solution of (2) on the corresponding interval under the control u.

The optimal control problem is to find a control from the set U that minimizes the scalar quality functional (10) [12] for fixed values of its parameters.

To obtain sufficient conditions for optimality, we need to prove several auxiliary statements.

Lemma 1. Let:

1) there exist a unique solution of the Cauchy problem (2), (3) whose second moment is finite for each t;

2) a sequence of functions from class V exist;

3) for each function of the sequence, the WIO (9) be defined on the solutions of (2), (3).

Then the equality (11) holds.

Proof. For the corresponding Markov process, with respect to the σ-algebra constructed on the given interval, the Dynkin formula [7] holds. Applying it to the solution of problem (2), (3), we obtain the corresponding equality. Similarly, writing the Dynkin formula on the second interval and subtracting it from (12), we obtain (11). Lemma 1 is proved. □
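Lemma 1 rests on the Dynkin formula. As a numerical illustration on a single interval with the semi-Markov parameter frozen, the Python sketch below checks E v(x(T)) − v(x(0)) = E ∫₀ᵀ Lv(x(s)) ds for the hypothetical geometric Brownian motion dx = a x dt + s x dw and v(x) = x². For this choice Lv = (2a + s²)x², and both sides equal x₀²(e^((2a+s²)T) − 1). The coefficients and the function name `dynkin_check` are assumptions made only for this illustration.

```python
import numpy as np


def dynkin_check(x0=1.0, a=-0.5, s=0.5, T=1.0, n_steps=100, n_paths=10_000, seed=0):
    """Monte Carlo check of E v(x_T) - v(x_0) = E int_0^T (L v)(x_s) ds
    for dx = a x dt + s x dw and v(x) = x**2, where (L v)(x) = (2a + s**2) x**2."""
    rng = np.random.default_rng(seed)
    dt = T / n_steps
    dw = rng.normal(0.0, np.sqrt(dt), size=(n_paths, n_steps))
    # Exact geometric Brownian motion on the grid (no discretization bias in x).
    log_incr = (a - 0.5 * s**2) * dt + s * dw
    x = x0 * np.exp(np.cumsum(log_incr, axis=1))
    x = np.hstack([np.full((n_paths, 1), x0), x])
    k = 2.0 * a + s**2
    lhs = np.mean(x[:, -1] ** 2) - x0**2
    # Left Riemann sum of the generator along each path, then averaged over paths.
    rhs = np.mean(np.sum(k * x[:, :-1] ** 2, axis=1) * dt)
    exact = x0**2 * (np.exp(k * T) - 1.0)
    return lhs, rhs, exact


lhs, rhs, exact = dynkin_check()
print(f"E v(x_T) - v(x_0) ~ {lhs:.4f}, E int L v ds ~ {rhs:.4f}, exact {exact:.4f}")
```

Both Monte Carlo estimates agree with the closed form up to sampling and Riemann-sum error, which is the content of the Dynkin formula used in the proof.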

Lemma 2. Let:

1) conditions 1) and 2) of Lemma 1 be fulfilled;

2) for each function of the sequence, the equation (13) with the boundary condition (14) make sense, where the operator in (13) is the WIO defined by (9).

Then these functions can be written as

Proof. Consider the solution of problem (2), (3) constructed from the corresponding initial condition.

Integrating (13) with respect to s from the initial time to T and taking the mathematical expectation, we obtain equality (16). By Lemma 1, the first term in (16) equals the increment (11), where the terminal value is determined by (14). Thus, equality (17) holds.

Substituting (17) into (16), we obtain the statement of Lemma 2. □

Theorem 1. Let:

1) there exist a unique solution of the Cauchy problem (2), (3) whose second moment is finite for each t;

2) there exist a sequence of functions and an optimal control that satisfy the equation (18) with the boundary condition (19);

3) for any admissible control, the following inequality hold, where the operator is the WIO (9) on the solutions of (2), (3).

Then this control is optimal, and the functions of the sequence give the minimum of the quality functional (10).

The sequence of functions is called the control cost, or Bellman function, and equation (18) can be written as the Bellman equation (22).

Proof. An optimal control is also an admissible control. Therefore, there exists a solution for which (18) takes the form (23), where the functions are evaluated along this solution.

Integrating (23) from t to T, taking the mathematical expectation, and taking into account (19), we obtain an expression for the quality functional under the optimal control.

Now let u be an arbitrary control from the class of admissible controls U. Then, according to condition 3) of the theorem, inequality (24) holds. Integrating (24) over the same interval, taking the mathematical expectation for a fixed value of the semi-Markov parameter and a fixed initial value x, and taking into account Lemmas 1 and 2, we find that the quality functional for u is not smaller than for the optimal control. This is precisely the definition of optimal control in the sense of minimizing the quality functional (10). Theorem 1 is proved. □

4. General Solution of the Optimal Control Problem

The following theorem holds.

Theorem 2.

The weak infinitesimal operator on the solutions of the Cauchy problem (2), (3), applied to functions of class V, is calculated by formula (25). In (25), (·,·) denotes the scalar product, ⊤ denotes transposition, the second-order term contains a matrix trace, and the last term contains the conditional density (26) of the semi-Markov process.

In the last term of formula (25), the last argument of the function indicates the value of the semi-Markov process at the given time.

Proof. The first three terms can be obtained in the same way as in [14]. Let us derive the last term, which is related to the semi-Markov parameter. To do this, we consider the hypotheses that, on a small time interval, the semi-Markov process either keeps its current state or changes it; the last term of (25) is obtained by combining the contributions of these hypotheses.

For the first hypothesis (no change in the state of the semi-Markov process), the conditions imposed on the coefficients of the original equation allow us to write the corresponding contribution. On the other hand, when the state of the semi-Markov process changes at time t, taking into account the form of the conditional transition probability (26), we obtain the remaining contribution.

Theorem 2 is proved. □
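Since the displayed formula (25) is not reproduced here, the following Python sketch only assumes a common form for a switching diffusion: drift and diffusion terms, plus a switching term in which the intensity is the hazard rate of the residence time given the elapsed time τ in the current state, multiplied by the expected change of v under the embedded chain. The function `weak_generator`, the finite-difference step `h`, and the hazard function are hypothetical; the sketch is not a transcription of Theorem 2.

```python
import numpy as np


def weak_generator(v, t, x, y, tau, a, b, P, hazard, h=1e-3):
    """Finite-difference evaluation of an ASSUMED generator form
        dv/dt + (a, grad_x v) + 0.5 * trace(b^T Hess_x(v) b)
        + hazard(y, tau) * sum_z P[y, z] * (v(t, x, z) - v(t, x, y)),
    for dx = a dt + b dw with a semi-Markov parameter y whose current stay has
    lasted tau. This is a sketch, not a transcription of formula (25)."""
    x = np.asarray(x, dtype=float)
    n = x.size
    e = np.eye(n) * h
    dv_dt = (v(t + h, x, y) - v(t - h, x, y)) / (2 * h)
    grad = np.array([(v(t, x + e[i], y) - v(t, x - e[i], y)) / (2 * h) for i in range(n)])
    hess = np.empty((n, n))
    for i in range(n):
        for j in range(n):
            hess[i, j] = (v(t, x + e[i] + e[j], y) - v(t, x + e[i] - e[j], y)
                          - v(t, x - e[i] + e[j], y) + v(t, x - e[i] - e[j], y)) / (4 * h * h)
    drift = np.asarray(a(t, x, y), dtype=float)
    B = np.asarray(b(t, x, y), dtype=float)
    diffusion_term = 0.5 * np.trace(B.T @ hess @ B)
    jump_term = hazard(y, tau) * sum(P[y, z] * (v(t, x, z) - v(t, x, y)) for z in range(len(P)))
    return dv_dt + drift @ grad + diffusion_term + jump_term


# Hypothetical test function v = (1 + t) c_y |x|^2 with two states.
c = [1.0, 3.0]
v = lambda t, x, y: (1.0 + t) * c[y] * (x @ x)
A0 = np.array([[-1.0, 0.5], [0.0, -2.0]])
a = lambda t, x, y: A0 @ x             # assumed linear drift
b = lambda t, x, y: 0.3 * np.eye(2)    # assumed constant diffusion matrix
P = np.array([[0.0, 1.0], [1.0, 0.0]])
hazard = lambda y, tau: 0.0 if tau < 0.5 else 2.0  # shifted-exponential stay, tau_min = 0.5
val = weak_generator(v, 0.5, [1.0, -2.0], 0, 1.0, a, b, P, hazard)
print(val)  # analytic value for these data: 5 - 30 + 0.27 + 30 = 5.27
```

With a residence time of the form τ_min + Exp(rate), the hazard is zero during the first τ_min time units of a stay, so the switching term vanishes until then; this is exactly the feature a Markov model cannot reproduce.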

The first equation, for the functions of the sequence, is obtained by substituting (25) into (18), which yields equation (27) with the corresponding boundary condition.

The second equation, for the optimal control, is obtained from (27) by differentiating with respect to u, since the optimal control delivers the minimum of the left-hand side of (27). This yields (29), where the Jacobians of the coefficients of (2) with respect to u consist of the corresponding partial derivatives.

Solving system (27), (29) is very difficult even with modern computing technology. Therefore, it is advisable to consider a simplified version of problem (2), (3), (10), namely, a linear system with a quadratic quality functional.

5. Synthesis of Optimal Control for a Linear Stochastic System

Consider the optimal control problem for a linear stochastic dynamical system given by the SDE (30) with the initial conditions (31). Here, the coefficients are piecewise continuous integrable matrix functions of appropriate dimensions.

The optimal control problem for system (30), (31) is to find a control from the set of admissible controls U that minimizes the quadratic quality functional (32), in which one weight matrix is uniformly positive definite with respect to t and the other weight matrices are non-negative definite. To simplify the notation, we introduce the following notation.

Theorem 3.

The optimal control for problem (30)–(32) has the form (33), where a non-negative definite matrix defines the Bellman functional (34). Here g is a non-negative scalar function.

Proof. The Bellman equation for (30)–(32) has the form (35), with the notation (36).

Substituting (36) into (35), we obtain (37).

The form of the optimal control is obtained by differentiating (37) with respect to u, since the optimal control minimizes the left-hand side of (37).

Theorem 3 is proved. □
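The differentiation step can be written out under an assumed notation, since the displayed formulas are not reproduced here: suppose the drift in (30) is Ax + Bu, the diffusion does not depend on u, the control term in (32) is uᵀNu with N symmetric and uniformly positive definite, and (34) is v = xᵀPx + g with P symmetric. Then only the drift and the control weight depend on u, and the u-dependent part of the left-hand side of (37) is minimized as follows.

```latex
\begin{aligned}
\Phi(u) &= 2\,x^{\top} P B u + u^{\top} N u, \\
\nabla_u \Phi(u) &= 2\,B^{\top} P x + 2\,N u = 0
\quad\Longrightarrow\quad
u^{*} = -N^{-1} B^{\top} P x, \\
\nabla_u^{2} \Phi(u) &= 2N \succ 0
\quad \text{(so } u^{*} \text{ is the unique minimizer).}
\end{aligned}
```

Under these assumptions the result has the familiar linear feedback form; whether it coincides term by term with (33) depends on the exact form of (30) and (32).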

6. Construction of the Bellman Equation

Substituting (33) and (34) into equation (35), we obtain an equation for the matrices and the scalar function g.

Equating to zero the quadratic form in x and the terms that do not depend on x, and taking into account the appropriate matrix identity, we obtain the system of differential equations (39)–(41), including its boundary condition, for finding these matrices.

Thus, we can formulate the following theorem.

Theorem 4. If the quality functional for system (30), (31) is (32) and the control cost is (34), then the system of differential equations for the matrices in (34) has the form (39)–(41).
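For a fixed state of the semi-Markov process on one interval, (39) plays the role of a matrix Riccati differential equation. Because its explicit form is not reproduced here, the Python sketch below assumes the common form for dx = (Ax + Bu)dt + Cx dw with running cost xᵀMx + uᵀNu and terminal weight F: the noise matrix C, the terminal weight F, constant coefficients, and the absence of coupling between states in the boundary condition are all assumptions. The function names are hypothetical.

```python
import numpy as np


def riccati_backward(A, B, C, M, N, F, T, n_steps=1000):
    """Integrate the ASSUMED Riccati equation
        -dP/dt = A^T P + P A + C^T P C + M - P B N^{-1} B^T P,   P(T) = F,
    backward from T to 0 with classical RK4 (constant coefficients for brevity).
    Returns the time grid and the matrices P(t_i)."""
    N_inv = np.linalg.inv(N)

    def dP_dt(P):
        return -(A.T @ P + P @ A + C.T @ P @ C + M - P @ B @ N_inv @ B.T @ P)

    h = -T / n_steps  # negative step: integrate backward in time
    t = np.linspace(0.0, T, n_steps + 1)
    Ps = [None] * (n_steps + 1)
    P = np.array(F, dtype=float)
    Ps[-1] = P
    for i in range(n_steps, 0, -1):
        k1 = dP_dt(P)
        k2 = dP_dt(P + 0.5 * h * k1)
        k3 = dP_dt(P + 0.5 * h * k2)
        k4 = dP_dt(P + h * k3)
        P = P + (h / 6.0) * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
        P = 0.5 * (P + P.T)  # symmetrize against round-off
        Ps[i - 1] = P
    return t, Ps


def feedback_gain(P, B, N):
    """Gain K of the linear feedback u = -K x with K = N^{-1} B^T P."""
    return np.linalg.solve(N, B.T @ P)


# Scalar check: A = C = 0, B = M = N = 1, F = 0 gives P(t) = tanh(T - t).
one, zero = np.eye(1), np.zeros((1, 1))
t, Ps = riccati_backward(zero, one, zero, one, one, zero, T=2.0)
K0 = feedback_gain(Ps[0], one, one)
print(Ps[0][0, 0], np.tanh(2.0), K0[0, 0])
```

The scalar case has the closed-form solution P(t) = tanh(T − t), which makes it a convenient check of the integrator before moving to matrix coefficients.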

Next, we prove the solvability of system (39)–(41) using the Bellman iteration method [15]. To simplify the calculations, we consider one interval between consecutive switching moments and omit the index k in the notation. Let us define the zero approximation of the control (42), where the feedback matrix is bounded and piecewise continuous. Substituting (42) into (27), we find from the resulting equation the functional corresponding to the control (42).

Next, we substitute this functional into the Bellman equation (22) and find the control that minimizes (22).

Continuing this process, we obtain a sequence of controls and functionals of the form (43), where the matrices are the solutions of the boundary value problem (39)–(41) for the corresponding controls.

For these approximations, the estimate (44) holds.

The convergence of the functionals, of the controls, and of the sequence of matrices to their optimal counterparts can be proved using (44) [12].

The following estimate also holds:

Thus, the following theorem holds.

Theorem 5. The optimal control synthesis problem (30)–(32) is solved approximately by successive Bellman approximations, in which the n-th approximations of the optimal control and of the Bellman functional on each interval are given by formula (43). The error is estimated by inequality (45).
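The successive Bellman approximations (a fixed feedback as the zero approximation, evaluation of the corresponding functional, minimization of the Bellman equation, and so on) can be illustrated in the scalar case on one interval. The Python sketch below assumes dx = (ax + bu)dt + cx dw with running cost mx² + nu² and terminal cost f x(T)²; the parameter names, the backward Heun integrator, and the functions `policy_cost` and `bellman_iteration` are illustrative choices, not the scheme of [15].

```python
import numpy as np


def policy_cost(k, a, b, c, m, n, f, dt):
    """Quadratic cost coefficient p(t) of the linear feedback u = -k(t) x for the
    assumed scalar model dx = (a x + b u) dt + c x dw with running cost m x^2 + n u^2
    and terminal cost f x(T)^2. Solves -p' = (2 (a - b k) + c^2) p + m + n k^2,
    p(T) = f, backward in time with Heun's method on the grid of k."""
    def dp_dt(i, p):
        return -((2.0 * (a - b * k[i]) + c**2) * p + m + n * k[i] ** 2)

    p = np.empty_like(k, dtype=float)
    p[-1] = f
    for i in range(len(k) - 1, 0, -1):
        slope = dp_dt(i, p[i])
        pred = p[i] - dt * slope                                    # Euler predictor, one step back
        p[i - 1] = p[i] - 0.5 * dt * (slope + dp_dt(i - 1, pred))   # trapezoidal corrector
    return p


def bellman_iteration(a, b, c, m, n, f, T, n_steps=2000, k0=0.0, n_iter=12):
    """Successive approximations: evaluate the current feedback (policy evaluation),
    then minimize the Bellman equation pointwise, which gives k_new(t) = b p(t) / n."""
    dt = T / n_steps
    k = np.full(n_steps + 1, k0, dtype=float)
    history = []
    for _ in range(n_iter):
        p = policy_cost(k, a, b, c, m, n, f, dt)
        history.append(p[0])
        k = b * p / n
    return p, k, history


p, k, history = bellman_iteration(a=0.0, b=1.0, c=0.0, m=1.0, n=1.0, f=0.0, T=2.0)
print([round(h, 6) for h in history[:4]], "->", history[-1], "vs tanh(2) =", np.tanh(2.0))
```

Starting from the zero feedback, the cost coefficient at t = 0 equals T = 2; each improvement step can only lower it, and the iterates approach the Riccati value tanh(2) ≈ 0.964, mirroring the monotone convergence stated for the approximations (43).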

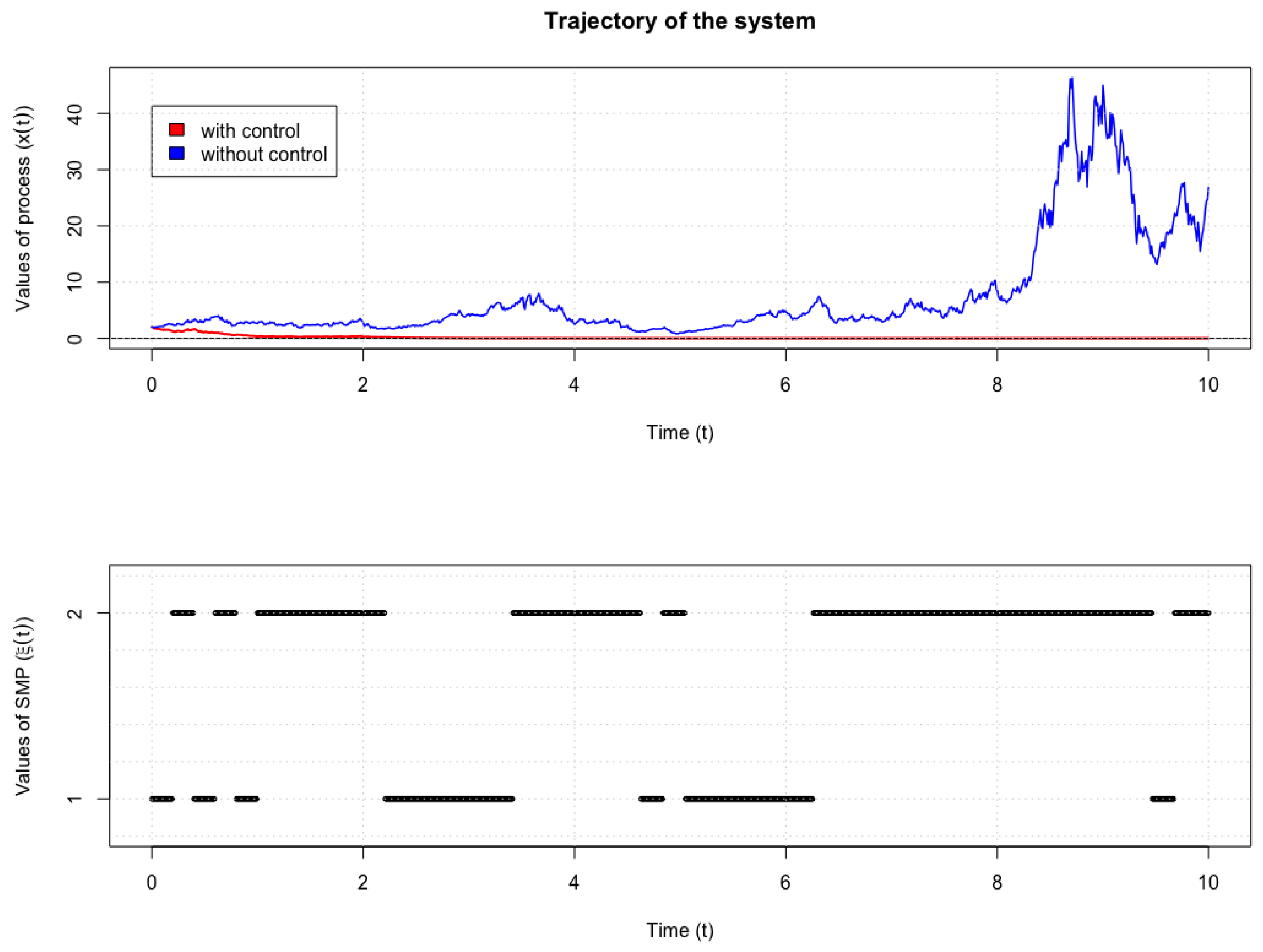

7. Model Example

Consider the system (46)–(47), consisting of an SDE with an initial condition.

Here, the switching parameter is a semi-Markov process with two states, with given transition probabilities of the embedded Markov chain and a given residence time in each state.

The matrices in the quality functional (32) are assumed to be as follows.

We seek the Bellman functional in the following form.

In this case, system (39)–(41) takes the following form, with the boundary condition given below.

The optimal control is as follows.

A realization of the solution of system (46)–(47) without control and under the optimal control is shown in Figure 1.

Figure 1. Solution of system (46)–(47) with the given values of the coefficients.


8. Discussion

The main focus of this paper is the theoretical derivation of optimal control for SDEs in the presence of external perturbations described by semi-Markov processes. This generalization allows us to describe the dynamics of real processes more accurately under various restrictions on the residence time in states, which is impossible with a Markov process. In Theorem 2, we find an explicit form of the infinitesimal operator, which is determined by the coefficients of the original equation and the characteristics of the semi-Markov process. This representation allows us to synthesize the optimal control based on the Bellman equation (18) with the boundary condition (19). For the linear system (30), the optimal control is found by solving the Riccati equation (39), which also arises in the case of a Markovian external perturbation.

Future work on dynamical systems with semi-Markovian external perturbations will focus on the ergodic properties of the semi-Markov process when analyzing the asymptotic behavior of the system. In contrast to systems with Markovian external switches, whose ergodic properties are described in terms of the intensities, in the semi-Markovian case conditions on the residence times and on the jumps will play an important role. Thus, estimating the parameters of model (2) will require not only estimating its coefficients but also estimating the distribution of the residence times in the states. Therefore, the following algorithm can be proposed for system analysis and parameter estimation:

- Estimation of switching moments. This can be done, for example, using a generalized unit root test developed for time series [16];
- Estimation of the state space of the semi-Markov process;
- Estimation of the coefficients of SDE (2).
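Steps 2 and 3 of the algorithm above can be sketched for a deliberately simple hypothetical model once the switching moments are available. The Python code below assumes the state labels `y` are already known (step 1, for example via the unit root test of [16], is not implemented) and that within state z the process follows dx = a_z x dt + σ_z dw. It estimates a_z by least squares on Euler increments, σ_z from the residual quadratic variation, and the empirical residence times per state. The model and the functions `estimate_coefficients` and `sojourn_times` are illustrative assumptions, not the estimation procedure of the paper.

```python
import numpy as np


def estimate_coefficients(x, y, dt):
    """Per-state least-squares estimates for the ASSUMED segment model
    dx = a_z x dt + sigma_z dw while y = z, from samples x[i], y[i] on a uniform grid.
    a_z regresses the increments on x; sigma_z comes from the residual quadratic variation."""
    dx = np.diff(x)
    xs, ys = x[:-1], y[:-1]  # value and state in force at the start of each step
    est = {}
    for z in np.unique(ys):
        mask = ys == z
        a_hat = np.sum(xs[mask] * dx[mask]) / (dt * np.sum(xs[mask] ** 2))
        resid = dx[mask] - a_hat * xs[mask] * dt
        est[int(z)] = (a_hat, np.sqrt(np.mean(resid**2) / dt))
    return est


def sojourn_times(y, dt):
    """Empirical residence times per state from a sampled state path y
    (the first and last runs are censored by the observation window)."""
    change = np.flatnonzero(np.diff(y) != 0) + 1
    starts = np.concatenate(([0], change))
    ends = np.concatenate((change, [len(y)]))
    out = {}
    for s, e in zip(starts, ends):
        out.setdefault(int(y[s]), []).append((e - s) * dt)
    return out


# Synthetic data from the same hypothetical model (switching every 5 time units).
rng = np.random.default_rng(2)
dt, n = 0.01, 200_000
y = (np.arange(n + 1) // 500) % 2
a_true, s_true = np.array([-1.0, -0.2]), np.array([0.5, 0.3])
drift_factor = 1.0 + a_true[y[:-1]] * dt
shocks = s_true[y[:-1]] * np.sqrt(dt) * rng.normal(size=n)
x = np.empty(n + 1)
x[0] = 0.0
for i in range(n):
    x[i + 1] = drift_factor[i] * x[i] + shocks[i]  # Euler-Maruyama step
est = estimate_coefficients(x, y, dt)
times = sojourn_times(y, dt)
print(est)
print({z: (len(v), float(np.mean(v))) for z, v in times.items()})
```

In a real application the residence times would be fitted by a parametric family (for example, a shifted law with a minimum stay), which is exactly the extra estimation task that distinguishes the semi-Markov case from the Markov one.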

The presented framework of stochastic dynamic systems with semi-Markov parameters offers a promising tool for systems biology and systems medicine. In systems biology, it can help model complex molecular interactions, such as oxidative stress and mitochondrial dysfunction, influenced by stochastic perturbations. In systems medicine, this approach supports personalized treatment strategies by capturing patient-specific dynamics. For instance, it can predict disease progression and optimize therapies in conditions like Parkinson’s Disease. By integrating theoretical modeling with clinical data, this framework bridges the gap between understanding disease mechanisms and advancing precision medicine.

9. Conclusions

In this paper, we solve the problem of synthesis of optimal control for stochastic dynamical systems with semi-Markov parameters. In the linear case, an algorithm for finding the optimal control is obtained and its convergence is substantiated.


Funding

This research was supported by ELIXIR-LU (https://elixir-luxembourg.org/), the Luxembourgish node of ELIXIR, with funding and infrastructure provided by the Luxembourg Centre for Systems Biomedicine (LCSB). LCSB’s support contributed to the computational analyses and methodological development presented in this study.

Acknowledgments

The authors would like to acknowledge the institutional support provided by the Luxembourg Centre for Systems Biomedicine (LCSB) at the University of Luxembourg and Yuriy Fedkovych Chernivtsi National University, which facilitated the completion of this work.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Lindquist, A. Optimal control of linear stochastic systems with applications to time lag systems. Information Sciences 1973, 5, 81–124.

- Kumar, P.R.; van Schuppen, J.H. On the optimal control of stochastic systems with an exponential-of-integral performance index. Journal of Mathematical Analysis and Applications 1981, 80, 312–332.

- Buckdahn, R.; Labed, B.; Rainer, C.; Tamer, L. Existence of an optimal control for stochastic control systems with nonlinear cost functional. Stochastics 2010, 82, 241–256.

- Dragan, V.; Popa, I.-L. The Linear Quadratic Optimal Control Problem for Stochastic Systems Controlled by Impulses. Symmetry 2024, 16, 1170.

- Das, A.; Lukashiv, T.O.; Malyk, I.V. Optimal control synthesis for stochastic dynamical systems of random structure with the Markovian switchings. Journal of Automation and Information Sciences 2017, 49, 37–47.

- Antonyuk, S.V.; Byrka, M.F.; Gorbatenko, M.Y.; Lukashiv, T.O.; Malyk, I.V. Optimal Control of Stochastic Dynamic Systems of a Random Structure with Poisson Switches and Markov Switching. Journal of Mathematics 2020.

- Dynkin, E.B. Markov Processes; Academic Press: New York, NY, USA, 1965.

- Koroliuk, V.; Limnios, N. Stochastic Systems in Merging Phase Space; World Scientific Publishing: Hackensack, NJ, USA, 2005.

- Ibe, O. Markov Processes for Stochastic Modeling, 2nd ed.; Elsevier: London, UK, 2013.

- Gikhman, I.I.; Skorokhod, A.V. Introduction to the Theory of Random Processes; W. B. Saunders: Philadelphia, PA, USA, 1969.

- Øksendal, B. Stochastic Differential Equations; Springer: New York, NY, USA, 2013.

- Kolmanovskii, V.B.; Shaikhet, L.E. Control of Systems with Aftereffect; American Mathematical Society: Providence, RI, USA, 1996.

- Jacod, J.; Shiryaev, A.N. Limit Theorems for Stochastic Processes, Vols. 1 and 2; Fizmatlit: Moscow, Russia, 1994. (In Russian)

- Lukashiv, T. One Form of Lyapunov Operator for Stochastic Dynamic System with Markov Parameters. Journal of Mathematics 2016.

- Bellman, R. Dynamic Programming; Princeton University Press: Princeton, NJ, USA, 1972.

- Narayan, P.; Popp, S. A New Unit Root Test with Two Structural Breaks in Level and Slope at Unknown Time. Journal of Applied Statistics 2010, 37, 1425–1438.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 1996 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).